12 Ceph and Kubernetes Integration

Overview of Ceph and Kubernetes integration

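Kubernetes can consume Ceph storage in three ways:

1. Volumes: mount an RBD image directly as a Pod volume
2. PV/PVC: PersistentVolume / PersistentVolumeClaim
3. StorageClass: dynamic provisioning, where PVs (and their RBD images) are created on demand for PVCs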

Integrating Ceph with Volumes

Goal: use Ceph RBD images as Kubernetes volumes.

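An rbd volume mounts a RADOS Block Device (RBD) image into your Pod. Unlike emptyDir, which is erased when the Pod is removed, the contents of an rbd volume are preserved when the Pod is deleted; the volume is merely unmounted. This means an rbd volume can be pre-populated with data, and that data can be shared between Pods.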

Note: you must have a running Ceph installation before you can use RBD.

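A feature of RBD is that it can be mounted read-only by multiple consumers simultaneously. This means you can pre-populate a volume with a dataset and then serve it in parallel from as many Pods as you need. Unfortunately, an RBD volume can only be mounted read-write by a single consumer; simultaneous writers are not allowed.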

For more details, see:

examples/volumes/rbd at master · kubernetes/examples · GitHub

Ceph与kubernetes完美集成 (Seamless integration of Ceph and Kubernetes), Happy云实验室 (Happy Cloud Lab), 51CTO blog

Preparation

The Kubernetes cluster has to be provided separately; this walkthrough uses a cluster deployed in all-in-one (single-node) mode.

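- Install ceph-common on the Kubernetes node

Kubernetes needs it to create RBD device mappings on the node.

# Run this on the Kubernetes node, not on a Ceph cluster node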

[root@192.168.100.3 ~]# yum -y install ceph-common

- Create a pool

[root@node0 ceph-deploy]# ceph osd pool create kubernetes 8 8

pool 'kubernetes' created

[root@node0 ceph-deploy]# ceph osd lspools

1 ceph-demo

2 .rgw.root

3 default.rgw.control

4 default.rgw.meta

5 default.rgw.log

6 default.rgw.buckets.index

7 default.rgw.buckets.data

8 cephfs_metadata

9 cephfs_data

11 kubernetes # the newly created pool

- Create an authentication user (the account the Kubernetes cluster uses to access Ceph)

[root@ ceph-deploy]# ceph auth get-or-create client.kubernetes mon 'profile rbd' osd 'profile rbd pool=kubernetes'

[client.kubernetes]

key = AQBqsGRj4/5+MxAAsxlw/VVnCdzcaQtwPe3oQg==

- Create a Secret object that stores the Ceph authentication key

Take the key generated in the previous step and base64-encode it (base64 is an encoding, not encryption):

[root@node0 ceph-deploy]# echo AQBqsGRj4/5+MxAAsxlw/VVnCdzcaQtwPe3oQg== | base64

QVFCcXNHUmo0LzUrTXhBQXN4bHcvVlZuQ2R6Y2FRdHdQZTNvUWc9PQo=
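The trailing `PQo=` of the value above encodes `=` followed by a newline: plain `echo` appends `\n`, and that byte ends up inside the Secret. A minimal sketch comparing both forms with the key printed above; `encode_key` is a hypothetical helper name, not part of Ceph or Kubernetes:

```shell
#!/usr/bin/env bash
# Hypothetical helper: base64-encode a Ceph key WITHOUT a trailing newline,
# suitable for the data.key field of a kubernetes.io/rbd Secret.
encode_key() {
  printf '%s' "$1" | base64
}

KEY='AQBqsGRj4/5+MxAAsxlw/VVnCdzcaQtwPe3oQg=='   # key from `ceph auth get-or-create` above

echo "without newline: $(encode_key "$KEY")"
echo "with newline:    $(echo "$KEY" | base64)"   # same as the command above

# Round-trip check: decoding must give back exactly the original key.
[ "$(encode_key "$KEY" | base64 -d)" = "$KEY" ] && echo "round trip OK"
```

The Pod in this walkthrough mounted fine with the newline variant, but `printf '%s'` (or `echo -n`) keeps the stored key byte-identical to the one Ceph generated.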

- Define the Secret object

[root@node0 ceph-deploy]# mkdir k8s

[root@node0 ceph-deploy]# cd k8s

[root@node0 k8s]# vim secret.yaml

apiVersion: v1
kind: Secret
metadata:
  name: ceph-secret
type: "kubernetes.io/rbd"
data:
  key: QVFCcXNHUmo0LzUrTXhBQXN4bHcvVlZuQ2R6Y2FRdHdQZTNvUWc9PQo=

- Create the Secret

[root@node0 k8s]# kubectl get node

NAME STATUS ROLES AGE VERSION

192.168.100.3 Ready master 44d v1.20.5

[root@node0 k8s]# kubectl apply -f secret.yaml

secret/ceph-secret created

[root@node0 k8s]# kubectl get secret

NAME TYPE DATA AGE

ceph-secret kubernetes.io/rbd 1 17s

default-token-wms5w kubernetes.io/service-account-token 3 44d

Using an RBD volume in a container

- Create an RBD image

[root@node0 k8s]# rbd create -p kubernetes --image-feature layering rbd.img --size 10G

[root@node-1 ~]# rbd info kubernetes/rbd.img

rbd image 'rbd.img':

size 10 GiB in 2560 objects

order 22 (4 MiB objects)

snapshot_count: 0

id: 196e6f8976f21

block_name_prefix: rbd_data.196e6f8976f21

format: 2

features: layering

op_features:

flags:

create_timestamp: Fri Nov 4 14:39:08 2022

access_timestamp: Fri Nov 4 14:39:08 2022

modify_timestamp: Fri Nov 4 14:39:08 2022
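The `size` and `order` lines are related by simple arithmetic: `order 22` means each RADOS object holds 2^22 bytes, so the 10 GiB image is striped over 2560 objects (the 1 GiB image created by ceph-csi later maps to 256 the same way). RBD images are thin-provisioned, so these objects are only created as data is written.

```latex
2^{22}\,\text{B} = 4\,\text{MiB}, \qquad
\frac{10\,\text{GiB}}{4\,\text{MiB}} = \frac{10 \times 1024\,\text{MiB}}{4\,\text{MiB}} = 2560 \text{ objects}
```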

- Reference the RBD volume in a Pod

[root@node-1 volumes]# cat pods.yaml

apiVersion: v1
kind: Pod
metadata:
  name: volume-rbd-demo
spec:
  containers:
  - name: pod-with-rbd
    image: nginx:1.7.9
    imagePullPolicy: IfNotPresent
    ports:
    - name: www
      containerPort: 80
      protocol: TCP
    volumeMounts:
    - name: rbd-demo
      mountPath: /data
  volumes:
  - name: rbd-demo
    rbd:
      monitors:
      - 192.168.100.130:6789
      - 192.168.100.131:6789
      - 192.168.100.132:6789
      pool: kubernetes
      image: rbd.img
      fsType: ext4
      user: kubernetes
      secretRef:
        name: ceph-secret

Verification

- Create the Pod

[root@node0 k8s]# kubectl apply -f pods.yaml

pod/volume-rbd-demo created

[root@node0 k8s]# kubectl get pods

NAME READY STATUS RESTARTS AGE

volume-rbd-demo 1/1 Running 0 14s

- Check the mounts: the RBD block device is mounted at /data

[root@node0 k8s]# kubectl exec -it volume-rbd-demo -- bash

root@volume-rbd-demo:/# df -h

Filesystem Size Used Avail Use% Mounted on

overlay 37G 13G 25G 35% /

tmpfs 64M 0 64M 0% /dev

tmpfs 3.9G 0 3.9G 0% /sys/fs/cgroup

/dev/rbd0 9.8G 37M 9.7G 1% /data # mapped and mounted RBD device

root@volume-rbd-demo:/# cd /data/

root@volume-rbd-demo:/data# ls -lh

total 16K

drwx------ 2 root root 16K Nov 4 06:55 lost+found

root@volume-rbd-demo:/data# echo test > test.txt

root@volume-rbd-demo:/data# cat test.txt

test

root@volume-rbd-demo:/data# ls -lh

total 20K

drwx------ 2 root root 16K Nov 4 06:55 lost+found

-rw-r--r-- 1 root root 5 Nov 4 06:57 test.txt

On the Kubernetes node itself, the same device shows up under the kubelet plugin directory:

[root@192.168.100.3 ~]# df -h

Filesystem Size Used Avail Use% Mounted on

devtmpfs 3.8G 0 3.8G 0% /dev

tmpfs 3.9G 0 3.9G 0% /dev/shm

tmpfs 3.9G 13M 3.8G 1% /run

tmpfs 3.9G 0 3.9G 0% /sys/fs/cgroup

/dev/mapper/centos-root 37G 13G 25G 35% /

/dev/sda1 1014M 151M 864M 15% /boot

......

# RBD mapping

/dev/rbd0 9.8G 37M 9.7G 1% /var/lib/kubelet/plugins/kubernetes.io/rbd/mounts/kubernetes-image-rbd.img
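The read-only sharing mentioned at the start of this section can be sketched as a Pod that maps the same image with `readOnly: true`. This is an illustrative sketch that was not run in this walkthrough: the Pod name, the container name `reader` and the helper `write_ro_pod` are hypothetical, and every consumer of the image must mount it read-only, so `volume-rbd-demo` above (which writes to it) would have to be deleted first.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a Pod manifest that mounts an existing RBD image
# read-only. Monitors, pool, image, user and Secret follow this section.
write_ro_pod() {
  local name="$1" out="$2"
  cat <<EOF > "$out"
apiVersion: v1
kind: Pod
metadata:
  name: ${name}
spec:
  containers:
  - name: reader
    image: nginx:1.7.9
    volumeMounts:
    - name: rbd-ro
      mountPath: /data
      readOnly: true
  volumes:
  - name: rbd-ro
    rbd:
      monitors:
      - 192.168.100.130:6789
      - 192.168.100.131:6789
      - 192.168.100.132:6789
      pool: kubernetes
      image: rbd.img
      fsType: ext4
      user: kubernetes
      secretRef:
        name: ceph-secret
      readOnly: true   # all Pods sharing this image must mount it read-only
EOF
}

# Usage:
#   write_ro_pod volume-rbd-ro-demo pod-ro.yaml && kubectl apply -f pod-ro.yaml
```

Several Pods generated this way (with different names) can then run side by side on the same image.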

Integrating PV and PVC storage

Preparation

Follow the preparation steps of the Volumes integration above to create the pool, the RBD image, the authentication user and the Secret.

- Create an RBD image

[root@node0 k8s]# rbd create -p kubernetes --image-feature layering demo-1.img --size 10G

# List the RBD images

[root@node0 ceph-deploy]# rbd -p kubernetes ls

demo-1.img

rbd.img

Defining the PV and PVC

- PV definition: describe a piece of storage and expose it as a PV

[root@node0 k8s]# cat pv.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: rbd-demo
spec:
  accessModes:
  - ReadWriteOnce
  capacity:
    storage: 10G
  rbd:
    monitors:
    - 192.168.100.130:6789
    - 192.168.100.131:6789
    - 192.168.100.132:6789
    pool: kubernetes
    image: demo-1.img
    fsType: ext4
    user: kubernetes
    secretRef:
      name: ceph-secret
  persistentVolumeReclaimPolicy: Retain
  storageClassName: rbd

- PVC definition that binds to the PV

[root@node0 k8s]# cat pvc.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc-demo
spec:
  accessModes:
  - ReadWriteOnce
  volumeName: rbd-demo
  resources:
    requests:
      storage: 10G
  storageClassName: rbd

- Create the PV and PVC

[root@node0 k8s]# kubectl apply -f pv.yaml

persistentvolume/rbd-demo created

[root@node0 k8s]# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

rbd-demo 10G RWO Retain Available rbd 7s

[root@node0 k8s]# kubectl apply -f pvc.yaml

persistentvolumeclaim/pvc-demo created

[root@node0 k8s]# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

pvc-demo Bound rbd-demo 10G RWO rbd 2s

# Check the PV again: its status has changed from Available to Bound

[root@node0 k8s]# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

rbd-demo 10G RWO Retain Bound default/pvc-demo rbd 3m53s

Using the PVC in a container

Reference the PVC in a Pod

[root@node0 k8s]# cat pod-demo.yaml

apiVersion: v1
kind: Pod
metadata:
  name: pod-demo
spec:
  containers:
  - name: demo
    image: nginx:1.7.9
    imagePullPolicy: IfNotPresent
    ports:
    - name: www
      protocol: TCP
      containerPort: 80
    volumeMounts:
    - name: rbd
      mountPath: /data
  volumes:
  - name: rbd
    persistentVolumeClaim:
      claimName: pvc-demo

Verification

[root@node0 k8s]# kubectl get pods

NAME READY STATUS RESTARTS AGE

pod-demo 1/1 Running 0 20s

volume-rbd-demo 1/1 Running 0 21m

[root@node0 k8s]# kubectl exec -it pod-demo -- bash

root@pod-demo:/# df -h

Filesystem Size Used Avail Use% Mounted on

overlay 37G 13G 25G 35% /

tmpfs 64M 0 64M 0% /dev

tmpfs 3.9G 0 3.9G 0% /sys/fs/cgroup

/dev/rbd1 9.8G 37M 9.7G 1% /data # mapped and mounted RBD device

root@pod-demo:/# cd /data/

root@pod-demo:/data# ls -lh

total 16K

drwx------ 2 root root 16K Nov 4 07:16 lost+found

root@pod-demo:/data# cp /etc/fstab ./

root@pod-demo:/data# ls -lh

total 20K

-rw-r--r-- 1 root root 37 Nov 4 07:18 fstab

drwx------ 2 root root 16K Nov 4 07:16 lost+found

Integrating Ceph with StorageClass

Reference documentation

https://docs.ceph.com/en/latest/rbd/rbd-kubernetes/

Block Devices and Kubernetes

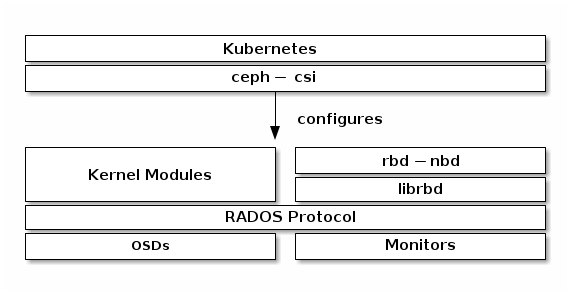

You may use Ceph Block Device images with Kubernetes v1.13 and later through ceph-csi, which dynamically provisions RBD images to back Kubernetes volumes and maps these RBD images as block devices (optionally mounting a file system contained within the image) on worker nodes running pods that reference an RBD-backed volume. Ceph stripes block device images as objects across the cluster, which means that large Ceph Block Device images have better performance than a standalone server!

To use Ceph Block Devices with Kubernetes v1.13 and higher, you must install and configure ceph-csi within your Kubernetes environment. The following diagram depicts the Kubernetes/Ceph technology stack.

Important

ceph-csi uses the RBD kernel modules by default which may not support all Ceph CRUSH tunables or RBD image features.

Create a Pool

By default, Ceph block devices use the rbd pool. Create a pool for Kubernetes volume storage. Ensure your Ceph cluster is running, then create the pool.

$ ceph osd pool create kubernetes

Configure ceph-csi

Setup Ceph Client Authentication

Create a new user for Kubernetes and ceph-csi. Execute the following and record the generated key:

$ ceph auth get-or-create client.kubernetes mon 'profile rbd' osd 'profile rbd pool=kubernetes' mgr 'profile rbd pool=kubernetes'

[client.kubernetes]

key = AQD9o0Fd6hQRChAAt7fMaSZXduT3NWEqylNpmg==

Generate ceph-csi cephx Secret

ceph-csi requires the cephx credentials for communicating with the Ceph cluster. Generate a csi-rbd-secret.yaml file similar to the example below, using the newly created Kubernetes user id and cephx key:

$ cat <<EOF > csi-rbd-secret.yaml
---
apiVersion: v1
kind: Secret
metadata:
  name: csi-rbd-secret
  namespace: default
stringData:
  userID: kubernetes
  userKey: AQD9o0Fd6hQRChAAt7fMaSZXduT3NWEqylNpmg==
EOF

Once generated, store the new Secret object in Kubernetes:

$ kubectl apply -f csi-rbd-secret.yaml

Configure ceph-csi Plugins

Create the required ServiceAccount and RBAC ClusterRole/ClusterRoleBinding Kubernetes objects. These objects do not necessarily need to be customized for your Kubernetes environment and therefore can be used as-is from the ceph-csi deployment YAMLs:

$ kubectl apply -f https://raw.githubusercontent.com/ceph/ceph-csi/master/deploy/rbd/kubernetes/csi-provisioner-rbac.yaml

$ kubectl apply -f https://raw.githubusercontent.com/ceph/ceph-csi/master/deploy/rbd/kubernetes/csi-nodeplugin-rbac.yaml

Finally, create the ceph-csi provisioner and node plugins. With the possible exception of the ceph-csi container release version, these objects do not necessarily need to be customized for your Kubernetes environment and therefore can be used as-is from the ceph-csi deployment YAMLs:

$ wget https://raw.githubusercontent.com/ceph/ceph-csi/master/deploy/rbd/kubernetes/csi-rbdplugin-provisioner.yaml

$ kubectl apply -f csi-rbdplugin-provisioner.yaml

$ wget https://raw.githubusercontent.com/ceph/ceph-csi/master/deploy/rbd/kubernetes/csi-rbdplugin.yaml

$ kubectl apply -f csi-rbdplugin.yaml

Important

The provisioner and node plugin YAMLs will, by default, pull the development release of the ceph-csi container (quay.io/cephcsi/cephcsi:canary). The YAMLs should be updated to use a release version container for production workloads.
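Following that advice amounts to swapping the image tag before applying the manifests. A sketch, assuming the manifests reference `quay.io/cephcsi/cephcsi:canary` exactly as stated above and that GNU sed is available (`sed -i` without a suffix argument); `pin_cephcsi` is a hypothetical helper and the tag is a placeholder for a ceph-csi release you choose:

```shell
#!/usr/bin/env bash
# Hypothetical helper: replace the development image tag with a release tag
# in the given ceph-csi manifests (files are edited in place, GNU sed).
pin_cephcsi() {
  local tag="$1"; shift
  sed -i "s#quay.io/cephcsi/cephcsi:canary#quay.io/cephcsi/cephcsi:${tag}#g" "$@"
  # Print the cephcsi image references now in the files, for a final check.
  grep -h 'quay.io/cephcsi/cephcsi:' "$@" | sort -u
}

# Usage (replace <release-tag> with a real ceph-csi release):
#   pin_cephcsi <release-tag> csi-rbdplugin-provisioner.yaml csi-rbdplugin.yaml
```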

Using Ceph Block Devices

Create a StorageClass

The Kubernetes StorageClass defines a class of storage. Multiple StorageClass objects can be created to map to different quality-of-service levels (e.g. NVMe vs HDD-based pools) and features.

For example, to create a ceph-csi StorageClass that maps to the kubernetes pool created above, the following YAML file can be used after ensuring that the "clusterID" property matches your Ceph cluster's fsid:

$ cat <<EOF > csi-rbd-sc.yaml
---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: csi-rbd-sc
provisioner: rbd.csi.ceph.com
parameters:
  clusterID: b9127830-b0cc-4e34-aa47-9d1a2e9949a8
  pool: kubernetes
  imageFeatures: layering
  csi.storage.k8s.io/provisioner-secret-name: csi-rbd-secret
  csi.storage.k8s.io/provisioner-secret-namespace: default
  csi.storage.k8s.io/controller-expand-secret-name: csi-rbd-secret
  csi.storage.k8s.io/controller-expand-secret-namespace: default
  csi.storage.k8s.io/node-stage-secret-name: csi-rbd-secret
  csi.storage.k8s.io/node-stage-secret-namespace: default
reclaimPolicy: Delete
allowVolumeExpansion: true
mountOptions:
- discard
EOF

$ kubectl apply -f csi-rbd-sc.yaml

Note that in Kubernetes v1.14 and v1.15 the volume expansion feature was in alpha status and required enabling the ExpandCSIVolumes feature gate.

Create a PersistentVolumeClaim

A PersistentVolumeClaim is a request for abstract storage resources by a user. The PersistentVolumeClaim would then be associated to a Pod resource to provision a PersistentVolume, which would be backed by a Ceph block image.

An optional volumeMode can be included to select between a mounted file system (default) or raw block device-based volume.

Using ceph-csi, specifying Filesystem for volumeMode can support both ReadWriteOnce and ReadOnlyMany accessMode claims, and specifying Block for volumeMode can support ReadWriteOnce, ReadWriteMany, and ReadOnlyMany accessMode claims.

For example, to create a block-based PersistentVolumeClaim that utilizes the ceph-csi-based StorageClass created above, the following YAML can be used to request raw block storage from the csi-rbd-sc StorageClass:

$ cat <<EOF > raw-block-pvc.yaml
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: raw-block-pvc
spec:
  accessModes:
  - ReadWriteOnce
  volumeMode: Block
  resources:
    requests:
      storage: 1Gi
  storageClassName: csi-rbd-sc
EOF

$ kubectl apply -f raw-block-pvc.yaml

The following example binds the above PersistentVolumeClaim to a Pod resource as a raw block device:

$ cat <<EOF > raw-block-pod.yaml
---
apiVersion: v1
kind: Pod
metadata:
  name: pod-with-raw-block-volume
spec:
  containers:
  - name: fc-container
    image: fedora:26
    command: ["/bin/sh", "-c"]
    args: ["tail -f /dev/null"]
    volumeDevices:
    - name: data
      devicePath: /dev/xvda
  volumes:
  - name: data
    persistentVolumeClaim:
      claimName: raw-block-pvc
EOF

$ kubectl apply -f raw-block-pod.yaml

To create a file-system-based PersistentVolumeClaim that utilizes the ceph-csi-based StorageClass created above, the following YAML can be used to request a mounted file system (backed by an RBD image) from the csi-rbd-sc StorageClass:

$ cat <<EOF > pvc.yaml
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: rbd-pvc
spec:
  accessModes:
  - ReadWriteOnce
  volumeMode: Filesystem
  resources:
    requests:
      storage: 1Gi
  storageClassName: csi-rbd-sc
EOF

$ kubectl apply -f pvc.yaml

The following example binds the above PersistentVolumeClaim to a Pod resource as a mounted file system:

$ cat <<EOF > pod.yaml
---
apiVersion: v1
kind: Pod
metadata:
  name: csi-rbd-demo-pod
spec:
  containers:
  - name: web-server
    image: nginx
    volumeMounts:
    - name: mypvc
      mountPath: /var/lib/www/html
  volumes:
  - name: mypvc
    persistentVolumeClaim:
      claimName: rbd-pvc
      readOnly: false
EOF

$ kubectl apply -f pod.yaml

Installing the Ceph CSI driver

Get the Ceph cluster ID

[root@node0 csi]# ceph mon dump

epoch 3

# the fsid is the cluster ID

fsid 97702c43-6cc2-4ef8-bdb5-855cfa90a260

last_changed 2022-10-13 17:57:43.445773

created 2022-10-13 14:03:09.897152

min_mon_release 14 (nautilus)

0: [v2:192.168.100.130:3300/0,v1:192.168.100.130:6789/0] mon.node0

1: [v2:192.168.100.131:3300/0,v1:192.168.100.131:6789/0] mon.node1

2: [v2:192.168.100.132:3300/0,v1:192.168.100.132:6789/0] mon.node2

dumped monmap epoch 3
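The `clusterID` and `monitors` values needed by the ConfigMap below can be pulled straight out of this output. A minimal sketch using awk, assuming the v1 (port 6789) monitor addresses are wanted as in this walkthrough; `gen_csi_config` is a hypothetical helper. It also produces JSON without comments, since JSON has no comment syntax.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `ceph mon dump` output on stdin and print a
# ceph-csi-config ConfigMap whose clusterID is the fsid and whose monitors
# are the v1 (msgr1) addresses.
gen_csi_config() {
  awk '
    $1 == "fsid" { fsid = $2 }
    /v1:[0-9.]+:[0-9]+/ {
      # extract IP:PORT from "[v2:...,v1:IP:PORT/0]"
      match($0, /v1:[0-9.]+:[0-9]+/)
      mons[n++] = substr($0, RSTART + 3, RLENGTH - 3)
    }
    END {
      print "---"
      print "apiVersion: v1"
      print "kind: ConfigMap"
      print "metadata:"
      print "  name: ceph-csi-config"
      print "data:"
      print "  config.json: |-"
      print "    [{\"clusterID\": \"" fsid "\", \"monitors\": ["
      for (i = 0; i < n; i++)
        printf "      \"%s\"%s\n", mons[i], (i < n - 1 ? "," : "")
      print "    ]}]"
    }'
}

# Usage on a Ceph admin node:
#   ceph mon dump | gen_csi_config > csi-config-map.yaml
```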

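Create the CSI ConfigMap

clusterID is the fsid obtained above. ceph-csi parses config.json as JSON, and JSON has no comment syntax, so do not put a `# fsid` comment inside it (the original version of this file, visible in the kubectl output below, carried one).

[root@node0 k8s]# mkdir csi
[root@node0 k8s]# cd csi/
[root@node0 csi]# cat csi-config-map.yaml
---
apiVersion: v1
kind: ConfigMap
data:
  config.json: |-
    [
      {
        "clusterID": "97702c43-6cc2-4ef8-bdb5-855cfa90a260",
        "monitors": [
          "192.168.100.130:6789",
          "192.168.100.131:6789",
          "192.168.100.132:6789"
        ]
      }
    ]
metadata:
  name: ceph-csi-config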

Apply the Ceph configuration to the Kubernetes cluster

# Apply the configuration

[root@node0 csi]# kubectl apply -f csi-config-map.yaml

configmap/ceph-csi-config created

# Inspect the configuration

[root@node0 csi]# kubectl get cm ceph-csi-config -o yaml

apiVersion: v1
data:
  config.json: |-
    [
      {
        # fsid
        "clusterID": "97702c43-6cc2-4ef8-bdb5-855cfa90a260",
        "monitors": [
          "192.168.100.130:6789",
          "192.168.100.131:6789",
          "192.168.100.132:6789"
        ]
      }
    ]
kind: ConfigMap
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"v1","data":{"config.json":"[\n {\n # fsid\n \"clusterID\": \"97702c43-6cc2-4ef8-bdb5-855cfa90a260\",\n \"monitors\": [\n \"192.168.100.130:6789\",\n \"192.168.100.131:6789\",\n \"192.168.100.132:6789\"\n ]\n }\n]"},"kind":"ConfigMap","metadata":{"annotations":{},"name":"ceph-csi-config","namespace":"default"}}
  creationTimestamp: "2022-11-04T08:20:19Z"
  managedFields:
  - apiVersion: v1
    fieldsType: FieldsV1
    fieldsV1:
      f:data:
        .: {}
        f:config.json: {}
      f:metadata:
        f:annotations:
          .: {}
          f:kubectl.kubernetes.io/last-applied-configuration: {}
    manager: kubectl-client-side-apply
    operation: Update
    time: "2022-11-04T08:20:19Z"
  name: ceph-csi-config
  namespace: default
  resourceVersion: "830613"
  uid: a6a2843a-091a-4be7-af9d-9621ef0a5b4d

Create the Ceph key Secret

# Show the client.kubernetes entry

[root@node0 csi]# ceph auth list | grep -A 3 "client.kubernetes"

installed auth entries:

client.kubernetes

key: AQBqsGRj4/5+MxAAsxlw/VVnCdzcaQtwPe3oQg==

caps: [mon] profile rbd

caps: [osd] profile rbd pool=kubernetes

# Generate the Secret manifest

[root@node0 csi]# cat csi-rbd-secret.yaml
---
apiVersion: v1
kind: Secret
metadata:
  name: csi-rbd-secret
  namespace: default
stringData: # stringData takes the raw values; Kubernetes base64-encodes them automatically
  userID: kubernetes
  userKey: AQBqsGRj4/5+MxAAsxlw/VVnCdzcaQtwPe3oQg==

Load the Ceph key into Kubernetes

[root@node0 csi]# kubectl apply -f csi-rbd-secret.yaml

secret/csi-rbd-secret created

[root@node0 csi]# kubectl get secrets csi-rbd-secret -o yaml

apiVersion: v1
data:
  userID: a3ViZXJuZXRlcw==
  userKey: QVFCcXNHUmo0LzUrTXhBQXN4bHcvVlZuQ2R6Y2FRdHdQZTNvUWc9PQ==
kind: Secret
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"v1","kind":"Secret","metadata":{"annotations":{},"name":"csi-rbd-secret","namespace":"default"},"stringData":{"userID":"kubernetes","userKey":"AQBqsGRj4/5+MxAAsxlw/VVnCdzcaQtwPe3oQg=="}}
  creationTimestamp: "2022-11-04T08:26:26Z"
  managedFields:
  - apiVersion: v1
    fieldsType: FieldsV1
    fieldsV1:
      f:data:
        .: {}
        f:userID: {}
        f:userKey: {}
      f:metadata:
        f:annotations:
          .: {}
          f:kubectl.kubernetes.io/last-applied-configuration: {}
      f:type: {}
    manager: kubectl-client-side-apply
    operation: Update
    time: "2022-11-04T08:26:26Z"
  name: csi-rbd-secret
  namespace: default
  resourceVersion: "831058"
  uid: 872a0a7b-dbbe-4f0c-acb3-26a57b90f5c4
type: Opaque

# Decode a stored value to check it (base64 decoding, not decryption)

[root@node0 csi]# echo a3ViZXJuZXRlcw==| base64 -d

kubernetes
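The decoding above also works in reverse: the values under `data` are just the `stringData` values base64-encoded without a trailing newline. A sketch that reproduces the stored `userID` offline and writes the same Secret from variables; `write_csi_secret` is a hypothetical helper, and `ceph auth get-key client.kubernetes` in the usage line is assumed to run on a Ceph admin node.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write the csi-rbd-secret manifest from a user id and key.
write_csi_secret() {
  local user_id="$1" user_key="$2" out="$3"
  cat <<EOF > "$out"
---
apiVersion: v1
kind: Secret
metadata:
  name: csi-rbd-secret
  namespace: default
stringData:   # plain values; they show up base64-encoded under .data
  userID: ${user_id}
  userKey: ${user_key}
EOF
}

# Reproduce the stored .data.userID value (no trailing newline):
printf '%s' kubernetes | base64   # a3ViZXJuZXRlcw==, as in the kubectl output above

# Usage on a Ceph admin node:
#   write_csi_secret kubernetes "$(ceph auth get-key client.kubernetes)" csi-rbd-secret.yaml
```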

Install the Ceph CSI driver

# Deploy the CSI RBAC objects

## Download the manifests

[root@node0 csi]# wget https://ghproxy.com/https://raw.githubusercontent.com/ceph/ceph-csi/master/deploy/rbd/kubernetes/csi-provisioner-rbac.yaml

[root@node0 csi]# wget https://ghproxy.com/https://raw.githubusercontent.com/ceph/ceph-csi/master/deploy/rbd/kubernetes/csi-nodeplugin-rbac.yaml

## List the files

[root@node0 csi]# ls -lh

total 32K

-rw-r--r-- 1 root root 330 Nov 4 16:19 csi-config-map.yaml

-rw-r--r-- 1 root root 1.2K Nov 4 16:36 csi-nodeplugin-rbac.yaml

-rw-r--r-- 1 root root 3.3K Nov 4 16:36 csi-provisioner-rbac.yaml

-rw-r--r-- 1 root root 171 Nov 4 16:23 csi-rbd-secret.yaml

## Apply the manifests

[root@node0 csi]# kubectl apply -f csi-provisioner-rbac.yaml

serviceaccount/rbd-csi-provisioner created

clusterrole.rbac.authorization.k8s.io/rbd-external-provisioner-runner created

clusterrolebinding.rbac.authorization.k8s.io/rbd-csi-provisioner-role created

role.rbac.authorization.k8s.io/rbd-external-provisioner-cfg created

rolebinding.rbac.authorization.k8s.io/rbd-csi-provisioner-role-cfg created

[root@node0 csi]# kubectl apply -f csi-nodeplugin-rbac.yaml

serviceaccount/rbd-csi-nodeplugin created

clusterrole.rbac.authorization.k8s.io/rbd-csi-nodeplugin created

clusterrolebinding.rbac.authorization.k8s.io/rbd-csi-nodeplugin created

# Deploy the CSI provisioner and node plugin

## Download the files

[root@node0 csi]# wget https://ghproxy.com/https://raw.githubusercontent.com/ceph/ceph-csi/master/deploy/rbd/kubernetes/csi-rbdplugin-provisioner.yaml

[root@node0 csi]# wget https://ghproxy.com/https://raw.githubusercontent.com/ceph/ceph-csi/master/deploy/rbd/kubernetes/csi-rbdplugin.yaml

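## Replace the image registry in the files: the default registry.k8s.io images cannot be pulled from within China, so the lank8s.cn mirror is used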

[root@node0 csi]# sed -i "s#registry.k8s.io/#lank8s.cn/#" csi-rbdplugin.yaml

[root@node0 csi]# sed -i "s#registry.k8s.io/#lank8s.cn/#" csi-rbdplugin-provisioner.yaml

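## The cluster is all-in-one (single node), so comment out the Pod anti-affinity in csi-rbdplugin-provisioner.yaml; with required anti-affinity on kubernetes.io/hostname, only one provisioner replica can be scheduled per node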

[root@node0 csi]# cat csi-rbdplugin-provisioner.yaml

......

# affinity:
#   podAntiAffinity:
#     requiredDuringSchedulingIgnoredDuringExecution:
#       - labelSelector:
#           matchExpressions:
#             - key: app
#               operator: In
#               values:
#                 - csi-rbdplugin-provisioner
#         topologyKey: "kubernetes.io/hostname"

......
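An alternative (or complement) to removing the anti-affinity is lowering the provisioner's replica count, so the Deployment never asks for more Pods than there are nodes. A sketch assuming the downloaded manifest contains a `replicas: 3` line (three provisioner Pods appear in the output below) and GNU sed; `set_provisioner_replicas` is a hypothetical helper and changes every `replicas:` line in the file it is given.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set the replica count in a downloaded Deployment
# manifest (GNU sed, edits the file in place).
set_provisioner_replicas() {
  local count="$1" file="$2"
  sed -i -E "s/^([[:space:]]*)replicas: [0-9]+/\1replicas: ${count}/" "$file"
  grep -E '^[[:space:]]*replicas:' "$file"   # show the result
}

# Usage on the all-in-one node:
#   set_provisioner_replicas 1 csi-rbdplugin-provisioner.yaml
# For a Deployment that is already running, this has the same effect until
# the manifest is applied again:
#   kubectl scale deployment csi-rbdplugin-provisioner --replicas=1
```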

## List the files

[root@node0 csi]# ls -lh

total 24K

-rw-r--r-- 1 root root 330 Nov 4 16:19 csi-config-map.yaml

-rw-r--r-- 1 root root 8.0K Nov 4 16:33 csi-rbdplugin-provisioner.yaml

-rw-r--r-- 1 root root 7.1K Nov 4 16:34 csi-rbdplugin.yaml

-rw-r--r-- 1 root root 171 Nov 4 16:23 csi-rbd-secret.yaml

## Apply the manifests

[root@node0 csi]# kubectl apply -f csi-rbdplugin.yaml

daemonset.apps/csi-rbdplugin created

service/csi-metrics-rbdplugin created

[root@node0 csi]# kubectl apply -f csi-rbdplugin-provisioner.yaml

service/csi-rbdplugin-provisioner created

deployment.apps/csi-rbdplugin-provisioner created

Check the deployed Pods

[root@node0 csi]# kubectl get pods

NAME READY STATUS RESTARTS AGE

csi-rbdplugin-provisioner-6748c759b4-cj87s 0/7 Pending 0 23s

csi-rbdplugin-provisioner-6748c759b4-hwf4h 0/7 ContainerCreating 0 23s

csi-rbdplugin-provisioner-6748c759b4-sqwr9 0/7 Pending 0 23s

csi-rbdplugin-xntwg 0/3 ContainerCreating 0 27s

pod-demo 1/1 Running 0 86m

volume-rbd-demo 1/1 Running 0 107m

Troubleshooting the CSI installation

Find out why the Pods do not start

[root@localhost ~]# kubectl describe pods csi-rbdplugin-xntwg

Name: csi-rbdplugin-xntwg

Namespace: default

Priority: 2000001000

Priority Class Name: system-node-critical

Node: 192.168.100.3/192.168.100.3

Start Time: Fri, 04 Nov 2022 16:42:29 +0800

Labels: app=csi-rbdplugin

controller-revision-hash=586bfd9466

pod-template-generation=1

Annotations: <none>

Status: Pending

IP: 192.168.100.3

IPs:

IP: 192.168.100.3

Controlled By: DaemonSet/csi-rbdplugin

Containers:

......

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 14m default-scheduler Successfully assigned default/csi-rbdplugin-xntwg to 192.168.100.3

Warning FailedMount 12m kubelet Unable to attach or mount volumes: unmounted volumes=[ceph-csi-encryption-kms-config ceph-config], unattached volumes=[etc-selinux mountpoint-dir ceph-csi-encryption-kms-config host-dev ceph-logdir ceph-config rbd-csi-nodeplugin-token-t9bcr plugin-dir oidc-token keys-tmp-dir host-sys lib-modules registration-dir host-mount socket-dir ceph-csi-config]: timed out waiting for the condition

Warning FailedMount 10m kubelet Unable to attach or mount volumes: unmounted volumes=[ceph-config ceph-csi-encryption-kms-config], unattached volumes=[host-mount socket-dir oidc-token keys-tmp-dir registration-dir host-dev mountpoint-dir ceph-config host-sys lib-modules ceph-csi-config rbd-csi-nodeplugin-token-t9bcr etc-selinux ceph-csi-encryption-kms-config plugin-dir ceph-logdir]: timed out waiting for the condition

Warning FailedMount 8m22s (x11 over 14m) kubelet MountVolume.SetUp failed for volume "ceph-config" : configmap "ceph-config" not found

Warning FailedMount 8m2s kubelet Unable to attach or mount volumes: unmounted volumes=[ceph-csi-encryption-kms-config ceph-config], unattached volumes=[lib-modules ceph-csi-encryption-kms-config oidc-token keys-tmp-dir ceph-logdir host-sys mountpoint-dir ceph-config socket-dir host-dev host-mount etc-selinux rbd-csi-nodeplugin-token-t9bcr ceph-csi-config registration-dir plugin-dir]: timed out waiting for the condition

Warning FailedMount 4m18s (x13 over 14m) kubelet MountVolume.SetUp failed for volume "ceph-csi-encryption-kms-config" : configmap "ceph-csi-encryption-kms-config" not found

The Pod events show that the node plugin is missing two ConfigMaps: ceph-config and ceph-csi-encryption-kms-config.
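When the Events list is long, the missing objects can be extracted mechanically. A small sketch that reads `kubectl describe` output on stdin and prints each ConfigMap reported as not found; `missing_configmaps` is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Hypothetical helper: list the ConfigMaps that kubelet reported as
# "not found" in `kubectl describe pod` output read from stdin.
missing_configmaps() {
  grep -o 'configmap "[^"]*" not found' | cut -d'"' -f2 | sort -u
}

# Usage:
#   kubectl describe pod csi-rbdplugin-xntwg | missing_configmaps
```

For the events above this prints `ceph-config` and `ceph-csi-encryption-kms-config`, the two ConfigMaps created next.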

Provide the ceph-config ConfigMap

- Get the configuration values

[root@node0 csi]# cat ../../ceph.conf

[global]

fsid = 97702c43-6cc2-4ef8-bdb5-855cfa90a260

public_network = 192.168.100.0/24

cluster_network = 192.168.100.0/24

mon_initial_members = node0

mon_host = 192.168.100.130

auth_cluster_required = cephx # used in the ceph-config ConfigMap

auth_service_required = cephx # used in the ceph-config ConfigMap

auth_client_required = cephx # used in the ceph-config ConfigMap

mon_max_pg_per_osd=1000

mon_allow_pool_delete = true

[client.rgw.node0]

rgw_frontends = "civetweb port=81"

[client.rgw.node1]

rgw_frontends = "civetweb port=81"

[osd]

osd crush update on start = false

- Manifest

[root@node0 csi]# cat <<EOF > ceph-config-map.yaml
---
apiVersion: v1
kind: ConfigMap
data:
  ceph.conf: |
    [global]
    auth_cluster_required = cephx
    auth_service_required = cephx
    auth_client_required = cephx
  # keyring is a required key and its value should be empty
  keyring: |
metadata:
  name: ceph-config
EOF

- Apply the manifest

[root@node0 csi]# kubectl apply -f ceph-config-map.yaml

configmap/ceph-config created
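The ConfigMap above can also be generated from the cluster's ceph.conf instead of being typed by hand, since only the three `auth_*_required` lines are needed. A sketch assuming the same `key = value` layout as the ceph.conf shown earlier (inline `#` comments are stripped); `gen_ceph_config_cm` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the ceph-config ConfigMap from the auth_*
# settings of an existing ceph.conf ($1) and print it to stdout.
gen_ceph_config_cm() {
  local conf="$1"
  echo "---"
  echo "apiVersion: v1"
  echo "kind: ConfigMap"
  echo "metadata:"
  echo "  name: ceph-config"
  echo "data:"
  echo "  ceph.conf: |"
  echo "    [global]"
  # keep only auth_*_required lines, drop inline comments, indent for YAML
  grep -E '^auth_(cluster|service|client)_required' "$conf" \
    | sed -E 's/[[:space:]]*#.*$//; s/^/    /'
  echo "  # keyring is a required key and its value should be empty"
  echo "  keyring: |"
}

# Usage:
#   gen_ceph_config_cm ../../ceph.conf > ceph-config-map.yaml
```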

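Provide the ceph-csi-encryption-kms-config ConfigMap

This walkthrough does not enable encryption; the vault-test entry below is the upstream ceph-csi example. The Ceph documentation uses an empty object ({}) as config.json when no KMS is configured.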

- Manifest

[root@node0 csi]# cat <<EOF > csi-kms-config-map.yaml
---
apiVersion: v1
kind: ConfigMap
data:
  config.json: |-
    {
      "vault-test": {
        "encryptionKMSType": "vault",
        "vaultAddress": "http://vault.default.svc.cluster.local:8200",
        "vaultAuthPath": "/v1/auth/kubernetes/login",
        "vaultRole": "csi-kubernetes",
        "vaultPassphraseRoot": "/v1/secret",
        "vaultPassphrasePath": "ceph-csi/",
        "vaultCAVerify": "false"
      }
    }
metadata:
  name: ceph-csi-encryption-kms-config
EOF

- Apply the manifest

[root@node0 csi]# kubectl apply -f csi-kms-config-map.yaml

configmap/ceph-csi-encryption-kms-config created

Check the ConfigMaps

[root@node0 csi]# kubectl get cm

NAME DATA AGE

ceph-config 2 95s

ceph-csi-config 1 42m

ceph-csi-encryption-kms-config 1 33s

kube-root-ca.crt 1 44d

Restart the services

# Delete the workloads

[root@node0 csi]# kubectl delete -f csi-rbdplugin.yaml

daemonset.apps "csi-rbdplugin" deleted

service "csi-metrics-rbdplugin" deleted

[root@node0 csi]# kubectl delete -f csi-rbdplugin-provisioner.yaml

service "csi-rbdplugin-provisioner" deleted

deployment.apps "csi-rbdplugin-provisioner" deleted

# Deploy them again

[root@node0 csi]# kubectl apply -f csi-rbdplugin-provisioner.yaml

service/csi-rbdplugin-provisioner created

deployment.apps/csi-rbdplugin-provisioner created

[root@node0 csi]# kubectl apply -f csi-rbdplugin.yaml

daemonset.apps/csi-rbdplugin created

service/csi-metrics-rbdplugin created

# Check the Pods

[root@node0 csi]# kubectl get pods

NAME READY STATUS RESTARTS AGE

csi-rbdplugin-pkxx4 3/3 Running 0 1m1s

csi-rbdplugin-provisioner-5f45fb8994-f7g8g 7/7 Running 0 17s

csi-rbdplugin-provisioner-5f45fb8994-hvqhj 7/7 Running 0 17s

csi-rbdplugin-provisioner-5f45fb8994-ndqrf 7/7 Running 0 17s

pod-demo 1/1 Running 0 137m

volume-rbd-demo 1/1 Running 0 159m

Requesting storage dynamically with a PVC

Create the StorageClass

[root@node0 csi]# cat <<EOF > csi-rbd-sc.yaml
---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: csi-rbd-sc
provisioner: rbd.csi.ceph.com
parameters:
  clusterID: 97702c43-6cc2-4ef8-bdb5-855cfa90a260
  pool: kubernetes
  imageFeatures: layering
  csi.storage.k8s.io/provisioner-secret-name: csi-rbd-secret
  csi.storage.k8s.io/provisioner-secret-namespace: default
  csi.storage.k8s.io/controller-expand-secret-name: csi-rbd-secret
  csi.storage.k8s.io/controller-expand-secret-namespace: default
  csi.storage.k8s.io/node-stage-secret-name: csi-rbd-secret
  csi.storage.k8s.io/node-stage-secret-namespace: default
reclaimPolicy: Delete
allowVolumeExpansion: true
mountOptions:
- discard
EOF

- Check the Secrets

[root@node0 csi]# kubectl get secret

NAME TYPE DATA AGE

ceph-secret kubernetes.io/rbd 1 3h5m

csi-rbd-secret Opaque 2 77m

default-token-wms5w kubernetes.io/service-account-token 3 44d

rbd-csi-nodeplugin-token-t9bcr kubernetes.io/service-account-token 3 64m

rbd-csi-provisioner-token-glz74 kubernetes.io/service-account-token 3 64m

Apply the manifest

[root@node0 csi]# kubectl apply -f csi-rbd-sc.yaml
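ceph-csi looks up the StorageClass `clusterID` among the entries of the `ceph-csi-config` ConfigMap to find the monitors, so the two values must be identical (both are the fsid). A quick offline check of the two manifests from this section; `check_cluster_id` is a hypothetical helper that only understands the simple `clusterID: <id>` and `"clusterID": "<id>"` layouts used here:

```shell
#!/usr/bin/env bash
# Hypothetical helper: compare the clusterID in a StorageClass manifest ($1)
# with the one in a ceph-csi-config ConfigMap manifest ($2).
check_cluster_id() {
  local sc_id cm_id
  sc_id=$(grep -E '^[[:space:]]*clusterID:' "$1" | awk '{print $2}')
  cm_id=$(grep -o '"clusterID": *"[^"]*"' "$2" | cut -d'"' -f4)
  if [ -n "$sc_id" ] && [ "$sc_id" = "$cm_id" ]; then
    echo "clusterID OK: $sc_id"
  else
    echo "clusterID mismatch: StorageClass='$sc_id' ConfigMap='$cm_id'" >&2
    return 1
  fi
}

# Usage:
#   check_cluster_id csi-rbd-sc.yaml csi-config-map.yaml
```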

Using the StorageClass from a PVC

- Request Filesystem-mode storage from the StorageClass with a PVC

[root@node0 csi]# cat <<EOF > pvc.yaml
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: rbd-pvc
spec:
  accessModes:
  - ReadWriteOnce
  volumeMode: Filesystem
  resources:
    requests:
      storage: 1Gi
  storageClassName: csi-rbd-sc
EOF

- Apply the manifest

[root@node0 csi]# kubectl apply -f pvc.yaml

persistentvolumeclaim/rbd-pvc created

- Check the PVC and PV

[root@node0 csi]# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

rbd-pvc Bound pvc-9bc784ff-ee7e-46c2-88c0-a9c46d91af26 1Gi RWO csi-rbd-sc 4s

[root@node0 csi]# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pvc-9bc784ff-ee7e-46c2-88c0-a9c46d91af26 1Gi RWO Delete Bound default/rbd-pvc csi-rbd-sc 1m

- Check the RBD images

[root@node0 csi]# rbd -p kubernetes ls

csi-vol-85647987-c660-4c68-b39d-97824baaf9cd # image created automatically by ceph-csi

demo-1.img

rbd.img

# Show the image details

[root@node0 csi]# rbd info kubernetes/csi-vol-85647987-c660-4c68-b39d-97824baaf9cd

rbd image 'csi-vol-85647987-c660-4c68-b39d-97824baaf9cd':

size 1 GiB in 256 objects

order 22 (4 MiB objects)

snapshot_count: 0

id: 197a93331de02

block_name_prefix: rbd_data.197a93331de02

format: 2

features: layering

op_features:

flags:

create_timestamp: Fri Nov 4 17:49:46 2022

access_timestamp: Fri Nov 4 17:49:46 2022

modify_timestamp: Fri Nov 4 17:49:46 2022

Using StorageClass-provisioned storage in a container

Attach the PVC to a Pod

[root@node0 csi]# cat <<EOF > pod.yaml
---
apiVersion: v1
kind: Pod
metadata:
  name: csi-rbd-demo-pod
spec:
  containers:
  - name: web-server
    image: nginx
    volumeMounts:
    - name: mypvc
      mountPath: /var/lib/www/html
  volumes:
  - name: mypvc
    persistentVolumeClaim:
      claimName: rbd-pvc
      readOnly: false
EOF

- Apply the manifest

[root@node0 csi]# kubectl apply -f pod.yaml

pod/csi-rbd-demo-pod created

- Verify the Pod

[root@node0 csi]# kubectl get pod

NAME READY STATUS RESTARTS AGE

csi-rbd-demo-pod 1/1 Running 0 41s

[root@node0 csi]# kubectl exec -it csi-rbd-demo-pod -- bash

root@csi-rbd-demo-pod:/# df -h

Filesystem Size Used Avail Use% Mounted on

overlay 37G 15G 23G 40% /

tmpfs 64M 0 64M 0% /dev

tmpfs 3.9G 0 3.9G 0% /sys/fs/cgroup

/dev/mapper/centos-root 37G 15G 23G 40% /etc/hosts

shm 64M 0 64M 0% /dev/shm

/dev/rbd2 976M 2.6M 958M 1% /var/lib/www/html # mounted RBD device

root@csi-rbd-demo-pod:/# cd /var/lib/www/html/

root@csi-rbd-demo-pod:/var/lib/www/html# echo test > index.html

root@csi-rbd-demo-pod:/var/lib/www/html# ls

index.html lost+found

The typical way to use a StorageClass

Let a StatefulSet create the PVCs automatically (the provisioner then creates the PVs)

[root@node0 csi]# cat <<EOF > pod_sc.yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx
  labels:
    app: nginx
spec:
  ports:
  - port: 80
    name: web
  clusterIP: None
  selector:
    app: nginx
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: web
spec:
  selector:
    matchLabels:
      app: nginx # has to match .spec.template.metadata.labels
  serviceName: "nginx"
  replicas: 3 # by default is 1
  template:
    metadata:
      labels:
        app: nginx # has to match .spec.selector.matchLabels
    spec:
      terminationGracePeriodSeconds: 10
      containers:
      - name: nginx
        image: nginx
        ports:
        - containerPort: 80
          name: web
        volumeMounts:
        - name: www
          mountPath: /usr/share/nginx/html
  volumeClaimTemplates:
  - metadata:
      name: www
    spec:
      accessModes: [ "ReadWriteOnce" ]
      storageClassName: "csi-rbd-sc"
      resources:
        requests:
          storage: 1Gi
EOF
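The StatefulSet controller creates one PVC per replica from `volumeClaimTemplates`, named `<template name>-<StatefulSet name>-<ordinal>`; the provisioner then creates a PV and an RBD image for each PVC. A small illustration (not a Kubernetes tool) that computes the PVC names to expect for this manifest; `expected_pvcs` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Print the PVC names a StatefulSet will request:
# <volumeClaimTemplate name>-<StatefulSet name>-<ordinal>, ordinals 0..replicas-1.
expected_pvcs() {
  local template="$1" sts="$2" replicas="$3" i=0
  while [ "$i" -lt "$replicas" ]; do
    echo "${template}-${sts}-${i}"
    i=$((i + 1))
  done
}

expected_pvcs www web 3   # template "www", StatefulSet "web", replicas: 3
```

This prints `www-web-0`, `www-web-1` and `www-web-2`, matching the PVCs listed below.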

Apply the manifest

[root@node0 csi]# kubectl apply -f pod_sc.yaml

service/nginx unchanged

statefulset.apps/web created

Check the PVCs and PVs

[root@node0 csi]# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

www-web-0 Bound pvc-3a0bfdd2-4942-41e1-a0a6-4b8530c80c10 1Gi RWO csi-rbd-sc 2m21s

www-web-1 Bound pvc-e8004134-214f-4f7d-aca6-d5b1bcacce9e 1Gi RWO csi-rbd-sc 21s

www-web-2 Bound pvc-64251eba-e649-4053-b023-0280a67dcf75 1Gi RWO csi-rbd-sc 9s

[root@node0 csi]# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pvc-3a0bfdd2-4942-41e1-a0a6-4b8530c80c10 1Gi RWO Delete Bound default/www-web-0 csi-rbd-sc 2m22s

pvc-64251eba-e649-4053-b023-0280a67dcf75 1Gi RWO Delete Bound default/www-web-2 csi-rbd-sc 10s

pvc-e8004134-214f-4f7d-aca6-d5b1bcacce9e 1Gi RWO Delete Bound default/www-web-1 csi-rbd-sc 22s

[root@node0 csi]# rbd -p kubernetes ls

csi-vol-37fd341d-30c3-4561-bf8d-064372ebb8e6

csi-vol-5264949a-ce95-47b0-bb5c-34346c14d756

csi-vol-95c8b3ac-01a4-4e8c-809d-9c6326e24eee

csi-vol-85647987-c660-4c68-b39d-97824baaf9cd

demo-1.img

rbd.img
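Images created by ceph-csi carry the `csi-vol-` prefix, while `demo-1.img` and `rbd.img` were created by hand. A minimal sketch that counts the dynamically provisioned ones in `rbd ls` output; four are expected at this point, one for `rbd-pvc` and three for the StatefulSet. `count_csi_images` is a hypothetical helper, and the `volumeAttributes.imageName` lookup in the comments is an assumption about where ceph-csi records the image name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: count RBD images provisioned by ceph-csi (name prefix
# "csi-vol-") in `rbd ls` output read from stdin.
count_csi_images() {
  grep -c '^csi-vol-'
}

# Usage on a Ceph admin node:
#   rbd -p kubernetes ls | count_csi_images
# To see which image backs a given PV (assumption: ceph-csi stores it in
# the PV's volumeAttributes.imageName):
#   kubectl get pv <pv-name> -o jsonpath='{.spec.csi.volumeAttributes.imageName}'
```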

Check the Pods

[root@node0 csi]# kubectl get pods

NAME READY STATUS RESTARTS AGE

web-0 1/1 Running 0 77s

web-1 1/1 Running 0 71s

web-2 1/1 Running 0 59s

[root@node0 csi]# kubectl exec -it web-0 -- df

Filesystem 1K-blocks Used Available Use% Mounted on

overlay 38770180 15245220 23524960 40% /

tmpfs 65536 0 65536 0% /dev

tmpfs 3995032 0 3995032 0% /sys/fs/cgroup

/dev/mapper/centos-root 38770180 15245220 23524960 40% /etc/hosts

shm 65536 0 65536 0% /dev/shm

/dev/rbd3 999320 2564 980372 1% /usr/share/nginx/html

......

[root@node0 csi]# kubectl exec -it web-1 -- df

Filesystem 1K-blocks Used Available Use% Mounted on

overlay 38770180 15245220 23524960 40% /

tmpfs 65536 0 65536 0% /dev

tmpfs 3995032 0 3995032 0% /sys/fs/cgroup

/dev/mapper/centos-root 38770180 15245220 23524960 40% /etc/hosts

shm 65536 0 65536 0% /dev/shm

/dev/rbd4 999320 2564 980372 1% /usr/share/nginx/html

......

[root@node0 csi]# kubectl exec -it web-2 -- df

Filesystem 1K-blocks Used Available Use% Mounted on

overlay 38770180 15245220 23524960 40% /

tmpfs 65536 0 65536 0% /dev

tmpfs 3995032 0 3995032 0% /sys/fs/cgroup

/dev/mapper/centos-root 38770180 15245220 23524960 40% /etc/hosts

shm 65536 0 65536 0% /dev/shm

/dev/rbd5 999320 2564 980372 1% /usr/share/nginx/html

......

浙公网安备 33010602011771号
