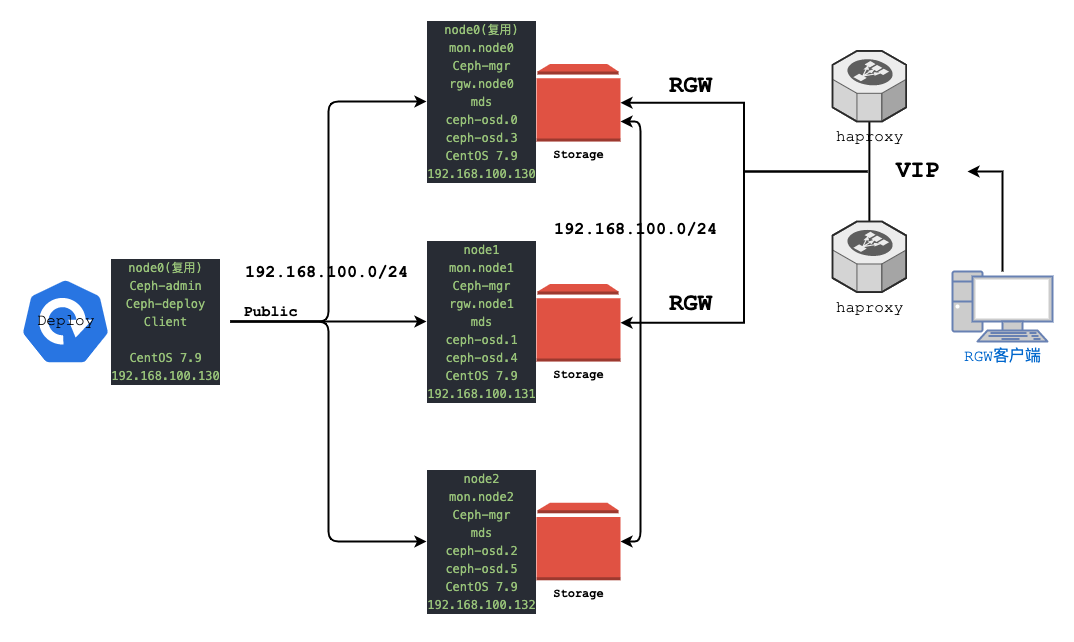

10 RGW high-availability cluster

Scaling out the RGW cluster

Both node0 and node1 need to run rgw.

The cluster currently has a single rgw instance, deployed on node0.

[root@node0 ceph-deploy]# ceph -s

cluster:

id: 97702c43-6cc2-4ef8-bdb5-855cfa90a260

health: HEALTH_OK

services:

mon: 3 daemons, quorum node0,node1,node2 (age 9d)

mgr: node1(active, since 12d), standbys: node2, node0

mds: cephfs-demo:1 {0=node1=up:active} 2 up:standby

osd: 6 osds: 6 up (since 5d), 6 in (since 12d)

rgw: 1 daemon active (node0) # only one node

task status:

data:

pools: 9 pools, 352 pgs

objects: 534 objects, 655 MiB

usage: 8.4 GiB used, 292 GiB / 300 GiB avail

pgs: 352 active+clean

Add node1 as an rgw node in the Ceph cluster

A newly added rgw node listens on the default port, 7480.

[root@node0 ceph-deploy]# ceph-deploy rgw create node1

[ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy rgw create node1

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] rgw : [('node1', 'rgw.node1')]

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] subcommand : create

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7f5bf4168ea8>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] func : <function rgw at 0x7f5bf49bb0c8>

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.rgw][DEBUG ] Deploying rgw, cluster ceph hosts node1:rgw.node1

[node1][DEBUG ] connected to host: node1

[node1][DEBUG ] detect platform information from remote host

[node1][DEBUG ] detect machine type

[ceph_deploy.rgw][INFO ] Distro info: CentOS Linux 7.9.2009 Core

[ceph_deploy.rgw][DEBUG ] remote host will use systemd

[ceph_deploy.rgw][DEBUG ] deploying rgw bootstrap to node1

[node1][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[node1][WARNIN] rgw keyring does not exist yet, creating one

[node1][DEBUG ] create a keyring file

[node1][DEBUG ] create path recursively if it doesn't exist

[node1][INFO ] Running command: ceph --cluster ceph --name client.bootstrap-rgw --keyring /var/lib/ceph/bootstrap-rgw/ceph.keyring auth get-or-create client.rgw.node1 osd allow rwx mon allow rw -o /var/lib/ceph/radosgw/ceph-rgw.node1/keyring

[node1][INFO ] Running command: systemctl enable ceph-radosgw@rgw.node1

[node1][WARNIN] Created symlink from /etc/systemd/system/ceph-radosgw.target.wants/ceph-radosgw@rgw.node1.service to /usr/lib/systemd/system/ceph-radosgw@.service.

[node1][INFO ] Running command: systemctl start ceph-radosgw@rgw.node1

[node1][INFO ] Running command: systemctl enable ceph.target

[ceph_deploy.rgw][INFO ] The Ceph Object Gateway (RGW) is now running on host node1 and default port 7480

- Test the rgw service on node1

[root@node0 ceph-deploy]# curl node1:7480

<?xml version="1.0" encoding="UTF-8"?>

<ListAllMyBucketsResult xmlns="http://s3.amazonaws.com/doc/2006-03-01/">

<Owner>

<ID>anonymous</ID>

<DisplayName></DisplayName>

</Owner>

<Buckets></Buckets>

</ListAllMyBucketsResult>

- Check the cluster status

[root@node0 ceph-deploy]# ceph -s

cluster:

id: 97702c43-6cc2-4ef8-bdb5-855cfa90a260

health: HEALTH_OK

services:

mon: 3 daemons, quorum node0,node1,node2 (age 9d)

mgr: node1(active, since 12d), standbys: node2, node0

mds: cephfs-demo:1 {0=node1=up:active} 2 up:standby

osd: 6 osds: 6 up (since 5d), 6 in (since 12d)

rgw: 2 daemons active (node0, node1) # node1 has joined the rgw service

task status:

data:

pools: 9 pools, 352 pgs

objects: 534 objects, 655 MiB

usage: 8.4 GiB used, 292 GiB / 300 GiB avail

pgs: 352 active+clean
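When scripting this check, the number of active gateways can be read from the `rgw:` line of `ceph -s`. The following is a minimal sketch, assuming the output format shown above; the function name `rgw_daemon_count` is hypothetical and not part of Ceph.

```shell
# Print the number of active RGW daemons found in `ceph -s` output on stdin.
# Assumes a line of the form "rgw: <N> daemon(s) active (...)"; prints 0 if
# no such line is present.
rgw_daemon_count() {
    awk '$1 == "rgw:" { print $2; found = 1 } END { if (!found) print 0 }'
}

# Usage on an admin node (needs the ceph CLI and an admin keyring):
#   ceph -s | rgw_daemon_count
```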

Switch the rgw service on node1 to port 80

- Edit the configuration

[root@node0 ceph-deploy]# cat ceph.conf

[global]

fsid = 97702c43-6cc2-4ef8-bdb5-855cfa90a260

public_network = 192.168.100.0/24

cluster_network = 192.168.100.0/24

mon_initial_members = node0

mon_host = 192.168.100.130

auth_cluster_required = cephx

auth_service_required = cephx

auth_client_required = cephx

mon_max_pg_per_osd=1000

mon_allow_pool_delete = true

[client.rgw.node0]

rgw_frontends = "civetweb port=80"

# new section for node1

[client.rgw.node1]

rgw_frontends = "civetweb port=80"

[osd]

osd crush update on start = false
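Each gateway needs its own `[client.rgw.<node>]` section, so with more nodes it can help to generate the stanzas instead of typing them. The following is a small sketch that prints a stanza in the same form as the ones above; the function name `rgw_frontend_section` is hypothetical, and the output still has to be merged into `ceph.conf` by hand.

```shell
# rgw_frontend_section NODE PORT
# Print a ceph.conf stanza that binds the civetweb frontend of the RGW
# instance on NODE to PORT.
rgw_frontend_section() {
    node=$1
    port=$2
    printf '[client.rgw.%s]\nrgw_frontends = "civetweb port=%s"\n' "$node" "$port"
}

# Usage: print stanzas for both gateways.
#   for n in node0 node1; do rgw_frontend_section "$n" 80; done
```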

- Push the configuration file to the Ceph cluster

[root@node0 ceph-deploy]# ceph-deploy --overwrite-conf config push node0 node1 node2

[ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy --overwrite-conf config push node0 node1 node2

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] overwrite_conf : True

[ceph_deploy.cli][INFO ] subcommand : push

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7fac506283b0>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] client : ['node0', 'node1', 'node2']

[ceph_deploy.cli][INFO ] func : <function config at 0x7fac50643c80>

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.config][DEBUG ] Pushing config to node0

[node0][DEBUG ] connected to host: node0

[node0][DEBUG ] detect platform information from remote host

[node0][DEBUG ] detect machine type

[node0][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[ceph_deploy.config][DEBUG ] Pushing config to node1

[node1][DEBUG ] connected to host: node1

[node1][DEBUG ] detect platform information from remote host

[node1][DEBUG ] detect machine type

[node1][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[ceph_deploy.config][DEBUG ] Pushing config to node2

[node2][DEBUG ] connected to host: node2

[node2][DEBUG ] detect platform information from remote host

[node2][DEBUG ] detect machine type

[node2][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

- Restart the radosgw service across the Ceph cluster

[root@node0 ceph-deploy]# ansible all -m shell -a "systemctl restart ceph-radosgw.target"

node2 | CHANGED | rc=0 >>

node1 | CHANGED | rc=0 >>

node0 | CHANGED | rc=0 >>

- Verify that the service port has changed

[root@node0 ceph-deploy]# curl node1:7480

curl: (7) Failed connect to node1:7480; Connection refused

[root@node0 ceph-deploy]# curl node1:80

<?xml version="1.0" encoding="UTF-8"?>

<ListAllMyBucketsResult xmlns="http://s3.amazonaws.com/doc/2006-03-01/">

<Owner>

<ID>anonymous</ID>

<DisplayName></DisplayName>

</Owner>

<Buckets></Buckets>

</ListAllMyBucketsResult>

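High availability: overview and preparation

- The cluster now has 2 radosgw nodes: node0 and node1.

- How can client requests to radosgw be load-balanced across them?

- We configure haproxy + keepalived: haproxy balances requests across the gateways, and keepalived provides a virtual IP (VIP) that fails over between the nodes.

Environment

Building the RGW high-availability cluster with haproxy + keepalived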

| Hostname | IP address | Port | Software | VIP + port |
|---|---|---|---|---|
| node0 | 192.168.100.130 | 81 | rgw + haproxy + keepalived | 192.168.100.100:80 (temporary virtual IP) |
| node1 | 192.168.100.131 | 81 | rgw + haproxy + keepalived | 192.168.100.100:80 (shared with node0) |

Change the radosgw port to 81 so that haproxy can use port 80

# Edit the configuration file

[root@node0 ceph-deploy]# cat ceph.conf

[global]

fsid = 97702c43-6cc2-4ef8-bdb5-855cfa90a260

public_network = 192.168.100.0/24

cluster_network = 192.168.100.0/24

mon_initial_members = node0

mon_host = 192.168.100.130

auth_cluster_required = cephx

auth_service_required = cephx

auth_client_required = cephx

mon_max_pg_per_osd=1000

mon_allow_pool_delete = true

[client.rgw.node0]

rgw_frontends = "civetweb port=81" # service port changed

[client.rgw.node1]

rgw_frontends = "civetweb port=81" # service port changed

[osd]

osd crush update on start = false

# Push the configuration file to the Ceph cluster

[root@node0 ceph-deploy]# ceph-deploy --overwrite-conf config push node0 node1 node2

[ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy --overwrite-conf config push node0 node1 node2

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] overwrite_conf : True

[ceph_deploy.cli][INFO ] subcommand : push

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7ff26c86e3b0>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] client : ['node0', 'node1', 'node2']

[ceph_deploy.cli][INFO ] func : <function config at 0x7ff26c889c80>

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.config][DEBUG ] Pushing config to node0

[node0][DEBUG ] connected to host: node0

[node0][DEBUG ] detect platform information from remote host

[node0][DEBUG ] detect machine type

[node0][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[ceph_deploy.config][DEBUG ] Pushing config to node1

[node1][DEBUG ] connected to host: node1

[node1][DEBUG ] detect platform information from remote host

[node1][DEBUG ] detect machine type

[node1][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[ceph_deploy.config][DEBUG ] Pushing config to node2

[node2][DEBUG ] connected to host: node2

[node2][DEBUG ] detect platform information from remote host

[node2][DEBUG ] detect machine type

[node2][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

Restart the radosgw service across the Ceph cluster

[root@node0 ceph-deploy]# ansible all -m shell -a "systemctl restart ceph-radosgw.target"

node2 | CHANGED | rc=0 >>

node1 | CHANGED | rc=0 >>

node0 | CHANGED | rc=0 >>

Verify that the service port has changed

# Check ports 80 and 81

[root@node0 ceph-deploy]# ss -tnlp | grep 80

[root@node0 ceph-deploy]# ss -tnlp | grep 81

LISTEN 0 128 *:81 *:* users:(("radosgw",pid=60280,fd=44))

# node0

[root@node0 ceph-deploy]# curl node0:80

curl: (7) Failed connect to node0:80; Connection refused

[root@node0 ceph-deploy]# curl node0:81

<?xml version="1.0" encoding="UTF-8"?>

<ListAllMyBucketsResult xmlns="http://s3.amazonaws.com/doc/2006-03-01/">

<Owner>

<ID>anonymous</ID>

<DisplayName></DisplayName>

</Owner>

<Buckets></Buckets>

</ListAllMyBucketsResult>

# node1

[root@node0 ceph-deploy]# curl node1:80

curl: (7) Failed connect to node1:80; Connection refused

[root@node0 ceph-deploy]# curl node1:81

<?xml version="1.0" encoding="UTF-8"?>

<ListAllMyBucketsResult xmlns="http://s3.amazonaws.com/doc/2006-03-01/">

<Owner>

<ID>anonymous</ID>

<DisplayName></DisplayName>

</Owner>

<Buckets></Buckets>

</ListAllMyBucketsResult>
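A plain `grep 80` on `ss` output matches any line that contains the characters `80`, including PIDs and other port numbers, so a stricter check compares the port column exactly. The following is a minimal sketch, assuming the `ss -tnlp` column layout shown above; the function name `listeners_on_port` is hypothetical.

```shell
# listeners_on_port PORT
# Read `ss -tnlp` output on stdin and print the name of each process that
# listens on exactly PORT (the part after the last ':' in the local address).
listeners_on_port() {
    awk -v port="$1" '
        $1 == "LISTEN" {
            n = split($4, parts, ":")
            if (parts[n] == port) {
                # Last column looks like users:(("radosgw",pid=...,fd=...))
                if (match($NF, /"[^"]+"/)) {
                    print substr($NF, RSTART + 1, RLENGTH - 2)
                } else {
                    print "unknown"
                }
            }
        }'
}

# Usage (run as root so that ss can show process names):
#   ss -tnlp | listeners_on_port 81
```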

Configuring keepalived for high availability

Installing keepalived

- Ansible hosts configuration

[root@node0 ceph-deploy]# cat /etc/ansible/hosts

......

[ceph]

node1

node2

[all]

node0

node1

node2

[rgw]

node0

node1

- Install keepalived

[root@node0 ceph-deploy]# ansible rgw -m shell -a "yum install keepalived -y"

Editing the configuration file

- Edit the keepalived configuration on node0

[root@node0 ceph-deploy]# cd /etc/keepalived/

[root@node0 keepalived]# ls -lh

total 4.0K

-rw-r--r-- 1 root root 3.6K Oct 1 2020 keepalived.conf

# Back up the configuration file

[root@node0 keepalived]# cp keepalived.conf{,.bak}

# Edit the configuration file

[root@node0 keepalived]# cat keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

acassen@firewall.loc

failover@firewall.loc

sysadmin@firewall.loc

}

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 192.168.200.1

smtp_connect_timeout 30

router_id LVS_DEVEL

vrrp_skip_check_adv_addr

#vrrp_strict

vrrp_garp_interval 0

vrrp_gna_interval 0

}

vrrp_script chk_haproxy {

script "killall -0 haproxy"

interval 1

weight -20

}

vrrp_instance RGW {

state MASTER

interface ens33

virtual_router_id 51

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.100.100/24

}

track_script {

chk_haproxy

}

}

- Copy the configuration to node1

[root@node0 keepalived]# scp ./keepalived.conf node1:/etc/keepalived/

keepalived.conf

- Adjust the configuration on node1

# Connect to node1

[root@node0 keepalived]# ssh node1

Last login: Thu Nov 3 14:40:47 2022 from node0

[root@node1 ~]# cd /etc/keepalived/

# Edit the configuration file

[root@node1 keepalived]# cat keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

acassen@firewall.loc

failover@firewall.loc

sysadmin@firewall.loc

}

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 192.168.200.1

smtp_connect_timeout 30

router_id LVS_DEVEL

vrrp_skip_check_adv_addr

#vrrp_strict

vrrp_garp_interval 0

vrrp_gna_interval 0

}

vrrp_script chk_haproxy {

script "killall -0 haproxy"

interval 1

weight -20

}

vrrp_instance RGW {

state BACKUP # role changed

interface ens33

virtual_router_id 51

priority 90 # priority lowered

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.100.100/24

}

track_script {

chk_haproxy

}

}

Starting the service

# Leave node1

[root@node1 keepalived]# exit

# Start the keepalived service

[root@node0 keepalived]# ansible rgw -m shell -a "systemctl enable keepalived --now"

node1 | CHANGED | rc=0 >>

Created symlink from /etc/systemd/system/multi-user.target.wants/keepalived.service to /usr/lib/systemd/system/keepalived.service.

node0 | CHANGED | rc=0 >>

Created symlink from /etc/systemd/system/multi-user.target.wants/keepalived.service to /usr/lib/systemd/system/keepalived.service.

# Check that keepalived is running

[root@node0 keepalived]# ansible rgw -m shell -a "systemctl status keepalived"

node1 | CHANGED | rc=0 >>

● keepalived.service - LVS and VRRP High Availability Monitor

Loaded: loaded (/usr/lib/systemd/system/keepalived.service; enabled; vendor preset: disabled)

Active: active (running) since Thu 2022-11-03 14:53:03 CST; 2s ago

Process: 43266 ExecStart=/usr/sbin/keepalived $KEEPALIVED_OPTIONS (code=exited, status=0/SUCCESS)

Main PID: 43267 (keepalived)

CGroup: /system.slice/keepalived.service

├─43267 /usr/sbin/keepalived -D

├─43268 /usr/sbin/keepalived -D

└─43269 /usr/sbin/keepalived -D

Nov 03 14:53:03 node1 Keepalived_vrrp[43269]: SECURITY VIOLATION - scripts are being executed but script_security not enabled.

Nov 03 14:53:03 node1 Keepalived_vrrp[43269]: VRRP_Instance(RGW) removing protocol VIPs.

Nov 03 14:53:03 node1 Keepalived_vrrp[43269]: VRRP_Instance(RGW) removing protocol iptable drop rule

Nov 03 14:53:03 node1 Keepalived_vrrp[43269]: Using LinkWatch kernel netlink reflector...

Nov 03 14:53:03 node1 Keepalived_vrrp[43269]: VRRP_Instance(RGW) Entering BACKUP STATE

Nov 03 14:53:03 node1 Keepalived_vrrp[43269]: VRRP sockpool: [ifindex(2), proto(112), unicast(0), fd(10,11)]

Nov 03 14:53:03 node1 Keepalived_vrrp[43269]: /usr/bin/killall -0 haproxy exited with status 1

Nov 03 14:53:04 node1 Keepalived_vrrp[43269]: VRRP_Instance(RGW) Changing effective priority from 90 to 70

Nov 03 14:53:04 node1 Keepalived_vrrp[43269]: /usr/bin/killall -0 haproxy exited with status 1

Nov 03 14:53:05 node1 Keepalived_vrrp[43269]: /usr/bin/killall -0 haproxy exited with status 1

node0 | CHANGED | rc=0 >>

● keepalived.service - LVS and VRRP High Availability Monitor

Loaded: loaded (/usr/lib/systemd/system/keepalived.service; enabled; vendor preset: disabled)

Active: active (running) since Thu 2022-11-03 14:53:03 CST; 2s ago

Process: 52430 ExecStart=/usr/sbin/keepalived $KEEPALIVED_OPTIONS (code=exited, status=0/SUCCESS)

Main PID: 52431 (keepalived)

CGroup: /system.slice/keepalived.service

├─52431 /usr/sbin/keepalived -D

├─52432 /usr/sbin/keepalived -D

└─52433 /usr/sbin/keepalived -D

Nov 03 14:53:03 node0 Keepalived_vrrp[52433]: SECURITY VIOLATION - scripts are being executed but script_security not enabled.

Nov 03 14:53:03 node0 Keepalived_vrrp[52433]: VRRP_Instance(RGW) removing protocol VIPs.

Nov 03 14:53:03 node0 Keepalived_vrrp[52433]: VRRP_Instance(RGW) removing protocol iptable drop rule

Nov 03 14:53:03 node0 Keepalived_vrrp[52433]: Using LinkWatch kernel netlink reflector...

Nov 03 14:53:03 node0 Keepalived_vrrp[52433]: VRRP_Instance(RGW) Entering BACKUP STATE

Nov 03 14:53:03 node0 Keepalived_vrrp[52433]: VRRP sockpool: [ifindex(2), proto(112), unicast(0), fd(10,11)]

Nov 03 14:53:03 node0 Keepalived_vrrp[52433]: /usr/bin/killall -0 haproxy exited with status 1

Nov 03 14:53:04 node0 Keepalived_vrrp[52433]: VRRP_Instance(RGW) Changing effective priority from 100 to 80

Nov 03 14:53:04 node0 Keepalived_vrrp[52433]: /usr/bin/killall -0 haproxy exited with status 1

Nov 03 14:53:05 node0 Keepalived_vrrp[52433]: /usr/bin/killall -0 haproxy exited with status 1
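The "Changing effective priority" lines in this log follow from `weight -20` in the `vrrp_script` block: with a negative weight, keepalived lowers the instance priority by that amount while the tracked script fails. haproxy is not installed yet, so both nodes drop by 20 (100 to 80 on node0, 90 to 70 on node1) and node0 still has the higher priority. The sketch below reproduces that arithmetic for a single tracked script; the function name `effective_priority` is hypothetical.

```shell
# effective_priority BASE WEIGHT CHECK_STATUS
# BASE is the configured priority, WEIGHT the vrrp_script weight, and
# CHECK_STATUS the exit status of the tracked script (0 = healthy).
# A negative weight is applied while the check fails; a positive weight is
# applied while the check succeeds.
effective_priority() {
    base=$1
    weight=$2
    status=$3
    if [ "$weight" -lt 0 ] && [ "$status" -ne 0 ]; then
        echo $((base + weight))
    elif [ "$weight" -gt 0 ] && [ "$status" -eq 0 ]; then
        echo $((base + weight))
    else
        echo "$base"
    fi
}

# Usage: node0 with haproxy down, node1 with haproxy up.
#   effective_priority 100 -20 1
#   effective_priority 90 -20 0
```

With haproxy stopped only on node0, node0 falls to 80 while node1 stays at 90, so node1 takes over the VIP.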

Checking the IP addresses

[root@node0 keepalived]# ip a l

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:81:75:65 brd ff:ff:ff:ff:ff:ff

inet 192.168.100.130/24 brd 192.168.100.255 scope global noprefixroute dynamic ens33

valid_lft 1147sec preferred_lft 1147sec

inet 192.168.100.100/24 scope global secondary ens33 # a new IP address (the VIP) is now bound

valid_lft forever preferred_lft forever

inet6 fe80::ea04:47f0:b11e:9e2/64 scope link tentative noprefixroute dadfailed

valid_lft forever preferred_lft forever

inet6 fe80::cad3:6b55:3459:c179/64 scope link noprefixroute

valid_lft forever preferred_lft forever

Configuring haproxy load balancing

Installing haproxy

[root@node0 ~]# ansible rgw -m shell -a "yum install -y haproxy"

Editing the configuration file

[root@node0 ceph-deploy]# cat /etc/haproxy/haproxy.cfg

global

log 127.0.0.1 local2

chroot /var/lib/haproxy

pidfile /var/run/haproxy.pid

maxconn 4000

user haproxy

group haproxy

daemon

stats socket /var/lib/haproxy/stats

defaults

mode http

log global

option httplog

option dontlognull

option http-server-close

option forwardfor except 127.0.0.0/8

option redispatch

retries 3

timeout http-request 10s

timeout queue 1m

timeout connect 10s

timeout client 1m

timeout server 1m

timeout http-keep-alive 10s

timeout check 10s

maxconn 3000

frontend http_web *:80

mode http

default_backend rgw

backend rgw

balance roundrobin

mode http

server node0 192.168.100.130:81 check

server node1 192.168.100.131:81 check
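If more gateways are added later, the `backend rgw` section needs one `server` line per node. The following is a small sketch that prints such a section from a list of name=IP pairs; the function name `render_backend` is hypothetical, and the output mirrors the backend above and is meant to be pasted into `haproxy.cfg`.

```shell
# render_backend PORT NAME=IP [NAME=IP ...]
# Print a round-robin "backend rgw" section with one health-checked server
# line per NAME=IP pair, all on the same PORT.
render_backend() {
    port=$1
    shift
    printf 'backend rgw\n'
    printf '    balance roundrobin\n'
    printf '    mode http\n'
    for pair in "$@"; do
        name=${pair%%=*}   # text before the first '='
        ip=${pair#*=}      # text after the first '='
        printf '    server %s %s:%s check\n' "$name" "$ip" "$port"
    done
}

# Usage:
#   render_backend 81 node0=192.168.100.130 node1=192.168.100.131
```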

Copy the configuration file to node1

[root@node0 ceph-deploy]# scp /etc/haproxy/haproxy.cfg node1:/etc/haproxy/

haproxy.cfg

Starting the haproxy service

# Start the service

[root@node0 ceph-deploy]# ansible rgw -m shell -a "systemctl enable haproxy --now"

node1 | CHANGED | rc=0 >>

Created symlink from /etc/systemd/system/multi-user.target.wants/haproxy.service to /usr/lib/systemd/system/haproxy.service.

node0 | CHANGED | rc=0 >>

Created symlink from /etc/systemd/system/multi-user.target.wants/haproxy.service to /usr/lib/systemd/system/haproxy.service.

# Check that the service is running

[root@node0 ceph-deploy]# ansible rgw -m shell -a "systemctl status haproxy"

node1 | CHANGED | rc=0 >>

● haproxy.service - HAProxy Load Balancer

Loaded: loaded (/usr/lib/systemd/system/haproxy.service; enabled; vendor preset: disabled)

Active: active (running) since Thu 2022-11-03 15:58:25 CST; 6s ago

Main PID: 52429 (haproxy-systemd)

CGroup: /system.slice/haproxy.service

├─52429 /usr/sbin/haproxy-systemd-wrapper -f /etc/haproxy/haproxy.cfg -p /run/haproxy.pid

├─52430 /usr/sbin/haproxy -f /etc/haproxy/haproxy.cfg -p /run/haproxy.pid -Ds

└─52431 /usr/sbin/haproxy -f /etc/haproxy/haproxy.cfg -p /run/haproxy.pid -Ds

Nov 03 15:58:25 node1 systemd[1]: Started HAProxy Load Balancer.

Nov 03 15:58:25 node1 haproxy-systemd-wrapper[52429]: haproxy-systemd-wrapper: executing /usr/sbin/haproxy -f /etc/haproxy/haproxy.cfg -p /run/haproxy.pid -Ds

node0 | CHANGED | rc=0 >>

● haproxy.service - HAProxy Load Balancer

Loaded: loaded (/usr/lib/systemd/system/haproxy.service; enabled; vendor preset: disabled)

Active: active (running) since Thu 2022-11-03 15:58:25 CST; 6s ago

Main PID: 61968 (haproxy-systemd)

CGroup: /system.slice/haproxy.service

├─61968 /usr/sbin/haproxy-systemd-wrapper -f /etc/haproxy/haproxy.cfg -p /run/haproxy.pid

├─61969 /usr/sbin/haproxy -f /etc/haproxy/haproxy.cfg -p /run/haproxy.pid -Ds

└─61970 /usr/sbin/haproxy -f /etc/haproxy/haproxy.cfg -p /run/haproxy.pid -Ds

Nov 03 15:58:25 node0 systemd[1]: Started HAProxy Load Balancer.

Nov 03 15:58:25 node0 haproxy-systemd-wrapper[61968]: haproxy-systemd-wrapper: executing /usr/sbin/haproxy -f /etc/haproxy/haproxy.cfg -p /run/haproxy.pid -Ds

Checking the rgw service

# Check the listening port

[root@node0 ceph-deploy]# ss -tnpl | grep *:80

LISTEN 0 128 *:80 *:* users:(("haproxy",pid=62425,fd=5))

# radosgw through haproxy on node0

[root@node0 ceph-deploy]# curl node0:80

<?xml version="1.0" encoding="UTF-8"?>

<ListAllMyBucketsResult xmlns="http://s3.amazonaws.com/doc/2006-03-01/">

<Owner>

<ID>anonymous</ID>

<DisplayName></DisplayName>

</Owner>

<Buckets></Buckets>

</ListAllMyBucketsResult>

# radosgw through haproxy on node1

[root@node0 ceph-deploy]# curl node1:80

<?xml version="1.0" encoding="UTF-8"?>

<ListAllMyBucketsResult xmlns="http://s3.amazonaws.com/doc/2006-03-01/">

<Owner>

<ID>anonymous</ID>

<DisplayName></DisplayName>

</Owner>

<Buckets></Buckets>

</ListAllMyBucketsResult>

Repointing the clients

s3 client configuration

# Edit the s3cfg configuration file

[root@node0 ceph-deploy]# vim /root/.s3cfg

......

#host_base = 192.168.100.130

#host_bucket = 192.168.100.130:80/%(bucket)s

# both values now point at the keepalived VIP

host_base = 192.168.100.100

host_bucket = 192.168.100.100:80/%(bucket)s

......

# List the buckets

[root@node0 ceph-deploy]# s3cmd ls

2022-10-21 01:39 s3://ceph-s3-bucket

2022-10-21 03:16 s3://s3cmd-demo

2022-10-21 06:46 s3://swift-demo

# Create a bucket to test that writes work

[root@node0 ceph-deploy]# s3cmd mb s3://test-1

Bucket 's3://test-1/' created

[root@node0 ceph-deploy]# s3cmd ls

2022-10-21 01:39 s3://ceph-s3-bucket

2022-10-21 03:16 s3://s3cmd-demo

2022-10-21 06:46 s3://swift-demo

2022-11-03 08:36 s3://test-1
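The same change to `.s3cfg` can be scripted when several client hosts need to be repointed. The following is a minimal sketch, assuming the file contains uncommented `host_base` and `host_bucket` lines as above; the function name `point_s3cfg_at` is hypothetical.

```shell
# point_s3cfg_at HOST PORT FILE
# Rewrite the active host_base and host_bucket lines in FILE so that they
# point at HOST (and HOST:PORT for the bucket template). Commented-out lines
# are left alone. The file is rewritten in place with cat so that its
# permissions are preserved (it holds access keys).
point_s3cfg_at() {
    host=$1
    port=$2
    cfg=$3
    tmp=$(mktemp) || return 1
    if sed -e "s|^host_base *=.*|host_base = ${host}|" \
           -e "s|^host_bucket *=.*|host_bucket = ${host}:${port}/%(bucket)s|" \
           "$cfg" > "$tmp"; then
        cat "$tmp" > "$cfg"
        rc=$?
    else
        rc=1
    fi
    rm -f "$tmp"
    return "$rc"
}

# Usage:
#   point_s3cfg_at 192.168.100.100 80 /root/.s3cfg
```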

swift client configuration

[root@node0 ceph-deploy]# cat swift_source.sh

# export ST_AUTH=http://192.168.100.130:80/auth

export ST_AUTH=http://192.168.100.100:80/auth # changed to the keepalived VIP

export ST_USER=ceph-s3-user:swift

export ST_KEY=Gk1Br59ysIOh5tnwBQVqDMAHlspQCvHYixoz4Erz

# List the buckets

[root@node0 ceph-deploy]# source swift_source.sh

[root@node0 ceph-deploy]# swift list

ceph-s3-bucket

s3cmd-demo

swift-demo

test-1

# Create a bucket to test that writes work

[root@node0 ceph-deploy]# swift post test-2

[root@node0 ceph-deploy]# swift list

ceph-s3-bucket

s3cmd-demo

swift-demo

test-1

test-2

Deleting the test buckets

[root@node0 ceph-deploy]# s3cmd rb s3://test-1

Bucket 's3://test-1/' removed

[root@node0 ceph-deploy]# s3cmd ls

2022-10-21 01:39 s3://ceph-s3-bucket

2022-10-21 03:16 s3://s3cmd-demo

2022-10-21 06:46 s3://swift-demo

2022-11-03 08:39 s3://test-2

[root@node0 ceph-deploy]# swift delete test-2

test-2

[root@node0 ceph-deploy]# swift list

ceph-s3-bucket

s3cmd-demo

swift-demo

Testing the RGW high-availability cluster

Check where the keepalived VIP is currently bound

By default, the keepalived VIP is bound on node0.

[root@node0 ceph-deploy]# ip a l

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:81:75:65 brd ff:ff:ff:ff:ff:ff

inet 192.168.100.130/24 brd 192.168.100.255 scope global noprefixroute dynamic ens33

valid_lft 1645sec preferred_lft 1645sec

inet 192.168.100.100/24 scope global secondary ens33 # keepalived IP

valid_lft forever preferred_lft forever

inet6 fe80::ea04:47f0:b11e:9e2/64 scope link tentative noprefixroute dadfailed

valid_lft forever preferred_lft forever

inet6 fe80::cad3:6b55:3459:c179/64 scope link noprefixroute

valid_lft forever preferred_lft forever

Stop the haproxy service

[root@node0 ceph-deploy]# systemctl stop haproxy

Check the VIP failover

[root@node0 ceph-deploy]# ssh node1

Last login: Thu Nov 3 16:21:47 2022 from node0

# The keepalived VIP has moved to node1

[root@node1 ~]# ip a l

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:ce:d5:dc brd ff:ff:ff:ff:ff:ff

inet 192.168.100.131/24 brd 192.168.100.255 scope global noprefixroute dynamic ens33

valid_lft 1729sec preferred_lft 1729sec

inet 192.168.100.100/24 scope global secondary ens33 # keepalived IP

valid_lft forever preferred_lft forever

inet6 fe80::ea04:47f0:b11e:9e2/64 scope link tentative noprefixroute dadfailed

valid_lft forever preferred_lft forever

inet6 fe80::cad3:6b55:3459:c179/64 scope link tentative noprefixroute dadfailed

valid_lft forever preferred_lft forever

inet6 fe80::7dd2:fcda:997a:42ec/64 scope link tentative noprefixroute dadfailed

valid_lft forever preferred_lft forever

[root@node1 ~]# exit

logout

Connection to node1 closed.
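Reading the full `ip a l` output on each node is error-prone when repeating this test. The following is a minimal sketch of a helper that reports whether a given address is bound, assuming the `inet <address>/<prefix>` format shown above; the function name `has_vip` is hypothetical.

```shell
# has_vip ADDRESS
# Read `ip addr` output on stdin and succeed (exit status 0) if ADDRESS is
# bound as an IPv4 address. The match is a fixed string that includes the
# trailing '/', so 192.168.100.10 does not match 192.168.100.100.
has_vip() {
    grep -qF "inet $1/"
}

# Usage: report which gateway currently holds the VIP.
#   for n in node0 node1; do
#       ssh "$n" ip -4 addr show ens33 | has_vip 192.168.100.100 \
#           && echo "VIP is on $n"
#   done
```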

Test client access

# Check that the VIP responds to ping

[root@node0 ceph-deploy]# ping 192.168.100.100

PING 192.168.100.100 (192.168.100.100) 56(84) bytes of data.

64 bytes from 192.168.100.100: icmp_seq=1 ttl=64 time=0.285 ms

64 bytes from 192.168.100.100: icmp_seq=2 ttl=64 time=0.499 ms

^C

--- 192.168.100.100 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1001ms

rtt min/avg/max/mdev = 0.285/0.392/0.499/0.107 ms

# Client access

[root@node0 ceph-deploy]# s3cmd ls

2022-10-21 01:39 s3://ceph-s3-bucket

2022-10-21 03:16 s3://s3cmd-demo

2022-10-21 06:46 s3://swift-demo

[root@node0 ceph-deploy]# swift list

ceph-s3-bucket

s3cmd-demo

swift-demo

Restore the haproxy service

[root@node0 ceph-deploy]# systemctl start haproxy

# Check the keepalived VIP again: it is back on node0

[root@node0 ceph-deploy]# ip a l

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:81:75:65 brd ff:ff:ff:ff:ff:ff

inet 192.168.100.130/24 brd 192.168.100.255 scope global noprefixroute dynamic ens33

valid_lft 1467sec preferred_lft 1467sec

inet 192.168.100.100/24 scope global secondary ens33

valid_lft forever preferred_lft forever

inet6 fe80::ea04:47f0:b11e:9e2/64 scope link tentative noprefixroute dadfailed

valid_lft forever preferred_lft forever

inet6 fe80::cad3:6b55:3459:c179/64 scope link noprefixroute

valid_lft forever preferred_lft forever

# Test client access

[root@node0 ceph-deploy]# swift list

ceph-s3-bucket

s3cmd-demo

swift-demo

[root@node0 ceph-deploy]# ls

ceph.bootstrap-mds.keyring ceph.bootstrap-osd.keyring ceph.client.admin.keyring ceph.conf.bak ceph.mon.keyring get-pip.py s3client.py

ceph.bootstrap-mgr.keyring ceph.bootstrap-rgw.keyring ceph.conf ceph-deploy-ceph.log crushmap rdb swift_source.sh

[root@node0 ceph-deploy]# s3cmd ls

2022-10-21 01:39 s3://ceph-s3-bucket

2022-10-21 03:16 s3://s3cmd-demo

2022-10-21 06:46 s3://swift-demo
