02 Ceph Installation and Deployment

Ceph Installation Manual

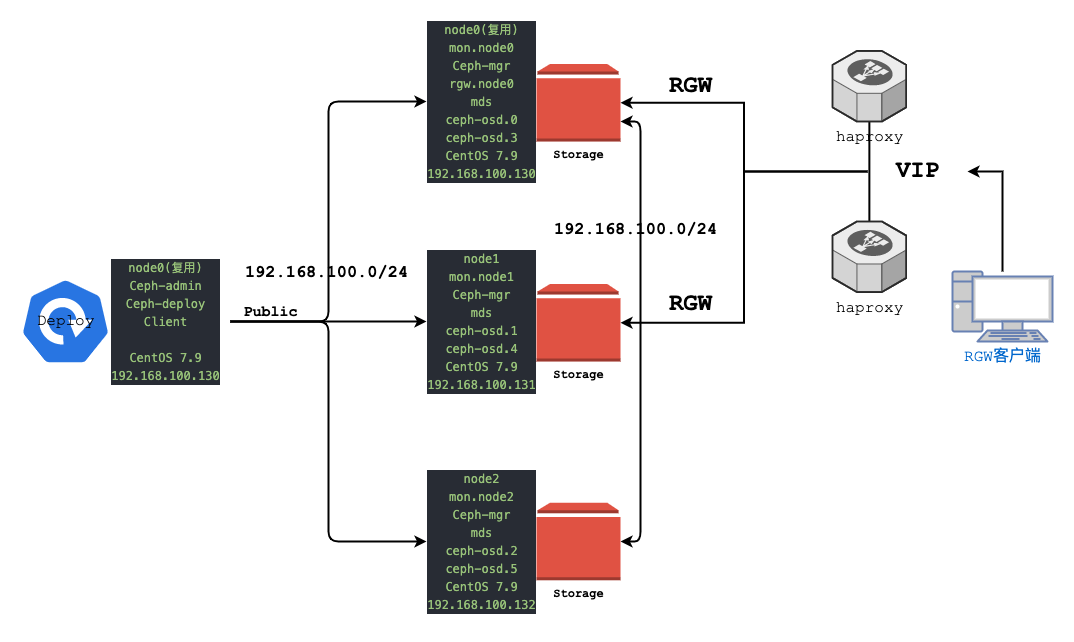

Deployment architecture diagram

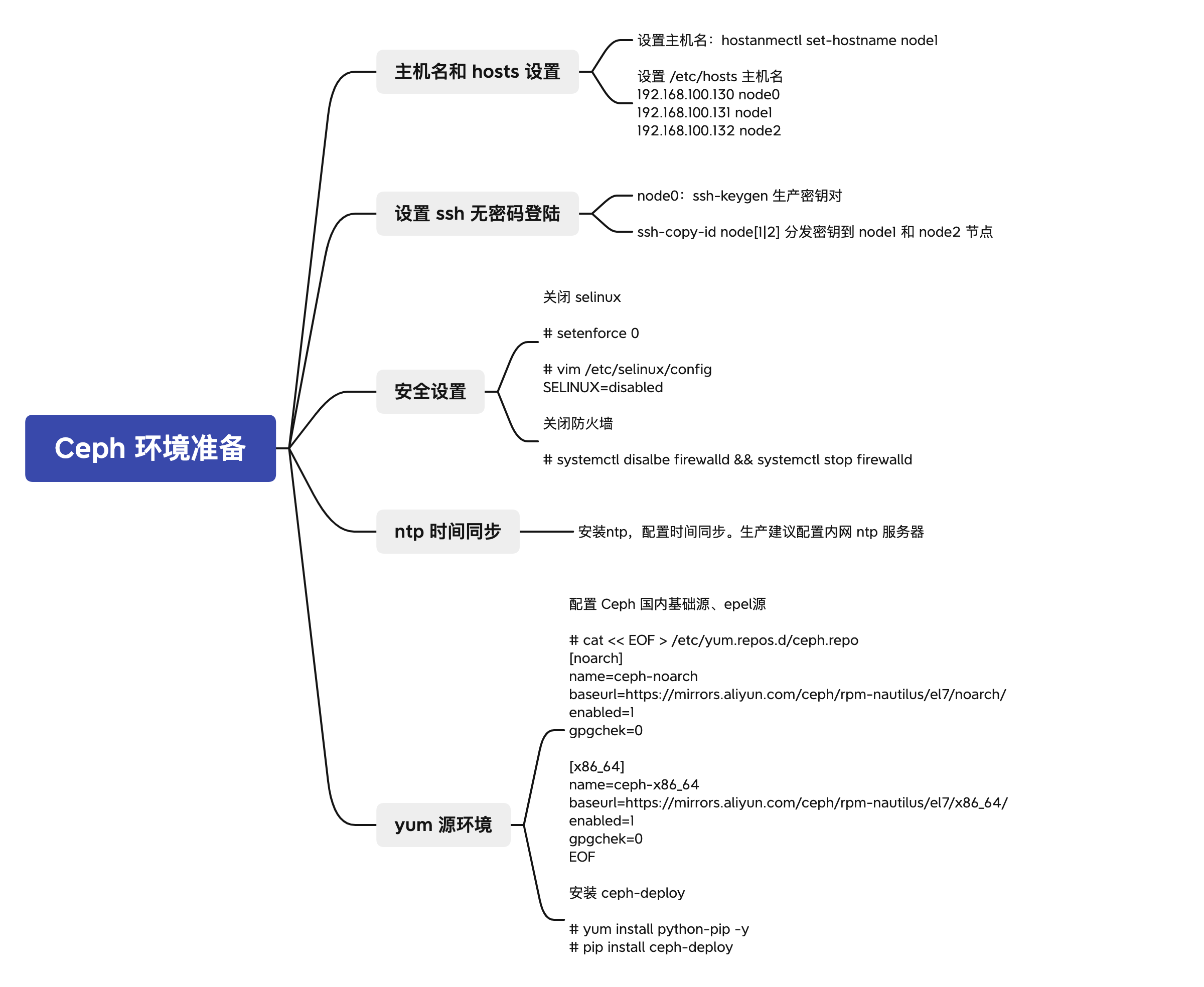

Preparing the Ceph base environment

Note: except for the step "Set up passwordless SSH login", all of the following steps must be run on every node.

Hostname and hosts configuration

Set the hostname

$ hostnamectl set-hostname node0    # run on each node with its own name: node0, node1 or node2

Configure /etc/hosts

$ vim /etc/hosts

192.168.100.130 node0

192.168.100.131 node1

192.168.100.132 node2
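Editing /etc/hosts by hand on three nodes is easy to get wrong, and re-running a plain `echo >>` duplicates lines. A minimal sketch that appends an entry only when that IP/name pair is missing, so it can be re-run safely; `ensure_hosts_entry` is a hypothetical helper, and the file is a parameter so it can be tried on a scratch copy first.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append "IP HOSTNAME" to a hosts file unless a line
# already maps that IP (column 1) to that name (any later column).
# The file defaults to /etc/hosts.
ensure_hosts_entry() {
    local ip=$1 name=$2 file=${3:-/etc/hosts}
    # awk exits 0 only when a matching mapping was found.
    if ! awk -v ip="$ip" -v name="$name" '
        $1 == ip { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }
        END { exit !found }' "$file"; then
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
}

# Usage on each node, with the addresses listed above:
# ensure_hosts_entry 192.168.100.130 node0
# ensure_hosts_entry 192.168.100.131 node1
# ensure_hosts_entry 192.168.100.132 node2
```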

Set up passwordless SSH login

This step only needs to be run on node0.

# Generate a key pair

[root@node0 ~]$ ssh-keygen

# Distribute the public key

[root@node0 ~]$ ssh-copy-id node1

[root@node0 ~]$ ssh-copy-id node2
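To confirm the keys were distributed, each host can be probed with `ssh -o BatchMode=yes`, which fails instead of prompting for a password. A minimal sketch; `check_passwordless_ssh` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report whether key-based SSH works for each host.
# BatchMode=yes makes ssh fail rather than ask for a password;
# ConnectTimeout keeps an unreachable host from hanging the check.
check_passwordless_ssh() {
    local host rc=0
    for host in "$@"; do
        if ssh -o BatchMode=yes -o ConnectTimeout=5 "$host" true; then
            echo "$host: OK"
        else
            echo "$host: FAILED"
            rc=1
        fi
    done
    return "$rc"
}

# Usage on node0:
# check_passwordless_ssh node1 node2
```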

Security settings

Disable SELinux

$ vim /etc/selinux/config

# This file controls the state of SELinux on the system.

# SELINUX= can take one of these three values:

# enforcing - SELinux security policy is enforced.

# permissive - SELinux prints warnings instead of enforcing.

# disabled - No SELinux policy is loaded.

SELINUX=disabled

$ setenforce 0
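The config edit can also be made without an editor, which helps when scripting all three nodes. Note that `setenforce 0` only switches the running system to permissive mode until the next reboot; the `SELINUX=disabled` line in the config file is what applies after a reboot. A minimal sketch; `disable_selinux_config` is a hypothetical helper and the config path is a parameter.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set SELINUX=disabled in the given config file
# (default /etc/selinux/config). Only the active "SELINUX=" line is
# rewritten; comment lines and SELINUXTYPE= are left untouched.
disable_selinux_config() {
    local conf=${1:-/etc/selinux/config}
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$conf"
}

# Usage on each node:
# disable_selinux_config
# setenforce 0   # permissive now; "disabled" takes effect after reboot
```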

Disable the firewall

$ systemctl disable firewalld && systemctl stop firewalld
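Before moving on, both security settings can be verified on each node. A minimal sketch using `getenforce` and `systemctl is-active`; `check_security_settings` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical check: print a line for each setting that is still in the
# way (SELinux enforcing, firewalld running) and return non-zero if any.
check_security_settings() {
    local rc=0
    if [ "$(getenforce 2>/dev/null)" = "Enforcing" ]; then
        echo "SELinux: still Enforcing"; rc=1
    fi
    if systemctl is-active --quiet firewalld; then
        echo "firewalld: still active"; rc=1
    fi
    return "$rc"
}

# Usage on each node:
# check_security_settings && echo "security settings OK"
```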

NTP time synchronization

Install the NTP packages

$ yum -y install ntp ntpdate ntp-doc

Configure time synchronization


a | On node0

# Start the service directly (in production, point it at your company's internal NTP server instead)

$ systemctl enable ntpd --now

$ systemctl status ntpd

# Check the synchronization status

$ ntpq -pn

remote refid st t when poll reach delay offset jitter

==============================================================================

-193.182.111.141 192.36.143.153 2 u 1644 1024 62 310.598 25.603 21.193

+162.159.200.123 10.115.8.6 3 u 1041 1024 377 270.740 -6.318 5.122

+185.209.85.222 194.190.168.1 2 u 595 1024 377 191.147 -29.251 6.177

*36.110.235.196 10.218.108.2 2 u 1017 1024 377 35.870 3.234 2.771
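In `ntpq -pn` output the `*` prefix marks the peer ntpd has actually selected as its synchronization source (`+` marks candidates, `-` outliers). When scripting the check, that line can be extracted; a minimal sketch with a hypothetical function name:

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `ntpq -pn` output on stdin and print the
# address of the selected peer (the line starting with '*').
# Exits non-zero if no peer has been selected yet.
ntp_sync_peer() {
    awk '/^\*/ { print substr($1, 2); found = 1 } END { exit !found }'
}

# Usage:
# ntpq -pn | ntp_sync_peer || echo "ntpd has not selected a peer yet"
```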

b | On node1 and node2

# Change the time source to node0's IP address

$ vim /etc/ntp.conf

......

# Use public servers from the pool.ntp.org project.

# Please consider joining the pool (http://www.pool.ntp.org/join.html).

#server 0.centos.pool.ntp.org iburst

#server 1.centos.pool.ntp.org iburst

#server 2.centos.pool.ntp.org iburst

#server 3.centos.pool.ntp.org iburst

server 192.168.100.130 iburst

......
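The same edit can be applied non-interactively on node1 and node2. A minimal sketch; `point_ntp_to` is a hypothetical helper, and it assumes the stock `server N.centos.pool.ntp.org iburst` lines shown above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: comment out the default pool.ntp.org servers and
# add a single upstream server. Safe to re-run: already commented lines
# are not touched again and the new server line is only appended once.
point_ntp_to() {
    local server=$1 conf=${2:-/etc/ntp.conf}
    sed -i '/^server .*pool\.ntp\.org/s/^/#/' "$conf"
    grep -q "^server ${server} iburst" "$conf" || echo "server ${server} iburst" >> "$conf"
}

# Usage on node1 and node2:
# point_ntp_to 192.168.100.130
```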

# Start the service

$ systemctl enable ntpd --now

$ systemctl status ntpd

# Check the synchronization status

$ ntpq -pn

remote refid st t when poll reach delay offset jitter

==============================================================================

*192.168.100.130 36.110.235.196 3 u 461 1024 377 0.334 1.744 1.644
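Ceph monitors report clock skew when their clocks drift apart by more than `mon_clock_drift_allowed` (0.05 s by default), so it is worth checking the offset, not only that node0 is selected. A minimal sketch that reads the `offset` column (the 9th field, in milliseconds) of the selected peer; the function name and the 50 ms default threshold are assumptions chosen to match that setting.

```shell
#!/usr/bin/env bash
# Hypothetical check: read `ntpq -pn` output on stdin and succeed only if a
# peer is selected ('*') and the absolute value of its offset (9th column,
# milliseconds) is within the limit given as $1 (default 50 ms).
ntp_offset_ok() {
    awk -v max="${1:-50}" '
        /^\*/ { off = $9 + 0; if (off < 0) off = -off; found = 1; ok = (off <= max + 0) }
        END { exit !(found && ok) }'
}

# Usage on node1/node2:
# ntpq -pn | ntp_offset_ok && echo "clock in sync with node0"
```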

Yum repository setup

Configure the Ceph base repository (a domestic mirror in China) and the EPEL repository

# Configure the repository

$ cat << EOF > /etc/yum.repos.d/ceph.repo

[noarch]

name=ceph-noarch

baseurl=https://mirrors.aliyun.com/ceph/rpm-nautilus/el7/noarch/

enabled=1

gpgcheck=0

[x86_64]

name=ceph-x86_64

baseurl=https://mirrors.aliyun.com/ceph/rpm-nautilus/el7/x86_64/

enabled=1

gpgcheck=0

EOF
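If the same repository file is needed for another Ceph release, the heredoc can be parameterized. A minimal sketch; `write_ceph_repo` is hypothetical, and it assumes other releases follow the same `rpm-<release>/el7/<arch>/` path layout on the mirror, which is worth confirming in a browser first.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a yum repo file for the given Ceph release
# (default nautilus) on EL7, with one section per architecture, matching
# the hand-written file (section names, enabled=1, gpgcheck=0).
write_ceph_repo() {
    local release=${1:-nautilus} out=${2:-/etc/yum.repos.d/ceph.repo} arch
    : > "$out"   # truncate / create the output file
    for arch in noarch x86_64; do
        cat >> "$out" <<EOF
[${arch}]
name=ceph-${arch}
baseurl=https://mirrors.aliyun.com/ceph/rpm-${release}/el7/${arch}/
enabled=1
gpgcheck=0

EOF
    done
}

# Usage:
# write_ceph_repo nautilus && yum clean all && yum makecache
```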

# Refresh the repository metadata

$ yum clean all

$ yum makecache

$ yum repolist

Ceph installation

Unless otherwise noted, the following steps only need to be run on node0.

Install ceph-deploy

# Install ceph-deploy

$ yum install python-pip

$ pip install ceph-deploy

# Check the ceph-deploy version

$ ceph-deploy --version

2.0.1
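The `ceph-deploy osd create <node> --data <device>` syntax used later belongs to the ceph-deploy 2.x series, which hands OSD creation to ceph-volume; to our understanding the older 1.5.x releases used a different syntax. If a script depends on that, a version guard is cheap. A minimal sketch relying on GNU `sort -V`; `version_ge` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if version $1 >= version $2.
# sort -V orders versions naturally (2.0.9 < 2.0.10); if the smaller of
# the two is $2, then $1 is at least $2.
version_ge() {
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Usage on node0 (some versions print --version on stderr, hence 2>&1):
# version_ge "$(ceph-deploy --version 2>&1)" 2.0.0 || echo "ceph-deploy is older than 2.0.0"
```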

Install the Ceph cluster packages

This step must be run on all three nodes.

$ yum install -y ceph ceph-mds ceph-mgr ceph-mon ceph-radosgw

# If the install fails on a GPG signature check, install the packages with --nogpgcheck instead:

$ yum install -y --nogpgcheck librbd1

$ yum install -y --nogpgcheck libcephfs2

$ yum install -y --nogpgcheck ceph-mon

$ yum install -y --nogpgcheck ceph-mds

$ yum install -y --nogpgcheck ceph-mgr

$ yum install -y --nogpgcheck ceph-radosgw

$ yum install -y --nogpgcheck ceph
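Because node0 already has passwordless SSH to node1 and node2, the package installation can be driven from node0 instead of logging in to each node. A minimal sketch; `run_on_nodes` is a hypothetical helper that stops at the first failing node.

```shell
#!/usr/bin/env bash
# Hypothetical helper: run the same command on each node over SSH.
# $1 is a space-separated node list (word-split on purpose); the rest is
# the command. Stops and returns 1 at the first node where it fails.
run_on_nodes() {
    local nodes=$1 node
    shift
    for node in $nodes; do
        echo "==> $node"
        ssh "$node" "$@" || { echo "failed on $node" >&2; return 1; }
    done
}

# Usage on node0 (only node1/node2 received the key; run node0 locally):
# run_on_nodes "node1 node2" yum install -y ceph ceph-mds ceph-mgr ceph-mon ceph-radosgw
# run_on_nodes "node1 node2" ceph --version
```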

Ceph cluster initialization

Create a directory to hold the cluster initialization files

$ mkdir /data/ceph-deploy -pv

$ cd /data/ceph-deploy/

$ ceph-deploy new -h

$ ceph-deploy new --cluster-network 192.168.100.0/24 --public-network 192.168.100.0/24 node0

$ ls

ceph-deploy-ceph.log ceph.conf ceph.mon.keyring
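`ceph-deploy new` writes the cluster settings, including the networks passed on the command line, into the generated ceph.conf in this directory. To check them from a script, a small reader can help. A minimal sketch, assuming the underscore key spelling (`public_network`, `cluster_network`) we would expect ceph-deploy to write; Ceph itself also accepts the spaced form (`public network`), which this hypothetical helper does not normalize.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the value of a key from an INI-style
# ceph.conf (first match wins; section headers are ignored for simplicity).
ceph_conf_get() {
    local key=$1 conf=${2:-ceph.conf}
    awk -F ' *= *' -v k="$key" '$1 == k { print $2; exit }' "$conf"
}

# Usage in /data/ceph-deploy:
# ceph_conf_get public_network
# ceph_conf_get mon_initial_members
```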

$ ceph-deploy mon create-initial

$ ls -l

total 108

-rw-------. 1 root root 113 Oct 13 14:03 ceph.bootstrap-mds.keyring

-rw-------. 1 root root 113 Oct 13 14:03 ceph.bootstrap-mgr.keyring

-rw-------. 1 root root 113 Oct 13 14:03 ceph.bootstrap-osd.keyring

-rw-------. 1 root root 113 Oct 13 14:03 ceph.bootstrap-rgw.keyring

-rw-------. 1 root root 151 Oct 13 14:03 ceph.client.admin.keyring

-rw-r--r--. 1 root root 267 Oct 13 13:45 ceph.conf

-rw-r--r--. 1 root root 78676 Oct 13 18:02 ceph-deploy-ceph.log

-rw-------. 1 root root 73 Oct 13 13:45 ceph.mon.keyring

$ ceph -s

[errno 2] error connecting to the cluster

Set up admin authentication so that ceph -s works

# Distribute the admin configuration file and keyring to all nodes

$ ceph-deploy admin node0 node1 node2

$ ceph -s

cluster:

id: 97702c43-6cc2-4ef8-bdb5-855cfa90a260

health: HEALTH_WARN

mon is allowing insecure global_id reclaim

services:

mon: 1 daemons, quorum node0 (age 49s)

mgr: no daemons active

osd: 0 osds: 0 up, 0 in

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0 B

usage: 0.0 B used, 0 B / 0 B avail

pgs:

Fixing the cluster health warning

$ ceph config set mon auth_allow_insecure_global_id_reclaim false

$ ceph -s

cluster:

id: 97702c43-6cc2-4ef8-bdb5-855cfa90a260

health: HEALTH_OK

services:

mon: 1 daemons, quorum node0 (age 49s)

mgr: no daemons active

osd: 0 osds: 0 up, 0 in

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0 B

usage: 0.0 B used, 0 B / 0 B avail

pgs:
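The warning above comes with the fix for CVE-2021-20288; setting `auth_allow_insecure_global_id_reclaim` to `false` is only safe once all clients are updated, because unpatched clients can no longer reconnect afterwards. The health state may also take a moment to refresh, so a small polling loop helps when scripting this step. A minimal sketch; the function name and the 60 s timeout / 5 s interval defaults are assumptions.

```shell
#!/usr/bin/env bash
# Hypothetical helper: poll `ceph health` until it reports HEALTH_OK or
# the timeout ($1 seconds, default 60) expires; $2 is the poll interval.
wait_for_health_ok() {
    local timeout=${1:-60} interval=${2:-5} waited=0 status
    while :; do
        status=$(ceph health 2>/dev/null)
        case $status in
            HEALTH_OK*) echo "$status"; return 0 ;;
        esac
        if [ "$waited" -ge "$timeout" ]; then
            echo "still not healthy after ${timeout}s: $status" >&2
            return 1
        fi
        sleep "$interval"
        waited=$((waited + interval))
    done
}

# Usage on node0:
# ceph config set mon auth_allow_insecure_global_id_reclaim false
# wait_for_health_ok 60
```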

## Reference: https://www.cnblogs.com/lvzhenjiang/p/14856572.html

Deploying the mgr (cluster monitoring) daemon

$ ceph-deploy mgr create node0

$ ceph -s

cluster:

id: 97702c43-6cc2-4ef8-bdb5-855cfa90a260

health: HEALTH_OK

services:

mon: 1 daemons, quorum node0 (age 2m)

mgr: node0(active, since 5s)

osd: 0 osds: 0 up, 0 in

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0 B

usage: 0.0 B used, 0 B / 0 B avail

pgs:

Adding OSDs to the Ceph cluster

Add OSDs

$ ceph-deploy osd create node0 --data /dev/sdb

$ ceph-deploy osd create node1 --data /dev/sdb

$ ceph-deploy osd create node2 --data /dev/sdb
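The three `osd create` calls differ only in the host name, so they can be looped. /dev/sdb must be an unused disk; one that still carries partitions or old LVM metadata is typically rejected, and `ceph-deploy disk zap <node> <device>` wipes it first. A minimal sketch; `create_osds`, the `ZAP` switch and the `CEPH_DEPLOY` override are hypothetical. Zapping destroys all data on the device.

```shell
#!/usr/bin/env bash
# Hypothetical helper (run on node0 in /data/ceph-deploy): create one OSD
# per node on the same device. Set ZAP=1 to wipe the device first with
# `ceph-deploy disk zap` (DESTROYS all data on it). CEPH_DEPLOY can be
# overridden, e.g. CEPH_DEPLOY=echo for a dry run that only prints.
create_osds() {
    local device=$1 deploy=${CEPH_DEPLOY:-ceph-deploy} node
    shift
    for node in "$@"; do
        if [ "${ZAP:-0}" = 1 ]; then
            "$deploy" disk zap "$node" "$device" || return 1
        fi
        "$deploy" osd create "$node" --data "$device" || return 1
    done
}

# Usage:
# CEPH_DEPLOY=echo create_osds /dev/sdb node0 node1 node2   # dry run
# create_osds /dev/sdb node0 node1 node2
```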

View the OSDs

$ ceph osd tree

ID CLASS WEIGHT TYPE NAME STATUS REWEIGHT PRI-AFF

-1 0.14639 root default

-3 0.04880 host node0

0 hdd 0.04880 osd.0 up 1.00000 1.00000

-5 0.04880 host node1

1 hdd 0.04880 osd.1 up 1.00000 1.00000

-7 0.04880 host node2

2 hdd 0.04880 osd.2 up 1.00000 1.00000
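When scripting, the tree can be reduced to a count of OSDs that are `up`. A minimal sketch; the helper name is hypothetical, and the OSD name is located by pattern rather than a fixed column because the `CLASS` column may be empty for some devices.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `ceph osd tree` output on stdin and print
# "<up>/<total>" over the osd.N rows (the field after osd.N is STATUS).
osd_up_summary() {
    awk '{
        for (i = 1; i < NF; i++)
            if ($i ~ /^osd\.[0-9]+$/) { total++; if ($(i + 1) == "up") up++; break }
    } END { printf "%d/%d\n", up, total }'
}

# Usage:
# ceph osd tree | osd_up_summary
```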

Check the cluster status

$ ceph -s

cluster:

id: 97702c43-6cc2-4ef8-bdb5-855cfa90a260

health: HEALTH_OK

services:

mon: 1 daemons, quorum node0 (age 3h)

mgr: node0(active, since 3h)

osd: 3 osds: 3 up (since 3m), 3 in (since 3m)

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0 B

usage: 3.0 GiB used, 147 GiB / 150 GiB avail

pgs:

Scaling out the Ceph monitor service

$ ceph-deploy mon add node1 --address 192.168.100.131

$ ceph-deploy mon add node2 --address 192.168.100.132

$ ceph -s

cluster:

id: 97702c43-6cc2-4ef8-bdb5-855cfa90a260

health: HEALTH_OK

services:

mon: 3 daemons, quorum node0,node1,node2 (age 0.187697s)

mgr: node0(active, since 3h)

osd: 3 osds: 3 up (since 5m), 3 in (since 5m)

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0 B

usage: 3.0 GiB used, 147 GiB / 150 GiB avail

pgs:
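Monitors only make progress while a strict majority of them is in quorum, which is why an odd count such as three is used: n monitors need floor(n/2)+1 of them up, so three tolerate one failure and a fourth adds no extra tolerance. The arithmetic as a short sketch:

```shell
#!/usr/bin/env bash
# Majority needed for monitor quorum, and how many monitors may fail
# while a majority survives (integer division rounds down).
quorum_size()        { echo $(( $1 / 2 + 1 )); }
tolerated_failures() { echo $(( $1 - ($1 / 2 + 1) )); }

for n in 1 2 3 4 5; do
    echo "mons=$n quorum=$(quorum_size "$n") tolerated=$(tolerated_failures "$n")"
done
```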

Check the quorum status

$ ceph quorum_status --format json-pretty

{

"election_epoch": 12,

"quorum": [

0,

1,

2

],

"quorum_names": [

"node0",

"node1",

"node2"

],

"quorum_leader_name": "node0",

"quorum_age": 104,

"monmap": {

"epoch": 3,

"fsid": "97702c43-6cc2-4ef8-bdb5-855cfa90a260",

"modified": "2022-10-13 17:57:43.445773",

"created": "2022-10-13 14:03:09.897152",

"min_mon_release": 14,

"min_mon_release_name": "nautilus",

"features": {

"persistent": [

"kraken",

"luminous",

"mimic",

"osdmap-prune",

"nautilus"

],

"optional": []

},

"mons": [

{

"rank": 0,

"name": "node0",

"public_addrs": {

"addrvec": [

{

"type": "v2",

"addr": "192.168.100.130:3300",

"nonce": 0

},

{

"type": "v1",

"addr": "192.168.100.130:6789",

"nonce": 0

}

]

},

"addr": "192.168.100.130:6789/0",

"public_addr": "192.168.100.130:6789/0"

},

{

"rank": 1,

"name": "node1",

"public_addrs": {

"addrvec": [

{

"type": "v2",

"addr": "192.168.100.131:3300",

"nonce": 0

},

{

"type": "v1",

"addr": "192.168.100.131:6789",

"nonce": 0

}

]

},

"addr": "192.168.100.131:6789/0",

"public_addr": "192.168.100.131:6789/0"

},

{

"rank": 2,

"name": "node2",

"public_addrs": {

"addrvec": [

{

"type": "v2",

"addr": "192.168.100.132:3300",

"nonce": 0

},

{

"type": "v1",

"addr": "192.168.100.132:6789",

"nonce": 0

}

]

},

"addr": "192.168.100.132:6789/0",

"public_addr": "192.168.100.132:6789/0"

}

]

}

}

$ ceph mon stat

e3: 3 mons at {node0=[v2:192.168.100.130:3300/0,v1:192.168.100.130:6789/0],node1=[v2:192.168.100.131:3300/0,v1:192.168.100.131:6789/0],node2=[v2:192.168.100.132:3300/0,v1:192.168.100.132:6789/0]}, election epoch 12, leader 0 node0, quorum 0,1,2 node0,node1,node2

$ ceph mon dump

epoch 3

fsid 97702c43-6cc2-4ef8-bdb5-855cfa90a260

last_changed 2022-10-13 17:57:43.445773

created 2022-10-13 14:03:09.897152

min_mon_release 14 (nautilus)

0: [v2:192.168.100.130:3300/0,v1:192.168.100.130:6789/0] mon.node0

1: [v2:192.168.100.131:3300/0,v1:192.168.100.131:6789/0] mon.node1

2: [v2:192.168.100.132:3300/0,v1:192.168.100.132:6789/0] mon.node2

dumped monmap epoch 3
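The last field of `ceph mon stat` lists the monitors currently in quorum, which is convenient to check from a script after `mon add`. A minimal sketch; the helper name is hypothetical and it assumes the single-line output format shown above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `ceph mon stat` output on stdin and print the
# comma-separated names after "quorum <ranks>" at the end of the line.
mon_quorum_names() {
    sed -n 's/.*quorum [0-9,]* \([^ ]*\)$/\1/p'
}

# Usage:
# [ "$(ceph mon stat | mon_quorum_names)" = "node0,node1,node2" ] && echo "all mons in quorum"
```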

Scaling out the Ceph mgr service

$ ceph-deploy mgr create node1 node2

$ ceph -s

cluster:

id: 97702c43-6cc2-4ef8-bdb5-855cfa90a260

health: HEALTH_OK

services:

mon: 3 daemons, quorum node0,node1,node2 (age 5m)

mgr: node0(active, since 3h), standbys: node1, node2

osd: 3 osds: 3 up (since 10m), 3 in (since 10m)

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0 B

usage: 3.0 GiB used, 147 GiB / 150 GiB avail

pgs:
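With three mgr daemons, one is active and the others are standbys that take over if it fails. A minimal sketch that counts the standbys on the `mgr:` line of `ceph -s`; the helper name is hypothetical and it assumes the `standbys: a, b` layout shown above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `ceph -s` output on stdin and print how many
# standby mgr daemons are listed on the "mgr:" line (0 if none).
mgr_standby_count() {
    awk '$1 == "mgr:" {
        n = 0
        # Strip everything up to "standbys: ", then split the names on commas.
        if (sub(/.*standbys: /, "")) n = split($0, names, ", *")
        print n; found = 1; exit
    }
    END { if (!found) print 0 }'
}

# Usage:
# ceph -s | mgr_standby_count
```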

浙公网安备 33010602011771号
