RTR-03 Global Illumination

离线渲染能够实现间接光照效果,主流的方法有两个:辐射度算法和光线追踪算法。但是,这两个算法对于实时渲染而言都是难以承担的。因此,实时渲染的间接光照效果是需要额外实现的。这一系列的算法就是全局光照算法。全局光照算法有两类,一类是预计算的算法,令一类则不需要预计算。此外,全局光照算法一般是区分了漫反射光照和镜面反射光照的,当然也存在着通用算法。本文章介绍并实现的是,不需要预计算的全局光照算法,预计算算法会在另一篇文章中介绍并实现。此外,环境光遮蔽算法也会放在其他文章中介绍。

RSM

- 首先,我们介绍漫反射算法。经典的漫反射全局光照算法一般都是建立在RSM算法之上的。RSM算法是对shadow map的一次拓展[1],其实现思路也相应的很简洁。

Reflective Shadow Map

- 不同于shadow map,我们会在RSM中记录四张信息:depth、position、normal、flux。其中,depth信息是老生常谈了,而position和normal这里我记录的是世界坐标,更准确的说是着色计算空间的坐标,而flux来自光源,我在这里简单的使用了一个定向光源,然后对其radiance乘以漫反射BRDF作为shadow map中该片段的出射flux。

#version 450 core

layout(location = 0) in vec3 aPos;

layout(location = 1) in vec2 aTexCoord;

layout(location = 2) in vec3 aNorm;

out vec3 fragPos;

out vec3 normal;

out vec2 texCoord;

uniform mat4 lightSpaceMatrix;

uniform mat4 model;

void main()

{

gl_Position = lightSpaceMatrix * model * vec4(aPos, 1.0f);

fragPos = vec3(model * vec4(aPos, 1.0f));

mat3 normModel = transpose(inverse(mat3(model)));

normal = normalize(normModel * aNorm);

texCoord = aTexCoord;

}

Renderring

- 我们将shadow map中的片段作为间接光照的光源,这是很显然的,因为他们是所有的被直接照亮的位置。这些光源全都是漫反射的,不同于一般的各向一致反射,我们认为片段的出射光是在半球范围内余弦分布的。进一步的,我们对shading point接受这些光照时,也按照余弦形式来做半球采样。这就意味着,VPL仅仅能照亮处于其法向半球空间内的片段,这很合理。

Importance-driven Sampling

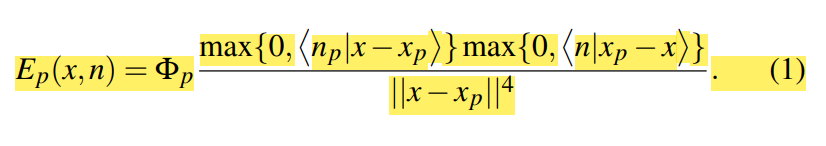

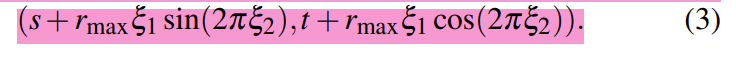

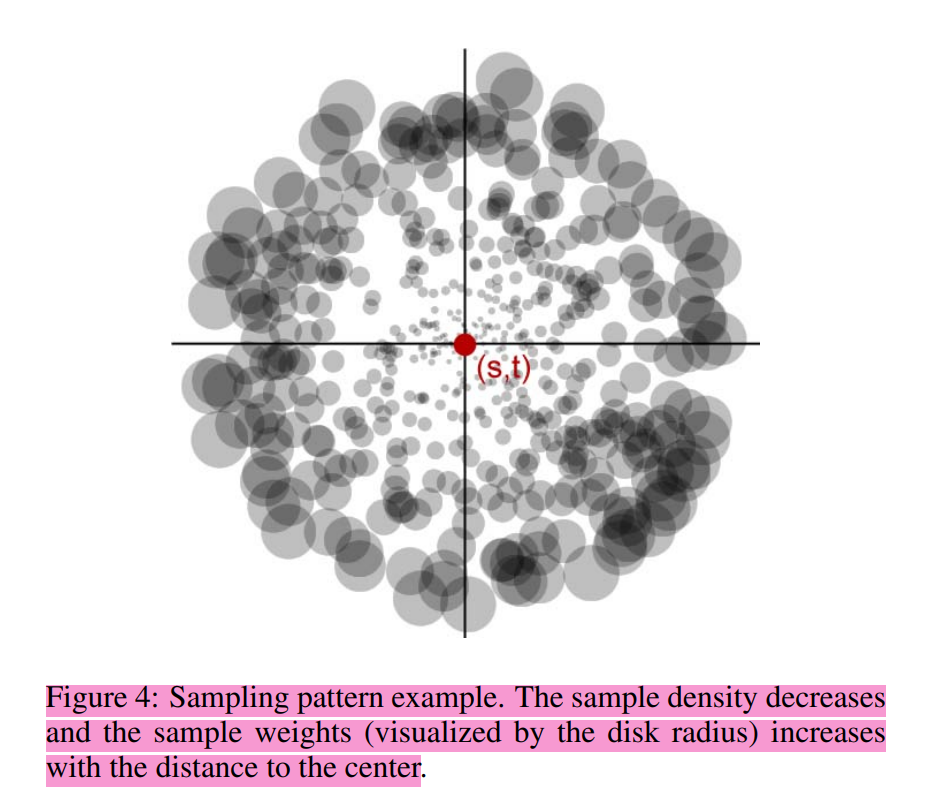

- 一个问题在于,我们如何选取shading point需要的VPL呢?我们通过重要性采样实现。首先,我们驾轻就熟地拿到shading point在shadow map中的纹理坐标\((s,t)\),然后我们在其附近采样。类似于3D空间的采样方法,我们使用两个均匀分布的随机数\(\xi_1\)与\(\xi_2\),分别控制半径和角度。在这里,我才采取了256个样本,我们将这些样本提前计算出来并传到shader中。考虑到其中有余弦计算,并且这是一个计算量较大的环节,所以可以提前将余弦计算的坐标值计算出来并传进去。紧接着,我们不能对采样值直接相加,因为他们相对于半径是有着采样概率密度分布PDF的,所以说,我们并不是在计算黎曼和,而是进行了蒙特卡洛积分,进一步的,\(PDF=\xi^2\),这是因为这是一个面积积分而不是半径积分。最后,取均值。显然的是,我们取样VPL时,并没有考虑到遮挡关系。

vec3 calcDirLight(DirLight light, vec3 normal, vec3 viewPos, vec3 fragPos)

{

//ambient

vec3 projCoord = fs_in.fragPosLightSpace.xyz / fs_in.fragPosLightSpace.w;

projCoord = projCoord * 0.5 + 0.5;

vec3 GI=vec3(0.0);

for(int i=0;i!=256;i++)

{

vec2 aSample = rmax * samples[i].x * vec2(sin(2.0f * PI * samples[i].y), cos(2.0f * PI * samples[i].y));

vec2 samCoord = projCoord.xy+aSample;

vec3 Flux = texture(gFlux, samCoord).rgb;

vec3 Np = texture(gNormal, samCoord).rgb;

vec3 Pp = texture(gPositionDepth, samCoord).rgb;

vec3 lightDir = fs_in.fragPos-Pp;

float l4 = pow(length(lightDir),4.0);

vec3 Ep = Flux * max(dot(Np,lightDir),0.0) * max(dot(fs_in.normal,-lightDir),0.0) / l4;

GI+=samples[i].x*samples[i].x*Ep;

}

//GI /=256.0f;

vec3 ambient = GI*texture(material.diffuse,fs_in.texCoord).rgb;

if(!onePass)

return ambient;

//diffuse

vec3 lightDir = normalize(-light.direction);

float diff = max(dot(normal, lightDir), 0.0);

vec3 diffuse = light.diffuse * diff * vec3(texture(material.diffuse, fs_in.texCoord));

//specular

vec3 viewDir = normalize(viewPos - fragPos);

vec3 halfwayDir = normalize(viewDir + lightDir);

float spec = pow(max(dot(halfwayDir, normal), 0.0), material.shininess);

vec3 specular = light.specular * spec * vec3(texture(material.specular, fs_in.texCoord));

float shadow = ShadowCalculation(fs_in.fragPosLightSpace, normal, lightDir);

return ambient + (1.0 - shadow) * (diffuse + specular);

}

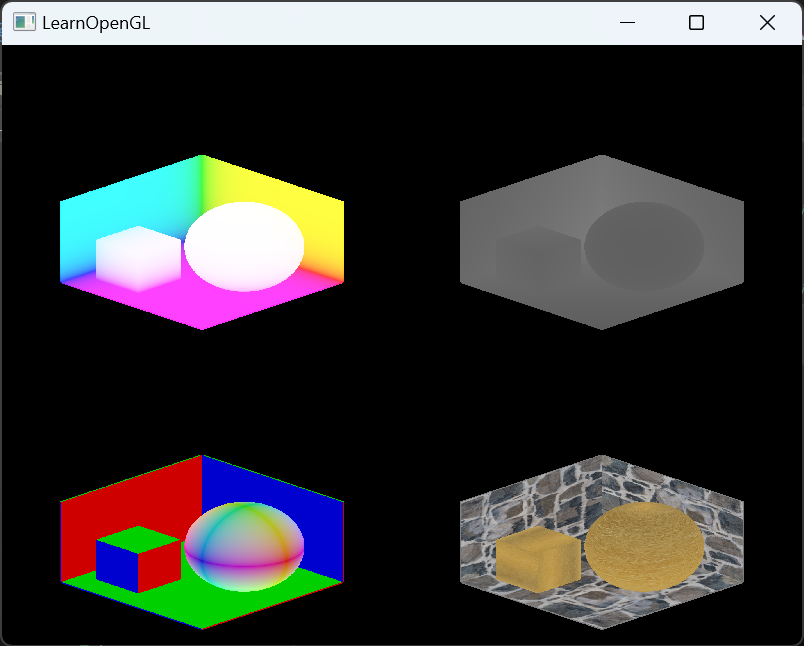

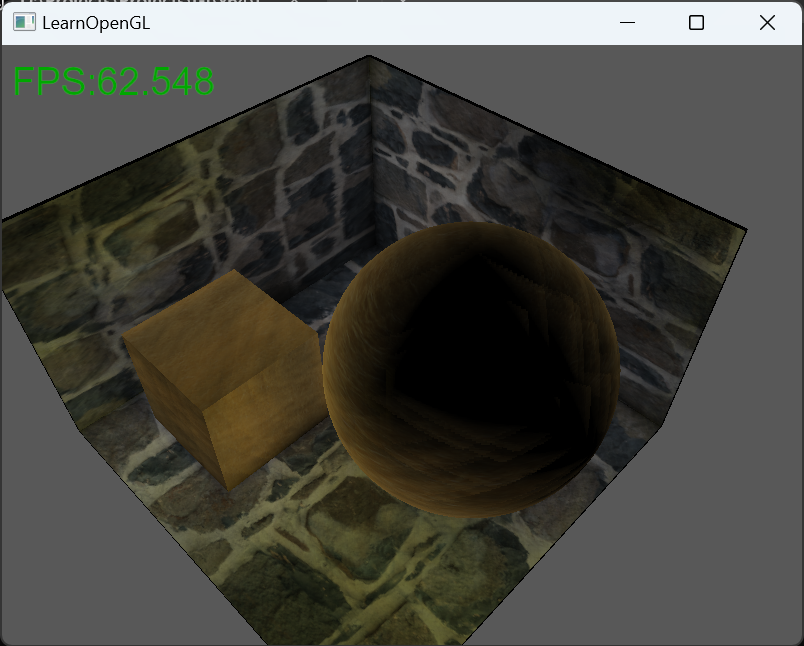

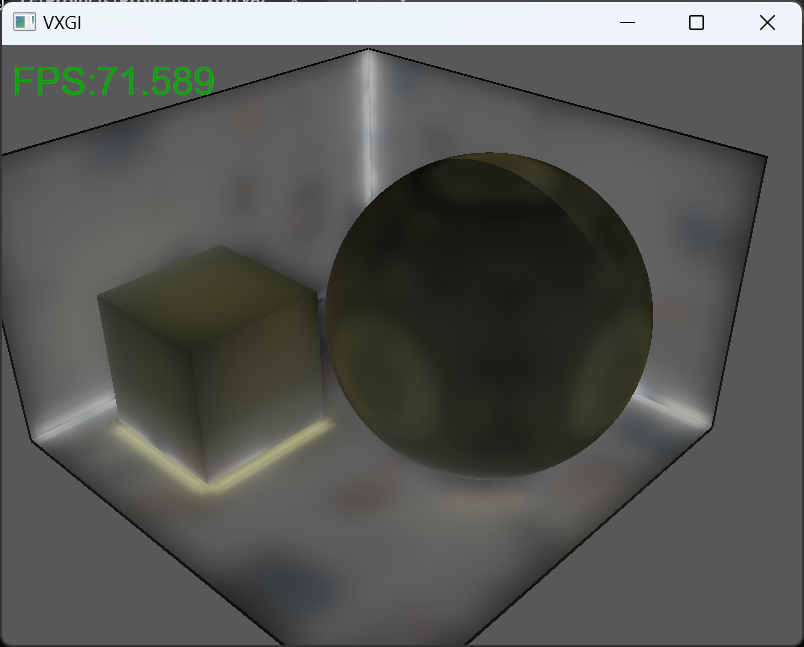

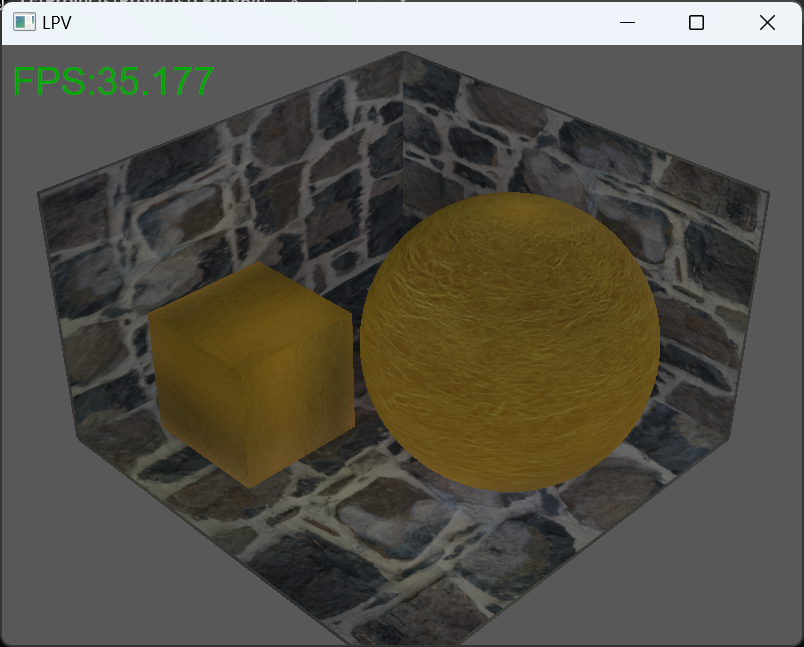

这便是间接光照效果,可以看出墙壁上出现了立方体和球的反射光。

Screen-Space Interpolation

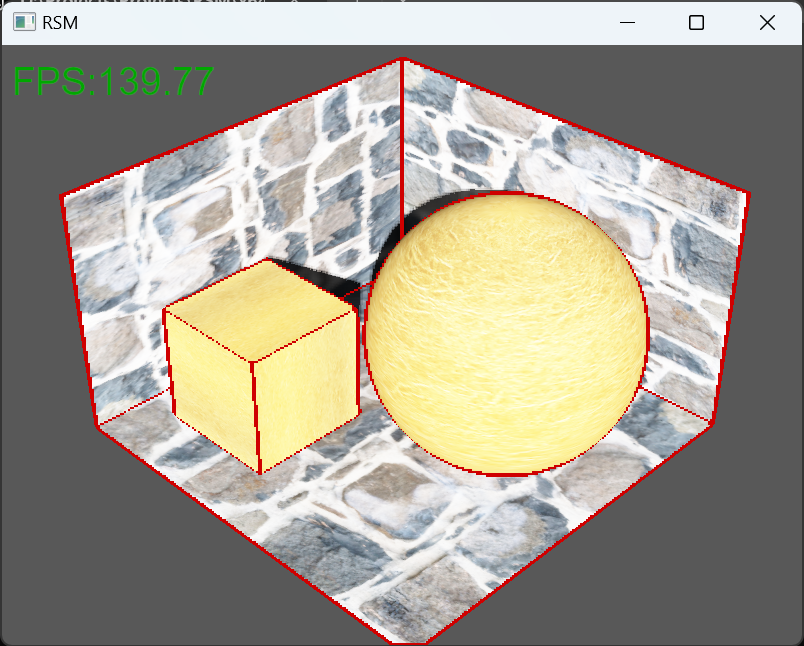

- 很显然的是,我们需要大量的采样,不可避免地效率就会很低,虽然还是比不上光线追踪的多次弹射迭代。作者给出了这个问题的一个缓解思路,那就是首先计算出低分辨率的渲染结果,然后在渲染高分辨率屏幕图像时采用低分辨率图像的插值实现效果,其中对于2D纹理而言是对四个pixel的插值,但是我们首先需要判断这四个pixel是否都是合理的:法线方向足够接近并且空间位置足够接近。如果存在三个过着四个pixel是合理的,我们就采取插值来获取,否则我们对这些片段重新计算。可以看出,不合格的位置是很少的:

- 作者说不合格的地方可以重新计算,考虑到这些不合格的位置很少并且都处于边缘位置,因此在大多数着色部位代码的局部性都比较不错,所以这种操作带来的分支并不会有很大的影响,所以确实可以起到加速作用。

vec3 calcDirLight(DirLight light, vec3 normal, vec3 viewPos, vec3 fragPos)

{

//TSET First Pass

vec2 texelSize = 1.0 / textureSize(Pass1Map, 0);

bool related[4]={false, false, false, false};

float weightRelated[4] = {0.0, 0.0, 0.0, 0.0};

float weightSum = 0.0;

int numRelated = 0;

vec2 screenPos =vec2(gl_FragCoord.x/800.0,gl_FragCoord.y/600.0);

for(int i=-1;i<=1;i+=2)

{

for(int j=-1;j<=1;j+=2)

{

vec2 testCoord = screenPos+vec2(i,j)*texelSize;

vec3 testPos = texture(Pass1Pos,testCoord).rgb;

vec3 testNorm = texture(Pass1Norm,testCoord).rgb;

vec4 testScreenPos = projection*view*vec4(testPos,1.0);

testScreenPos/=testScreenPos.w;

testScreenPos.xyz=testScreenPos.xyz*0.5+0.5;

float weight = abs((testScreenPos.x-screenPos.x)*(testScreenPos.y-screenPos.y));

weightRelated[(-i+1)+(-j+1)/2]=weight;

if(length(testPos-fs_in.fragPos)<1.0 && dot(testNorm, fs_in.normal)>0.5)

{

related[(i+1)+(j+1)/2]=true;

numRelated++;

}

}

}

vec3 ambient = vec3(0.0);

if(numRelated >= 3)

{

for(int i=-1;i<=1;i+=2)

{

for(int j=-1;j<=1;j+=2)

{

vec2 testCoord = screenPos.xy+vec2(i,j)*texelSize;

vec3 testColor = texture(Pass1Map,testCoord).rgb;

int id = (i+1)+(j+1)/2;

if(related[id])

{

ambient+=testColor*weightRelated[id];

weightSum+=weightRelated[id];

}

}

}

ambient/=weightSum;

}

else

{

vec3 projCoord = fs_in.fragPosLightSpace.xyz / fs_in.fragPosLightSpace.w;

projCoord = projCoord * 0.5 + 0.5;

vec3 GI=vec3(0.0);

for(int i=0;i!=256;i++)

{

vec2 aSample = rmax * samples[i].x * vec2(sin(2.0f * PI * samples[i].y), cos(2.0f * PI * samples[i].y));

vec2 samCoord = projCoord.xy+aSample;

vec3 Flux = texture(gFlux, samCoord).rgb;

vec3 Np = texture(gNormal, samCoord).rgb;

vec3 Pp = texture(gPositionDepth, samCoord).rgb;

vec3 lightDir = fs_in.fragPos-Pp;

float l4 = pow(length(lightDir),4.0);

vec3 Ep = Flux * max(dot(Np,lightDir),0.0) * max(dot(fs_in.normal,-lightDir),0.0) / l4;

GI+=samples[i].x*samples[i].x*Ep;

}

//GI /=256.0f;

ambient = GI*texture(material.diffuse,fs_in.texCoord).rgb;

}

//diffuse

vec3 lightDir = normalize(-light.direction);

float diff = max(dot(normal, lightDir), 0.0);

vec3 diffuse = light.diffuse * diff * vec3(texture(material.diffuse, fs_in.texCoord));

//specular

vec3 viewDir = normalize(viewPos - fragPos);

vec3 halfwayDir = normalize(viewDir + lightDir);

float spec = pow(max(dot(halfwayDir, normal), 0.0), material.shininess);

vec3 specular = light.specular * spec * vec3(texture(material.specular, fs_in.texCoord));

float shadow = ShadowCalculation(fs_in.fragPosLightSpace, normal, lightDir);

return ambient + (1.0 - shadow) * (diffuse + specular);

}

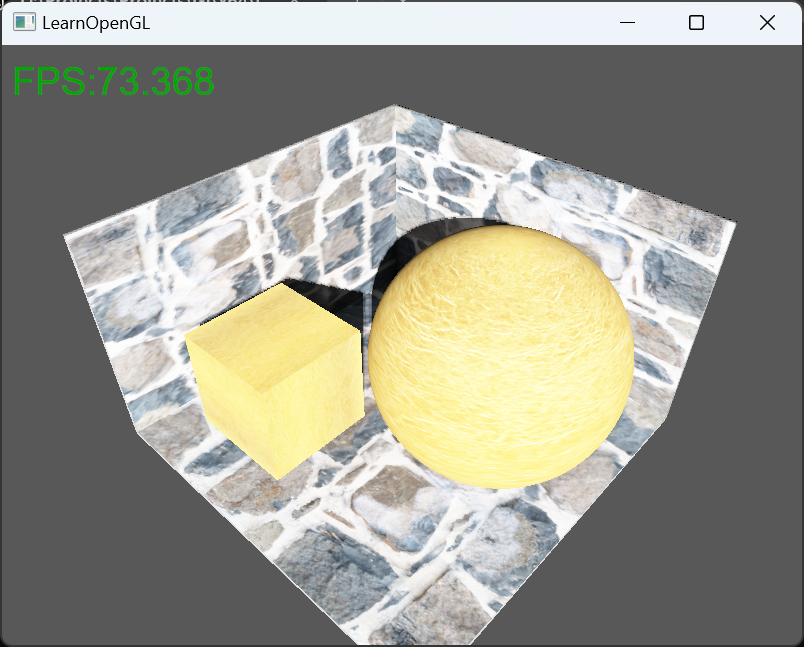

这是没有进行Screen插值的效果:

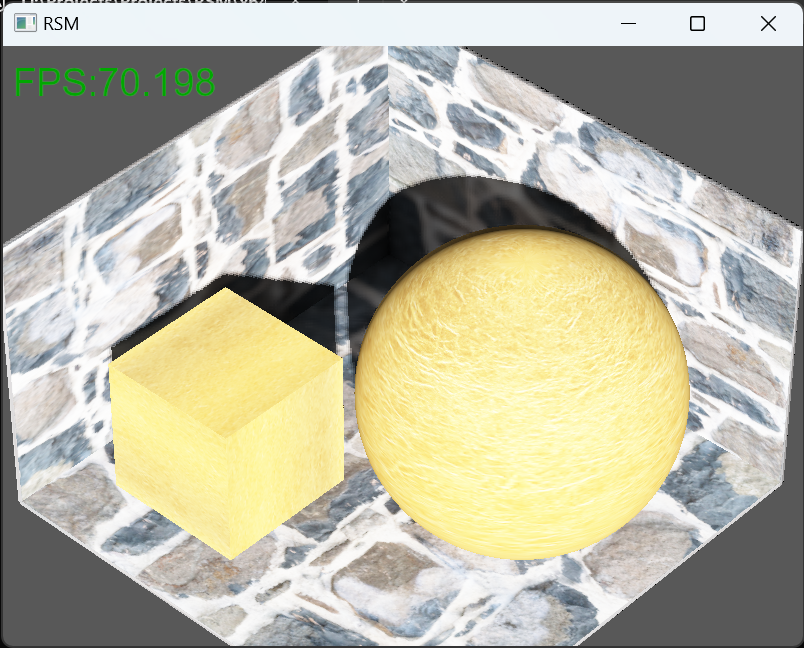

这是进行了Screen插值的效果,可以从阴影中看一下间接光照效果,是存在着模糊的,这也是该方法的代价了:

VXGI

- VXGI是一种体素方法[2]。

- 类似于RSM,VXGI也将直接光照的结果作为间接光照的光源VPL,但是不同于RSM的渲染方式,VXGI将直接光照结果注射到体素中。关于体素的记录,我们不是使用作者的方法,而是使用3D纹理来记录[3],这样可以方便的使用mipmap来获得体素的查询。

Voxalization

- 首先,我们需要将直接光照的计算结果注射进体素中。这个思路很简单,那就是我们对场景中的所有物体做直接光照计算,并且不做背面剔除和深度剔除,这样一来无论是否可见都会被计算并注射。

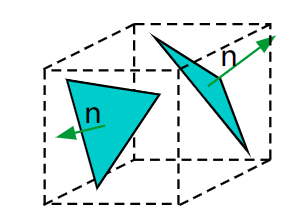

- 体素化的过程就是计算所有三角形的着色结果,然后注射到3D纹理的对应位置。计算过程是相似的,注射过程也只是需要找到3D纹理(准确的说在这里暂时的是使用其绑定的image3D)的坐标就可以了。在此时,我们面对的第一个问题其实是,如果采取一个面来渲染三角形,那么不是所有的三角形都有着较好的朝向的,因此,我们选择渲染六个方位,并且根据三角形的法线方向来选择具体的渲染方向。这一过程在几何着色器中实现。

#version 450 core

layout(triangles) in;

layout(triangle_strip, max_vertices=3)out;

in VS_OUT{

vec3 fragPos;

vec3 normal;

vec2 texCoord;

vec4 fragPosLightSpace;

}gs_in[];

out GS_OUT{

vec3 fragPos;

vec3 normal;

vec2 texCoord;

vec4 fragPosLightSpace;

int axis;

}gs_out;

uniform mat4 projectionX;

uniform mat4 projectionY;

uniform mat4 projectionZ;

void main()

{

vec3 edge1 = gl_in[0].gl_Position.xyz-gl_in[1].gl_Position.xyz;

vec3 edge2 = gl_in[2].gl_Position.xyz-gl_in[1].gl_Position.xyz;

vec3 N = abs(cross(edge1,edge2));

if(N.x>N.y && N.x>N.z)

{

gs_out.axis = 1;

}

else if(N.y>N.x && N.y>N.z)

{

gs_out.axis = 2;

}

else

{

gs_out.axis = 3;

}

mat4 Projection = gs_out.axis==1?projectionX:gs_out.axis==2?projectionY:projectionZ;

for(int i=0;i!=3;i++)

{

gl_Position = Projection*gl_in[i].gl_Position;

gs_out.fragPos = gs_in[i].fragPos;

gs_out.normal = gs_in[i].normal;

gs_out.texCoord = gs_in[i].texCoord;

gs_out.fragPosLightSpace = gs_in[i].fragPosLightSpace;

EmitVertex();

}

EndPrimitive();

}

- 紧接着,我们在片段着色器中来做光照计算。我们使用image3D来写入体素,需要注意的是,作者在此处实现了一个写入函数:一来,可能有多个三角形面在同一个体素中,所以需要使用他来累加(通过原子操作来实现),二来,当光源是固定的时,体素只需要写入一遍(利用初始条件0值来实现访问权限)。注意,我们写入的是四个数据,前三个自然就是我们需要注入的数据,至于第四个参数,在写入时会作为体素中三角形的计数作用,显然的是数量越大则相当于该体素的不透明度越大,因此在计算全局光照时,这个参数可以作为alpha来解释,此外,如果一个体素位于沟壑处,那么他周围的三角形就会越多,因此该参数还可以作为ao参数来使用。

#version 450 core

//layout(rgba8) uniform restrict image3D tex;

layout(binding = 0, r32ui) uniform volatile coherent uimage3D tex;

in GS_OUT{

vec3 fragPos;

vec3 normal;

vec2 texCoord;

vec4 fragPosLightSpace;

int axis;

} fs_in;

struct Material {

//vec3 ambient;

sampler2D diffuse;

sampler2D specular;

float shininess;

};

uniform Material material;

struct DirLight {

vec3 direction;

vec3 ambient;

vec3 diffuse;

vec3 specular;

};

uniform DirLight dirlight;

uniform vec3 viewPos;

uniform sampler2D gPositionDepth;

const float PI= 3.14159265359;

vec3 calcDirLight(DirLight light, vec3 normal, vec3 viewPos, vec3 fragPos);

float ShadowCalculation(vec4 fragPosLightSpace, vec3 normal, vec3 lightDir);

uint convVec4ToRGBA8(vec4 val);

vec4 convRGBA8ToVec4(uint val);

void imageAtomicRGBA8Avg(ivec3 coords, vec4 value);

uniform vec3 minPos;

uniform vec3 maxPos;

uniform int Step;

ivec3 posTrans(vec3 pos)

{

vec3 result = pos-(maxPos+minPos)/2;

result /=(maxPos-minPos);

result*=Step;

result+=vec3(Step/2);

return ivec3(result);

}

void main(void)

{

ivec3 write_Pos=posTrans(fs_in.fragPos);

vec3 result = calcDirLight(dirlight, fs_in.normal, viewPos, fs_in.fragPos);

imageAtomicRGBA8Avg(write_Pos, vec4(result,1.0));

//imageStore(tex,write_Pos,vec4(result,1.0));

}

vec3 calcDirLight(DirLight light, vec3 normal, vec3 viewPos, vec3 fragPos)

{

//diffuse

vec3 lightDir = normalize(-light.direction);

float diff = max(dot(normal, lightDir), 0.0);

vec3 diffuse = light.diffuse * diff * vec3(texture(material.diffuse, fs_in.texCoord));

//specular

vec3 viewDir = normalize(viewPos - fragPos);

vec3 halfwayDir = normalize(viewDir + lightDir);

float spec = pow(max(dot(halfwayDir, normal), 0.0), material.shininess);

vec3 specular = light.specular * spec * vec3(texture(material.specular, fs_in.texCoord));

float shadow = ShadowCalculation(fs_in.fragPosLightSpace, normal, lightDir);

return (1.0 - shadow) * (diffuse + specular);

}

float ShadowCalculation(vec4 fragPosLightSpace, vec3 normal, vec3 lightDir)

{

vec3 projCoord = fragPosLightSpace.xyz / fragPosLightSpace.w;

projCoord = projCoord * 0.5 + 0.5;

float currentDepth = projCoord.z;

float shadow = 0.0f;

float bias = max(0.05 * (1.0 - dot(normal, lightDir)), 0.005);

//PCF

vec2 texelSize = 1.0 / textureSize(gPositionDepth, 0);

for (int i = 0; i != 3; i++)

{

for (int j = 0; j != 3; j++)

{

float pcfDepth = texture(gPositionDepth, projCoord.xy + vec2(i, j) * texelSize).a;

shadow += currentDepth - bias > pcfDepth ? 1.0 : 0.0;;

}

}

shadow /= 9.0;

//远处

if (projCoord.z > 1.0f)

shadow = 0.0f;

return shadow;

}

vec4 convRGBA8ToVec4(uint val)

{

return vec4(float((val & 0x000000FF)),

float((val & 0x0000FF00) >> 8U),

float((val & 0x00FF0000) >> 16U),

float((val & 0xFF000000) >> 24U));

}

uint convVec4ToRGBA8(vec4 val)

{

return (uint(val.w) & 0x000000FF) << 24U |

(uint(val.z) & 0x000000FF) << 16U |

(uint(val.y) & 0x000000FF) << 8U |

(uint(val.x) & 0x000000FF);

}

void imageAtomicRGBA8Avg(ivec3 coords, vec4 val)

{

uint newVal = packUnorm4x8(val);

uint prevStoredVal = 0;

uint curStoredVal;

// Loop as long as destination value gets changed by other threads

while ((curStoredVal = imageAtomicCompSwap(tex, coords, prevStoredVal, newVal)) != prevStoredVal)

{

prevStoredVal = curStoredVal;

vec4 rval = unpackUnorm4x8(curStoredVal);

rval.w *= 256.0;

rval.xyz = (rval.xyz * rval.w); // Denormalize

vec4 curValF = rval + val; // Add new value

curValF.xyz /= (curValF.w); // Renormalize

curValF.w /= 256.0;

newVal = packUnorm4x8(curValF);

}

}

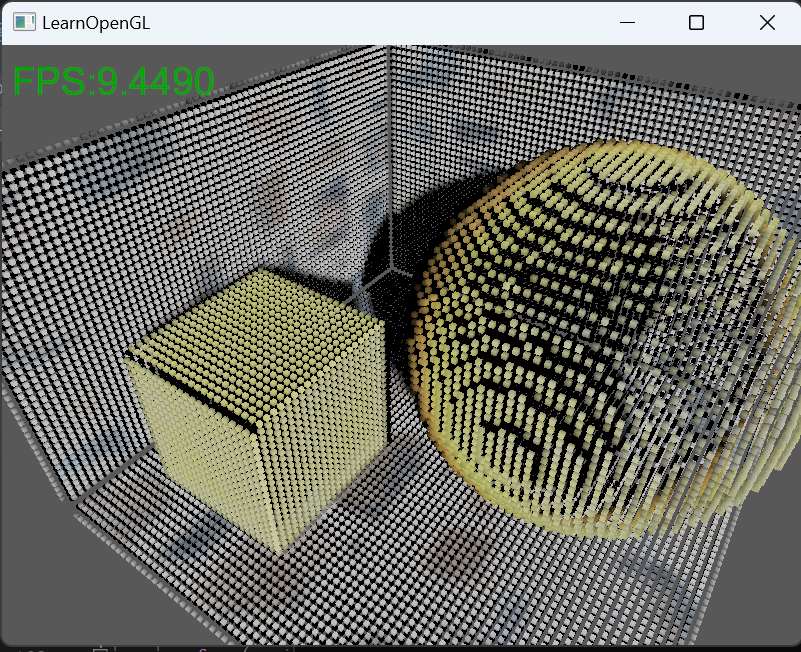

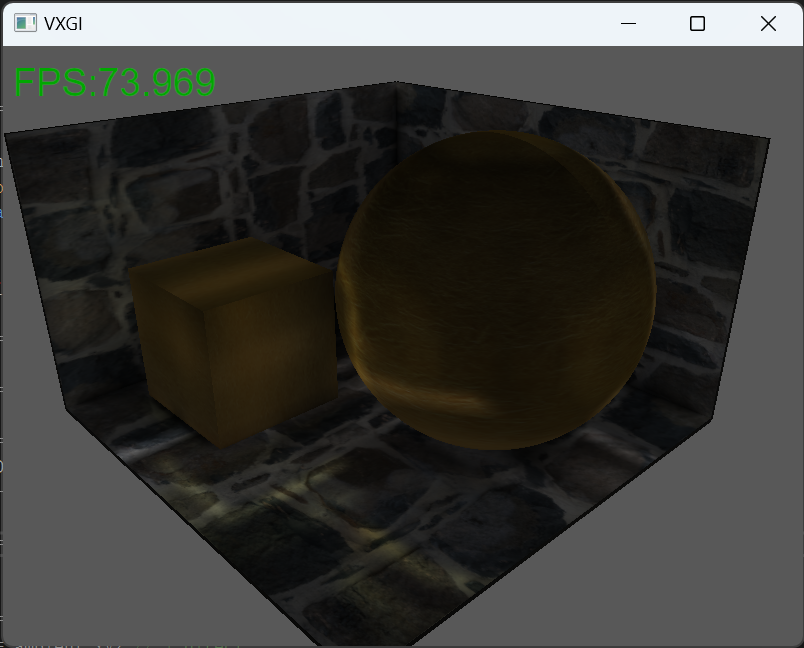

体素化结果如下:

Cone Tracing

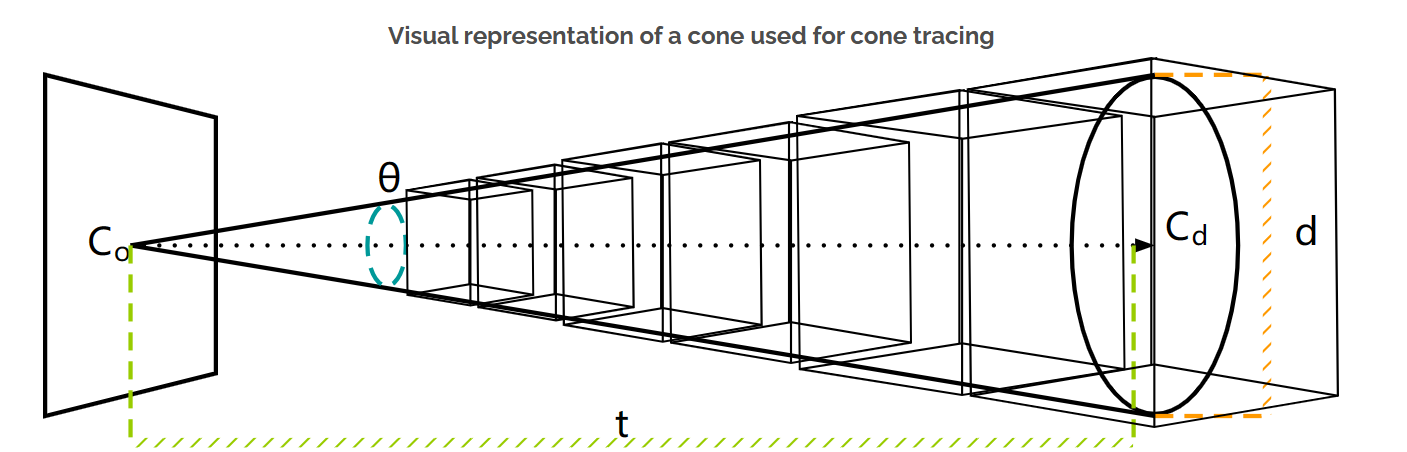

- VXGI的渲染使用的是cone tracing。我们使用一个圆锥来追踪该方向圆锥范围内的所有VPL。思路很简洁:我们从着色点位置\(fragPos\)出发,首先可以沿着法线方向\(normal\)偏移一个体素,作为起点\(start\),取消自着色;然后,沿着圆锥的中线方向\(direction\)移动距离t,那么我们此时就会位于\(start+t\);我们取此处的圆锥切面直径\(d=2.0\cdot tan(\frac{\theta}{2.0})\cdot t\),利用该直径来访问对应的mipmap层级的体素,\(mipLevel = log2(\frac{d}{texelSize})\),并使用此处的位置来索引体素,即一个3D纹理;紧接着,我们步进\(t\),来获取更远处的体素,因为我们的体素记录了alpha值,所以我们使用alpha值来实现blend,此外不断计算ao的blend结果;此外,我们设置终止条件,要么是t比较远,超出了体素纹理的范围,要么是alpha比较大,可以视作被遮挡。最终,我们获得了该方向的间接光照VPL的累积照明。

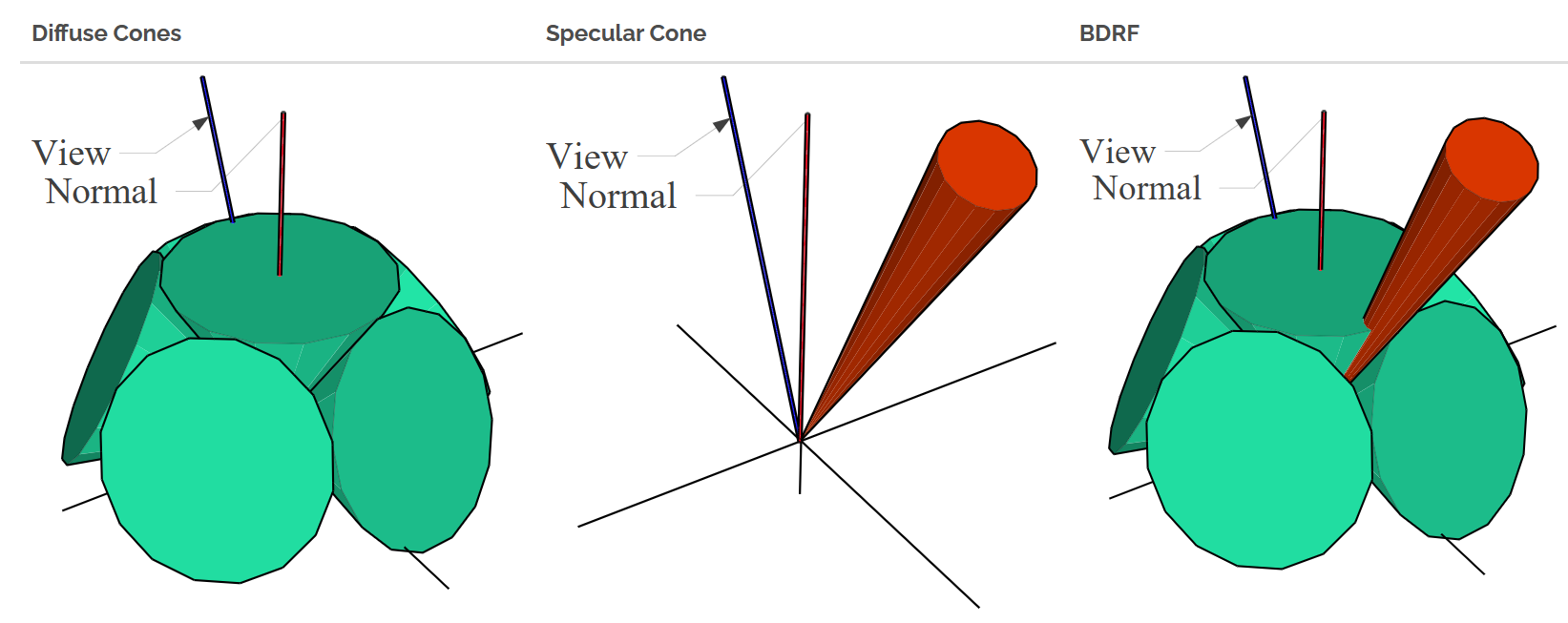

- 使用cone tracing,我们可以获得一个圆锥内部的光照。为什么使用圆锥呢?这是因为这跟我们的重要性采样思路很像。对于diffuse,我们是对半球面采样的,而对于specular,我们是对一个lobe来采样的,显然一个cone大差不差、不失体面地就像是一个lobe。当然,对于diffuse,我们不可能真的是用一个张角180°的圆锥,而是使用多个圆锥来包含半球面,此处我们使用了6个圆锥。因为这六个圆锥的立体角是一样的,所以我们大可以直接取均值,这是将各个方向的入射光平等看待了,实际上我们很多时候会进行重要性采样,比如余弦采样,因此我们可以预计算出每一个cone的余弦积分,作为权重。至于镜面反射,我们需要按照roughness来调整cone的张角。

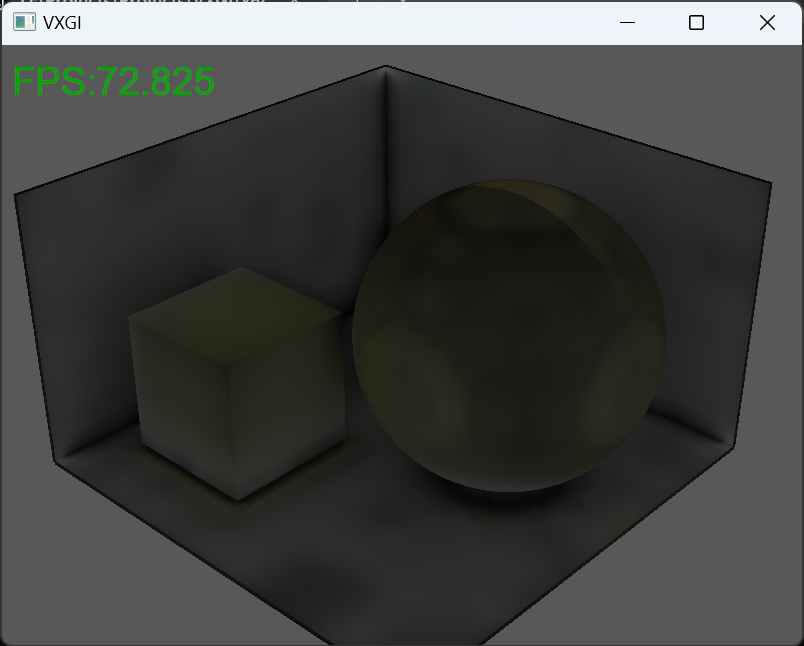

这个是没有使用AO的diffuse ambient,可以看出地面上接收到了来自立方体和球的反射光。

这个是使用了AO的diffuse ambient,可以看出在夹缝处要比上图更暗了。

这个是specular ambient,对于每一个物体,我给他们不同的roughness值,可以看出有着glossy效果。一个比较显著的问题是,当表面很光滑或者物体间距很近,specular效果比较差,但是对于glossy效果是不错的。

这个是间接光照的着色效果。

全局光照:

#version 450 core

uniform sampler3D tex;

out vec4 fragColor;

uniform vec3 minPos;

uniform vec3 maxPos;

uniform int Step;

in VS_OUT{

vec3 fragPos;

vec3 normal;

vec2 texCoord;

vec4 fragPosLightSpace;

} fs_in;

struct Material {

sampler2D diffuse;

sampler2D specular;

float roughness;

float shininess;

};

uniform Material material;

struct DirLight {

vec3 direction;

vec3 ambient;

vec3 diffuse;

vec3 specular;

};

uniform DirLight dirlight;

uniform vec3 viewPos;

uniform sampler2D gPositionDepth;

vec3 coneDirections[6] = {vec3(0, 0, 1),

vec3(0, 0.866025,0.5),

vec3(0.823639, 0.267617, 0.5),

vec3(0.509037, -0.700629, 0.5),

vec3(-0.509037, -0.700629, 0.5),

vec3(-0.823639, 0.267617, 0.5)};

float weight[6] = {1.0/4.0,

3.0/20.0,

3.0/20.0,

3.0/20.0,

3.0/20.0,

3.0/20.0};

const float PI = 3.14159265359;

const float tan30 = tan(PI/6.0);

const float MAX_ALPHA = 1.0;

const float MAX_LENGTH = length(maxPos-minPos);

const float lambda =0.1;

const float stepValue=0.1;

float ShadowCalculation(vec4 fragPosLightSpace, vec3 normal, vec3 lightDir);

vec3 calcDirLight(DirLight light, vec3 normal, vec3 viewPos, vec3 fragPos);

vec3 posTransToNdc(vec3 pos);

vec4 coneTracing(vec3 direction, vec3 N, float tanValue);

void main()

{

//ambient

vec3 normal = normalize(fs_in.normal);

vec3 tangent = normal.z > 0.001 ? vec3(0.0, 1.0, 0.0) : vec3(0.0, 0.0, 1.0);

vec3 bitangent = cross(normal, tangent);

tangent = cross(bitangent, normal);

mat3 TBN = mat3(tangent, bitangent, normal);

vec4 ambient = vec4(0.0);

for(int i=0;i!=6;i++)

{

ambient+=coneTracing(normalize(TBN * coneDirections[i]),fs_in.normal, tan30)*weight[i];

}

ambient.xyz*=(1.0-ambient.w);

vec3 viewDir=fs_in.fragPos-viewPos;

vec3 reflectDir = reflect(viewDir,fs_in.normal);

ambient.xyz +=coneTracing(reflectDir,fs_in.normal, tan(sin(material.roughness*PI/2.0)*PI/2.0)).xyz;

ambient.xyz*=texture(material.diffuse, fs_in.texCoord).xyz;

//direct

vec3 direct = calcDirLight(dirlight, fs_in.normal, viewPos, fs_in.fragPos);

vec3 result = ambient.xyz+ direct;

fragColor = vec4(result.xyz,1.0);

}

vec3 posTransToNdc(vec3 pos)

{

vec3 result = pos-(maxPos+minPos)/2;

result /=(maxPos-minPos);

result+=vec3(0.5);

return result;

}

vec4 coneTracing(vec3 direction, vec3 N, float tanValue)

{

float voxelSize = (maxPos-minPos).x/Step;

vec3 start = fs_in.fragPos+N*voxelSize;

vec3 color = vec3(0.0);

float alpha =0.0;

float occlusion=0.0;

float t = voxelSize;

while(alpha<MAX_ALPHA && t<MAX_LENGTH)

{

float d = max(voxelSize, 2.0*t*tanValue);

float mip = log2(d/voxelSize);

vec3 texCoord3D= posTransToNdc(start+t*direction);

vec4 result = textureLod(tex,texCoord3D,mip);

color += (1.0-alpha)*result.a*result.rgb;

alpha += (1.0-alpha)*result.a;

occlusion +=(1.0-occlusion)*result.a/(1.0+lambda*t);

t+=d*stepValue;

}

return vec4(color,occlusion);

}

vec3 calcDirLight(DirLight light, vec3 normal, vec3 viewPos, vec3 fragPos)

{

//diffuse

vec3 lightDir = normalize(-light.direction);

float diff = max(dot(normal, lightDir), 0.0);

vec3 diffuse = light.diffuse * diff * vec3(texture(material.diffuse, fs_in.texCoord));

//specular

vec3 viewDir = normalize(viewPos - fragPos);

vec3 halfwayDir = normalize(viewDir + lightDir);

float spec = pow(max(dot(halfwayDir, normal), 0.0), material.shininess);

vec3 specular = light.specular * spec * vec3(texture(material.specular, fs_in.texCoord));

float shadow = ShadowCalculation(fs_in.fragPosLightSpace, normal, lightDir);

return (1.0 - shadow) * (diffuse + specular);

}

float ShadowCalculation(vec4 fragPosLightSpace, vec3 normal, vec3 lightDir)

{

vec3 projCoord = fragPosLightSpace.xyz / fragPosLightSpace.w;

projCoord = projCoord * 0.5 + 0.5;

float currentDepth = projCoord.z;

float shadow = 0.0f;

float bias = max(0.05 * (1.0 - dot(normal, lightDir)), 0.005);

//PCF

vec2 texelSize = 1.0 / textureSize(gPositionDepth, 0);

for (int i = 0; i != 3; i++)

{

for (int j = 0; j != 3; j++)

{

float pcfDepth = texture(gPositionDepth, projCoord.xy + vec2(i, j) * texelSize).a;

shadow += currentDepth - bias > pcfDepth ? 1.0 : 0.0;;

}

}

shadow /= 9.0;

//远处

if (projCoord.z > 1.0f)

shadow = 0.0f;

return shadow;

}

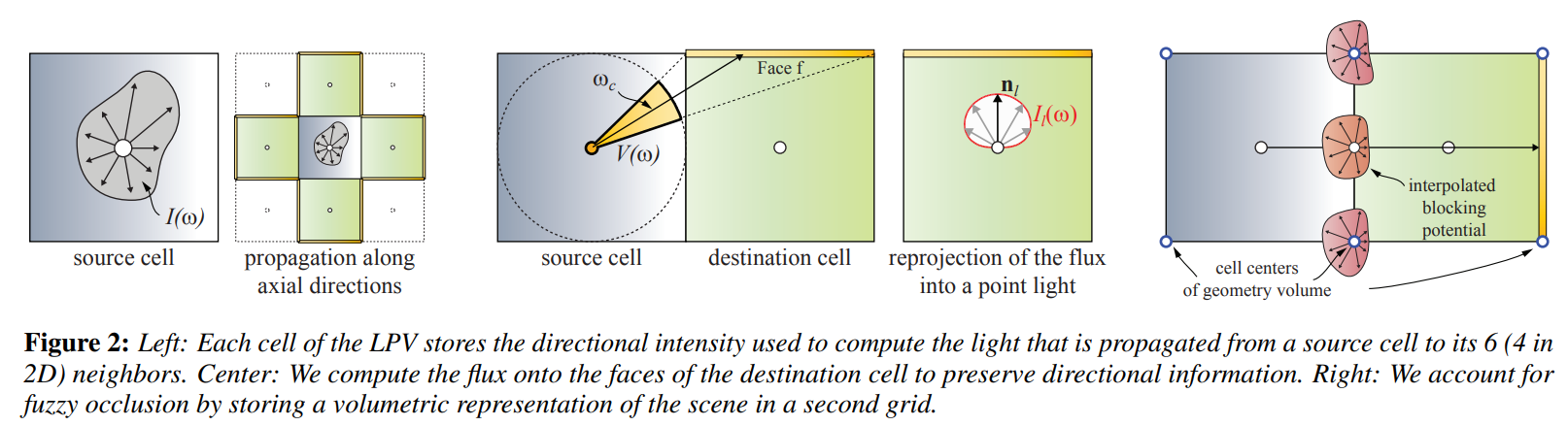

LPV

- LPV的实现需要RSM。同样的,LPV也将直接光照的结果作为VPL,相比于RSM和VXGI,LPV将VPL的radiant intensity记录在体素中,并进行传播。显然的是,要记录各个方向的radiant intensity并记录在每一个体素中,不能直接使用光照烘焙的结果,类似于预计算方法,我们对其进行编码压缩。这里,我们使用球面谐波SH。这是因为我们使用LPV仅仅计算diffuse ambient,而SH的低阶band就足以来描述diffuse了。一般是使用两阶。

Injection

- 首先,我们要将RSM中的flux转换为radient intensity并注射进体素中。与RSM一样,我们将VPL视作flux在半球空间内余弦分布。因此,我们表示出cos+的球谐系数,旋转向VPL的法线方向,并倍乘于flux。与VXGI一样,我们采取相似的方法注射。为了避免自照射,我们将VPL沿着法线方向移动半个体素大小,并将其放在其他体素中。

- 然后,我们将occlusion注射进入体素中。我们用如下的方法来描述一个pixel的occlusion,即用pixel的面积与体素的面积作比较,作为球谐系数的倍乘因子。这个也是很直观的,因为pixel的法线朝向就是他的最大遮挡方向。至于occlusion的来源,可以从RSM或者screen中获得,此处我只实现了RSM。需要注意的是,occlusion的体素中心位于radient intensity体素的角上,这是因为occlusion不是用于衡量体素的遮挡情况的,而更多的是用于衡量每个体素的六个面的遮挡情况,用于在propagation时进行衰减,而通过插值来实现更好的面occlusion评估。

Propagation

- 我们需要将一个体素的radient intensity传递到周围的六个体素中。我们将一个体素视作一个有着六个具有遮挡效果的面的盒子,在传播的时候认为邻面是无遮挡的,但是其余的五个面可能存在着对传播过去的radiant intensity的遮蔽效果,而每一个体素中记录的是出射光,也就是考虑了遮蔽之后出射该体素的光,因此,我们需要两步:计算出传播到邻近某个体素的每个面的flux,然后考虑遮蔽因子得到该体素这五个面出射的flux。

- 因为我们使用SH来记录radiant intensity,所以可以获得任意采样方向的分布值,进一步的,对某一个面通过采样做积分,就可以拿到该面的入射flux。

- 但是显然的,这样带来了大量的计算。因此,我们做如下的简化。我们假设入射某个面的radiant intensity是均匀的,并取做立体角中线上的采样值。(当然了,我发现这些采样值的夹角不是那么规则,但是也是可以近似看作30°等分的,所以为了不费脑子,我假设全部是30°等分)。那么,我们只需要计算出每一个面的立体角比重,然后相乘就可以了。(进一步的,我发现侧面和对面的立体角自然是不同的,但是也没有那么不同,我再次假设他们的立体角都是一样的)。

- 这样,我们就可以通过一个体素周围的六个体素得到其六个面的入射flux,就跟之前的injection一样,我们按照一样的思路将他们injection到该体素中。法线自然就是这六个面的外法线。

- 但是,在并入体素之前,我们首先要对每个面插值计算其occlusion,然后去衰减这个面的入射flux作为出射flux,再去injection并入体素中。

#version 450 core

layout(local_size_x = 8,local_size_y = 8,local_size_z = 8) in;

layout(binding = 0, rgba8) uniform image3D texR;

layout(binding = 1, rgba8) uniform image3D texG;

layout(binding = 2, rgba8) uniform image3D texB;

layout(binding = 3, rgba8) uniform image3D texR1;

layout(binding = 4, rgba8) uniform image3D texG1;

layout(binding = 5, rgba8) uniform image3D texB1;

layout(binding = 6, rgba8) uniform image3D texBlock;

const float PI = 3.14159265359;

vec4 cosineCoeffs= vec4(

0.886218,

0.0,

1.02328,

0.0);

int Factorial(int n);

float P(int l, int m, float x);

float K(int l, int m);

float SH(int l, int m, float theta, float phi);

mat3 Zrotate(float angle);

vec4 RotateSHCoefficients(vec4 unrotatedCoeffs, float theta, float phi);

vec4 LightProR(float theta, float phi, vec3 direction, vec3 Nf, ivec3 pos);

vec4 LightProG(float theta, float phi, vec3 direction, vec3 Nf, ivec3 pos);

vec4 LightProB(float theta, float phi, vec3 direction, vec3 Nf, ivec3 pos);

ivec3 posTrans(vec3 pos);

void LightPro6x1(ivec3 pos, inout vec4 sumR, inout vec4 sumG, inout vec4 sumB);

uniform vec3 minPos;

uniform vec3 maxPos;

uniform int Step;

uniform bool first;

void main()

{

ivec3 Pos = ivec3(gl_GlobalInvocationID.xyz);

vec4 valueR = imageLoad(texR1,Pos);

vec4 valueG = imageLoad(texG1,Pos);

vec4 valueB = imageLoad(texB1,Pos);

LightPro6x1(Pos,valueR,valueG,valueB);

imageStore(texR1,Pos,valueR);

imageStore(texG1,Pos,valueG);

imageStore(texB1,Pos,valueB);

}

void LightPro6x1(ivec3 pos, inout vec4 sumR, inout vec4 sumG, inout vec4 sumB)

{

//R

vec3 direction =vec3(0,-1,0);

sumR+=LightProR(90.0f,270.0f,direction,vec3(0,-1,0),pos-ivec3(direction));

sumR+=LightProR(60.0f,270.0f,direction,vec3(0,0,1),pos-ivec3(direction));

sumR+=LightProR(120.0f,270.0f,direction,vec3(0,0,-1),pos-ivec3(direction));

sumR+=LightProR(90.0f,240.0f,direction,vec3(-1,0,0),pos-ivec3(direction));

sumR+=LightProR(90.0f,300.0f,direction,vec3(1,0,0),pos-ivec3(direction));

direction =vec3(0,1,0);

sumR+=LightProR(90.0f,90.0f,direction,vec3(0,1,0),pos-ivec3(direction));

sumR+=LightProR(60.0f,90.0f,direction,vec3(0,0,1),pos-ivec3(direction));

sumR+=LightProR(120.0f,90.0f,direction,vec3(0,0,-1),pos-ivec3(direction));

sumR+=LightProR(90.0f,60.0f,direction,vec3(1,0,0),pos-ivec3(direction));

sumR+=LightProR(90.0f,120.0f,direction,vec3(-1,0,0),pos-ivec3(direction));

direction =vec3(-1,0,0);

sumR+=LightProR(90.0f,180.0f,direction,vec3(-1,0,0),pos-ivec3(direction));

sumR+=LightProR(60.0f,180.0f,direction,vec3(0,0,1),pos-ivec3(direction));

sumR+=LightProR(120.0f,180.0f,direction,vec3(0,0,-1),pos-ivec3(direction));

sumR+=LightProR(90.0f,150.0f,direction,vec3(0,0,1),pos-ivec3(direction));

sumR+=LightProR(90.0f,210.0f,direction,vec3(0,0,-1),pos-ivec3(direction));

direction =vec3(1,0,0);

sumR+=LightProR(90.0f,0.0f,direction,vec3(1,0,0),pos-ivec3(direction));

sumR+=LightProR(60.0f,0.0f,direction,vec3(0,0,1),pos-ivec3(direction));

sumR+=LightProR(120.0f,0.0f,direction,vec3(0,0,-1),pos-ivec3(direction));

sumR+=LightProR(90.0f,-30.0f,direction,vec3(0,-1,0),pos-ivec3(direction));

sumR+=LightProR(90.0f,30.0f,direction,vec3(0,1,0),pos-ivec3(direction));

direction =vec3(0,0,-1);

sumR+=LightProR(180.0f,0.0f,direction,vec3(0,0,-1),pos-ivec3(direction));

sumR+=LightProR(150.0f,0.0f,direction,vec3(1,0,0),pos-ivec3(direction));

sumR+=LightProR(150.0f,90.0f,direction,vec3(0,1,0),pos-ivec3(direction));

sumR+=LightProR(150.0f,180.0f,direction,vec3(-1,0,0),pos-ivec3(direction));

sumR+=LightProR(150.0f,270.0f,direction,vec3(0,-1,0),pos-ivec3(direction));

direction =vec3(0,0,1);

sumR+=LightProR(0.0f,0.0f,direction,vec3(0,0,1),pos-ivec3(direction));

sumR+=LightProR(30.0f,0.0f,direction,vec3(1,0,0),pos-ivec3(direction));

sumR+=LightProR(30.0f,90.0f,direction,vec3(0,1,0),pos-ivec3(direction));

sumR+=LightProR(30.0f,180.0f,direction,vec3(-1,0,0),pos-ivec3(direction));

sumR+=LightProR(30.0f,270.0f,direction,vec3(0,-1,0),pos-ivec3(direction));

//G

direction =vec3(0,-1,0);

sumG+=LightProG(90.0f,270.0f,direction,vec3(0,-1,0),pos-ivec3(direction));

sumG+=LightProG(60.0f,270.0f,direction,vec3(0,0,1),pos-ivec3(direction));

sumG+=LightProG(120.0f,270.0f,direction,vec3(0,0,-1),pos-ivec3(direction));

sumG+=LightProG(90.0f,240.0f,direction,vec3(-1,0,0),pos-ivec3(direction));

sumG+=LightProG(90.0f,300.0f,direction,vec3(1,0,0),pos-ivec3(direction));

direction =vec3(0,1,0);

sumG+=LightProG(90.0f,90.0f,direction,vec3(0,1,0),pos-ivec3(direction));

sumG+=LightProG(60.0f,90.0f,direction,vec3(0,0,1),pos-ivec3(direction));

sumG+=LightProG(120.0f,90.0f,direction,vec3(0,0,-1),pos-ivec3(direction));

sumG+=LightProG(90.0f,60.0f,direction,vec3(1,0,0),pos-ivec3(direction));

sumG+=LightProG(90.0f,120.0f,direction,vec3(-1,0,0),pos-ivec3(direction));

direction =vec3(-1,0,0);

sumG+=LightProG(90.0f,180.0f,direction,vec3(-1,0,0),pos-ivec3(direction));

sumG+=LightProG(60.0f,180.0f,direction,vec3(0,0,1),pos-ivec3(direction));

sumG+=LightProG(120.0f,180.0f,direction,vec3(0,0,-1),pos-ivec3(direction));

sumG+=LightProG(90.0f,150.0f,direction,vec3(0,0,1),pos-ivec3(direction));

sumG+=LightProG(90.0f,210.0f,direction,vec3(0,0,-1),pos-ivec3(direction));

direction =vec3(1,0,0);

sumG+=LightProG(90.0f,0.0f,direction,vec3(1,0,0),pos-ivec3(direction));

sumG+=LightProG(60.0f,0.0f,direction,vec3(0,0,1),pos-ivec3(direction));

sumG+=LightProG(120.0f,0.0f,direction,vec3(0,0,-1),pos-ivec3(direction));

sumG+=LightProG(90.0f,-30.0f,direction,vec3(0,-1,0),pos-ivec3(direction));

sumG+=LightProG(90.0f,30.0f,direction,vec3(0,1,0),pos-ivec3(direction));

direction =vec3(0,0,-1);

sumG+=LightProG(180.0f,0.0f,direction,vec3(0,0,-1),pos-ivec3(direction));

sumG+=LightProG(150.0f,0.0f,direction,vec3(1,0,0),pos-ivec3(direction));

sumG+=LightProG(150.0f,90.0f,direction,vec3(0,1,0),pos-ivec3(direction));

sumG+=LightProG(150.0f,180.0f,direction,vec3(-1,0,0),pos-ivec3(direction));

sumG+=LightProG(150.0f,270.0f,direction,vec3(0,-1,0),pos-ivec3(direction));

direction =vec3(0,0,1);

sumG+=LightProG(0.0f,0.0f,direction,vec3(0,0,1),pos-ivec3(direction));

sumG+=LightProG(30.0f,0.0f,direction,vec3(1,0,0),pos-ivec3(direction));

sumG+=LightProG(30.0f,90.0f,direction,vec3(0,1,0),pos-ivec3(direction));

sumG+=LightProG(30.0f,180.0f,direction,vec3(-1,0,0),pos-ivec3(direction));

sumG+=LightProG(30.0f,270.0f,direction,vec3(0,-1,0),pos-ivec3(direction));

//B

direction =vec3(0,-1,0);

sumB+=LightProB(90.0f,270.0f,direction,vec3(0,-1,0),pos-ivec3(direction));

sumB+=LightProB(60.0f,270.0f,direction,vec3(0,0,1),pos-ivec3(direction));

sumB+=LightProB(120.0f,270.0f,direction,vec3(0,0,-1),pos-ivec3(direction));

sumB+=LightProB(90.0f,240.0f,direction,vec3(-1,0,0),pos-ivec3(direction));

sumB+=LightProB(90.0f,300.0f,direction,vec3(1,0,0),pos-ivec3(direction));

direction =vec3(0,1,0);

sumB+=LightProB(90.0f,90.0f,direction,vec3(0,1,0),pos-ivec3(direction));

sumB+=LightProB(60.0f,90.0f,direction,vec3(0,0,1),pos-ivec3(direction));

sumB+=LightProB(120.0f,90.0f,direction,vec3(0,0,-1),pos-ivec3(direction));

sumB+=LightProB(90.0f,60.0f,direction,vec3(1,0,0),pos-ivec3(direction));

sumB+=LightProB(90.0f,120.0f,direction,vec3(-1,0,0),pos-ivec3(direction));

direction =vec3(-1,0,0);

sumB+=LightProB(90.0f,180.0f,direction,vec3(-1,0,0),pos-ivec3(direction));

sumB+=LightProB(60.0f,180.0f,direction,vec3(0,0,1),pos-ivec3(direction));

sumB+=LightProB(120.0f,180.0f,direction,vec3(0,0,-1),pos-ivec3(direction));

sumB+=LightProB(90.0f,150.0f,direction,vec3(0,0,1),pos-ivec3(direction));

sumB+=LightProB(90.0f,210.0f,direction,vec3(0,0,-1),pos-ivec3(direction));

direction =vec3(1,0,0);

sumB+=LightProB(90.0f,0.0f,direction,vec3(1,0,0),pos-ivec3(direction));

sumB+=LightProB(60.0f,0.0f,direction,vec3(0,0,1),pos-ivec3(direction));

sumB+=LightProB(120.0f,0.0f,direction,vec3(0,0,-1),pos-ivec3(direction));

sumB+=LightProB(90.0f,-30.0f,direction,vec3(0,-1,0),pos-ivec3(direction));

sumB+=LightProB(90.0f,30.0f,direction,vec3(0,1,0),pos-ivec3(direction));

direction =vec3(0,0,-1);

sumB+=LightProB(180.0f,0.0f,direction,vec3(0,0,-1),pos-ivec3(direction));

sumB+=LightProB(150.0f,0.0f,direction,vec3(1,0,0),pos-ivec3(direction));

sumB+=LightProB(150.0f,90.0f,direction,vec3(0,1,0),pos-ivec3(direction));

sumB+=LightProB(150.0f,180.0f,direction,vec3(-1,0,0),pos-ivec3(direction));

sumB+=LightProB(150.0f,270.0f,direction,vec3(0,-1,0),pos-ivec3(direction));

direction =vec3(0,0,1);

sumB+=LightProB(0.0f,0.0f,direction,vec3(0,0,1),pos-ivec3(direction));

sumB+=LightProB(30.0f,0.0f,direction,vec3(1,0,0),pos-ivec3(direction));

sumB+=LightProB(30.0f,90.0f,direction,vec3(0,1,0),pos-ivec3(direction));

sumB+=LightProB(30.0f,180.0f,direction,vec3(-1,0,0),pos-ivec3(direction));

sumB+=LightProB(30.0f,270.0f,direction,vec3(0,-1,0),pos-ivec3(direction));

}

ivec3 posTrans(vec3 pos)

{

vec3 result = pos-(maxPos+minPos)/2;

result /=(maxPos-minPos);

result*=Step;

result+=vec3(Step/2);

return ivec3(result);

}

vec4 LightProR(float theta, float phi, vec3 direction, vec3 Nf, ivec3 pos)

{

theta*=PI/180.0f;

phi*=PI/180.0f;

vec4 ywc = vec4(SH(0,0,theta,phi),SH(1,-1,theta,phi),SH(1,0,theta,phi),SH(1,1,theta,phi));

vec4 I = imageLoad(texR,pos);

float Iwc = dot(I,ywc);

if(!first)

{

vec3 posFace = vec3(pos)+direction+Nf*0.5+vec3(0.5);

vec4 B=vec4(0.0);

for(int i=-1;i<=1;i+=2)

{

for(int j=-1;j<=1;j+=2)

{

vec3 deltaPos;

if(Nf.x!=0)

deltaPos = vec3(0,i,j);

else if(Nf.y!=0)

deltaPos = vec3(i,0,j);

else

deltaPos = vec3(i,j,0);

B+=imageLoad(texBlock,ivec3(posFace+deltaPos*0.5));

}

}

B/=4.0;

float Bwc = dot(B,ywc);

Iwc*=(1.0-Bwc);

}

float dwf = 0.41;

float fluxf = dwf/PI*Iwc;

float fluxl = fluxf/PI;

float thetaFace = acos(Nf.z);

float phiFace =atan(Nf.y,Nf.x)+PI;

vec4 cosine = RotateSHCoefficients(cosineCoeffs, thetaFace, phiFace);

vec4 Il = cosine*fluxl;

return Il;

}

vec4 LightProG(float theta, float phi, vec3 direction, vec3 Nf, ivec3 pos)

{

theta*=PI/180.0f;

phi*=PI/180.0f;

vec4 ywc = vec4(SH(0,0,theta,phi),SH(1,-1,theta,phi),SH(1,0,theta,phi),SH(1,1,theta,phi));

vec4 I = imageLoad(texG,pos);

float Iwc = dot(I,ywc);

if(!first)

{

vec3 posFace = vec3(pos)+direction+Nf*0.5+vec3(0.5);

vec4 B=vec4(0.0);

for(int i=-1;i<=1;i+=2)

{

for(int j=-1;j<=1;j+=2)

{

vec3 deltaPos;

if(Nf.x!=0)

deltaPos = vec3(0,i,j);

else if(Nf.y!=0)

deltaPos = vec3(i,0,j);

else

deltaPos = vec3(i,j,0);

B+=imageLoad(texBlock,ivec3(posFace+deltaPos*0.5));

}

}

B/=4.0;

float Bwc = dot(B,ywc);

Iwc*=(1.0-Bwc);

}

float dwf = 0.41;

float fluxf = dwf/PI*Iwc;

float fluxl = fluxf/PI;

float thetaFace = acos(Nf.z);

float phiFace = atan(Nf.y,Nf.x)+PI;

vec4 cosine = RotateSHCoefficients(cosineCoeffs, thetaFace, phiFace);

vec4 Il = cosine*fluxl;

return Il;

}

vec4 LightProB(float theta, float phi, vec3 direction, vec3 Nf, ivec3 pos)

{

theta*=PI/180.0f;

phi*=PI/180.0f;

vec4 ywc = vec4(SH(0,0,theta,phi),SH(1,-1,theta,phi),SH(1,0,theta,phi),SH(1,1,theta,phi));

vec4 I = imageLoad(texB,pos);

float Iwc = dot(I,ywc);

if(!first)

{

vec3 posFace = vec3(pos)+direction+Nf*0.5+vec3(0.5);

vec4 B=vec4(0.0);

for(int i=-1;i<=1;i+=2)

{

for(int j=-1;j<=1;j+=2)

{

vec3 deltaPos;

if(Nf.x!=0)

deltaPos = vec3(0,i,j);

else if(Nf.y!=0)

deltaPos = vec3(i,0,j);

else

deltaPos = vec3(i,j,0);

B+=imageLoad(texBlock,ivec3(posFace+deltaPos*0.5));

}

}

B/=4.0;

float Bwc = dot(B,ywc);

Iwc*=(1.0-Bwc);

}

float dwf = 0.41;

float fluxf = dwf/PI*Iwc;

float fluxl = fluxf/PI;

float thetaFace = acos(Nf.z);

float phiFace = atan(Nf.y,Nf.x)+PI;

vec4 cosine = RotateSHCoefficients(cosineCoeffs, thetaFace, phiFace);

vec4 Il = cosine*fluxl;

return Il;

}

int Factorial(int n)

{

if (n <= 1)

return 1;

int k = n;

int fact = n;

while (--k > 1)

{

fact *= k;

}

return fact;

}

float P(int l, int m, float x)

{

//l=m

float Pmm = 1.0;

if (m > 0)

{

float item = sqrt(1.0 - x * x);

for (int i = 1; i <= m; i++)

{

Pmm *= item * (2.0 * i - 1.0) * (-1.0);

}

}

if (l == m)

return Pmm;

//l=m+1

float Pmp1m = (2.0 * m + 1.0) * x * Pmm;

if (l == m + 1)

return Pmp1m;

//l>m+1

float Plm = 1.0;

for (int i = m + 2; i <= l; i++)

{

Plm = x * (2.0 * i - 1.0) * Pmp1m - (i + m - 1.0) * Pmm;

Plm /= (i - m);

Pmm = Pmp1m;

Pmp1m = Plm;

}

return Plm;

}

float K(int l, int m)

{

float item = (2.0 * l + 1.0) / (4.0 * PI) * Factorial(l - m) / Factorial(l + m);

return sqrt(item);

}

float SH(int l, int m, float theta, float phi)

{

if (m == 0)

return K(l, m) * P(l, m, cos(theta));

if (m > 0)

return K(l, m) * P(l, m, cos(theta)) * sqrt(2.0) * cos(m * phi);

return K(l, -m) * P(l, -m, cos(theta)) * sqrt(2.0) * sin(-m * phi);

}

vec4 RotateSHCoefficients(vec4 unrotatedCoeffs, float theta, float phi)

{

vec4 rotatedCoeffs = vec4(0.0);

//Band 0 coefficient is unchanged

rotatedCoeffs.x = unrotatedCoeffs.x;

//Rotate band 1 coefficients

mat3 Xrotate90 = mat3(0,-1,0,1,0,0,0,0,1);

rotatedCoeffs.yzw = Zrotate(phi)*transpose(Xrotate90)*Zrotate(theta)*Xrotate90*unrotatedCoeffs.yzw;

return rotatedCoeffs;

}

mat3 Zrotate(float angle)

{

return mat3(cos(angle),0,-sin(angle),0,1,0,sin(angle),0,cos(angle));

}

Renderring

- 渲染的思路很简单,我们只需要根据该着色点的位置拿到经过了双线性插值的光照球谐,然后取着色点的负法线方向作为入射方向来采样就可以拿到flux了。这是因为我们将体素视作VPL,那么能够照射到着色点的就是直射光。实际上,被接受的flux就可以看作是着色点的radience了,可以直接进行着色计算了。当然了,作者认为该成分仍然需要进一步转换,即着色点是一个距离texelSize且尺寸为体素尺寸一半的着色面,这样会使得更暗一些,但是似乎这会使得着色效果受到体素化过程中的体素划分尺寸的影响。

- 上面的是diffuse ambient,实际上作者给出了glossy ambient的实现思路。我们可以按照反射方向查找多个体素,将其光照强度在负反射方向的采样累积,就可以得到镜面反射的入射光照。

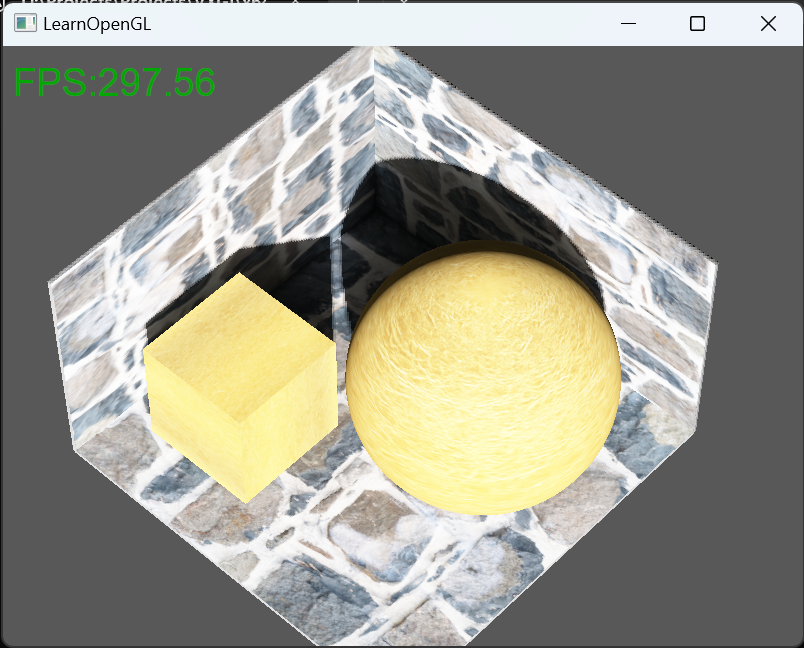

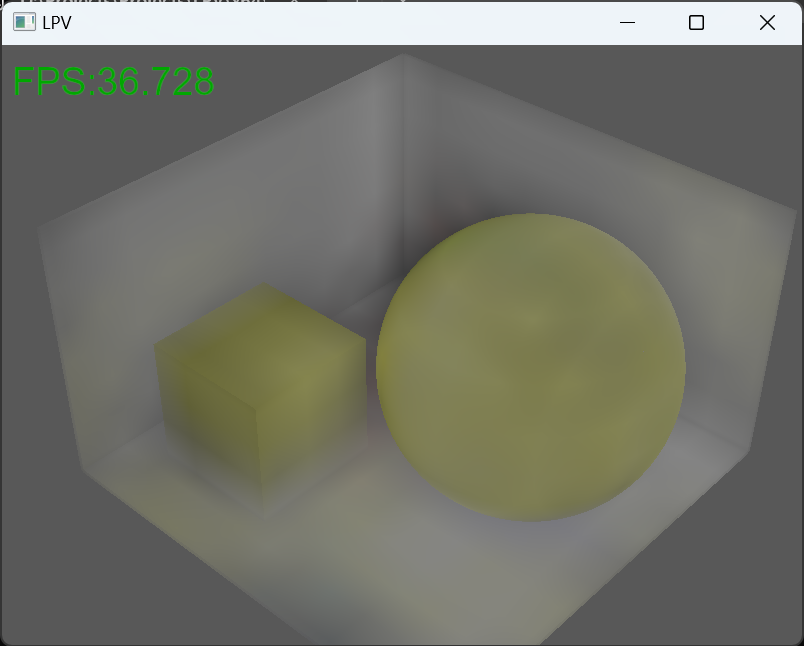

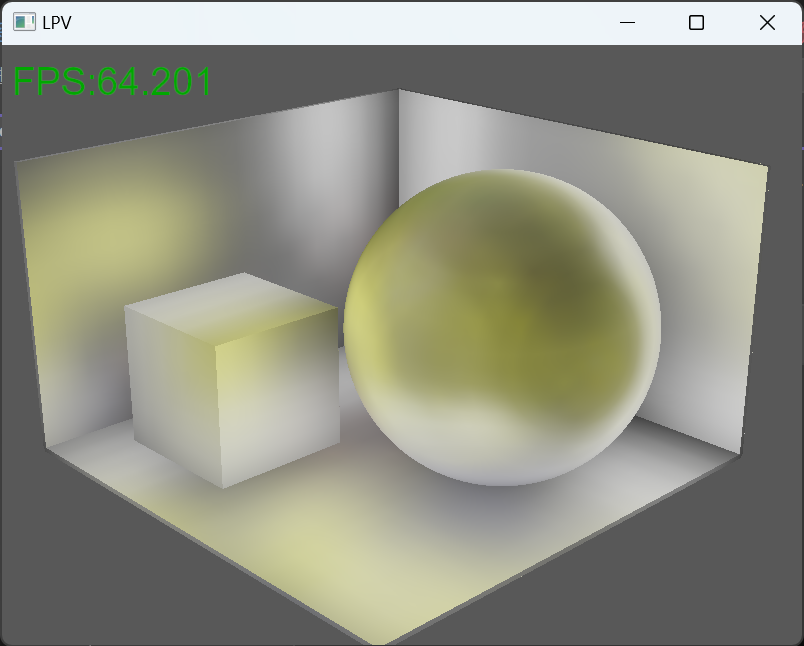

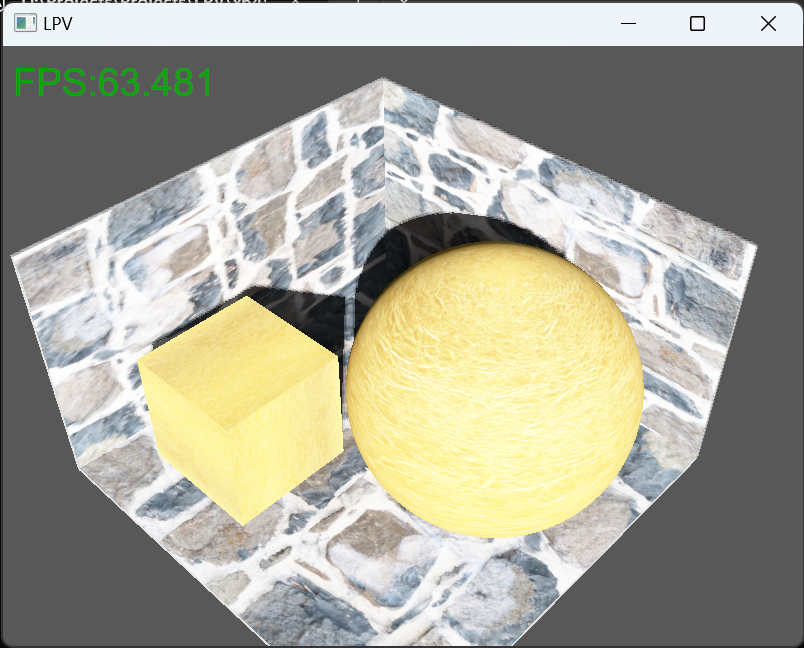

ambient光照计算效果:

全局光照:

void main()

{

//direct

vec3 direct = calcDirLight(dirlight, fs_in.normal, viewPos, fs_in.fragPos);

//indirect

vec3 Id = getLight(-fs_in.normal,fs_in.fragPos);

vec3 Is=vec3(0.0);

vec3 viewDir = -fs_in.fragPos+viewPos;

vec3 reflectDir = normalize(reflect(viewDir,fs_in.normal));

float texelSize = (maxPos-minPos).x/Step;

for(int i=1;i<=4;i++)

{

Is+=getLight(reflectDir,fs_in.fragPos-reflectDir*texelSize*i);

}

//Ls/=4.0;

vec3 I =Is+Id;

float len = texelSize*0.5;

vec3 L=I*len*len;

vec3 ambient = L* vec3(texture(material.diffuse, fs_in.texCoord));

//GI

vec3 GI = ambient + direct;

fragColor=vec4(L,1.0);

}

vec3 getLight(vec3 direction, vec3 worldPos)

{

float theta =acos(direction.z);

float phi = atan(direction.y,direction.x)+PI;

vec4 ylm = vec4(SH(0,0,theta,phi),SH(1,-1,theta,phi),SH(1,0,theta,phi),SH(1,1,theta,phi));

vec3 Pos = posTransToNdc(worldPos);

vec4 cR = textureLod(texR,Pos,0);

vec4 cG = textureLod(texG,Pos,0);

vec4 cB = textureLod(texB,Pos,0);

vec3 L = max(vec3(dot(cR,ylm),dot(cG,ylm),dot(cB,ylm)),0.0);

return L;

}

待续:屏幕空间算法与高光

参考

[1]Carsten Dachsbacher. Reflective Shadow Maps

[2]Cyril Crassin. Interactive Indirect Illumination Using Voxel Cone Tracing

[3]José Villegas. DEFERRED VOXEL SHADING FOR REAL TIME GLOBAL ILLUMINATION

[4]Anton Kaplanyan. Cascaded Light Propagation Volumes for Real-Time Indirect Illumination

本文来自博客园,作者:ETHERovo,转载请注明原文链接:https://www.cnblogs.com/etherovo/p/17484094.html

浙公网安备 33010602011771号

浙公网安备 33010602011771号