OpenGL-05 Advanced Lighting

I. Blinn-Phong model

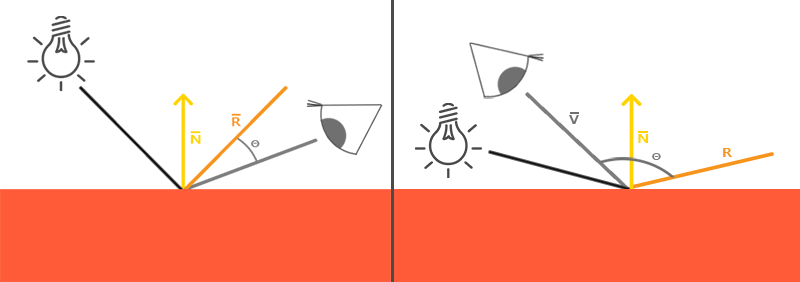

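- The Phong model computes the specular term from the angle between the view direction and the reflection direction. Once that angle exceeds 90 degrees the dot product becomes negative and is clamped, so the specular contribution drops to zero. Usually this is not noticeable, but when the shininess exponent is low and the highlight is therefore broad, the highlight is visibly cut off at its edge.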

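- The Blinn-Phong model instead uses the angle between the halfway vector and the normal, which fixes the problem: as long as both the light and the viewer are above the surface, that angle never exceeds 90 degrees. Everything else stays the same.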

//specular

vec3 viewDir = normalize(viewPos - fragPos);

vec3 halfwayDir = normalize(viewDir + lightDir);

float spec = pow(max(dot(halfwayDir, normal), 0.0), material.shininess);

vec3 specular = light.specular * spec * vec3(texture(material.specular, texCoord));
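To make the cutoff concrete, the two specular terms can be compared on the CPU. The following is a minimal C++ sketch, not code from this project: the Vec3 type, its helpers, and the function names phongSpec and blinnPhongSpec are made up for illustration, and all direction vectors are assumed to be normalized and to point away from the surface.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

struct Vec3 { double x, y, z; };

inline double dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
inline Vec3 add(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
inline Vec3 sub(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
inline Vec3 scale(Vec3 a, double s) { return {a.x * s, a.y * s, a.z * s}; }
inline Vec3 normalize(Vec3 a) { return scale(a, 1.0 / std::sqrt(dot(a, a))); }

// Phong: cosine of the angle between the view direction and the
// reflected light direction, clamped at zero.
inline double phongSpec(Vec3 n, Vec3 lightDir, Vec3 viewDir, double shininess) {
    assert(shininess > 0.0);
    // Same result as GLSL reflect(-lightDir, n).
    Vec3 r = sub(scale(n, 2.0 * dot(n, lightDir)), lightDir);
    return std::pow(std::max(dot(viewDir, r), 0.0), shininess);
}

// Blinn-Phong: cosine of the angle between the halfway vector and the normal.
inline double blinnPhongSpec(Vec3 n, Vec3 lightDir, Vec3 viewDir, double shininess) {
    assert(shininess > 0.0);
    Vec3 h = normalize(add(lightDir, viewDir));
    return std::pow(std::max(dot(h, n), 0.0), shininess);
}
```

With the normal pointing up and both the light and the viewer at the same grazing angle on the same side, phongSpec returns zero while blinnPhongSpec stays positive, which is the truncated highlight edge described above.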

II. Gamma correction

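- Before looking at gamma correction we need to understand what a linear color space is. Why insist on linear space? As the name suggests, a linear space is one in which linear operations on colors still give correct results. Strictly speaking there is only one such space, the physical one. In nature, brightness is described by the number of photons, or the light intensity, and this mapping is linear: brightness is proportional to photon count, so linear operations on brightness are physically meaningful. Note that "brightness" here is this physical definition, not perceived brightness; perceived brightness is non-linear in photon count.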

- With that in mind, any color computation that simulates natural lighting has to be carried out in linear space to be meaningful.

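- Another point worth stressing: the human visual system converts physical brightness (photon count) into perceived brightness, and this conversion is non-linear, roughly a power law with exponent 1/2.2. As a result we are more sensitive to dark regions; perceived brightness is non-linear and skewed towards bright. The visual system is itself a physical system that converts light into electrical signals (photons into electrons), which ties in with the CRT display discussed next.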

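- A CRT display is a physical device that converts electricity into light, the reverse of what the visual system does. Its response is not linear either; it is roughly a power law with exponent 2.2, so linear color values come out of a CRT as a darker, non-linear signal.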

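- Putting the visual system and the CRT together, it is tempting to think that the two non-linearities roughly cancel, so that brightness computed linearly on the computer ends up linear in our perception. That reasoning is wrong. As noted above, the visual system's response is non-linear, but we learn to judge brightness from the linear, physical world. So the light that actually reaches the eye must be in linear space, just as it is in nature.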

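- Combining the two requirements (color computation on the computer happens in linear space, and the light emitted by the display must be linear), the conclusion is that the linear result has to be raised to a power of roughly 1/2.2 before it is sent to the display. The display's 2.2 response then cancels it, the emitted light is linear, and the eye sees reasonable brightness. This step is gamma correction.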

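- OpenGL offers two ways to apply gamma correction:

- glEnable(GL_FRAMEBUFFER_SRGB); With this enabled, OpenGL applies gamma correction itself when writing to the color buffer, converting from linear space to sRGB, which corresponds roughly to gamma 2.2. The shader then only has to compute in linear space and does no gamma correction of its own. The obvious drawback is that we can only switch it on or off; we do not control how it is done.

- The other way is to leave GL_FRAMEBUFFER_SRGB disabled and apply gamma correction ourselves at the end of the fragment shader, so that the values OpenGL writes to the ordinary RGB buffer are already sRGB-encoded. This gives finer-grained control.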

//gamma

float gamma = 2.2;

fragColor.rgb = pow(fragColor.rgb, vec3(1.0 / gamma));
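The effect of this one line can be checked with plain numbers. Below is a small C++ sketch under the same simplification as the shader (a pure power law with gamma 2.2 rather than the exact sRGB curve); the function names gammaEncode and displayResponse are illustrative only.

```cpp
#include <cassert>
#include <cmath>

// Gamma-encode a linear value in [0, 1] before it is sent to the display.
inline double gammaEncode(double linear, double gamma = 2.2) {
    assert(linear >= 0.0 && gamma > 0.0);
    return std::pow(linear, 1.0 / gamma);
}

// What a gamma-2.2 display does to the value it receives.
inline double displayResponse(double signal, double gamma = 2.2) {
    assert(signal >= 0.0 && gamma > 0.0);
    return std::pow(signal, gamma);
}
```

A linear mid-gray of 0.5 sent straight to the display comes out at roughly 0.22, which is why uncorrected scenes look dark. Encoded first, it is stored as roughly 0.73 and the display brings it back to 0.5.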

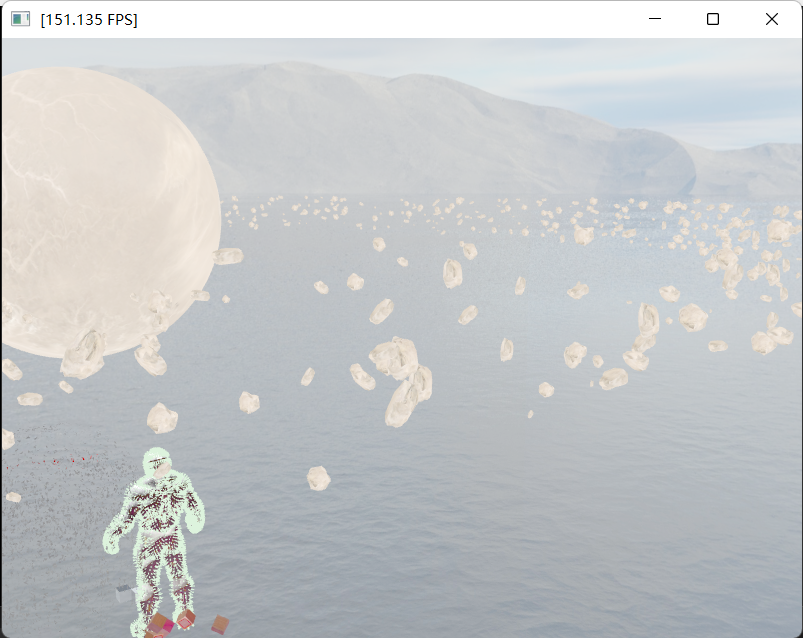

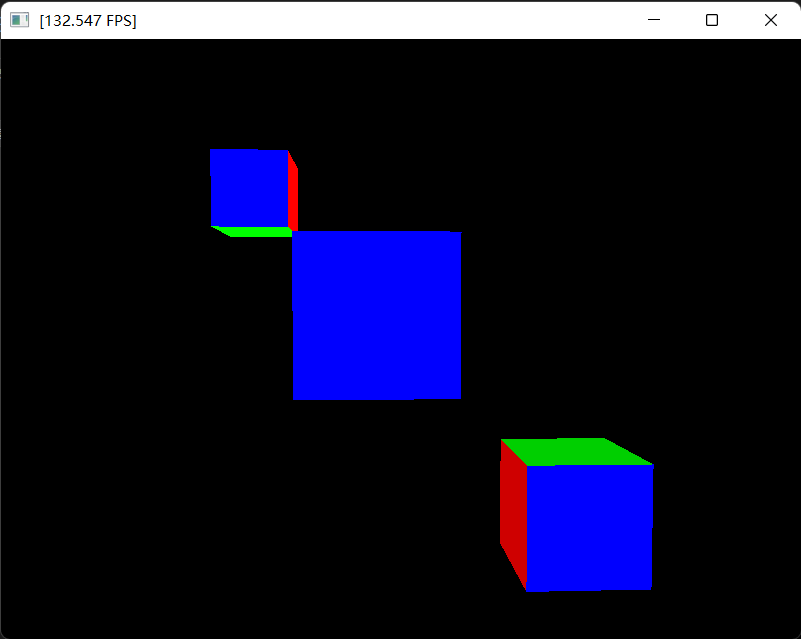

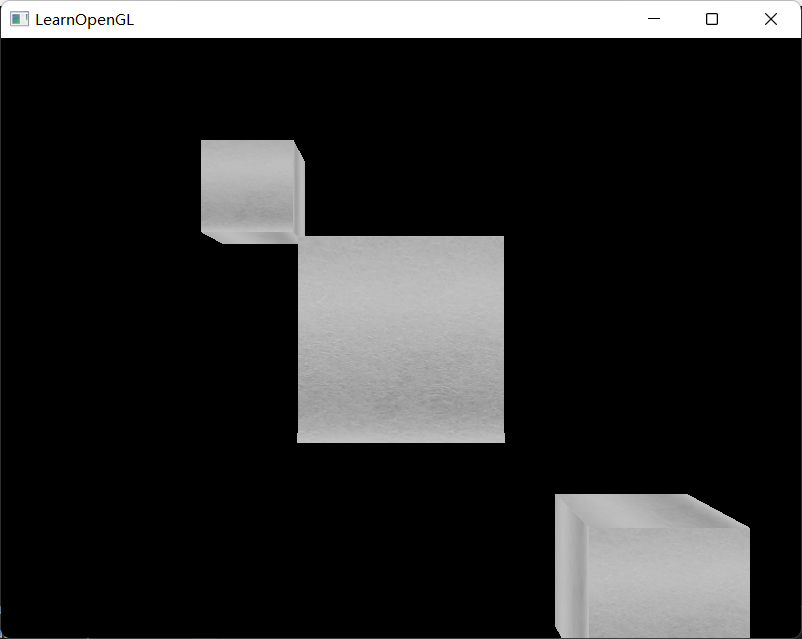

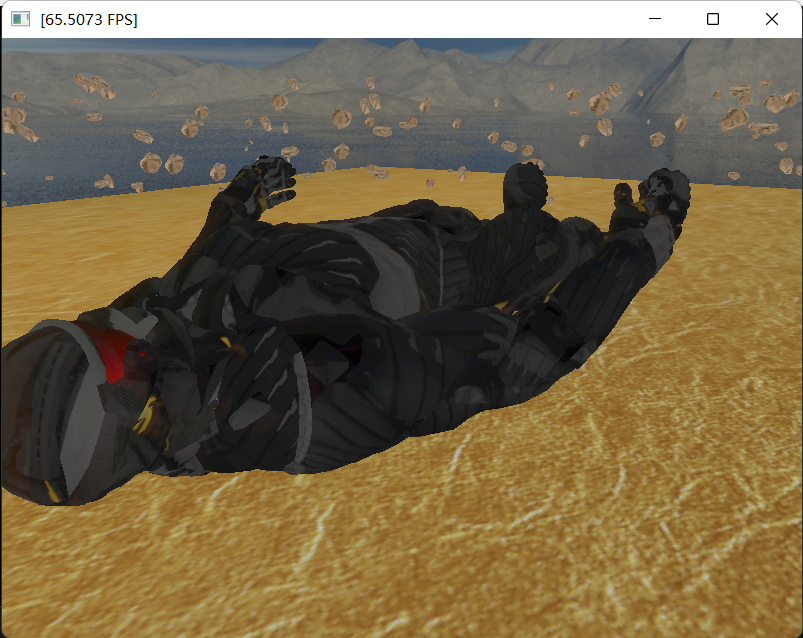

Look how bright that is!

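- One obvious problem: the gamma-corrected scene is far too bright. Why? Almost everything we see in the scene is rendered from texture maps, and texture maps are not in linear space. How are textures authored? Artists tune them while looking at a monitor. To the artist the texture looks right, meaning its brightness is correct after passing through the display. The colors stored in the texture are therefore not linear; they are already gamma-encoded sRGB colors. If we gamma-correct the colors sampled from such a texture, we have effectively applied gamma correction twice, so the result is too bright. Conversely, when we did no gamma correction at all, the texture colors carried their own encoding and displayed correctly. However, as soon as we do arithmetic on colors, computing directly with sRGB texture values gives wrong results. So texture colors must first be converted from sRGB to linear space.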

- The first option: after fetching the color with texture() in the fragment shader, undo the gamma encoding before using it.

float gamma = 2.2;

vec3 diffuseColor = pow(texture(diffuse, texCoords).rgb, vec3(gamma));

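- A more convenient option: when uploading the texture with glTexImage2D, set the internal format to GL_SRGB or GL_SRGB_ALPHA. OpenGL then converts the color channels from sRGB to linear RGB whenever the texture is sampled (the alpha channel is left as is).

glTexImage2D(GL_TEXTURE_2D, 0, GL_SRGB, width, height, 0, GL_RGB, GL_UNSIGNED_BYTE, data);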

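- Note that only color textures need this decoding. Other textures such as depth maps and normal maps do not. Likewise, the texture attachment of a framebuffer we render into needs no conversion, since the colors stored there were computed in linear space in the first place.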

int width, height, nrChannels;

unsigned char* data = stbi_load((dir+'/' + pat).c_str(), &width, &height, &nrChannels, 0);

if (data)

{

GLenum interformat,format;

if (nrChannels == 1)

{

interformat = GL_RED;

format = GL_RED;

}

else if (nrChannels == 3)

{

interformat = GL_SRGB;

format = GL_RGB;

}

else if (nrChannels == 4)

{

interformat = GL_SRGB_ALPHA;

format = GL_RGBA;

}

glTexImage2D(GL_TEXTURE_2D, 0, interformat, width, height, 0, format, GL_UNSIGNED_BYTE, data);

glGenerateMipmap(GL_TEXTURE_2D);

}

else

{

std::cout << "Failed to load texture." << std::endl;

}

stbi_image_free(data);
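The double correction described above can be reproduced numerically. This is a hedged C++ sketch that approximates sRGB by a pure gamma-2.2 power law; decode, encode, shadeCorrect and shadeDoubleCorrected are made-up names for illustration, and the "lighting" is reduced to a single multiplication.

```cpp
#include <cassert>
#include <cmath>

const double kGamma = 2.2; // approximation of the sRGB curve

// sRGB-encoded texel -> linear value (roughly what a GL_SRGB texture does on sampling).
inline double decode(double encoded) {
    assert(encoded >= 0.0);
    return std::pow(encoded, kGamma);
}

// Linear value -> encoded value (the gamma correction at the end of the shader).
inline double encode(double linear) {
    assert(linear >= 0.0);
    return std::pow(linear, 1.0 / kGamma);
}

// Correct pipeline: decode the texel, light it in linear space, encode once.
inline double shadeCorrect(double texel, double light) {
    return encode(decode(texel) * light);
}

// Faulty pipeline: the texel is used as if it were linear, then encoded again.
inline double shadeDoubleCorrected(double texel, double light) {
    return encode(texel * light);
}
```

With full light, a texel of 0.5 comes back as 0.5 from shadeCorrect, but as roughly 0.73 from shadeDoubleCorrected, which matches the washed-out look in the screenshots.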

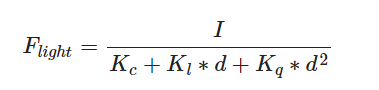

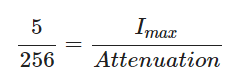

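- One last thing to note: without gamma correction, inverse-distance attenuation looks good, while inverse-square attenuation falls off too quickly. This is because the display's gamma turns 1/d into roughly (1/d)^2.2, which is close to the physically correct inverse-square falloff. With gamma correction enabled, inverse-square attenuation is the one that looks right.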

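- You may have noticed that the result is still a bit too bright, which is easy to see on the skybox: its texture is already gamma-encoded, so the output should look the same as the source image. It turned out that I applied gamma correction twice, once when rendering into the framebuffer texture and once more when drawing that texture to the default framebuffer. My own silly mistake; I have left it as is here.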
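The attenuation remark above can be checked with a few numbers. The C++ sketch below assumes a display with a pure gamma-2.2 response; displayed, linearAttenuation and quadraticAttenuation are illustrative names, not part of this project.

```cpp
#include <cassert>
#include <cmath>

// Light intensity actually emitted by a gamma-2.2 display when the shader
// writes `value` without any gamma correction.
inline double displayed(double value, double gamma = 2.2) {
    assert(value >= 0.0 && gamma > 0.0);
    return std::pow(value, gamma);
}

// Inverse-distance falloff.
inline double linearAttenuation(double d) {
    assert(d > 0.0);
    return 1.0 / d;
}

// Inverse-square falloff.
inline double quadraticAttenuation(double d) {
    assert(d > 0.0);
    return 1.0 / (d * d);
}
```

At a distance of 4, displayed(linearAttenuation(4)) is about 0.047, close to the physical 1/16 = 0.0625, whereas displayed(quadraticAttenuation(4)) is about 0.002, far too dark.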

III. Shadows

1. Shadow mapping (shadow map)

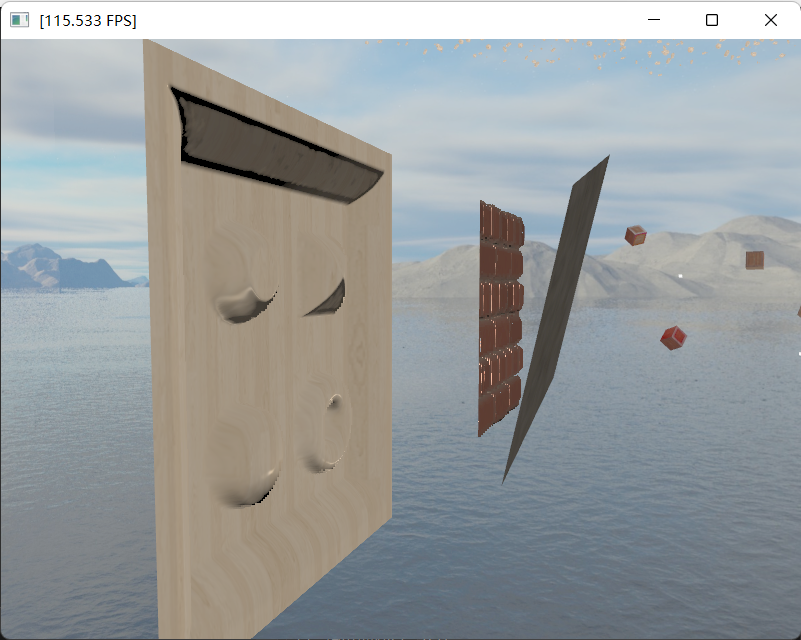

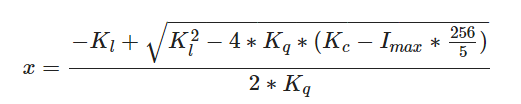

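- The Blinn-Phong result already seems to distinguish light from dark. That comes from the cosine terms and clamping applied to the lighting components, mainly diffuse and specular, so a single object shows lit and unlit sides and appears to be shaded. But once several objects are viewed together, it becomes clear that they cast no shadows on each other. This is expected: the "shadow" produced by the shading model is just the outcome of a test against the normal. It computes light intensity, not occlusion, so whatever darkening we see is not the physical occlusion we are after. Moreover, shading is evaluated per mesh, so there is no interaction between objects.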

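- We use a shadow map to implement shadows.

- First, create a framebuffer that records the depth seen from a given light source. It gets a depth attachment, and because we will read this depth in a fragment shader, the attachment is a depth texture. Since this depth is not rendered to the screen, its size is not the screen size; it is chosen according to the shadow resolution we need. Finally, a framebuffer is expected to have a color buffer, but we do not need one, so we explicitly tell OpenGL that no color buffer will be drawn to or read from (glDrawBuffer(GL_NONE) and glReadBuffer(GL_NONE)).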

unsigned int depthMapFBO;

glGenFramebuffers(1, &depthMapFBO);

glBindFramebuffer(GL_FRAMEBUFFER, depthMapFBO);

const unsigned int SHADOW_WIDTH = 1024, SHADOW_HEIGHT = 1024;

unsigned int depthMap;

glGenTextures(1, &depthMap);

glBindTexture(GL_TEXTURE_2D, depthMap);

glTexImage2D(GL_TEXTURE_2D, 0, GL_DEPTH_COMPONENT, SHADOW_WIDTH, SHADOW_HEIGHT, 0, GL_DEPTH_COMPONENT, GL_FLOAT, NULL);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_NEAREST);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_NEAREST);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_REPEAT);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_REPEAT);

glFramebufferTexture2D(GL_FRAMEBUFFER, GL_DEPTH_ATTACHMENT, GL_TEXTURE_2D, depthMap, 0);

glDrawBuffer(GL_NONE);

glReadBuffer(GL_NONE);

glBindFramebuffer(GL_FRAMEBUFFER, 0);

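- Next comes the shader that renders into this framebuffer. All it has to do is depth testing and depth writing, so it only needs to compute vertex positions; the fragment shader is empty.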

#version 330 core

layout(location = 0)in vec3 aPos;

uniform mat4 lightSpaceMatrix;

uniform mat4 model;

void main()

{

gl_Position = lightSpaceMatrix * model * vec4(aPos, 1.0);

}

#version 330 core

void main()

{

}

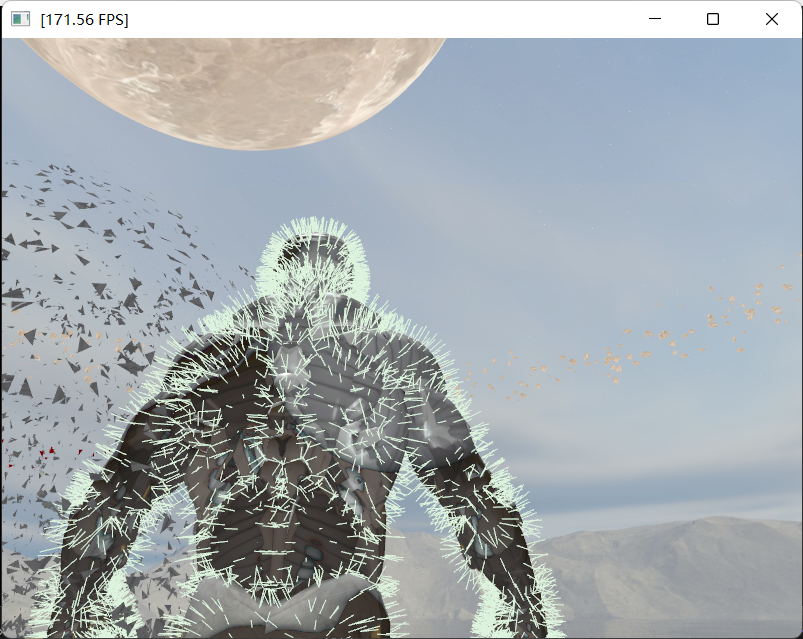

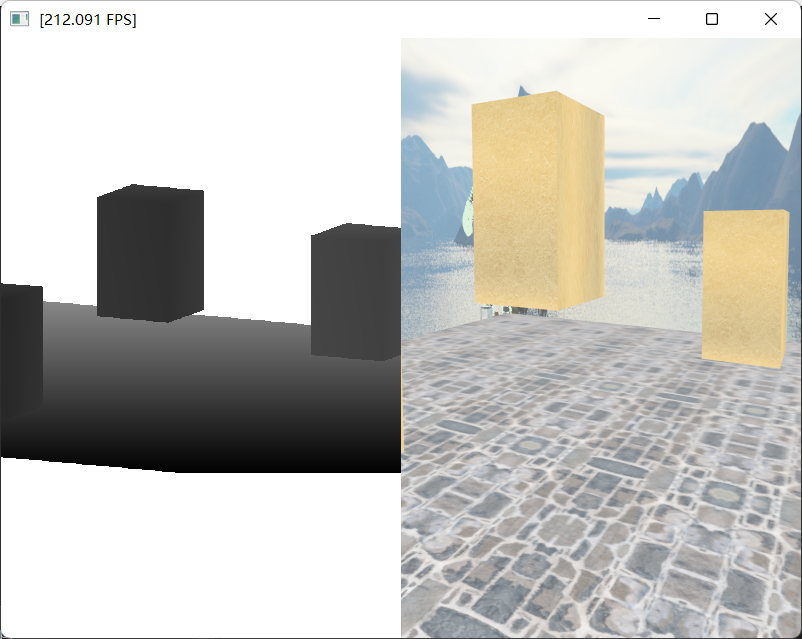

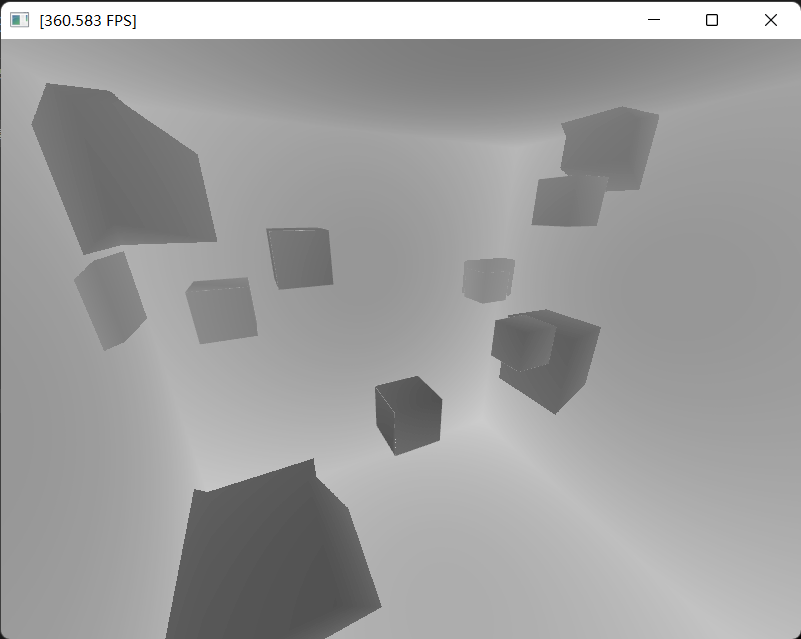

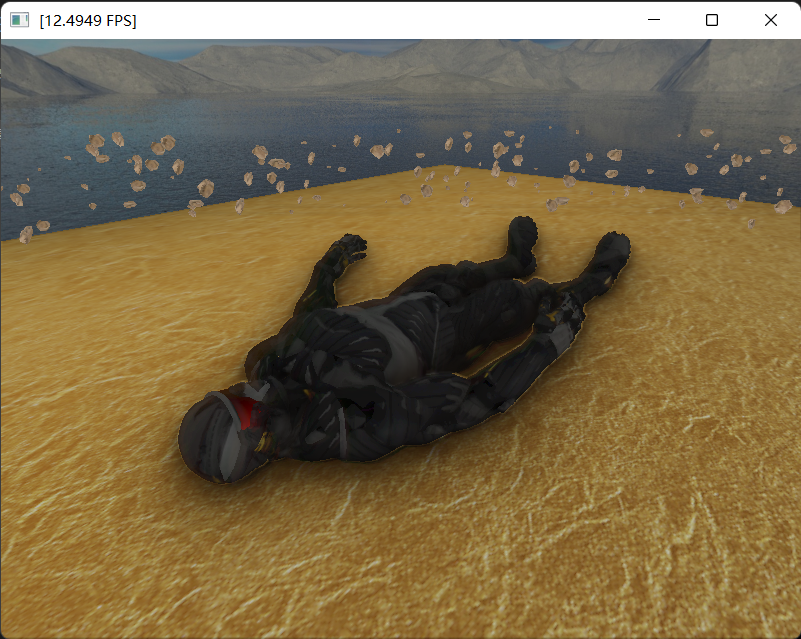

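- With the shader in place, we render the depth information before rendering the objects themselves. Every object that should cast a shadow is drawn once with this shader. One interesting detail: the depth texture no longer has the screen's dimensions, so the viewport must be changed with glViewport. That function sets the width, height, and origin (offset) used to map x and y from NDC to window coordinates. The image shows what happens when width and height are halved: rendering into the multisampled framebuffer only covers the lower-left quarter; after resolving it into the intermediate framebuffer and drawing that to the screen (the default framebuffer), only the lower-left quarter of that is covered again, so overall just 1/16 of the image is rendered. Conversely, if width and height are too large, say doubled, only part of the color buffer (1/16) ends up on screen.

- The depth texture holds depth as seen from the light, so the MVP matrices fed to this shader are relative to the light. The M matrix is unchanged. The V matrix comes from the light: a point light can be treated directly as a camera, and its view matrix is computed the same way as a camera's. A directional light, however, has only a direction and no position. Recall that computing a camera view matrix needs a camera position and a view direction (or a target point). So we have to assume a position for the directional light, which in effect gives the depth texture a center that does not really exist for a directional light; the resulting depth texture is therefore bounded around that center. Finally, the P matrix is built as usual, except that a directional light uses an orthographic projection and a point light uses a perspective projection.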

//shader

Shader shadowmap_Dir_Shader("res/shader/shadowMap_Dir_Vertex.shader", "res/shader/shadowMap_Dir_Fragment.shader");

//shadowmap

glViewport(0, 0, SHADOW_WIDTH, SHADOW_HEIGHT);

glBindFramebuffer(GL_FRAMEBUFFER, depthMapFBO);

glEnable(GL_DEPTH_TEST);

glDepthMask(GL_TRUE);

glClear(GL_DEPTH_BUFFER_BIT);

shadowmap_Dir_Shader.use();

float near_plane = 0.1f, far_plane = 30.0f;

glm::mat4 lightProjection = glm::ortho(-20.0f, 20.0f, -20.0f, 20.0f, near_plane, far_plane);

glm::mat4 lightView = glm::lookAt(glm::vec3(40.0f, 10.0f, 40.0f), glm::vec3(30.0f,3.0f,30.0f), glm::vec3(0.0f, 1.0f, 0.0f));

glm::mat4 lightSpaceMatrix = lightProjection * lightView;

shadowmap_Dir_Shader.setMat4("lightSpaceMatrix", glm::value_ptr(lightSpaceMatrix));

glBindVertexArray(stencilVAO);

model = glm::mat4(1.0);

model = glm::translate(model, glm::vec3(24.0, 3.1, 39.0));

model = glm::scale(model, glm::vec3(4.0));

model = glm::translate(model, glm::vec3(0.0, 0.5, 0.0));

shadowmap_Dir_Shader.setMat4("model", glm::value_ptr(model));

glDrawElements(GL_TRIANGLES, 36, GL_UNSIGNED_INT, 0);

model = glm::mat4(1.0);

model = glm::translate(model, glm::vec3(30.0, 6.0, 33.0));

model = glm::scale(model, glm::vec3(4.0));

model = glm::translate(model, glm::vec3(0.0, 0.5, 0.0));

shadowmap_Dir_Shader.setMat4("model", glm::value_ptr(model));

glDrawElements(GL_TRIANGLES, 36, GL_UNSIGNED_INT, 0);

model = glm::mat4(1.0);

model = glm::translate(model, glm::vec3(37.0, 4.0, 23.0));

model = glm::scale(model, glm::vec3(4.0));

model = glm::translate(model, glm::vec3(0.0, 0.5, 0.0));

shadowmap_Dir_Shader.setMat4("model", glm::value_ptr(model));

glDrawElements(GL_TRIANGLES, 36, GL_UNSIGNED_INT, 0);

model = glm::mat4(1.0f);

model = glm::translate(model, glm::vec3(30.0, 3.0, 30.0));

model = glm::scale(model, glm::vec3(50.0));

model = glm::translate(model, glm::vec3(0.0, -0.5, 0.0));

shadowmap_Dir_Shader.setMat4("model", glm::value_ptr(model));

glDrawElements(GL_TRIANGLES, 6, GL_UNSIGNED_INT, (const void*)(12 * sizeof(unsigned int)));

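- Once we have the depth texture, we draw it to the screen to check it. This is simple: render the depth texture onto a screen quad, remembering to adjust the viewport. The first image uses the orthographic projection (directional light), the second the perspective projection (point light).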

#version 330 core

in vec2 texCoord;

uniform sampler2D depthmap;

uniform float near_plane;

uniform float far_plane;

out vec4 fragColor;

float LinearizeDepth(float depth)

{

float z = depth * 2.0 - 1.0; // Back to NDC

return (2.0 * near_plane * far_plane) / (far_plane + near_plane - z * (far_plane - near_plane));

}

void main()

{

float depthValue=texture(depthmap, texCoord).r;

fragColor = vec4(vec3(depthValue), 1.0);

//fragColor = vec4(vec3(LinearizeDepth(depthValue) / far_plane), 1.0);

}
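The commented-out LinearizeDepth path only matters for the perspective case, where the stored depth is non-linear. As a rough illustration, here is the same formula as a standalone C++ function (linearizeDepth is simply a port of the shader function above; nothing else is assumed):

```cpp
#include <cassert>
#include <cmath>

// Convert a depth-buffer value in [0, 1], produced by a perspective
// projection, back to a view-space distance in [nearPlane, farPlane].
inline double linearizeDepth(double depth, double nearPlane, double farPlane) {
    assert(nearPlane > 0.0 && farPlane > nearPlane);
    double z = depth * 2.0 - 1.0; // [0, 1] -> NDC [-1, 1]
    return (2.0 * nearPlane * farPlane) /
           (farPlane + nearPlane - z * (farPlane - nearPlane));
}
```

With near_plane = 0.1 and far_plane = 30.0, a stored depth of 0.0 maps to 0.1, 1.0 maps to 30.0, and 0.5 maps to only about 0.2. Most of the depth range is spent very close to the near plane, which is why the raw perspective depth map looks almost uniformly white. For the orthographic projection the stored depth is already linear and no conversion is needed.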

//shadowmap

glBindFramebuffer(GL_FRAMEBUFFER, 0);

glDisable(GL_DEPTH_TEST);

glDisable(GL_STENCIL_TEST);

//glClearColor(0.0f, 0.1f, 0.0f, 1.0f);

//glClear(GL_COLOR_BUFFER_BIT);

glViewport(0, 0, SCR_WIDTH/2, SCR_HEIGHT);

depthmapShader.use();

glActiveTexture(GL_TEXTURE0);

glBindTexture(GL_TEXTURE_2D, depthMap);

depthmapShader.setInt("depthmap", 0);

depthmapShader.setFloat("near_plane", near_plane);

depthmapShader.setFloat("far_plane", far_plane);

glBindVertexArray(frameVAO);

glDrawArrays(GL_TRIANGLES, 0, 6);

glViewport(0, 0, SCR_WIDTH, SCR_HEIGHT);

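- With the depth texture available, the Blinn-Phong shader is modified to use it. The vertex shader computes the vertex position in the light's clip space and passes it to the fragment shader. The fragment shader performs the perspective divide to get NDC, then remaps to [0,1]; x and y are now texture coordinates and z is the depth. We look up the depth texture, compare the two depths, and use the result to decide whether this fragment receives diffuse and specular light. The ambient term is always kept. Render it, and it looks great! Though not entirely...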

#version 330 core

layout(location = 0) in vec3 aPos;

layout(location = 1) in vec3 aColor;

layout(location = 2) in vec2 aTexCoord;

layout(location = 3) in vec3 aNorm;

out VS_OUT{

vec3 fragPos;

vec3 normal;

vec2 texCoord;

vec4 fragPosLightSpace;

} vs_out;

uniform mat4 model;

uniform mat4 view;

uniform mat4 projection;

uniform mat4 lightSpaceMatrix;

void main()

{

gl_Position = projection * view * model * vec4(aPos, 1.0f);

vs_out.fragPos = vec3(model * vec4(aPos, 1.0f));

vs_out.normal = normalize(mat3(transpose(inverse(model))) * aNorm);

vs_out.texCoord = aTexCoord;

vs_out.fragPosLightSpace = lightSpaceMatrix * model * vec4(aPos, 1.0f);

}

#version 330 core

out vec4 fragColor;

in VS_OUT{

vec3 fragPos;

vec3 normal;

vec2 texCoord;

vec4 fragPosLightSpace;

} fs_in;

uniform vec3 viewPos;

uniform sampler2D shadowMap;

struct Material {

//vec3 ambient;

sampler2D diffuse;

sampler2D specular;

float shininess;

};

uniform Material material;

struct DirLight {

vec3 direction;

vec3 ambient;

vec3 diffuse;

vec3 specular;

};

uniform DirLight dirlight;

struct PointLight {

vec3 position;

vec3 ambient;

vec3 diffuse;

vec3 specular;

float constant;

float linear;

float quadratic;

};

#define NR_POINT_LIGHTS 4

uniform PointLight pointlights[NR_POINT_LIGHTS];

struct SpotLight {

vec3 direction;

vec3 position;

vec3 ambient;

vec3 diffuse;

vec3 specular;

float cutOff;

float outerCutOff;

float constant;

float linear;

float quadratic;

};

uniform SpotLight spotlight;

vec3 calcDirLight(DirLight light, vec3 normal, vec3 viewPos, vec3 fragPos, float shadow);

vec3 calcSpotLight(SpotLight light, vec3 normal, vec3 viewPos, vec3 fragPos, float shadow);

vec3 calcPointLight(PointLight light, vec3 normal, vec3 viewPos, vec3 fragPos, float shadow);

float ShadowCalculation(vec4 fragPosLightSpace);

void main()

{

float shadow = ShadowCalculation(fs_in.fragPosLightSpace);

vec3 result = calcDirLight(dirlight, fs_in.normal, viewPos, fs_in.fragPos, shadow);

//for (int i = 0; i != NR_POINT_LIGHTS; i++)

//{

// result += calcPointLight(pointlights[i], fs_in.normal, viewPos, fs_in.fragPos, shadow);

//}

//

//result += calcSpotLight(spotlight, fs_in.normal, viewPos, fs_in.fragPos, shadow);

fragColor = vec4(result, 1.0f);

//gamma

float gamma = 2.2;

fragColor.rgb = pow(fragColor.rgb, vec3(1.0 / gamma));

}

vec3 calcDirLight(DirLight light, vec3 normal, vec3 viewPos, vec3 fragPos, float shadow)

{

//ambient

vec3 ambient = light.ambient * vec3(texture(material.diffuse, fs_in.texCoord));

//diffuse

vec3 lightDir = normalize(-light.direction);

float diff = max(dot(normal, lightDir), 0.0);

vec3 diffuse = light.diffuse * diff * vec3(texture(material.diffuse, fs_in.texCoord));

//specular

vec3 viewDir = normalize(viewPos - fragPos);

vec3 halfwayDir = normalize(viewDir + lightDir);

float spec = pow(max(dot(halfwayDir, normal), 0.0), material.shininess);

vec3 specular = light.specular * spec * vec3(texture(material.specular, fs_in.texCoord));

return ambient + (1.0 - shadow) * (diffuse + specular);

}

vec3 calcPointLight(PointLight light, vec3 normal, vec3 viewPos, vec3 fragPos, float shadow)

{

float distance = length(light.position - fragPos);

float attenuation = 1.0f / (light.constant + light.linear * distance + light.quadratic * distance * distance);

//ambient

vec3 ambient = light.ambient * vec3(texture(material.diffuse, fs_in.texCoord));

//diffuse

vec3 lightDir = normalize(light.position - fragPos);

float diff = max(dot(normal, lightDir), 0.0);

vec3 diffuse = light.diffuse * diff * vec3(texture(material.diffuse, fs_in.texCoord));

//specular

vec3 viewDir = normalize(viewPos - fragPos);

vec3 halfwayDir = normalize(viewDir + lightDir);

float spec = pow(max(dot(halfwayDir, normal), 0.0), material.shininess);

vec3 specular = light.specular * spec * vec3(texture(material.specular, fs_in.texCoord));

return (ambient + (1.0-shadow)*(diffuse + specular)) * attenuation;

}

vec3 calcSpotLight(SpotLight light, vec3 normal, vec3 viewPos, vec3 fragPos, float shadow)

{

//ambient

vec3 ambient = light.ambient * vec3(texture(material.diffuse, fs_in.texCoord));

//diffuse

vec3 lightDir = normalize(light.position - fragPos);

float diff = max(dot(normal, lightDir), 0.0);

vec3 diffuse = light.diffuse * diff * vec3(texture(material.diffuse, fs_in.texCoord));

//specular

vec3 viewDir = normalize(viewPos - fragPos);

vec3 halfwayDir = normalize(viewDir + lightDir);

float spec = pow(max(dot(halfwayDir, normal), 0.0), material.shininess);

vec3 specular = light.specular * spec * vec3(texture(material.specular, fs_in.texCoord));

float theta = dot(-lightDir, normalize(light.direction));

float epsilon = light.cutOff - light.outerCutOff;

float intensity = clamp((theta - light.outerCutOff) / epsilon, 0.0, 1.0);

float distance = length(light.position - fragPos);

float attenuation = 1.0f / (light.constant + light.linear * distance + light.quadratic * distance * distance);

return (ambient + (1.0 - shadow) * (diffuse + specular)) * intensity * attenuation;

}

float ShadowCalculation(vec4 fragPosLightSpace)

{

vec3 projCoord = fragPosLightSpace.xyz / fragPosLightSpace.w;

projCoord = projCoord * 0.5 + 0.5;

float closestDepth = texture(shadowMap, projCoord.xy).r;

float currentDepth = projCoord.z;

float shadow = currentDepth > closestDepth ? 1.0 : 0.0;

return shadow;

}
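The coordinate remapping in ShadowCalculation is easy to verify by hand. The following C++ sketch mirrors the first two lines of that function and the final comparison; Vec3, Vec4, toShadowMapCoord and shadowFactor are illustrative names, and the closest depth is passed in directly instead of being sampled from a texture.

```cpp
#include <cassert>

struct Vec3 { double x, y, z; };
struct Vec4 { double x, y, z, w; };

// Light clip space -> [0, 1] range: perspective divide, then NDC [-1, 1] -> [0, 1].
// x and y become shadow-map texture coordinates, z becomes the fragment's depth.
inline Vec3 toShadowMapCoord(Vec4 clip) {
    assert(clip.w != 0.0);
    Vec3 ndc{clip.x / clip.w, clip.y / clip.w, clip.z / clip.w};
    return {ndc.x * 0.5 + 0.5, ndc.y * 0.5 + 0.5, ndc.z * 0.5 + 0.5};
}

// 1.0 when the fragment is farther from the light than the stored closest depth.
inline double shadowFactor(double currentDepth, double closestDepth) {
    return currentDepth > closestDepth ? 1.0 : 0.0;
}
```

For an orthographic light projection w is always 1, so the divide changes nothing; it is kept so that the same code also works with a perspective projection.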

2. Shadow acne

- As the image shows, areas that should be lit are covered in artifacts. The reason is simple:

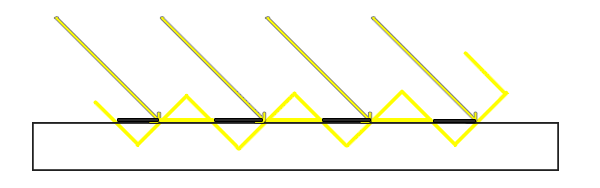

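- First fix: shadow bias. Subtract a small bias from the fragment's depth so that it no longer interleaves with the depths sampled from the shadow map. Note that the figure in the original article is wrong here: it raises the depth map instead. One trick: the closer the light direction (or viewing direction of the light) is to parallel with the surface, the worse the acne, so the bias can be scaled dynamically by the cosine between the normal and the light direction.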

float ShadowCalculation(vec4 fragPosLightSpace, vec3 normal, vec3 lightDir)

{

vec3 projCoord = fragPosLightSpace.xyz / fragPosLightSpace.w;

projCoord = projCoord * 0.5 + 0.5;

float closestDepth = texture(shadowMap, projCoord.xy).r;

float currentDepth = projCoord.z;

float bias = max(0.05 * (1.0 - dot(normal, lightDir)), 0.005);

float shadow = currentDepth-bias > closestDepth ? 1.0 : 0.0;

return shadow;

}
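The bias formula and its effect on the depth test can be tried out in isolation. This is a small C++ sketch of the two relevant lines of the shader; shadowBias and shadowTest are hypothetical helper names, and the default constants are the ones used above.

```cpp
#include <algorithm>
#include <cassert>

// Slope-dependent bias: small when the light hits the surface head-on
// (nDotL close to 1), larger at grazing angles (nDotL close to 0).
inline double shadowBias(double nDotL, double maxBias = 0.05, double minBias = 0.005) {
    assert(nDotL >= -1.0 && nDotL <= 1.0);
    return std::max(maxBias * (1.0 - nDotL), minBias);
}

// 1.0 if the fragment is considered to be in shadow, 0.0 otherwise.
inline double shadowTest(double currentDepth, double closestDepth, double bias) {
    return currentDepth - bias > closestDepth ? 1.0 : 0.0;
}
```

A lit fragment at depth 0.500 whose shadow-map texel happens to store 0.498 is wrongly shadowed without bias, and correctly lit once the minimum bias of 0.005 is subtracted.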

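- The side effect is clear. We offset the fragment's depth to hide the mismatch between the sampled depth and the true depth. But in other places the depth in the shadow map is only slightly smaller than the fragment's depth and the comparison is genuinely meaningful: it should produce a shadow. With the bias that shadow goes missing, and the shadow appears detached from the object. This is Peter Panning.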

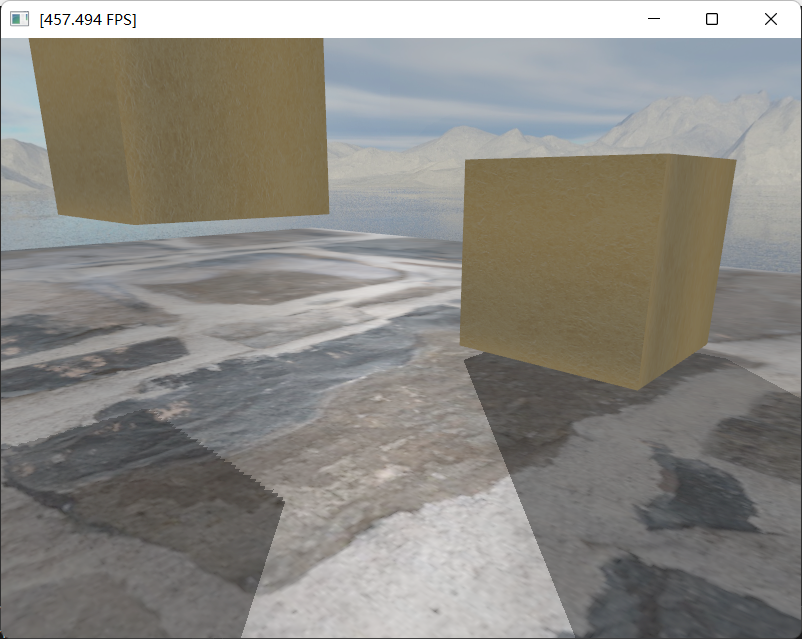

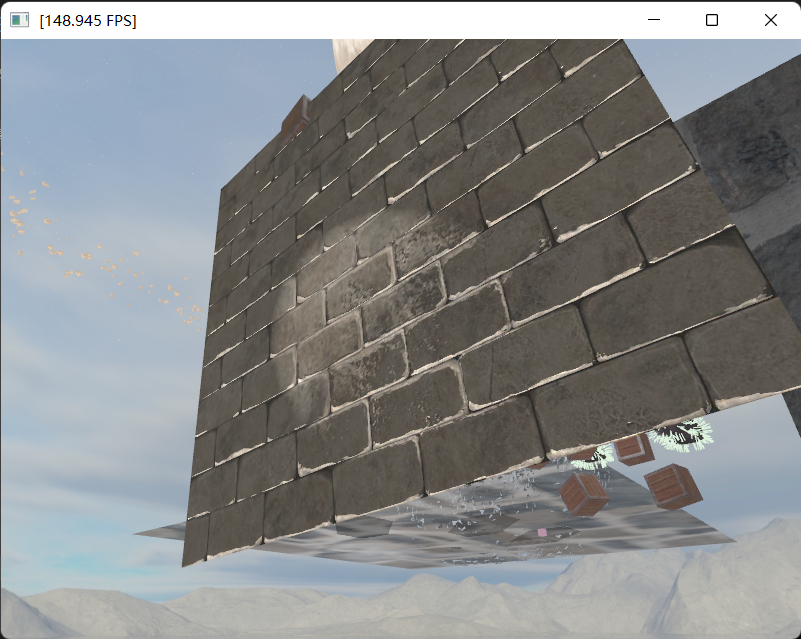

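- Against Peter Panning we cull front faces while rendering the shadow map, so that it stores the depth of back faces. For a closed object the back-face depth comes with a natural bias, which greatly reduces acne on the lit front faces. As the images show, Peter Panning is gone and most of the acne is too. The floor, however, is a single-sided object: either it is culled and most of its acne disappears, or it is not culled and the acne remains. Even when it is culled, where the floor meets other objects the shadow map stores the other object's depth, so artifacts remain there, as shown: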

//shadowmap

glViewport(0, 0, SHADOW_WIDTH, SHADOW_HEIGHT);

glBindFramebuffer(GL_FRAMEBUFFER, depthMapFBO);

glEnable(GL_DEPTH_TEST);

glDepthMask(GL_TRUE);

glClear(GL_DEPTH_BUFFER_BIT);

glEnable(GL_CULL_FACE);

glCullFace(GL_FRONT);

glFrontFace(GL_CCW);

shadowmap_Dir_Shader.use();

float near_plane = 0.1f, far_plane = 30.0f;

glm::mat4 lightProjection = glm::ortho(-20.0f, 20.0f, -20.0f, 20.0f, near_plane, far_plane);

glm::mat4 lightView = glm::lookAt(glm::vec3(40.0f, 10.0f, 40.0f), glm::vec3(30.0f,3.0f,30.0f), glm::vec3(0.0f, 1.0f, 0.0f));

glm::mat4 lightSpaceMatrix = lightProjection * lightView;

shadowmap_Dir_Shader.setMat4("lightSpaceMatrix", glm::value_ptr(lightSpaceMatrix));

glBindVertexArray(stencilVAO);

model = glm::mat4(1.0);

model = glm::translate(model, glm::vec3(24.0, 3.0, 39.0));

model = glm::scale(model, glm::vec3(4.0));

model = glm::translate(model, glm::vec3(0.0, 0.5, 0.0));

shadowmap_Dir_Shader.setMat4("model", glm::value_ptr(model));

glDrawElements(GL_TRIANGLES, 36, GL_UNSIGNED_INT, 0);

model = glm::mat4(1.0);

model = glm::translate(model, glm::vec3(30.0, 6.0, 33.0));

model = glm::scale(model, glm::vec3(4.0));

model = glm::translate(model, glm::vec3(0.0, 0.5, 0.0));

shadowmap_Dir_Shader.setMat4("model", glm::value_ptr(model));

glDrawElements(GL_TRIANGLES, 36, GL_UNSIGNED_INT, 0);

model = glm::mat4(1.0);

model = glm::translate(model, glm::vec3(37.0, 4.0, 23.0));

model = glm::scale(model, glm::vec3(4.0));

model = glm::translate(model, glm::vec3(0.0, 0.5, 0.0));

shadowmap_Dir_Shader.setMat4("model", glm::value_ptr(model));

glDrawElements(GL_TRIANGLES, 36, GL_UNSIGNED_INT, 0);

model = glm::mat4(1.0f);

model = glm::translate(model, glm::vec3(30.0, 3.0, 30.0));

model = glm::scale(model, glm::vec3(50.0));

model = glm::translate(model, glm::vec3(0.0, -0.5, 0.0));

shadowmap_Dir_Shader.setMat4("model", glm::value_ptr(model));

glDrawElements(GL_TRIANGLES, 6, GL_UNSIGNED_INT, (const void*)(12 * sizeof(unsigned int)));

glCullFace(GL_BACK);

glDisable(GL_CULL_FACE);

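3. Range of the depth texture

- Here an extra patch of shadow appears that should not exist. That region lies outside the area covered by the depth texture, and since the texture uses GL_REPEAT wrapping, the lookup returns an unrelated closest depth. To fix this we use GL_CLAMP_TO_BORDER and set the border color to the maximum depth 1.0. Regions outside the depth texture's xy extent then never receive shadows, which is still far better than shadows appearing out of nowhere.

Good, the stray shadow is gone.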

const unsigned int SHADOW_WIDTH = 1024, SHADOW_HEIGHT = 1024;

unsigned int depthMap;

glGenTextures(1, &depthMap);

glBindTexture(GL_TEXTURE_2D, depthMap);

glTexImage2D(GL_TEXTURE_2D, 0, GL_DEPTH_COMPONENT, SHADOW_WIDTH, SHADOW_HEIGHT, 0, GL_DEPTH_COMPONENT, GL_FLOAT, NULL);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_NEAREST);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_NEAREST);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_CLAMP_TO_BORDER);

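glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_CLAMP_TO_BORDER);

// border depth 1.0: lookups outside the map are never in shadow

float borderColor[] = { 1.0f, 1.0f, 1.0f, 1.0f };

glTexParameterfv(GL_TEXTURE_2D, GL_TEXTURE_BORDER_COLOR, borderColor);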

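- A large region is still in shadow, though. It lies beyond the light's far plane, outside the z range of normalized device coordinates, so its depth is always greater than the maximum value 1.0 stored in the shadow map. For such fragments we check z and, if it is greater than 1.0, simply declare them unshadowed. It is crude, but again better than a false shadow. Perfect!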

float ShadowCalculation(vec4 fragPosLightSpace, vec3 normal, vec3 lightDir)

{

vec3 projCoord = fragPosLightSpace.xyz / fragPosLightSpace.w;

projCoord = projCoord * 0.5 + 0.5;

float closestDepth = texture(shadowMap, projCoord.xy).r;

float currentDepth = projCoord.z;

//float bias = max(0.05 * (1.0 - dot(normal, lightDir)), 0.005);

float bias = 0;

float shadow = currentDepth-bias > closestDepth ? 1.0 : 0.0;

if (projCoord.z > 1.0f)

shadow = 0.0f;

return shadow;

}

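4. PCF

- Still not quite perfect! The shadow edges are jagged. The reason is simple: the shadow map has a finite resolution, so many neighboring fragments sample the same texel.

- The most direct fix is to increase the shadow map resolution.

- A less direct fix is PCF (percentage-closer filtering). Instead of testing only the one texel the fragment maps to, we test several texels and combine the results. The simplest version runs the depth test on the surrounding texels and averages the results as the shadow value, which yields values between 0.0 and 1.0, i.e. partial shadow. For the directional light here it is of limited use, but for area lights it can be used to approximate soft shadows.

Still somewhat jagged, but much better.

The code uses textureSize(shadowMap, 0), which returns the width and height of mipmap level 0 (second argument) of the texture (first argument) as an ivec2.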

float ShadowCalculation(vec4 fragPosLightSpace, vec3 normal, vec3 lightDir)

{

vec3 projCoord = fragPosLightSpace.xyz / fragPosLightSpace.w;

projCoord = projCoord * 0.5 + 0.5;

float closestDepth = texture(shadowMap, projCoord.xy).r;

float currentDepth = projCoord.z;

float shadow = 0.0f;

float bias = 0.0f;// max(0.05 * (1.0 - dot(normal, lightDir)), 0.005);

//PCF

vec2 texelSize = 1.0 / textureSize(shadowMap, 0);

for (int i = -1; i <= 1; i++)

{

for (int j = -1; j <= 1; j++)

{

float pcfDepth = texture(shadowMap, projCoord.xy + vec2(i, j) * texelSize).r;

shadow += currentDepth - bias > pcfDepth ? 1.0 : 0.0;

}

}

shadow /= 9.0;

// beyond the far plane

if (projCoord.z > 1.0f)

shadow = 0.0f;

return shadow;

}
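To see how the averaging produces partial shadow, the 3x3 filter can be run on a tiny depth map on the CPU. This is a sketch under simplifying assumptions, not the shader itself: DepthMap and pcfShadow are made-up names, texels are addressed by integer index instead of normalized coordinates, lookups outside the map return 1.0 (mimicking GL_CLAMP_TO_BORDER with a border depth of 1.0), and the kernel is centered on the fragment's texel.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Row-major depth map with a "border" of maximum depth 1.0.
struct DepthMap {
    int width;
    int height;
    std::vector<double> texels;

    double at(int x, int y) const {
        if (x < 0 || y < 0 || x >= width || y >= height) return 1.0;
        return texels[static_cast<std::size_t>(y) * width + x];
    }
};

// Average the binary shadow test over the 3x3 neighborhood of texel (x, y).
inline double pcfShadow(const DepthMap& map, int x, int y,
                        double currentDepth, double bias) {
    assert(map.width > 0 && map.height > 0);
    double shadow = 0.0;
    for (int dy = -1; dy <= 1; ++dy) {
        for (int dx = -1; dx <= 1; ++dx) {
            shadow += (currentDepth - bias > map.at(x + dx, y + dy)) ? 1.0 : 0.0;
        }
    }
    return shadow / 9.0;
}
```

On a 4x4 map whose two left columns hold an occluder at depth 0.3 and whose two right columns are empty (1.0), a receiver at depth 0.6 gets a shadow value of 6/9 next to the occluder edge, 3/9 one texel further right, and 0 beyond that. The hard edge becomes a short gradient.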

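5. Point-light shadows

- Your 2D depth map works nicely, but what will you do if I bring out a point light?

- A point light cannot use a 2D depth texture, because it casts shadows in every direction. We use a cube map as the depth texture instead: an omnidirectional shadow map.

- First, create a framebuffer with a cube map attached. Creating the framebuffer is nothing new, and neither is creating the cube map. The small difference is in allocating storage for each face (still with glTexImage2D): the arguments are much the same as for a 2D depth texture, except that the first one is no longer GL_TEXTURE_2D but one of the face targets starting at GL_TEXTURE_CUBE_MAP_POSITIVE_X. These are consecutive, so they can be looped over. One special point: the cube map is attached with glFramebufferTexture rather than glFramebufferTexture2D. The main difference is that no texture target is passed (before we passed GL_TEXTURE_2D; here GL_TEXTURE_CUBE_MAP is not required). As before, we tell OpenGL that no color buffer is used.

// cube shadow map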

unsigned int depthCubeMapFBO;

glGenFramebuffers(1, &depthCubeMapFBO);

glBindFramebuffer(GL_FRAMEBUFFER, depthCubeMapFBO);

unsigned int depthCubeMap;

glGenTextures(1, &depthCubeMap);

glBindTexture(GL_TEXTURE_CUBE_MAP, depthCubeMap);

for (unsigned int i = 0; i != 6; i++)

{

glTexImage2D(GL_TEXTURE_CUBE_MAP_POSITIVE_X + i, 0, GL_DEPTH_COMPONENT, SHADOW_WIDTH, SHADOW_HEIGHT, 0, GL_DEPTH_COMPONENT, GL_FLOAT, NULL);

}

glTexParameteri(GL_TEXTURE_CUBE_MAP, GL_TEXTURE_MAG_FILTER, GL_NEAREST);

glTexParameteri(GL_TEXTURE_CUBE_MAP, GL_TEXTURE_MIN_FILTER, GL_NEAREST);

glTexParameteri(GL_TEXTURE_CUBE_MAP, GL_TEXTURE_WRAP_S, GL_CLAMP_TO_EDGE);

glTexParameteri(GL_TEXTURE_CUBE_MAP, GL_TEXTURE_WRAP_T, GL_CLAMP_TO_EDGE);

glTexParameteri(GL_TEXTURE_CUBE_MAP, GL_TEXTURE_WRAP_R, GL_CLAMP_TO_EDGE);

glFramebufferTexture(GL_FRAMEBUFFER, GL_DEPTH_ATTACHMENT, depthCubeMap,0);

glDrawBuffer(GL_NONE);

glReadBuffer(GL_NONE);

glBindFramebuffer(GL_FRAMEBUFFER, 0);

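- As before, we first render the shadow map for the objects that cast shadows. Remember that a cube map has six faces, which is the biggest difference from a 2D texture. The straightforward idea is to attach each of the six faces in turn and render the depth map six times, but that is tedious. A neater way is to use a geometry shader. There, writing the built-in output gl_Layer selects which face the emitted primitive goes to. The benefit follows from the 2D case: the faces differ only in the view transform applied to the vertices, and a geometry shader can compute vertex attributes. So for each face we set gl_Layer, transform the vertices with that face's view matrix, and fill all six depth maps in a single pass. The view matrices are passed to the geometry shader as a uniform array. We can also premultiply projection and view on the CPU, leaving only the model transform to the vertex shader.

- The fragment shader has another interesting detail: we write the depth buffer ourselves through gl_FragDepth. We do this because we no longer want the window-space z value as depth. For a directional light, depth corresponds naturally to z, but for a point light, z depth is not the distance to the light, and after perspective projection it is also non-linear. So we compute the world-space distance between the light and the fragment directly and normalize it by the far plane distance far_plane. This requires the geometry shader to output the world-space position of each vertex.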

#version 330 core

layout(location = 0)in vec3 aPos;

uniform mat4 model;

void main()

{

gl_Position = model * vec4(aPos, 1.0);

}

#version 330 core

layout(triangles) in;

layout(triangle_strip, max_vertices = 18)out;

uniform mat4 shadowMatrixs[6];

out vec4 fragPos;

void main()

{

for (int face = 0; face != 6; face++)

{

gl_Layer = face;

for (int i = 0; i != 3; i++)

{

gl_Position = shadowMatrixs[face] * gl_in[i].gl_Position;

fragPos = gl_in[i].gl_Position;

EmitVertex();

}

EndPrimitive();

}

}

#version 330 core

in vec4 fragPos;

uniform vec3 lightPos;

uniform float far_plane;

void main()

{

float lightDistance = length(fragPos.xyz - lightPos);

lightDistance = lightDistance / far_plane;

gl_FragDepth = lightDistance;

}
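The depth written by this fragment shader is just a distance divided by far_plane, and the lighting pass later multiplies it back. A minimal C++ sketch of that round trip follows; Vec3, distanceBetween, encodeLightDistance and decodeLightDistance are illustrative names, and the clamp to 1.0 stands in for the fact that the depth buffer only stores values in [0, 1].

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

struct Vec3 { double x, y, z; };

inline double distanceBetween(Vec3 a, Vec3 b) {
    double dx = a.x - b.x, dy = a.y - b.y, dz = a.z - b.z;
    return std::sqrt(dx * dx + dy * dy + dz * dz);
}

// Value stored in the cube shadow map: world-space distance to the light,
// normalized by the far plane and limited to the depth range [0, 1].
inline double encodeLightDistance(Vec3 fragPos, Vec3 lightPos, double farPlane) {
    assert(farPlane > 0.0);
    return std::min(distanceBetween(fragPos, lightPos) / farPlane, 1.0);
}

// Lighting pass: turn the stored value back into a world-space distance.
inline double decodeLightDistance(double stored, double farPlane) {
    assert(farPlane > 0.0);
    return stored * farPlane;
}
```

Because the stored value is linear in distance, the comparison in the lighting pass can be done directly in world units, and anything farther than far_plane saturates at the maximum depth.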

//shader

Shader shadowmap_Dot_Shader("res/shader/shadowMap_Dot_Vertex.shader", "res/shader/shadowMap_Dot_Geometry.shader", "res/shader/shadowMap_Dot_Fragment.shader");

// array of transform matrices

float aspect = (float)SHADOW_WIDTH / (float)SHADOW_HEIGHT;

float near = 0.1f, far = 200.0f;

glm::mat4 shadowProj = glm::perspective(glm::radians(90.0f), aspect, near, far);

vector<glm::mat4> shadowTransforms;

glm::vec3 dotLightPos = glm::vec3(-30.0f, -13.0f, 30.0f);

shadowTransforms.push_back(shadowProj * glm::lookAt(dotLightPos, dotLightPos + glm::vec3(1.0f, 0.0f, 0.0f), glm::vec3(0.0f, -1.0f, 0.0f)));

shadowTransforms.push_back(shadowProj * glm::lookAt(dotLightPos, dotLightPos + glm::vec3(-1.0f, 0.0f, 0.0f), glm::vec3(0.0f, -1.0f, 0.0f)));

shadowTransforms.push_back(shadowProj * glm::lookAt(dotLightPos, dotLightPos + glm::vec3(0.0f, 1.0f, 0.0f), glm::vec3(0.0f, 0.0f, 1.0f)));

shadowTransforms.push_back(shadowProj * glm::lookAt(dotLightPos, dotLightPos + glm::vec3(0.0f, -1.0f, 0.0f), glm::vec3(0.0f, 0.0f, -1.0f)));

shadowTransforms.push_back(shadowProj * glm::lookAt(dotLightPos, dotLightPos + glm::vec3(0.0f, 0.0f, 1.0f), glm::vec3(0.0f, -1.0f, 0.0f)));

shadowTransforms.push_back(shadowProj * glm::lookAt(dotLightPos, dotLightPos + glm::vec3(0.0f, 0.0f, -1.0f), glm::vec3(0.0f, -1.0f, 0.0f)));

//dot shadowmap

glBindFramebuffer(GL_FRAMEBUFFER, depthCubeMapFBO);

glViewport(0, 0, SHADOW_WIDTH, SHADOW_HEIGHT);

glEnable(GL_DEPTH_TEST);

glDepthMask(GL_TRUE);

glClear(GL_DEPTH_BUFFER_BIT);

shadowmap_Dot_Shader.use();

shadowmap_Dot_Shader.setFloat("far_plane", far);

shadowmap_Dot_Shader.setVec3("lightPos", dotLightPos);

glBindVertexArray(lightVAO);

for (unsigned int i = 0; i != 6; i++)

{

shadowmap_Dot_Shader.setMat4(("shadowMatrixs["+std::to_string(i)+"]").c_str(), glm::value_ptr(shadowTransforms[i]));

}

for (unsigned int i = 0; i != 6; i++)

{

model = glm::mat4(1.0);

model = glm::translate(model, glm::vec3(-30.0, -20.0, 30.0) + goldPositions[i]);

model = glm::scale(model, glm::vec3(4.0));

model = glm::translate(model, glm::vec3(0.0, 0.5, 0.0));

shadowmap_Dot_Shader.setMat4("model", glm::value_ptr(model));

glDrawElements(GL_TRIANGLES, 36, GL_UNSIGNED_INT, 0);

}

model = glm::mat4(1.0f);

model = glm::translate(model, glm::vec3(-30.0, -5.0, 30.0));

model = glm::scale(model, glm::vec3(50.0));

model = glm::translate(model, glm::vec3(0.0, 0.0, -1.0));

shadowmap_Dot_Shader.setMat4("model", glm::value_ptr(model));

glDrawElements(GL_TRIANGLES, 6, GL_UNSIGNED_INT, (const void*)(0 * 6 * sizeof(unsigned int)));

model = glm::mat4(1.0f);

model = glm::translate(model, glm::vec3(-30.0, -5.0, 30.0));

model = glm::scale(model, glm::vec3(50.0));

model = glm::translate(model, glm::vec3(0.0, 0.0, 1.0));

shadowmap_Dot_Shader.setMat4("model", glm::value_ptr(model));

glDrawElements(GL_TRIANGLES, 6, GL_UNSIGNED_INT, (const void*)(1 * 6 * sizeof(unsigned int)));

model = glm::mat4(1.0f);

model = glm::translate(model, glm::vec3(-30.0, -5.0, 30.0));

model = glm::scale(model, glm::vec3(50.0));

model = glm::translate(model, glm::vec3(0.0, -1.0, 0.0));

shadowmap_Dot_Shader.setMat4("model", glm::value_ptr(model));

glDrawElements(GL_TRIANGLES, 6, GL_UNSIGNED_INT, (const void*)(2 * 6 * sizeof(unsigned int)));

model = glm::mat4(1.0f);

model = glm::translate(model, glm::vec3(-30.0, -5.0, 30.0));

model = glm::scale(model, glm::vec3(50.0));

model = glm::translate(model, glm::vec3(0.0, 1.0, 0.0));

shadowmap_Dot_Shader.setMat4("model", glm::value_ptr(model));

glDrawElements(GL_TRIANGLES, 6, GL_UNSIGNED_INT, (const void*)(3 * 6 * sizeof(unsigned int)));

model = glm::mat4(1.0f);

model = glm::translate(model, glm::vec3(-30.0, -5.0, 30.0));

model = glm::scale(model, glm::vec3(50.0));

model = glm::translate(model, glm::vec3(-1.0, 0.0, 0.0));

shadowmap_Dot_Shader.setMat4("model", glm::value_ptr(model));

glDrawElements(GL_TRIANGLES, 6, GL_UNSIGNED_INT, (const void*)(4 * 6 * sizeof(unsigned int)));

model = glm::mat4(1.0f);

model = glm::translate(model, glm::vec3(-30.0, -5.0, 30.0));

model = glm::scale(model, glm::vec3(50.0));

model = glm::translate(model, glm::vec3(1.0, 0.0, 0.0));

shadowmap_Dot_Shader.setMat4("model", glm::value_ptr(model));

glDrawElements(GL_TRIANGLES, 6, GL_UNSIGNED_INT, (const void*)(5 * 6 * sizeof(unsigned int)));

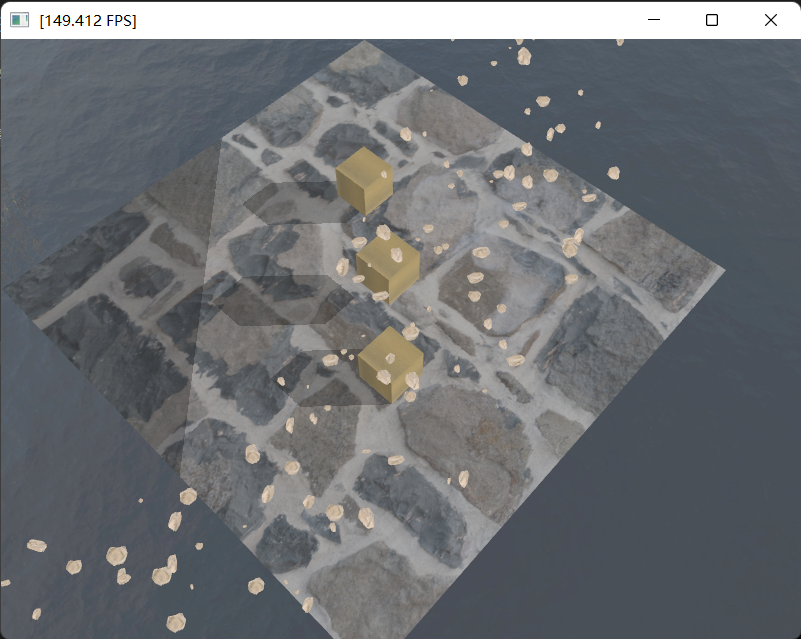

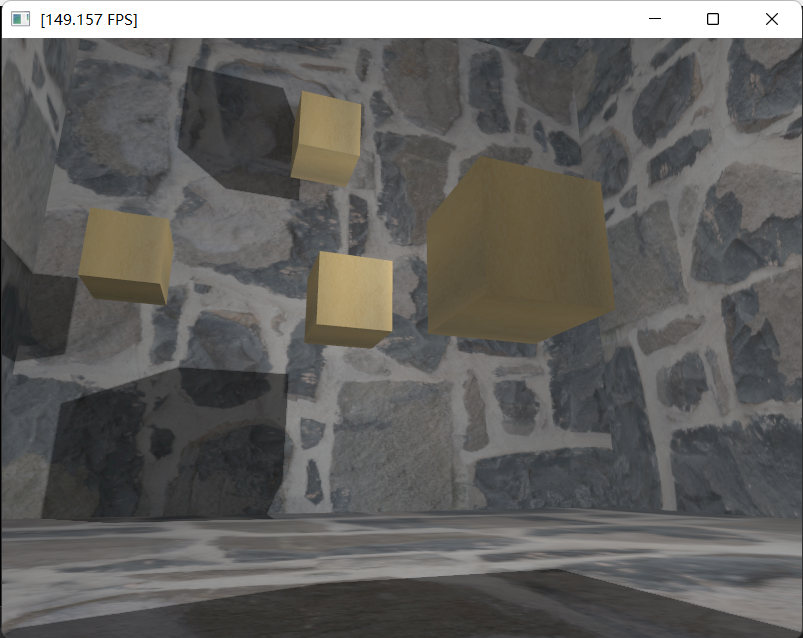

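- With the shaders in place we can render the shadow map for the objects and then draw the depth map to take a look. Not bad.

- Next comes the shader that uses the depth map to produce shadows. The vertex shader is the same as for plain Blinn-Phong: a cube map is looked up with a world-space direction vector rather than light-space xy coordinates, so the vertex shader no longer has to compute a light-space position.

- The shadow computation in the fragment shader changes only in that the lookup uses the vector between the light and the fragment; nothing else changes much.

- Finally, PCF here samples in 3D around that vector, perturbing the sampling direction. We use 20 directions: the corners and edge midpoints of a cube. We also scale the sampling radius: a larger radius gives softer shadows and a smaller one harder shadows, so the radius is tied to the distance from the viewer to the fragment, making distant shadows softer. (This does not seem very principled; softness should really depend on the distances between light, occluder, and shadow receiver.)

- Perfect!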
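Sampling a cube map with a direction vector boils down to picking the face along the component with the largest magnitude. The C++ sketch below illustrates only that face selection; cubeFaceIndex is a made-up helper, the returned index follows the order of the GL_TEXTURE_CUBE_MAP_POSITIVE_X + i face targets, and the tie-breaking order used here is an assumption, since implementations may resolve ties differently.

```cpp
#include <cassert>
#include <cmath>

// Returns 0..5 for +X, -X, +Y, -Y, +Z, -Z, chosen by the major axis of (x, y, z).
inline int cubeFaceIndex(double x, double y, double z) {
    assert(x != 0.0 || y != 0.0 || z != 0.0);
    double ax = std::fabs(x), ay = std::fabs(y), az = std::fabs(z);
    if (ax >= ay && ax >= az) return x > 0.0 ? 0 : 1;
    if (ay >= az) return y > 0.0 ? 2 : 3;
    return z > 0.0 ? 4 : 5;
}
```

This is why the lookup vector fragPos - lightPos does not need to be normalized: only its direction matters, and the hardware derives both the face and the 2D coordinates on that face from it.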

#version 330 core

layout(location = 0) in vec3 aPos;

layout(location = 1) in vec3 aColor;

layout(location = 2) in vec2 aTexCoord;

layout(location = 3) in vec3 aNorm;

out VS_OUT{

vec3 fragPos;

vec3 normal;

vec2 texCoord;

} vs_out;

uniform mat4 model;

uniform mat4 view;

uniform mat4 projection;

void main()

{

gl_Position = projection * view * model * vec4(aPos, 1.0f);

vs_out.fragPos = vec3(model * vec4(aPos, 1.0f));

vs_out.normal = normalize(mat3(transpose(inverse(model))) * aNorm);

vs_out.texCoord = aTexCoord;

}

#version 330 core

out vec4 fragColor;

in VS_OUT{

vec3 fragPos;

vec3 normal;

vec2 texCoord;

} fs_in;

uniform vec3 viewPos;

uniform float far_plane;

uniform samplerCube shadowMap;

struct Material {

//vec3 ambient;

sampler2D diffuse;

sampler2D specular;

float shininess;

};

uniform Material material;

struct DirLight {

vec3 direction;

vec3 ambient;

vec3 diffuse;

vec3 specular;

};

uniform DirLight dirlight;

struct PointLight {

vec3 position;

vec3 ambient;

vec3 diffuse;

vec3 specular;

float constant;

float linear;

float quadratic;

};

uniform PointLight pointlight;

struct SpotLight {

vec3 direction;

vec3 position;

vec3 ambient;

vec3 diffuse;

vec3 specular;

float cutOff;

float outerCutOff;

float constant;

float linear;

float quadratic;

};

uniform SpotLight spotlight;

// GLSL 3.30 has no brace initializer lists; use an array constructor

vec3 sampleOffsetDirections[20] = vec3[](

vec3(1, 1, 1), vec3(1, -1, 1), vec3(-1, -1, 1), vec3(-1, 1, 1),

vec3(1, 1, -1), vec3(1, -1, -1), vec3(-1, -1, -1), vec3(-1, 1, -1),

vec3(1, 1, 0), vec3(1, -1, 0), vec3(-1, -1, 0), vec3(-1, 1, 0),

vec3(1, 0, 1), vec3(-1, 0, 1), vec3(1, 0, -1), vec3(-1, 0, -1),

vec3(0, 1, 1), vec3(0, -1, 1), vec3(0, -1, -1), vec3(0, 1, -1)

);

vec3 calcSpotLight(SpotLight light, vec3 normal, vec3 viewPos, vec3 fragPos);

vec3 calcPointLight(PointLight light, vec3 normal, vec3 viewPos, vec3 fragPos);

float ShadowCalculation(vec3 fragPos, vec3 lightPos, vec3 normal, vec3 lightDir);

void main()

{

vec3 result = calcPointLight(pointlight, fs_in.normal, viewPos, fs_in.fragPos);

fragColor = vec4(result, 1.0f);

//gamma

float gamma = 2.2;

fragColor.rgb = pow(fragColor.rgb, vec3(1.0 / gamma));

}

vec3 calcPointLight(PointLight light, vec3 normal, vec3 viewPos, vec3 fragPos)

{

float distance = length(light.position - fragPos);

float attenuation = 1.0f / (light.constant + light.linear * distance + light.quadratic * distance * distance);

//ambient

vec3 ambient = light.ambient * vec3(texture(material.diffuse, fs_in.texCoord));

//diffuse

vec3 lightDir = normalize(light.position - fragPos);

float diff = max(dot(normal, lightDir), 0.0);

vec3 diffuse = light.diffuse * diff * vec3(texture(material.diffuse, fs_in.texCoord));

//specular

vec3 viewDir = normalize(viewPos - fragPos);

vec3 halfwayDir = normalize(viewDir + lightDir);

float spec = pow(max(dot(halfwayDir, normal), 0.0), material.shininess);

vec3 specular = light.specular * spec * vec3(texture(material.specular, fs_in.texCoord));

float shadow = ShadowCalculation(fragPos, light.position, normal, lightDir);

return (ambient + (1.0 - shadow) * (diffuse + specular))* attenuation;

}

vec3 calcSpotLight(SpotLight light, vec3 normal, vec3 viewPos, vec3 fragPos)

{

//ambient

vec3 ambient = light.ambient * vec3(texture(material.diffuse, fs_in.texCoord));

//diffuse

vec3 lightDir = normalize(light.position - fragPos);

float diff = max(dot(normal, lightDir), 0.0);

vec3 diffuse = light.diffuse * diff * vec3(texture(material.diffuse, fs_in.texCoord));

//specular

vec3 viewDir = normalize(viewPos - fragPos);

vec3 halfwayDir = normalize(viewDir + lightDir);

float spec = pow(max(dot(halfwayDir, normal), 0.0), material.shininess);

vec3 specular = light.specular * spec * vec3(texture(material.specular, fs_in.texCoord));

float theta = dot(-lightDir, normalize(light.direction));

float epsilon = light.cutOff - light.outerCutOff;

float intensity = clamp((theta - light.outerCutOff) / epsilon, 0.0, 1.0);

float distance = length(light.position - fragPos);

float attenuation = 1.0f / (light.constant + light.linear * distance + light.quadratic * distance * distance);

float shadow = ShadowCalculation(fragPos, light.position, normal, lightDir);

return (ambient + (1.0 - shadow) * (diffuse + specular)) * intensity * attenuation;

}

float ShadowCalculation(vec3 fragPos, vec3 lightPos, vec3 normal, vec3 lightDir)

{

vec3 fragToLight = fragPos - lightPos;

float currentDepth = length(fragToLight);

float shadow = 0.0f;

float bias = max(0.5 * (1.0 - dot(normal, lightDir)), 0.05);

int samples = 20;

float viewDistance = length(viewPos - fragPos);

float diskRadius = (1.0 + viewDistance / far_plane) /25.0f;

for (int i = 0; i != samples; i++)

{

float closestDepth = texture(shadowMap, fragToLight + sampleOffsetDirections[i] * diskRadius).r;

closestDepth *= far_plane;

shadow += currentDepth - bias > closestDepth ? 1.0 : 0.0;

}

shadow /= float(samples);

return shadow;

}

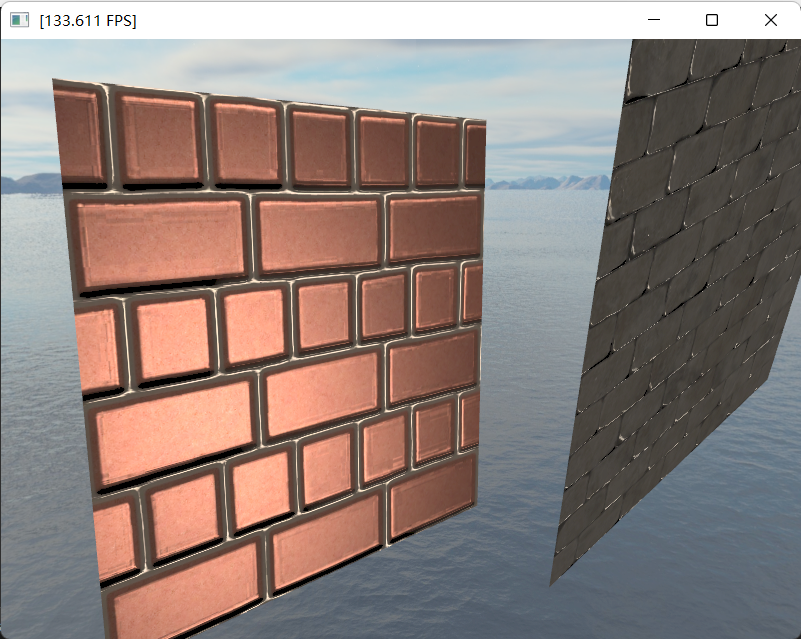

IV. Normal Mapping

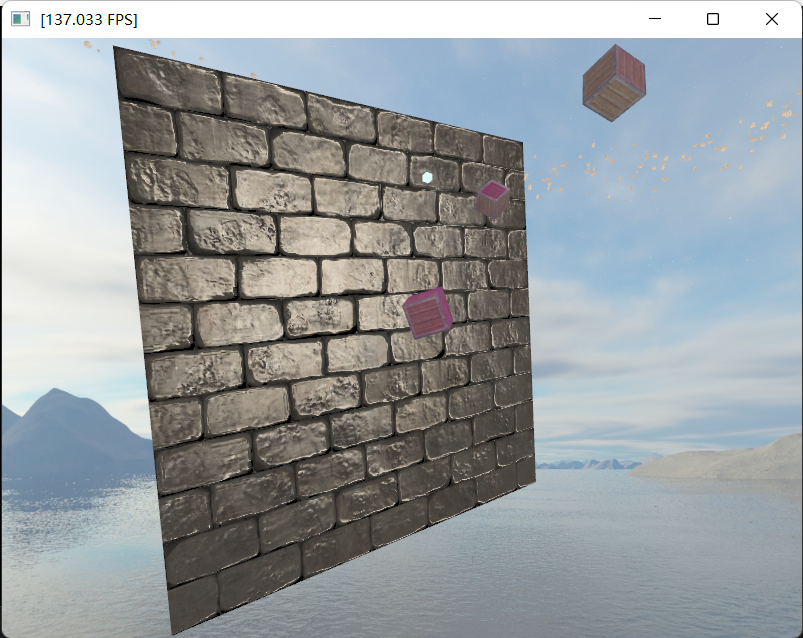

1. Normal maps

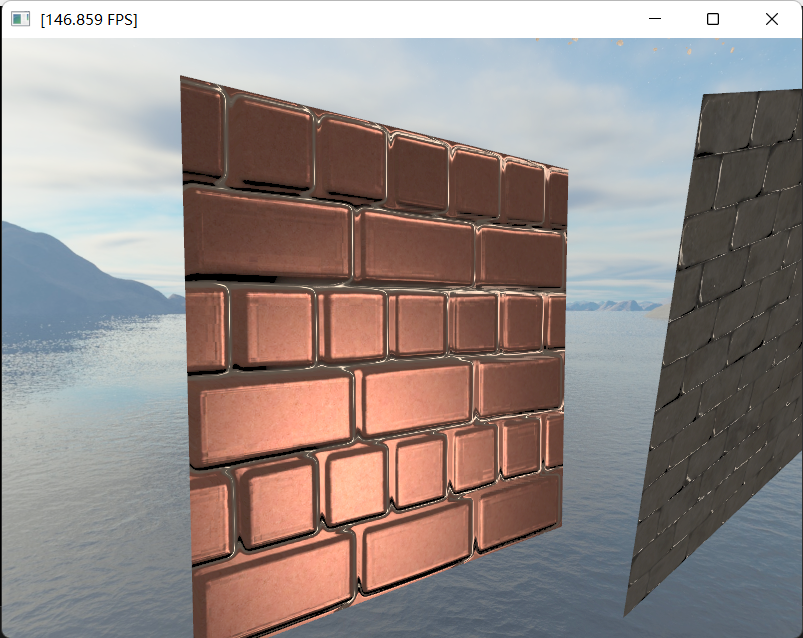

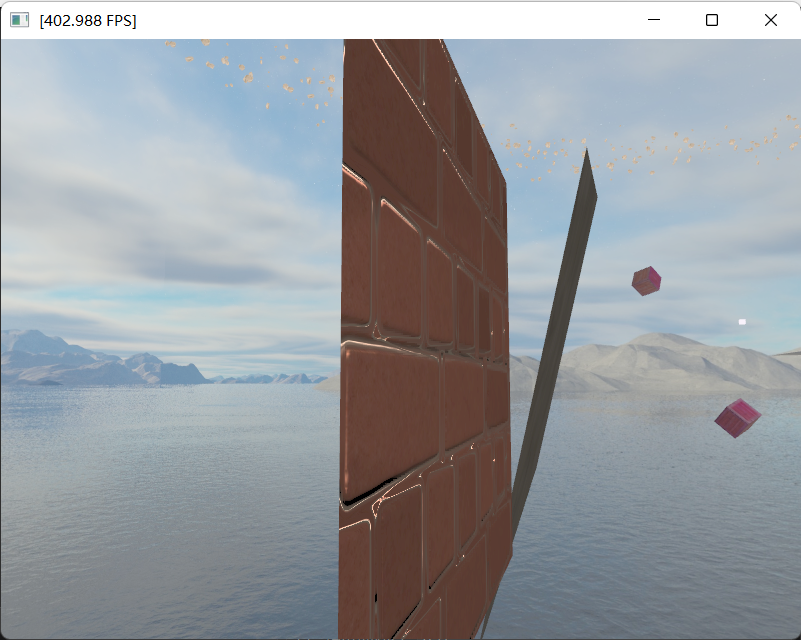

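- A normal map supplies the normal used for lighting in the fragment shader, replacing the interpolated vertex normal attribute. The substitution is much like using a diffuse texture in place of a per-vertex color attribute.

- However, the normal map only works while the triangle faces the positive z direction (and at one particular orientation); as soon as the plane is tilted, the lighting goes wrong. The sampled normal is a fixed vector, and when lighting is computed in world space it does not follow the plane's model transform.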

//normal map

unsigned int normalMap;

glGenTextures(1, &normalMap);

glBindTexture(GL_TEXTURE_2D, normalMap);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_MIRRORED_REPEAT);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_MIRRORED_REPEAT);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR_MIPMAP_LINEAR);

stbi_set_flip_vertically_on_load(true);

data = stbi_load("res/texture/brickwall_normal.jpg", &width, &height, &nrChannels, 0);

if (data)

{

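// A normal map stores direction vectors, not colors, so it is uploaded as linear GL_RGB rather than GL_SRGB.

glTexImage2D(GL_TEXTURE_2D, 0, GL_RGB, width, height, 0, GL_RGB, GL_UNSIGNED_BYTE, data);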

glGenerateMipmap(GL_TEXTURE_2D);

}

else

{

std::cout << "Failed to load brickwall_normal." << std::endl;

}

stbi_image_free(data);

unsigned int brickwall;

glGenTextures(1, &brickwall);

glBindTexture(GL_TEXTURE_2D, brickwall);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_MIRRORED_REPEAT);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_MIRRORED_REPEAT);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR_MIPMAP_LINEAR);

stbi_set_flip_vertically_on_load(true);

data = stbi_load("res/texture/brickwall.jpg", &width, &height, &nrChannels, 0);

if (data)

{

glTexImage2D(GL_TEXTURE_2D, 0, GL_SRGB, width, height, 0, GL_RGB, GL_UNSIGNED_BYTE, data);

glGenerateMipmap(GL_TEXTURE_2D);

}

else

{

std::cout << "Failed to load brickwall." << std::endl;

}

stbi_image_free(data);

//normal map

normalShader.use();

glActiveTexture(GL_TEXTURE0);

glBindTexture(GL_TEXTURE_2D, normalMap);

glActiveTexture(GL_TEXTURE1);

glBindTexture(GL_TEXTURE_2D, brickwall);

glActiveTexture(GL_TEXTURE2);

glBindTexture(GL_TEXTURE_2D, brickwall);

model = glm::mat4(1.0f);

model = glm::translate(model, glm::vec3(0.0f, 0.0f, -20.0f));

model = glm::rotate(model, (float)glfwGetTime() * -1, glm::normalize(glm::vec3(1.0, 0.0, 1.0)));

model = glm::scale(model, glm::vec3(10.0f, 10.0f, 10.0f));

normalShader.setMat4("model",glm::value_ptr(model));

normalShader.setMat4("view", glm::value_ptr(view));

normalShader.setMat4("projection", glm::value_ptr(projection));

normalShader.setInt("normalMap", 0);

normalShader.setInt("material.diffuse", 1);

normalShader.setInt("material.specular", 2);

normalShader.setFloat("material.shininess", 32.0f);

normalShader.setVec3("viewPos", camera.Position);

normalShader.setVec3("dirlight.ambient", 0.05f, 0.05f, 0.05f);

normalShader.setVec3("dirlight.diffuse", 10.0f, 10.0f, 10.0f);

normalShader.setVec3("dirlight.specular", 8.0f, 8.0f, 8.0f);

normalShader.setVec3("dirlight.direction", -0.2f, -1.0f, -0.3f);

normalShader.setVec3("spotlight.ambient", 0.0f, 0.0f, 0.0f);

normalShader.setVec3("spotlight.diffuse", 10.0f, 10.0f, 10.0f);

normalShader.setVec3("spotlight.specular", 5.0f, 5.0f, 5.0f);

normalShader.setVec3("spotlight.direction", camera.Front);

normalShader.setVec3("spotlight.position", camera.Position);

normalShader.setFloat("spotlight.cutOff", glm::cos(glm::radians(12.5f)));

normalShader.setFloat("spotlight.outerCutOff", glm::cos(glm::radians(15.0f)));

normalShader.setFloat("spotlight.constant", 1.0f);

normalShader.setFloat("spotlight.linear", 0.09f);

normalShader.setFloat("spotlight.quadratic", 0.032f);

glBindVertexArray(VAO);

glDrawElements(GL_TRIANGLES, 6, GL_UNSIGNED_INT, 0);

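- An obvious idea is to treat the sampled normal like the vertex normal attribute and apply the normalModel transform. At first glance this looks reasonable, but it overlooks a precondition. A vertex normal is specified so that it is correct in the object's local coordinate system; when the vertex positions are transformed, the normal matrix keeps the normal consistent with the positions in every coordinate system, world space in particular. With a normal map, the vertex attributes are still correct in local coordinates, but that guarantee only extends to the texture coordinates. Nothing guarantees that the vector sampled from the normal map is correct in local coordinates, so the normal matrix cannot be applied to it directly.

- We therefore introduce tangent space, which solves the problem of local-space correctness: the sampled normal, which is not guaranteed to be correct in local coordinates, is first transformed into local coordinates, after which the normal matrix applies. Combining the two steps takes the sampled normal to world space in one go. Alternatively, the world-space quantities needed for lighting (fragPos, lightPos, viewPos and so on) can be transformed the other way, into the tangent space in which the normal map lives. Note that taking the sampled normal to world space uses the normal matrix derived from model, whereas taking positions to tangent space uses the plain model matrix.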
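The tangent-to-world step can be sketched on the CPU as follows. This is a minimal illustration, not the shader used in these notes: `Vec3`, `decodeNormal` and `tangentToWorld` are hypothetical names, and T, B, N are assumed to be unit vectors already expressed in world space.

```cpp
struct Vec3 { float x, y, z; };

// Map an RGB texel in [0, 1] to a tangent-space direction in [-1, 1].
Vec3 decodeNormal(Vec3 rgb) {
    return { rgb.x * 2.0f - 1.0f, rgb.y * 2.0f - 1.0f, rgb.z * 2.0f - 1.0f };
}

// Multiply the matrix whose columns are T, B, N by v. If T, B, N are given in
// world space, this takes a tangent-space vector to world space.
Vec3 tangentToWorld(Vec3 T, Vec3 B, Vec3 N, Vec3 v) {
    return { T.x * v.x + B.x * v.y + N.x * v.z,
             T.y * v.x + B.y * v.y + N.y * v.z,
             T.z * v.x + B.z * v.y + N.z * v.z };
}
```

For a plane that has been rotated to face +y, the "flat" texel (0.5, 0.5, 1.0) decodes to (0, 0, 1) in tangent space and comes out as the plane's world-space normal (0, 1, 0), which is exactly what the fixed sampled vector alone cannot do.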

2. Tangent space

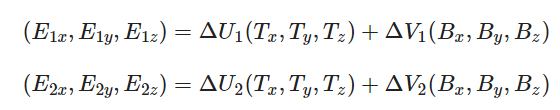

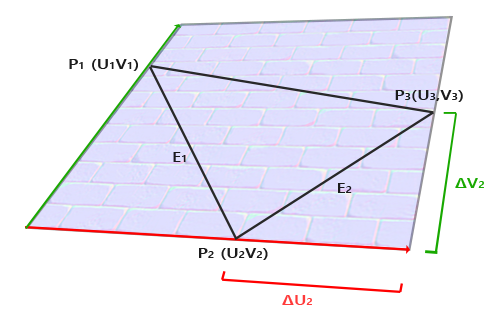

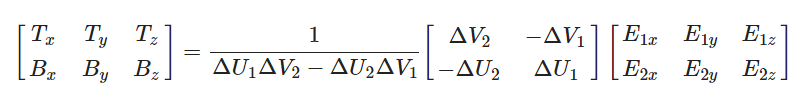

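- Call the three basis vectors of tangent space T, B and N. For each triangle, tangent space is a local coordinate space of that face, but it differs from the usual local coordinates. As noted earlier, a normal map stores its vectors in the RGB channels of a color texture, which correspond to x, y and z. The basis for those x, y, z coordinates is neither the vertices' local coordinate system nor any of the spaces seen so far (world space, view space and so on); we define it to be tangent space. Tangent space lies flat on the triangle, so its N vector is the face normal, and T and B are two orthogonal basis vectors within the face. The key question is how to express T and B in the local coordinate system.

- T and B are two basis vectors in the triangle's plane. To solve for them, it is enough to know the same points in both tangent-space coordinates and local-space coordinates: from those we can compute the change-of-basis matrix, whose columns are T and B expressed in local space.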

\[P_{xyz}=TBN \cdot P_{uvn}

\]

That is:

\[x=T_x \cdot u+B_x \cdot v+N_x \cdot n

\]

\[y=T_y \cdot u+B_y \cdot v+N_y \cdot n

\]

\[z=T_z \cdot u+B_z \cdot v+N_z \cdot n

\]

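The catch is that we do not know the n coordinate in tangent space. Fortunately it is the same for all three vertices of the triangle, so taking differences between the three vertex positions eliminates it and leaves enough equations to solve for T and B.

Going further, T and B follow in closed form from the two edge vectors of the triangle and the corresponding differences in texture coordinates.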
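The closed form can be sketched as follows. The symbols \(E_1, E_2\) (two edge vectors of the triangle in local space) and \(\Delta U_i, \Delta V_i\) (the matching differences in texture coordinates) are names introduced here for illustration; they correspond to edge1, edge2, deltaUV1 and deltaUV2 in the code that follows. Because the n coordinate cancels when differencing, each edge satisfies:

\[E_1 = \Delta U_1 \cdot T + \Delta V_1 \cdot B, \qquad E_2 = \Delta U_2 \cdot T + \Delta V_2 \cdot B\]

Inverting the 2×2 coefficient matrix gives:

\[\begin{bmatrix} T \\ B \end{bmatrix} = \frac{1}{\Delta U_1 \Delta V_2 - \Delta U_2 \Delta V_1} \begin{bmatrix} \Delta V_2 & -\Delta V_1 \\ -\Delta U_2 & \Delta U_1 \end{bmatrix} \begin{bmatrix} E_1 \\ E_2 \end{bmatrix}\]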

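- Note that the computed tangent and bitangent vectors are not unit vectors and must be normalized.

- As mentioned, normal, tangent and bitangent are vertex attributes, so we compute tangent and bitangent on the CPU and pass them to the vertex shader: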

void normalSquare(unsigned int &VAO,unsigned int &VBO)

{

glm::vec3 pos1(-1.0f, 1.0f, 0.0f);

glm::vec3 pos2(-1.0f, -1.0f, 0.0f);

glm::vec3 pos3(1.0f, -1.0f, 0.0f);

glm::vec3 pos4(1.0f, 1.0f, 0.0f);

glm::vec2 uv1(0.0f, 1.0f);

glm::vec2 uv2(0.0f, 0.0f);

glm::vec2 uv3(1.0f, 0.0f);

glm::vec2 uv4(1.0f, 1.0f);

glm::vec3 nm(0.0f, 0.0f, 1.0f);

glm::vec3 edge1 = pos2 - pos1;

glm::vec3 edge2 = pos3 - pos1;

glm::vec2 deltaUV1 = uv2 - uv1;

glm::vec2 deltaUV2 = uv3 - uv1;

float f = 1.0f / (deltaUV1.x * deltaUV2.y - deltaUV1.y * deltaUV2.x);

glm::vec3 tangent1;

tangent1.x = f * (deltaUV2.y * edge1.x - deltaUV1.y * edge2.x);

tangent1.y = f * (deltaUV2.y * edge1.y - deltaUV1.y * edge2.y);

tangent1.z = f * (deltaUV2.y * edge1.z - deltaUV1.y * edge2.z);

tangent1=glm::normalize(tangent1);

glm::vec3 bitangent1;

bitangent1.x = f * (-deltaUV2.x * edge1.x + deltaUV1.x * edge2.x);

bitangent1.y = f * (-deltaUV2.x * edge1.y + deltaUV1.x * edge2.y);

bitangent1.z = f * (-deltaUV2.x * edge1.z + deltaUV1.x * edge2.z);

bitangent1 = glm::normalize(bitangent1);

edge1 = pos3 - pos1;

edge2 = pos4 - pos1;

deltaUV1 = uv3 - uv1;

deltaUV2 = uv4 - uv1;

f = 1.0f / (deltaUV1.x * deltaUV2.y - deltaUV1.y * deltaUV2.x);

glm::vec3 tangent2;

tangent2.x = f * (deltaUV2.y * edge1.x - deltaUV1.y * edge2.x);

tangent2.y = f * (deltaUV2.y * edge1.y - deltaUV1.y * edge2.y);

tangent2.z = f * (deltaUV2.y * edge1.z - deltaUV1.y * edge2.z);

tangent2 = glm::normalize(tangent2);

glm::vec3 bitangent2;

bitangent2.x = f * (-deltaUV2.x * edge1.x + deltaUV1.x * edge2.x);

bitangent2.y = f * (-deltaUV2.x * edge1.y + deltaUV1.x * edge2.y);

bitangent2.z = f * (-deltaUV2.x * edge1.z + deltaUV1.x * edge2.z);

bitangent2 = glm::normalize(bitangent2);

float vertices[] =

{

pos1.x,pos1.y,pos1.z,nm.x,nm.y,nm.z,uv1.x,uv1.y,tangent1.x,tangent1.y,tangent1.z,bitangent1.x,bitangent1.y,bitangent1.z,

pos2.x,pos2.y,pos2.z,nm.x,nm.y,nm.z,uv2.x,uv2.y,tangent1.x,tangent1.y,tangent1.z,bitangent1.x,bitangent1.y,bitangent1.z,

pos3.x,pos3.y,pos3.z,nm.x,nm.y,nm.z,uv3.x,uv3.y,tangent1.x,tangent1.y,tangent1.z,bitangent1.x,bitangent1.y,bitangent1.z,

pos1.x,pos1.y,pos1.z,nm.x,nm.y,nm.z,uv1.x,uv1.y,tangent2.x,tangent2.y,tangent2.z,bitangent2.x,bitangent2.y,bitangent2.z,

pos3.x,pos3.y,pos3.z,nm.x,nm.y,nm.z,uv3.x,uv3.y,tangent2.x,tangent2.y,tangent2.z,bitangent2.x,bitangent2.y,bitangent2.z,

pos4.x,pos4.y,pos4.z,nm.x,nm.y,nm.z,uv4.x,uv4.y,tangent2.x,tangent2.y,tangent2.z,bitangent2.x,bitangent2.y,bitangent2.z

};

glGenVertexArrays(1, &VAO);

glBindVertexArray(VAO);

glGenBuffers(1, &VBO);

glBindBuffer(GL_ARRAY_BUFFER,VBO);

glBufferData(GL_ARRAY_BUFFER, sizeof(vertices), &vertices, GL_STATIC_DRAW);

glEnableVertexAttribArray(0);

glVertexAttribPointer(0, 3, GL_FLOAT, GL_FALSE, 14 * sizeof(float), (void*)0);

glEnableVertexAttribArray(1);

glVertexAttribPointer(1, 3, GL_FLOAT, GL_FALSE, 14 * sizeof(float), (void*)(3 * sizeof(float)));

glEnableVertexAttribArray(2);

glVertexAttribPointer(2, 2, GL_FLOAT, GL_FALSE, 14 * sizeof(float), (void*)(6 * sizeof(float)));

glEnableVertexAttribArray(3);

glVertexAttribPointer(3, 3, GL_FLOAT, GL_FALSE, 14 * sizeof(float), (void*)(8 * sizeof(float)));

glEnableVertexAttribArray(4);

glVertexAttribPointer(4, 3, GL_FLOAT, GL_FALSE, 14 * sizeof(float), (void*)(11 * sizeof(float)));

}
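The per-triangle computation above is written out twice; it could be factored into one helper. The sketch below is one possible shape for it, using plain structs instead of glm so it stands alone. `Vec2`, `Vec3`, `TangentFrame` and `computeTangentFrame` are hypothetical names, not part of the original code.

```cpp
#include <cassert>
#include <cmath>

struct Vec2 { float x, y; };
struct Vec3 { float x, y, z; };
struct TangentFrame { Vec3 tangent, bitangent; };

static Vec3 sub(Vec3 a, Vec3 b) { return { a.x - b.x, a.y - b.y, a.z - b.z }; }

static Vec3 normalized(Vec3 v) {
    const float len = std::sqrt(v.x * v.x + v.y * v.y + v.z * v.z);
    return { v.x / len, v.y / len, v.z / len };
}

// Unit tangent and bitangent of one triangle from its positions and UVs.
TangentFrame computeTangentFrame(Vec3 p1, Vec3 p2, Vec3 p3,
                                 Vec2 uv1, Vec2 uv2, Vec2 uv3) {
    const Vec3 e1 = sub(p2, p1);
    const Vec3 e2 = sub(p3, p1);
    const float du1 = uv2.x - uv1.x, dv1 = uv2.y - uv1.y;
    const float du2 = uv3.x - uv1.x, dv2 = uv3.y - uv1.y;

    const float det = du1 * dv2 - dv1 * du2;
    assert(det != 0.0f);  // a zero determinant means the UV triangle is degenerate
    const float f = 1.0f / det;

    const Vec3 t { f * (dv2 * e1.x - dv1 * e2.x),
                   f * (dv2 * e1.y - dv1 * e2.y),
                   f * (dv2 * e1.z - dv1 * e2.z) };
    const Vec3 b { f * (-du2 * e1.x + du1 * e2.x),
                   f * (-du2 * e1.y + du1 * e2.y),
                   f * (-du2 * e1.z + du1 * e2.z) };
    return { normalized(t), normalized(b) };
}
```

With the quad's data, both triangles give a tangent along +x and a bitangent along +y, matching the direction in which u and v increase across the plane.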

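- Here every vertex of a triangle carries tangent, bitangent and normal attributes. For a flat triangle the three attributes are the same at all three vertices, so the values interpolated across its fragments are the same too. When triangles share vertices, however, the usual practice is to average each of the three attributes at a shared vertex. Normals then vary smoothly between adjacent triangles rather than changing abruptly along mesh edges, and the interpolated per-fragment TBN is no longer constant across a triangle. Here we only handle a single quad, so averaging makes no difference.

- Once the shaders have these three attributes, there are two ways to use the TBN transform: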
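Averaging at shared vertices can be sketched like this for an indexed mesh. It is an unweighted average under simplifying assumptions (real tools often weight by angle or area); `averageTangents` and its parameters are hypothetical names.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

struct Vec3 { float x, y, z; };

// faceTangents[i] is the tangent of triangle i; indices holds three vertex
// indices per triangle. Returns one normalized, averaged tangent per vertex.
std::vector<Vec3> averageTangents(const std::vector<Vec3>& faceTangents,
                                  const std::vector<unsigned>& indices,
                                  std::size_t vertexCount) {
    std::vector<Vec3> sum(vertexCount, Vec3{0.0f, 0.0f, 0.0f});
    for (std::size_t tri = 0; tri < faceTangents.size(); ++tri) {
        for (std::size_t k = 0; k < 3; ++k) {
            Vec3& s = sum[indices[3 * tri + k]];
            s.x += faceTangents[tri].x;
            s.y += faceTangents[tri].y;
            s.z += faceTangents[tri].z;
        }
    }
    for (Vec3& s : sum) {
        const float len = std::sqrt(s.x * s.x + s.y * s.y + s.z * s.z);
        if (len > 0.0f) { s.x /= len; s.y /= len; s.z /= len; }
    }
    return sum;
}
```

Vertices used by only one triangle keep that triangle's tangent, while shared vertices end up with a direction between those of their neighbors. The same routine applies unchanged to bitangents and normals.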

- The first is to apply the TBN transform in the fragment shader to the normal sampled from the normal map. The vertex shader only needs to transform the three vectors as directions by the model transform, assemble them into the TBN matrix, and pass it to the fragment shader.

#version 330 core

layout(location = 0) in vec3 aPos;

layout(location = 1) in vec3 aNorm;

layout(location = 2) in vec2 aTexCoord;

layout(location = 3) in vec3 aTangent;

layout(location = 4) in vec3 aBiTangent;

out VS_OUT{

vec3 fragPos;

vec2 texCoord;

vec3 normal;

mat3 TBN;

}vs_out;

uniform mat4 model;

uniform mat4 view;

uniform mat4 projection;

void main()

{

gl_Position = projection * view * model * vec4(aPos, 1.0f);

vs_out.fragPos = vec3(model * vec4(aPos, 1.0f));

vs_out.normal = normalize(mat3(transpose(inverse(model))) * aNorm);

vs_out.texCoord = aTexCoord;

mat4 normModel = transpose(inverse(model));

vec3 T = normalize(vec3(normModel * vec4(aTangent, 0.0)));

vec3 B = normalize(vec3(transpose(inverse(model)) * vec4(aBiTangent, 0.0)));

vec3 N = normalize(vec3(transpose(inverse(model)) * vec4(aNorm, 0.0)));

vs_out.TBN = mat3(T, B, N);

}

#version 330 core

out vec4 fragColor;

in VS_OUT{

vec3 fragPos;

vec2 texCoord;

vec3 normal;

mat3 TBN;

}fs_in;

uniform vec3 viewPos;

uniform sampler2D normalMap;

struct Material {

sampler2D diffuse;

sampler2D specular;

float shininess;

};

uniform Material material;

struct DirLight {

vec3 direction;

vec3 ambient;

vec3 diffuse;

vec3 specular;

};

uniform DirLight dirlight;

struct PointLight {

vec3 position;

vec3 ambient;

vec3 diffuse;

vec3 specular;

float constant;

float linear;

float quadratic;

};

#define NR_POINT_LIGHTS 4

uniform PointLight pointlights[NR_POINT_LIGHTS];

struct SpotLight {

vec3 direction;

vec3 position;

vec3 ambient;

vec3 diffuse;

vec3 specular;

float cutOff;

float outerCutOff;

float constant;

float linear;

float quadratic;

};

uniform SpotLight spotlight;

vec3 calcDirLight(DirLight light, vec3 normal, vec3 viewPos, vec3 fragPos);

vec3 calcSpotLight(SpotLight light, vec3 normal, vec3 viewPos, vec3 fragPos);

vec3 calcPointLight(PointLight light, vec3 normal, vec3 viewPos, vec3 fragPos);

void main()

{

vec3 normal = texture(normalMap, fs_in.texCoord).rgb;

normal = normalize(normal * 2.0 - 1.0);

normal = normalize(fs_in.TBN * normal);

vec3 result = calcDirLight(dirlight, normal, viewPos, fs_in.fragPos);

//for (int i = 0; i != NR_POINT_LIGHTS; i++)

//{

// result += calcPointLight(pointlights[i], normal, viewPos, fragPos);

//}

result += calcSpotLight(spotlight, normal, viewPos, fs_in.fragPos);

fragColor = vec4(result, 1.0f);

//gamma

float gamma = 2.2;

fragColor.rgb = pow(fragColor.rgb, vec3(1.0 / gamma));

}

vec3 calcDirLight(DirLight light, vec3 normal, vec3 viewPos, vec3 fragPos)

{

//ambient

vec3 ambient = light.ambient * vec3(texture(material.diffuse, fs_in.texCoord));

//diffuse

vec3 lightDir = normalize(-light.direction);

float diff = max(dot(normal, lightDir), 0.0);

vec3 diffuse = light.diffuse * diff * vec3(texture(material.diffuse, fs_in.texCoord));

//specular

vec3 viewDir = normalize(viewPos - fragPos);

vec3 halfwayDir = normalize(viewDir + lightDir);

float spec = pow(max(dot(halfwayDir, normal), 0.0), material.shininess);

vec3 specular = light.specular * spec * vec3(texture(material.specular, fs_in.texCoord));

return ambient + diffuse + specular;

}

vec3 calcPointLight(PointLight light, vec3 normal, vec3 viewPos, vec3 fragPos)

{

float distance = length(light.position - fragPos);

float attenuation = 1.0f / (light.constant + light.linear * distance + light.quadratic * distance * distance);

//ambient

vec3 ambient = light.ambient * vec3(texture(material.diffuse, fs_in.texCoord));

//diffuse

vec3 lightDir = normalize(light.position - fragPos);

float diff = max(dot(normal, lightDir), 0.0);

vec3 diffuse = light.diffuse * diff * vec3(texture(material.diffuse, fs_in.texCoord));

//specular

vec3 viewDir = normalize(viewPos - fragPos);

vec3 halfwayDir = normalize(viewDir + lightDir);

float spec = pow(max(dot(halfwayDir, normal), 0.0), material.shininess);

vec3 specular = light.specular * spec * vec3(texture(material.specular, fs_in.texCoord));

return (ambient + diffuse + specular) * attenuation;

}

vec3 calcSpotLight(SpotLight light, vec3 normal, vec3 viewPos, vec3 fragPos)

{

//ambient

vec3 ambient = light.ambient * vec3(texture(material.diffuse, fs_in.texCoord));

//diffuse

vec3 lightDir = normalize(light.position - fragPos);

float diff = max(dot(normal, lightDir), 0.0);

vec3 diffuse = light.diffuse * diff * vec3(texture(material.diffuse, fs_in.texCoord));

//specular

vec3 viewDir = normalize(viewPos - fragPos);

vec3 halfwayDir = normalize(viewDir + lightDir);

float spec = pow(max(dot(halfwayDir, normal), 0.0), material.shininess);

vec3 specular = light.specular * spec * vec3(texture(material.specular, fs_in.texCoord));

float theta = dot(-lightDir, normalize(light.direction));

float epsilon = light.cutOff - light.outerCutOff;

float intensity = clamp((theta - light.outerCutOff) / epsilon, 0.0, 1.0);

float distance = length(light.position - fragPos);

float attenuation = 1.0f / (light.constant + light.linear * distance + light.quadratic * distance * distance);

return (ambient + diffuse + specular) * intensity * attenuation;

}

//normal map

normalShader.use();

glActiveTexture(GL_TEXTURE0);

glBindTexture(GL_TEXTURE_2D, normalMap);

glActiveTexture(GL_TEXTURE1);

glBindTexture(GL_TEXTURE_2D, brickwall);

glActiveTexture(GL_TEXTURE2);

glBindTexture(GL_TEXTURE_2D, brickwall);

model = glm::mat4(1.0f);

model = glm::translate(model, glm::vec3(0.0f, 0.0f, -20.0f));

model = glm::rotate(model, glm::radians(20.0f), glm::normalize(glm::vec3(1.0, 0.0, 1.0)));

model = glm::scale(model, glm::vec3(10.0f, 10.0f, 10.0f));

normalShader.setMat4("model",glm::value_ptr(model));

normalShader.setMat4("view", glm::value_ptr(view));

normalShader.setMat4("projection", glm::value_ptr(projection));

normalShader.setInt("normalMap", 0);

normalShader.setInt("material.diffuse", 1);

normalShader.setInt("material.specular", 2);

normalShader.setFloat("material.shininess", 168.0f);

normalShader.setVec3("viewPos", camera.Position);

normalShader.setVec3("dirlight.ambient", 0.0f, 0.0f, 0.0f);

normalShader.setVec3("dirlight.diffuse", 0.02f, 0.02f, 0.02f);

normalShader.setVec3("dirlight.specular", 3.0f, 3.0f, 3.0f);

normalShader.setVec3("dirlight.direction", 0.2f, 1.0f, -0.3f);

normalShader.setVec3("spotlight.ambient", 0.0f, 0.0f, 0.0f);

normalShader.setVec3("spotlight.diffuse", 0.02f, 0.02f, 0.02f);

normalShader.setVec3("spotlight.specular", 2.0f, 2.0f, 2.0f);

normalShader.setVec3("spotlight.direction", camera.Front);

normalShader.setVec3("spotlight.position", camera.Position);

normalShader.setFloat("spotlight.cutOff", glm::cos(glm::radians(12.5f)));

normalShader.setFloat("spotlight.outerCutOff", glm::cos(glm::radians(15.0f)));

normalShader.setFloat("spotlight.constant", 1.0f);

normalShader.setFloat("spotlight.linear", 0.09f);

normalShader.setFloat("spotlight.quadratic", 0.032f);

glBindVertexArray(normalVAO);

glDrawArrays(GL_TRIANGLES, 0, 6);

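- The other approach performs the lighting in tangent space. Done entirely in the fragment shader, it would cost more than the first approach, but that is not how it is meant to be used. Instead, the vertex shader transforms every position and direction that lighting needs (viewPos, lightPos, fragPos, lightDir) into tangent space, and the fragment shader no longer transforms the sampled normal. This pays off because the fragment shader runs far more often than the vertex shader. Note that positions are transformed with the model matrix, while directions are transformed with the normalModel matrix.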

#version 330 core

out vec4 fragColor;

struct DirLight {

vec3 direction;

vec3 ambient;

vec3 diffuse;

vec3 specular;

};

struct PointLight {

vec3 position;

vec3 ambient;

vec3 diffuse;

vec3 specular;

float constant;

float linear;

float quadratic;

};

struct SpotLight {

vec3 direction;

vec3 position;

vec3 ambient;

vec3 diffuse;

vec3 specular;

float cutOff;

float outerCutOff;

float constant;

float linear;

float quadratic;

};

in VS_OUT{

vec2 texCoord;

vec3 tangentFragPos;

vec3 tangentViewPos;

DirLight tangentDirlight;

SpotLight tangentSpotlight;

}fs_in;

struct Material {

sampler2D diffuse;

sampler2D specular;

float shininess;

};

uniform Material material;

uniform sampler2D normalMap;

vec3 calcDirLight(DirLight light, vec3 normal, vec3 viewPos, vec3 fragPos);

vec3 calcSpotLight(SpotLight light, vec3 normal, vec3 viewPos, vec3 fragPos);

vec3 calcPointLight(PointLight light, vec3 normal, vec3 viewPos, vec3 fragPos);

void main()

{

vec3 normal = texture(normalMap, fs_in.texCoord).rgb;

normal = normalize(normal * 2.0 - 1.0);

vec3 result = calcDirLight(fs_in.tangentDirlight, normal, fs_in.tangentViewPos, fs_in.tangentFragPos);

//for (int i = 0; i != NR_POINT_LIGHTS; i++)

//{

// result += calcPointLight(pointlights[i], normal, viewPos, fragPos);

//}

result += calcSpotLight(fs_in.tangentSpotlight, normal, fs_in.tangentViewPos, fs_in.tangentFragPos);

fragColor = vec4(result, 1.0f);

//gamma

float gamma = 2.2;

fragColor.rgb = pow(fragColor.rgb, vec3(1.0 / gamma));

}

vec3 calcDirLight(DirLight light, vec3 normal, vec3 viewPos, vec3 fragPos)

{

//ambient

vec3 ambient = light.ambient * vec3(texture(material.diffuse, fs_in.texCoord));

//diffuse

vec3 lightDir = normalize(-light.direction);

float diff = max(dot(normal, lightDir), 0.0);

vec3 diffuse = light.diffuse * diff * vec3(texture(material.diffuse, fs_in.texCoord));

//specular

vec3 viewDir = normalize(viewPos - fragPos);

vec3 halfwayDir = normalize(viewDir + lightDir);

float spec = pow(max(dot(halfwayDir, normal), 0.0), material.shininess);

vec3 specular = light.specular * spec * vec3(texture(material.specular, fs_in.texCoord));

return ambient + diffuse + specular;

}

vec3 calcPointLight(PointLight light, vec3 normal, vec3 viewPos, vec3 fragPos)

{

float distance = length(light.position - fragPos);

float attenuation = 1.0f / (light.constant + light.linear * distance + light.quadratic * distance * distance);

//ambient

vec3 ambient = light.ambient * vec3(texture(material.diffuse, fs_in.texCoord));

//diffuse

vec3 lightDir = normalize(light.position - fragPos);

float diff = max(dot(normal, lightDir), 0.0);

vec3 diffuse = light.diffuse * diff * vec3(texture(material.diffuse, fs_in.texCoord));

//specular

vec3 viewDir = normalize(viewPos - fragPos);

vec3 halfwayDir = normalize(viewDir + lightDir);

float spec = pow(max(dot(halfwayDir, normal), 0.0), material.shininess);

vec3 specular = light.specular * spec * vec3(texture(material.specular, fs_in.texCoord));

return (ambient + diffuse + specular) * attenuation;

}

vec3 calcSpotLight(SpotLight light, vec3 normal, vec3 viewPos, vec3 fragPos)

{

//ambient

vec3 ambient = light.ambient * vec3(texture(material.diffuse, fs_in.texCoord));

//diffuse

vec3 lightDir = normalize(light.position - fragPos);

float diff = max(dot(normal, lightDir), 0.0);

vec3 diffuse = light.diffuse * diff * vec3(texture(material.diffuse, fs_in.texCoord));

//specular

vec3 viewDir = normalize(viewPos - fragPos);

vec3 halfwayDir = normalize(viewDir + lightDir);

float spec = pow(max(dot(halfwayDir, normal), 0.0), material.shininess);

vec3 specular = light.specular * spec * vec3(texture(material.specular, fs_in.texCoord));

float theta = dot(-lightDir, normalize(light.direction));

float epsilon = light.cutOff - light.outerCutOff;

float intensity = clamp((theta - light.outerCutOff) / epsilon, 0.0, 1.0);

float distance = length(light.position - fragPos);

float attenuation = 1.0f / (light.constant + light.linear * distance + light.quadratic * distance * distance);

return (ambient + diffuse + specular) * intensity * attenuation;

}

#version 330 core

layout(location = 0) in vec3 aPos;

layout(location = 1) in vec3 aNorm;

layout(location = 2) in vec2 aTexCoord;

layout(location = 3) in vec3 aTangent;

layout(location = 4) in vec3 aBiTangent;

uniform vec3 viewPos;

uniform mat4 model;

uniform mat4 view;

uniform mat4 projection;

struct DirLight {

vec3 direction;

vec3 ambient;

vec3 diffuse;

vec3 specular;

};

uniform DirLight dirlight;

struct PointLight {

vec3 position;

vec3 ambient;

vec3 diffuse;

vec3 specular;

float constant;

float linear;

float quadratic;

};

#define NR_POINT_LIGHTS 4

uniform PointLight pointlights[NR_POINT_LIGHTS];

struct SpotLight {

vec3 direction;

vec3 position;

vec3 ambient;

vec3 diffuse;

vec3 specular;

float cutOff;

float outerCutOff;

float constant;

float linear;

float quadratic;

};

uniform SpotLight spotlight;

out VS_OUT{

vec2 texCoord;

vec3 tangentFragPos;

vec3 tangentViewPos;

DirLight tangentDirlight;

SpotLight tangentSpotlight;

}vs_out;

void main()

{

gl_Position = projection * view * model * vec4(aPos, 1.0f);

vs_out.texCoord = aTexCoord;

vec3 T = normalize(vec3(model * vec4(aTangent, 0.0)));

vec3 B = normalize(vec3(model * vec4(aBiTangent, 0.0)));

vec3 N = normalize(vec3(model * vec4(aNorm, 0.0)));

mat3 TBN = transpose(mat3(T, B, N));

mat4 normModel = transpose(inverse(model));

vec3 Tn = normalize(vec3(normModel * vec4(aTangent, 0.0)));

vec3 Bn = normalize(vec3(normModel * vec4(aBiTangent, 0.0)));

vec3 Nn = normalize(vec3(normModel * vec4(aNorm, 0.0)));

mat3 TBNn = transpose(mat3(Tn, Bn, Nn));

vec3 fragPos = vec3(model * vec4(aPos, 1.0f));

vs_out.tangentFragPos = TBN * fragPos;

vs_out.tangentViewPos = TBN * viewPos;

vs_out.tangentSpotlight = spotlight;

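vs_out.tangentSpotlight.position = TBN * spotlight.position;

// The spotlight direction is compared against a tangent-space lightDir, so it has to be moved to tangent space too.

vs_out.tangentSpotlight.direction = TBNn * spotlight.direction;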

vs_out.tangentDirlight = dirlight;

vs_out.tangentDirlight.direction = TBNn * dirlight.direction;

}
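The vertex shader above relies on the fact that, for an orthonormal T, B, N, the transpose of the TBN matrix is its inverse, so multiplying by the transpose is just three dot products. A minimal CPU sketch of that world-to-tangent step, with hypothetical names `Vec3`, `dot` and `worldToTangent`:

```cpp
struct Vec3 { float x, y, z; };

static float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// Equivalent to transpose(mat3(T, B, N)) * v: the rows of the transposed
// matrix are T, B and N, so each output component is one dot product.
// Assumes T, B, N are orthonormal and expressed in world space.
Vec3 worldToTangent(Vec3 T, Vec3 B, Vec3 N, Vec3 v) {
    return { dot(T, v), dot(B, v), dot(N, v) };
}
```

For a plane facing +y with T = (1, 0, 0), B = (0, 0, -1), N = (0, 1, 0), the world-space vector (2, 3, 4) becomes (2, -4, 3) in tangent space: its height above the plane ends up in the third component, the one the normal map treats as "up".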

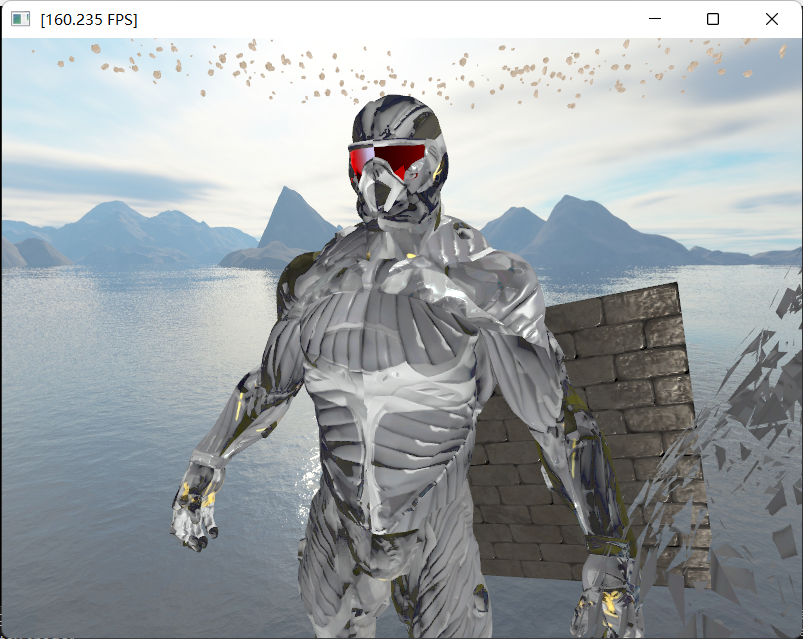

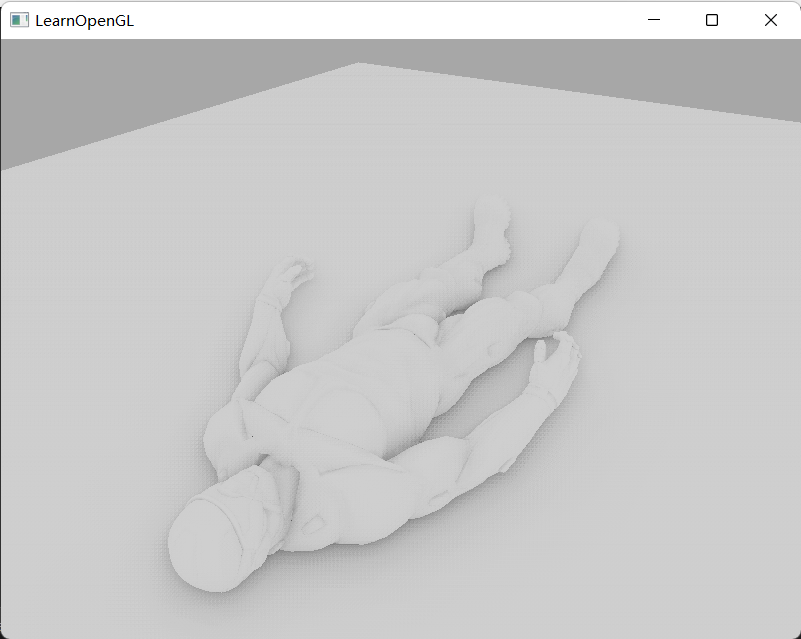

3. Model loading

- In the model loader from earlier, first ask Assimp to compute tangents at import time:

const aiScene* scene = importer.ReadFile(path, aiProcess_Triangulate | aiProcess_GenSmoothNormals | aiProcess_FlipUVs | aiProcess_CalcTangentSpace);

- Then store the tangent in each mesh vertex.

vector.x = mesh->mTangents[i].x;

vector.y = mesh->mTangents[i].y;

vector.z = mesh->mTangents[i].z;

vertex.Tangent = vector;

- Then load the normal map.

vector<Texture> normalMaps = loadMaterialTextures(material, aiTextureType_HEIGHT, "texture_normal");

textures.insert(textures.end(), normalMaps.begin(), normalMaps.end());

- Then bind the normal textures in the draw function.

void Mesh::Draw(Shader shader) const

{

unsigned int diffuseNr = 1;

unsigned int specularNr = 1;

unsigned int normalNr = 1;

for (int i = 0; i != textures.size(); i++)

{

glActiveTexture(GL_TEXTURE0 + i);

glBindTexture(GL_TEXTURE_2D, textures[i].id);

string number;

string name = textures[i].type;

if (name == "texture_diffuse")

{

number = std::to_string(diffuseNr++);

}

else if (name == "texture_specular")

{

number = std::to_string(specularNr++);

}

else if (name == "texture_normal")

{

number = std::to_string(normalNr++);

}

shader.setInt(("material."+name+number).c_str(), i);

}

glBindVertexArray(VAO);

glDrawElements(GL_TRIANGLES, (GLsizei)indices.size(), GL_UNSIGNED_INT, 0);

glBindVertexArray(0);

}
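The naming rule used in the loop above (type name plus a per-type counter starting at 1) can be isolated and checked without an OpenGL context. The sketch below is an alternative formulation that uses a map of counters instead of the if/else chain; `buildSamplerNames` is a hypothetical helper, not part of the original Mesh class.

```cpp
#include <map>
#include <string>
#include <vector>

// For each texture type, in order, produce the uniform name the shader
// expects, e.g. "material.texture_diffuse1", "material.texture_normal1".
std::vector<std::string> buildSamplerNames(const std::vector<std::string>& types) {
    std::map<std::string, unsigned> counters;  // next index per texture type
    std::vector<std::string> names;
    names.reserve(types.size());
    for (const std::string& type : types) {
        const unsigned n = ++counters[type];   // counters start at 0, so the first name ends in 1
        names.push_back("material." + type + std::to_string(n));
    }
    return names;
}
```

The position of each name in the returned vector is the texture unit index that would be passed to setInt, so a new texture type needs no extra branch.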

- Modify the shader accordingly.

#version 330 core

layout(location = 0) in vec3 aPos;

layout(location = 1) in vec3 aNorm;

layout(location = 2) in vec2 aTexCoord;

layout(location = 3)in vec3 aTangent;

out vec3 fragPos;

out vec3 normal;

out vec2 texCoord;

out mat3 TBN;

uniform mat4 model;

uniform mat4 view;

uniform mat4 projection;

uniform mat4 normModel;

void main()

{

gl_Position = projection * view * model * vec4(aPos, 1.0f);

fragPos = vec3(model * vec4(aPos, 1.0f));

normal = normalize(mat3(normModel) * aNorm);

texCoord = aTexCoord;

vec3 T = normalize(vec3(normModel * vec4(aTangent, 0.0)));

vec3 N = normalize(vec3(normModel * vec4(aNorm, 0.0)));

T = normalize(T - dot(T, N) * N);

vec3 B = cross(N, T);

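// The fragment shader multiplies the sampled tangent-space normal by TBN, so TBN must map tangent space to world space (no transpose).

TBN = mat3(T, B, N);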

}

vec3 normal = texture(material.texture_normal1, texCoord).rgb;

normal = normalize(normal * 2.0 - 1.0);

normal = normalize(TBN * normal);

4. Gram-Schmidt process

- Because the per-vertex TBN vectors are averaged, they are no longer mutually orthogonal and need to be re-orthogonalized:

vec3 T = normalize(vec3(model * vec4(tangent, 0.0)));

vec3 N = normalize(vec3(model * vec4(normal, 0.0)));

// re-orthogonalize T with respect to N

T = normalize(T - dot(T, N) * N);

// then retrieve perpendicular vector B with the cross product of T and N

vec3 B = cross(N, T);

mat3 TBN = mat3(T, B, N);
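The same re-orthogonalization can be sketched on the CPU to check that the result really is orthonormal. `Basis` and `orthonormalize` are hypothetical names, and the sketch assumes a right-handed frame in which B = N × T.

```cpp
#include <cmath>

struct Vec3 { float x, y, z; };
struct Basis { Vec3 T, B, N; };

static float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

static Vec3 cross(Vec3 a, Vec3 b) {
    return { a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x };
}

static Vec3 normalized(Vec3 v) {
    const float len = std::sqrt(dot(v, v));
    return { v.x / len, v.y / len, v.z / len };
}

// One Gram-Schmidt step: remove from T its component along N, renormalize,
// then rebuild B so that (T, B, N) is a right-handed orthonormal frame.
Basis orthonormalize(Vec3 T, Vec3 N) {
    N = normalized(N);
    const float d = dot(T, N);
    T = normalized(Vec3{ T.x - d * N.x, T.y - d * N.y, T.z - d * N.z });
    const Vec3 B = cross(N, T);
    return { T, B, N };
}
```

A tangent of (1, 0, 0.5) that has drifted toward the normal (0, 0, 1) is pulled back to (1, 0, 0), and the rebuilt bitangent is (0, 1, 0).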

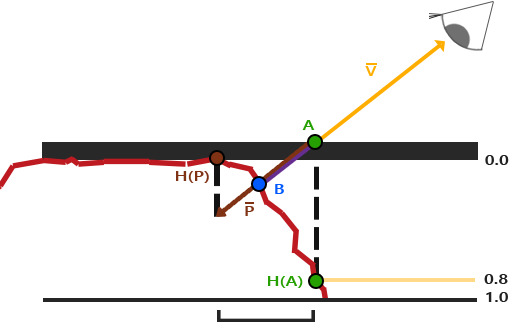

V. Parallax Mapping

- Modeling height detail with a finer mesh would be far too expensive, so we use parallax mapping instead.

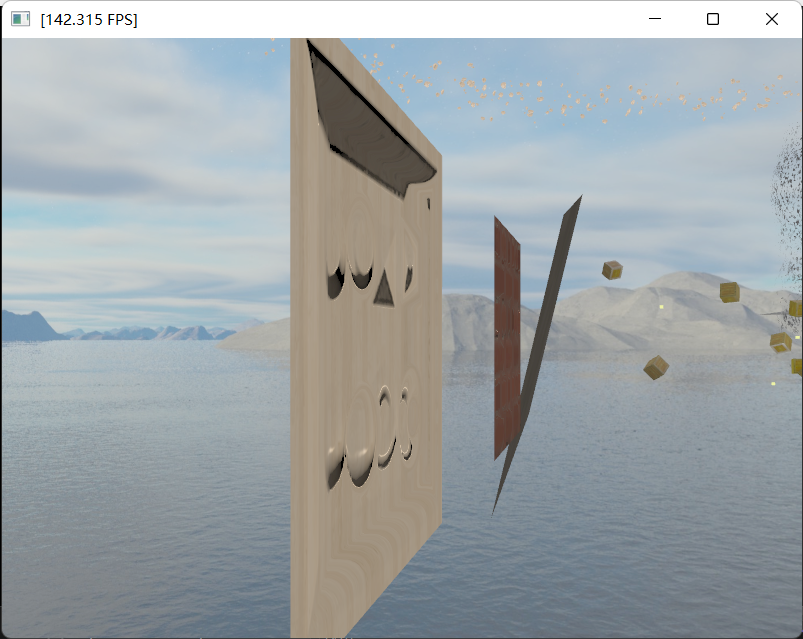

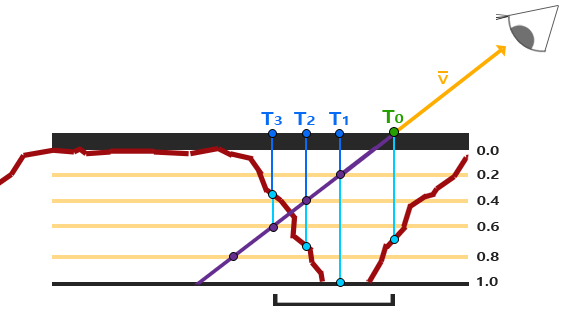

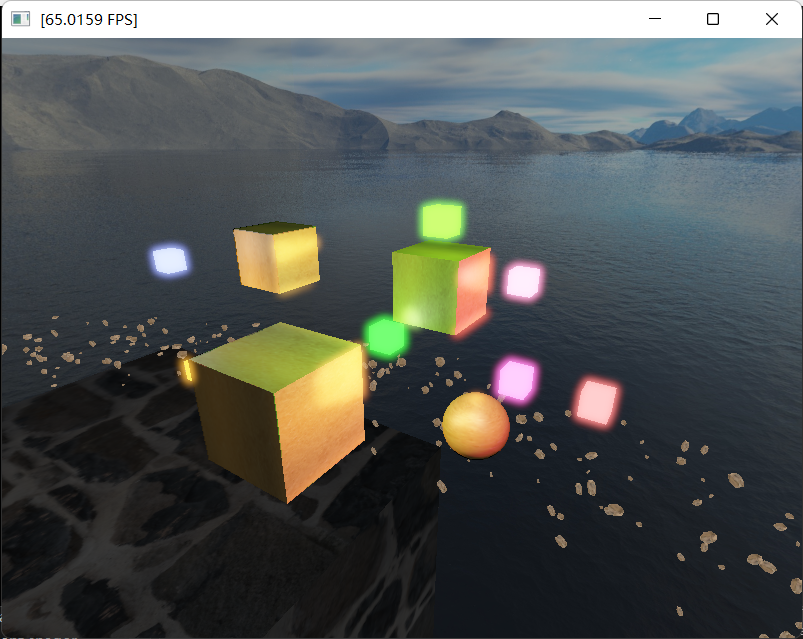

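- Parallax mapping tries to shift the fragPos on the flat surface to where the fragPos would be if the surface really had bumps, and lights the fragment with the data at that shifted position, so that we appear to see a bumpy surface. Intuitively, the greater the depth (or height) at the flat fragPos, the further we should shift. The simplest form of parallax mapping therefore uses the depth at the shaded point in a single step: as in the figure, we use the depth at that point to extend viewDir backwards by a proportional distance and light the fragment using the position reached.

- One problem: we can find such a position in world space, but fetching the vertex attributes at that position is awkward. So we transform all positions and directions into tangent space, where the position we find is a tangent-space coordinate, in other words a texture coordinate. That transform was already implemented for normal mapping.

- Finally, the fragment shader computes the new texture coordinates and uses them for all subsequent lighting.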

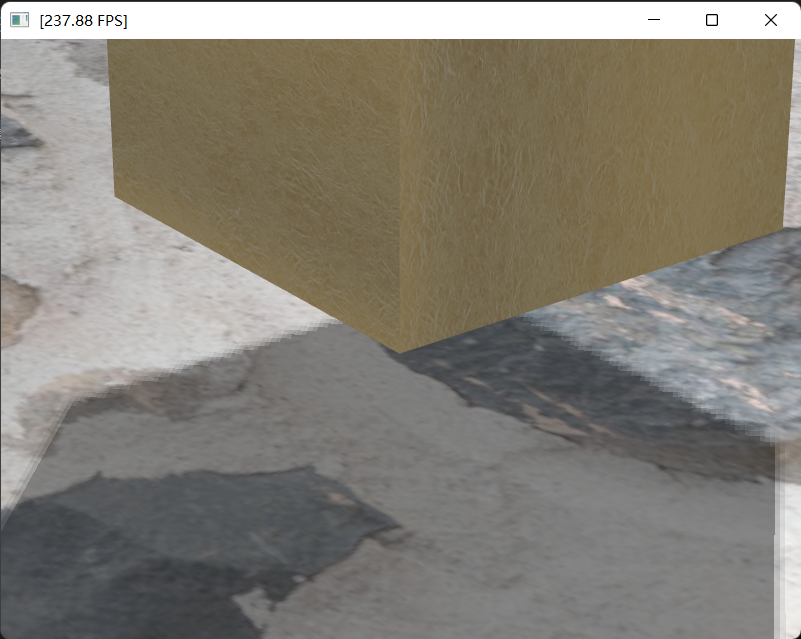

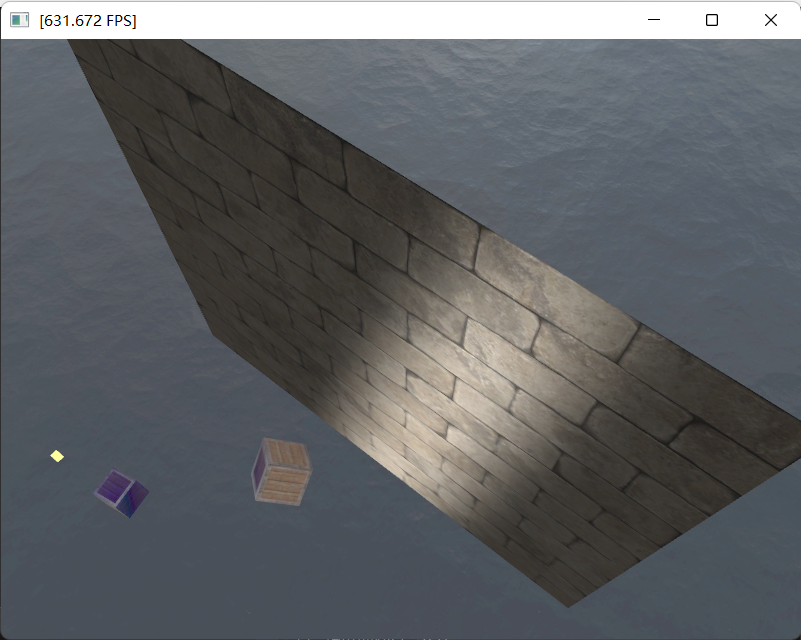

- The result is as follows:

#version 330 core

out vec4 fragColor;

struct DirLight {

vec3 direction;

vec3 ambient;

vec3 diffuse;

vec3 specular;

};

struct PointLight {

vec3 position;

vec3 ambient;

vec3 diffuse;

vec3 specular;

float constant;

float linear;

float quadratic;

};

struct SpotLight {

vec3 direction;

vec3 position;

vec3 ambient;

vec3 diffuse;

vec3 specular;

float cutOff;

float outerCutOff;

float constant;

float linear;

float quadratic;

};

in VS_OUT{

vec2 texCoord;

vec3 tangentFragPos;

vec3 tangentViewPos;

DirLight tangentDirlight;

SpotLight tangentSpotlight;

}fs_in;

struct Material {

sampler2D diffuse;

sampler2D specular;

float shininess;

};

uniform Material material;

uniform sampler2D normalMap;

uniform sampler2D depthMap;

uniform float depth_scale;

vec3 calcDirLight(DirLight light, vec3 normal, vec3 viewPos, vec3 fragPos, vec2 texCoord);

vec3 calcSpotLight(SpotLight light, vec3 normal, vec3 viewPos, vec3 fragPos, vec2 texCoord);

vec3 calcPointLight(PointLight light, vec3 normal, vec3 viewPos, vec3 fragPos, vec2 texCoord);

vec2 ParallaxMapping(vec2 texCoord, vec3 viewDir);

void main()

{

vec3 viewDir = normalize(fs_in.tangentViewPos - fs_in.tangentFragPos);

vec2 texCoord = ParallaxMapping(fs_in.texCoord, viewDir);

vec3 normal = texture(normalMap, texCoord).rgb;

normal = normalize(normal * 2.0 - 1.0);

vec3 result = calcDirLight(fs_in.tangentDirlight, normal, fs_in.tangentViewPos, fs_in.tangentFragPos, texCoord);

//for (int i = 0; i != NR_POINT_LIGHTS; i++)

//{

// result += calcPointLight(pointlights[i], normal, viewPos, fragPos);

//}

result += calcSpotLight(fs_in.tangentSpotlight, normal, fs_in.tangentViewPos, fs_in.tangentFragPos, texCoord);

fragColor = vec4(result, 1.0f);

//gamma

float gamma = 2.2;

fragColor.rgb = pow(fragColor.rgb, vec3(1.0 / gamma));

}

vec3 calcDirLight(DirLight light, vec3 normal, vec3 viewPos, vec3 fragPos, vec2 texCoord)

{

//ambient

vec3 ambient = light.ambient * vec3(texture(material.diffuse, texCoord));

//diffuse

vec3 lightDir = normalize(-light.direction);

float diff = max(dot(normal, lightDir), 0.0);

vec3 diffuse = light.diffuse * diff * vec3(texture(material.diffuse, texCoord));

//specular

vec3 viewDir = normalize(viewPos - fragPos);

vec3 halfwayDir = normalize(viewDir + lightDir);

float spec = pow(max(dot(halfwayDir, normal), 0.0), material.shininess);

vec3 specular = light.specular * spec * vec3(texture(material.specular, texCoord));

return ambient + diffuse + specular;

}

vec3 calcPointLight(PointLight light, vec3 normal, vec3 viewPos, vec3 fragPos, vec2 texCoord)

{

float distance = length(light.position - fragPos);

float attenuation = 1.0f / (light.constant + light.linear * distance + light.quadratic * distance * distance);

//ambient

vec3 ambient = light.ambient * vec3(texture(material.diffuse, texCoord));

//diffuse

vec3 lightDir = normalize(light.position - fragPos);

float diff = max(dot(normal, lightDir), 0.0);

vec3 diffuse = light.diffuse * diff * vec3(texture(material.diffuse, texCoord));

//specular

vec3 viewDir = normalize(viewPos - fragPos);

vec3 halfwayDir = normalize(viewDir + lightDir);

float spec = pow(max(dot(halfwayDir, normal), 0.0), material.shininess);

vec3 specular = light.specular * spec * vec3(texture(material.specular, texCoord));

return (ambient + diffuse + specular) * attenuation;

}

vec3 calcSpotLight(SpotLight light, vec3 normal, vec3 viewPos, vec3 fragPos, vec2 texCoord)

{

//ambient

vec3 ambient = light.ambient * vec3(texture(material.diffuse, texCoord));

//diffuse

vec3 lightDir = normalize(light.position - fragPos);

float diff = max(dot(normal, lightDir), 0.0);

vec3 diffuse = light.diffuse * diff * vec3(texture(material.diffuse, texCoord));

//specular

vec3 viewDir = normalize(viewPos - fragPos);

vec3 halfwayDir = normalize(viewDir + lightDir);

float spec = pow(max(dot(halfwayDir, normal), 0.0), material.shininess);

vec3 specular = light.specular * spec * vec3(texture(material.specular, texCoord));

float theta = dot(-lightDir, normalize(light.direction));

float epsilon = light.cutOff - light.outerCutOff;

float intensity = clamp((theta - light.outerCutOff) / epsilon, 0.0, 1.0);

float distance = length(light.position - fragPos);

float attenuation = 1.0f / (light.constant + light.linear * distance + light.quadratic * distance * distance);

return (ambient + diffuse + specular) * intensity * attenuation;

}

vec2 ParallaxMapping(vec2 texCoord, vec3 viewDir)

{

float currentDepth = texture(depthMap, texCoord).r;

vec2 deltaTexCoord = viewDir.xy / viewDir.z * currentDepth * depth_scale;

return texCoord - deltaTexCoord;

}
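The single-step offset in ParallaxMapping can be mirrored on the CPU to get a feel for the magnitudes involved. This is a sketch only: `parallaxOffset` is a hypothetical name, and the sampled depth is passed in directly instead of being read from a texture.

```cpp
struct Vec2 { float x, y; };
struct Vec3 { float x, y, z; };

// Shift texCoord against the tangent-space view direction by an amount
// proportional to the sampled depth. viewDir.z must be non-zero.
Vec2 parallaxOffset(Vec2 texCoord, Vec3 viewDir, float depth, float depthScale) {
    const float shiftX = viewDir.x / viewDir.z * depth * depthScale;
    const float shiftY = viewDir.y / viewDir.z * depth * depthScale;
    return { texCoord.x - shiftX, texCoord.y - shiftY };
}
```

Looking straight down the normal, viewDir is (0, 0, 1) and the coordinates do not move. At a view direction of (0.6, 0, 0.8) with depth 0.5 and scale 0.1, u moves from 0.5 to about 0.4625. As viewDir.z approaches zero the division makes the shift grow without bound, which is the grazing-angle problem addressed next.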

- One issue is that the shifted texture coordinates can fall outside the [0, 1] range; such fragments can simply be discarded.

if (texCoord.x > 1.0 || texCoord.y > 1.0 || texCoord.x < 0.0 || texCoord.y < 0.0)

{

discard;

}

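- Also, the effect breaks down at steep viewing angles, because the texture coordinates are then shifted a long way. Steep parallax mapping addresses this.

- We split the depth range into several layers and split the total texture-coordinate shift into the same number of steps. We then descend one layer at a time, shifting the coordinates by one step and comparing the layer depth with the depth-map value at that point. Once the ray crosses the depth map, we have a reasonably close hit position, that is, the texture coordinates to use.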

vec2 ParallaxMapping(vec2 texCoord, vec3 viewDir)

{

const float numLayers = 10.0;

float layerDepth = 1.0 / numLayers;

vec2 p = viewDir.xy / viewDir.z * depth_scale;

vec2 deltaTexCoord = p / numLayers;

vec2 currentTexCoord = texCoord;

float currentDepthMapValue = texture(depthMap, currentTexCoord).r;

float currentLayerDepth = 0.0;

while (currentDepthMapValue > currentLayerDepth)

{

currentLayerDepth += layerDepth;

currentTexCoord -= deltaTexCoord;

currentDepthMapValue = texture(depthMap, currentTexCoord).r;

}

return currentTexCoord;

}

- Next, the number of layers (equivalently, the step size) can be chosen dynamically from the viewing angle to reduce sampling artifacts:

vec2 ParallaxMapping(vec2 texCoord, vec3 viewDir)

{

const float minLayers = 8.0f;

const float maxLayers = 32.0f;

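// Use more layers at grazing angles (small |viewDir.z|) and fewer when looking straight at the surface.

float numLayers = mix(maxLayers, minLayers, abs(viewDir.z));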

float layerDepth = 1.0 / numLayers;

vec2 p= viewDir.xy / viewDir.z * height_scale;

vec2 deltaTexCoord = p / numLayers;

vec2 currentTexCoord = texCoord;

float currentDepthMapValue = texture(depthMap, currentTexCoord).r;

float currentLayerDepth = 0.0;

while (currentDepthMapValue > currentLayerDepth)

{

currentLayerDepth += layerDepth;

currentTexCoord -= deltaTexCoord;

currentDepthMapValue = texture(depthMap, currentTexCoord).r;

}

return currentTexCoord;

}

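- Going further, we can interpolate between the texture coordinates just before and just after the intersection, weighted by the depth differences at those two samples, to get a more accurate coordinate.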

vec2 ParallaxMapping(vec2 texCoord, vec3 viewDir)

{

const float minLayers = 8.0f;

const float maxLayers = 32.0f;

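// Use more layers at grazing angles (small |viewDir.z|) and fewer when looking straight at the surface.

float numLayers = mix(maxLayers, minLayers, abs(viewDir.z));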

float layerDepth = 1.0 / numLayers;

vec2 p = viewDir.xy / viewDir.z *height_scale;

vec2 deltaTexCoord = p / numLayers;

vec2 currentTexCoord = texCoord;

float currentDepthMapValue = texture(depthMap, currentTexCoord).r;

float currentLayerDepth = 0.0;

while (currentDepthMapValue > currentLayerDepth)

{

currentLayerDepth += layerDepth;

currentTexCoord -= deltaTexCoord;

currentDepthMapValue = texture(depthMap, currentTexCoord).r;

}

vec2 beforeTexCoord = currentTexCoord + deltaTexCoord;

float afterDepth = currentDepthMapValue - currentLayerDepth;

float beforeDepth = texture(depthMap, beforeTexCoord).r - currentLayerDepth + layerDepth;

float weight = afterDepth / (afterDepth - beforeDepth);

vec2 finalTexCoord = mix(currentTexCoord, beforeTexCoord, weight);

return finalTexCoord;

}
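The interpolation weight at the end of the function can be checked with plain numbers. This is a small sketch under the same sign convention as the shader: `afterDepth` is the sampled depth minus the layer depth at the first sample below the surface (zero or negative), and `beforeDepth` is the same difference one step earlier (positive). The helper names `mixf` and `occlusionWeight` are made up for this illustration.

```cpp
#include <cassert>
#include <cmath>

// Linear interpolation with the same argument order as GLSL mix(a, b, t).
float mixf(float a, float b, float t) {
    return a * (1.0f - t) + b * t;
}

// Weight of the "before" sample when interpolating across the intersection.
// afterDepth  <= 0 : sampled depth minus layer depth after crossing the surface
// beforeDepth >  0 : the same difference at the previous step
float occlusionWeight(float afterDepth, float beforeDepth) {
    assert(afterDepth <= 0.0f && beforeDepth > 0.0f);
    return afterDepth / (afterDepth - beforeDepth);
}
```

If both differences have the same magnitude the weight is 0.5 and the result lands halfway between the two coordinates. If the last step overshot the surface by a lot (large `|afterDepth|`), the weight moves towards 1 and the result stays close to the coordinate before the intersection.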

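6. HDR

- We implement HDR with a framebuffer whose color attachment uses a floating-point internal format such as GL_RGB16F or GL_RGB32F. An ordinary framebuffer stores 8 bits per channel (0-255 mapped to 0-1), so values above 1.0 are clamped by default. 16-bit floats are usually enough.

- We then modify the shader that renders to the screen by adding a tone mapping step. Two operators are shown here: Reinhard and exposure. Reinhard tends to lose detail in dark regions, while the exposure operator has an adjustable exposure parameter that lets us adapt to different brightness levels.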

#version 330 core

in vec2 texCoord;

out vec4 fragColor;

uniform sampler2D texColorBuffer;

uniform float exposure;

void main()

{

vec3 hdrColor = texture(texColorBuffer, texCoord).rgb;

//Reinhard

//vec3 mapped = hdrColor / (hdrColor + vec3(1.0f));

//exposure

vec3 mapped = vec3(1.0) - exp(-hdrColor * exposure);

//gamma

float gamma = 2.2;

mapped = pow(mapped, vec3(1.0 / gamma));

fragColor = vec4(mapped, 1.0);

}
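To get a feel for how the two operators in the shader compress HDR values, the same formulas can be evaluated on the CPU for a single channel. This is only a sketch; the function names `reinhard`, `exposureMap`, and `gammaEncode` are chosen here for illustration and do not come from the shader.

```cpp
#include <cassert>
#include <cmath>

// Reinhard: maps [0, inf) to [0, 1).
float reinhard(float hdr) {
    assert(hdr >= 0.0f);
    return hdr / (hdr + 1.0f);
}

// Exposure tone mapping: higher exposure brightens dark scenes,
// lower exposure keeps detail in very bright scenes.
float exposureMap(float hdr, float exposure) {
    assert(hdr >= 0.0f && exposure > 0.0f);
    return 1.0f - std::exp(-hdr * exposure);
}

// Gamma encoding applied after tone mapping, as in the shader.
float gammaEncode(float linear, float gamma = 2.2f) {
    assert(linear >= 0.0f && gamma > 0.0f);
    return std::pow(linear, 1.0f / gamma);
}
```

Both operators map zero to zero and stay below 1.0 for the input ranges typical of a scene, so nothing is hard-clipped the way it is in an 8-bit buffer. With the exposure operator, the same HDR value maps to a darker or brighter result depending on the `exposure` uniform.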

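7. Bloom

- Bloom is the glow (halo) effect around bright areas. It needs a Gaussian blur and several framebuffers working together. Since it takes quite a few steps, I wrapped it in a class.

- First we extract the bright parts of the image, then blur them with a Gaussian, and finally add the blurred result on top of the original color buffer.

- Step one: extract the bright parts. We need a framebuffer with a color texture attachment to render and store this bright-pass texture. A simple approach is to take the color buffer of the already rendered framebuffer, extract from it, and store the result in a new framebuffer. A more interesting approach is to attach two color buffers to one framebuffer and write to both from the fragment shader.

- First we create two color buffers. This also explains why glGenTextures takes a count as its first parameter. The second parameter can be the array name directly, because an array name decays to a pointer and needs no address-of operator.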

unsigned int colorBuffers[2];

glGenTextures(2,colorBuffers);

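- Next we attach both color buffers to the framebuffer. Each attachment is still a separate call. Recall that the GL_COLOR_ATTACHMENT parameter of glFramebufferTexture2D carries a numeric suffix, and we normally use GL_COLOR_ATTACHMENT0. Here we attach the two textures to GL_COLOR_ATTACHMENT0 and GL_COLOR_ATTACHMENT1. These constants are consecutive, so the attachment can be done in a loop.

- At this point we have only attached two color buffers. That alone does not let the fragment shader write to both, so we also need to tell OpenGL which attachments to render into with glDrawBuffers.

unsigned int attachments[2]={GL_COLOR_ATTACHMENT0,GL_COLOR_ATTACHMENT1};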

glDrawBuffers(2,attachments);

- Now the fragment shader can write to both color buffers at once. We only need layout(location) qualifiers to declare the outputs.

layout(location=0) out vec4 fragColor;

layout(location=1) out vec4 brightColor;

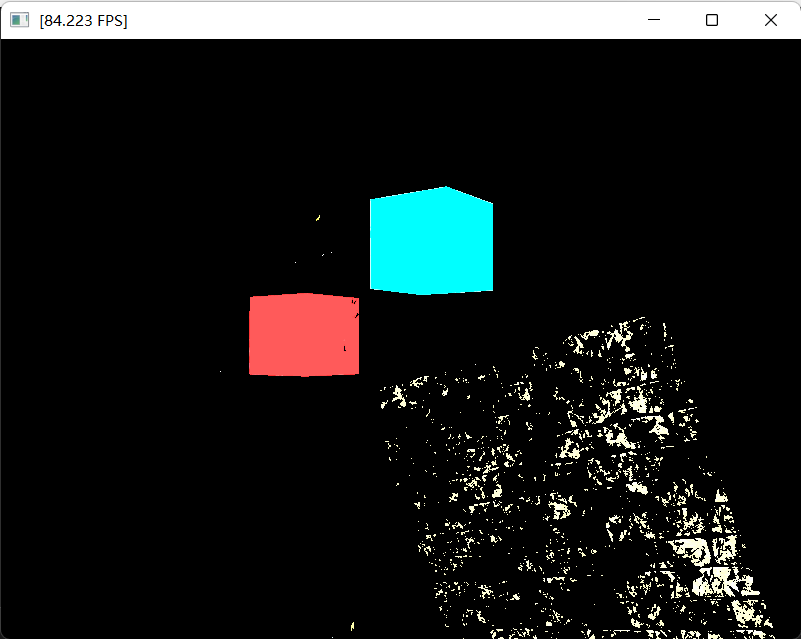

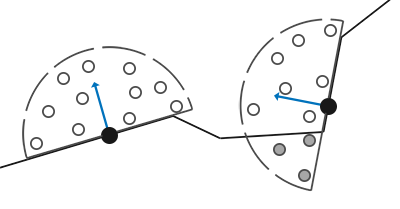

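- So how do we extract the bright parts? We convert RGB to a grayscale (luminance) value and test it against a threshold. If the fragment's luminance exceeds the threshold we keep its color, otherwise we output black. Interestingly, because we use a floating-point framebuffer, the threshold can be 1.0 or even larger, which makes it intuitive to choose. The result is shown below. Red with blue looks best!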

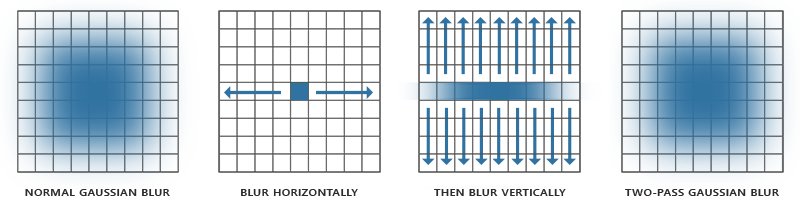

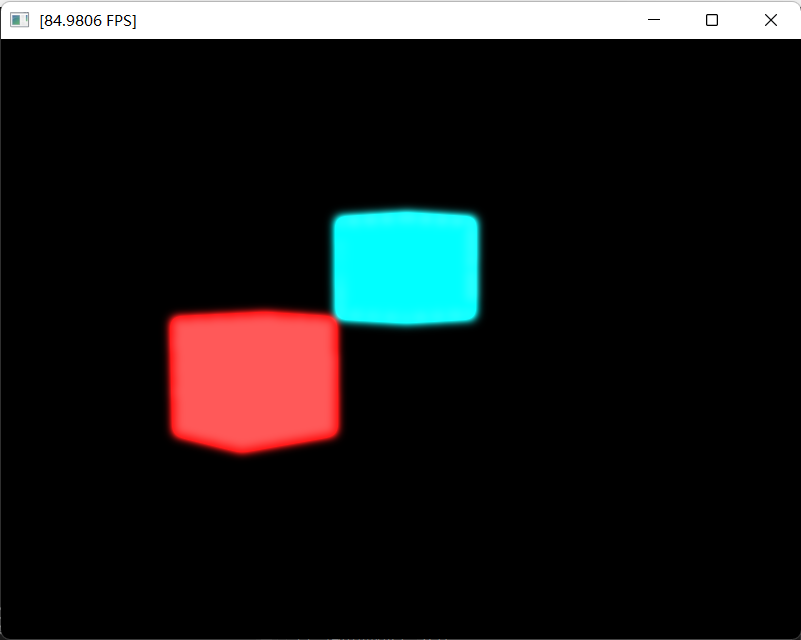
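The bright-pass test described above can be sketched on the CPU as follows. The notes do not say which grayscale weights are used, so the Rec. 709 luma coefficients (0.2126, 0.7152, 0.0722) are an assumption here, as are the names `Color`, `luminance`, and `brightPass`.

```cpp
#include <cassert>
#include <cmath>

struct Color {
    float r, g, b;
};

// Grayscale value of a linear RGB color.
// Assumption: Rec. 709 luma weights; the original shader may use others.
float luminance(const Color& c) {
    return 0.2126f * c.r + 0.7152f * c.g + 0.0722f * c.b;
}

// Keeps the color if it is brighter than the threshold, otherwise returns black.
// With a floating-point framebuffer the threshold may be 1.0 or larger.
Color brightPass(const Color& c, float threshold) {
    assert(threshold >= 0.0f);
    return luminance(c) > threshold ? c : Color{0.0f, 0.0f, 0.0f};
}
```

A light source rendered with an HDR color such as (5, 5, 5) passes a threshold of 1.0, while an ordinary lit surface at (0.5, 0.5, 0.5) is written as black into the second color buffer.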

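- Step two: blur the bright-pass texture with a Gaussian to simulate the glow. The idea is simple: each pixel becomes a weighted sum of its neighbors, with weights taken from a Gaussian distribution, which is just the familiar convolution. The trouble with Gaussian blur, or convolution in general, is that large kernels are expensive: a kernel of width \(N\) costs \(N^2\) samples per pixel. Fortunately, a 2D Gaussian factors into the product of two 1D Gaussians, so we can blur along the horizontal axis and then along the vertical axis, which costs only \(2N\) samples per pixel. The separation also makes it affordable to blur several times, because repeated passes with a small kernel approximate one pass with a large kernel (a familiar trick from CNNs). To implement it, we create two framebuffers, attach one color texture to each, and ping-pong between them: we alternate horizontal and vertical blur passes, each reading the other framebuffer's texture as input and writing to its own. The input of the very first pass is the bright-pass texture from step one.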
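The claim that a horizontal pass followed by a vertical pass equals the full 2D Gaussian can be verified on a small image. The sketch below is a CPU illustration, not the blur shader: the `Image` alias and all function names are hypothetical, and edge pixels are clamped the way GL_CLAMP_TO_EDGE would clamp texture lookups.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

using Image = std::vector<std::vector<float>>;

// Clamp an index into [0, size - 1], mimicking GL_CLAMP_TO_EDGE.
static int clampIndex(int v, int size) {
    return v < 0 ? 0 : (v >= size ? size - 1 : v);
}

// Normalized 1D Gaussian weights for offsets -radius..radius.
std::vector<float> gaussianKernel1D(int radius, float sigma) {
    assert(radius >= 0 && sigma > 0.0f);
    std::vector<float> k(2 * radius + 1);
    float sum = 0.0f;
    for (int i = -radius; i <= radius; ++i) {
        k[i + radius] = std::exp(-(i * i) / (2.0f * sigma * sigma));
        sum += k[i + radius];
    }
    for (float& w : k) w /= sum;
    return k;
}

// One 1D blur pass, horizontal or vertical: N samples per pixel.
Image blur1D(const Image& src, const std::vector<float>& k, bool horizontal) {
    assert(!src.empty() && !src[0].empty());
    const int h = static_cast<int>(src.size());
    const int w = static_cast<int>(src[0].size());
    const int radius = static_cast<int>(k.size()) / 2;
    Image dst(h, std::vector<float>(w, 0.0f));
    for (int y = 0; y < h; ++y)
        for (int x = 0; x < w; ++x)
            for (int i = -radius; i <= radius; ++i) {
                const int sx = horizontal ? clampIndex(x + i, w) : x;
                const int sy = horizontal ? y : clampIndex(y + i, h);
                dst[y][x] += k[i + radius] * src[sy][sx];
            }
    return dst;
}

// Separable blur: horizontal pass, then vertical pass (2N samples per pixel).
Image blurSeparable(const Image& src, const std::vector<float>& k) {
    return blur1D(blur1D(src, k, true), k, false);
}

// Reference: full 2D kernel built as the outer product (N^2 samples per pixel).
Image blur2D(const Image& src, const std::vector<float>& k) {
    assert(!src.empty() && !src[0].empty());
    const int h = static_cast<int>(src.size());
    const int w = static_cast<int>(src[0].size());
    const int radius = static_cast<int>(k.size()) / 2;
    Image dst(h, std::vector<float>(w, 0.0f));
    for (int y = 0; y < h; ++y)
        for (int x = 0; x < w; ++x)
            for (int j = -radius; j <= radius; ++j)
                for (int i = -radius; i <= radius; ++i)
                    dst[y][x] += k[i + radius] * k[j + radius] *
                                 src[clampIndex(y + j, h)][clampIndex(x + i, w)];
    return dst;
}

// Largest absolute per-pixel difference between two images of equal size.
float maxAbsDiff(const Image& a, const Image& b) {
    assert(a.size() == b.size());
    float worst = 0.0f;
    for (std::size_t y = 0; y < a.size(); ++y)
        for (std::size_t x = 0; x < a[y].size(); ++x)
            worst = std::fmax(worst, std::fabs(a[y][x] - b[y][x]));
    return worst;
}
```

Blurring a single bright pixel with both functions and comparing the outputs with `maxAbsDiff` gives a difference at the level of floating-point rounding. The two-framebuffer ping-pong in the class plays the role of the intermediate image between the two `blur1D` calls.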

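The blurred bright-pass result is as follows:

- With the base color texture and the blurred bright-pass texture in hand, only one simple step remains: sample both textures at the same coordinates and add the results. The result is shown below. Not only do the two light sources now have a glow, the wall I lit with a strong light earlier glows as well. Doesn't it feel like a summer breeze, everything in bloom, and you looking around a familiar cottage that suddenly seems a little strange? Also, I meant it about red and blue.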

#ifndef BLOOM_H

#define BLOOM_H

#include <glad/glad.h>

#include <GLFW/glfw3.h>

#include <iostream>

#include "shader.h"

#include "image.h"

#include <glm/glm.hpp>

#include <glm/gtc/matrix_transform.hpp>

#include <glm/gtc/type_ptr.hpp>

#include "camera.h"

#include "model.h"

#include <map>

class Bloom {

public:

Bloom();

void DrawBloom(unsigned int colorBuffer);

unsigned int bloomColorBuffer;

private:

void GetFramebuffer();

void GetVertexArray();

void GetBlurFramebuffer();

void GetResultFramebuffer();

Shader twobufferShader = Shader("res/shader/twobufferVertex.shader", "res/shader/twobufferFragment.shader");

Shader blurShader = Shader("res/shader/blurVertex.shader", "res/shader/blurFragment.shader");

Shader bloomShader = Shader("res/shader/bloomVertex.shader", "res/shader/bloomFragment.shader");

void DrawTwoBuffer(unsigned int colorBuffer);

void DrawGaussBlur();

unsigned int bloomFBO;

unsigned int colorBuffers[2];

unsigned int blurFBO[2];

unsigned int blurColorBuffers[2];

unsigned int resultFBO;

unsigned int WIDTH = 800;

unsigned int HEIGHT = 600;

unsigned int frameVAO;

unsigned int frameVBO;

};

Bloom::Bloom()

{

GetFramebuffer();

GetVertexArray();

GetBlurFramebuffer();

GetResultFramebuffer();

}

void Bloom::GetFramebuffer()

{

glGenFramebuffers(1, &bloomFBO);

glBindFramebuffer(GL_FRAMEBUFFER, bloomFBO);

glGenTextures(2, colorBuffers);

for (unsigned int i = 0; i != 2; i++)

{

glBindTexture(GL_TEXTURE_2D, colorBuffers[i]);

glTexImage2D(GL_TEXTURE_2D, 0, GL_RGB16F, WIDTH, HEIGHT, 0, GL_RGB, GL_UNSIGNED_BYTE, NULL);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_CLAMP_TO_EDGE);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_CLAMP_TO_EDGE);

glFramebufferTexture2D(GL_FRAMEBUFFER, GL_COLOR_ATTACHMENT0 + i, GL_TEXTURE_2D, colorBuffers[i], 0);

}

unsigned int attachments[2] = { GL_COLOR_ATTACHMENT0, GL_COLOR_ATTACHMENT1 };

glDrawBuffers(2, attachments);

glBindFramebuffer(GL_FRAMEBUFFER, 0);

}

void Bloom::GetVertexArray()

{

float frameVertices[] =

{

-1.0, -1.0, 0.0, 0.0,

1.0, -1.0, 1.0, 0.0,

1.0, 1.0, 1.0, 1.0,

1.0, 1.0, 1.0, 1.0,

-1.0, 1.0, 0.0, 1.0,

-1.0, -1.0, 0.0, 0.0

};

glGenBuffers(1, &frameVBO);

glGenVertexArrays(1, &frameVAO);

glBindVertexArray(frameVAO);

glBindBuffer(GL_ARRAY_BUFFER, frameVBO);

glBufferData(GL_ARRAY_BUFFER, sizeof(frameVertices), frameVertices, GL_STATIC_DRAW);

glVertexAttribPointer(0, 2, GL_FLOAT, GL_FALSE, 4 * sizeof(float), (void*)0);

glEnableVertexAttribArray(0);

glVertexAttribPointer(1, 2, GL_FLOAT, GL_FALSE, 4 * sizeof(float), (void*)(2 * sizeof(float)));

glEnableVertexAttribArray(1);

glBindVertexArray(0);

}

void Bloom::GetBlurFramebuffer()

{

glGenFramebuffers(2, blurFBO);

glGenTextures(2, blurColorBuffers);

for (unsigned int i = 0; i != 2; i++)

{

glBindFramebuffer(GL_FRAMEBUFFER, blurFBO[i]);

glBindTexture(GL_TEXTURE_2D, blurColorBuffers[i]);

glTexImage2D(GL_TEXTURE_2D, 0, GL_RGB16F, WIDTH, HEIGHT, 0, GL_RGB, GL_UNSIGNED_BYTE, NULL);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_CLAMP_TO_EDGE);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_CLAMP_TO_EDGE);

glFramebufferTexture2D(GL_FRAMEBUFFER, GL_COLOR_ATTACHMENT0, GL_TEXTURE_2D, blurColorBuffers[i], 0);

}

glBindFramebuffer(GL_FRAMEBUFFER, 0);

}

void Bloom::GetResultFramebuffer()

{

glGenFramebuffers(1, &resultFBO);

glBindFramebuffer(GL_FRAMEBUFFER, resultFBO);

glGenTextures(1, &bloomColorBuffer);

glBindTexture(GL_TEXTURE_2D, bloomColorBuffer);

glTexImage2D(GL_TEXTURE_2D, 0, GL_RGB16F, WIDTH, HEIGHT, 0, GL_RGB, GL_UNSIGNED_BYTE, NULL);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);