Deploying a Highly Available Kafka Cluster with Docker

With Docker already installed, run the following script to deploy the Kafka cluster:

    [root@hpsserver03 kafka]# sh install_kafka.sh 10.21.35.4
    docker-compose version 1.29.2, build 5becea4c
    The zookeeper image is already existed.
    The wurstmeister/kafka is already existed.
    The zookeeper_kafka is already existed.
    create local dir for kafka cluster
    create local dir for zookeeper cluster
    Creating zookeeper2 ... done
    Creating zookeeper0 ... done
    Creating zookeeper1 ... done
    Creating kafka1     ... done
    Creating kafka0     ... done
    Creating kafka2     ... done
    Creating kafka-manager ... done
    Name            Command                          State   Ports
    ---------------------------------------------------------------------------------------------------------------------------------
    kafka-manager   ./start-kafka-manager.sh         Up      0.0.0.0:19095->9000/tcp,:::19095->9000/tcp
    kafka0          start-kafka.sh                   Up      0.0.0.0:19092->9092/tcp,:::19092->9092/tcp
    kafka1          start-kafka.sh                   Up      0.0.0.0:19093->9093/tcp,:::19093->9093/tcp
    kafka2          start-kafka.sh                   Up      0.0.0.0:19094->9094/tcp,:::19094->9094/tcp
    zookeeper0      /docker-entrypoint.sh zkSe ...   Up      0.0.0.0:12181->2181/tcp,:::12181->2181/tcp, 2888/tcp, 3888/tcp, 8080/tcp
    zookeeper1      /docker-entrypoint.sh zkSe ...   Up      0.0.0.0:12182->2181/tcp,:::12182->2181/tcp, 2888/tcp, 3888/tcp, 8080/tcp
    zookeeper2      /docker-entrypoint.sh zkSe ...   Up      0.0.0.0:12183->2181/tcp,:::12183->2181/tcp, 2888/tcp, 3888/tcp, 8080/tcp
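The `Up` state only says that each container process is running; it does not show whether the three ZooKeeper nodes have actually formed an ensemble. A minimal sketch of one way to check this follows. The helper name `zk_mode` is hypothetical, and the sketch assumes that `zkServer.sh` is on the `PATH` inside the official `zookeeper` image and that its `status` subcommand prints a `Mode:` line.

```shell
#!/bin/bash
# Extract the ensemble role from `zkServer.sh status` output read on stdin.
# Prints the text after "Mode:" (for example leader or follower);
# prints nothing if no "Mode:" line is present.
zk_mode() {
    awk -F': *' '/^Mode:/ { print $2; exit }'
}

# Hypothetical usage on the Docker host (container names as created by the script):
# for zk in zookeeper0 zookeeper1 zookeeper2; do
#     echo "$zk: $(docker exec "$zk" zkServer.sh status 2>&1 | zk_mode)"
# done
```

In a healthy three-node ensemble one would expect one node to report `leader` and the other two `follower`.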

The contents of install_kafka.sh are as follows:

    #!/bin/bash
    # Host IP address that the Kafka brokers advertise to clients
    IP_ADDR=$1
    if [ -z "$IP_ADDR" ]; then
        echo "address is empty, please input the ip address of this host."
        exit 1
    fi

    # Directory containing this script; all data and generated files live here
    CURR_DIR=$( cd "$( dirname "${BASH_SOURCE[0]}" )" && pwd )

    # Install docker-compose if it is not already available
    DC_EXIST=$(docker-compose --version | awk '{print $3}')
    if [ -z "${DC_EXIST%?}" ]; then
        curl -L "https://github.com/docker/compose/releases/download/1.29.1/docker-compose-$(uname -s)-$(uname -m)" -o /usr/local/bin/docker-compose
        chmod +x /usr/local/bin/docker-compose
        ln -s /usr/local/bin/docker-compose /usr/bin/docker-compose
    fi
    docker-compose --version
    if [ $? -ne 0 ]; then
        echo "docker-compose install failed, please check."
        exit 1
    fi

    # Get zookeeper image
    zkimage=$(docker images | grep zookeeper | awk '{print $1}')
    if [ -n "$zkimage" ]; then
        echo 'The zookeeper image is already existed.'
    else
        echo 'Pull the latest zookeeper image.'
        docker pull zookeeper
    fi

    # Get kafka image
    kfkimage=$(docker images | grep 'wurstmeister/kafka' | awk '{print $1}')
    if [ -n "$kfkimage" ]; then
        echo 'The wurstmeister/kafka is already existed.'
    else
        echo 'Pull the image.'
        docker pull wurstmeister/kafka
    fi

    # Create network for zookeeper-kafka cluster
    zknet=$(docker network ls | grep zookeeper_kafka | awk '{print $2}')
    if [ -n "$zknet" ]; then
        echo 'The zookeeper_kafka is already existed.'
    else
        echo 'Create zookeeper_kafka.'
        docker network create --subnet 172.30.0.0/16 zookeeper_kafka
    fi

    echo "create local dir for kafka cluster"
    mkdir -p "$CURR_DIR"/kafka_data/kafka0/{data,log}
    mkdir -p "$CURR_DIR"/kafka_data/kafka1/{data,log}
    mkdir -p "$CURR_DIR"/kafka_data/kafka2/{data,log}

    echo "create local dir for zookeeper cluster"
    mkdir -p "$CURR_DIR"/zookeeper_data/zookeeper0/{data,datalog}
    mkdir -p "$CURR_DIR"/zookeeper_data/zookeeper1/{data,datalog}
    mkdir -p "$CURR_DIR"/zookeeper_data/zookeeper2/{data,datalog}

    # Generate the compose file only if it does not exist yet
    if [ ! -e "$CURR_DIR/kafka-compose.yaml" ]; then
    cat > "$CURR_DIR/kafka-compose.yaml" << EOF
    version: '3.3'

    services:
      zookeeper0:
        image: zookeeper
        restart: always
        hostname: zookeeper0
        container_name: zookeeper0
        ports:
          - 12181:2181
        volumes:
          - $CURR_DIR/zookeeper_data/zookeeper0/data:/data
          - $CURR_DIR/zookeeper_data/zookeeper0/datalog:/datalog
        environment:
          ZOO_MY_ID: 1
          ZOO_SERVERS: server.1=0.0.0.0:2888:3888;2181 server.2=zookeeper1:2888:3888;2181 server.3=zookeeper2:2888:3888;2181

      zookeeper1:
        image: zookeeper
        restart: always
        hostname: zookeeper1
        container_name: zookeeper1
        ports:
          - 12182:2181
        volumes:
          - $CURR_DIR/zookeeper_data/zookeeper1/data:/data
          - $CURR_DIR/zookeeper_data/zookeeper1/datalog:/datalog
        environment:
          ZOO_MY_ID: 2
          ZOO_SERVERS: server.1=zookeeper0:2888:3888;2181 server.2=0.0.0.0:2888:3888;2181 server.3=zookeeper2:2888:3888;2181

      zookeeper2:
        image: zookeeper
        restart: always
        hostname: zookeeper2
        container_name: zookeeper2
        ports:
          - 12183:2181
        volumes:
          - $CURR_DIR/zookeeper_data/zookeeper2/data:/data
          - $CURR_DIR/zookeeper_data/zookeeper2/datalog:/datalog
        environment:
          ZOO_MY_ID: 3
          ZOO_SERVERS: server.1=zookeeper0:2888:3888;2181 server.2=zookeeper1:2888:3888;2181 server.3=0.0.0.0:2888:3888;2181

      kafka0:
        image: wurstmeister/kafka
        restart: always
        depends_on:
          - zookeeper0
          - zookeeper1
          - zookeeper2
        container_name: kafka0
        ports:
          - 19092:9092
        environment:
          KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://$IP_ADDR:19092
          KAFKA_LISTENERS: PLAINTEXT://0.0.0.0:9092
          KAFKA_ZOOKEEPER_CONNECT: zookeeper0:2181,zookeeper1:2181,zookeeper2:2181
          KAFKA_BROKER_ID: 0
        volumes:
          - $CURR_DIR/kafka_data/kafka0/data:/data
          - $CURR_DIR/kafka_data/kafka0/log:/datalog

      kafka1:
        image: wurstmeister/kafka
        restart: always
        depends_on:
          - zookeeper0
          - zookeeper1
          - zookeeper2
        container_name: kafka1
        ports:
          - 19093:9093
        environment:
          KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://$IP_ADDR:19093
          KAFKA_LISTENERS: PLAINTEXT://0.0.0.0:9093
          KAFKA_ZOOKEEPER_CONNECT: zookeeper0:2181,zookeeper1:2181,zookeeper2:2181
          KAFKA_BROKER_ID: 1
        volumes:
          - $CURR_DIR/kafka_data/kafka1/data:/data
          - $CURR_DIR/kafka_data/kafka1/log:/datalog

      kafka2:
        image: wurstmeister/kafka
        restart: always
        depends_on:
          - zookeeper0
          - zookeeper1
          - zookeeper2
        container_name: kafka2
        ports:
          - 19094:9094
        environment:
          KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://$IP_ADDR:19094
          KAFKA_LISTENERS: PLAINTEXT://0.0.0.0:9094
          KAFKA_ZOOKEEPER_CONNECT: zookeeper0:2181,zookeeper1:2181,zookeeper2:2181
          KAFKA_BROKER_ID: 2
        volumes:
          - $CURR_DIR/kafka_data/kafka2/data:/data
          - $CURR_DIR/kafka_data/kafka2/log:/datalog

      kafka-manager:
        image: sheepkiller/kafka-manager:latest
        restart: always
        container_name: kafka-manager
        hostname: kafka-manager
        ports:
          - 19095:9000
        links:
          - kafka0
          - kafka1
          - kafka2
        external_links:
          - zookeeper0
          - zookeeper1
          - zookeeper2
        environment:
          ZK_HOSTS: zookeeper0:2181,zookeeper1:2181,zookeeper2:2181
          TZ: CST-8

    networks:
      default:
        external:
          name: zookeeper_kafka
    EOF
    fi

    # Create the startup script
    cat > "$CURR_DIR/startup.sh" <<EOF
    #!/bin/bash
    docker-compose -f $CURR_DIR/kafka-compose.yaml up -d
    docker-compose -f $CURR_DIR/kafka-compose.yaml ps
    EOF

    # Create the stop script
    cat > "$CURR_DIR/stop.sh" <<EOF
    #!/bin/bash
    docker-compose -f $CURR_DIR/kafka-compose.yaml down
    EOF

    # Make the shell scripts executable
    chmod +x "$CURR_DIR"/*.sh

    # Run the startup script to deploy the cluster
    sh "$CURR_DIR/startup.sh"
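The script only checks that the address argument is non-empty, yet that value is written into `KAFKA_ADVERTISED_LISTENERS` for all three brokers, so a mistyped address yields a cluster that clients cannot reach. A stricter check could look like the sketch below. The function name `is_valid_ipv4` is hypothetical and not part of the original script, and the sketch assumes the host is addressed by a dotted-quad IPv4 address.

```shell
#!/bin/bash
# Return 0 if $1 is a dotted-quad IPv4 address with each octet in 0..255.
is_valid_ipv4() {
    local ip="$1" octet
    # Reject empty input, characters other than digits and dots,
    # leading or trailing dots, and empty octets.
    case $ip in
        ''|*[!0-9.]*|.*|*.|*..*) return 1 ;;
    esac
    # Split on dots; exactly four fields are required.
    local IFS=.
    set -- $ip
    [ $# -eq 4 ] || return 1
    for octet in "$@"; do
        [ ${#octet} -le 3 ] && [ "$octet" -le 255 ] || return 1
    done
}

# Hypothetical usage near the top of install_kafka.sh:
# is_valid_ipv4 "$IP_ADDR" || { echo "invalid ip address: $IP_ADDR"; exit 1; }
```

Because the script writes kafka-compose.yaml only when the file does not already exist, a wrong address stays in effect across reruns until that file is deleted.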

Once startup succeeds, open the kafka-manager URL in a browser (the IP is the argument passed to the script, and the port is 19095):

http://10.21.35.4:19095/
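The page may not respond the instant the container is reported as `Up`, since the web application inside still has to finish starting. A small polling helper, sketched below, can wait for it. The function name `retry` and the attempt and delay values are illustrative assumptions, and the usage line assumes `curl` is installed on the host.

```shell
#!/bin/bash
# Run a command until it succeeds, up to a maximum number of attempts.
# Usage: retry <max_attempts> <delay_seconds> <command> [args...]
retry() {
    local max="$1" delay="$2" attempt=1
    shift 2
    until "$@"; do
        # Give up once the attempt budget is used.
        [ "$attempt" -ge "$max" ] && return 1
        attempt=$((attempt + 1))
        sleep "$delay"
    done
}

# Hypothetical usage: poll the kafka-manager URL for up to about one minute.
# retry 30 2 curl -fsS -o /dev/null http://10.21.35.4:19095/ && echo "kafka-manager is up"
```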

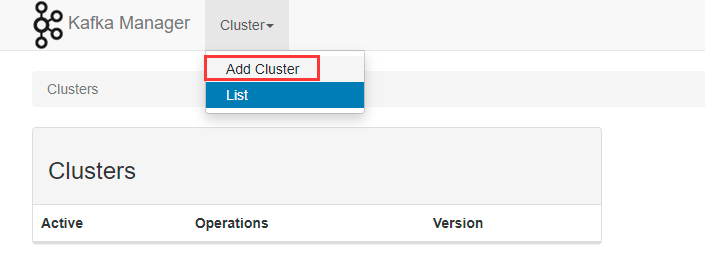

Add a cluster:

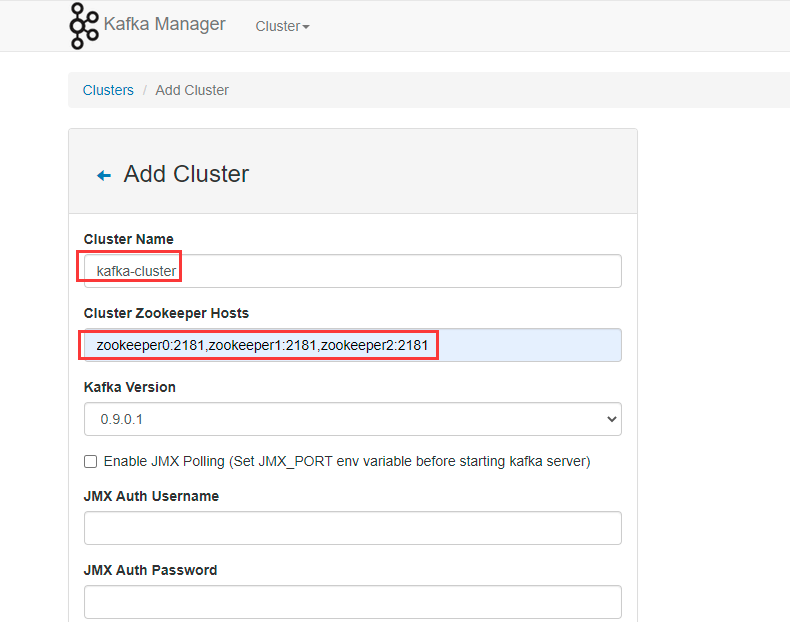

Enter the following parameters:

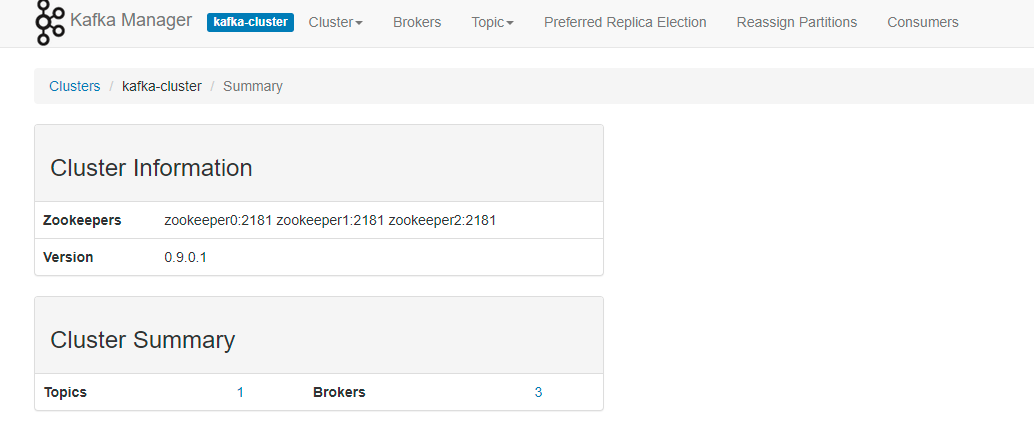

Cluster name (user-defined): kafka-cluster

ZooKeeper hosts: zookeeper0:2181,zookeeper1:2181,zookeeper2:2181

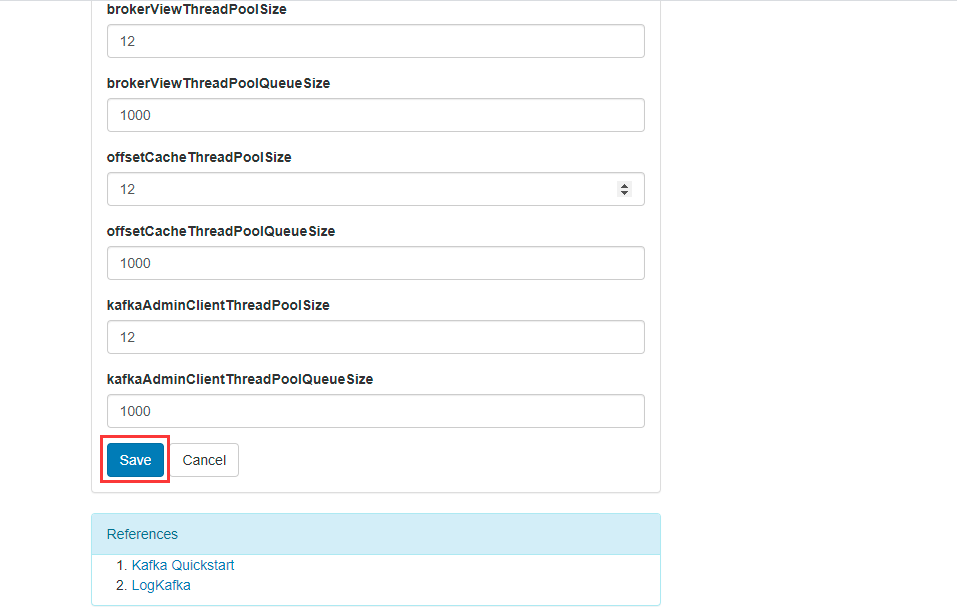

Finally, click Save.

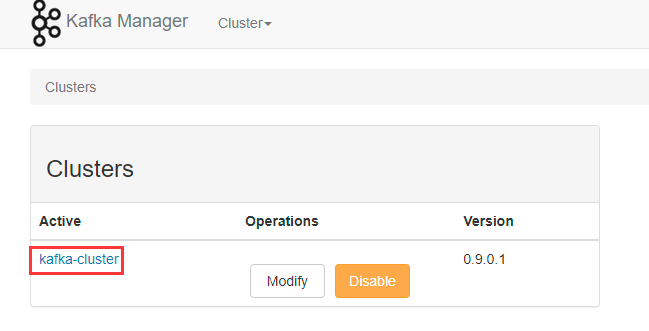

After the cluster has been added, return to the home page:

Click the cluster name (kafka-cluster):

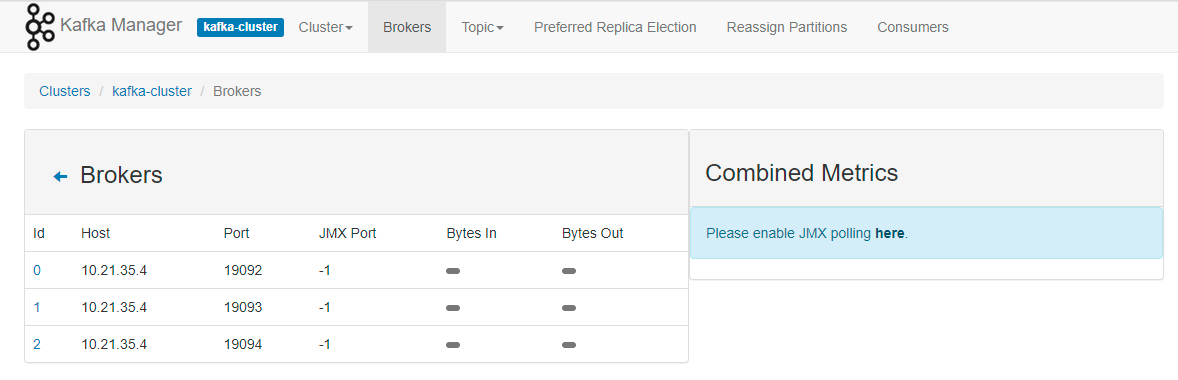

The cluster contains 3 brokers.
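The same count can be cross-checked from the command line, because each running broker registers its ID under `/brokers/ids` in ZooKeeper. The sketch below parses that listing. The function name `count_broker_ids` is hypothetical, and the usage line assumes that `zkCli.sh` is on the `PATH` inside the `zookeeper` container and prints the child znodes as a bracketed list such as `[0, 1, 2]`.

```shell
#!/bin/bash
# Count broker IDs in `zkCli.sh ... ls /brokers/ids` output read on stdin.
# Log lines are ignored; the last line of the form "[...]" is taken as the listing.
count_broker_ids() {
    awk '
        /^\[.*\]$/ { list = $0 }            # remember the last bracketed line
        END {
            gsub(/\[|\]| /, "", list)       # strip brackets and spaces
            if (list == "") { print 0; exit }
            print split(list, ids, ",")     # split returns the number of fields
        }
    '
}

# Hypothetical usage on the Docker host:
# docker exec zookeeper0 zkCli.sh -server localhost:2181 ls /brokers/ids 2>/dev/null | count_broker_ids
```

A result lower than 3 would suggest that at least one broker has not registered with ZooKeeper.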

Applications connect to Kafka at: 10.21.35.4:19092,10.21.35.4:19093,10.21.35.4:19094
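Listing all three brokers lets a client still bootstrap when one of them is down. When the address is generated by a deployment script rather than typed by hand, the list can be assembled from the host IP and the published ports, as in this sketch. The function name `bootstrap_servers` is hypothetical.

```shell
#!/bin/bash
# Build a comma-separated bootstrap server list from a host and one or more ports.
# Usage: bootstrap_servers <host> <port> [port...]
bootstrap_servers() {
    local host="$1" port list=""
    shift
    for port in "$@"; do
        # Prepend the existing list and a comma only when the list is non-empty.
        list="${list:+$list,}${host}:${port}"
    done
    printf '%s\n' "$list"
}

bootstrap_servers 10.21.35.4 19092 19093 19094
# prints 10.21.35.4:19092,10.21.35.4:19093,10.21.35.4:19094
```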

This completes the deployment of the highly available Kafka cluster.