Adding a RHEL Node to OpenShift 4.2

How to add a RHEL 7.6 node to an OpenShift 4.2 cluster.

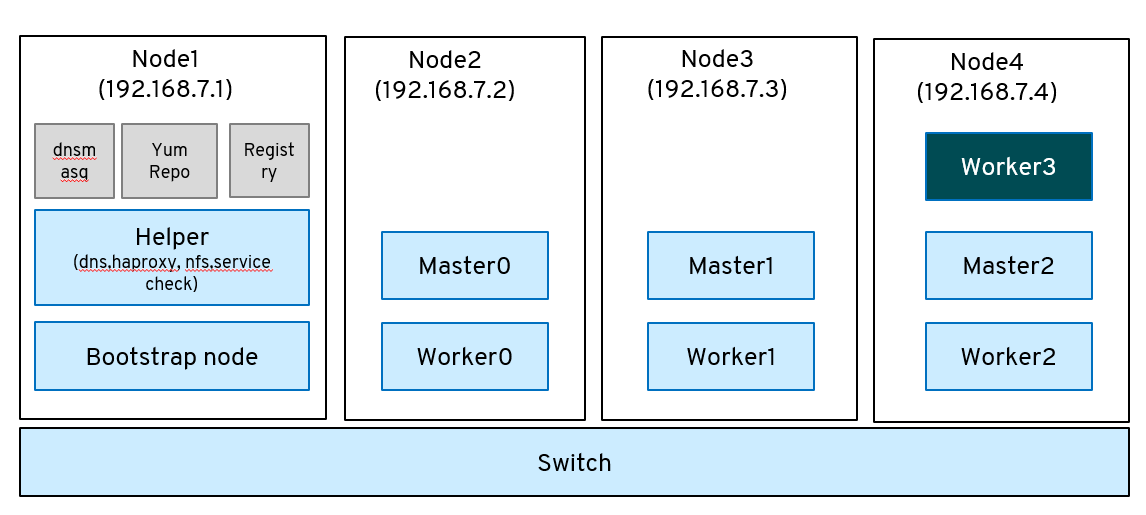

Deployment architecture diagram

1. The physical host for worker3

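- Create a kickstart file, helper-worker03.cfg, used to create and boot the node VM. Note that the nameserver field must point to the helper, i.e. the machine that runs DNS and the load balancer.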

```
[root@localhost data]# cat helper-worker03.cfg
# System authorization information
auth --enableshadow --passalgo=sha512
# Use CDROM installation media
cdrom
# Use text mode install
text
# Run the Setup Agent on first boot
firstboot --enable
ignoredisk --only-use=vda
# Keyboard layouts
keyboard --vckeymap=us --xlayouts='us'
# System language
lang en_US.UTF-8

# Network information
network --bootproto=static --device=eth0 --gateway=192.168.7.1 --ip=192.168.7.19 --netmask=255.255.255.0 --nameserver=192.168.7.11 --ipv6=auto --activate
network --hostname=worker-3.ocp4.redhat.ren

# Root password
rootpw --plaintext redhat
# System services
services --enabled="chronyd"
# System timezone
timezone Asia/Shanghai --isUtc --ntpservers=0.centos.pool.ntp.org,1.centos.pool.ntp.org,2.centos.pool.ntp.org,3.centos.pool.ntp.org
# System bootloader configuration
bootloader --append=" crashkernel=auto" --location=mbr --boot-drive=vda
# Partition clearing information
clearpart --none --initlabel
# Disk partitioning information
part pv.156 --fstype="lvmpv" --ondisk=vda --size=229695
part /boot --fstype="xfs" --ondisk=vda --size=1024
volgroup vg0 --pesize=4096 pv.156
logvol / --fstype="xfs" --size=229184 --name=root --vgname=vg0
logvol swap --fstype="swap" --size=508 --name=swap --vgname=vg0
reboot

%packages
@^minimal
@core
chrony
kexec-tools
%end

%addon com_redhat_kdump --enable --reserve-mb='auto'
%end

%anaconda
pwpolicy root --minlen=6 --minquality=1 --notstrict --nochanges --notempty
pwpolicy user --minlen=6 --minquality=1 --notstrict --nochanges --emptyok
pwpolicy luks --minlen=6 --minquality=1 --notstrict --nochanges --notempty
%end
```
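Because a wrong nameserver is the easiest mistake to make here, it can be worth checking the file before running virt-install. A minimal sketch, assuming each option stays in `--name=value` form on a single line as above; `ks_option` and `check_ks_nameserver` are hypothetical helpers, not part of any kickstart tooling (for full syntax validation, `ksvalidator` from pykickstart is the usual tool):

```bash
# Hypothetical helper: print the value of the first "--<opt>=value" found
# in a kickstart file (e.g. opt=nameserver, ip, gateway).
ks_option() {
  local file="$1" opt="$2"
  grep -o -- "--${opt}=[^ ]*" "$file" | head -n 1 | cut -d= -f2-
}

# Hypothetical check: the nameserver must be the helper (DNS + load balancer).
check_ks_nameserver() {
  local file="$1" helper_ip="$2" ns
  ns="$(ks_option "$file" nameserver)"
  if [ "$ns" = "$helper_ip" ]; then
    echo "nameserver OK: $ns"
  else
    echo "nameserver is '$ns', expected helper $helper_ip" >&2
    return 1
  fi
}
```

Usage: `check_ks_nameserver helper-worker03.cfg 192.168.7.11`.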

- Then create the VM, and start it once the kickstart installation has finished:

```bash
virt-install --name="ocp4-worker3" --vcpus=2 --ram=8192 \
  --disk path=/data/kvm/ocp4-worker3.qcow2,bus=virtio,size=230 \
  --os-variant centos7.0 --network bridge=br0,model=virtio \
  --boot menu=on --location /data/rhel-server-7.6-x86_64-dvd.iso \
  --initrd-inject helper-worker03.cfg --extra-args "inst.ks=file:/helper-worker03.cfg" --noautoconsole

virsh start ocp4-worker3
```
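The kickstart and virt-install express disk sizes in different units: kickstart `--size` values are MiB, while virt-install's `size=` is GiB. A short worked check with the numbers from this setup confirms that the partitions fit on the 230 GiB disk and that the two logical volumes fit in the PV:

```bash
# Values copied from helper-worker03.cfg (MiB) and virt-install (GiB).
disk_gib=230
boot_mib=1024
pv_mib=229695
root_mib=229184
swap_mib=508

need_mib=$(( boot_mib + pv_mib ))   # /boot partition + LVM physical volume
have_mib=$(( disk_gib * 1024 ))     # GiB -> MiB
lv_mib=$(( root_mib + swap_mib ))   # logical volumes carved from vg0

echo "partitions need ${need_mib} MiB, disk has ${have_mib} MiB, headroom $(( have_mib - need_mib )) MiB"
echo "LVs use ${lv_mib} of ${pv_mib} MiB in the PV"
```

This prints `partitions need 230719 MiB, disk has 235520 MiB, headroom 4801 MiB` and `LVs use 229692 of 229695 MiB in the PV`, so the layout is tight but valid.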

2. The worker3 node

- Log in to the new VM and add the yum repositories:

```ini
[base]
name=base
baseurl=http://192.168.7.1:8080/repo/rhel-7-server-rpms/
enabled=1
gpgcheck=0

[ansible]
name=ansible
baseurl=http://192.168.7.1:8080/repo/rhel-7-server-ansible-2.8-rpms/
enabled=1
gpgcheck=0

[extra]
name=extra
baseurl=http://192.168.7.1:8080/repo/rhel-7-server-extras-rpms/
enabled=1
gpgcheck=0

[ose]
name=ose
baseurl=http://192.168.7.1:8080/repo/rhel-7-server-ose-4.2-rpms/
enabled=1
gpgcheck=0

[tmp]
name=tmp
baseurl=http://192.168.7.1:8080/repo/tmp/
enabled=1
gpgcheck=0
```
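All five stanzas follow the same pattern against the local mirror at `http://192.168.7.1:8080/repo`, so they can also be generated. A minimal sketch; `gen_repo_stanza` and `gen_local_repo` are hypothetical names, and the `.repo` file name you redirect the output to is your choice (the original does not name it):

```bash
# Mirror prefix taken from the baseurl values above.
MIRROR="http://192.168.7.1:8080/repo"

# Print one yum repo stanza: repo id == name, directory on the mirror.
gen_repo_stanza() {
  local id="$1" dir="$2"
  printf '[%s]\nname=%s\nbaseurl=%s/%s/\nenabled=1\ngpgcheck=0\n' \
    "$id" "$id" "$MIRROR" "$dir"
}

# Print the same five repositories used on worker-3.
gen_local_repo() {
  gen_repo_stanza base    rhel-7-server-rpms
  gen_repo_stanza ansible rhel-7-server-ansible-2.8-rpms
  gen_repo_stanza extra   rhel-7-server-extras-rpms
  gen_repo_stanza ose     rhel-7-server-ose-4.2-rpms
  gen_repo_stanza tmp     tmp
}
```

Usage (file name is an assumption): `gen_local_repo > /etc/yum.repos.d/local.repo`.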

- First install openshift-clients-4.2.0 and openshift-hyperkube-4.2.0 (the synced repository only contains 4.2.1, which causes an error).

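While syncing the repository you will find that version 4.2.0 is missing and only 4.2.1 is available. In that case, use the commands below to download every RPM these two packages depend on to a local directory. (This step cost me a lot of trouble.)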

```bash
repotrack -p ./tmp/ openshift-hyperkube-4.2.0
repotrack -p ./tmp/ openshift-clients-4.2.0

yum install -y openshift-clients-4.2.0 openshift-hyperkube-4.2.0
```

After the installation, set `enabled=0` for the `tmp` repository to avoid conflicts with other packages.
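`yum-config-manager --disable tmp` (from yum-utils) is one way to do this. If yum-utils is not installed, the flag can also be flipped in place. A minimal sketch that changes `enabled=` only inside the named section and leaves the other repositories alone; `disable_repo_section` is a hypothetical helper and the `.repo` path in the usage line is an assumption:

```bash
# Set enabled=0 inside one [section] of a yum .repo file.
disable_repo_section() {
  local file="$1" section="$2" tmp
  tmp="$(mktemp)"
  awk -v sec="[${section}]" '
    /^\[.*\]$/ { in_sec = ($0 == sec) }                   # track current section
    in_sec && /^enabled[[:space:]]*=/ { $0 = "enabled=0" } # only the target section
    { print }
  ' "$file" > "$tmp" || { rm -f "$tmp"; return 1; }
  # Copy back instead of mv so the original file permissions are kept.
  cat "$tmp" > "$file" && rm -f "$tmp"
}
```

Usage (hypothetical path): `disable_repo_section /etc/yum.repos.d/local.repo tmp`.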

- Edit /etc/hosts and add the image registry addresses. The nameserver cannot resolve vm.redhat.ren and registry.redhat.ren, so they are simply written into /etc/hosts.

```
[root@worker-3 ~]# cat /etc/hosts
127.0.0.1   localhost localhost.localdomain localhost4 localhost4.localdomain4
::1         localhost localhost.localdomain localhost6 localhost6.localdomain6
192.168.7.1 vm.redhat.ren
192.168.7.1 registry.redhat.ren
```
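If this step is scripted, appending blindly would duplicate the entries on every run. A minimal sketch of an idempotent append that skips a name already mapped in the file; `add_host_entry` is a hypothetical helper:

```bash
# Append "IP name" to a hosts file unless that name is already present.
add_host_entry() {
  local ip="$1" name="$2" file="${3:-/etc/hosts}"
  if awk -v n="$name" '
       /^[[:space:]]*#/ { next }                               # ignore comments
       { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }    # names start at field 2
       END { exit !found }
     ' "$file"; then
    echo "exists: $name"
  else
    printf '%s %s\n' "$ip" "$name" >> "$file"
    echo "added: $name"
  fi
}
```

Usage: `add_host_entry 192.168.7.1 vm.redhat.ren` and `add_host_entry 192.168.7.1 registry.redhat.ren`.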

3. The Helper node

- Update the named server so that it can resolve worker-3.ocp4.redhat.ren

- Edit zonefile.db

```
[root@helper named]# cat /var/named/zonefile.db
$TTL 1W
@       IN      SOA     ns1.ocp4.redhat.ren.    root (
                        2019102900      ; serial
                        3H              ; refresh (3 hours)
                        30M             ; retry (30 minutes)
                        2W              ; expiry (2 weeks)
                        1W )            ; minimum (1 week)
        IN      NS      ns1.ocp4.redhat.ren.
        IN      MX 10   smtp.ocp4.redhat.ren.
;
;
ns1     IN      A       192.168.7.11
smtp    IN      A       192.168.7.11
;
helper  IN      A       192.168.7.11
helper  IN      A       192.168.7.11
;
; The api points to the IP of your load balancer
api             IN      A       192.168.7.11
api-int         IN      A       192.168.7.11
;
; The wildcard also points to the load balancer
*.apps          IN      A       192.168.7.11
;
; Create entry for the bootstrap host
bootstrap       IN      A       192.168.7.12
;
; Create entries for the master hosts
master-0        IN      A       192.168.7.13
master-1        IN      A       192.168.7.14
master-2        IN      A       192.168.7.15
;
; Create entries for the worker hosts
worker-0        IN      A       192.168.7.16
worker-1        IN      A       192.168.7.17
worker-2        IN      A       192.168.7.18
worker-3        IN      A       192.168.7.19
;
; The ETCd cluster lives on the masters...so point these to the IP of the masters
etcd-0  IN      A       192.168.7.13
etcd-1  IN      A       192.168.7.14
etcd-2  IN      A       192.168.7.15
;
; The SRV records are IMPORTANT....make sure you get these right...note the trailing dot at the end...
_etcd-server-ssl._tcp   IN      SRV     0 10 2380 etcd-0.ocp4.redhat.ren.
_etcd-server-ssl._tcp   IN      SRV     0 10 2380 etcd-1.ocp4.redhat.ren.
_etcd-server-ssl._tcp   IN      SRV     0 10 2380 etcd-2.ocp4.redhat.ren.
;
;EOF
```
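zonefile.db (like reverse.db) still carries serial 2019102900 after the worker-3 record is added. Since named is restarted afterwards, the new record is loaded regardless; incrementing the serial whenever a zone changes matters mainly when secondary servers rely on it. A minimal sketch of the common YYYYMMDDNN convention; `next_serial` is a hypothetical helper:

```bash
# Next SOA serial in YYYYMMDDNN form.
# $1 = current serial, $2 = today's date as YYYYMMDD (defaults to today).
next_serial() {
  local current="$1" today="${2:-$(date +%Y%m%d)}"
  local base=$(( today * 100 ))
  if [ "$current" -ge "$base" ]; then
    echo $(( current + 1 ))   # already edited today: bump the NN counter
  else
    echo "$base"              # first edit today: YYYYMMDD00
  fi
}
```

For example, `next_serial 2019102900 20191031` gives `2019103100`, and calling it again with `2019103100` gives `2019103101`.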

- Edit the reverse-lookup zone file reverse.db

```
[root@helper named]# cat /var/named/reverse.db
$TTL 1W
@       IN      SOA     ns1.ocp4.redhat.ren.    root (
                        2019102900      ; serial
                        3H              ; refresh (3 hours)
                        30M             ; retry (30 minutes)
                        2W              ; expiry (2 weeks)
                        1W )            ; minimum (1 week)
        IN      NS      ns1.ocp4.redhat.ren.
;
; syntax is "last octet" and the host must have fqdn with trailing dot
13      IN      PTR     master-0.ocp4.redhat.ren.
14      IN      PTR     master-1.ocp4.redhat.ren.
15      IN      PTR     master-2.ocp4.redhat.ren.
;
12      IN      PTR     bootstrap.ocp4.redhat.ren.
;
11      IN      PTR     api.ocp4.redhat.ren.
11      IN      PTR     api-int.ocp4.redhat.ren.
;
16      IN      PTR     worker-0.ocp4.redhat.ren.
17      IN      PTR     worker-1.ocp4.redhat.ren.
18      IN      PTR     worker-2.ocp4.redhat.ren.
19      IN      PTR     worker-3.ocp4.redhat.ren.
;
;EOF
```
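Each new worker needs one line in each file: a short-name A record in zonefile.db, and a last-octet PTR record with a trailing-dot FQDN in reverse.db, as the file comments say. A minimal sketch that derives both lines from the FQDN and IP, assuming a /24 reverse zone like this one; `node_records` is a hypothetical helper:

```bash
# Print the A line (forward zone) and PTR line (reverse zone) for one node.
# $1 = FQDN, $2 = IPv4 address, $3 = zone domain (e.g. ocp4.redhat.ren).
node_records() {
  local fqdn="$1" ip="$2" domain="$3"
  local short="${fqdn%."$domain"}"   # worker-3.ocp4.redhat.ren -> worker-3
  local octet="${ip##*.}"            # 192.168.7.19 -> 19 (assumes a /24 zone)
  printf '%s IN A %s\n' "$short" "$ip"
  printf '%s IN PTR %s.\n' "$octet" "$fqdn"
}
```

`node_records worker-3.ocp4.redhat.ren 192.168.7.19 ocp4.redhat.ren` prints `worker-3 IN A 192.168.7.19` and `19 IN PTR worker-3.ocp4.redhat.ren.`, matching the entries added above.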

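- Restart the service (named is already running, so `start` alone would not load the changed zone files):

```bash
systemctl restart named
systemctl status named
```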

Use /usr/local/bin/helpernodecheck or ping to verify that the names resolve correctly.
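`dig` (from bind-utils) can query the helper's named directly in both directions, which checks the forward and reverse zones independently of /etc/hosts (`named-checkzone` can also validate each zone file before the restart). A minimal sketch, assuming dig is installed where it runs; `verify_dns` is a hypothetical helper and 192.168.7.11 is the helper IP from the kickstart:

```bash
# Check that FQDN -> IP (A) and IP -> FQDN (PTR) agree on the helper's DNS.
verify_dns() {
  local fqdn="$1" ip="$2" dns="${3:-192.168.7.11}"
  local fwd rev
  fwd="$(dig +short "@${dns}" "${fqdn}" A)"
  rev="$(dig +short "@${dns}" -x "${ip}")"   # PTR answers end with a dot
  if [ "${fwd}" = "${ip}" ] && [ "${rev}" = "${fqdn}." ]; then
    echo "OK ${fqdn} <-> ${ip}"
  else
    echo "MISMATCH fwd='${fwd}' rev='${rev}'" >&2
    return 1
  fi
}
```

Usage: `verify_dns worker-3.ocp4.redhat.ren 192.168.7.19`.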

- Copy the SSH key to the new node so that Ansible can log in as root: `ssh-copy-id root@worker-3.ocp4.redhat.ren`

- Enable (or sync) the yum repositories

```bash
subscription-manager repos \
  --enable="rhel-7-server-rpms" \
  --enable="rhel-7-server-extras-rpms" \
  --enable="rhel-7-server-ansible-2.8-rpms" \
  --enable="rhel-7-server-ose-4.2-rpms"
```

- Install the packages

```bash
yum install openshift-ansible openshift-clients jq
```

- Write the Ansible inventory file and run the installation

```
[root@helper openshift-ansible]# cat /etc/ansible/hosts
[all:vars]
ansible_user=root
#ansible_become=True

openshift_kubeconfig_path="/root/ocp4/auth/kubeconfig"

[new_workers]
worker-3.ocp4.redhat.ren
```
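Before running the playbook it helps to confirm which hosts the `[new_workers]` group actually contains; `ansible -i /etc/ansible/hosts new_workers -m ping` then checks SSH to those same hosts. A minimal sketch of the first check for an INI-style inventory; `inventory_group_hosts` is a hypothetical helper:

```bash
# List the hosts in one [group] of an INI-style Ansible inventory.
inventory_group_hosts() {
  local file="$1" group="$2"
  awk -v g="[${group}]" '
    /^[[:space:]]*$/ { next }                   # blank line
    /^[[:space:]]*[#;]/ { next }                # comment line
    /^\[.*\]$/ { in_grp = ($0 == g); next }     # section header
    in_grp { print $1 }                         # first token is the host
  ' "$file"
}
```

Usage: `inventory_group_hosts /etc/ansible/hosts new_workers` should print `worker-3.ocp4.redhat.ren`.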

```bash
cd /usr/share/ansible/openshift-ansible
ansible-playbook -i /etc/ansible/hosts playbooks/scaleup.yml
```

```
TASK [openshift_node : Approve node CSR] ***************************************
Thursday 31 October 2019 20:41:32 +0800 (0:00:00.472) 0:01:50.089 ******
FAILED - RETRYING: Approve node CSR (6 retries left).
FAILED - RETRYING: Approve node CSR (5 retries left).
FAILED - RETRYING: Approve node CSR (4 retries left).
changed: [worker-3.ocp4.redhat.ren -> localhost] => (item=worker-3.ocp4.redhat.ren)

TASK [openshift_node : Wait for nodes to report ready] *************************
Thursday 31 October 2019 20:41:48 +0800 (0:00:15.940) 0:02:06.030 ******
FAILED - RETRYING: Wait for nodes to report ready (36 retries left).
FAILED - RETRYING: Wait for nodes to report ready (35 retries left).
FAILED - RETRYING: Wait for nodes to report ready (34 retries left).
FAILED - RETRYING: Wait for nodes to report ready (33 retries left).
FAILED - RETRYING: Wait for nodes to report ready (32 retries left).
FAILED - RETRYING: Wait for nodes to report ready (31 retries left).
FAILED - RETRYING: Wait for nodes to report ready (30 retries left).
FAILED - RETRYING: Wait for nodes to report ready (29 retries left).
FAILED - RETRYING: Wait for nodes to report ready (28 retries left).
changed: [worker-3.ocp4.redhat.ren -> localhost] => (item=worker-3.ocp4.redhat.ren)

PLAY RECAP *********************************************************************
localhost                : ok=1  changed=1  unreachable=0 failed=0 skipped=3 rescued=0 ignored=0
worker-3.ocp4.redhat.ren : ok=39 changed=29 unreachable=0 failed=0 skipped=4 rescued=0 ignored=0

Thursday 31 October 2019 20:42:35 +0800 (0:00:46.976) 0:02:53.007 ******
===============================================================================
openshift_node : Wait for nodes to report ready ------------------------ 46.98s
openshift_node : Install openshift support packages -------------------- 30.90s
openshift_node : Install openshift packages ---------------------------- 30.44s
openshift_node : Reboot the host and wait for it to come back ---------- 20.51s
openshift_node : Approve node CSR -------------------------------------- 15.94s
openshift_node : Pull release image ------------------------------------- 9.34s
openshift_node : Pull MCD image ----------------------------------------- 4.19s
openshift_node : Apply ignition manifest -------------------------------- 1.65s
openshift_node : Get machine controller daemon image from release image - 1.08s
openshift_node : Get cluster nodes -------------------------------------- 0.87s
openshift_node : Setting sebool container_manage_cgroup ----------------- 0.82s
openshift_node : Update CA trust ---------------------------------------- 0.78s
openshift_node : Check for cluster no proxy ----------------------------- 0.73s
openshift_node : Write /etc/containers/registries.conf ------------------ 0.68s
openshift_node : Check for cluster http proxy --------------------------- 0.67s
openshift_node : Check for cluster https proxy -------------------------- 0.66s
openshift_node : Wait for bootstrap endpoint to show up ----------------- 0.63s
openshift_node : Enable the CRI-O service ------------------------------- 0.59s
openshift_node : Create CNI dirs for crio ------------------------------- 0.54s
openshift_node : Approve node-bootstrapper CSR -------------------------- 0.47s
[root@helper openshift-ansible]#
```

Check the CSRs and the nodes:

```
[root@helper auth]# oc get csr
NAME        AGE     REQUESTOR                                                                   CONDITION
csr-4hhc9   3m47s   system:serviceaccount:openshift-machine-config-operator:node-bootstrapper   Approved,Issued
csr-bn8ww   3m33s   system:node:worker-3.ocp4.redhat.ren                                        Approved,Issued
[root@helper auth]# oc get nodes
NAME                       STATUS   ROLES    AGE    VERSION
master-0.ocp4.redhat.ren   Ready    master   34h    v1.14.6+c07e432da
master-1.ocp4.redhat.ren   Ready    master   34h    v1.14.6+c07e432da
master-2.ocp4.redhat.ren   Ready    master   34h    v1.14.6+c07e432da
worker-0.ocp4.redhat.ren   Ready    worker   34h    v1.14.6+c07e432da
worker-1.ocp4.redhat.ren   Ready    worker   34h    v1.14.6+c07e432da
worker-2.ocp4.redhat.ren   Ready    worker   34h    v1.14.6+c07e432da
worker-3.ocp4.redhat.ren   Ready    worker   118s   v1.14.6+c07e432da
```

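During the installation the new node is rebooted once. After it comes back up it sends its CSRs, which are approved automatically, and then it joins the cluster.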
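In this run the scaleup playbook approved the CSRs itself (the "Approve node CSR" tasks in the log). If a node is ever left with pending CSRs, they can be approved with `oc adm certificate approve`. A minimal sketch, assuming the `oc get csr` column layout shown above with CONDITION as the last column; `pending_csrs` and `approve_pending_csrs` are hypothetical helpers:

```bash
# Print the names of CSRs whose CONDITION column is "Pending".
pending_csrs() {
  oc get csr --no-headers | awk '$NF == "Pending" { print $1 }'
}

# Approve every pending CSR in one oc call.
approve_pending_csrs() {
  local names
  names="$(pending_csrs)"
  if [ -z "$names" ]; then
    echo "no pending CSRs"
    return 0
  fi
  # Word splitting of $names is intentional: one argument per CSR name.
  oc adm certificate approve $names
}
```

Because new CSRs can appear after the first batch is approved (node-bootstrapper first, then the node itself), it may take more than one call.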

浙公网安备 33010602011771号
