OpenShift Project Backup and Restore Experiment

This test records the steps needed to export projects from an OpenShift 3.6 environment and restore them into OpenShift 3.11, as a guide for upgrading by export and import.

1. Install OpenShift 3.6

Details omitted.

2. Install OpenShift 3.11

Details omitted.

3. Create various resources in OpenShift 3.6

- Create users

```shell
htpasswd /etc/origin/master/htpasswd eric
htpasswd /etc/origin/master/htpasswd alice
```
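The `htpasswd` commands above prompt for each password. In a lab setup you can script several users at once with the batch flag `-b`, which takes the password on the command line. This is a minimal sketch. The function name `add_htpasswd_users` and the `user:password` argument format are assumptions made for illustration. Passwords passed this way end up in shell history and are visible in `ps`, so this only suits throwaway environments like this one.

```shell
#!/usr/bin/env bash
# Hypothetical helper: add several htpasswd users without interactive prompts.
# Usage: add_htpasswd_users FILE user1:pass1 [user2:pass2 ...]
add_htpasswd_users() {
  local file="$1"
  shift
  local entry user pass
  for entry in "$@"; do
    user="${entry%%:*}"   # text before the first ':'
    pass="${entry#*:}"    # text after the first ':' (may itself contain ':')
    # -b: read the password from the command line instead of prompting
    htpasswd -b "$file" "$user" "$pass" || return 1
  done
}
```

Example (the passwords are placeholders): `add_htpasswd_users /etc/origin/master/htpasswd eric:changeme alice:changeme`.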

- Label a node

```shell
oc label node node2.example.com application=eric-tomcat
```

```
[root@master ~]# oc get node node2.example.com --show-labels
NAME                STATUS    AGE       VERSION             LABELS
node2.example.com   Ready     1d        v1.6.1+5115d708d7   application=eric-tomcat,beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/hostname=node2.example.com,region=infra,zone=default
```

- Import the image

```shell
docker load -i tomcat.tar
docker tag docker.io/tomcat:8-slim registry.example.com/tomcat:8-slim
docker push registry.example.com/tomcat:8-slim
```
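Section 5 repeats the same three docker steps on the 3.11 side, so they can be wrapped in a small function. This is only a sketch. `mirror_image` is a hypothetical name, and the function assumes the docker client is already allowed to push to registry.example.com.

```shell
#!/usr/bin/env bash
# Hypothetical helper wrapping the load / tag / push steps above.
# Usage: mirror_image TARBALL SOURCE_REF TARGET_REF
mirror_image() {
  local tarball="$1" src="$2" dst="$3"
  docker load -i "$tarball" || return 1   # load the saved image into the local daemon
  docker tag "$src" "$dst" || return 1    # retag it for the internal registry
  docker push "$dst"                      # push it so the cluster can pull it
}
```

Example: `mirror_image tomcat.tar docker.io/tomcat:8-slim registry.example.com/tomcat:8-slim`. If a step fails, the function stops there and does not run the remaining steps.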

- Create project ericproject1

Log in as user eric:

```shell
oc new-project ericproject1
oc import-image tomcat:8-slim --from=registry.example.com/tomcat:8-slim --insecure --confirm
oc new-app tomcat:8-slim --name=ericapp1
oc expose service ericapp1
oc scale dc/ericapp1 --replicas=3

oc new-app tomcat:8-slim --name=ericapp2
oc expose service ericapp2
```
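The setup for ericproject1, ericproject2 and alice-project follows one pattern. Below is a sketch of a hypothetical helper, `create_tomcat_app`, that captures it. It assumes you run it as the user who should own the project (eric or alice), and it reuses the registry and tag from this test.

```shell
#!/usr/bin/env bash
# Hypothetical helper reproducing the per-project steps used in this test.
# Usage: create_tomcat_app PROJECT APP [REPLICAS]
create_tomcat_app() {
  local project="$1" app="$2" replicas="${3:-1}"
  oc new-project "$project" || return 1
  # Import the tag from the insecure lab registry into an image stream
  oc import-image tomcat:8-slim --from=registry.example.com/tomcat:8-slim \
    --insecure --confirm || return 1
  oc new-app tomcat:8-slim --name="$app" || return 1
  oc expose service "$app" || return 1
  # Only scale when more than one replica is requested
  if [ "$replicas" -gt 1 ]; then
    oc scale "dc/$app" --replicas="$replicas"
  fi
}
```

`create_tomcat_app ericproject1 ericapp1 3` reproduces the first five commands above. ericapp2 goes into a project that already exists, so it still needs its own `oc new-app` and `oc expose`.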

- Create project ericproject2

Log in as user eric:

```shell
oc new-project ericproject2
oc import-image tomcat:8-slim --from=registry.example.com/tomcat:8-slim --insecure --confirm
oc new-app tomcat:8-slim --name=eric-tomcat
oc expose service eric-tomcat
```

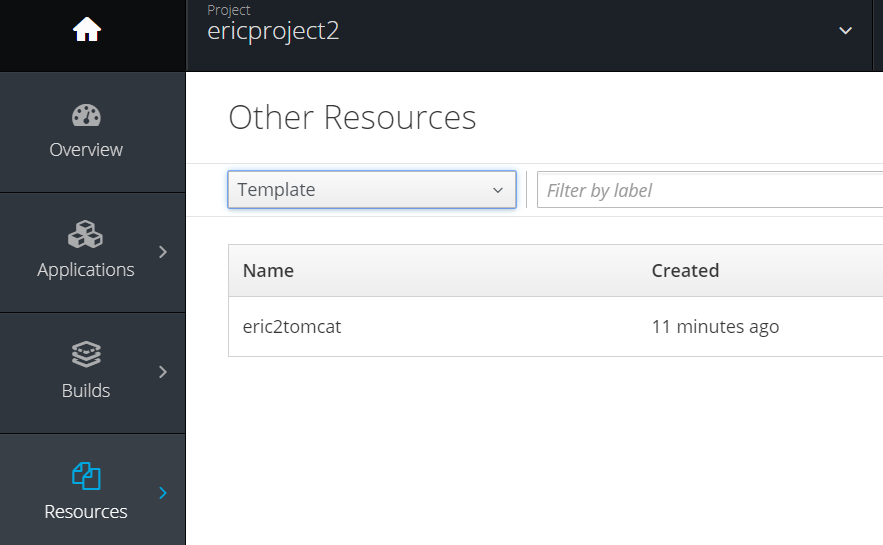

- Create a template

```
[root@master ~]# cat eric2tomcat-project2.yaml
apiVersion: v1
kind: Template
metadata:
  creationTimestamp: null
  name: eric2tomcat
objects:
- apiVersion: v1
  kind: DeploymentConfig
  metadata:
    annotations:
      openshift.io/generated-by: OpenShiftNewApp
    creationTimestamp: null
    generation: 1
    labels:
      app: ${APP_NAME}
    name: ${APP_NAME}
  spec:
    replicas: 1
    selector:
      app: ${APP_NAME}
      deploymentconfig: ${APP_NAME}
    strategy:
      activeDeadlineSeconds: 21600
      resources: {}
      rollingParams:
        intervalSeconds: 1
        maxSurge: 25%
        maxUnavailable: 25%
        timeoutSeconds: 600
        updatePeriodSeconds: 1
      type: Rolling
    template:
      metadata:
        annotations:
          openshift.io/generated-by: OpenShiftNewApp
        creationTimestamp: null
        labels:
          app: ${APP_NAME}
          deploymentconfig: ${APP_NAME}
      spec:
        containers:
        - image: registry.example.com/tomcat@sha256:8f701fff708316aabc01520677446463281b5347ba1d6e9e237dd21de600f809
          imagePullPolicy: IfNotPresent
          name: ${APP_NAME}
          ports:
          - containerPort: 8080
            protocol: TCP
          resources: {}
          terminationMessagePath: /dev/termination-log
          terminationMessagePolicy: File
        dnsPolicy: ClusterFirst
        restartPolicy: Always
        schedulerName: default-scheduler
        securityContext: {}
        terminationGracePeriodSeconds: 30
    test: false
    triggers:
    - type: ConfigChange
    - imageChangeParams:
        automatic: true
        containerNames:
        - ${APP_NAME}
        from:
          kind: ImageStreamTag
          name: tomcat:8-slim
          namespace: ericproject2
      type: ImageChange
  status:
    availableReplicas: 0
    latestVersion: 0
    observedGeneration: 0
    replicas: 0
    unavailableReplicas: 0
    updatedReplicas: 0
- apiVersion: v1
  kind: Service
  metadata:
    annotations:
      openshift.io/generated-by: OpenShiftNewApp
    creationTimestamp: null
    labels:
      app: ${APP_NAME}
    name: ${APP_NAME}
  spec:
    ports:
    - name: 8080-tcp
      port: 8080
      protocol: TCP
      targetPort: 8080
    selector:
      app: ${APP_NAME}
      deploymentconfig: ${APP_NAME}
    sessionAffinity: None
    type: ClusterIP
  status:
    loadBalancer: {}
- apiVersion: v1
  kind: Route
  metadata:
    annotations:
      openshift.io/host.generated: "true"
    creationTimestamp: null
    labels:
      app: ${APP_NAME}
    name: ${APP_NAME}
  spec:
    host: ${APP_NAME}-ericproject2.app.example.com
    port:
      targetPort: 8080-tcp
    to:
      kind: Service
      name: ${APP_NAME}
      weight: 100
    wildcardPolicy: None
  status:
    ingress:
    - conditions:
      - lastTransitionTime: 2019-03-07T15:16:35Z
        status: "True"
        type: Admitted
      host: ${APP_NAME}-ericproject2.app.example.com
      routerName: router
      wildcardPolicy: None
parameters:
- name: APP_NAME
  displayName: application name
  value: myapp
```

```shell
oc create -f eric2tomcat-project2.yaml
```

- Create an application from the template

```shell
oc new-app eric2tomcat
```

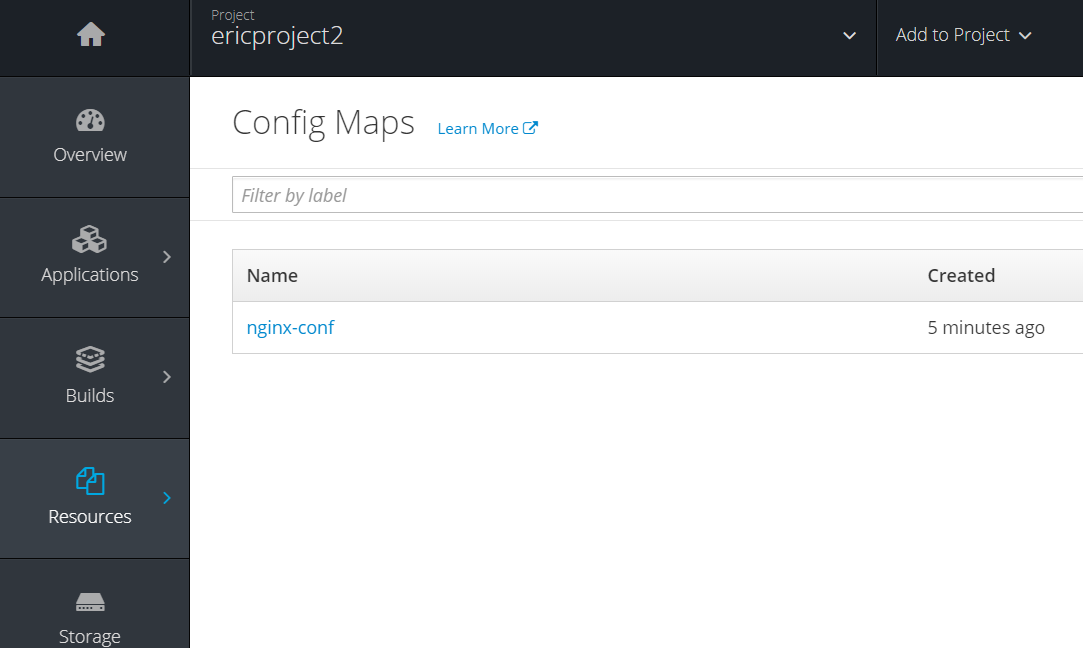
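The template defines an `APP_NAME` parameter (default `myapp`). You can therefore instantiate it several times under different names by overriding that parameter with `-p`. Here is a sketch with a hypothetical helper name. The template as written hard-codes `ericproject2` in both the route host and the image-change trigger namespace, so it only makes sense inside that project.

```shell
#!/usr/bin/env bash
# Hypothetical helper: instantiate a template once per requested APP_NAME.
# Usage: new_app_from_template TEMPLATE NAME [NAME ...]
new_app_from_template() {
  local template="$1"
  shift
  local name
  for name in "$@"; do
    # -p overrides a template parameter; here APP_NAME (default "myapp")
    oc new-app "$template" -p "APP_NAME=$name" || return 1
  done
}
```

Example (the names are placeholders): `new_app_from_template eric2tomcat web1 web2`.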

- Create a ConfigMap

```
[root@master ~]# cat nginx.conf
user nginx;
worker_processes 1;

error_log /var/log/nginx/error.log warn;
pid /var/run/nginx.pid;

events {
    worker_connections 1024;
}

http {
    include /etc/nginx/mime.types;
    default_type application/octet-stream;

    log_format main '$remote_addr - $remote_user [$time_local] "$request" '
                    '$status $body_bytes_sent "$http_referer" '
                    '"$http_user_agent" "$http_x_forwarded_for"';

    access_log /var/log/nginx/access.log main;

    sendfile on;
    #tcp_nopush on;

    keepalive_timeout 65;

    #gzip on;

    include /etc/nginx/conf.d/*.conf;
}
```

```shell
oc create configmap nginx-conf --from-file=nginx.conf
```

- GlusterFS PV settings

```
[root@master ~]# cat gluster-endpoints.yaml
apiVersion: v1
kind: Endpoints
metadata:
  name: gluster-endpoints
subsets:
- addresses:
  - ip: 192.168.56.107
  ports:
  - port: 1
    protocol: TCP
- addresses:
  - ip: 192.168.56.108
  ports:
  - port: 1
    protocol: TCP
```

```
[root@master ~]# cat gluster-service.yaml
apiVersion: v1
kind: Service
metadata:
  name: gluster-service
spec:
  ports:
  - port: 1
```

```
[root@master ~]# cat gluster-pv.yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: gluster-pv
spec:
  capacity:
    storage: 10Gi
  accessModes:
  - ReadWriteMany
  glusterfs:
    endpoints: gluster-endpoints
    path: /gv0
    readOnly: false
  persistentVolumeReclaimPolicy: Retain
```

```
[root@master ~]# cat tomcat-claim.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: tomcat-claim
spec:
  accessModes:
  - ReadWriteMany
  resources:
    requests:
      storage: 1Gi
```
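The four files above are then created with `oc create -f`. Creating them in the listed order keeps the dependencies obvious. The Endpoints and Service come first, then the PV that names the Endpoints, and last the claim. A claim created earlier would simply stay Pending until a matching PV exists. This is a minimal sketch. `create_gluster_storage` is a hypothetical name, and it assumes the four files sit in one directory under the names shown. Creating the cluster-scoped PV needs cluster-admin rights.

```shell
#!/usr/bin/env bash
# Hypothetical helper: create the GlusterFS objects above in a fixed order.
# Assumes the four YAML files are in DIR under the names used in this test.
# Usage: create_gluster_storage [DIR]
create_gluster_storage() {
  local dir="${1:-.}" f
  # Endpoints and Service first, then the PV that names the Endpoints,
  # then the claim that binds to the PV.
  for f in gluster-endpoints.yaml gluster-service.yaml gluster-pv.yaml tomcat-claim.yaml; do
    if [ ! -f "$dir/$f" ]; then
      echo "missing $dir/$f" >&2
      return 1
    fi
    oc create -f "$dir/$f" || return 1
  done
}
```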

- Log in as alice and create a project

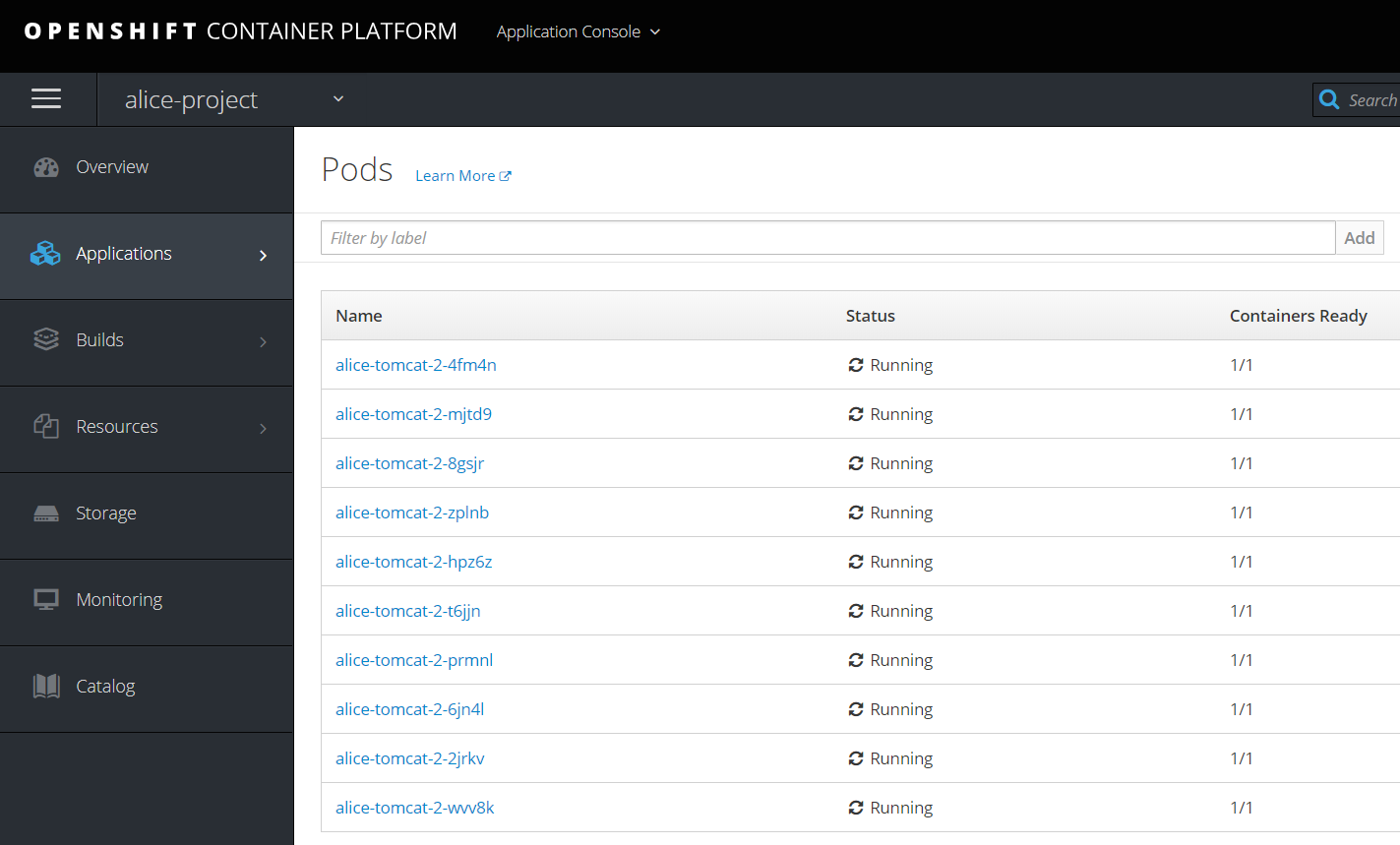

```shell
oc new-project alice-project
oc import-image tomcat:8-slim --from=registry.example.com/tomcat:8-slim --insecure --confirm
oc new-app tomcat:8-slim --name=alice-tomcat
oc expose service alice-tomcat
oc scale dc/alice-tomcat --replicas=10
```

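4. Export the resources

The following steps are performed on the OpenShift 3.6 cluster.

First download and install jq. Both the cluster node that runs the export and the cluster node that runs the import need it: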

https://stedolan.github.io/jq/
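Both the export and the import scripts depend on jq, so it helps to fail early on a node where it is missing. Below is a minimal pre-flight check. `require_cmd` is a hypothetical helper and is not part of the scripts.

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight check: report every missing tool, then fail.
# Usage: require_cmd jq oc
require_cmd() {
  local cmd missing=0
  for cmd in "$@"; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      echo "required command not found: $cmd" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

Example: `require_cmd jq oc || exit 1` at the top of a wrapper script.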

Run the export:

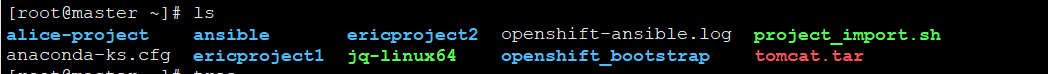

```shell
./project_export.sh ericproject1
./project_export.sh ericproject2
./project_export.sh alice-project
```

When the export finishes, the current directory contains these three directories:

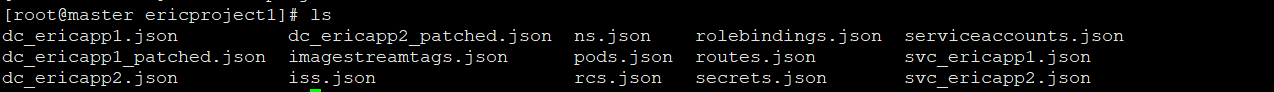

After the export, change into each directory to inspect its contents.
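For a quick check without opening every file, a small helper can list the JSON files in an export directory with their sizes. This sketch relies on the ericproject2 export log in this section: it assumes each directory holds an `ns.json` plus one file per resource type. `summarize_export` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: list the JSON files of one exported project directory
# with their sizes in bytes. Fails if ns.json (the namespace definition the
# export writes first) is missing.
# Usage: summarize_export DIR
summarize_export() {
  local dir="$1" f
  [ -f "$dir/ns.json" ] || { echo "no ns.json in $dir" >&2; return 1; }
  for f in "$dir"/*.json; do
    printf '%s %s\n' "$(basename "$f")" "$(wc -c < "$f" | tr -d ' ')"
  done
}
```

Example: `summarize_export ericproject2`.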

I also wrote a simple script to export projects in batch:

```
[root@master ~]# cat all_export.sh
#!/bin/bash
# Export every project that is not in the system project list below
systemproject=(kube-system kube-public kube-service-catalog default logging management-infra openshift openshift-infra)

for i in $(oc get projects | awk 'NR>1{print $1}'); do
  result="true"
  for j in "${systemproject[@]}"; do
    if [ "$i" == "$j" ]; then
      result="false"   # system project, skip it
      break
    fi
  done
  if [ "$result" == "true" ]; then
    echo "$i"
    ./project_export.sh "$i"
  fi
done
```
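The script above checks each project against a fixed list. On 3.11 the set of system projects is longer: openshift-console, openshift-monitoring, openshift-sdn and others show up in the project list in section 6. A variant that also skips the `kube-` and `openshift-` prefixes may therefore be easier to reuse on either cluster. This is a sketch. The prefix rule is an assumption, and it would also skip any user project whose name happens to start with `openshift-` or `kube-`.

```shell
#!/usr/bin/env bash
# Hypothetical filter: read project names on stdin, print only user projects.
filter_user_projects() {
  local name
  while read -r name; do
    case "$name" in
      ""|default|logging|management-infra|openshift|openshift-infra) ;;  # fixed system names
      kube-*|openshift-*) ;;                                            # system prefixes (assumption)
      *) printf '%s\n' "$name" ;;
    esac
  done
}
```

Example: `for p in $(oc get projects | awk 'NR>1{print $1}' | filter_user_projects); do ./project_export.sh "$p"; done`.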

Here is an excerpt of the export output for ericproject2:

```
ericproject2
###########
# WARNING #
###########
This script is distributed WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND
Beware ImageStreams objects are not importables due to the way they work
See https://github.com/openshift/openshift-ansible-contrib/issues/967
for more information
Exporting namespace to ericproject2/ns.json
Exporting 'rolebindings' resources to ericproject2/rolebindings.json
Exporting 'serviceaccounts' resources to ericproject2/serviceaccounts.json
Exporting 'secrets' resources to ericproject2/secrets.json
Exporting deploymentconfigs to ericproject2/dc_*.json
Patching DC...
Patching DC...
Exporting 'bc' resources to ericproject2/bcs.json
Skipped: list empty
Exporting 'builds' resources to ericproject2/builds.json
Skipped: list empty
Exporting 'is' resources to ericproject2/iss.json
Exporting 'imagestreamtags' resources to ericproject2/imagestreamtags.json
Exporting 'rc' resources to ericproject2/rcs.json
Exporting services to ericproject2/svc_*.json
Exporting 'po' resources to ericproject2/pods.json
Exporting 'podpreset' resources to ericproject2/podpreset.json
the server doesn't have a resource type "podpreset"
Skipped: no data
Exporting 'cm' resources to ericproject2/cms.json
Exporting 'egressnetworkpolicies' resources to ericproject2/egressnetworkpolicies.json
Skipped: list empty
Exporting 'rolebindingrestrictions' resources to ericproject2/rolebindingrestrictions.json
Skipped: list empty
Exporting 'cm' resources to ericproject2/cms.json
Exporting 'egressnetworkpolicies' resources to ericproject2/egressnetworkpolicies.json
Skipped: list empty
Exporting 'rolebindingrestrictions' resources to ericproject2/rolebindingrestrictions.json
Skipped: list empty
Exporting 'limitranges' resources to ericproject2/limitranges.json
Skipped: list empty
Exporting 'resourcequotas' resources to ericproject2/resourcequotas.json
Skipped: list empty
Exporting 'pvc' resources to ericproject2/pvcs.json
Skipped: list empty
Exporting 'pvc' resources to ericproject2/pvcs_attachment.json
Skipped: list empty
Exporting 'routes' resources to ericproject2/routes.json
Exporting 'templates' resources to ericproject2/templates.json
Exporting 'cronjobs' resources to ericproject2/cronjobs.json
Skipped: list empty
Exporting 'statefulsets' resources to ericproject2/statefulsets.json
Skipped: list empty
Exporting 'hpa' resources to ericproject2/hpas.json
Skipped: list empty
Exporting 'deploy' resources to ericproject2/deployments.json
Skipped: list empty
Exporting 'replicasets' resources to ericproject2/replicasets.json
Skipped: list empty
Exporting 'poddisruptionbudget' resources to ericproject2/poddisruptionbudget.json
Skipped: list empty
Exporting 'daemonset' resources to ericproject2/daemonset.json
Skipped: list empty
```

5. Run the import

Copy all three directories to the node that runs the import, on the OpenShift 3.11 cluster.
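One way to do the copy is to bundle the directories into a single archive first. This is a minimal sketch. `pack_exports` is a hypothetical name, and the archive name in the example is a placeholder.

```shell
#!/usr/bin/env bash
# Hypothetical helper: bundle export directories into one gzip'd tarball.
# Usage: pack_exports ARCHIVE DIR [DIR ...]
pack_exports() {
  local archive="$1"
  shift
  tar -czf "$archive" "$@"
}
```

Example: `pack_exports exports.tar.gz ericproject1 ericproject2 alice-project`. Copy the archive to the 3.11 node, for example with `scp exports.tar.gz root@master.example.com:`, then unpack it there with `tar -xzf exports.tar.gz`.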

- First import the image

```shell
docker load -i tomcat.tar
docker tag docker.io/tomcat:8-slim registry.example.com/tomcat:8-slim
docker push registry.example.com/tomcat:8-slim
```

- Log in as admin, then run:

```shell
./project_import.sh ericproject1
./project_import.sh ericproject2
./project_import.sh alice-project
```
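Mirroring all_export.sh, you can also run the imports in a loop over the exported directories. This sketch assumes you run it from the directory that holds both the export directories and project_import.sh, and it treats any subdirectory with an ns.json as an export. The `IMPORT_SCRIPT` variable is hypothetical and exists only so that the script path can be overridden.

```shell
#!/usr/bin/env bash
# Hypothetical batch import: run the import script for every subdirectory of
# the current directory that contains an ns.json.
# IMPORT_SCRIPT defaults to ./project_import.sh.
import_all() {
  local script="${IMPORT_SCRIPT:-./project_import.sh}" d
  for d in */; do
    d="${d%/}"
    [ -f "$d/ns.json" ] || continue   # not an export directory
    "$script" "$d" || echo "import failed: $d" >&2
  done
}
```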

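6. Verification after restoring to 3.11

- Users

```
[root@master ~]# oc get users
NAME      UID                                    FULL NAME   IDENTITIES
admin     3d7951e7-422a-11e9-90df-080027dc991a               htpasswd_auth:admin
```

The import does not touch users at all. In real environments, however, OpenShift clusters are usually connected to LDAP or another external identity source, so this matters little.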

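- Projects

```
[root@master ~]# oc projects
You have access to the following projects and can switch between them with 'oc project <projectname>':

  * alice-project
    default
    ericproject1
    ericproject2
    kube-public
    kube-system
    management-infra
    openshift
    openshift-console
    openshift-infra
    openshift-logging
    openshift-metrics-server
    openshift-monitoring
    openshift-node
    openshift-sdn
    openshift-web-console

Using project "alice-project" on server "https://master.example.com:8443".
```

Logged in as admin, you can see all of the imported projects. Inside the projects, however, some DeploymentConfigs stay in the deploying phase with no running pods. This is caused by the ImageStream problem that the export script warns about.

Run the following commands to redeploy the instances:

```shell
# Remove the stuck deployer pod, then start a new deployment
oc delete pod alice-tomcat-1-deploy
oc rollout latest dc/alice-tomcat
```

After that, all instances come up successfully.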
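When several DeploymentConfigs are stuck, the two commands above can be applied to the whole current project. This is only a sketch, and it makes two assumptions. It treats every pod whose name ends in `-deploy` as a leftover deployer pod. It also rolls out every DeploymentConfig in the project, not just the stuck ones. Deleting the deployer pod of a rollout that is actually progressing would interrupt that rollout.

```shell
#!/usr/bin/env bash
# Hypothetical generalisation of the two commands above, for the current project.
redeploy_all_dcs() {
  local pod dc
  # Delete leftover deployer pods (names ending in "-deploy")
  for pod in $(oc get pods -o name | grep -- '-deploy$'); do
    oc delete "$pod"
  done
  # Start a new rollout for every DeploymentConfig
  for dc in $(oc get dc -o name); do
    oc rollout latest "$dc"
  done
}
```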

- Labels

Our node label was not carried over to the new environment:

```
[root@master ~]# oc get nodes --show-labels
NAME                 STATUS    ROLES     AGE       VERSION           LABELS
master.example.com   Ready     master    2d        v1.11.0+d4cacc0   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/hostname=master.example.com,node-role.kubernetes.io/master=true
node1.example.com    Ready     infra     2d        v1.11.0+d4cacc0   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/hostname=node1.example.com,node-role.kubernetes.io/infra=true
node2.example.com    Ready     compute   2d        v1.11.0+d4cacc0   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/hostname=node2.example.com,node-role.kubernetes.io/compute=true
```
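Labels have to be reapplied by hand. One option is to save the output of `oc get nodes --show-labels --no-headers` on the 3.6 cluster before the migration and turn it into `oc label` commands for review. This sketch assumes the following:

* The labels are in the last column.
* Labels whose key contains `kubernetes.io/` are built in and can be skipped.
* Node names are the same in both clusters. If they are not, edit the generated commands.

region and zone are still printed, so decide per node whether they belong in the new cluster.

```shell
#!/usr/bin/env bash
# Hypothetical helper: turn saved `oc get nodes --show-labels` output (stdin)
# into `oc label node` commands. It only prints them; nothing is executed.
node_label_commands() {
  awk '
    NR == 1 && $1 == "NAME" { next }   # tolerate a header line
    $NF == "<none>" { next }           # node without labels
    {
      n = split($NF, labels, ",")      # labels are the last column
      for (i = 1; i <= n; i++) {
        key = labels[i]
        sub(/=.*/, "", key)            # keep only the label key
        if (key ~ /kubernetes\.io\//) continue   # built-in label, skip
        print "oc label node " $1 " " labels[i]
      }
    }
  '
}
```

Example: `node_label_commands < node-labels.txt`. For the node2 line shown in section 3, this prints `oc label node node2.example.com application=eric-tomcat` and then one command each for `region=infra` and `zone=default`.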

- RBAC permissions

```
[root@master ~]# oc get rolebinding
NAME                    ROLE                    USERS     GROUPS                                 SERVICE ACCOUNTS   SUBJECTS
admin                   /admin                  alice
system:deployers        /system:deployer                                                         deployer
system:image-builders   /system:image-builder                                                    builder
system:image-pullers    /system:image-puller              system:serviceaccounts:alice-project
[root@master ~]# oc project ericproject1
Now using project "ericproject1" on server "https://master.example.com:8443".
[root@master ~]# oc get rolebinding
NAME                    ROLE                    USERS     GROUPS                                 SERVICE ACCOUNTS   SUBJECTS
admin                   /admin                  eric
system:deployers        /system:deployer                                                         deployer
system:image-builders   /system:image-builder                                                    builder
system:image-pullers    /system:image-puller              system:serviceaccounts:ericproject1
[root@master ~]# oc project ericproject2
Now using project "ericproject2" on server "https://master.example.com:8443".
[root@master ~]# oc get rolebinding
NAME                    ROLE                    USERS     GROUPS                                 SERVICE ACCOUNTS   SUBJECTS
admin                   /admin                  eric
system:deployers        /system:deployer                                                         deployer
system:image-builders   /system:image-builder                                                    builder
system:image-pullers    /system:image-puller              system:serviceaccounts:ericproject2
[root@master ~]#
```

All project role bindings were preserved.

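7. Upgrade recommendations

The new cluster will very likely have a different number of nodes from the original one, so simply backing up etcd and restoring it carries considerable risk.

In this situation, migrating by exporting and importing projects is a comparatively safe approach.

Points to note: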

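- Users are not exported, but their permissions in OpenShift (role bindings) are preserved.

- Node labels are not exported.

- After the import, some DeploymentConfigs need a manual rollout.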

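- With GlusterFS, the gluster-endpoints resource of each project was not saved. This is probably because it is not associated with gluster-service. A Service without a selector is only linked to the Endpoints object of the same name, and here the two names differ.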

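- A PV is a cluster-wide resource rather than a project resource, so create the PVs before importing the projects.

- When a pod fails to start, the cause is often related to mounting storage.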
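You can check the GlusterFS point before migrating. A Service without a selector is linked to the Endpoints object that has the same name. In this test the Endpoints are called gluster-endpoints while the Service is called gluster-service. The sketch below compares the two `metadata.name` values. It is a plain-text check that assumes the simple two-space YAML layout of the files in section 3, not a real YAML parser, and the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the top-level metadata.name of a simple YAML file.
yaml_metadata_name() {
  awk '
    /^metadata:/ { in_meta = 1; next }
    in_meta && /^[^ ]/ { in_meta = 0 }       # left the metadata block
    in_meta && /^  name:/ { print $2; exit }
  ' "$1"
}

# Hypothetical check: fail if the Endpoints and Service names differ.
# Usage: check_gluster_names ENDPOINTS_YAML SERVICE_YAML
check_gluster_names() {
  local ep svc
  ep="$(yaml_metadata_name "$1")"
  svc="$(yaml_metadata_name "$2")"
  if [ "$ep" != "$svc" ]; then
    echo "Endpoints '$ep' and Service '$svc' differ" >&2
    return 1
  fi
}
```

Example: `check_gluster_names gluster-endpoints.yaml gluster-service.yaml` fails for the files used in this test.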

浙公网安备 33010602011771号
