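Chapter 10: The Scrapy Framework

The Scrapy framework

- What is a framework?

- A project template that bundles many features and is general enough to be reused across projects.

- How do you learn a framework?

- By studying in detail how to use each feature the framework encapsulates.

- What is Scrapy?

- A well-known, ready-made crawling framework. Features: high-performance persistent storage, asynchronous downloading, high-performance data parsing, and distributed crawling (the last one through extensions such as scrapy-redis).

- Basic usage of the Scrapy framework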

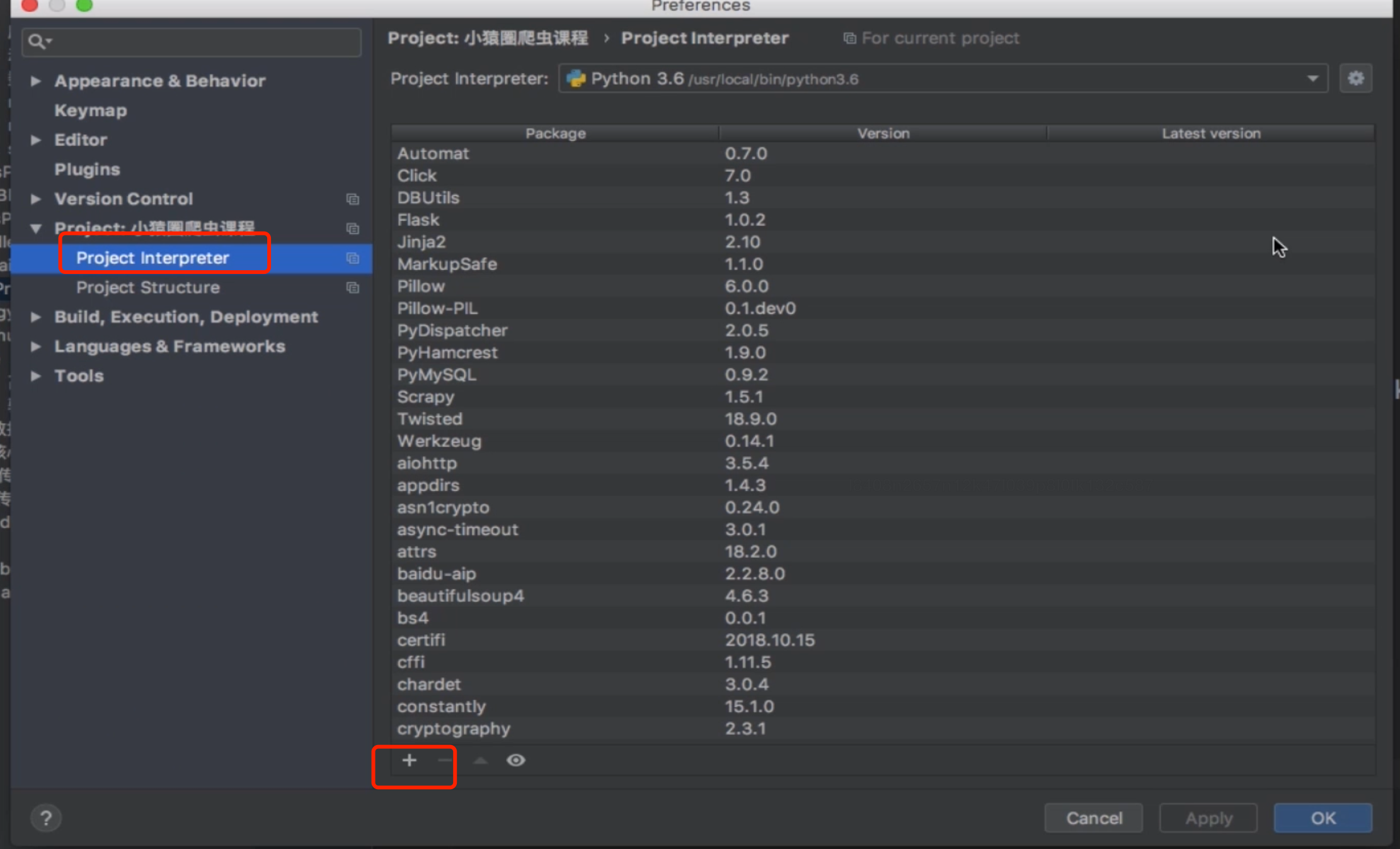

- Installing the environment:

- macOS or Linux: pip install scrapy

- Windows:

- pip install wheel

- Download Twisted from http://www.lfd.uci.edu/~gohlke/pythonlibs/#twisted

- Install Twisted: pip install Twisted-17.1.0-cp36-cp36m-win_amd64.whl (cp36 means the wheel targets Python 3.6; run the command from a cmd window opened in the directory that holds the file)

- pip install pywin32

- pip install scrapy

Test: type scrapy in a terminal. If no error is reported, the installation succeeded.

- Create a project: scrapy startproject xxxPro

- Enter the project: cd xxxPro

- Create a spider file named spiderName in the spiders subdirectory:

- scrapy genspider spiderName www.xxx.com

- Run the project:

- scrapy crawl spiderName

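- Scrapy basics (spider file and settings)

first.py:

    # -*- coding: utf-8 -*-
    import scrapy


    class FirstSpider(scrapy.Spider):
        # Spider name: the unique identifier used to locate and run this spider file
        name = 'first'
        # Allowed domains: requests to other domains are filtered out,
        # so only pages under these domains are crawled
        allowed_domains = ['www.qiushibaike.com']
        # Start URLs: the pages the project crawls first; the list may hold several URLs
        start_urls = ['http://www.qiushibaike.com/']

        # Parsing method: extracts the wanted content from each downloaded page.
        # response: the response object returned once a start-URL request succeeds.
        # Return value: an iterable or None. parse is called once per response,
        # so once for every URL in start_urls.
        def parse(self, response):
            print(response.text)  # the page source held by the response object

Settings file: do not obey robots.txt. With the setting enabled, Scrapy refuses to request any URL the site's robots.txt disallows, which on many sites covers the pages you want to crawl.

    # Obey robots.txt rules (disabled here)
    ROBOTSTXT_OBEY = False
    # Only show log messages of the given level in the terminal
    LOG_LEVEL = 'ERROR'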

- Parsing data with Scrapy

Example (create the qiubai project):

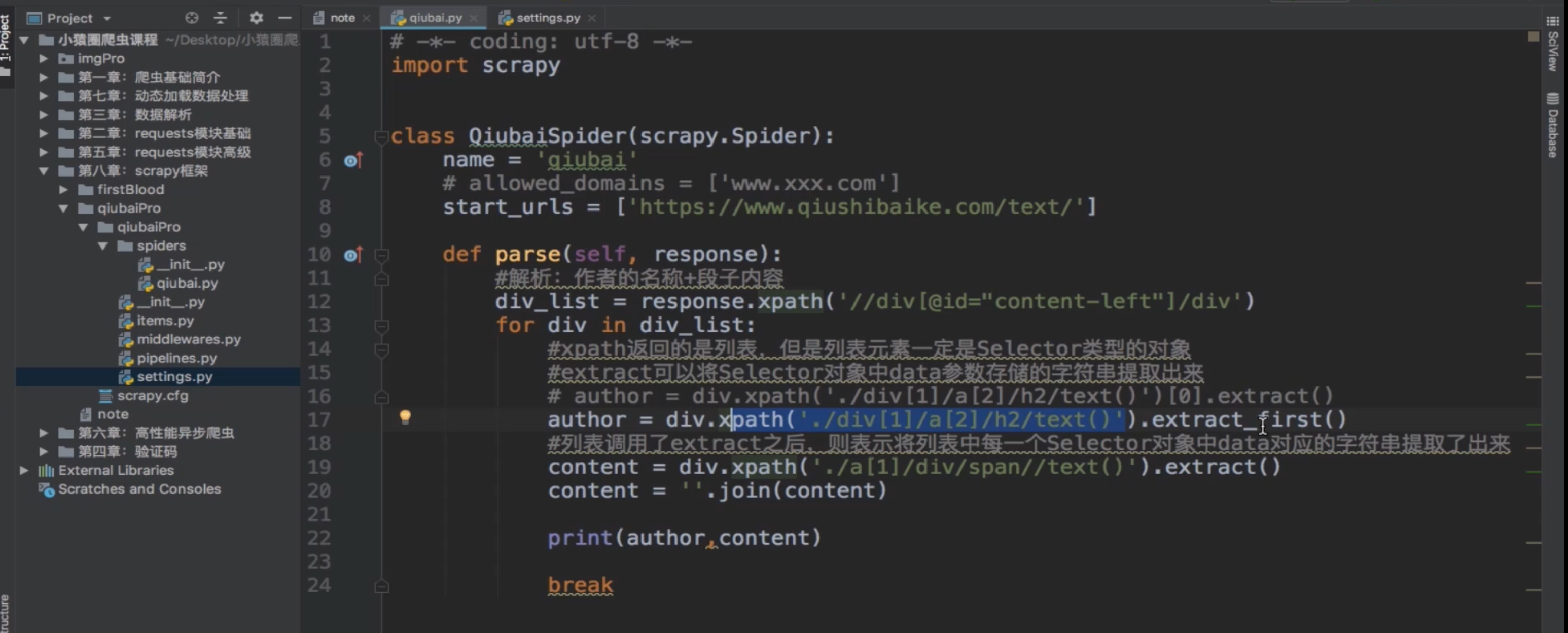

qiubai.py (data parsing):

    # -*- coding: utf-8 -*-
    import scrapy


    class QiubaiSpider(scrapy.Spider):
        name = 'qiubai'
        # allowed_domains = ['www.xxx.com']
        start_urls = ['https://www.qiushibaike.com/text/']

        def parse(self, response):
            # Parse the author name and the joke text
            div_list = response.xpath('//div[@id="content-left"]/div')
            for div in div_list:
                # xpath() returns a list whose elements are always Selector objects.
                # extract() pulls out the string stored in a Selector's data attribute.
                # author = div.xpath('./div[1]/a[2]/h2/text()')[0].extract()
                author = div.xpath('./div[1]/a[2]/h2/text()').extract_first()
                # Calling extract() on the list extracts the data string of every Selector in it
                content = div.xpath('./a[1]/div/span//text()').extract()
                content = ''.join(content)
                print(author, content)
                break  # stop after the first entry while testing
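The difference between taking the first match and joining every text node can be tried without a live site. The following is a minimal sketch that uses the standard library's ElementTree instead of Scrapy selectors, so the calls are analogues of extract_first() and extract(), not the Scrapy API itself. The SAMPLE markup and the parse_entries helper are hypothetical.

```python
import xml.etree.ElementTree as ET

# Hypothetical, well-formed stand-in for one entry of the listing page.
SAMPLE = """
<div id="content-left">
  <div>
    <div><a href="#">avatar</a><a href="#"><h2>alice</h2></a></div>
    <a href="#"><div><span>first line<br/>second line</span></div></a>
  </div>
</div>
"""


def parse_entries(markup):
    """Return one dict per entry, mirroring the author/content parsing above."""
    root = ET.fromstring(markup)
    entries = []
    for div in root.findall("./div"):
        # First match only, like extract_first(): None when nothing matches.
        author = div.findtext("./div[1]/a[2]/h2")
        # Every text node under the span, like extract() followed by ''.join().
        span = div.find("./a[1]/div/span")
        content = "".join(span.itertext()) if span is not None else ""
        entries.append({"author": author, "content": content})
    return entries


entries = parse_entries(SAMPLE)
```

The join matters because the joke text is split across several text nodes by the br tag, so a single-node lookup would return only the first fragment.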

settings.py (settings):

    # Crawl responsibly by identifying yourself (and your website) on the user-agent
    # (the value below was copied from the browser's network capture)
    USER_AGENT = 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_12_0) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/74.0.3729.169 Safari/537.36'

    # Obey robots.txt rules
    ROBOTSTXT_OBEY = False

    LOG_LEVEL = 'ERROR'

- Persistent storage with Scrapy (two approaches)

- Based on a terminal command:

- Requirement: only the return value of the parse method can be stored, and only in a local file

- Note: the output file type must be one of 'json', 'jsonlines', 'jl', 'csv', 'xml', 'marshal', 'pickle'

- Command: scrapy crawl xxx -o filePath

- Advantage: concise, efficient, and convenient

- Drawback: quite limited (data can only be stored in files with the listed extensions)

    import scrapy


    class QiubaiSpider(scrapy.Spider):
        name = 'qiubai'
        # allowed_domains = ['www.xxx.com']
        start_urls = ['https://www.qiushibaike.com/text/']

        def parse(self, response):
            # Parse the author name and the joke text
            div_list = response.xpath('//div[@id="content-left"]/div')
            all_data = []  # holds everything that was parsed
            for div in div_list:
                # xpath() returns a list whose elements are always Selector objects.
                # extract() pulls out the string stored in a Selector's data attribute.
                # author = div.xpath('./div[1]/a[2]/h2/text()')[0].extract()
                author = div.xpath('./div[1]/a[2]/h2/text()').extract_first()
                # Calling extract() on the list extracts the data string of every Selector in it
                content = div.xpath('./a[1]/div/span//text()').extract()
                content = ''.join(content)
                dic = {
                    'author': author,
                    'content': content
                }
                all_data.append(dic)
            return all_data

Terminal command:

    scrapy crawl qiubai -o ./qiubai.csv
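To see roughly what ends up in the CSV file, the list of dicts returned by parse can be written with the standard csv module. This is only a sketch of the resulting format, not Scrapy's exporter. The export_csv helper and the sample rows are hypothetical.

```python
import csv
import io


def export_csv(rows, stream):
    """Write a list of dicts as CSV, using the keys of the first row as the header."""
    if not rows:
        return
    writer = csv.DictWriter(stream, fieldnames=list(rows[0].keys()))
    writer.writeheader()
    writer.writerows(rows)


# Hypothetical data shaped like the all_data list built in parse.
all_data = [
    {"author": "alice", "content": "first joke"},
    {"author": "bob", "content": "second, with a comma"},
]
buffer = io.StringIO()
export_csv(all_data, buffer)
csv_text = buffer.getvalue()
```

Each dict becomes one row, and values that contain commas are quoted automatically.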

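- Based on pipelines:

- Coding steps:

- Parse the data

- Define the corresponding fields in the item class

- Wrap the parsed data in an item object

- Submit the item object to the pipeline for persistent storage

- In the pipeline class, process_item must persist the data held by each item object it receives

- Enable the pipeline in the settings file

- Advantage:

- Highly general.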

qiubai.py:

    import scrapy
    from qiubaiPro.items import QiubaiproItem


    class QiubaiSpider(scrapy.Spider):
        name = 'qiubai'
        # allowed_domains = ['www.xxx.com']
        start_urls = ['https://www.qiushibaike.com/text/']

        # Step 1: parse the data
        def parse(self, response):
            # Parse the author name and the joke text
            div_list = response.xpath('//div[@id="content-left"]/div')
            for div in div_list:
                # xpath() returns a list whose elements are always Selector objects.
                # extract() pulls out the string stored in a Selector's data attribute.
                # author = div.xpath('./div[1]/a[2]/h2/text()')[0].extract()
                author = div.xpath('./div[1]/a[2]/h2/text() | ./div[1]/span/h2/text()').extract_first()
                # Calling extract() on the list extracts the data string of every Selector in it
                content = div.xpath('./a[1]/div/span//text()').extract()
                content = ''.join(content)

                # Step 3: wrap the parsed data in an item object
                item = QiubaiproItem()
                item['author'] = author
                item['content'] = content

                # Step 4: submit the item object to the pipeline for persistent storage
                yield item  # hands the item to the pipeline

items.py:

    # Step 2: define the fields in the item class
    import scrapy


    class QiubaiproItem(scrapy.Item):
        # define the fields for your item here like:
        author = scrapy.Field()
        content = scrapy.Field()

pipelines.py:

    class QiubaiproPipeline(object):
        fp = None  # file handle

        # Hook that Scrapy calls once, when the spider starts
        def open_spider(self, spider):
            print('Spider started ...')
            self.fp = open('./qiubai.txt', 'w', encoding='utf-8')  # open the file

        # Handles item objects: receives each item submitted by the spider
        # and is called once per item
        def process_item(self, item, spider):
            author = item['author']
            content = item['content']
            self.fp.write(author + ':' + content + '\n')
            return item  # passed on to the next pipeline class to run

        # Hook that Scrapy calls once, when the spider finishes
        def close_spider(self, spider):
            print('Spider finished!')
            self.fp.close()

Command:

    scrapy crawl qiubai

- Interview question: how do you store the same data on two different platforms through pipelines?

- Each pipeline class in the pipelines file stores the data on one platform

- The item submitted by the spider is received only by the first pipeline class to run

- return item in process_item passes the item on to the next pipeline class to run

pipelines.py:

    # -*- coding: utf-8 -*-
    import pymysql

    # Store one copy of the scraped data in a local file and one in a database.


    # QiubaiproPipeline: local file
    class QiubaiproPipeline(object):
        fp = None  # file handle

        # Hook that Scrapy calls once, when the spider starts
        def open_spider(self, spider):
            print('Spider started ...')
            self.fp = open('./qiubai.txt', 'w', encoding='utf-8')  # open the file

        # Handles item objects: receives each item submitted by the spider
        # and is called once per item
        def process_item(self, item, spider):
            author = item['author']
            content = item['content']
            self.fp.write(author + ':' + content + '\n')
            return item  # passed on to the next pipeline class to run

        # Hook that Scrapy calls once, when the spider finishes
        def close_spider(self, spider):
            print('Spider finished!')
            self.fp.close()


    # Each pipeline class stores the data on one platform or medium
    class mysqlPileLine(object):
        conn = None
        cursor = None

        def open_spider(self, spider):
            # Connect to the database and create the cursor once
            self.conn = pymysql.Connect(host='127.0.0.1', port=3306, user='root',
                                        password='123456', db='qiubai', charset='utf8')
            self.cursor = self.conn.cursor()

        def process_item(self, item, spider):
            try:
                # Parameterized insert: the driver escapes quotes inside the values
                self.cursor.execute('insert into qiubai values(%s,%s)',
                                    (item['author'], item['content']))
                self.conn.commit()
            except Exception as e:
                print(e)
                self.conn.rollback()  # roll back when the insert fails
            return item  # passed on to the next pipeline class to run

        def close_spider(self, spider):
            self.cursor.close()
            self.conn.close()

    # Which pipeline class receives the item submitted by the spider?
    # The one with the highest priority, that is, the one that runs first.
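The database pipeline can be tried without a MySQL server. The sketch below swaps in the standard library's sqlite3 (an assumption made for portability, not what the course uses) and keeps the same three hooks. The class name SqlitePipelineSketch and the sample item are hypothetical. Note that sqlite3 uses ? placeholders where pymysql uses %s.

```python
import sqlite3


class SqlitePipelineSketch:
    """Hypothetical stand-in for the MySQL pipeline, with the same three hooks."""

    def open_spider(self, spider):
        # An in-memory database keeps the sketch self-contained.
        self.conn = sqlite3.connect(":memory:")
        self.conn.execute("CREATE TABLE qiubai (author TEXT, content TEXT)")

    def process_item(self, item, spider):
        try:
            # Placeholders let the driver handle quotes inside the values.
            self.conn.execute(
                "INSERT INTO qiubai VALUES (?, ?)",
                (item["author"], item["content"]),
            )
            self.conn.commit()
        except sqlite3.Error:
            self.conn.rollback()
        return item  # hand the item to the next pipeline

    def close_spider(self, spider):
        self.conn.close()


pipeline = SqlitePipelineSketch()
pipeline.open_spider(spider=None)
pipeline.process_item({"author": 'say "hi"', "content": "it's fine"}, spider=None)
stored = pipeline.conn.execute("SELECT author, content FROM qiubai").fetchall()
```

Passing the values separately from the SQL text is what keeps quotes in the scraped text from breaking the statement.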

settings.py:

    ITEM_PIPELINES = {
        'qiubaiPro.pipelines.QiubaiproPipeline': 300,
        'qiubaiPro.pipelines.mysqlPileLine': 301,
        # 300 and 301 are priorities: the smaller the number, the higher the priority
    }
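How the priorities and return item work together can be shown with a toy model. This is a sketch of the ordering rule only, not Scrapy's implementation. RecordingPipeline and run_pipelines are hypothetical names.

```python
class RecordingPipeline:
    """Toy pipeline that records its label each time it processes an item."""

    def __init__(self, label, log):
        self.label = label
        self.log = log

    def process_item(self, item, spider):
        self.log.append(self.label)
        return item  # hand the item to the next pipeline in line


def run_pipelines(item, priorities, spider=None):
    """Call each pipeline in ascending priority order, chaining the return values."""
    for pipeline in sorted(priorities, key=priorities.get):
        item = pipeline.process_item(item, spider)
    return item


log = []
file_pipeline = RecordingPipeline("file", log)
mysql_pipeline = RecordingPipeline("mysql", log)
# Insertion order does not matter; only the numbers decide who runs first.
priorities = {mysql_pipeline: 301, file_pipeline: 300}
result = run_pipelines({"author": "alice", "content": "hi"}, priorities)
```

In this model, a pipeline that forgets to return the item would pass None to the one after it, which is why every process_item above ends with return item.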

- Whole-site crawling based on Spider

- Whole-site crawling means crawling the data of every page number under one section of a site

- Requirement: crawl the names of the photos on the 521609.com campus-photo site

- Ways to implement it:

- Add the URL of every page to the start_urls list (not recommended)

- Send the requests manually (recommended)

- Sending a request manually:

- yield scrapy.Request(url,callback): callback is the method dedicated to parsing the data

Create a new project named xiaohuaPro

spiders/xiaohua.py:

    # -*- coding: utf-8 -*-
    import scrapy


    # Scrape the title of every picture on the site
    class XiaohuaSpider(scrapy.Spider):
        name = 'xiaohua'
        # allowed_domains = ['www.xxx.com']
        # First page
        start_urls = ['http://www.521609.com/meinvxiaohua/']
        # Generic URL template for page 2 onward (never modified)
        url = 'http://www.521609.com/meinvxiaohua/list12%d.html'
        page_num = 2

        def parse(self, response):
            li_list = response.xpath('//*[@id="content"]/div[2]/div[2]/ul/li')
            # Iterate over the li tags
            for li in li_list:
                # Picture name
                img_name = li.xpath('./a[2]/b/text() | ./a[2]/text()').extract_first()
                print(img_name)

            if self.page_num <= 11:
                # URL of the next page
                new_url = self.url % self.page_num
                self.page_num += 1
                # Manual request: the callback is the method that parses the response
                yield scrapy.Request(url=new_url, callback=self.parse)
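The page counter in the spider produces one URL per call to parse. The sketch below lists the same URLs up front so the template arithmetic is easy to check. The page_urls helper is hypothetical, and the range 2 to 11 is taken from the spider above.

```python
URL_TEMPLATE = 'http://www.521609.com/meinvxiaohua/list12%d.html'


def page_urls(template, first_page, last_page):
    """Yield the URL of every page from first_page to last_page, inclusive."""
    for page_num in range(first_page, last_page + 1):
        yield template % page_num


urls = list(page_urls(URL_TEMPLATE, 2, 11))
```

The spider reaches the same ten URLs one at a time because each parse call schedules only the next page, so the crawl stops by itself once page_num exceeds 11.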

settings.py:

    # -*- coding: utf-8 -*-

    # Scrapy settings for xiaohuaPro project
    # Only the active settings are listed; everything else keeps the
    # commented-out defaults generated by scrapy startproject.

    BOT_NAME = 'xiaohuaPro'

    SPIDER_MODULES = ['xiaohuaPro.spiders']
    NEWSPIDER_MODULE = 'xiaohuaPro.spiders'

    USER_AGENT = 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_12_0) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/74.0.3729.169 Safari/537.36'

    LOG_LEVEL = 'ERROR'

    # Obey robots.txt rules
    ROBOTSTXT_OBEY = False

items.py:

    # -*- coding: utf-8 -*-
    import scrapy


    class XiaohuaproItem(scrapy.Item):
        # define the fields for your item here like:
        # name = scrapy.Field()
        pass

pipelines.py:

    # -*- coding: utf-8 -*-


    class XiaohuaproPipeline(object):
        def process_item(self, item, spider):
            return item

middlewares.py:

    # -*- coding: utf-8 -*-

    # Define here the models for your spider middleware
    #
    # See documentation in:
    # https://doc.scrapy.org/en/latest/topics/spider-middleware.html

    from scrapy import signals


    class XiaohuaproSpiderMiddleware(object):
        # Not all methods need to be defined. If a method is not defined,
        # scrapy acts as if the spider middleware does not modify the
        # passed objects.

        @classmethod
        def from_crawler(cls, crawler):
            # This method is used by Scrapy to create your spiders.
            s = cls()
            crawler.signals.connect(s.spider_opened, signal=signals.spider_opened)
            return s

        def process_spider_input(self, response, spider):
            # Called for each response that goes through the spider
            # middleware and into the spider.
            # Should return None or raise an exception.
            return None

        def process_spider_output(self, response, result, spider):
            # Called with the results returned from the Spider, after
            # it has processed the response.
            # Must return an iterable of Request, dict or Item objects.
            for i in result:
                yield i

        def process_spider_exception(self, response, exception, spider):
            # Called when a spider or process_spider_input() method
            # (from other spider middleware) raises an exception.
            # Should return either None or an iterable of Response, dict
            # or Item objects.
            pass

        def process_start_requests(self, start_requests, spider):
            # Called with the start requests of the spider, and works
            # similarly to the process_spider_output() method, except
            # that it doesn't have a response associated.
            # Must return only requests (not items).
            for r in start_requests:
                yield r

        def spider_opened(self, spider):
            spider.logger.info('Spider opened: %s' % spider.name)


    class XiaohuaproDownloaderMiddleware(object):
        # Not all methods need to be defined. If a method is not defined,
        # scrapy acts as if the downloader middleware does not modify the
        # passed objects.

        @classmethod
        def from_crawler(cls, crawler):
            # This method is used by Scrapy to create your spiders.
            s = cls()
            crawler.signals.connect(s.spider_opened, signal=signals.spider_opened)
            return s

        def process_request(self, request, spider):
            # Called for each request that goes through the downloader
            # middleware.
            # Must either:
            # - return None: continue processing this request
            # - or return a Response object
            # - or return a Request object
            # - or raise IgnoreRequest: process_exception() methods of
            #   installed downloader middleware will be called
            return None

        def process_response(self, request, response, spider):
            # Called with the response returned from the downloader.
            # Must either:
            # - return a Response object
            # - return a Request object
            # - or raise IgnoreRequest
            return response

        def process_exception(self, request, exception, spider):
            # Called when a download handler or a process_request()
            # (from other downloader middleware) raises an exception.
            # Must either:
            # - return None: continue processing this exception
            # - return a Response object: stops process_exception() chain
            # - return a Request object: stops process_exception() chain
            pass

        def spider_opened(self, spider):
            spider.logger.info('Spider opened: %s' % spider.name)

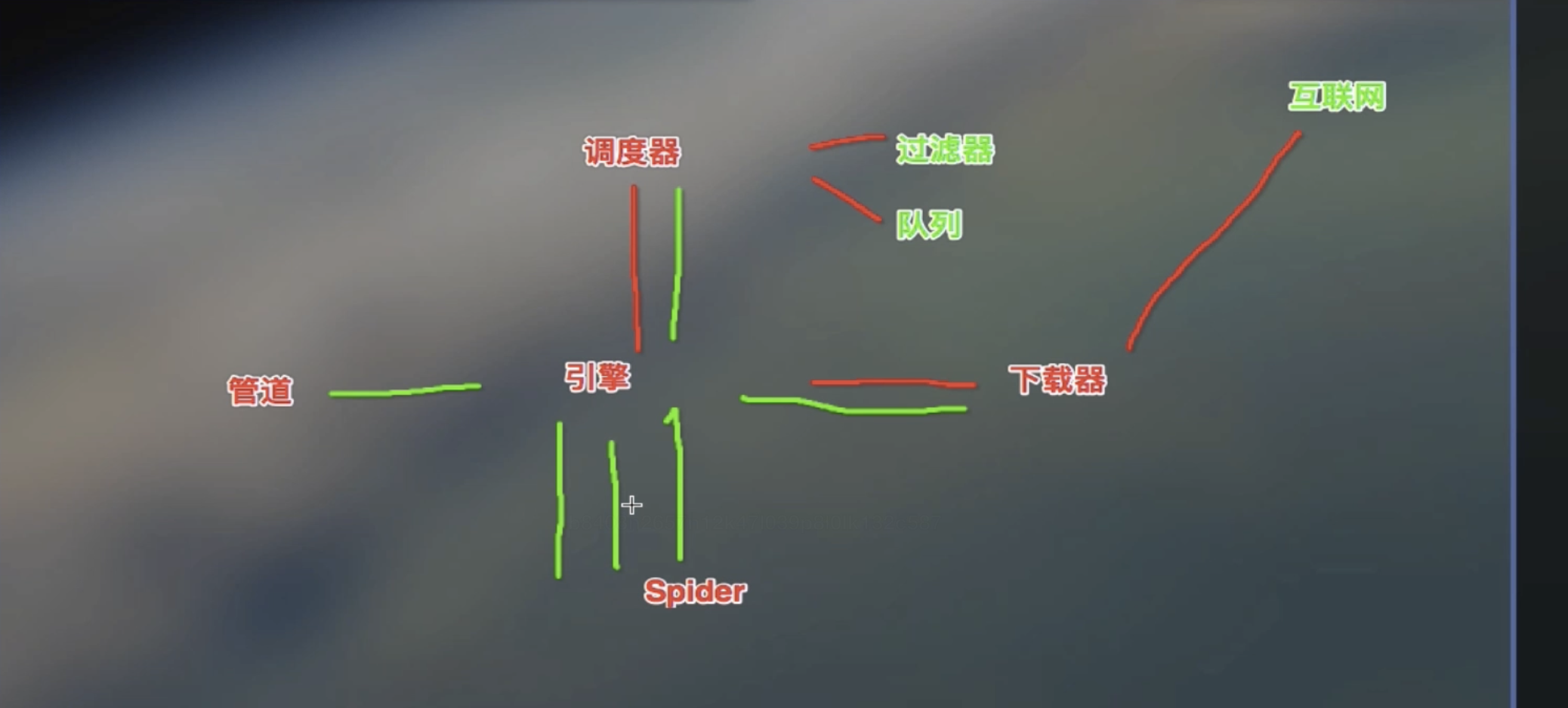

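- The five core components

Engine (Scrapy Engine)

Handles the data flow of the whole system and triggers events (the core of the framework). It decides which method is called when.

Scheduler

Accepts the requests sent by the engine, pushes them onto a queue, and returns them when the engine asks again. Think of it as a priority queue of URLs (the addresses of the pages to crawl): it decides which address is crawled next and removes duplicate addresses.

Downloader

Downloads page content and returns it to the spiders (the Scrapy downloader is built on Twisted, an efficient asynchronous model).

Spiders

The spiders do the main work: they extract the information you need, the so-called items, from specific pages. You can also extract links from a page and let Scrapy go on to crawl the next page.

Item Pipeline

Processes the items the spiders extract from pages. Its main jobs are persisting items, validating them, and removing unneeded information. After a page is parsed by a spider, its items are sent to the item pipeline and pass through several processing steps in a fixed order.

Data flow: the spider produces URLs and wraps them in request objects, which it hands to the engine. The engine passes each request to the scheduler, which removes duplicates, pushes the request onto its queue, and returns it when the engine asks for the next one. The engine then gives the request to the downloader, which fetches the page from the internet and returns the response to the engine. The engine passes the response to the spider for parsing, and the items the spider yields go back through the engine to the pipeline for processing.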
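The scheduler's two jobs, deduplication and queueing, fit in a few lines. The sketch below is a toy model under simplifying assumptions: it compares plain URL strings and serves them first-in first-out, whereas Scrapy's scheduler works on request fingerprints and priority queues. ToyScheduler and its method names are hypothetical.

```python
from collections import deque


class ToyScheduler:
    """Toy model of a scheduler: drop duplicates, queue the rest."""

    def __init__(self):
        self._queue = deque()
        self._seen = set()

    def enqueue(self, url):
        """Queue url unless it was seen before; return whether it was accepted."""
        if url in self._seen:
            return False
        self._seen.add(url)
        self._queue.append(url)
        return True

    def next_request(self):
        """Return the next queued URL, or None when the queue is empty."""
        return self._queue.popleft() if self._queue else None


scheduler = ToyScheduler()
accepted = [scheduler.enqueue(u) for u in ["/a", "/b", "/a"]]
order = [scheduler.next_request() for _ in range(3)]
```

The second "/a" is rejected, so the engine is handed "/a" and "/b" once each and then None.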

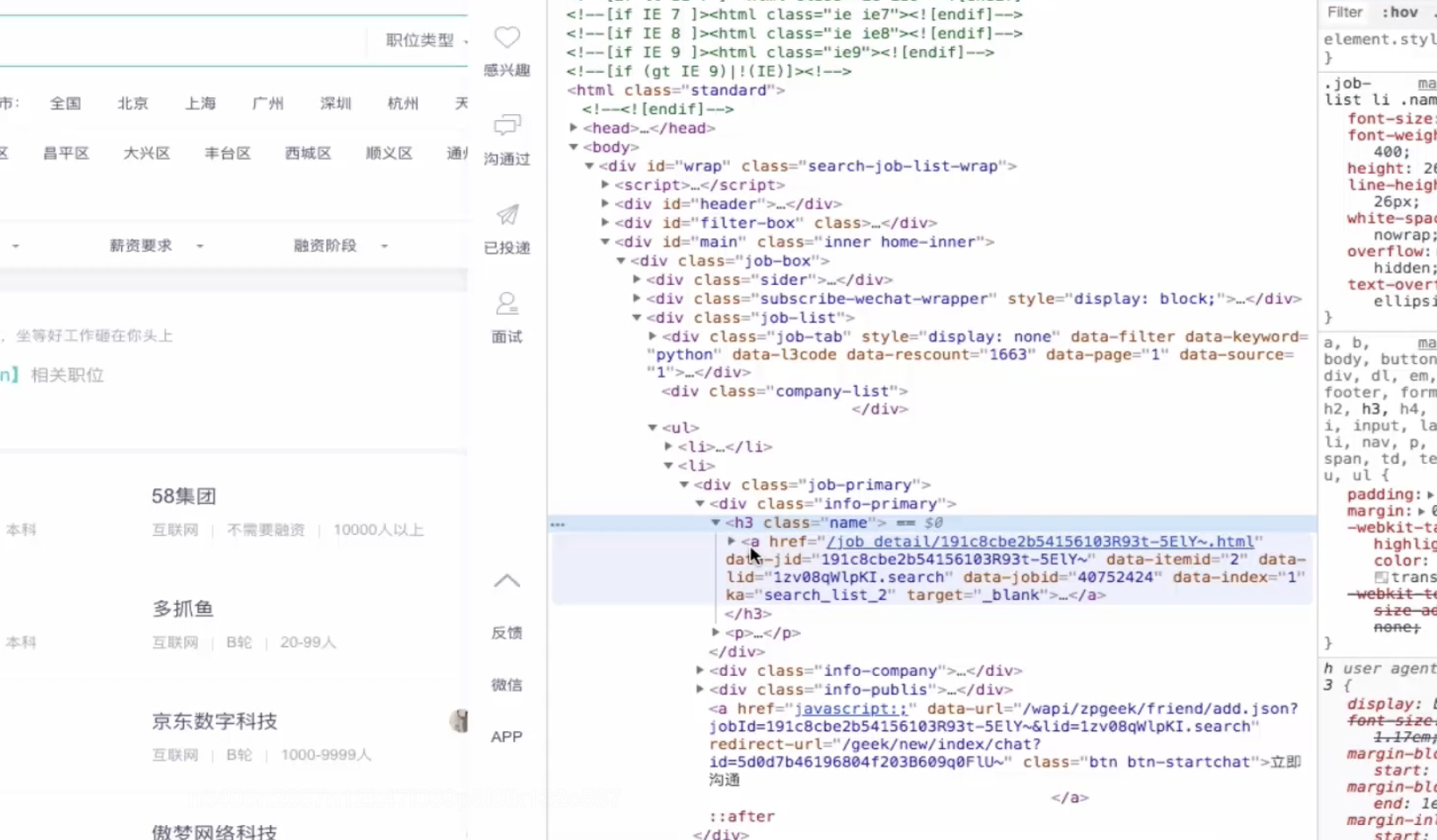

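- Passing parameters with requests

- Use case: the data to parse is not all on one page (deep crawling that follows links into detail pages)

- Requirement: crawl the job titles and job descriptions on BOSS Zhipin (zhipin.com)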

settings.py:

    # -*- coding: utf-8 -*-

    # Scrapy settings for bossPro project
    # Only the active settings are listed; everything else keeps the
    # commented-out defaults generated by scrapy startproject.

    BOT_NAME = 'bossPro'

    LOG_LEVEL = 'ERROR'

    SPIDER_MODULES = ['bossPro.spiders']
    NEWSPIDER_MODULE = 'bossPro.spiders'

    USER_AGENT = 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_12_0) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/74.0.3729.169 Safari/537.36'

    # Obey robots.txt rules
    ROBOTSTXT_OBEY = False

    # Configure item pipelines
    # See https://doc.scrapy.org/en/latest/topics/item-pipeline.html
    ITEM_PIPELINES = {
        'bossPro.pipelines.BossproPipeline': 300,
    }

boss.py:

    # -*- coding: utf-8 -*-
    import scrapy
    from bossPro.items import BossproItem


    class BossSpider(scrapy.Spider):
        name = 'boss'
        # allowed_domains = ['www.xxx.com']
        start_urls = ['https://www.zhipin.com/job_detail/?query=python&city=101010100&industry=&position=']

        url = 'https://www.zhipin.com/c101010100/?query=python&page=%d'
        page_num = 2

        # Callback that receives the item: parses the job description on the detail page
        def parse_detail(self, response):
            item = response.meta['item']

            job_desc = response.xpath('//*[@id="main"]/div[3]/div/div[2]/div[2]/div[1]/div//text()').extract()
            job_desc = ''.join(job_desc)
            # print(job_desc)  # job description
            item['job_desc'] = job_desc

            yield item

        # Parses the job titles on the listing page
        def parse(self, response):
            li_list = response.xpath('//*[@id="main"]/div/div[3]/ul/li')
            for li in li_list:
                # Item that will be persisted
                item = BossproItem()

                job_name = li.xpath('.//div[@class="info-primary"]/h3/a/div[1]/text()').extract_first()
                item['job_name'] = job_name
                # print(job_name)  # job title
                detail_url = 'https://www.zhipin.com' + li.xpath('.//div[@class="info-primary"]/h3/a/@href').extract_first()
                # Request the detail page to get its page source (a manual request).
                # Request parameters: meta={} passes the meta dict to the request's callback.
                yield scrapy.Request(detail_url, callback=self.parse_detail, meta={'item': item})

            # Pagination (crawl the first three pages)
            if self.page_num <= 3:
                new_url = self.url % self.page_num
                self.page_num += 1
                # Send the request
                yield scrapy.Request(new_url, callback=self.parse)

items.py:

    # -*- coding: utf-8 -*-

    # Define here the models for your scraped items
    #
    # See documentation in:
    # https://doc.scrapy.org/en/latest/topics/items.html

    import scrapy


    class BossproItem(scrapy.Item):
        # define the fields for your item here like:
        job_name = scrapy.Field()
        job_desc = scrapy.Field()
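The hand-off of a half-filled item from the listing callback to the detail callback can be followed in a toy model with no network access. Everything here (ToyRequest, ToyResponse, FAKE_PAGES, crawl) is hypothetical and only imitates the shape of scrapy.Request and its meta dict.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ToyRequest:
    url: str
    callback: Callable
    meta: dict = field(default_factory=dict)


@dataclass
class ToyResponse:
    url: str
    text: str
    meta: dict


# Hypothetical page contents standing in for downloaded pages.
FAKE_PAGES = {"/list": "python developer", "/detail/1": "writes crawlers"}


def parse(response):
    # Listing page: fill in the first field, then pass the item along in meta.
    item = {"job_name": response.text}
    yield ToyRequest("/detail/1", callback=parse_detail, meta={"item": item})


def parse_detail(response):
    # Detail page: pick the same item back up and complete it.
    item = response.meta["item"]
    item["job_desc"] = response.text
    yield item


def crawl(start_url):
    """Run requests until none are left and collect the yielded items."""
    pending = [ToyRequest(start_url, callback=parse)]
    items = []
    while pending:
        request = pending.pop()
        response = ToyResponse(request.url, FAKE_PAGES[request.url], request.meta)
        for result in request.callback(response):
            if isinstance(result, ToyRequest):
                pending.append(result)
            else:
                items.append(result)
    return items


items = crawl("/list")
```

The item is yielded only from the detail callback, so nothing reaches the pipeline until both fields are filled in.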

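- Crawling image data with the ImagesPipeline class

- How does crawling string data differ from crawling image data in Scrapy?

- Strings: parse with xpath and submit to the pipeline for persistent storage

- Images: xpath only yields the value of the image's src attribute. A separate request to the image address is needed to get the binary image data

- Using ImagesPipeline:

- Parse only the value of the img src attribute and submit it to the pipeline. The pipeline requests the image address, fetches the binary image data, and also persists it for us.

- Requirement: crawl the high-resolution pictures on sc.chinaz.com

Create the imgsPro project: scrapy startproject imgsPro

- Steps:

- Parse the data (the image addresses)

img.py:

    # -*- coding: utf-8 -*-
    import scrapy
    from imgsPro.items import ImgsproItem


    class ImgSpider(scrapy.Spider):
        name = 'img'
        # allowed_domains = ['www.xxx.com']
        start_urls = ['http://sc.chinaz.com/tupian/']

        def parse(self, response):
            div_list = response.xpath('//div[@id="container"]/div')
            for div in div_list:
                # Note: parse the pseudo-attribute src2 to get the image address
                src = div.xpath('./div/a/img/@src2').extract_first()

                item = ImgsproItem()
                item['src'] = src

                yield item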

- Submit the item that holds the image address to the designated pipeline class

items.py:

    # -*- coding: utf-8 -*-

    # Define here the models for your scraped items
    #
    # See documentation in:
    # https://doc.scrapy.org/en/latest/topics/items.html

    import scrapy


    class ImgsproItem(scrapy.Item):
        # define the fields for your item here like:
        src = scrapy.Field()

- In the pipelines file, write a custom pipeline class based on ImagesPipeline

- get_media_requests

- file_path

- item_completed

pipelines.py:

    # -*- coding: utf-8 -*-

    # Define your item pipelines here
    #
    # Don't forget to add your pipeline to the ITEM_PIPELINES setting
    # See: https://doc.scrapy.org/en/latest/topics/item-pipeline.html

    # class ImgsproPipeline(object):
    #     def process_item(self, item, spider):
    #         return item

    import scrapy
    from scrapy.pipelines.images import ImagesPipeline  # requires the Pillow package


    class imgsPileLine(ImagesPipeline):

        # Requests the image data from the image address
        def get_media_requests(self, item, info):
            yield scrapy.Request(item['src'])

        # Sets the file name under which the image is stored
        # (recent Scrapy versions also pass a keyword-only item argument)
        def file_path(self, request, response=None, info=None, *, item=None):
            imgName = request.url.split('/')[-1]
            return imgName

        def item_completed(self, results, item, info):
            return item  # passed on to the next pipeline class to run
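Taking the last segment with split('/') works for plain image URLs but keeps any query string in the file name. A slightly more defensive variant, shown here as a sketch with only the standard library, parses the URL first. The helper name image_name_from_url and the example.com URL are hypothetical.

```python
import posixpath
from urllib.parse import urlsplit


def image_name_from_url(url):
    """Return the last path segment of url, ignoring query string and fragment."""
    path = urlsplit(url).path
    return posixpath.basename(path)


plain = image_name_from_url("http://example.com/pics/cat.jpg")
with_query = image_name_from_url("http://example.com/pics/cat.jpg?size=large")
naive = "http://example.com/pics/cat.jpg?size=large".split('/')[-1]
```

Both calls to the helper give the same file name, while the naive split carries the ?size=large suffix into the name.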

- In the settings file:

- Set the directory where images are stored: IMAGES_STORE = './imgs_bobo'

- Enable the pipeline: the custom pipeline class

settings.py:

    # -*- coding: utf-8 -*-

    # Scrapy settings for imgsPro project
    # Only the active settings are listed; everything else keeps the
    # commented-out defaults generated by scrapy startproject.

    BOT_NAME = 'imgsPro'

    SPIDER_MODULES = ['imgsPro.spiders']
    NEWSPIDER_MODULE = 'imgsPro.spiders'

    LOG_LEVEL = 'ERROR'

    # Crawl responsibly by identifying yourself (and your website) on the user-agent
    USER_AGENT = 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_12_0) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/75.0.3770.100 Safari/537.36'

    # Obey robots.txt rules
    ROBOTSTXT_OBEY = False

    # Configure item pipelines
    # See https://doc.scrapy.org/en/latest/topics/item-pipeline.html
    ITEM_PIPELINES = {
        'imgsPro.pipelines.imgsPileLine': 300,
    }

    # Directory where the images are stored
    IMAGES_STORE = './imgs_bobo'

- Middleware

- Downloader middleware

- Position: between the engine and the downloader

- Purpose: intercept every request and response in the whole project

- Intercepting requests:

- User-Agent spoofing: process_request

- Proxy IP: process_exception, which ends with return request

middle.py:

    # -*- coding: utf-8 -*-
    import scrapy


    class MiddleSpider(scrapy.Spider):
        # Crawl Baidu
        name = 'middle'
        # allowed_domains = ['www.xxxx.com']
        start_urls = ['http://www.baidu.com/s?wd=ip']

        def parse(self, response):
            page_text = response.text

            with open('./ip.html', 'w', encoding='utf-8') as fp:
                fp.write(page_text)

middlewares.py:

    # -*- coding: utf-8 -*-

    # Define here the models for your spider middleware
    #
    # See documentation in:
    # https://doc.scrapy.org/en/latest/topics/spider-middleware.html

    from scrapy import signals
    import random


    class MiddleproDownloaderMiddleware(object):
        # Not all methods need to be defined. If a method is not defined,
        # scrapy acts as if the downloader middleware does not modify the
        # passed objects.
        user_agent_list = [
            "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.1 (KHTML, like Gecko) Chrome/22.0.1207.1 Safari/537.1",
            "Mozilla/5.0 (X11; CrOS i686 2268.111.0) AppleWebKit/536.11 (KHTML, like Gecko) Chrome/20.0.1132.57 Safari/536.11",
            "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.6 (KHTML, like Gecko) Chrome/20.0.1092.0 Safari/536.6",
            "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.6 (KHTML, like Gecko) Chrome/20.0.1090.0 Safari/536.6",
            "Mozilla/5.0 (Windows NT 6.2; WOW64) AppleWebKit/537.1 (KHTML, like Gecko) Chrome/19.77.34.5 Safari/537.1",
            "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/536.5 (KHTML, like Gecko) Chrome/19.0.1084.9 Safari/536.5",
            "Mozilla/5.0 (Windows NT 6.0) AppleWebKit/536.5 (KHTML, like Gecko) Chrome/19.0.1084.36 Safari/536.5",
            "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3",
            "Mozilla/5.0 (Windows NT 5.1) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3",
            "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_8_0) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3",
            "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1062.0 Safari/536.3",
            "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1062.0 Safari/536.3",
            "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3",
            "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3",
            "Mozilla/5.0 (Windows NT 6.1) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3",
            "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1061.0 Safari/536.3",
            "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/535.24 (KHTML, like Gecko) Chrome/19.0.1055.1 Safari/535.24",
            "Mozilla/5.0 (Windows NT 6.2; WOW64) AppleWebKit/535.24 (KHTML, like Gecko) Chrome/19.0.1055.1 Safari/535.24",
        ]
        PROXY_http = [
            '153.180.102.104:80',
            '195.208.131.189:56055',
        ]
        PROXY_https = [
            '120.83.49.90:9000',
            '95.189.112.214:35508',
        ]

        # Intercepts every request
        def process_request(self, request, spider):
            # User-Agent spoofing
            request.headers['User-Agent'] = random.choice(self.user_agent_list)

            # Set a fixed proxy here to verify that the proxy takes effect
            request.meta['proxy'] = 'http://183.146.213.198:80'
            return None

        # Intercepts every response
        def process_response(self, request, response, spider):
            # Called with the response returned from the downloader.
            # Must either:
            # - return a Response object
            # - return a Request object
            # - or raise IgnoreRequest
            return response

        # Intercepts requests that raised an exception
        def process_exception(self, request, exception, spider):
            if request.url.split(':')[0] == 'http':
                # Switch to a proxy that matches the URL scheme
                request.meta['proxy'] = 'http://' + random.choice(self.PROXY_http)
            else:
                request.meta['proxy'] = 'https://' + random.choice(self.PROXY_https)

            return request  # resend the corrected request
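The scheme check in process_exception can be isolated into a small function and tried on its own. The sketch below reuses the proxy addresses from the middleware above. The names PROXY_POOLS and pick_proxy are hypothetical, and the addresses are sample values that are unlikely to still work.

```python
import random
from urllib.parse import urlsplit

# Sample proxy addresses copied from the middleware above.
PROXY_POOLS = {
    "http": ["153.180.102.104:80", "195.208.131.189:56055"],
    "https": ["120.83.49.90:9000", "95.189.112.214:35508"],
}


def pick_proxy(url, pools=PROXY_POOLS, rng=random):
    """Return a proxy URL whose scheme matches the scheme of url."""
    scheme = urlsplit(url).scheme
    if scheme not in pools:
        raise ValueError("no proxy pool for scheme %r" % scheme)
    return "%s://%s" % (scheme, rng.choice(pools[scheme]))


http_proxy = pick_proxy("http://www.baidu.com/s?wd=ip")
https_proxy = pick_proxy("https://news.163.com/")
```

Parsing the URL with urlsplit gives the same result as split(':')[0] for ordinary URLs, and the explicit error makes an unexpected scheme visible instead of silently treating it as https.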

settings.py:

    # -*- coding: utf-8 -*-

    # Scrapy settings for middlePro project
    # Only the active settings are listed; everything else keeps the
    # commented-out defaults generated by scrapy startproject.

    BOT_NAME = 'middlePro'

    SPIDER_MODULES = ['middlePro.spiders']
    NEWSPIDER_MODULE = 'middlePro.spiders'

    # Obey robots.txt rules
    ROBOTSTXT_OBEY = False

    # Enable or disable downloader middlewares
    # See https://doc.scrapy.org/en/latest/topics/downloader-middleware.html
    DOWNLOADER_MIDDLEWARES = {
        'middlePro.middlewares.MiddleproDownloaderMiddleware': 543,
    }

- 拦截响应:

- 篡改响应数据,响应对象

- 需求:爬取网易新闻中的新闻数据(标题和内容)

- 1.通过网易新闻的首页解析出五大板块对应的详情页的url(没有动态加载)

- 2.每一个板块对应的新闻标题都是动态加载出来的(动态加载)

- 3.通过解析出每一条新闻详情页的url获取详情页的页面源码,解析出新闻内容

wangyiyun.py:

    # -*- coding: utf-8 -*-
    import scrapy
    from selenium import webdriver

    from wangyiPro.items import WangyiproItem


    class WangyiSpider(scrapy.Spider):
        name = 'wangyi'
        # allowed_domains = ['www.cccom']
        start_urls = ['https://news.163.com/']
        models_urls = []  # URLs of the five section pages

        def __init__(self, *args, **kwargs):
            super().__init__(*args, **kwargs)
            # Instantiate one browser object; the downloader middleware reuses it.
            # executable_path is the older Selenium API; newer Selenium 4 releases
            # take a Service object instead.
            self.bro = webdriver.Chrome(executable_path='/Users/bobo/Desktop/小猿圈爬虫课程/chromedriver')

        # Parse the URLs of the five section pages from the home page
        def parse(self, response):
            li_list = response.xpath('//*[@id="index2016_wrap"]/div[1]/div[2]/div[2]/div[2]/div[2]/div/ul/li')
            alist = [3, 4, 6, 7, 8]
            for index in alist:
                model_url = li_list[index].xpath('./a/@href').extract_first()
                self.models_urls.append(model_url)

            # Send a request for each section page in turn
            for url in self.models_urls:
                yield scrapy.Request(url, callback=self.parse_model)

        # The news titles on each section page are loaded dynamically
        def parse_model(self, response):
            # Parse the title and the detail page URL of each news item on the section page
            div_list = response.xpath('/html/body/div/div[3]/div[4]/div[1]/div/div/ul/li/div/div')
            for div in div_list:
                title = div.xpath('./div/div[1]/h3/a/text()').extract_first()
                new_detail_url = div.xpath('./div/div[1]/h3/a/@href').extract_first()

                item = WangyiproItem()
                item['title'] = title

                # Request the news detail page and pass the item along through meta
                yield scrapy.Request(url=new_detail_url, callback=self.parse_detail, meta={'item': item})

        # Parse the news content
        def parse_detail(self, response):
            content = response.xpath('//*[@id="endText"]//text()').extract()
            content = ''.join(content)
            item = response.meta['item']
            item['content'] = content
            yield item

        # Scrapy calls closed(reason) when the spider finishes; quit the browser here
        def closed(self, reason):
            self.bro.quit()

middlewares.py:

    # -*- coding: utf-8 -*-

    # Define here the models for your spider middleware
    #
    # See documentation in:
    # https://doc.scrapy.org/en/latest/topics/spider-middleware.html

    from scrapy import signals
    from scrapy.http import HtmlResponse
    from time import sleep


    class WangyiproDownloaderMiddleware(object):
        # Not all methods need to be defined. If a method is not defined,
        # scrapy acts as if the downloader middleware does not modify the
        # passed objects.

        def process_request(self, request, spider):
            # Called for each request that goes through the downloader
            # middleware.

            # Must either:
            # - return None: continue processing this request
            # - or return a Response object
            # - or return a Request object
            # - or raise IgnoreRequest: process_exception() methods of
            #   installed downloader middleware will be called
            return None

        # Intercept the responses of the five section pages and replace them
        def process_response(self, request, response, spider):  # spider: the spider object
            bro = spider.bro  # the browser object defined in the spider class

            # Pick out the responses to replace:
            # the URL identifies the request, and the request identifies its response
            if request.url in spider.models_urls:
                bro.get(request.url)  # load the section page in the browser
                sleep(3)
                page_text = bro.page_source  # includes the dynamically loaded news data

                # Build a new response object that contains the dynamically loaded
                # news data (obtained conveniently through selenium) and return it
                # in place of the original response
                new_response = HtmlResponse(url=request.url, body=page_text, encoding='utf-8', request=request)
                return new_response
            else:
                # Responses of all other requests pass through unchanged
                return response

        def process_exception(self, request, exception, spider):
            # Called when a download handler or a process_request()
            # (from other downloader middleware) raises an exception.

            # Must either:
            # - return None: continue processing this exception
            # - return a Response object: stops process_exception() chain
            # - return a Request object: stops process_exception() chain
            pass

settings.py:

    # -*- coding: utf-8 -*-

    # Scrapy settings for wangyiPro project
    #
    # For simplicity, this file contains only settings considered important or
    # commonly used. You can find more settings consulting the documentation:
    #
    #     https://doc.scrapy.org/en/latest/topics/settings.html
    #     https://doc.scrapy.org/en/latest/topics/downloader-middleware.html
    #     https://doc.scrapy.org/en/latest/topics/spider-middleware.html

    BOT_NAME = 'wangyiPro'

    SPIDER_MODULES = ['wangyiPro.spiders']
    NEWSPIDER_MODULE = 'wangyiPro.spiders'

    # Crawl responsibly by identifying yourself (and your website) on the user-agent
    #USER_AGENT = 'wangyiPro (+http://www.yourdomain.com)'
    USER_AGENT = 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_12_0) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/74.0.3729.169 Safari/537.36'

    # Obey robots.txt rules
    ROBOTSTXT_OBEY = False

    # Configure maximum concurrent requests performed by Scrapy (default: 16)
    #CONCURRENT_REQUESTS = 32

    # Configure a delay for requests for the same website (default: 0)
    # See https://doc.scrapy.org/en/latest/topics/settings.html#download-delay
    # See also autothrottle settings and docs
    #DOWNLOAD_DELAY = 3
    # The download delay setting will honor only one of:
    #CONCURRENT_REQUESTS_PER_DOMAIN = 16
    #CONCURRENT_REQUESTS_PER_IP = 16

    # Disable cookies (enabled by default)
    #COOKIES_ENABLED = False

    # Disable Telnet Console (enabled by default)
    #TELNETCONSOLE_ENABLED = False

    # Override the default request headers:
    #DEFAULT_REQUEST_HEADERS = {
    #   'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
    #   'Accept-Language': 'en',
    #}

    # Enable or disable spider middlewares
    # See https://doc.scrapy.org/en/latest/topics/spider-middleware.html
    #SPIDER_MIDDLEWARES = {
    #    'wangyiPro.middlewares.WangyiproSpiderMiddleware': 543,
    #}

    # Enable or disable downloader middlewares
    # See https://doc.scrapy.org/en/latest/topics/downloader-middleware.html
    DOWNLOADER_MIDDLEWARES = {
        'wangyiPro.middlewares.WangyiproDownloaderMiddleware': 543,
    }

    # Enable or disable extensions
    # See https://doc.scrapy.org/en/latest/topics/extensions.html
    #EXTENSIONS = {
    #    'scrapy.extensions.telnet.TelnetConsole': None,
    #}

    # Configure item pipelines
    # See https://doc.scrapy.org/en/latest/topics/item-pipeline.html
    ITEM_PIPELINES = {
        'wangyiPro.pipelines.WangyiproPipeline': 300,
    }
    LOG_LEVEL = 'ERROR'

    # Enable and configure the AutoThrottle extension (disabled by default)
    # See https://doc.scrapy.org/en/latest/topics/autothrottle.html
    #AUTOTHROTTLE_ENABLED = True
    # The initial download delay
    #AUTOTHROTTLE_START_DELAY = 5
    # The maximum download delay to be set in case of high latencies
    #AUTOTHROTTLE_MAX_DELAY = 60
    # The average number of requests Scrapy should be sending in parallel to
    # each remote server
    #AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0
    # Enable showing throttling stats for every response received:
    #AUTOTHROTTLE_DEBUG = False

    # Enable and configure HTTP caching (disabled by default)
    # See https://doc.scrapy.org/en/latest/topics/downloader-middleware.html#httpcache-middleware-settings
    #HTTPCACHE_ENABLED = True
    #HTTPCACHE_EXPIRATION_SECS = 0
    #HTTPCACHE_DIR = 'httpcache'
    #HTTPCACHE_IGNORE_HTTP_CODES = []
    #HTTPCACHE_STORAGE = 'scrapy.extensions.httpcache.FilesystemCacheStorage'

pipelines.py:

    # -*- coding: utf-8 -*-

    # Define your item pipelines here
    #
    # Don't forget to add your pipeline to the ITEM_PIPELINES setting
    # See: https://doc.scrapy.org/en/latest/topics/item-pipeline.html


    class WangyiproPipeline(object):
        def process_item(self, item, spider):
            print(item)
            return item

items.py:

    # -*- coding: utf-8 -*-

    # Define here the models for your scraped items
    #
    # See documentation in:
    # https://doc.scrapy.org/en/latest/topics/items.html

    import scrapy


    class WangyiproItem(scrapy.Item):
        # define the fields for your item here like:
        title = scrapy.Field()
        content = scrapy.Field()

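- CrawlSpider: essentially a class, a subclass of Spider

- Purpose: a way to crawl a whole site (every page of a given section)

- First approach, based on Spider: send the requests for the other pages manually

- Second approach: based on CrawlSpider

- Using CrawlSpider:

- Create a project

- cd XXX

- Create the spider file (CrawlSpider):

- scrapy genspider -t crawl spiderName www.xxxx.com

- Link extractor (LinkExtractor):

- Purpose: extract the links that match a given rule (allow)

- Rule:

- Purpose: parse the pages behind the links that the link extractor extracted, using the given callback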
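The allow argument is a regular expression that is searched for inside each candidate URL. The sketch below only imitates that matching step with the standard re module; it does not use Scrapy itself. The two patterns and the two detail URLs are taken from sun.py below, while the paged URL and the classify helper are made up for illustration.

```python
import re

# Patterns copied from the LinkExtractor(allow=...) calls in sun.py.
PAGE_PATTERN = re.compile(r'type=4&page=\d+')
DETAIL_PATTERN = re.compile(r'question/\d+/\d+\.shtml')

# The first URL is a hypothetical page link (start URL plus a page number);
# the other two appear in the comments of sun.py.
urls = [
    'http://wz.sun0769.com/index.php/question/questionType?type=4&page=30',
    'http://wz.sun0769.com/html/question/201907/421001.shtml',
    'http://wz.sun0769.com/html/question/201907/420987.shtml',
]


def classify(url):
    """Return which pattern picks up the URL, or None if neither matches."""
    if PAGE_PATTERN.search(url):
        return 'page'
    if DETAIL_PATTERN.search(url):
        return 'detail'
    return None


labels = [classify(u) for u in urls]
print(labels)
```

Because the pattern is searched for rather than matched against the whole URL, a short fragment such as type=4&page=\d+ is enough to select every page link.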

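- Requirement: crawl the number, news title, news content, and ID from the sun website

- Analysis: the data to crawl is not all on the same page.

- 1. Use one link extractor to extract all the page-number links

- 2. Use a second link extractor to extract all the news detail page links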
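To see what follow=True changes when extracting the page-number links, here is a small simulation over a made-up three-page site. The SITE dictionary, its URLs, and the crawl_pages function are all hypothetical; the sketch only models the idea that, with follow=True, the extractor is applied again to every page it leads to, while duplicates are requested once.

```python
import re
from collections import deque

# Hypothetical miniature site: each listing page maps to the links found on it.
SITE = {
    '/list?type=4&page=1': ['/list?type=4&page=2', '/html/question/201907/1.shtml'],
    '/list?type=4&page=2': ['/list?type=4&page=1', '/list?type=4&page=3',
                            '/html/question/201907/2.shtml'],
    '/list?type=4&page=3': ['/list?type=4&page=2', '/html/question/201907/3.shtml'],
}
PAGE = re.compile(r'type=4&page=\d+')


def crawl_pages(start, follow):
    """Return the listing pages reached from `start`, in visit order."""
    seen = {start}
    queue = deque([start])
    visited = []
    while queue:
        url = queue.popleft()
        visited.append(url)
        # Without follow, links are only extracted from the start page.
        if not follow and url != start:
            continue
        for link in SITE.get(url, []):
            if PAGE.search(link) and link not in seen:
                seen.add(link)
                queue.append(link)
    return visited


print(crawl_pages('/list?type=4&page=1', follow=True))
print(crawl_pages('/list?type=4&page=1', follow=False))
```

In this toy site page 3 is only linked from page 2, so it is reached only when the extractor keeps following.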

sun.py:

    # -*- coding: utf-8 -*-
    import scrapy
    from scrapy.linkextractors import LinkExtractor
    from scrapy.spiders import CrawlSpider, Rule

    from sunPro.items import SunproItem, DetailItem


    # Requirement: crawl the number, news title, news content, and ID from the sun website
    class SunSpider(CrawlSpider):
        name = 'sun'
        # allowed_domains = ['www.xxx.com']
        start_urls = ['http://wz.sun0769.com/index.php/question/questionType?type=4&page=']

        # Link extractors: extract the links that match the given rule (allow=<regex>)
        link = LinkExtractor(allow=r'type=4&page=\d+')
        link_detail = LinkExtractor(allow=r'question/\d+/\d+\.shtml')
        rules = (
            # Rule: parse the pages behind the extracted links with the given callback.
            # follow=True: keep applying the link extractor to the pages reached
            # through the links it extracted.
            Rule(link, callback='parse_item', follow=True),
            Rule(link_detail, callback='parse_detail')
        )
        # http://wz.sun0769.com/html/question/201907/421001.shtml
        # http://wz.sun0769.com/html/question/201907/420987.shtml

        # Parse the news number and the news title.
        # Request meta passing is not available between the two parse methods below,
        # so their data cannot go into one item; each method yields its own item type.
        def parse_item(self, response):
            # Note: the xpath expression must not contain a tbody tag
            tr_list = response.xpath('//*[@id="morelist"]/div/table[2]//tr/td/table//tr')
            for tr in tr_list:
                new_num = tr.xpath('./td[1]/text()').extract_first()
                new_title = tr.xpath('./td[2]/a[2]/@title').extract_first()
                item = SunproItem()
                item['title'] = new_title
                item['new_num'] = new_num

                yield item

        # Parse the news content and the news ID
        def parse_detail(self, response):
            new_id = response.xpath('/html/body/div[9]/table[1]//tr/td[2]/span[2]/text()').extract_first()
            new_content = response.xpath('/html/body/div[9]/table[2]//tr[1]//text()').extract()
            new_content = ''.join(new_content)

            # print(new_id, new_content)
            item = DetailItem()
            item['content'] = new_content
            item['new_id'] = new_id

            yield item

settings.py:

    # -*- coding: utf-8 -*-

    # Scrapy settings for sunPro project
    #
    # For simplicity, this file contains only settings considered important or
    # commonly used. You can find more settings consulting the documentation:
    #
    #     https://doc.scrapy.org/en/latest/topics/settings.html
    #     https://doc.scrapy.org/en/latest/topics/downloader-middleware.html
    #     https://doc.scrapy.org/en/latest/topics/spider-middleware.html

    BOT_NAME = 'sunPro'

    SPIDER_MODULES = ['sunPro.spiders']
    NEWSPIDER_MODULE = 'sunPro.spiders'
    USER_AGENT = 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_12_0) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/74.0.3729.169 Safari/537.36'
    LOG_LEVEL = 'ERROR'

    # Crawl responsibly by identifying yourself (and your website) on the user-agent
    #USER_AGENT = 'sunPro (+http://www.yourdomain.com)'

    # Obey robots.txt rules
    ROBOTSTXT_OBEY = False

    # Configure maximum concurrent requests performed by Scrapy (default: 16)
    #CONCURRENT_REQUESTS = 32

    # Configure a delay for requests for the same website (default: 0)
    # See https://doc.scrapy.org/en/latest/topics/settings.html#download-delay
    # See also autothrottle settings and docs
    #DOWNLOAD_DELAY = 3
    # The download delay setting will honor only one of:
    #CONCURRENT_REQUESTS_PER_DOMAIN = 16
    #CONCURRENT_REQUESTS_PER_IP = 16

    # Disable cookies (enabled by default)
    #COOKIES_ENABLED = False

    # Disable Telnet Console (enabled by default)
    #TELNETCONSOLE_ENABLED = False

    # Override the default request headers:
    #DEFAULT_REQUEST_HEADERS = {
    #   'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
    #   'Accept-Language': 'en',
    #}

    # Enable or disable spider middlewares
    # See https://doc.scrapy.org/en/latest/topics/spider-middleware.html
    #SPIDER_MIDDLEWARES = {
    #    'sunPro.middlewares.SunproSpiderMiddleware': 543,
    #}

    # Enable or disable downloader middlewares
    # See https://doc.scrapy.org/en/latest/topics/downloader-middleware.html
    #DOWNLOADER_MIDDLEWARES = {
    #    'sunPro.middlewares.SunproDownloaderMiddleware': 543,
    #}

    # Enable or disable extensions
    # See https://doc.scrapy.org/en/latest/topics/extensions.html
    #EXTENSIONS = {
    #    'scrapy.extensions.telnet.TelnetConsole': None,
    #}

    # Configure item pipelines
    # See https://doc.scrapy.org/en/latest/topics/item-pipeline.html
    ITEM_PIPELINES = {
        'sunPro.pipelines.SunproPipeline': 300,
    }

    # Enable and configure the AutoThrottle extension (disabled by default)
    # See https://doc.scrapy.org/en/latest/topics/autothrottle.html
    #AUTOTHROTTLE_ENABLED = True
    # The initial download delay
    #AUTOTHROTTLE_START_DELAY = 5
    # The maximum download delay to be set in case of high latencies
    #AUTOTHROTTLE_MAX_DELAY = 60
    # The average number of requests Scrapy should be sending in parallel to
    # each remote server
    #AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0
    # Enable showing throttling stats for every response received:
    #AUTOTHROTTLE_DEBUG = False

    # Enable and configure HTTP caching (disabled by default)
    # See https://doc.scrapy.org/en/latest/topics/downloader-middleware.html#httpcache-middleware-settings
    #HTTPCACHE_ENABLED = True
    #HTTPCACHE_EXPIRATION_SECS = 0
    #HTTPCACHE_DIR = 'httpcache'
    #HTTPCACHE_IGNORE_HTTP_CODES = []
    #HTTPCACHE_STORAGE = 'scrapy.extensions.httpcache.FilesystemCacheStorage'

items.py:

    # -*- coding: utf-8 -*-

    # Define here the models for your scraped items
    #
    # See documentation in:
    # https://doc.scrapy.org/en/latest/topics/items.html

    import scrapy


    class SunproItem(scrapy.Item):
        # define the fields for your item here like:
        title = scrapy.Field()
        new_num = scrapy.Field()


    class DetailItem(scrapy.Item):
        new_id = scrapy.Field()
        content = scrapy.Field()

pipelines.py:

    # -*- coding: utf-8 -*-

    # Define your item pipelines here
    #
    # Don't forget to add your pipeline to the ITEM_PIPELINES setting
    # See: https://doc.scrapy.org/en/latest/topics/item-pipeline.html


    class SunproPipeline(object):
        def process_item(self, item, spider):
            # How to tell which type of item this is.
            # Open question: when writing to a database, how do we keep the
            # two kinds of records consistent with each other?
            if item.__class__.__name__ == 'DetailItem':
                print(item['new_id'], item['content'])
            else:
                print(item['new_num'], item['title'])
            return item
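One possible answer to the consistency question in the pipeline comments is to merge both item types into one record keyed by the news number. The sketch below is only an illustration under two assumptions that the source does not confirm: that new_num on the list page and new_id on the detail page carry the same value, and that an in-memory dict (records) stands in for the database. The item classes here are plain dict stand-ins, not the real scrapy.Item subclasses, and the sample values are made up.

```python
class SunproItem(dict):
    """Stand-in for the scrapy.Item subclass of the same name."""


class DetailItem(dict):
    """Stand-in for the scrapy.Item subclass of the same name."""


# Hypothetical in-memory "table": news number -> merged record.
records = {}


def process_item(item):
    """Merge the item into the record that shares its news number."""
    if isinstance(item, DetailItem):
        key, fields = item['new_id'], {'content': item['content']}
    elif isinstance(item, SunproItem):
        key, fields = item['new_num'], {'title': item['title']}
    else:
        raise TypeError('unexpected item type: %s' % type(item).__name__)
    records.setdefault(key, {}).update(fields)
    return item


# The two items may arrive in either order (sample values are invented).
process_item(DetailItem(new_id='421001', content='Example content'))
process_item(SunproItem(new_num='421001', title='Example title'))
print(records)
```

Using isinstance instead of comparing class names keeps the check working if an item class is later renamed or subclassed.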

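- Distributed crawling

- Concept: set up a cluster of machines that jointly crawl one set of resources (several computers take their URLs from one shared scheduler).

- Purpose: speed up data crawling

- How is it implemented?

- Install the scrapy-redis component

- Plain scrapy cannot run as a distributed crawler on its own; it has to be combined with the scrapy-redis component.

- Why can't plain scrapy be distributed?

- Its scheduler cannot be shared by the machines in the cluster

- Its pipeline cannot be shared by the machines in the cluster

- What the scrapy-redis component does:

- It gives the scrapy framework a pipeline and a scheduler that can be shared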
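The role of the shared scheduler can be pictured with a small in-memory simulation. Nothing here touches Redis or scrapy-redis: SharedScheduler, run, the worker names, and the link graph are all hypothetical stand-ins, meant only to show that when every worker pulls from one queue and one set of seen URLs, each URL is handled exactly once across the cluster.

```python
from collections import deque


class SharedScheduler:
    """In-memory stand-in for the queue and dedup set kept in Redis."""

    def __init__(self):
        self.queue = deque()
        self.seen = set()

    def push(self, url):
        """Enqueue the URL unless it was seen before; return True if enqueued."""
        if url in self.seen:
            return False
        self.seen.add(url)
        self.queue.append(url)
        return True

    def pop(self):
        """Return the next URL, or None when the queue is empty."""
        return self.queue.popleft() if self.queue else None


def run(workers, scheduler, links):
    """Let the workers take turns pulling URLs from the one shared scheduler."""
    handled = {name: [] for name in workers}
    turn = 0
    while True:
        url = scheduler.pop()
        if url is None:
            break
        worker = workers[turn % len(workers)]
        turn += 1
        handled[worker].append(url)
        # Every link the worker finds goes back into the shared queue.
        for found in links.get(url, []):
            scheduler.push(found)
    return handled


# Made-up link graph: page -> links found on that page.
links = {'p1': ['p2', 'p3'], 'p2': ['p3', 'p4'], 'p3': ['p1'], 'p4': []}
scheduler = SharedScheduler()
scheduler.push('p1')
result = run(['machine_a', 'machine_b'], scheduler, links)
print(result)
```

If each machine kept its own queue and seen set instead, both would crawl all four pages, which is the duplication that a shared scheduler avoids.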

- Implementation steps

- Create a project (the usual command works)

- Create a spider file based on CrawlSpider

fbs.py:

    # -*- coding: utf-8 -*-
    import scrapy
    from scrapy.linkextractors import LinkExtractor
    from scrapy.spiders import CrawlSpider, Rule
    from scrapy_redis.spiders import RedisCrawlSpider

    from fbsPro.items import FbsproItem


    class FbsSpider(RedisCrawlSpider):
        name = 'fbs'
        # allowed_domains = ['www.xxx.com']
        # start_urls = ['http://www.xxx.com/']
        redis_key = 'sun'

        rules = (
            Rule(LinkExtractor(allow=r'type=4&page=\d+'), callback='parse_item', follow=True),
        )

        def parse_item(self, response):
            tr_list = response.xpath('//*[@id="morelist"]/div/table[2]//tr/td/table//tr')
            for tr in tr_list:
                new_num = tr.xpath('./td[1]/text()').extract_first()
                new_title = tr.xpath('./td[2]/a[2]/@title').extract_first()

                item = FbsproItem()
                item['title'] = new_title
                item['new_num'] = new_num

                yield item

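- Changes made to the spider file fbs.py:

- Import: from scrapy_redis.spiders import RedisCrawlSpider

- Comment out start_urls and allowed_domains

- Add a new attribute: redis_key = 'sun', the name of the shared scheduler queue

- Write the data parsing code

- Change the parent class of the spider to RedisCrawlSpider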

items.py:

    # -*- coding: utf-8 -*-

    # Define here the models for your scraped items
    #
    # See documentation in:
    # https://doc.scrapy.org/en/latest/topics/items.html

    import scrapy


    class FbsproItem(scrapy.Item):
        # define the fields for your item here like:
        title = scrapy.Field()
        new_num = scrapy.Field()
        # pass

settings.py:

    # -*- coding: utf-8 -*-

    # Scrapy settings for fbsPro project
    #
    # For simplicity, this file contains only settings considered important or
    # commonly used. You can find more settings consulting the documentation:
    #
    #     https://doc.scrapy.org/en/latest/topics/settings.html
    #     https://doc.scrapy.org/en/latest/topics/downloader-middleware.html
    #     https://doc.scrapy.org/en/latest/topics/spider-middleware.html

    BOT_NAME = 'fbsPro'

    SPIDER_MODULES = ['fbsPro.spiders']
    NEWSPIDER_MODULE = 'fbsPro.spiders'

    # Crawl responsibly by identifying yourself (and your website) on the user-agent
    #USER_AGENT = 'fbsPro (+http://www.yourdomain.com)'

    # Obey robots.txt rules
    ROBOTSTXT_OBEY = False
    USER_AGENT = 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_12_0) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/74.0.3729.169 Safari/537.36'

    # Configure maximum concurrent requests performed by Scrapy (default: 16)
    #CONCURRENT_REQUESTS = 32

    # Configure a delay for requests for the same website (default: 0)
    # See https://doc.scrapy.org/en/latest/topics/settings.html#download-delay
    # See also autothrottle settings and docs
    #DOWNLOAD_DELAY = 3
    # The download delay setting will honor only one of:
    #CONCURRENT_REQUESTS_PER_DOMAIN = 16
    #CONCURRENT_REQUESTS_PER_IP = 16

    # Disable cookies (enabled by default)
    #COOKIES_ENABLED = False

    # Disable Telnet Console (enabled by default)
    #TELNETCONSOLE_ENABLED = False

    # Override the default request headers:
    #DEFAULT_REQUEST_HEADERS = {
    #   'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
    #   'Accept-Language': 'en',
    #}

    # Enable or disable spider middlewares
    # See https://doc.scrapy.org/en/latest/topics/spider-middleware.html
    #SPIDER_MIDDLEWARES = {
    #    'fbsPro.middlewares.FbsproSpiderMiddleware': 543,
    #}

    # Enable or disable downloader middlewares
    # See https://doc.scrapy.org/en/latest/topics/downloader-middleware.html
    #DOWNLOADER_MIDDLEWARES = {
    #    'fbsPro.middlewares.FbsproDownloaderMiddleware': 543,
    #}

    # Enable or disable extensions
    # See https://doc.scrapy.org/en/latest/topics/extensions.html
    #EXTENSIONS = {
    #    'scrapy.extensions.telnet.TelnetConsole': None,
    #}

    # Configure item pipelines
    # See https://doc.scrapy.org/en/latest/topics/item-pipeline.html
    # ITEM_PIPELINES = {
    #    'fbsPro.pipelines.FbsproPipeline': 300,
    # }

    # Enable and configure the AutoThrottle extension (disabled by default)
    # See https://doc.scrapy.org/en/latest/topics/autothrottle.html
    #AUTOTHROTTLE_ENABLED = True
    # The initial download delay
    #AUTOTHROTTLE_START_DELAY = 5
    # The maximum download delay to be set in case of high latencies
    #AUTOTHROTTLE_MAX_DELAY = 60
    # The average number of requests Scrapy should be sending in parallel to
    # each remote server
    #AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0
    # Enable showing throttling stats for every response received:
    #AUTOTHROTTLE_DEBUG = False

    # Enable and configure HTTP caching (disabled by default)
    # See https://doc.scrapy.org/en/latest/topics/downloader-middleware.html#httpcache-middleware-settings
    #HTTPCACHE_ENABLED = True
    #HTTPCACHE_EXPIRATION_SECS = 0
    #HTTPCACHE_DIR = 'httpcache'
    #HTTPCACHE_IGNORE_HTTP_CODES = []
    #HTTPCACHE_STORAGE = 'scrapy.extensions.httpcache.FilesystemCacheStorage'

    # Specify the pipeline
    ITEM_PIPELINES = {
        'scrapy_redis.pipelines.RedisPipeline': 400
    }

    # Specify the scheduler
    # Dedup filter class: stores request fingerprints in a Redis set, so that
    # request deduplication is persistent
    DUPEFILTER_CLASS = "scrapy_redis.dupefilter.RFPDupeFilter"
    # Use the scheduler that ships with the scrapy-redis component
    SCHEDULER = "scrapy_redis.scheduler.Scheduler"
    # Whether the scheduler persists its state: when the spider finishes, should the
    # request queue and the fingerprint set in Redis be cleared? True keeps the data;
    # False clears it.
    SCHEDULER_PERSIST = True

    # Specify the redis server
    REDIS_HOST = '127.0.0.1'  # IP of the remote redis server (change this)
    REDIS_PORT = 6379

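- Modify the settings file (settings.py)

- Use the pipeline that can be shared:

      ITEM_PIPELINES = {
          'scrapy_redis.pipelines.RedisPipeline': 400
      }

- Specify the scheduler:

      # Dedup filter class: stores request fingerprints in a Redis set, so that
      # request deduplication is persistent
      DUPEFILTER_CLASS = "scrapy_redis.dupefilter.RFPDupeFilter"

      # Use the scheduler that ships with the scrapy-redis component
      SCHEDULER = "scrapy_redis.scheduler.Scheduler"

      # Whether the scheduler persists its state: when the spider finishes, should the
      # request queue and the fingerprint set in Redis be cleared? True keeps the data;
      # False clears it.
      SCHEDULER_PERSIST = True

- Specify the redis server (REDIS_HOST and REDIS_PORT, as set at the end of settings.py above)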

- redis相关操作配置:

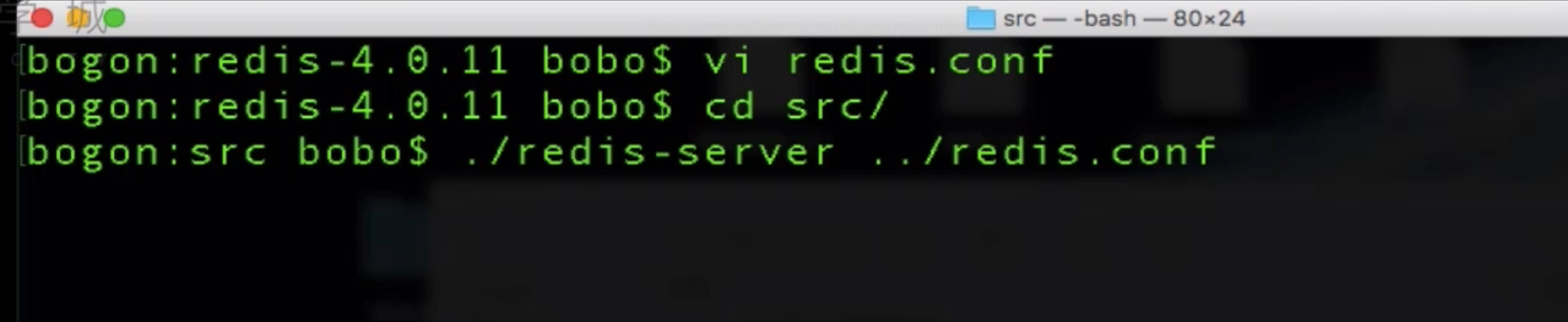

- 配置redis的配置文件:

- linux或者mac:redis.conf

- windows:redis.windows.conf

- 代开配置文件修改:

- 将bind 127.0.0.1进行删除

- 关闭保护模式:protected-mode yes改为no

- 结合着配置文件开启redis服务

- redis-server 配置文件

- 启动客户端:

- redis-cli

- 执行工程:(将项目拷贝到多台电脑 执行此命令)

- scrapy runspider xxx.py

- 向调度器的队列中放入一个起始的url:

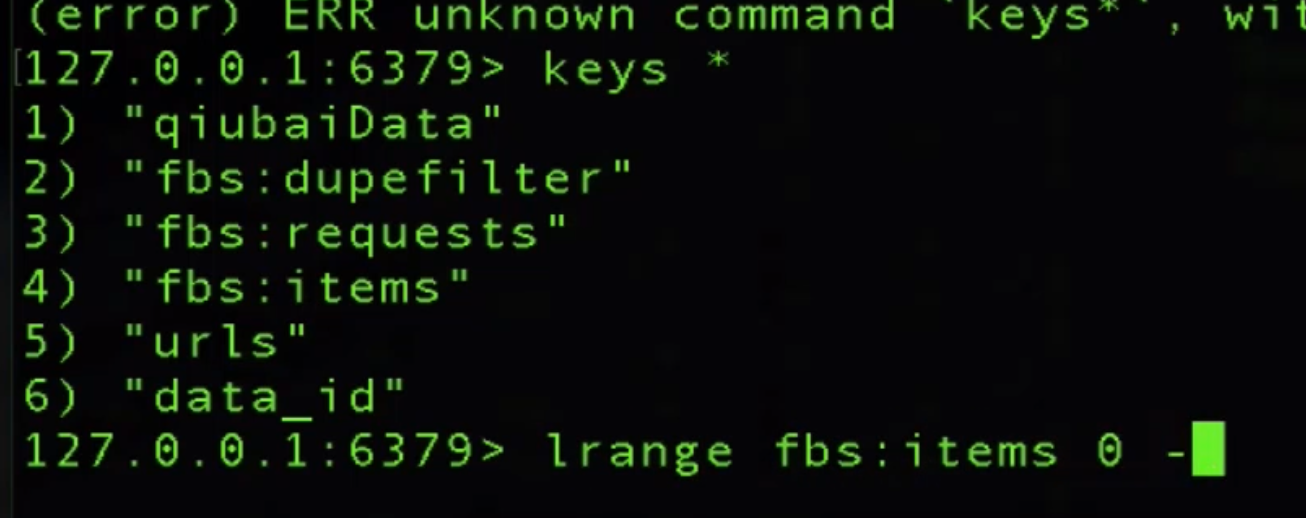

- 调度器的队列在redis的客户端中

- lpush xxx www.xxx.com

- 爬取到的数据存储在了redis的proName:items这个数据结构中
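Once the crawl has run, the stored items can be read back and decoded. The sketch below assumes RedisPipeline's default behaviour of storing each item as one JSON string in a Redis list; if a custom serializer is configured, the decoding step would differ. The raw_items list is made-up sample data standing in for what a command such as lrange on the items key might return, and decode_items is a hypothetical helper, so no Redis server is needed to run it.

```python
import json

# Made-up sample standing in for the raw list entries read back from Redis.
raw_items = [
    b'{"title": "Example complaint A", "new_num": "421001"}',
    b'{"title": "Example complaint B", "new_num": "420987"}',
]


def decode_items(raw):
    """Turn raw list entries (bytes holding JSON) into Python dicts."""
    return [json.loads(entry.decode('utf-8')) for entry in raw]


items = decode_items(raw_items)
titles_by_num = {item['new_num']: item['title'] for item in items}
print(titles_by_num)
```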

Installing scrapy-redis: pip install scrapy-redis

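Incremental crawling

- Concept: monitor a website for updates and crawl only the data that is new since the last run.

- Analysis:

- Specify a start URL

- Use CrawlSpider to collect the links to the other pages

- Use a Rule to request those page links

- From the source of each listing page, parse the URL of every movie detail page

- Core step: check whether a movie detail page URL has been requested before

- Store the detail page URLs that have already been crawled

- Keep them in a redis set

- Request each new detail page URL, then parse the movie name and description

- Persist the data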
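The core check relies on how the Redis SADD command reports its result for a single member: 1 when the member is newly added, 0 when it was already in the set. The sketch below imitates just that behaviour with an in-memory stand-in, so it runs without a Redis server. FakeRedisSet and new_urls are hypothetical names, and the URLs are invented.

```python
class FakeRedisSet:
    """In-memory stand-in that mimics SADD's return value for one member."""

    def __init__(self):
        self._sets = {}

    def sadd(self, key, member):
        members = self._sets.setdefault(key, set())
        if member in members:
            return 0  # already recorded: nothing new to crawl
        members.add(member)
        return 1  # newly recorded: this URL should be crawled


def new_urls(conn, urls):
    """Return only the URLs that were not recorded by an earlier run."""
    return [url for url in urls if conn.sadd('urls', url) == 1]


conn = FakeRedisSet()
# First run: every detail URL is new.
first_run = new_urls(conn, ['/movie/1.html', '/movie/2.html'])
# Second run: the site has added one movie since the first run.
second_run = new_urls(conn, ['/movie/1.html', '/movie/2.html', '/movie/3.html'])
print(first_run, second_run)
```

Recording and checking in a single sadd call means there is no gap between "have we seen it" and "mark it as seen", which is why movie.py can decide from the return value alone.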

movie.py:

    # -*- coding: utf-8 -*-
    import scrapy
    from scrapy.linkextractors import LinkExtractor
    from scrapy.spiders import CrawlSpider, Rule
    from redis import Redis

    from moviePro.items import MovieproItem


    class MovieSpider(CrawlSpider):
        name = 'movie'
        # allowed_domains = ['www.ccc.com']
        start_urls = ['https://www.4567tv.tv/frim/index1.html']

        rules = (
            Rule(LinkExtractor(allow=r'/frim/index1-\d+\.html'), callback='parse_item', follow=True),
        )
        # Create the redis connection object
        conn = Redis(host='127.0.0.1', port=6379)

        # Parse the movie detail page URLs from each listing page
        def parse_item(self, response):
            li_list = response.xpath('/html/body/div[1]/div/div/div/div[2]/ul/li')
            for li in li_list:
                # Build the detail page URL
                detail_url = 'https://www.4567tv.tv' + li.xpath('./div/a/@href').extract_first()
                # Add the detail page URL to a redis set;
                # sadd returns 1 only if the URL was not in the set yet
                ex = self.conn.sadd('urls', detail_url)
                if ex == 1:
                    print('This URL has not been crawled yet; crawling it now')
                    yield scrapy.Request(url=detail_url, callback=self.parse_detail)
                else:
                    print('No update yet; there is no new data to crawl!')

        # Parse the movie name and description from the detail page for persistent storage
        def parse_detail(self, response):
            item = MovieproItem()
            item['name'] = response.xpath('/html/body/div[1]/div/div/div/div[2]/h1/text()').extract_first()
            item['desc'] = response.xpath('/html/body/div[1]/div/div/div/div[2]/p[5]/span[2]//text()').extract()
            item['desc'] = ''.join(item['desc'])
            yield item

pipelines.py:

    # -*- coding: utf-8 -*-

    # Define your item pipelines here
    #
    # Don't forget to add your pipeline to the ITEM_PIPELINES setting
    # See: https://doc.scrapy.org/en/latest/topics/item-pipeline.html

    import json


    class MovieproPipeline(object):
        conn = None

        def open_spider(self, spider):
            # Reuse the redis connection created in the spider
            self.conn = spider.conn

        def process_item(self, item, spider):
            dic = {
                'name': item['name'],
                'desc': item['desc']
            }
            # print(dic)
            # redis-py 3.x and later reject dict values, so store the record as a JSON string
            self.conn.lpush('movieData', json.dumps(dic, ensure_ascii=False))
            return item
