Cats vs. Dogs with a VGG model

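    import numpy as np
    import matplotlib.pyplot as plt
    import os
    import torch
    import torch.nn as nn
    import torchvision
    from torchvision import models, transforms, datasets
    import time
    import json

    # The later cells use `device`; pick the GPU when one is available, otherwise the CPU
    device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")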

1. Download the data

! wget https://static.leiphone.com/cat_dog.rar

! unrar x cat_dog.rar

2. Data processing

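datasets is a package in torchvision for loading image data. Combined with torch.utils.data.DataLoader, it reads images from disk in parallel worker processes and feeds them to the GPU in mini-batches during training. Images must be preprocessed before a CNN can use them: each image is center-cropped to 224×224×3 and then normalized with the ImageNet per-channel mean and standard deviation.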
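To make the normalization step concrete, here is a minimal NumPy sketch of the per-channel arithmetic that transforms.Normalize performs, (x - mean) / std, together with its inverse, which is what the imshow helper later in this post undoes before plotting. The function names and the tiny 3×2×2 "image" are made up for illustration.

```python
import numpy as np

# ImageNet channel statistics, the same values passed to transforms.Normalize
MEAN = np.array([0.485, 0.456, 0.406])
STD = np.array([0.229, 0.224, 0.225])

def normalize(img_chw):
    """Apply (x - mean) / std per channel to a (3, H, W) array with values in [0, 1]."""
    return (img_chw - MEAN[:, None, None]) / STD[:, None, None]

def denormalize(img_chw):
    """Invert normalize(), recovering the original [0, 1] values."""
    return img_chw * STD[:, None, None] + MEAN[:, None, None]

# Hypothetical 3x2x2 image with every pixel set to 0.5
img = np.full((3, 2, 2), 0.5)
norm = normalize(img)
print(norm[:, 0, 0])                          # one normalized value per channel
print(np.allclose(denormalize(norm), img))    # the round trip recovers the input
```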

    normalize = transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])

    vgg_format = transforms.Compose([
        transforms.CenterCrop(224),
        transforms.ToTensor(),
        normalize,
    ])

    # Modified here: training, validation and test data live in three directories, train/val/test
    import shutil

    data_dir = './cat_dog'

    # ImageFolder expects one sub-folder per class
    os.mkdir("./cat_dog/train/cat")
    os.mkdir("./cat_dog/train/dog")
    os.mkdir("./cat_dog/val/cat")
    os.mkdir("./cat_dog/val/dog")

    for i in range(10000):
        cat_name = './cat_dog/train/cat_' + str(i) + '.jpg'
        dog_name = './cat_dog/train/dog_' + str(i) + '.jpg'
        shutil.move(cat_name, "./cat_dog/train/cat")
        shutil.move(dog_name, "./cat_dog/train/dog")

    for i in range(1000):
        cat_name = './cat_dog/val/cat_' + str(i) + '.jpg'
        dog_name = './cat_dog/val/dog_' + str(i) + '.jpg'
        shutil.move(cat_name, "./cat_dog/val/cat")
        shutil.move(dog_name, "./cat_dog/val/dog")

    # Test set for the competition questions
    test_path = "./cat_dog/test/dogs_cats"
    os.mkdir(test_path)
    # Move the test images into test_path
    for i in range(2000):
        name = './cat_dog/test/' + str(i) + '.jpg'
        shutil.move(name, test_path)

    file_list = os.listdir(test_path)
    # Zero-pad the file names so the images are read in numeric order
    for file in file_list:
        # After padding, each name is 10 characters long, extension included
        new_name = file.zfill(10)
        os.rename(test_path + '/' + file, test_path + '/' + new_name)

    # Put all image data into dsets
    dsets = {x: datasets.ImageFolder(os.path.join(data_dir, x), vgg_format)
             for x in ['train', 'val', 'test']}
    dset_sizes = {x: len(dsets[x]) for x in ['train', 'val', 'test']}
    dset_classes = dsets['train'].classes
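The zero-padding step matters because file names are strings, and strings sort character by character rather than numerically. The sketch below uses a handful of made-up file names to show the problem and how zfill(10) fixes it. It assumes the dataset reads files in sorted name order, which is why the padding keeps row k of the result file aligned with image k.

```python
# Hypothetical file names, as they might appear in the test folder
names = ["0.jpg", "1.jpg", "2.jpg", "10.jpg", "100.jpg"]

# Plain string sorting puts "10.jpg" and "100.jpg" before "2.jpg"
unpadded_order = sorted(names)
print(unpadded_order)

# Left-pad with zeros to 10 characters (extension included), as in the post
padded = [n.zfill(10) for n in names]
padded_order = sorted(padded)
print(padded_order)

# After padding, sorted order matches numeric order
ids = [int(n.split(".")[0]) for n in padded_order]
print(ids)
```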

    loader_train = torch.utils.data.DataLoader(dsets['train'], batch_size=64, shuffle=True, num_workers=6)
    loader_valid = torch.utils.data.DataLoader(dsets['val'], batch_size=5, shuffle=False, num_workers=6)
    # Add the test set
    loader_test = torch.utils.data.DataLoader(dsets['test'], batch_size=5, shuffle=False, num_workers=6)

    '''
    The validation set has 2000 images and each batch holds 5,
    so the loop below counts up to 400.
    The first batch is saved to inputs_try and labels_try for inspection.
    '''
    count = 1
    # Iterate over the validation loader so that labels_try holds real cat/dog labels
    # (the test folder has a single dummy class)
    for data in loader_valid:
        print(count, end=',')
        if count % 50 == 0:
            print()
        if count == 1:
            inputs_try, labels_try = data
        count += 1

    print(labels_try)
    print(inputs_try.shape)

    # Small helper for displaying images
    def imshow(inp, title=None):
        # Imshow for Tensor: convert (C, H, W) to (H, W, C) and undo the normalization
        inp = inp.numpy().transpose((1, 2, 0))
        mean = np.array([0.485, 0.456, 0.406])
        std = np.array([0.229, 0.224, 0.225])
        inp = np.clip(std * inp + mean, 0, 1)
        plt.imshow(inp)
        if title is not None:
            plt.title(title)
        plt.pause(0.001)  # pause a bit so that plots are updated

    # Show the 5 images of the batch saved in inputs_try, with their labels as the title
    out = torchvision.utils.make_grid(inputs_try)
    imshow(out, title=[dset_classes[x] for x in labels_try])

3. Create the VGG model

!wget https://s3.amazonaws.com/deep-learning-models/image-models/imagenet_class_index.json

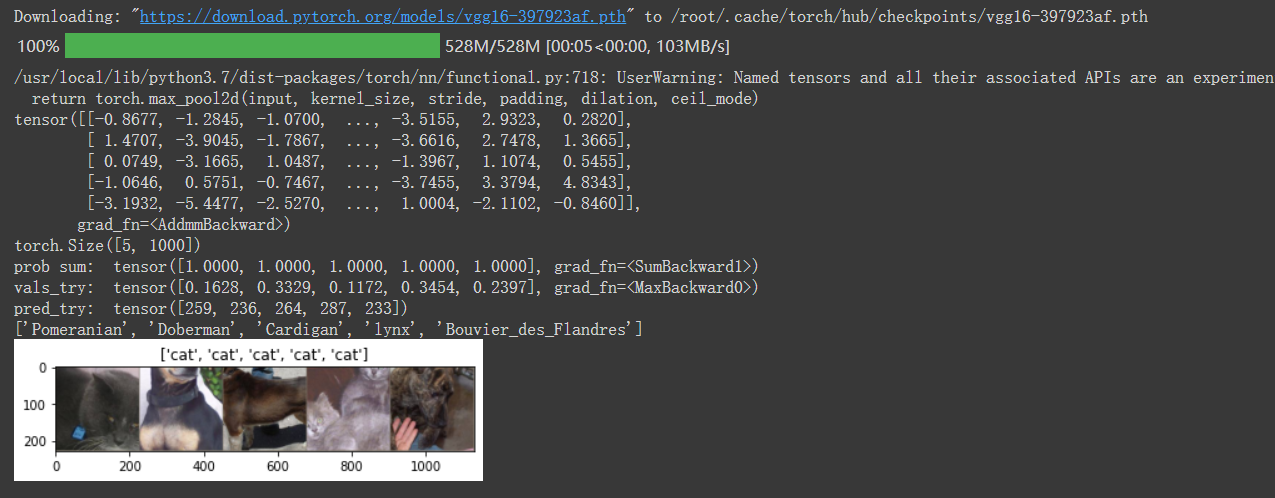

    model_vgg = models.vgg16(pretrained=True)

    with open('./imagenet_class_index.json') as f:
        class_dict = json.load(f)
    dic_imagenet = [class_dict[str(i)][1] for i in range(len(class_dict))]

    inputs_try, labels_try = inputs_try.to(device), labels_try.to(device)
    model_vgg = model_vgg.to(device)

    outputs_try = model_vgg(inputs_try)

    print(outputs_try)
    print(outputs_try.shape)

    '''
    The result has 5 rows and 1000 columns; each column is the score for one ImageNet class.
    The raw scores are hard to interpret: some are negative, some positive.
    To turn the VGG outputs into a predicted probability for each class,
    we pass them through the Softmax function.
    '''
    m_softm = nn.Softmax(dim=1)
    probs = m_softm(outputs_try)
    vals_try, pred_try = torch.max(probs, dim=1)

    print('prob sum: ', torch.sum(probs, 1))
    print('vals_try: ', vals_try)
    print('pred_try: ', pred_try)

    print([dic_imagenet[i] for i in pred_try.data])
    imshow(torchvision.utils.make_grid(inputs_try.data.cpu()),
           title=[dset_classes[x] for x in labels_try.data.cpu()])
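As a rough illustration of what the Softmax step does, the following plain-Python sketch converts a short list of raw scores into probabilities and picks the most likely class. The three scores are invented. The real VGG output has 1000 scores per image, and nn.Softmax(dim=1) applies the same formula to each row.

```python
import math

def softmax(logits):
    """Convert a list of raw scores into probabilities that sum to 1."""
    # Subtracting the maximum leaves the result unchanged but avoids overflow in exp()
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical scores for three classes, including a negative one
logits = [2.0, -1.0, 0.5]
probs = softmax(logits)

# Index of the largest probability, the analogue of torch.max(probs, dim=1)
pred = max(range(len(probs)), key=probs.__getitem__)
print(probs, sum(probs), pred)
```

Negative scores are not a problem: the exponential maps every score to a positive number, and dividing by the total makes the values sum to 1.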

4. Replace the last layer and freeze the parameters of the earlier layers

    print(model_vgg)

    model_vgg_new = model_vgg

    # Freeze every pretrained parameter
    for param in model_vgg_new.parameters():
        param.requires_grad = False
    # The new layers are created after the freeze, so their parameters remain trainable
    model_vgg_new.classifier._modules['6'] = nn.Linear(4096, 2)
    model_vgg_new.classifier._modules['7'] = torch.nn.LogSoftmax(dim=1)

    model_vgg_new = model_vgg_new.to(device)

    print(model_vgg_new.classifier)

5. Train and test the fully connected layer

There are three steps: (1) create the loss function and the optimizer; (2) train the model; (3) test the model.

    '''
    Step 1: create the loss function and the optimizer

    NLLLoss() takes a vector of log-probabilities and a target label as input.
    It does not compute the log-probabilities for us, so it suits a network
    whose last layer is log_softmax().
    '''
    criterion = nn.NLLLoss()

    # Learning rate
    lr = 0.001

    # Stochastic gradient descent, updating only the new final linear layer
    optimizer_vgg = torch.optim.SGD(model_vgg_new.classifier[6].parameters(), lr=lr)

    '''
    Step 2: train the model
    '''
    def train_model(model, dataloader, size, epochs=1, optimizer=None):
        model.train()

        for epoch in range(epochs):
            running_loss = 0.0
            running_corrects = 0
            count = 0
            for inputs, classes in dataloader:
                inputs = inputs.to(device)
                classes = classes.to(device)
                outputs = model(inputs)
                loss = criterion(outputs, classes)
                optimizer.zero_grad()
                loss.backward()
                optimizer.step()
                _, preds = torch.max(outputs.data, 1)
                # statistics
                # criterion returns the batch mean, so weight it by the batch size
                # before dividing by the dataset size below
                running_loss += loss.item() * inputs.size(0)
                running_corrects += torch.sum(preds == classes.data)
                count += len(inputs)
                print('Training: No. ', count, ' process ... total: ', size)
            epoch_loss = running_loss / size
            epoch_acc = running_corrects.item() / size
            print('Loss: {:.4f} Acc: {:.4f}'.format(epoch_loss, epoch_acc))

    # Train the model
    train_model(model_vgg_new, loader_train, size=dset_sizes['train'], epochs=1,
                optimizer=optimizer_vgg)
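To see why NLLLoss pairs with a LogSoftmax output layer, here is a small plain-Python sketch of the two pieces: log_softmax turns raw scores into log-probabilities, and the negative log-likelihood averages minus the log-probability assigned to the correct class. The helper names and the two-image batch are illustrative only and are not part of PyTorch.

```python
import math

def log_softmax(logits):
    """Log-probabilities for one row of raw scores (numerically stable)."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_sum for x in logits]

def nll_loss(log_probs_batch, targets):
    """Mean negative log-probability of the target class over a batch."""
    picked = [row[t] for row, t in zip(log_probs_batch, targets)]
    return -sum(picked) / len(picked)

# Hypothetical two-class scores (0 = cat, 1 = dog) for a batch of two images
batch_logits = [[2.0, 0.0], [0.0, 0.0]]
targets = [0, 1]

log_probs = [log_softmax(row) for row in batch_logits]
loss = nll_loss(log_probs, targets)
print(loss)
```

The first image is classified confidently and correctly, so it contributes a small loss. The second has equal scores for both classes, so it contributes log 2.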

    # Code for checking the model's accuracy on the validation set
    def test_model(model, dataloader, size):
        model.eval()
        predictions = np.zeros(size)
        all_classes = np.zeros(size)
        all_proba = np.zeros((size, 2))  # holds the LogSoftmax outputs, i.e. log-probabilities
        i = 0
        running_loss = 0.0
        running_corrects = 0
        # No gradients are needed for evaluation
        with torch.no_grad():
            for inputs, classes in dataloader:
                inputs = inputs.to(device)
                classes = classes.to(device)
                outputs = model(inputs)
                loss = criterion(outputs, classes)
                _, preds = torch.max(outputs.data, 1)
                # statistics
                # criterion returns the batch mean, so weight it by the batch size
                running_loss += loss.item() * inputs.size(0)
                running_corrects += torch.sum(preds == classes.data)
                predictions[i:i+len(classes)] = preds.to('cpu').numpy()
                all_classes[i:i+len(classes)] = classes.to('cpu').numpy()
                all_proba[i:i+len(classes), :] = outputs.data.to('cpu').numpy()
                i += len(classes)
                print('validing: No. ', i, ' process ... total: ', size)
        epoch_loss = running_loss / size
        epoch_acc = running_corrects.item() / size
        print('Loss: {:.4f} Acc: {:.4f}'.format(epoch_loss, epoch_acc))
        return predictions, all_proba, all_classes

    predictions, all_proba, all_classes = test_model(model_vgg_new, loader_valid, size=dset_sizes['val'])
    # If you are using a previously saved model (model_new, not defined in this post), run this line instead
    # predictions, all_proba, all_classes = test_model(model_new, loader_valid, size=dset_sizes['val'])

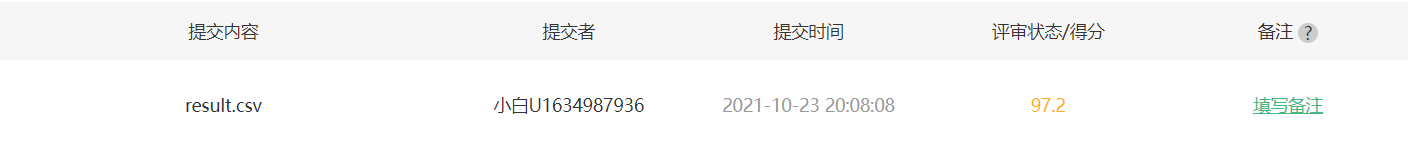
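Beyond a single accuracy number, the predictions and all_classes arrays returned by test_model can be tabulated per class. The sketch below builds a 2×2 count table with NumPy on six made-up results. confusion_counts is a hypothetical helper, and the mapping 0 = cat, 1 = dog assumes ImageFolder's alphabetical ordering of the class folders.

```python
import numpy as np

def confusion_counts(predictions, labels, n_classes=2):
    """Return a table of counts: rows are true labels, columns are predicted labels."""
    table = np.zeros((n_classes, n_classes), dtype=int)
    for t, p in zip(labels.astype(int), predictions.astype(int)):
        table[t, p] += 1
    return table

# Hypothetical results for six validation images (0 = cat, 1 = dog)
predictions = np.array([0., 0., 1., 1., 1., 0.])
all_classes = np.array([0., 0., 0., 1., 1., 1.])

table = confusion_counts(predictions, all_classes)
accuracy = np.trace(table) / table.sum()   # correct predictions lie on the diagonal
print(table)
print(accuracy)
```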

    # Code for predicting on the test set
    def result_model(model, dataloader, size):
        model.eval()
        # Column 0 holds the image index, column 1 the predicted class
        predictions = np.zeros((size, 2), dtype='int')
        i = 0
        with torch.no_grad():
            for inputs, classes in dataloader:
                inputs = inputs.to(device)
                outputs = model(inputs)
                # _ is the maximum value in each row, preds is its index (the predicted class)
                _, preds = torch.max(outputs.data, 1)
                predictions[i:i+len(classes), 1] = preds.to('cpu').numpy()
                predictions[i:i+len(classes), 0] = np.arange(i, i + len(classes))
                # Print partial results while running
                print(predictions[i:i+len(classes), :])
                i += len(classes)
                print('creating: No. ', i, ' process ... total: ', size)
        return predictions

    result = result_model(model_vgg_new, loader_test, size=dset_sizes['test'])
    # If you are using a previously saved model (model_new, not defined in this post), run this line instead
    # result = result_model(model_new, loader_test, size=dset_sizes['test'])

    # Write the result file; upload it to AI研习社 to see the accuracy
    np.savetxt("./cat_dog/result.csv", result, fmt="%d", delimiter=",")

6. Visualize the model's predictions (qualitative analysis)

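Qualitative analysis means displaying the predictions next to the corresponding images and inspecting them. There are five common ways to do this:

A random sample of correctly predicted images

A random sample of incorrectly predicted images

Correctly predicted images with a high probability

Incorrectly predicted images with a high probability

The most uncertain images, for example those with a predicted probability close to 0.5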

    # Number of images shown per visualization
    n_view = 8
    correct = np.where(predictions == all_classes)[0]
    from numpy.random import permutation
    idx = permutation(correct)[:n_view]
    print('random correct idx: ', idx)
    # The validation split is stored under the key 'val'
    loader_correct = torch.utils.data.DataLoader([dsets['val'][x] for x in idx],
                                                 batch_size=n_view, shuffle=True)
    for data in loader_correct:
        inputs_cor, labels_cor = data
    # Make a grid from batch
    out = torchvision.utils.make_grid(inputs_cor)
    imshow(out, title=[l.item() for l in labels_cor])
    print(all_classes)
    # The same approach can be used to show misclassified images; the code is not repeated here
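The remaining viewing modes come down to choosing different index sets from the same three arrays. The following NumPy sketch is one possible way to do that. pick_indices is a hypothetical helper, and it assumes all_proba holds the LogSoftmax outputs stored by test_model, so np.exp is applied to recover probabilities. The four-image input is invented.

```python
import numpy as np

def pick_indices(predictions, labels, log_probs, n_view=8):
    """Return index arrays for the viewing modes other than 'random correct'."""
    probs = np.exp(log_probs)          # LogSoftmax outputs -> probabilities
    confidence = probs.max(axis=1)     # probability of the predicted class
    correct = np.where(predictions == labels)[0]
    wrong = np.where(predictions != labels)[0]
    return {
        "random_wrong": np.random.permutation(wrong)[:n_view],
        # Sort each group by descending confidence
        "confident_correct": correct[np.argsort(-confidence[correct])][:n_view],
        "confident_wrong": wrong[np.argsort(-confidence[wrong])][:n_view],
        # With two classes, the least confident predictions are the ones closest to 0.5
        "most_uncertain": np.argsort(confidence)[:n_view],
    }

# Hypothetical outputs for four images (0 = cat, 1 = dog)
predictions = np.array([0, 1, 1, 0])
all_classes = np.array([0, 1, 0, 0])
all_proba = np.log(np.array([[0.9, 0.1], [0.2, 0.8], [0.45, 0.55], [0.6, 0.4]]))

picked = pick_indices(predictions, all_classes, all_proba, n_view=2)
for name, idx in picked.items():
    print(name, idx)
```

Each index array can then be fed to the same DataLoader and imshow code used for the correctly classified sample.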
