Soft Exponential Activation Function

Full text: https://ieeexplore.ieee.org/document/7526959


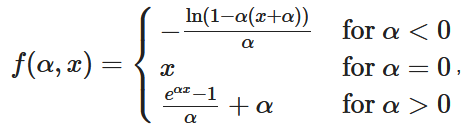

A Soft Exponential Activation Function is a parametric neuron activation function that continuously interpolates between the logarithm, identity, and exponential functions.

- Context:

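- It is usually defined as

- $f(\alpha, x) = \begin{cases} -\dfrac{\ln\left(1 - \alpha (x + \alpha)\right)}{\alpha} & \text{for } \alpha < 0 \\ x & \text{for } \alpha = 0 \\ \dfrac{e^{\alpha x} - 1}{\alpha} + \alpha & \text{for } \alpha > 0 \end{cases}$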

- It can (typically) be used in Radial Basis Function Neural Networks and Fourier Neural Networks.
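A minimal Python sketch of the piecewise definition in the Context list, assuming a single scalar α passed in explicitly (in the paper α is a trainable parameter; no training is shown here). The name `soft_exponential` is hypothetical and not taken from any library.

```python
import math


def soft_exponential(alpha: float, x: float) -> float:
    """Soft exponential activation f(alpha, x) (hypothetical helper name).

    alpha < 0:  logarithmic branch
    alpha == 0: identity
    alpha > 0:  exponential branch
    """
    if alpha < 0:
        # Only defined where 1 - alpha * (x + alpha) > 0;
        # math.log raises ValueError outside that domain.
        return -math.log(1.0 - alpha * (x + alpha)) / alpha
    if alpha == 0:
        return x
    return (math.exp(alpha * x) - 1.0) / alpha + alpha


if __name__ == "__main__":
    # alpha = -1, 0 and 1 recover ln(x), x and exp(x) respectively.
    for a in (-1.0, 0.0, 1.0):
        print(f"alpha={a:+.0f}: f(alpha, 2.0) = {soft_exponential(a, 2.0):.6f}")
```

Setting α = -1, 0, and 1 reproduces ln x, x, and e^x (up to floating-point rounding), which is the sense in which the function interpolates between the three; values of α near 0 on either side approach the identity, so the branches join continuously.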

- Example(s):

- …

- Counter-Example(s):

- a Softmax-based Activation Function,

- a Rectified-based Activation Function,

- a Heaviside Step Activation Function,

- a Ramp Function-based Activation Function,

- a Logistic Sigmoid-based Activation Function,

- a Hyperbolic Tangent-based Activation Function,

- a Gaussian-based Activation Function,

- a Softsign Activation Function,

- a Softshrink Activation Function,

- an Adaptive Piecewise Linear Activation Function,

- a Maxout Activation Function,

- a Long Short-Term Memory Unit-based Activation Function,

- a Bent Identity Activation Function,

- an Inverse Square Root Unit-based Activation Function,

- a Sinusoid-based Activation Function.

- See: Artificial Neural Network, Artificial Neuron, Neural Network Topology, Neural Network Layer, Neural Network Learning Rate.

References

2018

- (Wikipedia, 2018) ⇒ https://en.wikipedia.org/wiki/Activation_function#Comparison_of_activation_functions Retrieved: 2018-02-18.

- The following table compares the properties of several activation functions that are functions of one fold from the previous layer or layers:

Here, H is the Heaviside step function.

α is a stochastic variable sampled from a uniform distribution at training time and fixed to the expectation value of the distribution at test time.

2015

- (Godfrey & Gashler, 2015) ⇒ Godfrey, L. B., & Gashler, M. S. (2015, November). A continuum among logarithmic, linear, and exponential functions, and its potential to improve generalization in neural networks. In Knowledge Discovery, Knowledge Engineering and Knowledge Management (IC3K), 2015 7th International Joint Conference on (Vol. 1, pp. 481-486). IEEE, arXiv:1602.01321.

- ABSTRACT: We present the soft exponential activation function for artificial neural networks that continuously interpolates between logarithmic, linear, and exponential functions. This activation function is simple, differentiable, and parameterized so that it can be trained as the rest of the network is trained. We hypothesize that soft exponential has the potential to improve neural network learning, as it can exactly calculate many natural operations that typical neural networks can only approximate, including addition, multiplication, inner product, distance, polynomials, and sinusoids.

- Godfrey, Luke B.; Gashler, Michael S. (2016-02-03). "A continuum among logarithmic, linear, and exponential functions, and its potential to improve generalization in neural networks". 7th International Joint Conference on Knowledge Discovery, Knowledge Engineering and Knowledge Management: KDIR 1602: 481–486. arXiv:1602.01321. Bibcode: 2016arXiv160201321G.

- Gashler, Michael S.; Ashmore, Stephen C. (2014-05-09). "Training Deep Fourier Neural Networks To Fit Time-Series Data". arXiv:1405.2262 [cs.NE].
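The abstract's claim that soft exponential can compute multiplication exactly can be illustrated with a small hand-wired network: units with α = -1 take logarithms, a linear sum adds them, and a unit with α = 1 exponentiates the result, giving exp(ln a + ln b) = a·b. This is a sketch under stated assumptions: the weights and α values are set by hand rather than learned, both inputs must be positive, and the wiring is an illustration, not necessarily the construction used in the paper. `multiply_via_soft_exponential` is a hypothetical name.

```python
import math


def soft_exponential(alpha: float, x: float) -> float:
    """Soft exponential activation f(alpha, x)."""
    if alpha < 0:
        return -math.log(1.0 - alpha * (x + alpha)) / alpha
    if alpha == 0:
        return x
    return (math.exp(alpha * x) - 1.0) / alpha + alpha


def multiply_via_soft_exponential(a: float, b: float) -> float:
    """Compute a * b for a, b > 0 with a hand-wired two-layer network (hypothetical)."""
    # Hidden layer: alpha = -1 turns each input into its natural logarithm.
    hidden = [soft_exponential(-1.0, a), soft_exponential(-1.0, b)]
    # Output unit: weights (1, 1) sum the logs, then alpha = 1 applies exp.
    weights = [1.0, 1.0]
    pre_activation = sum(w * h for w, h in zip(weights, hidden))
    return soft_exponential(1.0, pre_activation)


if __name__ == "__main__":
    print(multiply_via_soft_exponential(3.0, 4.0))
```

The printed value matches 3 × 4 up to floating-point rounding. Setting every α in the same wiring to 0 turns it into plain addition, since each unit then acts as the identity.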
