How do you handle large file downloads in Scrapy?

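Rather than requesting large files directly from the spider, hand the downloads to the media pipeline classes that Scrapy already provides; they take care of downloading and storage and are more efficient.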

Pipeline classes:

- from scrapy.pipelines.files import FilesPipeline # pipeline class dedicated to downloading files

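- from scrapy.pipelines.images import ImagesPipeline # pipeline class dedicated to downloading images (it inherits from FilesPipeline above and needs the Pillow library); for audio, video and other binary files, use FilesPipeline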

Define a class that inherits from either of the pipeline classes above, then override the following 3 methods:

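- get_media_requests(self, item, info): yields the download request(s) for the files referenced by the item

- file_path(self, request, response=None, info=None, *, item=None): returns the file name (a path relative to the storage folder) under which the download is saved

- item_completed(self, results, item, info): called once all requests for the item have finished; returns the item so that later pipelines can continue processing it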
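The results argument of item_completed is a list of (success, info) tuples, one per request yielded by get_media_requests. On success, info is a dict with keys such as url, path, checksum and status; on failure it carries the error instead. As a minimal sketch of reading that list, the helper below collects the stored paths. The name downloaded_paths and the sample data are made up for illustration, and a plain Exception stands in for the failure object Scrapy would pass.

```python
def downloaded_paths(results):
    """Return the storage paths of the files that downloaded successfully."""
    return [info["path"] for ok, info in results if ok]


# Hypothetical sample shaped like the `results` argument of item_completed.
sample_results = [
    (True, {"url": "http://example.com/a.jpg", "path": "a.jpg",
            "checksum": "abc123", "status": "downloaded"}),
    (False, Exception("download failed")),  # stand-in for Scrapy's failure object
]

print(downloaded_paths(sample_results))  # prints ['a.jpg']
```

Inside a real pipeline, the same list comprehension could be used in item_completed to decide whether to keep or drop an item whose downloads all failed.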

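Configuration in settings.py:

- If you use the FilesPipeline class, define the variable FILES_STORE = 'folder name'

- If you use the ImagesPipeline class, define the variable IMAGES_STORE = 'folder name'

- This folder holds the downloaded files; if it has not been created beforehand, it is created automatically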
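The storage variable alone is not enough: the pipeline subclass also has to be enabled in ITEM_PIPELINES, otherwise it never runs. A minimal settings.py sketch for the FilesPipeline case might look like this. The project name myproject, the class name MyFilesPipeline and the folder ./downloads are placeholders, not names from this project.

```python
# settings.py (sketch): enable a FilesPipeline subclass and tell it where to store files.

# Hypothetical dotted path to the subclass; the number is its order among pipelines.
ITEM_PIPELINES = {
    "myproject.pipelines.MyFilesPipeline": 300,
}

# Hypothetical folder; a relative path is resolved against the directory
# from which the crawl is started.
FILES_STORE = "./downloads"
```

For an ImagesPipeline subclass the shape is the same, with IMAGES_STORE in place of FILES_STORE.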

Example:

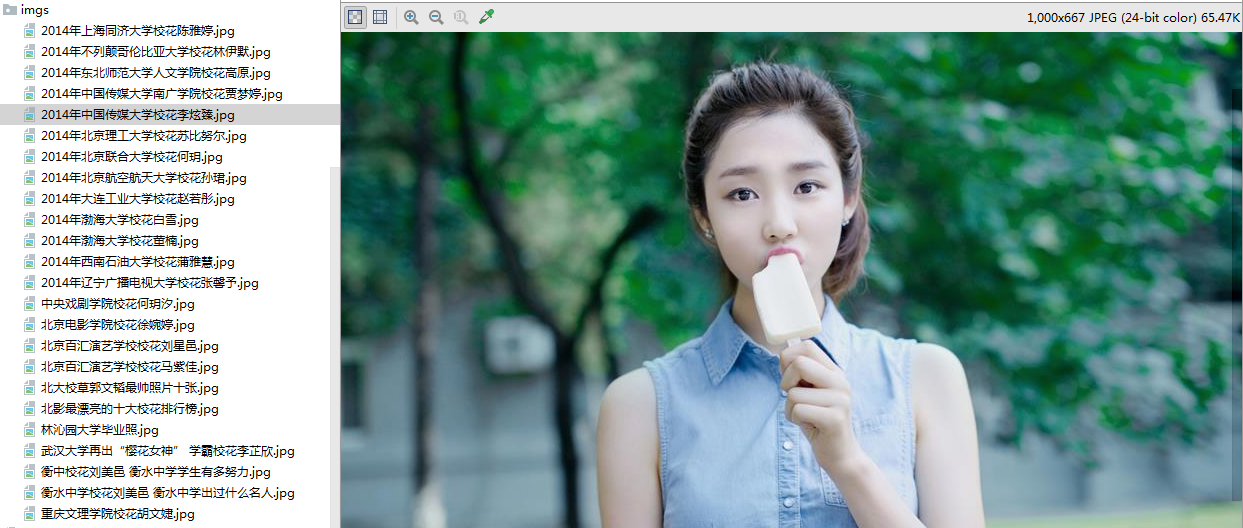

- Scrape the images on the home page of xiaohuar.com (中国大学生校花网)

- URL: http://www.xiaohuar.com/daxue/

items.py

    import scrapy


    class BigfileItem(scrapy.Item):
        name = scrapy.Field()  # image file name
        src = scrapy.Field()  # image URL

spiders/xiaohua.py

    import scrapy

    from bigFile.items import BigfileItem


    class XiaohuaSpider(scrapy.Spider):
        name = 'xiaohua'
        allowed_domains = ['www.xiaohuar.com']
        start_urls = ['http://www.xiaohuar.com/daxue/']

        def parse(self, response):
            divs = response.xpath('//div[@class="card diy-box shadow mb-5"]')
            for div in divs:
                alt = div.xpath('./a/img/@alt').extract_first()
                src = div.xpath('./a/img/@src').extract_first()
                if not alt or not src:
                    continue  # skip cards without a usable image
                item = BigfileItem()
                item['name'] = alt + '.jpg'  # image file name
                item['src'] = response.urljoin(src)  # absolute download URL
                yield item

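middlewares.py

    from fake_useragent import UserAgent


    class BigfileDownloaderMiddleware:
        # Build the generator once instead of on every request. The original
        # notes passed use_cache_server=False, an option of older
        # fake_useragent releases; restore it if your installed version needs it.
        ua = UserAgent()

        def process_request(self, request, spider):
            # Attach a random User-Agent to every request
            request.headers['User-Agent'] = self.ua.random
            return None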

pipelines.py

    from scrapy import Request
    from scrapy.pipelines.images import ImagesPipeline


    class BigfilePipeline(ImagesPipeline):
        # Send a download request for the image URL
        def get_media_requests(self, item, info):
            yield Request(item['src'], meta={'item': item})

        # Only the image file name needs to be returned
        def file_path(self, request, response=None, info=None, *, item=None):
            item = request.meta['item']
            return item['name']

        # Return the item so that later pipelines can keep processing it
        def item_completed(self, results, item, info):
            return item
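Because file_path returns item['name'] as is, and that name comes from the page's alt text, a slash or another reserved character in the text would end up in the path on disk. One possible guard, sketched under that assumption, is to clean the name first. The helper safe_filename and its default value are hypothetical and not part of Scrapy.

```python
import re


def safe_filename(name, default="unnamed.jpg"):
    """Replace characters that are unsafe in file names with underscores."""
    # Collapse path separators, reserved characters and whitespace into "_",
    # then trim leading/trailing underscores and dots (e.g. from "../").
    cleaned = re.sub(r'[\\/:*?"<>|\s]+', "_", name or "").strip("_.")
    return cleaned or default


print(safe_filename("a/b c.jpg"))  # prints a_b_c.jpg
```

In the pipeline above, file_path could then return safe_filename(item['name']) instead of the raw name. Non-ASCII characters such as Chinese text are left untouched.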

settings.py

    BOT_NAME = 'bigFile'

    SPIDER_MODULES = ['bigFile.spiders']
    NEWSPIDER_MODULE = 'bigFile.spiders'

    ROBOTSTXT_OBEY = False

    IMAGES_STORE = './imgs'  # downloaded images are stored in the imgs folder

    # Enable the downloader middleware
    DOWNLOADER_MIDDLEWARES = {
        'bigFile.middlewares.BigfileDownloaderMiddleware': 543,
    }

    # Enable the pipeline
    ITEM_PIPELINES = {
        'bigFile.pipelines.BigfilePipeline': 300,
    }

Demo of the scraping result