Lab 3: OpenFlow Protocol Analysis in Practice

I. Lab Objectives

Capture the OpenFlow message exchange with Wireshark;

Use a packet dissector to analyze and explain how OpenFlow messages are exchanged and why.

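II. Lab Environment

Download and install the Oracle VirtualBox virtualization software;

Install Ubuntu 20.04 Desktop amd64 in the virtual machine, together with a full Mininet installation.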

III. Lab Requirements

(1) Basic Requirements

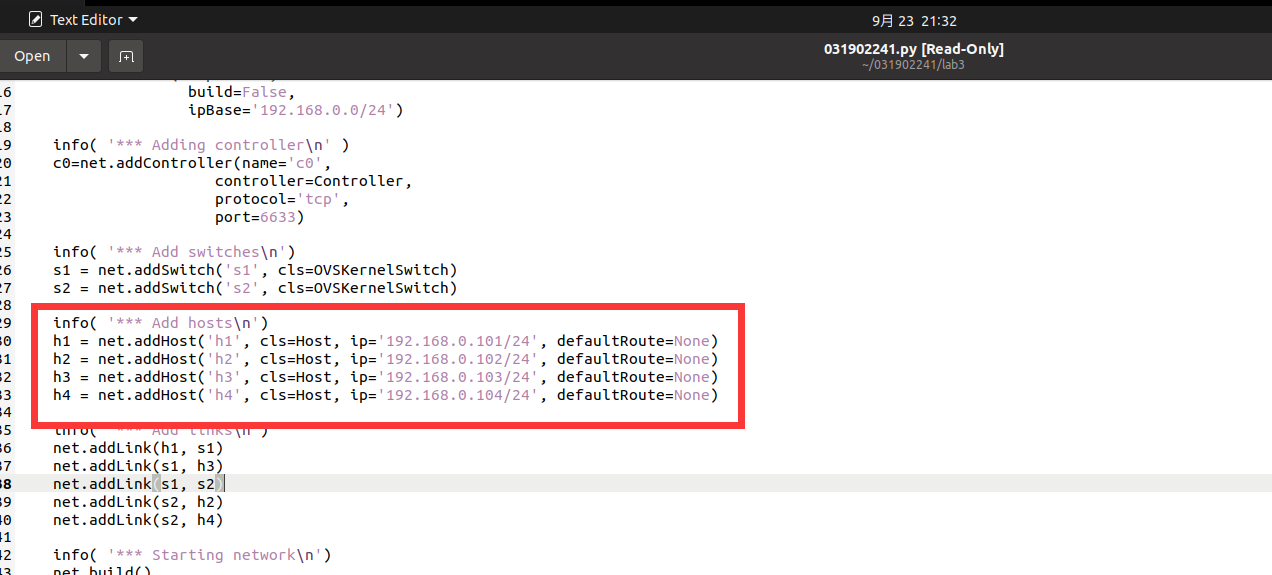

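Build the topology shown in the figure, configure the IP addresses listed in the table, and verify IP connectivity between the hosts. Use a packet capture tool to record the traffic between the controller and the switches.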

| Host | IP address |
|---|---|
| h1 | 192.168.0.101/24 |
| h2 | 192.168.0.102/24 |
| h3 | 192.168.0.103/24 |
| h4 | 192.168.0.104/24 |
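Before saving the topology it can help to confirm that the address plan is consistent. The following is a minimal sketch using only the Python standard library; the `HOSTS` dictionary and the `same_subnet` helper are illustrative names that do not appear in the lab script.

```python
import ipaddress

# Address plan copied from the table above.
HOSTS = {
    "h1": "192.168.0.101/24",
    "h2": "192.168.0.102/24",
    "h3": "192.168.0.103/24",
    "h4": "192.168.0.104/24",
}


def same_subnet(hosts):
    """Return the single network shared by all hosts, or raise ValueError."""
    networks = {ipaddress.ip_interface(addr).network for addr in hosts.values()}
    if len(networks) != 1:
        raise ValueError(f"hosts span several networks: {networks}")
    return networks.pop()


print(same_subnet(HOSTS))  # 192.168.0.0/24
```

If one host were given a different prefix or a different third octet, the helper would raise instead of returning a network, which is the situation in which pingall would be expected to fail.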

1. Configure the subnet

2. Configure the IP addresses

3. Save the topology as a Python file

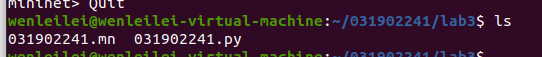

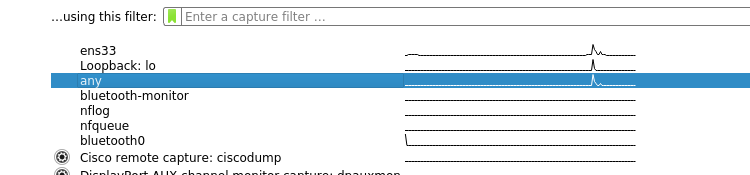

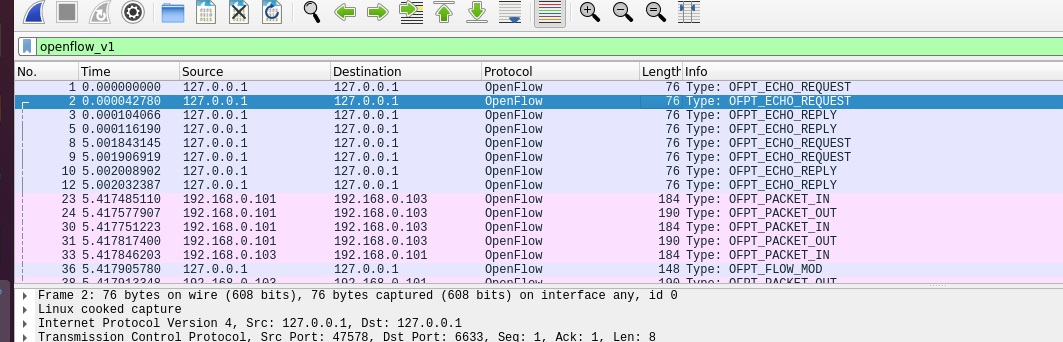

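4. Run sudo wireshark and start capturing on the any interface. Open a second terminal, run the 031902241.py file from the command line, and then run pingall.

5. Inspect the capture and analyze the OpenFlow message exchange between the switch and the controller (the screenshots follow a single switch as an example).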

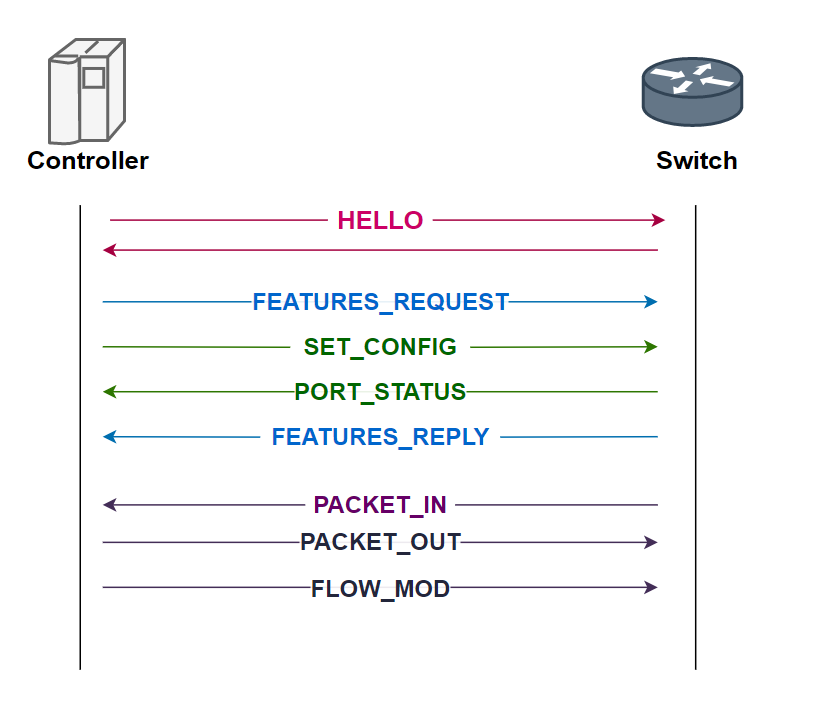

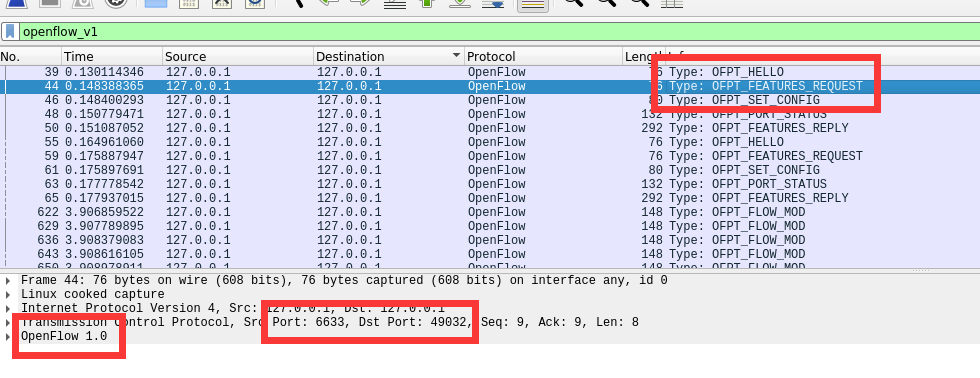

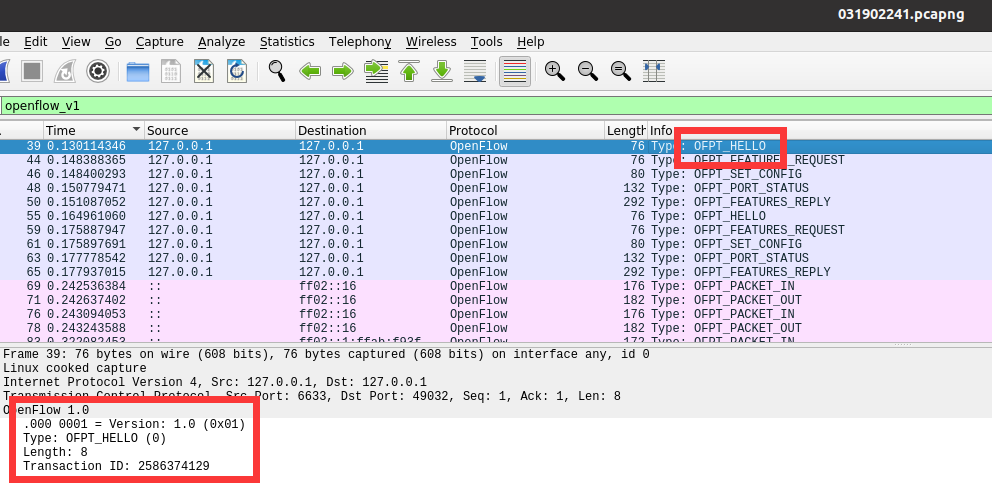

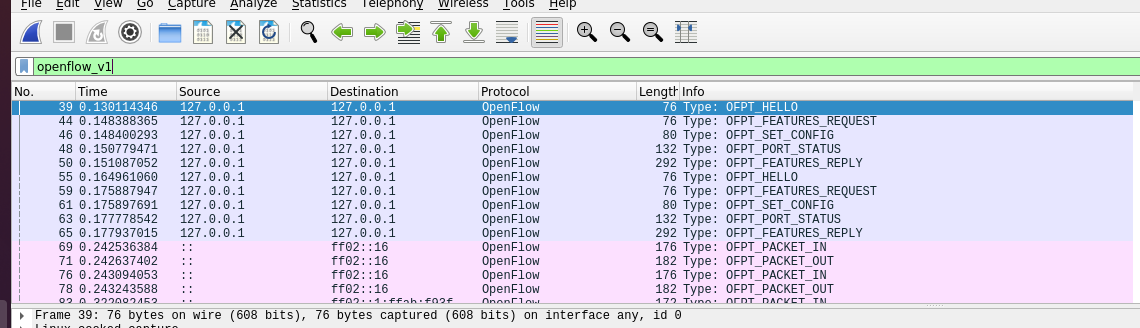

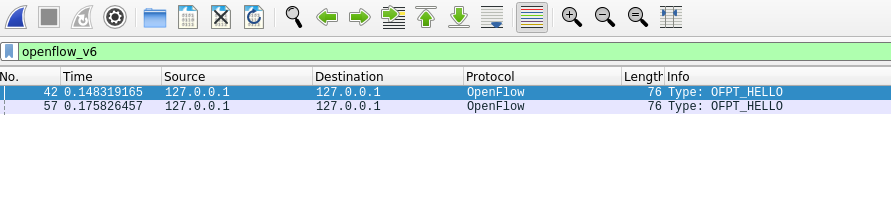

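OFPT_HELLO: source port 6633 -> destination port 49032, from the controller to the switch.

A second HELLO travels in the opposite direction, source port 49032 -> destination port 6633, from the switch to the controller; this one advertises OpenFlow 1.5.

The controller and the switch establish the connection and settle on OpenFlow 1.0, the lower of the two advertised versions.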
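The version choice can be sketched in a few lines. This assumes the basic HELLO rule in which both sides fall back to the lower of the two version bytes, and it ignores the optional version bitmap that later OpenFlow releases allow in HELLO; `negotiate` is an illustrative helper, not part of any controller.

```python
# Wire version byte (first byte of every OpenFlow header) -> release name.
WIRE_VERSIONS = {0x01: "1.0", 0x02: "1.1", 0x03: "1.2",
                 0x04: "1.3", 0x05: "1.4", 0x06: "1.5"}


def negotiate(local_version, peer_version):
    """Basic HELLO negotiation: use the lower of the two advertised versions."""
    return min(local_version, peer_version)


# Controller HELLO advertises 1.0 (0x01), switch HELLO advertises 1.5 (0x06).
agreed = negotiate(0x01, 0x06)
print(WIRE_VERSIONS[agreed])  # 1.0
```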

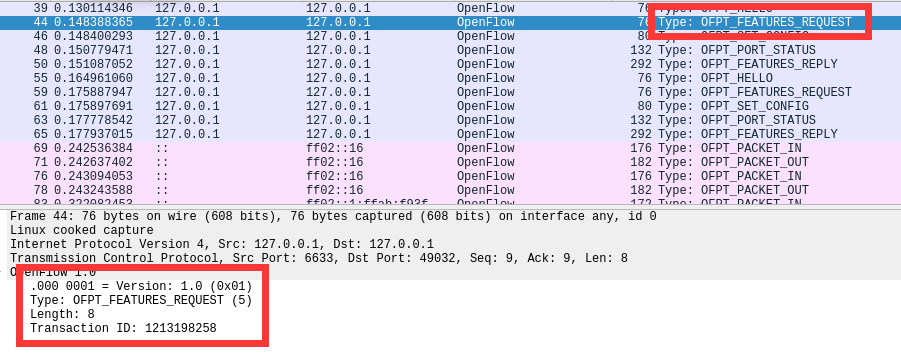

OFPT_FEATURES_REQUEST: source port 6633 -> destination port 49032, from the controller to the switch.

The controller asks the switch for its feature information.

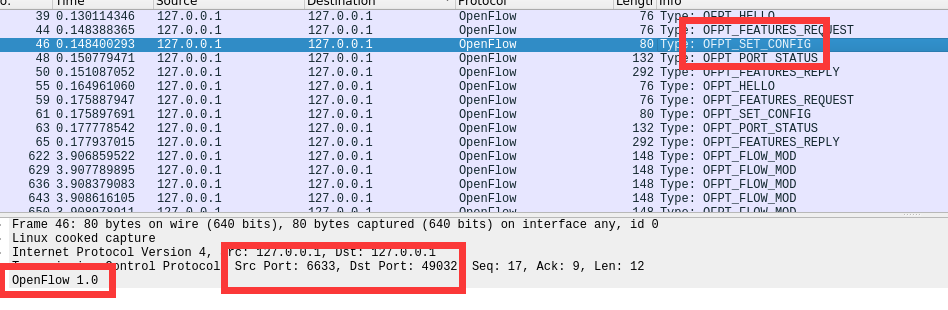

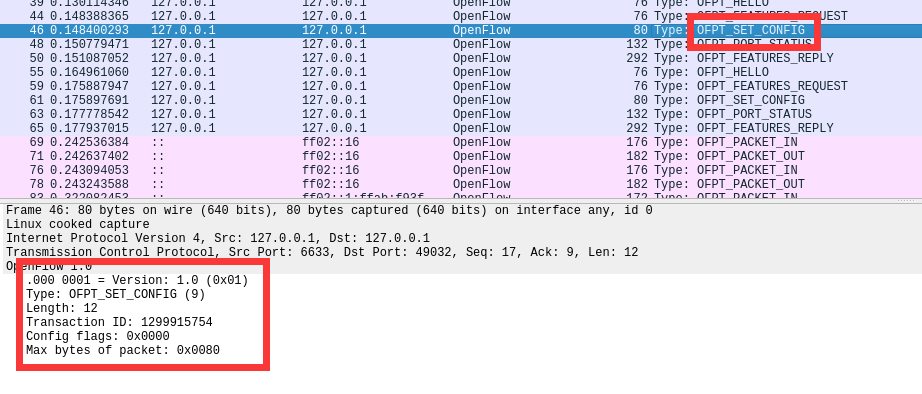

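OFPT_SET_CONFIG: source port 6633 -> destination port 49032, from the controller to the switch.

The controller instructs the switch to configure itself with the supplied parameters.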

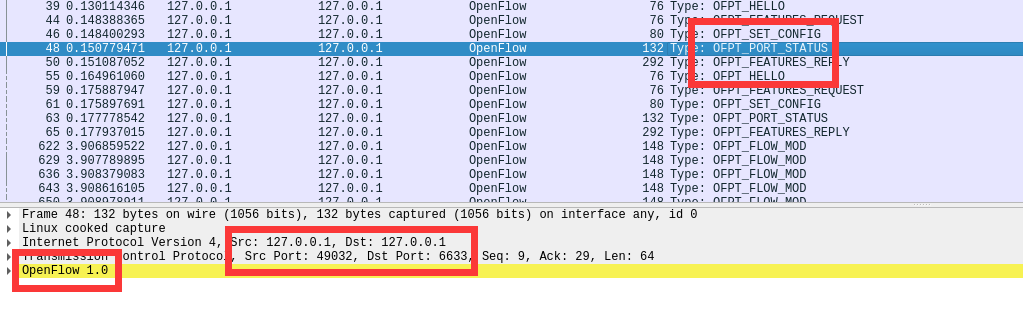

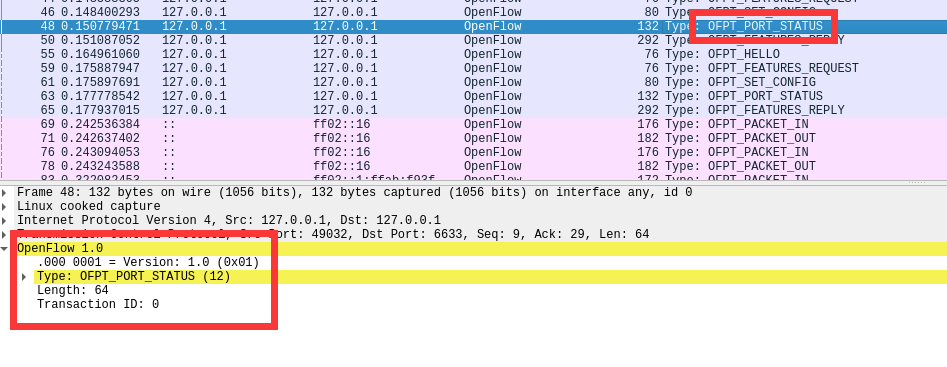

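OFPT_PORT_STATUS: source port 49032 -> destination port 6633, from the switch to the controller.

When a switch port changes, the switch reports the new port state to the controller.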

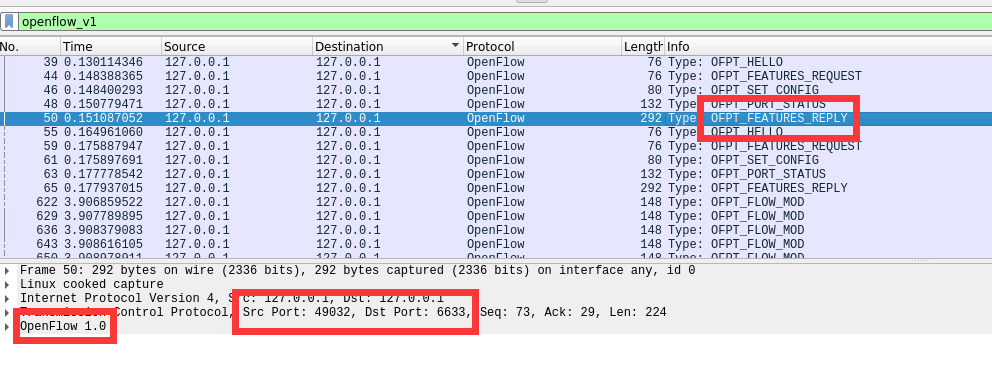

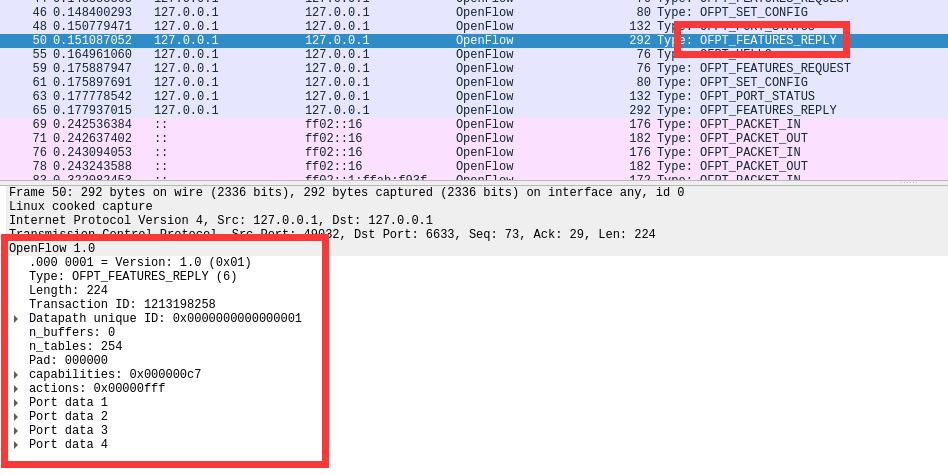

OFPT_FEATURES_REPLY: source port 49032 -> destination port 6633, from the switch to the controller.

The switch reports its feature information to the controller.

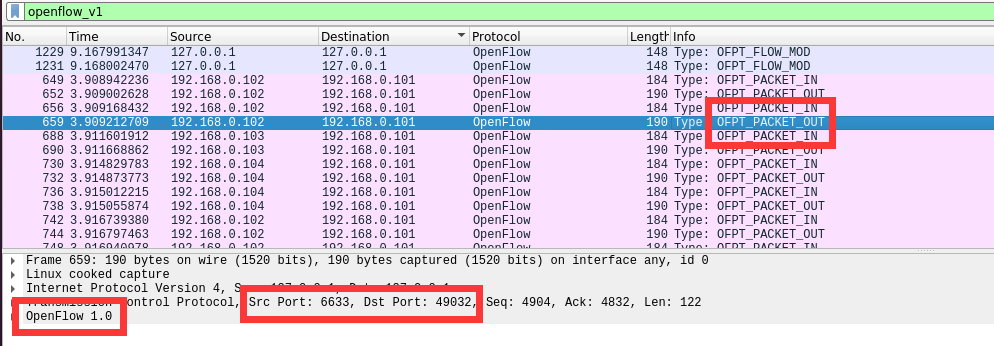

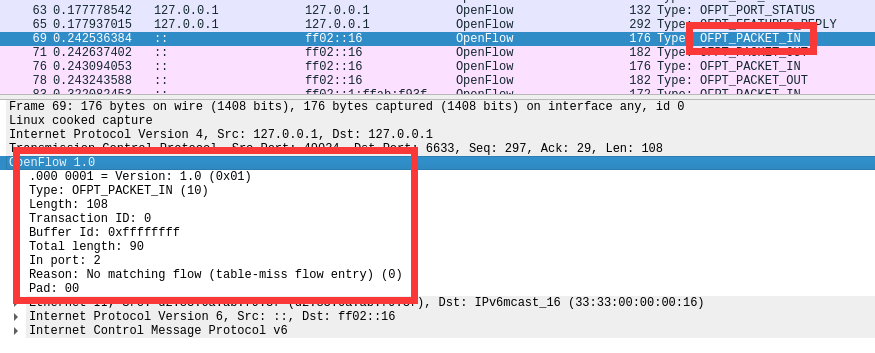

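OFPT_PACKET_IN: source port 49032 -> destination port 6633, from the switch to the controller.

The switch tells the controller that a packet has arrived and asks how to handle it.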

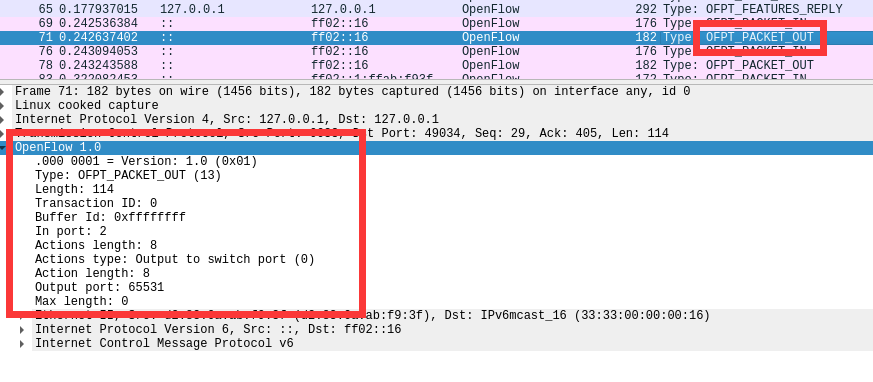

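OFPT_PACKET_OUT: source port 6633 -> destination port 49032, from the controller to the switch.

The controller tells the switch to process the packet with the supplied actions.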

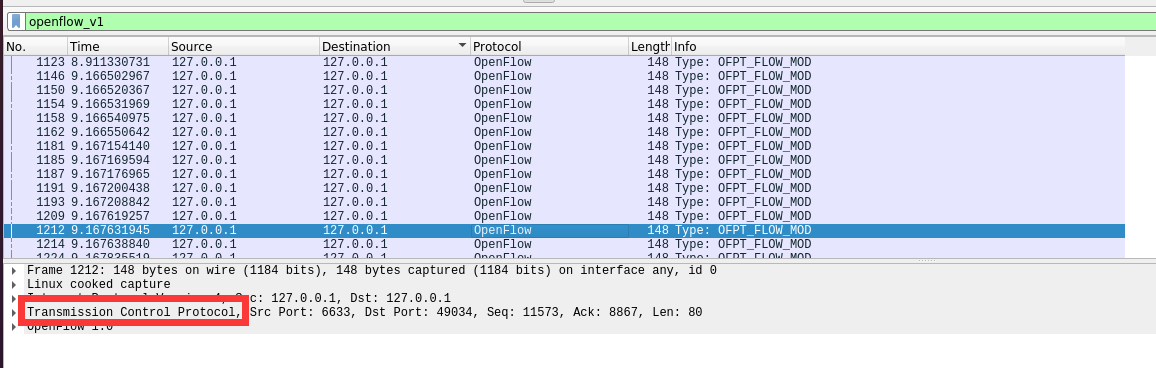

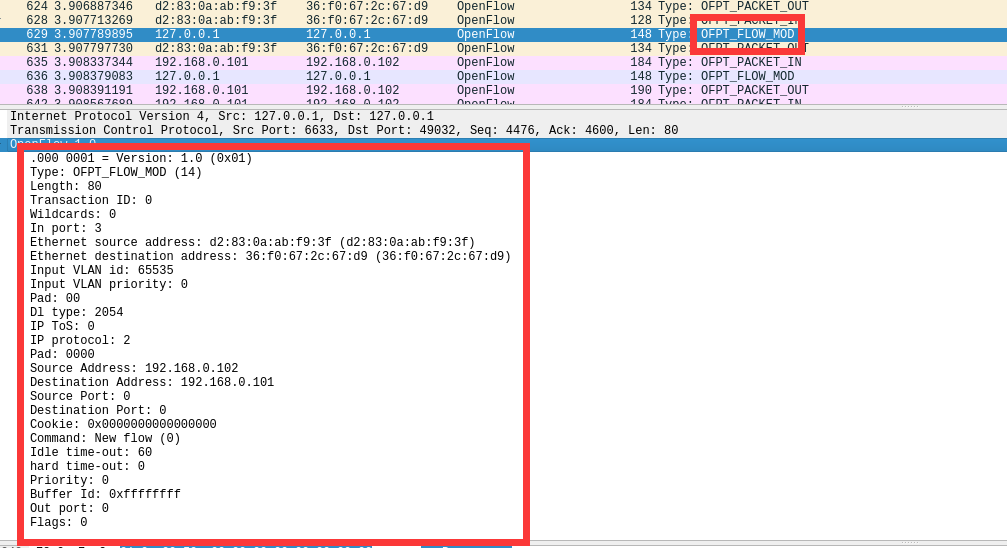

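OFPT_FLOW_MOD: source port 6633 -> destination port 49032, from the controller to the switch.

The controller adds, deletes, or modifies flow-table entries on the switch.

The three messages OFPT_PACKET_IN, OFPT_PACKET_OUT, and OFPT_FLOW_MOD recur many times between the switch and the controller.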
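The walkthrough above identifies each message by its type and infers the direction from which side uses port 6633. A minimal sketch of that bookkeeping follows, using the OpenFlow 1.0 type codes for the messages seen in this capture; `describe` is a hypothetical helper, and 49032 is simply the ephemeral port that appeared in this particular run.

```python
# OpenFlow 1.0 type codes for the messages discussed above.
OFPT_NAMES = {
    0: "OFPT_HELLO",
    5: "OFPT_FEATURES_REQUEST",
    6: "OFPT_FEATURES_REPLY",
    9: "OFPT_SET_CONFIG",
    10: "OFPT_PACKET_IN",
    12: "OFPT_PORT_STATUS",
    13: "OFPT_PACKET_OUT",
    14: "OFPT_FLOW_MOD",
}
CONTROLLER_PORT = 6633  # port the controller listens on in this lab


def describe(src_port, dst_port, msg_type):
    """Label one captured message with its name and direction."""
    if src_port == CONTROLLER_PORT:
        direction = "controller -> switch"
    elif dst_port == CONTROLLER_PORT:
        direction = "switch -> controller"
    else:
        direction = "unknown direction"
    return f"{OFPT_NAMES.get(msg_type, f'type {msg_type}')}: {direction}"


print(describe(6633, 49032, 14))  # OFPT_FLOW_MOD: controller -> switch
print(describe(49032, 6633, 10))  # OFPT_PACKET_IN: switch -> controller
```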

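6. Draw the corresponding interaction diagram or flowchart:

7. Answer the question: do the switch and the controller communicate over TCP or UDP?

As the capture shows, they use TCP (Transmission Control Protocol).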

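(2) Advanced Requirements

Compare the capture with the OpenFlow source code to understand the data structures behind the main message types. The structures are defined in the openflow.h header, located under openflow/include/openflow in the OpenFlow installation directory.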

1. HELLO

struct ofp_header {

uint8_t version; /* OFP_VERSION. */

uint8_t type; /* One of the OFPT_ constants. */

uint16_t length; /* Length including this ofp_header. */

uint32_t xid; /* Transaction id associated with this packet.

Replies use the same id as was in the request

to facilitate pairing. */

};

struct ofp_hello {

struct ofp_header header;

};

These correspond to the four fields of the HELLO message: version, type, length, and xid.
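To see how the four header fields sit on the wire, the 8-byte `ofp_header` can be decoded with Python's `struct` module. This is a minimal sketch; the sample bytes are hand-built for illustration and were not taken from the capture.

```python
import struct

# struct ofp_header: version (u8), type (u8), length (u16), xid (u32),
# all in network byte order.
OFP_HEADER = struct.Struct("!BBHI")


def parse_header(data):
    """Decode the fixed 8-byte OpenFlow header at the start of data."""
    version, msg_type, length, xid = OFP_HEADER.unpack_from(data)
    return {"version": version, "type": msg_type, "length": length, "xid": xid}


# Hand-built HELLO: version 0x01, type 0 (OFPT_HELLO), length 8, xid 1.
hello = bytes.fromhex("0100000800000001")
print(parse_header(hello))
# {'version': 1, 'type': 0, 'length': 8, 'xid': 1}
```

Because HELLO and FEATURES_REQUEST carry nothing beyond this header, their length field is 8 in OpenFlow 1.0.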

2. FEATURES_REQUEST

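The message has the same format as HELLO: it consists solely of the ofp_header structure shown above.

3. SET_CONFIG

The structure that carries the switch configuration sent by the controller: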

/* Switch configuration. */

struct ofp_switch_config {

struct ofp_header header;

uint16_t flags; /* OFPC_* flags. */

uint16_t miss_send_len; /* Max bytes of new flow that datapath should

send to the controller. */

};
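As a rough illustration of the layout, the sketch below packs an `ofp_switch_config` message with Python's `struct` module. The xid, flags, and miss_send_len values are made up for the example and are not read from the capture; `build_set_config` is a hypothetical helper.

```python
import struct

# ofp_header (version, type, length, xid) followed by flags and miss_send_len.
SWITCH_CONFIG = struct.Struct("!BBHIHH")
OFPT_SET_CONFIG = 9  # OpenFlow 1.0 type code


def build_set_config(xid, flags, miss_send_len):
    """Serialize a 12-byte OpenFlow 1.0 SET_CONFIG message."""
    return SWITCH_CONFIG.pack(0x01, OFPT_SET_CONFIG, SWITCH_CONFIG.size,
                              xid, flags, miss_send_len)


msg = build_set_config(xid=2, flags=0, miss_send_len=128)
print(len(msg), msg.hex())  # 12 0109000c0000000200000080
```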

4. PORT_STATUS

/* A physical port has changed in the datapath */

struct ofp_port_status {

struct ofp_header header;

uint8_t reason; /* One of OFPPR_*. */

uint8_t pad[7]; /* Align to 64-bits. */

struct ofp_phy_port desc;

};
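The 64-byte PORT_STATUS layout (8-byte header, reason, 7 bytes of padding, then a 48-byte `ofp_phy_port`) can be decoded as sketched below. The sample message is synthetic: the MAC address and the port name `s1-eth1` are placeholders chosen for the example.

```python
import struct

PORT_STATUS = struct.Struct("!BBHIB7x")    # ofp_header + reason + pad[7]
PHY_PORT = struct.Struct("!H6s16sIIIIII")  # struct ofp_phy_port (48 bytes)
REASONS = {0: "OFPPR_ADD", 1: "OFPPR_DELETE", 2: "OFPPR_MODIFY"}


def parse_port_status(data):
    """Decode the reason and the basic port description of a PORT_STATUS."""
    _version, _type, _length, _xid, reason = PORT_STATUS.unpack_from(data)
    port_no, hw_addr, name, *_features = PHY_PORT.unpack_from(data, PORT_STATUS.size)
    return {
        "reason": REASONS.get(reason, f"unknown ({reason})"),
        "port_no": port_no,
        "hw_addr": ":".join(f"{b:02x}" for b in hw_addr),
        "name": name.split(b"\0", 1)[0].decode(),
    }


# Synthetic message: port 1 was modified (all values are placeholders).
sample = PORT_STATUS.pack(0x01, 12, PORT_STATUS.size + PHY_PORT.size, 0, 2)
sample += PHY_PORT.pack(1, bytes.fromhex("0a0000000001"), b"s1-eth1",
                        0, 0, 0, 0, 0, 0)
print(parse_port_status(sample))
```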

5. FEATURES_REPLY

struct ofp_switch_features {

struct ofp_header header;

uint64_t datapath_id; /* Datapath unique ID. The lower 48-bits are for

a MAC address, while the upper 16-bits are

implementer-defined. */

uint32_t n_buffers; /* Max packets buffered at once. */

uint8_t n_tables; /* Number of tables supported by datapath. */

uint8_t pad[3]; /* Align to 64-bits. */

/* Features. */

uint32_t capabilities; /* Bitmap of support "ofp_capabilities". */

uint32_t actions; /* Bitmap of supported "ofp_action_type"s. */

/* Port info.*/

struct ofp_phy_port ports[0]; /* Port definitions. The number of ports

is inferred from the length field in

the header. */

};

/* Description of a physical port */

struct ofp_phy_port {

uint16_t port_no;

uint8_t hw_addr[OFP_ETH_ALEN];

char name[OFP_MAX_PORT_NAME_LEN]; /* Null-terminated */

uint32_t config; /* Bitmap of OFPPC_* flags. */

uint32_t state; /* Bitmap of OFPPS_* flags. */

/* Bitmaps of OFPPF_* that describe features. All bits zeroed if

* unsupported or unavailable. */

uint32_t curr; /* Current features. */

uint32_t advertised; /* Features being advertised by the port. */

uint32_t supported; /* Features supported by the port. */

uint32_t peer; /* Features advertised by peer. */

};

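The fields map one-to-one onto the captured message shown in the screenshots, including the description of each physical port on the switch.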
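Since `ports[0]` is a variable-length tail, the number of ports has to be derived from the header length, as the struct comment says. A minimal sketch of that arithmetic follows; the sample values (datapath ID, buffer count, capability bitmaps) are invented for illustration.

```python
import struct

# Everything in ofp_switch_features before ports[]: header, datapath_id,
# n_buffers, n_tables, pad[3], capabilities, actions (32 bytes in total).
FEATURES_FIXED = struct.Struct("!BBHIQIB3xII")
PHY_PORT_SIZE = 48  # sizeof(struct ofp_phy_port)


def parse_features_reply(data):
    """Decode the fixed part and infer the port count from the length field."""
    (_version, _type, length, _xid, datapath_id,
     n_buffers, n_tables, _caps, _actions) = FEATURES_FIXED.unpack_from(data)
    return {
        "datapath_id": f"{datapath_id:016x}",
        "n_buffers": n_buffers,
        "n_tables": n_tables,
        "n_ports": (length - FEATURES_FIXED.size) // PHY_PORT_SIZE,
    }


# Synthetic reply with two (zero-filled) port descriptions.
reply = FEATURES_FIXED.pack(0x01, 6, 32 + 2 * PHY_PORT_SIZE, 3,
                            1, 256, 254, 0xC7, 0xFFF) + bytes(2 * PHY_PORT_SIZE)
print(parse_features_reply(reply))
```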

6. PACKET_IN

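A PACKET_IN is sent in two cases:

- The switch looks up its flow table and finds no matching entry (OFPR_NO_MATCH). No packet of this kind showed up in the capture.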

enum ofp_packet_in_reason {

OFPR_NO_MATCH, /* No matching flow. */

OFPR_ACTION /* Action explicitly output to controller. */

};

- A matching entry exists and its action is OUTPUT=CONTROLLER (OFPR_ACTION), so the switch always forwards the packet to the controller.

struct ofp_packet_in {

struct ofp_header header;

uint32_t buffer_id; /* ID assigned by datapath. */

uint16_t total_len; /* Full length of frame. */

uint16_t in_port; /* Port on which frame was received. */

uint8_t reason; /* Reason packet is being sent (one of OFPR_*) */

uint8_t pad;

uint8_t data[0]; /* Ethernet frame, halfway through 32-bit word,

so the IP header is 32-bit aligned. The

amount of data is inferred from the length

field in the header. Because of padding,

offsetof(struct ofp_packet_in, data) ==

sizeof(struct ofp_packet_in) - 2. */

};
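The comment on `data[0]` notes that the Ethernet frame starts 18 bytes into the message, two bytes before the padded struct size. The sketch below decodes the fixed fields and slices out the frame accordingly; the sample message is synthetic and its frame is dummy bytes.

```python
import struct

# ofp_header + buffer_id + total_len + in_port + reason + pad = 18 bytes.
PACKET_IN = struct.Struct("!BBHIIHHBx")
REASONS = {0: "OFPR_NO_MATCH", 1: "OFPR_ACTION"}


def parse_packet_in(data):
    """Decode the fixed PACKET_IN fields and return the embedded frame."""
    (_version, _type, length, _xid,
     buffer_id, total_len, in_port, reason) = PACKET_IN.unpack_from(data)
    return {
        "buffer_id": buffer_id,
        "total_len": total_len,
        "in_port": in_port,
        "reason": REASONS.get(reason, f"unknown ({reason})"),
        "frame": data[PACKET_IN.size:length],
    }


# Synthetic PACKET_IN carrying a 14-byte dummy frame received on port 1.
frame = bytes(range(14))
msg = PACKET_IN.pack(0x01, 10, PACKET_IN.size + len(frame), 0,
                     0xFFFFFFFF, len(frame), 1, 0) + frame
info = parse_packet_in(msg)
print(info["reason"], info["in_port"], len(info["frame"]))  # OFPR_NO_MATCH 1 14
```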

7. PACKET_OUT

struct ofp_packet_out {

struct ofp_header header;

uint32_t buffer_id; /* ID assigned by datapath (-1 if none). */

uint16_t in_port; /* Packet's input port (OFPP_NONE if none). */

uint16_t actions_len; /* Size of action array in bytes. */

struct ofp_action_header actions[0]; /* Actions. */

/* uint8_t data[0]; */ /* Packet data. The length is inferred

from the length field in the header.

(Only meaningful if buffer_id == -1.) */

};
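A PACKET_OUT is the 16-byte fixed part, then the action list, then the raw frame when no switch buffer is referenced. The sketch below builds one with a single output action. It assumes the `ofp_action_output` layout from the OpenFlow 1.0 header (type, len, port, max_len, each 16 bits), which is not reproduced in this report, and `build_packet_out` is a hypothetical helper.

```python
import struct

PACKET_OUT = struct.Struct("!BBHIIHH")  # header + buffer_id + in_port + actions_len
ACTION_OUTPUT = struct.Struct("!HHHH")  # assumed ofp_action_output layout
OFPT_PACKET_OUT = 13
OFPAT_OUTPUT = 0
OFPP_FLOOD = 0xFFFB      # OpenFlow 1.0 reserved port: flood
NO_BUFFER = 0xFFFFFFFF   # buffer_id == -1, the frame travels in the message


def build_packet_out(xid, in_port, out_port, frame):
    """Serialize a PACKET_OUT that sends frame out of out_port."""
    action = ACTION_OUTPUT.pack(OFPAT_OUTPUT, ACTION_OUTPUT.size, out_port, 0)
    length = PACKET_OUT.size + len(action) + len(frame)
    fixed = PACKET_OUT.pack(0x01, OFPT_PACKET_OUT, length, xid,
                            NO_BUFFER, in_port, len(action))
    return fixed + action + frame


out = build_packet_out(xid=7, in_port=1, out_port=OFPP_FLOOD, frame=bytes(14))
print(len(out))  # 38
```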

8. FLOW_MOD

struct ofp_flow_mod {

struct ofp_header header;

struct ofp_match match; /* Fields to match */

uint64_t cookie; /* Opaque controller-issued identifier. */

/* Flow actions. */

uint16_t command; /* One of OFPFC_*. */

uint16_t idle_timeout; /* Idle time before discarding (seconds). */

uint16_t hard_timeout; /* Max time before discarding (seconds). */

uint16_t priority; /* Priority level of flow entry. */

uint32_t buffer_id; /* Buffered packet to apply to (or -1).

Not meaningful for OFPFC_DELETE*. */

uint16_t out_port; /* For OFPFC_DELETE* commands, require

matching entries to include this as an

output port. A value of OFPP_NONE

indicates no restriction. */

uint16_t flags; /* One of OFPFF_*. */

struct ofp_action_header actions[0]; /* The action length is inferred

from the length field in the

header. */

};

struct ofp_action_header {

uint16_t type; /* One of OFPAT_*. */

uint16_t len; /* Length of action, including this

header. This is the length of action,

including any padding to make it

64-bit aligned. */

uint8_t pad[4];

};
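In a FLOW_MOD the action list is again bounded only by the header length, so a parser walks it using each action's own `len` field. The sketch below assumes the 40-byte `ofp_match` of OpenFlow 1.0 (its definition is not shown in this report) and skips over it; the sample message is synthetic.

```python
import struct

HEADER = struct.Struct("!BBHI")
OFP_MATCH_SIZE = 40                          # assumed sizeof(struct ofp_match) in 1.0
FLOW_MOD_TAIL = struct.Struct("!QHHHHIHH")   # cookie .. flags (24 bytes)
ACTION_HEADER = struct.Struct("!HH")         # type, len (padding not needed here)
COMMANDS = {0: "OFPFC_ADD", 1: "OFPFC_MODIFY", 2: "OFPFC_MODIFY_STRICT",
            3: "OFPFC_DELETE", 4: "OFPFC_DELETE_STRICT"}


def parse_flow_mod(data):
    """Decode command, timeouts, priority, and the (type, len) of each action."""
    _version, _type, length, _xid = HEADER.unpack_from(data)
    offset = HEADER.size + OFP_MATCH_SIZE
    (_cookie, command, idle, hard, priority,
     _buffer_id, _out_port, _flags) = FLOW_MOD_TAIL.unpack_from(data, offset)
    offset += FLOW_MOD_TAIL.size
    actions = []
    while offset < length:
        a_type, a_len = ACTION_HEADER.unpack_from(data, offset)
        if a_len < 8:  # malformed action, stop rather than loop forever
            break
        actions.append((a_type, a_len))
        offset += a_len
    return {"command": COMMANDS.get(command, command), "idle_timeout": idle,
            "hard_timeout": hard, "priority": priority, "actions": actions}


# Synthetic ADD with a zeroed match and one 8-byte output action to port 2.
flow_mod = (HEADER.pack(0x01, 14, 80, 5) + bytes(OFP_MATCH_SIZE)
            + FLOW_MOD_TAIL.pack(0, 0, 60, 0, 32768, 0xFFFFFFFF, 0xFFFF, 0)
            + struct.pack("!HHHH", 0, 8, 2, 0))
print(parse_flow_mod(flow_mod))
```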

Lab Summary

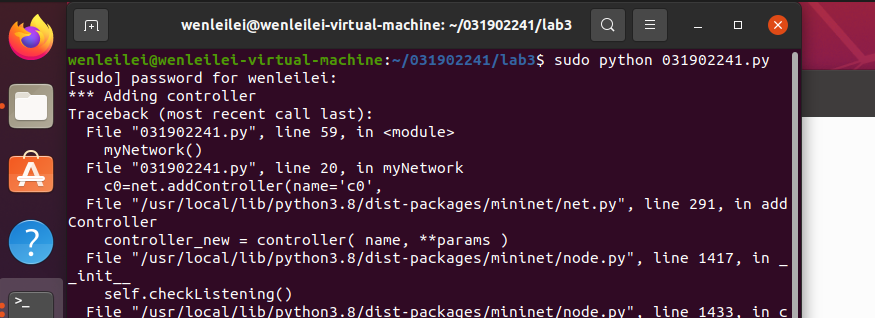

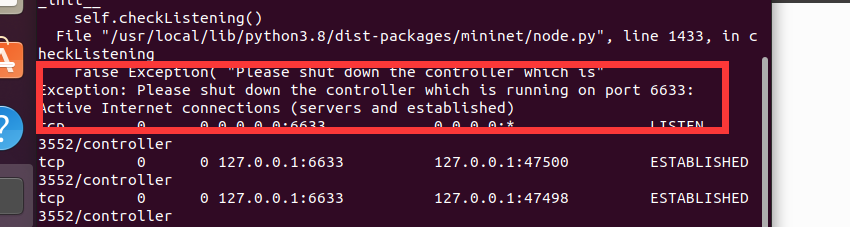

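(1) Problems Encountered

- After stopping h1 ping h2 with Ctrl-C, the Python file could not be run again.

Cause:

Mininet was exited with Ctrl-C, so the controller was not shut down cleanly and port 6633 remained occupied.

Two ways to fix it:

sudo killall ovs-controller

sudo mn -c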
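A quick way to confirm the diagnosis before rerunning the topology is to test whether anything still accepts connections on the controller port. This is a minimal sketch using the standard `socket` module; `port_in_use` is an illustrative helper, and it assumes the controller listens on the local machine.

```python
import socket


def port_in_use(port, host="127.0.0.1"):
    """Return True if something accepts TCP connections on host:port."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.settimeout(1.0)
        # connect_ex returns 0 on success instead of raising an exception.
        return sock.connect_ex((host, port)) == 0


# True here means a leftover controller is probably still bound to 6633.
print(port_in_use(6633))
```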

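- The HELLO packets could not be captured.

Wireshark must already be capturing before the Python file is run.

- The openflow_v4 display filter showed no OpenFlow 1.3 packets.

The switch turned out to speak OpenFlow 1.5; with the openflow_v6 filter, its HELLO packet can be captured.

Trying each filter in turn shows that the capture contains only OpenFlow 1.0 and OpenFlow 1.5 packets.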
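The filter names follow the wire version byte rather than the release number, which is why OpenFlow 1.5 appears under openflow_v6. The sketch below maps the version bytes seen in a capture to a combined Wireshark display filter; `filter_for` is a hypothetical helper, and the table covers only the version-specific filters I am aware of.

```python
# Wire version byte -> Wireshark display filter for that OpenFlow release.
FILTERS = {
    0x01: "openflow_v1",  # OpenFlow 1.0
    0x04: "openflow_v4",  # OpenFlow 1.3
    0x05: "openflow_v5",  # OpenFlow 1.4
    0x06: "openflow_v6",  # OpenFlow 1.5
}


def filter_for(version_bytes):
    """Build one display filter that matches every listed wire version."""
    names = [FILTERS[v] for v in sorted(set(version_bytes)) if v in FILTERS]
    return " || ".join(names)


# This capture contained only OpenFlow 1.0 and 1.5 messages.
print(filter_for([0x06, 0x01, 0x01]))  # openflow_v1 || openflow_v6
```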

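(2) Personal Reflections

In this lab we captured and analyzed packets to learn how the controller and the switch interact under the OpenFlow protocol. The hands-on part is not difficult: build the topology, set the subnet and IP addresses, and capture. Writing the report was harder because of the amount of theory involved. The exchange between the switch and the controller involves several packet types with different purposes. We captured everything with Wireshark, then applied display filters and inspected the source port, destination port, type, and relevant fields of each OpenFlow packet. For the advanced requirements, comparing the packet fields with the source code gave me a deeper understanding of the OpenFlow protocol. Organizing the report also reinforced my understanding of the whole exchange, although the many screenshots demanded patience. Every problem encountered during the lab was resolved, and I became more fluent with Mininet's command-line operations.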
