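I've been following Beautyleg, a site of high-resolution stocking and leg photography, for a long time. It felt like opening the door to a new world, and if you feel the same way, you have good taste. Honestly, one of my motivations for learning web scraping was to download the pictures from it. Hahaha!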

Here are a few of the images I crawled, to motivate everyone to get started. Hehe.

1.Beauty.py

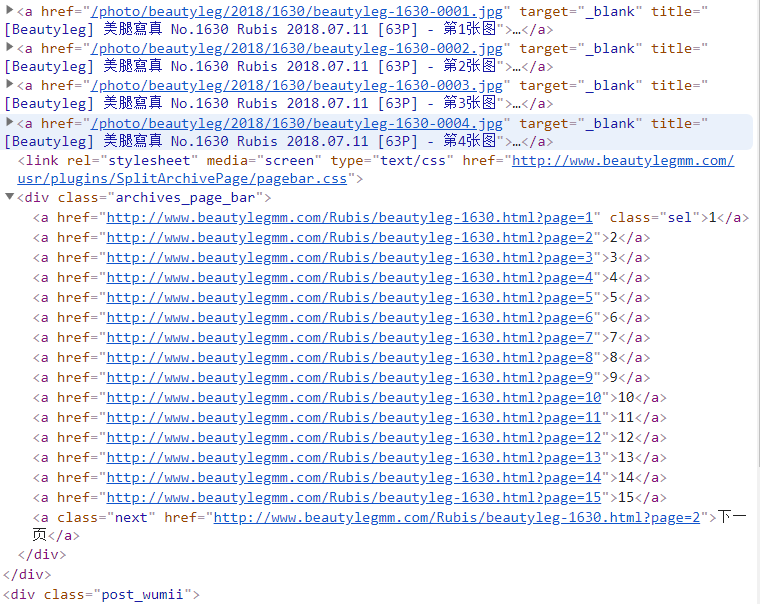

    # -*- coding: utf-8 -*-
    import scrapy
    from scrapy.linkextractors import LinkExtractor

    from beauty.items import BeautyItem


    class BeautySpider(scrapy.Spider):
        name = 'Beauty'
        allowed_domains = ['www.beautylegmm.com']
        start_urls = ['http://www.beautylegmm.com/']

        def parse(self, response):
            # Follow the link to each album on the listing page.
            le = LinkExtractor(restrict_css='div.post_weidaopic')
            for link in le.extract_links(response):
                yield scrapy.Request(link.url, callback=self.parse_url)

            # Follow the listing pagination and parse those pages the same way.
            le2 = LinkExtractor(restrict_css='ol.page-navigator')
            for link2 in le2.extract_links(response):
                yield scrapy.Request(link2.url, callback=self.parse)

        def parse_url(self, response):
            # The page has many <a href=''> tags, but only the first 4 are image
            # addresses. They carry no domain, e.g.
            # <a href="/photo/beautyleg/2018/1630/beautyleg-1630-0001.jpg">
            for href in response.css('div.post a::attr(href)')[:4]:
                # response.urljoin() turns the href into an absolute URL:
                # http://www.beautylegmm.com/photo/beautyleg/2018/1630/beautyleg-1630-0001.jpg
                photo = BeautyItem()  # one fresh item per image URL
                photo['images_url'] = response.urljoin(href.extract())
                yield photo

            # Follow the page links inside the album, e.g.
            # <a href="http://www.beautylegmm.com/Rubis/beautyleg-1630.html?page=1">
            le1 = LinkExtractor(restrict_css='div.grid_10 div.post')
            for link1 in le1.extract_links(response):
                yield scrapy.Request(link1.url, callback=self.parse_url)
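To see what the URL joining step in `parse_url` does, here is a small sketch using the standard library's `urljoin`. It assumes the page has no `<base>` tag, in which case `response.urljoin()` should resolve the href against the page URL in the same way. The two URLs are the examples from the spider's comments.

```python
from urllib.parse import urljoin

# URL of the album page the spider is currently parsing (example from the comments).
page_url = "http://www.beautylegmm.com/Rubis/beautyleg-1630.html?page=1"

# The href found on the page has no scheme or domain.
href = "/photo/beautyleg/2018/1630/beautyleg-1630-0001.jpg"

# Resolve the site-relative href against the page URL.
full_url = urljoin(page_url, href)
print(full_url)
# http://www.beautylegmm.com/photo/beautyleg/2018/1630/beautyleg-1630-0001.jpg
```

Because the href starts with `/`, the path and query of the page URL are dropped and only the scheme and host are kept.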

2.items.py

    import scrapy


    class BeautyItem(scrapy.Item):
        images_url = scrapy.Field()  # absolute URL of one image
        images = scrapy.Field()      # reserved for information about the downloaded images

3.pipelines.py

    import scrapy
    from scrapy.exceptions import DropItem
    from scrapy.pipelines.images import ImagesPipeline


    class BeautyPipeline(ImagesPipeline):
        def get_media_requests(self, item, info):
            # Schedule a download for the image URL stored on the item.
            yield scrapy.Request(item['images_url'])

        def item_completed(self, results, item, info):
            # results is a list of (success, info) tuples, one per download.
            # Keep the storage paths of the downloads that succeeded.
            images_path = [x['path'] for ok, x in results if ok]
            if not images_path:
                raise DropItem('item contains no images')
            return item
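The filtering in `item_completed` is easier to follow with sample data. The sketch below uses a made-up `results` list shaped like what the pipeline passes in: 2-tuples of a success flag and either a dict with a `path` key or a failure object. The paths and the `Exception` stand-in are invented for illustration (Scrapy itself passes a Twisted `Failure` for failed downloads).

```python
# Hypothetical download results: one success, one failure.
results = [
    (True, {"url": "http://www.beautylegmm.com/photo/a.jpg", "path": "full/0001.jpg"}),
    (False, Exception("download failed")),  # stand-in for a Twisted Failure
]

# Same comprehension as in the pipeline: keep paths of successful downloads only.
images_path = [x["path"] for ok, x in results if ok]
print(images_path)  # ['full/0001.jpg']

# An item whose downloads all failed yields an empty list, which is the
# case where the pipeline raises DropItem.
all_failed = [(False, Exception("timeout"))]
print([x["path"] for ok, x in all_failed if ok])  # []
```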

4.settings.py

    import random

    # Pool of browser User-Agent strings. Scrapy expects USER_AGENT to be a single
    # string, so one entry is picked at random when the settings are loaded.
    # USER_AGENT_LIST is a custom name, not a built-in Scrapy setting.
    USER_AGENT_LIST = [
        "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.1 (KHTML, like Gecko) Chrome/22.0.1207.1 Safari/537.1",
        "Mozilla/5.0 (X11; CrOS i686 2268.111.0) AppleWebKit/536.11 (KHTML, like Gecko) Chrome/20.0.1132.57 Safari/536.11",
        "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.6 (KHTML, like Gecko) Chrome/20.0.1092.0 Safari/536.6",
        "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.6 (KHTML, like Gecko) Chrome/20.0.1090.0 Safari/536.6",
        "Mozilla/5.0 (Windows NT 6.2; WOW64) AppleWebKit/537.1 (KHTML, like Gecko) Chrome/19.77.34.5 Safari/537.1",
        "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/536.5 (KHTML, like Gecko) Chrome/19.0.1084.9 Safari/536.5",
        "Mozilla/5.0 (Windows NT 6.0) AppleWebKit/536.5 (KHTML, like Gecko) Chrome/19.0.1084.36 Safari/536.5",
        "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3",
        "Mozilla/5.0 (Windows NT 5.1) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3",
        "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_8_0) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3",
        "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1062.0 Safari/536.3",
        "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1062.0 Safari/536.3",
        "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3",
        "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3",
        "Mozilla/5.0 (Windows NT 6.1) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3",
        "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1061.0 Safari/536.3",
        "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/535.24 (KHTML, like Gecko) Chrome/19.0.1055.1 Safari/535.24",
        "Mozilla/5.0 (Windows NT 6.2; WOW64) AppleWebKit/535.24 (KHTML, like Gecko) Chrome/19.0.1055.1 Safari/535.24",
    ]
    USER_AGENT = random.choice(USER_AGENT_LIST)
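If you want a different User-Agent on every request rather than one per run, a downloader middleware is one way to do it. This is only a rough sketch, not something from the project above: the class name `RandomUserAgentMiddleware` and the setting name `USER_AGENT_LIST` (a list holding the strings above) are both made up for illustration.

```python
import random


class RandomUserAgentMiddleware:
    """Downloader middleware that sets a random User-Agent on each request."""

    def __init__(self, user_agents):
        self.user_agents = list(user_agents)

    @classmethod
    def from_crawler(cls, crawler):
        # USER_AGENT_LIST is a hypothetical custom setting, not a Scrapy built-in.
        return cls(crawler.settings.getlist('USER_AGENT_LIST'))

    def process_request(self, request, spider):
        # Pick a new User-Agent for this request if the pool is not empty.
        if self.user_agents:
            request.headers['User-Agent'] = random.choice(self.user_agents)
        # Returning None tells Scrapy to keep processing the request normally.
        return None
```

To try it, the class would need to be registered in `DOWNLOADER_MIDDLEWARES` in settings.py, for example under a path like `beauty.middlewares.RandomUserAgentMiddleware` (an assumed module location) with an order below 500, so that it runs before Scrapy's built-in User-Agent middleware.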

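    CONCURRENT_REQUESTS = 16  # maximum number of requests Scrapy performs concurrently

    DOWNLOAD_DELAY = 0.2  # seconds to wait between consecutive requests to the same site

    ROBOTSTXT_OBEY = False  # do not check robots.txt before crawling

    # LOAD_TRUNCATED_IMAGES is a Pillow flag, not a Scrapy setting, so it is set on
    # Pillow directly to let it open images that arrived truncated.
    from PIL import ImageFile
    ImageFile.LOAD_TRUNCATED_IMAGES = True

    COOKIES_ENABLED = False  # do not send or store cookies

    # ImagesPipeline needs a storage directory to be enabled.
    # 'images' is an assumed path; change it to wherever you want the files saved.
    IMAGES_STORE = 'images'

    ITEM_PIPELINES = {
        'beauty.pipelines.BeautyPipeline': 1,
    }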
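A quick note on what `DOWNLOAD_DELAY = 0.2` means in practice. By default Scrapy randomizes the delay (the `RANDOMIZE_DOWNLOAD_DELAY` setting), waiting between 0.5 and 1.5 times `DOWNLOAD_DELAY` between requests to the same site. The helper below is just an illustrative calculation, not part of Scrapy; the function name `delay_range` is made up.

```python
def delay_range(download_delay, randomize=True):
    """Return the (min, max) wait in seconds between requests to one site."""
    if randomize:
        # Scrapy's default: a random wait in [0.5 * delay, 1.5 * delay].
        return (0.5 * download_delay, 1.5 * download_delay)
    return (download_delay, download_delay)


low, high = delay_range(0.2)
print(f"wait between {low:.2f}s and {high:.2f}s")
# wait between 0.10s and 0.30s
```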

That's it. If you're interested and run into any problems, feel free to ask me!

Zhejiang Public Network Security Registration No. 33010602011771
