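An overview of the ext4 file system

Preface: Most Linux distributions today use ext4 or xfs as their file system. This article looks at how ext4 lays out its data on disk.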

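1. Capacity concepts

A few basic storage concepts:

sector:

1. The smallest storage unit of a disk. The command fdisk -l reports the size of one sector (usually 512 bytes).

2. The usable capacity of a mechanical hard disk (HDD) is heads * cylinders * sectors per track * sector size (512 bytes).

3. A solid-state drive (SSD) has no heads or cylinders, so its usable capacity is simply number of sectors * sector size (512 bytes).

4. These properties are fixed and cannot be modified, but they can be read with commands.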

    // 1073741824 bytes is exactly sectors * 512 bytes
    root@xxxxxx:~# fdisk -l /dev/rbd0
    Disk /dev/rbd0: 1 GiB, 1073741824 bytes, 2097152 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 4194304 bytes / 4194304 bytes
    Disklabel type: dos
    Disk identifier: 0x5510f42b

    Device       Boot Start     End Sectors  Size Id Type
    /dev/rbd0p1        8192 2097151 2088960 1020M 83 Linux
    root@xxxxxx:~#

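block:

1. The smallest storage unit of a file system such as ext4, FAT32 or XFS. The blkid command shows the file system type.

2. Each block can hold data from only one file.

3. If the block size is chosen poorly when the file system is formatted, a lot of disk space is wasted, because even a tiny file occupies a whole block.

4. The default block size is 4 KiB (4096 bytes). A block is made up of contiguous sectors.

5. The blocks that belong to one file are not necessarily contiguous.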

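inode (index node):

1. Records a file's permissions, attributes and the numbers of the blocks that hold its data.

2. Every file has exactly one inode, and every inode has its own number. An inode can be thought of simply as the file's index entry.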

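data block:

1. The word block is used in two senses. The first is the one described above: the smallest storage unit of the file system (in this sense, one block can hold several inodes). The second is the data block discussed here.

2. A data block stores file contents. Every block has its own number. Ext2 supports only 1 KiB, 2 KiB and 4 KiB block sizes.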

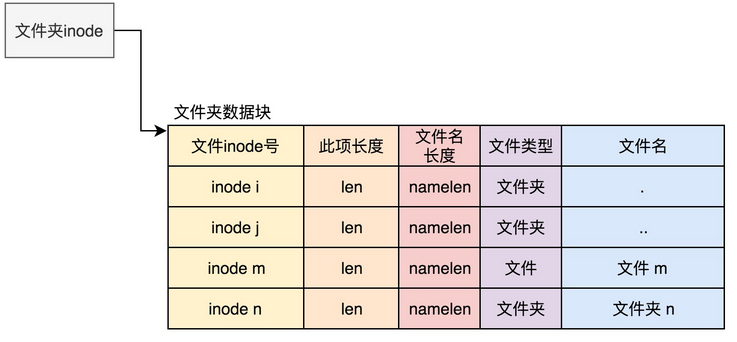

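The data block of a directory stores the names of all files and subdirectories in that directory, together with their inode numbers and related information.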

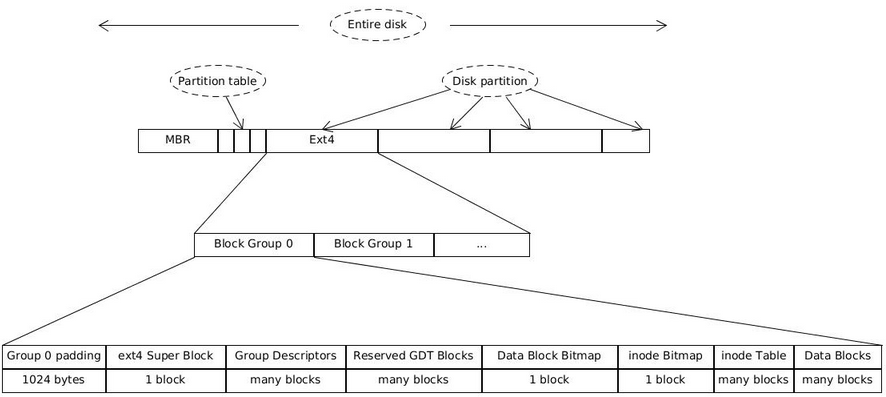

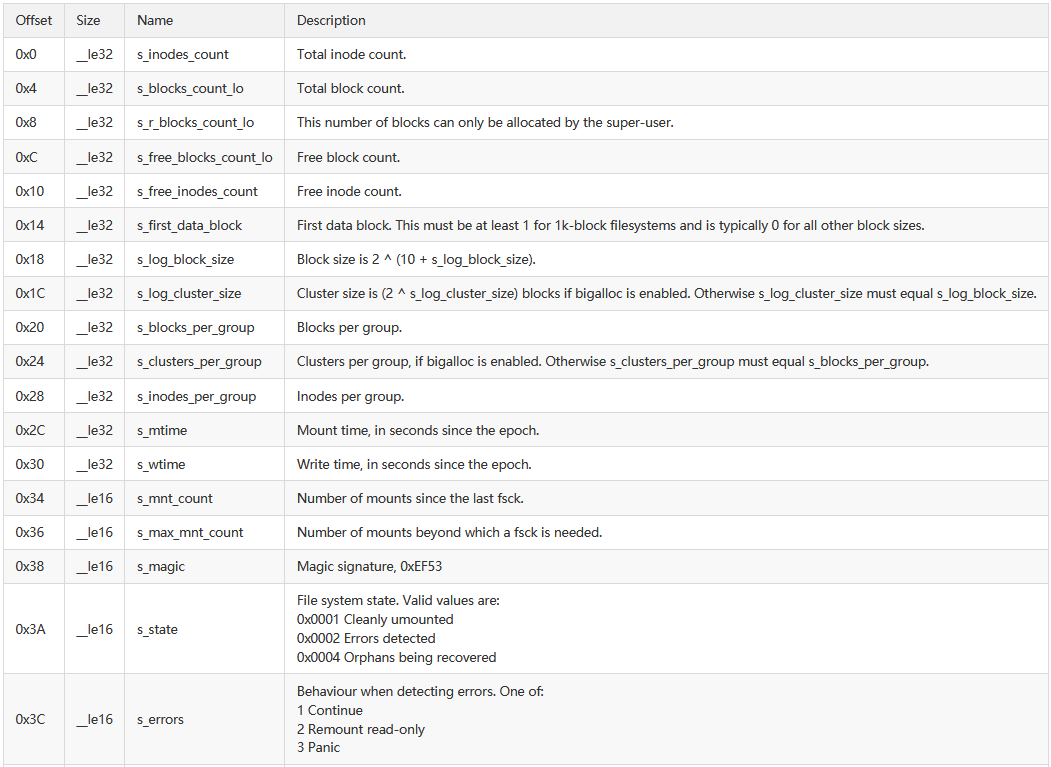

2. Ext4 on-disk layout

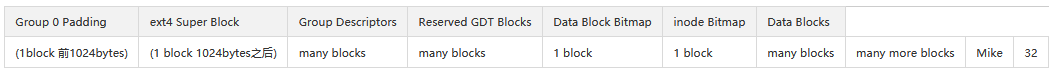

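Ext4 divides the whole partition into block groups. Each block group consists of a superblock, group descriptors, a block bitmap, an inode bitmap, an inode table and data blocks.

The 1024-byte Group 0 Padding (boot block) exists only in block group 0 and is used to load the operating system on that partition. The MBR (master boot record) boots the computer: at startup the BIOS reads and executes the MBR, whose first job is to determine the active partition (on a dual-boot machine this corresponds to choosing a boot entry; a single-system machine has nothing to choose). It then reads the active partition's boot block, which in turn loads the operating system on that partition.

Official documentation: https://ext4.wiki.kernel.org/index.php/Ext4_Disk_Layout

Figure 2 is a simplified diagram that omits the group descriptors and the reserved GDT blocks.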

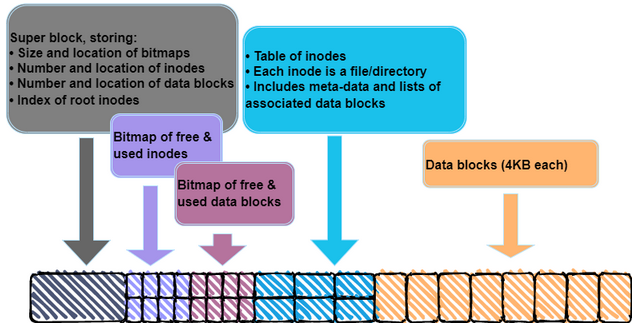

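superblock

1. Records information about the file system as a whole: the total, used and free counts of inodes and blocks, their sizes, the file system format and related information.

Note: the superblock is critical, yet a file system has only one primary superblock. For that reason, besides the first block group, later block groups may also hold backup copies, so that a damaged superblock is not an unrecoverable single point of failure.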

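block bitmap

1. A block can be used by only one file, so creating a file requires new blocks for its data. How does the file system quickly know which blocks are free and which are already in use? The block bitmap was designed for exactly this: it records the used or unused state of every block.

2. Likewise, when a file is deleted, the file system finds the entries for its blocks in the block bitmap and marks them as unused, which releases the blocks.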

inode bitmap

It follows the same design as the block bitmap, except that it records used and unused inode numbers, so it is not described again here.

group descriptor

Describes the first and last block number of each block group, and which block numbers hold that group's inode bitmap, block bitmap and inode table.

inode table

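The collection of all inodes in the group. An inode is located by using its inode number as an offset from the start of the inode table.

data block

As described in the previous section, this is where file or directory data is stored.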

2.1 Getting information about an ext4 partition

dumpe2fs prints detailed information about an ext4 partition.

    Inode count: 65280
    Block count: 261120
    Block size: 4096
    Inode size: 256
    Blocks per group: 32768
    Inodes per group: 8160

From this output, the partition has 65280 inodes and 261120 blocks, one block is 4 KiB, and each block group has 32768 blocks (4096 * 32768 bytes = 128 MiB).

One block can hold 4096 bytes (block size) / 256 bytes (inode size) = 16 inodes.

One block group has 8160 inodes.
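The arithmetic above can be checked with a few lines of Python. This is only a sketch that hard-codes the values printed by dumpe2fs for this particular partition; the variable names are illustrative and not part of any ext4 tool.

```python
# Values copied from the dumpe2fs output for /dev/rbd0p1.
block_size = 4096
inode_size = 256
blocks_per_group = 32768
inodes_per_group = 8160
block_count = 261120

inodes_per_block = block_size // inode_size                # inodes that fit in one block
inode_table_blocks = inodes_per_group // inodes_per_block  # blocks used by one inode table
group_mib = block_size * blocks_per_group // 2**20         # size of a full block group in MiB
group_count = -(-block_count // blocks_per_group)          # ceiling division

print(inodes_per_block, inode_table_blocks, group_mib, group_count)
```

The 510 inode-table blocks agree with the "Inode blocks per group: 510" line of the full dumpe2fs output, and the 8 groups agree with its Group 0 to Group 7 listing.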

    root@xxxxxx:~# dumpe2fs /dev/rbd0p1
    dumpe2fs 1.44.1 (24-Mar-2018)
    Filesystem volume name: <none>
    Last mounted on: /mnt/ceph-vol1
    Filesystem UUID: 6f074db1-8f6f-4313-851d-fa3c9b7590f8
    Filesystem magic number: 0xEF53
    Filesystem revision #: 1 (dynamic)
    Filesystem features: has_journal ext_attr resize_inode dir_index filetype needs_recovery extent 64bit flex_bg sparse_super large_file huge_file dir_nlink extra_isize metadata_csum
    Filesystem flags: signed_directory_hash
    Default mount options: user_xattr acl
    Filesystem state: clean
    Errors behavior: Continue
    Filesystem OS type: Linux
    Inode count: 65280
    Block count: 261120
    Reserved block count: 13056
    Free blocks: 247156
    Free inodes: 65267
    First block: 0
    Block size: 4096
    Fragment size: 4096
    Group descriptor size: 64
    Reserved GDT blocks: 127
    Blocks per group: 32768
    Fragments per group: 32768
    Inodes per group: 8160
    Inode blocks per group: 510
    RAID stride: 1024
    RAID stripe width: 1024
    Flex block group size: 16
    Filesystem created: Wed Oct 14 17:42:24 2020
    Last mount time: Thu Oct 15 17:35:48 2020
    Last write time: Thu Oct 15 17:35:48 2020
    Mount count: 2
    Maximum mount count: -1
    Last checked: Wed Oct 14 17:42:24 2020
    Check interval: 0 (<none>)
    Lifetime writes: 153 MB
    Reserved blocks uid: 0 (user root)
    Reserved blocks gid: 0 (group root)
    First inode: 11
    Inode size: 256
    Required extra isize: 32
    Desired extra isize: 32
    Journal inode: 8
    Default directory hash: half_md4
    Directory Hash Seed: 6a8799b4-6528-499d-9f62-fde45cb8991a
    Journal backup: inode blocks
    Checksum type: crc32c
    Checksum: 0x1ab86e25
    Journal features: journal_64bit journal_checksum_v3
    Journal size: 16M
    Journal length: 4096
    Journal sequence: 0x00000010
    Journal start: 1
    Journal checksum type: crc32c
    Journal checksum: 0xdc3ea2cb

    Group 0: (Blocks 0-32767) csum 0xe2fe [ITABLE_ZEROED]
      Primary superblock at 0, Group descriptors at 1-1
      Reserved GDT blocks at 2-128
      Block bitmap at 129 (+129), csum 0x06292657
      Inode bitmap at 137 (+137), csum 0xc6f39952
      Inode table at 145-654 (+145)
      28533 free blocks, 8143 free inodes, 6 directories, 8143 unused inodes
      Free blocks: 4235-32767
      Free inodes: 18-8160
    Group 1: (Blocks 32768-65535) csum 0x4d02 [INODE_UNINIT, ITABLE_ZEROED]
      Backup superblock at 32768, Group descriptors at 32769-32769
      Reserved GDT blocks at 32770-32896
      Block bitmap at 130 (bg #0 + 130), csum 0xfa6bcbac
      Inode bitmap at 138 (bg #0 + 138), csum 0x00000000
      Inode table at 655-1164 (bg #0 + 655)
      27518 free blocks, 8160 free inodes, 0 directories, 8160 unused inodes
      Free blocks: 32898-33791, 38912-65535
      Free inodes: 8161-16320
    Group 2: (Blocks 65536-98303) csum 0xba1c [INODE_UNINIT, ITABLE_ZEROED]
      Block bitmap at 131 (bg #0 + 131), csum 0x8c3dbe87
      Inode bitmap at 139 (bg #0 + 139), csum 0x00000000
      Inode table at 1165-1674 (bg #0 + 1165)
      28672 free blocks, 8160 free inodes, 0 directories, 8160 unused inodes
      Free blocks: 69632-98303
      Free inodes: 16321-24480
    Group 3: (Blocks 98304-131071) csum 0x579e [INODE_UNINIT, BLOCK_UNINIT, ITABLE_ZEROED]
      Backup superblock at 98304, Group descriptors at 98305-98305
      Reserved GDT blocks at 98306-98432
      Block bitmap at 132 (bg #0 + 132), csum 0x00000000
      Inode bitmap at 140 (bg #0 + 140), csum 0x00000000
      Inode table at 1675-2184 (bg #0 + 1675)
      32639 free blocks, 8160 free inodes, 0 directories, 8160 unused inodes
      Free blocks: 98433-131071
      Free inodes: 24481-32640
    Group 4: (Blocks 131072-163839) csum 0xfc69 [INODE_UNINIT, BLOCK_UNINIT, ITABLE_ZEROED]
      Block bitmap at 133 (bg #0 + 133), csum 0x00000000
      Inode bitmap at 141 (bg #0 + 141), csum 0x00000000
      Inode table at 2185-2694 (bg #0 + 2185)
      32768 free blocks, 8160 free inodes, 0 directories, 8160 unused inodes
      Free blocks: 131072-163839
      Free inodes: 32641-40800
    Group 5: (Blocks 163840-196607) csum 0x0b15 [INODE_UNINIT, BLOCK_UNINIT, ITABLE_ZEROED]
      Backup superblock at 163840, Group descriptors at 163841-163841
      Reserved GDT blocks at 163842-163968
      Block bitmap at 134 (bg #0 + 134), csum 0x00000000
      Inode bitmap at 142 (bg #0 + 142), csum 0x00000000
      Inode table at 2695-3204 (bg #0 + 2695)
      32639 free blocks, 8160 free inodes, 0 directories, 8160 unused inodes
      Free blocks: 163969-196607
      Free inodes: 40801-48960
    Group 6: (Blocks 196608-229375) csum 0x18c8 [INODE_UNINIT, BLOCK_UNINIT, ITABLE_ZEROED]
      Block bitmap at 135 (bg #0 + 135), csum 0x00000000
      Inode bitmap at 143 (bg #0 + 143), csum 0x00000000
      Inode table at 3205-3714 (bg #0 + 3205)
      32768 free blocks, 8160 free inodes, 0 directories, 8160 unused inodes
      Free blocks: 196608-229375
      Free inodes: 48961-57120
    Group 7: (Blocks 229376-261119) csum 0x9bc0 [INODE_UNINIT, ITABLE_ZEROED]
      Backup superblock at 229376, Group descriptors at 229377-229377
      Reserved GDT blocks at 229378-229504
      Block bitmap at 136 (bg #0 + 136), csum 0xde491fa2
      Inode bitmap at 144 (bg #0 + 144), csum 0x00000000
      Inode table at 3715-4224 (bg #0 + 3715)
      31615 free blocks, 8160 free inodes, 0 directories, 8160 unused inodes
      Free blocks: 229505-261119
      Free inodes: 57121-65280

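2.2 Ext4 on-disk structures

To locate a file on disk, you first need to understand what inodes and data blocks contain.

In ext4, all data except the journal is stored in little-endian order.

Parts that do not help with finding file contents are not covered in detail.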

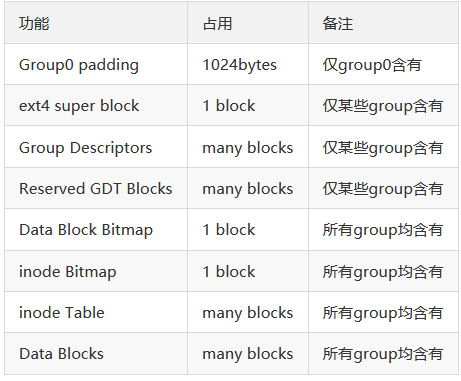

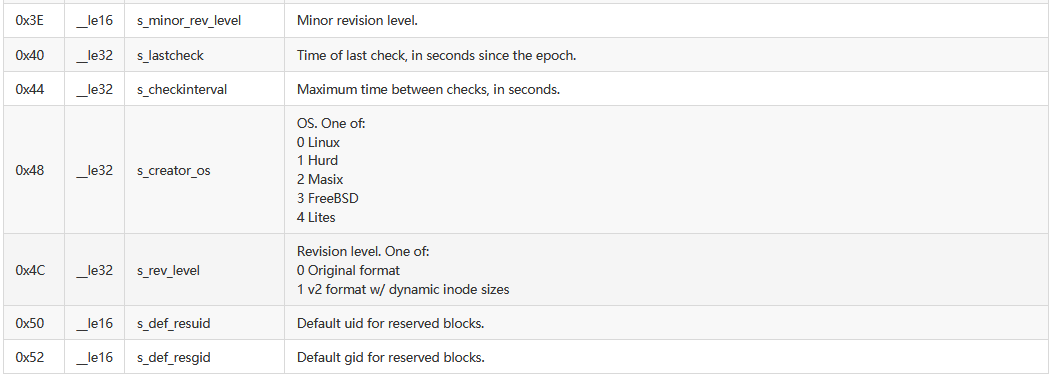

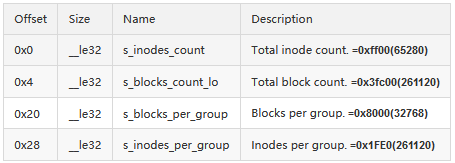

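2.2.1 superblock

The superblock records various information about the enclosing file system, such as block counts, inode counts, supported features and maintenance information.

If the sparse_super feature flag is set, redundant copies of the superblock and group descriptors are kept only in groups whose number is 0 or a power of 3, 5 or 7 (group 1 counts as the zeroth power). If the flag is not set, redundant copies are kept in all groups.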
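The sparse_super rule can be sketched in a few lines. This is a minimal illustration of the rule as stated in this section, not the code used by the kernel or e2fsprogs; the function names are hypothetical.

```python
def is_power_of(n, base):
    """Return True if n == base**k for some integer k >= 0 (n must be >= 1)."""
    while n > 1 and n % base == 0:
        n //= base
    return n == 1

def has_superblock_copy(group):
    """True if the group holds the primary or a backup superblock under sparse_super."""
    if group == 0:
        return True  # the primary superblock lives in group 0
    # Group 1 qualifies because 3**0 == 5**0 == 7**0 == 1.
    return any(is_power_of(group, base) for base in (3, 5, 7))

print([g for g in range(8) if has_superblock_copy(g)])
```

For the eight groups of the example partition this selects groups 0, 1, 3, 5 and 7, which are exactly the groups where the dumpe2fs output reports a primary or backup superblock.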

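The superblock checksum is calculated over the superblock structure, which includes the FS UUID.

The layout of the ext4 superblock is defined by struct ext4_super_block; the full field table is in the official disk layout documentation linked above.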

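2.2.2 Block Group Descriptors

See the official documentation for details.

Within a block group, the only data structures with fixed locations are the superblock and the group descriptor table.

flex_bg and meta_bg are not mutually exclusive.

A group descriptor occupies 32 bytes when the ext4 64bit feature is disabled and 64 bytes when it is enabled.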

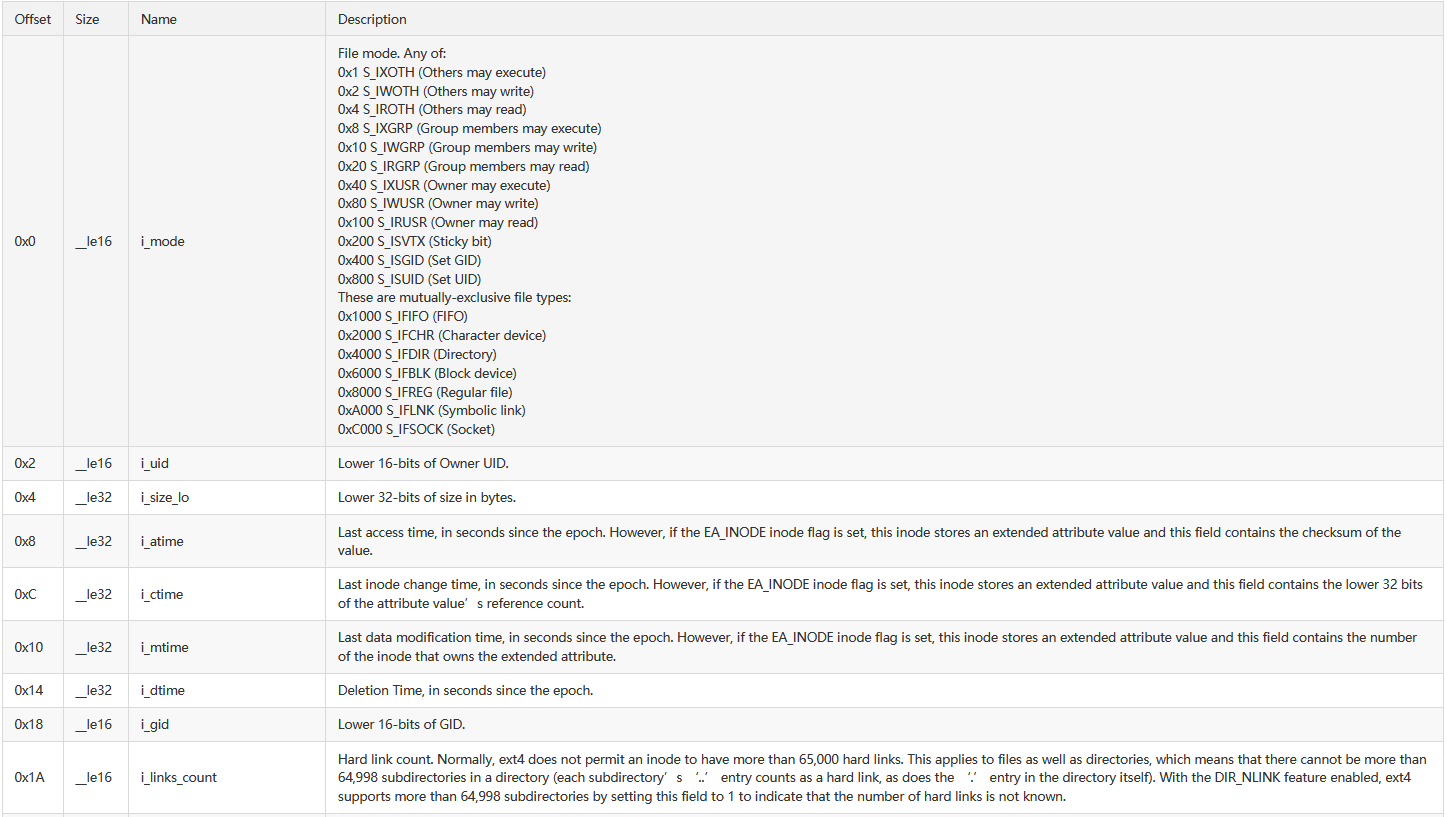

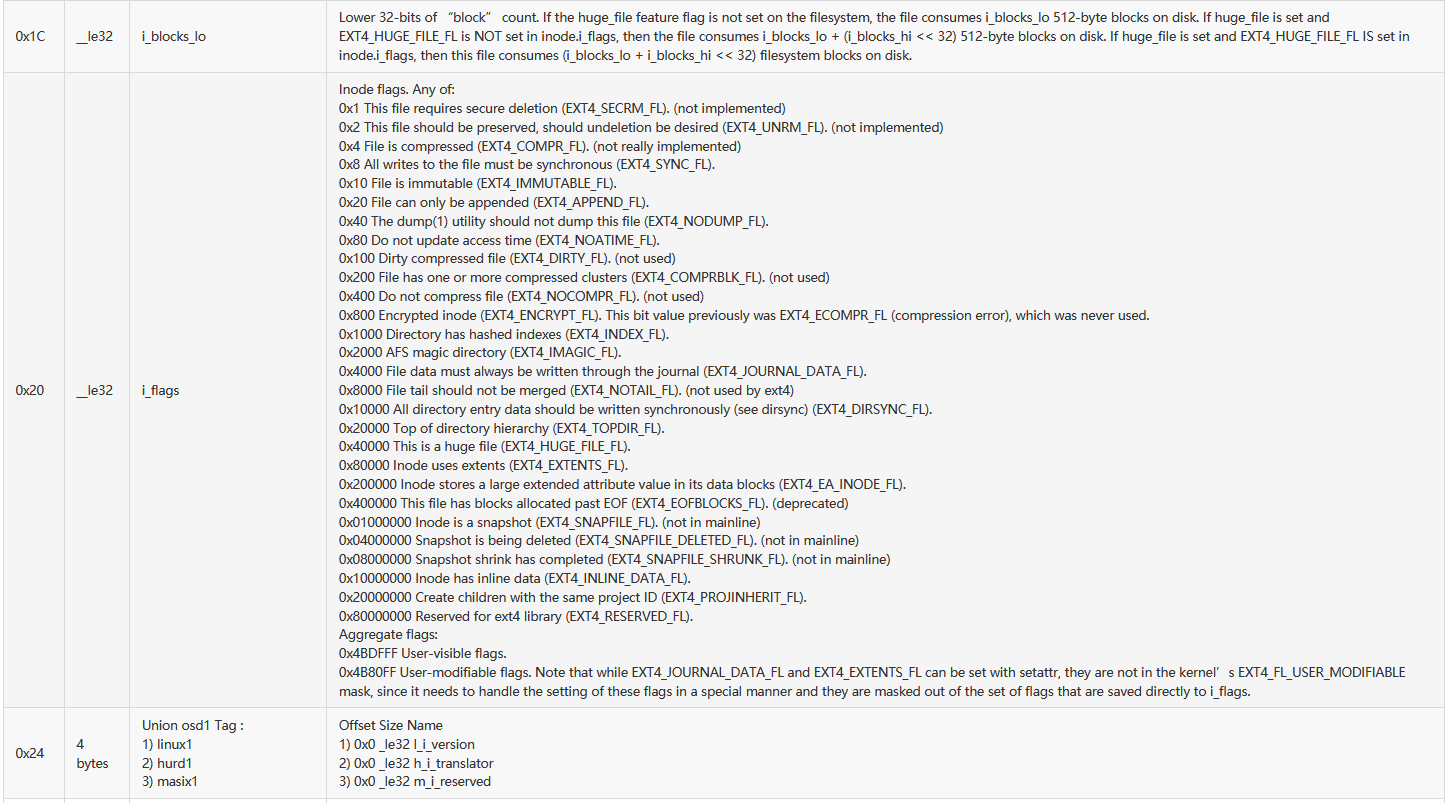

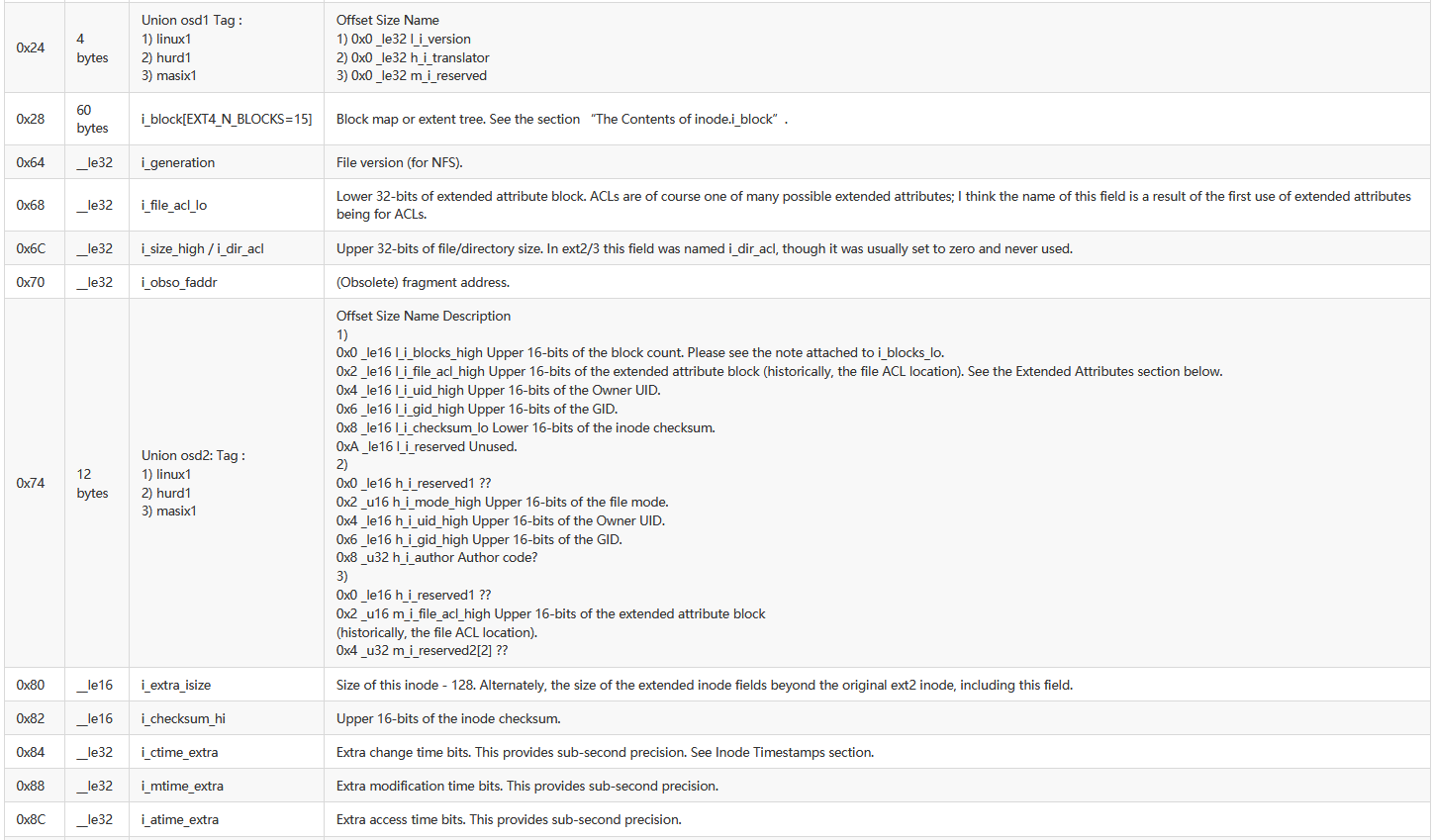

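2.2.3 Inode Table

The inode stores all metadata related to a file (timestamps, block maps, extended attributes and so on).

The inode table is a linear array of struct ext4_inode. The table is sized to hold at least sb.s_inode_size * sb.s_inodes_per_group bytes. The number of the block group that contains an inode is (inode_number - 1) / sb.s_inodes_per_group, and the index within that group's table is (inode_number - 1) % sb.s_inodes_per_group. There is no inode 0.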

2.2.4 Extent Tree: how file data is stored

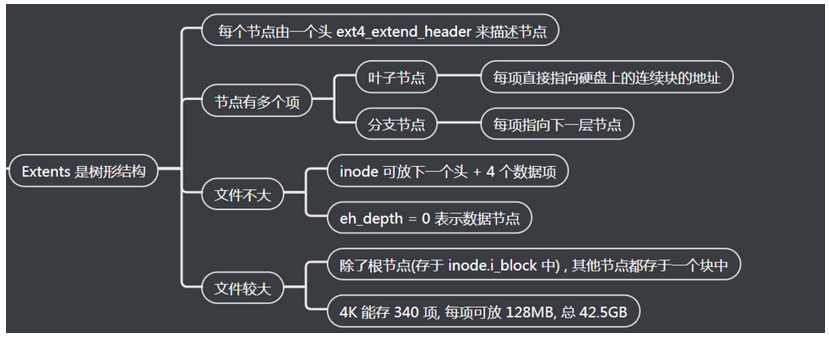

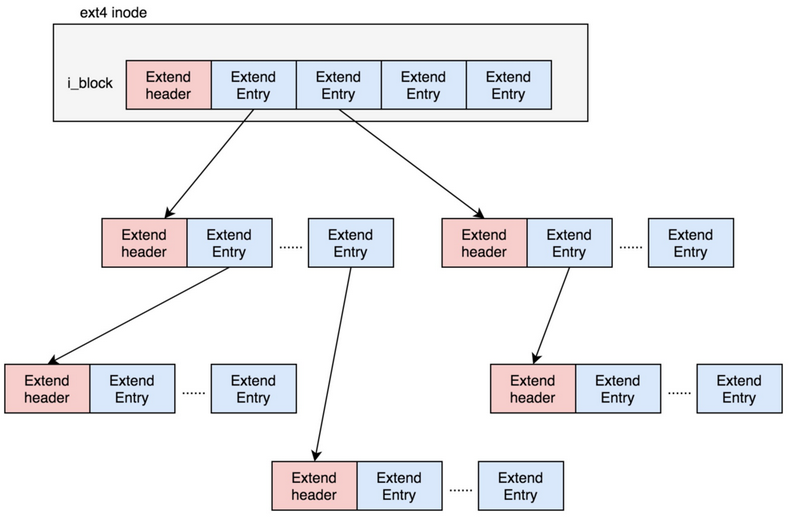

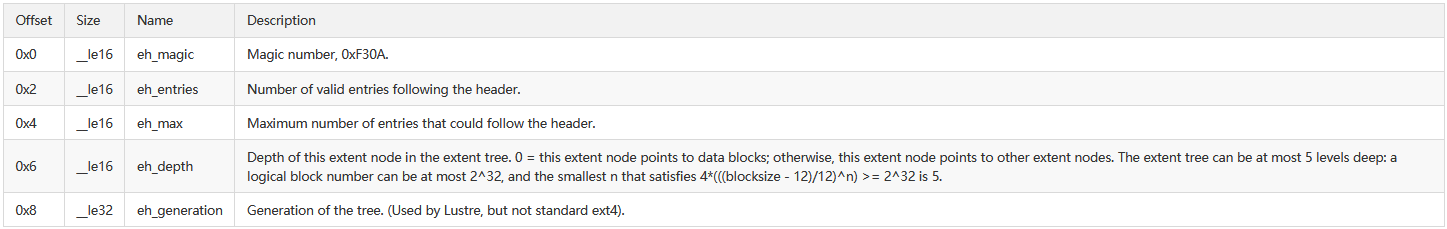

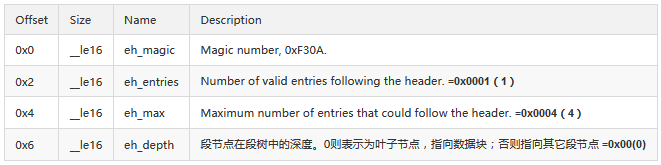

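Every extent node consists of one header (extent_header) followed by several bodies (extent_body), whether it is an index node, a leaf node, or an extent node stored directly in the inode. When a file uses only a few extents, they are stored directly in inode.i_block[]. When the number of extents exceeds what inode.i_block[] can hold (as the inode structure table above shows, inode.i_block is 60 bytes; after the 12-byte extent_header the remaining 48 bytes hold only 4 extents), ext4 organizes all the extents in a B-tree.

A tree node stored in a block can hold (block size (4096 bytes) - extent_header (12 bytes)) / extent_body (12 bytes) = 340 extents, far more than the 4 that fit in the inode.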
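The two capacities quoted above follow from the same integer division. The sketch below only restates that arithmetic with the sizes given in this section; the constant names are illustrative.

```python
BLOCK_SIZE = 4096    # bytes in one file system block
I_BLOCK_SIZE = 60    # bytes available in inode.i_block
HEADER_SIZE = 12     # one extent_header per node
ENTRY_SIZE = 12      # one extent_body (ext4_extent or ext4_extent_idx)

def entries_per_node(node_bytes):
    """Number of 12-byte entries that fit after the 12-byte header."""
    return (node_bytes - HEADER_SIZE) // ENTRY_SIZE

print(entries_per_node(I_BLOCK_SIZE), entries_per_node(BLOCK_SIZE))
```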

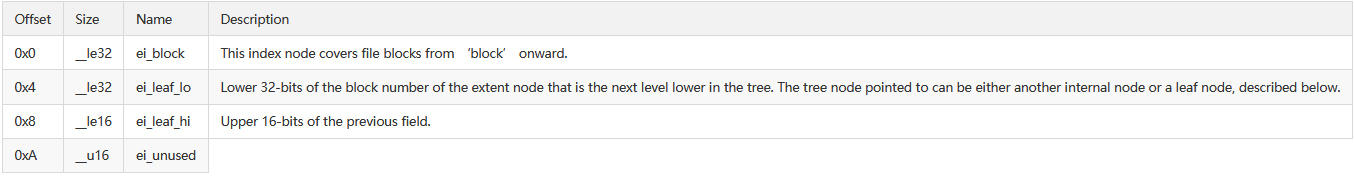

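ext4_extent_idx is an index entry: it points to the next-level node or to a leaf. Only a leaf entry, ext4_extent, carries the information that points to the data blocks holding the file contents.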

The extent tree header is recorded in struct ext4_extent_header, which is 12 bytes long:

Internal nodes of the extent tree, also known as index nodes, are recorded as struct ext4_extent_idx, and are 12 bytes long:

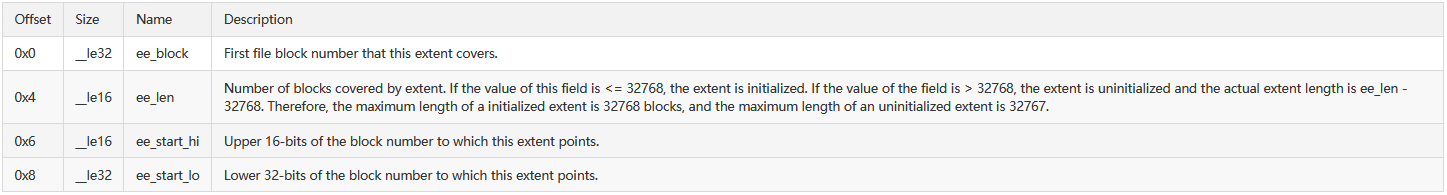

Leaf nodes of the extent tree are recorded as struct ext4_extent, and are also 12 bytes long:

2.2.5 ext4_dir_entry/ext4_dir_entry2 目录存放

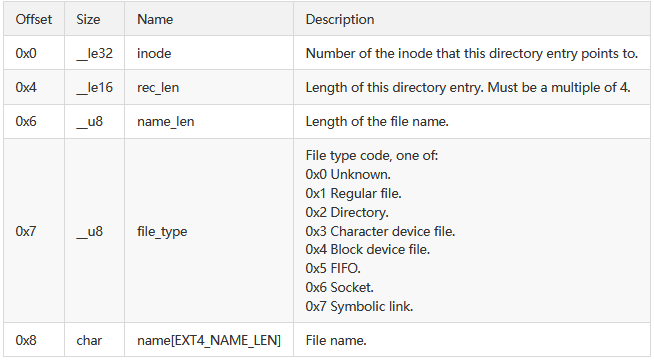

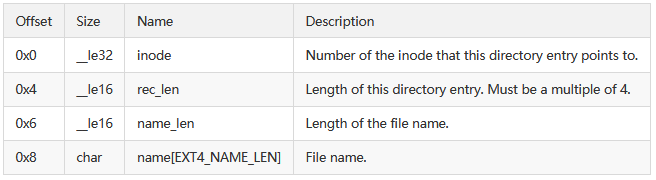

目录也是文件, 也有 inode, inode 指向一个块, 块中保存各个文件信息, ext4_dir_entry 包括文件名和 inode, 默认按列表存

ext4_dir_entry

ext4_dir_entry_2

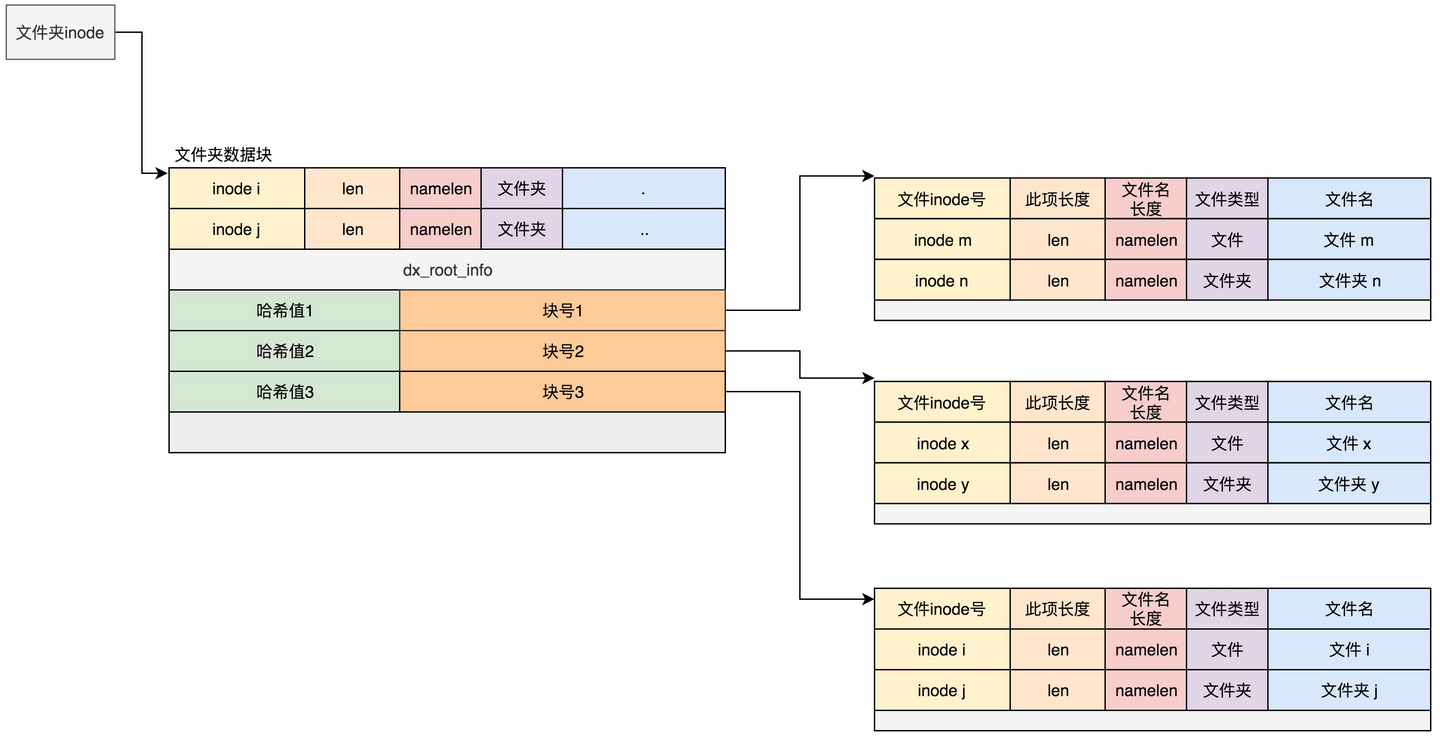

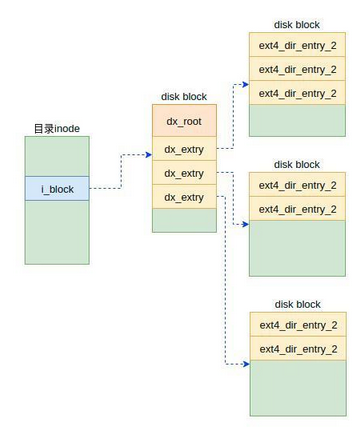

2.2.6 Hash Tree 目录储存方式

线性目录项不利于系统性能提升。因而从ext3开始加入了快速平衡树哈希目录项名称。如果在inode中设置EXT4_INDEX_FL标志,目录使用哈希的B树(hashed btree ,htree)组织和查找目录项。为了向后只读兼容Ext2,htree实际上隐藏在目录文件中。

Ext2的惯例,树的根总是在目录文件的第一个数据块中。“.”和“..”目录项必须出现在第一个数据块的开头。因而这两个目录项在数据块的开头存放两个struct ext4_dir_entry_2结构,且它们不存到树中。根结点的其他部分包含树的元数据,最后一个hash->block map查找到htree中更低的节点。如果dx_root.info.indirect_levels不为0,那么htree有两层;htree根结点的map指向的数据块是一个内部节点,由一个minor hash索引。Htree中的内部节点的minor_hash->block map之后包含一个零化的(zeroed out) structext4_dir_entry_2找到叶子节点。叶子节点包括一个线性的struct ext4_dir_entry_2数组;所有这些项都哈希到相同的值。如果发生溢出,目录项简单地溢出到下一个叶子节点,哈希的least-significant位(内部节点的map)做相应设置。以htree的方式遍历目录,计算要查找的目录文件名称的哈希值,然后使用哈希值找到对应的数据块号。如果树是flat,该数据块是目录项的线性数组,因而可被搜索到;否则,计算文件名称的minor hash,并使用minor hash查找相应的第三个数据块号。第三个数据块是目录项线性数组。

Htree的根 :struct dx_root

Htree的内部节点: struct dx_node

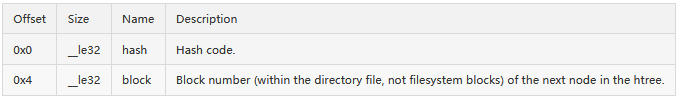

Htree 树根和节点中都存在的 Hashmap: struct dx_entry

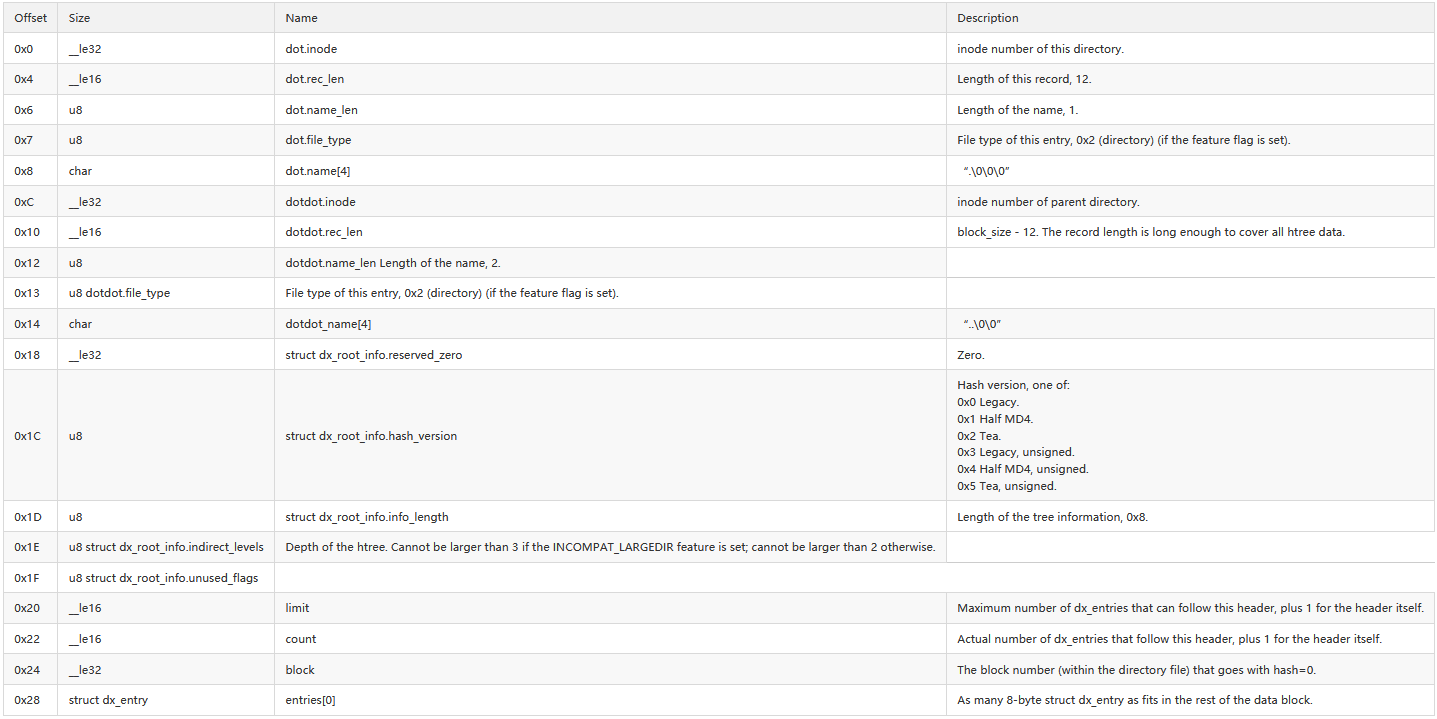

The root of the htree is in struct dx_root, which is the full length of a data block:

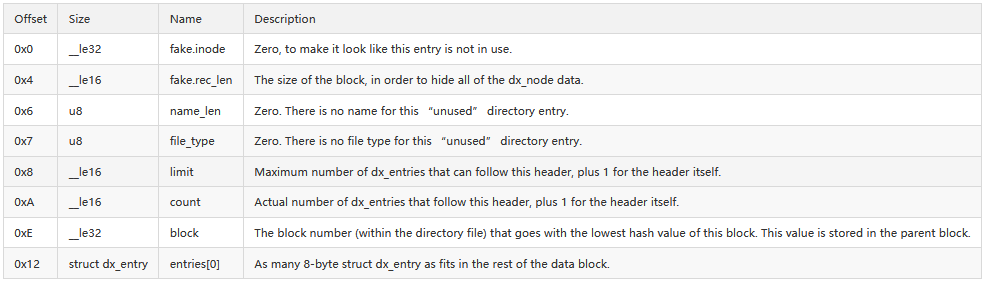

Interior nodes of an htree are recorded as struct dx_node, which is also the full length of a data block:

If metadata checksums are enabled, the last 8 bytes of the directory block (precisely the length of one dx_entry) are used to store a struct dx_tail, which contains the checksum. The limit and count entries in the dx_root/dx_node structures are adjusted as necessary to fit the dx_tail into the block. If there is no space for the dx_tail, the user is notified to run e2fsck -D to rebuild the directory index (which will ensure that there’s space for the checksum). The dx_tail structure is 8 bytes long and looks like this:

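3. Using dd to inspect file contents on disk

Mount the ext4 partition on the ceph-vol1 directory. The file system concepts above can then be examined directly by reading the data of individual blocks in the partition.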

    root@xxxxxx:/mnt/ceph-vol1# tree ./
    ./
    ├── AAA
    │   ├── 123
    │   ├── 13344
    │   ├── MN212142_9392.csv
    │   ├── random_data
    │   └── tiantian
    ├── lost+found
    ├── random_data
    └── test.txt
    root@xxxxxx:/mnt/ceph-vol1# stat ./
      File: ./
      Size: 4096 Blocks: 8 IO Block: 4096 directory
    Device: fb01h/64257d Inode: 2 Links: 4
    Access: (0755/drwxr-xr-x) Uid: ( 0/ root) Gid: ( 0/ root)
    Access: 2020-10-22 09:21:12.342641001 +0800
    Modify: 2020-10-22 09:21:11.526615694 +0800
    Change: 2020-10-22 09:21:11.526615694 +0800
     Birth: -
    root@xxxxxx:/mnt/ceph-vol1# stat test.txt
      File: AAA/test.txt
      Size: 52 Blocks: 8 IO Block: 4096 regular file
    Device: fb01h/64257d Inode: 12 Links: 1
    Access: (0644/-rw-r--r--) Uid: ( 0/ root) Gid: ( 0/ root)
    Access: 2020-10-15 17:36:05.266403535 +0800
    Change: 2020-10-22 09:21:11.526615694 +0800
     Birth: -
    root@xxxxxx:/mnt/ceph-vol1# dumpe2fs /dev/rbd0p1
    *****
    Group 0: (Blocks 0-32767) csum 0xe2fe [ITABLE_ZEROED]
      Primary superblock at 0, Group descriptors at 1-1
      Reserved GDT blocks at 2-128
      Block bitmap at 129 (+129), csum 0x06292657
      Inode bitmap at 137 (+137), csum 0xc6f39952
      Inode table at 145-654 (+145)
      28533 free blocks, 8143 free inodes, 6 directories, 8143 unused inodes
      Free blocks: 4235-32767
      Free inodes: 18-8160
    ***

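This shows that ceph-vol1 has inode number 2, that it contains a directory AAA, and that test.txt has inode number 12.

The contents of test.txt can be reached along the following path:

Group Descriptors -> inode table -> ceph-vol1 inode -> ceph-vol1 extent data block, which gives the directory information of ceph-vol1

ceph-vol1 extent data block -> test.txt inode -> test.txt data block, which gives the contents of test.txt

The first step is to read the superblock, which provides the parameters needed to find the root directory ceph-vol1.

From the disk and ext4 concepts above, block group 0 always contains the superblock, so dd can be used to read it at the corresponding offset.

Reading the superblock

The block size is 4096 bytes and skip is 0; the superblock starts at offset 0x400 (after the 1024-byte Group 0 Padding). Read it with dd: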

    dd if=/dev/rbd0p1 bs=4096 skip=0 | hexdump -C -n 3000
    00000000  00 00 00 00 00 00 00 00  00 00 00 00 00 00 00 00  |................|
    *
    00000400  00 ff 00 00 00 fc 03 00  00 33 00 00 74 c5 03 00  |.........3..t...|  // start of the superblock
    00000410  f3 fe 00 00 00 00 00 00  02 00 00 00 02 00 00 00  |................|
    00000420  00 80 00 00 00 80 00 00  e0 1f 00 00 f4 17 88 5f  |..............._|
    00000430  f4 17 88 5f 02 00 ff ff  53 ef 01 00 01 00 00 00  |..._....S.......|
    00000440  00 c8 86 5f 00 00 00 00  00 00 00 00 01 00 00 00  |..._............|
    00000450  00 00 00 00 0b 00 00 00  00 01 00 00 3c 00 00 00  |............<...|
    00000460  c6 02 00 00 6b 04 00 00  6f 07 4d b1 8f 6f 43 13  |....k...o.M..oC.|
    00000470  85 1d fa 3c 9b 75 90 f8  00 00 00 00 00 00 00 00  |...<.u..........|
    00000480  00 00 00 00 00 00 00 00  2f 6d 6e 74 2f 63 65 70  |......../mnt/cep|
    00000490  68 2d 76 6f 6c 31 00 00  00 00 00 00 00 00 00 00  |h-vol1..........|
    000004a0  00 00 00 00 00 00 00 00  00 00 00 00 00 00 00 00  |................|
    *

Mapping these bytes onto the superblock structure gives:

Total inode count = 65280

Total block count = 261120

Blocks per group = 32768

Inodes per group = 8160

These values match the output of dumpe2fs /dev/rbd0p1, which confirms that the offsets used to read the raw disk data are correct.
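As an illustration of how the raw bytes map onto those four values, the sketch below decodes the first 64 bytes of the superblock (offsets 0x400 to 0x43f of the dump) with Python's struct module. The field order follows the beginning of struct ext4_super_block as documented in the official layout page; only the leading fields are decoded, and the variable names are illustrative.

```python
import struct

# Bytes 0x400-0x43f of the partition, copied from the hexdump of block 0.
raw = bytes.fromhex(
    "00ff0000 00fc0300 00330000 74c50300"
    "f3fe0000 00000000 02000000 02000000"
    "00800000 00800000 e01f0000 f417885f"
    "f417885f 0200ffff 53ef0100 01000000"
)

# Eleven little-endian 32-bit fields, starting with s_inodes_count.
(inodes_count, blocks_count_lo, r_blocks_count_lo, free_blocks_lo,
 free_inodes, first_data_block, log_block_size, log_cluster_size,
 blocks_per_group, clusters_per_group, inodes_per_group) = struct.unpack_from("<11I", raw, 0)

# s_magic is a 16-bit field at offset 0x38 of the superblock.
(magic,) = struct.unpack_from("<H", raw, 0x38)
block_size = 1024 << log_block_size

print(inodes_count, blocks_count_lo, blocks_per_group, inodes_per_group)
print(hex(magic), block_size)
```

The little-endian reads are what turn the byte sequence 00 ff 00 00 into 0xff00 = 65280.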

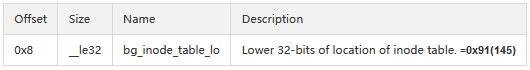

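Reading the group descriptor table

To get the descriptor of block group 0, skip past the block that holds the superblock by changing skip to 1.

Note: the descriptors of all block groups are stored together. The first descriptor in this block belongs to block group 0.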

    root@xxxxxx:~# dd if=/dev/rbd0p1 bs=4096 skip=1 | hexdump -C -n 3000
    00000000  81 00 00 00 89 00 00 00  91 00 00 00 75 6f cf 1f  |............uo..|  // look at this line
    00000010  06 00 04 00 00 00 00 00  57 26 52 99 cf 1f fe e2  |........W&R.....|
    00000020  00 00 00 00 00 00 00 00  00 00 00 00 00 00 00 00  |................|
    00000030  00 00 00 00 00 00 00 00  29 06 f3 c6 00 00 00 00  |........).......|
    00000040  82 00 00 00 8a 00 00 00  8f 02 00 00 7e 6b e0 1f  |............~k..|
    00000050  00 00 05 00 00 00 00 00  ac cb 00 00 e0 1f 02 4d  |...............M|
    00000060  00 00 00 00 00 00 00 00  00 00 00 00 00 00 00 00  |................|
    00000070  00 00 00 00 00 00 00 00  6b fa 00 00 00 00 00 00  |........k.......|
    00000080  83 00 00 00 8b 00 00 00  8d 04 00 00 00 70 e0 1f  |.............p..|
    00000090  00 00 05 00 00 00 00 00  87 be 00 00 e0 1f 1c ba  |................|
    000000a0  00 00 00 00 00 00 00 00  00 00 00 00 00 00 00 00  |................|
    000000b0  00 00 00 00 00 00 00 00  3d 8c 00 00 00 00 00 00  |........=.......|
    000000c0  84 00 00 00 8c 00 00 00  8b 06 00 00 7f 7f e0 1f  |................|
    000000d0  00 00 07 00 00 00 00 00  00 00 00 00 e0 1f 9e 57  |...............W|
    000000e0  00 00 00 00 00 00 00 00  00 00 00 00 00 00 00 00  |................|

This gives 145 as the start block of the inode table of group 0, which matches dumpe2fs /dev/rbd0p1.

Reading the inode table and the inodes
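The descriptor bytes can be decoded the same way. The sketch below parses only the first 32 bytes of each 64-byte descriptor, using the field order of struct ext4_group_desc from the official layout documentation (the low halves of each field); the helper name and dictionary keys are illustrative.

```python
import struct

# Low 32 bytes of struct ext4_group_desc: three 32-bit block numbers,
# four 16-bit counters/flags, one 32-bit field, then four 16-bit fields.
DESC_FMT = "<IIIHHHHIHHHH"

# Offsets 0x00-0x1f (group 0) and 0x40-0x5f (group 1) of the dump of block 1.
desc0 = bytes.fromhex("81000000 89000000 91000000 756fcf1f"
                      "06000400 00000000 57265299 cf1ffee2")
desc1 = bytes.fromhex("82000000 8a000000 8f020000 7e6be01f"
                      "00000500 00000000 accb0000 e01f024d")

def parse_desc(raw):
    """Decode the low half of one group descriptor into a small dict."""
    f = struct.unpack(DESC_FMT, raw)
    return {
        "block_bitmap": f[0],
        "inode_bitmap": f[1],
        "inode_table": f[2],
        "free_blocks": f[3],
        "free_inodes": f[4],
        "used_dirs": f[5],
        "flags": f[6],
        "checksum": f[11],
    }

for number, raw in enumerate((desc0, desc1)):
    print(number, parse_desc(raw))
```

Because the 64bit feature is enabled on this partition, consecutive descriptors start 64 bytes apart, which is why group 1 begins at offset 0x40.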

    root@xxxxxx:~# dd if=/dev/rbd0p1 bs=4096 skip=145 | hexdump -C -n 2000
    00000000  00 00 00 00 00 00 00 00  01 c8 86 5f 01 c8 86 5f  |..........._..._|
    00000010  01 c8 86 5f 00 00 00 00  00 00 00 00 00 00 00 00  |..._............|
    00000020  00 00 00 00 00 00 00 00  00 00 00 00 00 00 00 00  |................|
    *
    00000070  00 00 00 00 00 00 00 00  00 00 00 00 9e c7 00 00  |................|
    00000080  00 00 00 00 00 00 00 00  00 00 00 00 00 00 00 00  |................|
    *
    00000100  ed 41 00 00 00 10 00 00  88 de 90 5f 87 de 90 5f  |.A........._..._|  // the directory inode starts here
    00000110  87 de 90 5f 00 00 00 00  00 00 04 00 08 00 00 00  |..._............|
    00000120  00 00 08 00 0c 00 00 00  0a f3 01 00 04 00 00 00  |................|
    00000130  00 00 00 00 00 00 00 00  01 00 00 00 81 10 00 00  |................|
    00000140  00 00 00 00 00 00 00 00  00 00 00 00 00 00 00 00  |................|
    *
    00000170  00 00 00 00 00 00 00 00  00 00 00 00 7f 57 00 00  |.............W..|
    00000180  20 00 1f 92 38 12 8e 7d  38 12 8e 7d a4 25 b1 51  | ...8..}8..}.%.Q|
    00000190  01 c8 86 5f 00 00 00 00  00 00 00 00 00 00 00 00  |..._............|
    000001a0  00 00 00 00 00 00 00 00  00 00 00 00 00 00 00 00  |................|

The position of an inode inside the table follows from these formulas:

block group = (inode_number - 1) / sb.s_inodes_per_group

inode table index = (inode_number - 1) % sb.s_inodes_per_group

byte address in the inode table = (inode table index) * sb->s_inode_size

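So offset 0x00000100 holds the inode of ceph-vol1 (inode 2, index 1, 1 * 256 bytes), and offset 0x00000128 is the start of i_block[EXT4_N_BLOCKS=15], where the extent tree begins.

The inode of ceph-vol1 has a single extent, and it is a leaf that points to a data block. The inode flags (0x80000) do not include EXT4_INDEX_FL, so the directory's data block is a linear list of struct ext4_dir_entry_2 entries rather than an htree struct dx_root.

Skipping the 12-byte extent header at the start of i_block reaches the extent, which points to data block number 0x1081 (4225).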
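The step from i_block to the data block number can be sketched as follows. The code decodes the 12-byte header and the first 12-byte ext4_extent, using the field order given in the official layout documentation; it deliberately handles only the simple case seen here (a leaf stored in the inode), and the function name is hypothetical.

```python
import struct

# First 24 bytes of i_block for inode 2, copied from offsets 0x128-0x13f of the dump.
root_i_block = bytes.fromhex("0af30100 04000000 00000000"
                             "00000000 01000000 81100000")

def first_extent(i_block):
    """Return (logical block, length, physical block) of the first extent."""
    magic, entries, max_entries, depth, _generation = struct.unpack_from("<HHHHI", i_block, 0)
    if magic != 0xF30A:
        raise ValueError("not an extent header")
    if depth != 0 or entries < 1:
        raise ValueError("this sketch only handles a leaf node stored in the inode")
    ee_block, ee_len, start_hi, start_lo = struct.unpack_from("<IHHI", i_block, 12)
    # The physical block number is split into a 16-bit high and a 32-bit low part.
    return ee_block, ee_len, (start_hi << 32) | start_lo

print(first_extent(root_i_block))
```

The same function can be applied to the i_block bytes of any other inode whose extents fit in the inode.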

Reading the directory's extent data

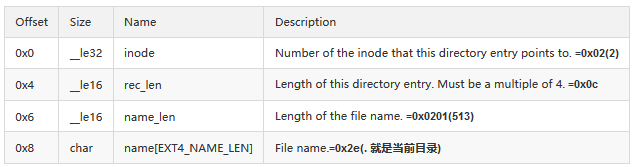

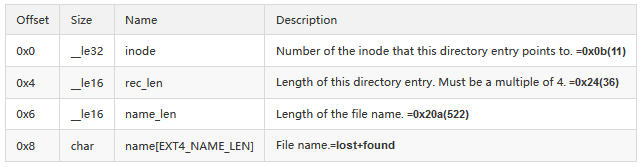

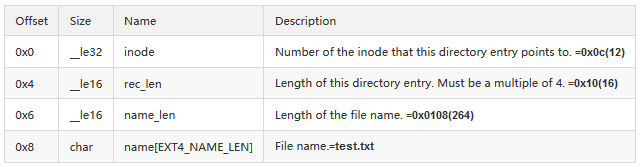

    root@xxxxxx:~# dd if=/dev/rbd0p1 bs=4096 skip=4225 | hexdump -C -n 2000
    00000000  02 00 00 00 0c 00 01 02  2e 00 00 00 02 00 00 00  |................|
    00000010  0c 00 02 02 2e 2e 00 00  0b 00 00 00 24 00 0a 02  |............$...|
    00000020  6c 6f 73 74 2b 66 6f 75  6e 64 00 00 0c 00 00 00  |lost+found......|
    00000030  10 00 08 01 74 65 73 74  2e 74 78 74 0d 00 00 00  |....test.txt....|
    00000040  14 00 0b 01 72 61 6e 64  6f 6d 5f 64 61 74 61 77  |....random_dataw|
    00000050  0e 00 00 00 a4 0f 03 02  41 41 41 01 2e 74 65 73  |........AAA..tes|
    00000060  74 2e 74 78 74 2e 73 77  78 00 00 00 00 00 00 00  |t.txt.swx.......|
    00000070  00 00 00 00 00 00 00 00  00 00 00 00 00 00 00 00  |................|
    *
    000007d0

The data block that the extent points to contains entries for the current directory, the parent directory, the subdirectories and the files of the current directory.

Current directory

ext4_dir_entry_2

lost+found

ext4_dir_entry_2

test.txt

ext4_dir_entry_2

This shows that the inode number of test.txt is 12, the same value that stat reports.
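To make the entry layout concrete, the sketch below decodes individual struct ext4_dir_entry_2 records from the first 0x40 bytes of block 4225: a 32-bit inode number, a 16-bit record length, an 8-bit name length, an 8-bit file type, then the name. The function name is hypothetical, and the offsets passed in are read off the hexdump by hand.

```python
import struct

# First 0x40 bytes of block 4225, copied from the hexdump.
block = bytes.fromhex(
    "02000000 0c000102 2e000000 02000000"
    "0c000202 2e2e0000 0b000000 24000a02"
    "6c6f7374 2b666f75 6e640000 0c000000"
    "10000801 74657374 2e747874 0d000000"
)

def decode_dir_entry(buf, offset):
    """Decode one struct ext4_dir_entry_2 that starts at `offset`."""
    inode, rec_len, name_len, file_type = struct.unpack_from("<IHBB", buf, offset)
    name = buf[offset + 8 : offset + 8 + name_len].decode("ascii")
    return {"inode": inode, "rec_len": rec_len, "file_type": file_type, "name": name}

# Offsets of ".", "..", "lost+found" and "test.txt" in the dump.
for off in (0x00, 0x0C, 0x18, 0x2C):
    print(hex(off), decode_dir_entry(block, off))
```

One detail of this particular dump: the lost+found entry carries rec_len 36, which spans the 16 bytes of the test.txt record that follows it, so a strict walk that advances by rec_len would step over that record. That is why the sketch decodes the entry at a fixed offset instead of walking the list.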

Reading the file's inode and extent

Go back to the inode table above.

Substitute 12 for inode_number:

block group = (inode_number - 1) / sb.s_inodes_per_group

inode table index = (inode_number - 1) % sb.s_inodes_per_group

byte address in the inode table = (inode table index) * sb->s_inode_size

which gives

block group = 0

inode table index = 11

byte address in the inode table = 11 * 256 = 0xB00
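The three formulas translate directly into code. This is a small sketch with the parameters of the example partition as default arguments (8160 inodes per group, 256-byte inodes); the function name is illustrative.

```python
def locate_inode(inode_number, inodes_per_group=8160, inode_size=256):
    """Return (block group, index in that group's inode table, byte offset in the table)."""
    if inode_number < 1:
        raise ValueError("there is no inode 0")
    group, index = divmod(inode_number - 1, inodes_per_group)
    return group, index, index * inode_size

for ino in (2, 12):
    group, index, offset = locate_inode(ino)
    print(ino, group, index, hex(offset))

# Absolute byte position of inode 12 on the partition, assuming the inode
# table of group 0 starts at block 145 and the block size is 4096 bytes.
INODE_TABLE_BLOCK = 145
BLOCK_SIZE = 4096
position = INODE_TABLE_BLOCK * BLOCK_SIZE + locate_inode(12)[2]
print(position)
```

Inode 2 lands at offset 0x100 and inode 12 at offset 0xb00, the two positions examined in the inode table dumps.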

    root@xxxxxx:~# dd if=/dev/rbd0p1 bs=4096 skip=145 | hexdump -C -n 30000
    *
    00000b00  a4 81 00 00 34 00 00 00  05 18 88 5f 87 de 90 5f  |....4......_..._|  // the inode of test.txt starts here
    00000b10  01 18 88 5f 00 00 00 00  00 00 01 00 08 00 00 00  |..._............|
    00000b20  00 00 08 00 01 00 00 00  0a f3 01 00 04 00 00 00  |................|
    00000b30  00 00 00 00 00 00 00 00  01 00 00 00 81 80 00 00  |................|
    00000b40  00 00 00 00 00 00 00 00  00 00 00 00 00 00 00 00  |................|
    *

Locate the extent header magic 0xf30a and decode the extent that follows it: the data block number is 0x8081 (32897), and that block holds the contents of test.txt.

    dd if=/dev/rbd0p1 bs=4096 skip=32897 | hexdump -C -n 1000
    00000000  68 65 6c 6c 6f 20 77 6f  72 6c 64 6c 73 6c 73 6c  |hello worldlslsl|
    00000010  73 6c 73 20 2d 2d 2d 2d  2d 2d 2d 2d 20 66 75 63  |sls -------- fuc|
    00000020  6b 20 66 75 66 75 66 66  66 66 66 66 66 0a 66 64  |k fufufffffff.fd|
    00000030  64 66 64 0a 00 00 00 00  00 00 00 00 00 00 00 00  |dfd.............|
    00000040  00 00 00 00 00 00 00 00  00 00 00 00 00 00 00 00  |................|
    *
    000003e8
    root@xxxxxx:/mnt/ceph-vol1/AAA# cat test.txt
    hello worldlslslsls -------- fuck fufufffffff
    fddfd

Finding the contents directly with debugfs

debugfs directly reports the data block numbers recorded in the extents of a file or directory, that is, the blocks that hold the file data or the ext4_dir_entry_2 entries.

    root@xxxxxx:/mnt/ceph-vol1/AAA# debugfs /dev/rbd0p1
    debugfs 1.44.1 (24-Mar-2018)
    debugfs:stat ./
    Inode: 14 Type: directory Mode: 0755 Flags: 0x80000
    Generation: 2546967618 Version: 0x00000000:00000007
    User: 0 Group: 0 Project: 0 Size: 4096
    File ACL: 0
    Links: 5 Blockcount: 8
    Fragment: Address: 0 Number: 0 Size: 0
    ctime: 0x5f91560e:e4814ef8 -- Thu Oct 22 17:51:10 2020
    atime: 0x5f915612:a96762a8 -- Thu Oct 22 17:51:14 2020
    mtime: 0x5f91560e:e4814ef8 -- Thu Oct 22 17:51:10 2020
    crtime: 0x5f8916fe:3c52facc -- Fri Oct 16 11:43:58 2020
    Size of extra inode fields: 32
    Inode checksum: 0x704ebab8
    EXTENTS:
    (0):4231
    debugfs:stat ./test.txt
    Inode: 12 Type: regular Mode: 0644 Flags: 0x80000
    Generation: 3671053201 Version: 0x00000000:00000001
    User: 0 Group: 0 Project: 0 Size: 52
    File ACL: 0
    Links: 1 Blockcount: 8
    Fragment: Address: 0 Number: 0 Size: 0
    ctime: 0x5f90de87:7d8e1238 -- Thu Oct 22 09:21:11 2020
    atime: 0x5f915207:02d0eb1c -- Thu Oct 22 17:33:59 2020
    mtime: 0x5f881801:d53ae608 -- Thu Oct 15 17:36:01 2020
    crtime: 0x5f86ca79:4762b4f8 -- Wed Oct 14 17:52:57 2020
    Size of extra inode fields: 32
    Inode checksum: 0xa5bced70
    EXTENTS:
    (0):32897

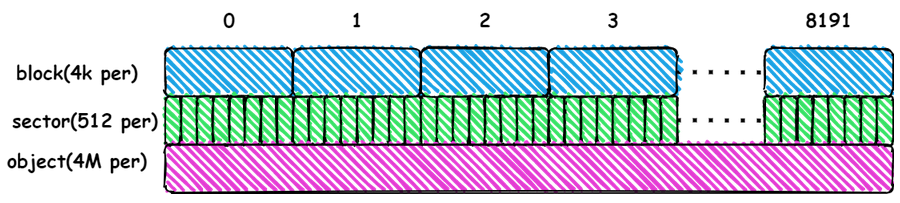

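4. Mapping between Ceph objects and ext4 blocks

The smallest unit of an ext4 partition is the block, 4 KB by default, which is 8 contiguous sectors on disk. A Ceph object is 4 MB by default, so one object can hold 1024 blocks, or 8192 sectors.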

    Disk /dev/rbd0: 1 GiB, 1073741824 bytes, 2097152 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 4194304 bytes / 4194304 bytes
    Disklabel type: dos
    Disk identifier: 0x5510f42b

    Device       Boot Start     End Sectors  Size Id Type
    /dev/rbd0p1        8192 2097151 2088960 1020M 83 Linux

This can be verified with the test.txt file from above:

Start sector = partition start + block number * 8 = 8192 + 32897 * 8 = 271368

End sector = partition start + (block number + 1) * 8 - 1 = 8192 + 32898 * 8 - 1 = 271375

    root@xxxxxx:~# dd if=/dev/rbd0 bs=512 skip=271368 | hexdump -C -n 1000
    00000000  68 65 6c 6c 6f 20 77 6f  72 6c 64 6c 73 6c 73 6c  |hello worldlslsl|
    00000010  73 6c 73 20 2d 2d 2d 2d  2d 2d 2d 2d 20 66 75 63  |sls -------- fuc|
    00000020  6b 20 66 75 66 75 66 66  66 66 66 66 66 0a 66 64  |k fufufffffff.fd|
    00000030  64 66 64 0a 00 00 00 00  00 00 00 00 00 00 00 00  |dfd.............|
    00000040  00 00 00 00 00 00 00 00  00 00 00 00 00 00 00 00  |................|
    *
    000003e8

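Sectors are contiguous within a Ceph object, and one 4 MB object holds 8192 sectors, so the object index is the sector number divided by 8192 (integer division):

Start object = start sector / 8192 = 271368 / 8192 = 33

End object = end sector / 8192 = 271375 / 8192 = 33

Offset within the object = start sector % 8192 = 1032 sectors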
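The whole mapping from an ext4 block number to a Ceph object can be sketched as below. It assumes the sizes stated in this section (512-byte sectors, 4096-byte blocks, 4 MiB objects, so 8192 sectors per object) and that objects are laid out back to back from sector 0 of /dev/rbd0; the function name is hypothetical and no Ceph API is involved.

```python
SECTOR_SIZE = 512
BLOCK_SIZE = 4096
OBJECT_SIZE = 4 * 1024 * 1024

SECTORS_PER_BLOCK = BLOCK_SIZE // SECTOR_SIZE    # 8
SECTORS_PER_OBJECT = OBJECT_SIZE // SECTOR_SIZE  # 8192

def block_to_object(block_number, partition_start):
    """Map an ext4 block number to disk sectors and to an object index and offset."""
    first_sector = partition_start + block_number * SECTORS_PER_BLOCK
    last_sector = first_sector + SECTORS_PER_BLOCK - 1
    first_object, offset_sectors = divmod(first_sector, SECTORS_PER_OBJECT)
    last_object = last_sector // SECTORS_PER_OBJECT
    return first_sector, last_sector, first_object, last_object, offset_sectors

# Block 32897 holds test.txt; the partition /dev/rbd0p1 starts at sector 8192.
print(block_to_object(32897, 8192))
```

Because a 4 KiB block is aligned to 8 sectors and 8192 is a multiple of 8, a single block never straddles two objects as long as the partition start is itself a multiple of 8 sectors, as it is here.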

Command reference

fdisk -l dumpe2fs tree stat dd debugfs

Conclusion

The ext4 file system is not complicated, and analyzing its storage structures gives a better understanding of the operating system.