使用Langchain实现AI搜索,提升Langchain使用技巧,改造AI搜索实现----4

search-api 国内不能使用

使用zhipu 的 web-search-pro 进行搜索

import os

from typing import List

from pydantic import BaseModel

from langchain.prompts import PromptTemplate

from langchain_community.llms.tongyi import Tongyi

from langchain_core.runnables import RunnablePassthrough

from serpapi import client

# 搜索 使用智普的

import requests

from zhipuai import ZhipuAI

# 通义获取api key

import os

from dotenv import find_dotenv, load_dotenv

load_dotenv(find_dotenv())

DASHSCOPE_API_KEY = os.environ["DASHSCOPE_API_KEY"]

api_key = "fde24905ae3c5af19145593f767cdfde.NlFmUfzSqkJmrhb"

# # 调用web-search-pro获取搜索结果

def get_search_results(query: str):

msg = [

{

"role": "user",

"content": query,

}

]

tool = "web-search-pro"

url = "https://open.bigmodel.cn/api/paas/v4/tools"

data = {

"tool": tool,

"stream": False,

"messages": msg

}

resp = requests.post(

url,

json=data,

headers={'Authorization': api_key},

timeout=300

)

res = eval(resp.content.decode()) # 转换成字典

list_v = res.get("choices")

for mes in list_v:

value = mes["message"]

tool_c = value.get("tool_calls")

for tool_data in tool_c:

result_v = tool_data.get("search_result")

for dict_v in result_v:

# print(dict_v["title"])

# print(dict_v["content"])

return dict_v

# get_search_results(query="易建联什么时候退役的")

# 1.定义模型

model = Tongyi(model_name='qwen-max', model_kwargs={'temperature': 0.01})

# 2 定义提示

prompt_tlp = PromptTemplate.from_template(

"根据搜索引擎的结果,回答用户的问题。\nSEARCH_RESULTS:{search_result}\nUSER_QUERY:{query}"

)

# 创建搜索Chain

search_chain = {

"search_result": get_search_results, # 引入搜索大模型结果计算

"query": RunnablePassthrough() # 获取变量值。 相当于get_search_results, 获取的结果的变量

} | prompt_tlp | model

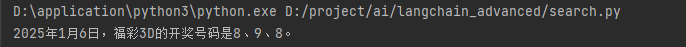

# query = '2025年1月6日,福彩3D开奖号是多少?'

query = '清明上河图密码是什么意思?'

res = search_chain.invoke(query)

print(res)

问一个目前热播的事情

![]()

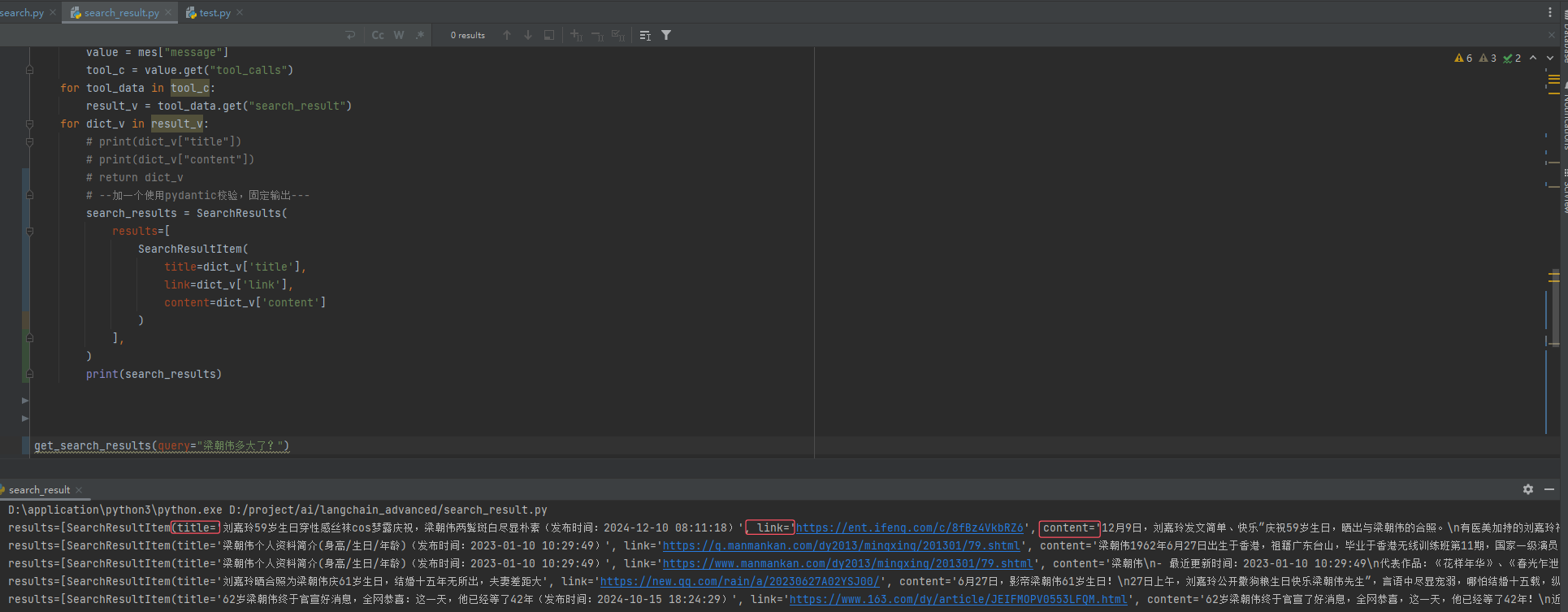

解耦搜索引擎

就是,加一个 pydantic 返回参数校验

定义输出,数据格式

class SearchResultItem(BaseModel):

title: str

link: str

snippet: str # 摘要

class SearchResults(BaseModel):

results: List[SearchResultItem]代码

固定输出 title, link, content

for dict_v in result_v:

# print(dict_v["title"])

# print(dict_v["content"])

# return dict_v

# --加一个使用pydantic校验,固定输出---

search_results = SearchResults(

results=[

SearchResultItem(

title=dict_v['title'],

link=dict_v['link'],

content=dict_v['content']

)

],

)

print(search_results)

序列化之后输出的数据,然后进行计算

from typing import List

from pydantic import BaseModel

from langchain.prompts import PromptTemplate

from langchain_community.llms.tongyi import Tongyi

from langchain_core.runnables import RunnablePassthrough

# 搜索 使用智普的

import requests

from zhipuai import ZhipuAI

# 通义获取api key

import os

from dotenv import find_dotenv, load_dotenv

load_dotenv(find_dotenv())

DASHSCOPE_API_KEY = os.environ["DASHSCOPE_API_KEY"]

api_key = "fde24905ae3c5af1914559f767cdfde.NlFmUfz3SqkJmrhb"

# pydantic,搜索结果数据模型model

class SearchResultItem(BaseModel): # 存储单条搜索结果

title: str

link: str

content: str # 摘要

class SearchResults(BaseModel): # 存储多条搜索结果

results: List[SearchResultItem]

# # 调用web-search-pro获取搜索结果

def get_search_results(query: str) -> SearchResults:

msg = [

{

"role": "user",

"content": query,

}

]

tool = "web-search-pro"

url = "https://open.bigmodel.cn/api/paas/v4/tools"

data = {

"tool": tool,

"stream": False,

"messages": msg

}

resp = requests.post(

url,

json=data,

headers={'Authorization': api_key},

timeout=300

)

res = eval(resp.content.decode()) # 转换成字典

list_v = res.get("choices")

for mes in list_v:

value = mes["message"]

tool_c = value.get("tool_calls")

for tool_data in tool_c:

result_v = tool_data.get("search_result")

for dict_v in result_v:

# print(dict_v["title"])

# print(dict_v["content"])

# return dict_v

# -----------加一个使用pydantic校验,序列化,固定输出---

search_results = SearchResults(

results=[

SearchResultItem(

title=dict_v['title'],

link=dict_v['link'],

content=dict_v['content']

)

],

)

# print(search_results)

return search_results

# get_search_results(query="梁朝伟多大了?")

# 1.定义模型

model = Tongyi(model_name='qwen-max', model_kwargs={'temperature': 0.01})

# 2 定义提示

prompt_tlp = PromptTemplate.from_template(

"""

根据搜索引擎的结果,回答用户的问题。\n

SEARCH_RESULTS: {search_result}\n

USER_QUERY: {query}

"""

)

# 创建搜索Chain

search_chain = {

"search_result": lambda x: get_search_results(x),

"query": lambda x: x,

} | prompt_tlp | model

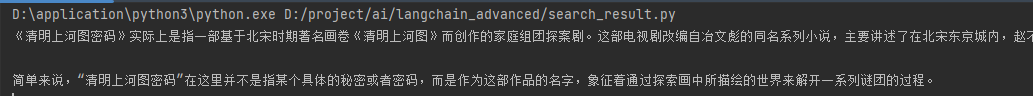

# query = '2025年1月6日,福彩3D开奖号是多少?'

query = '清明上河图密码是什么意思?'

res = search_chain.invoke(query)

print(res)

end...

本文来自博客园,作者:王竹笙,转载请注明原文链接:https://www.cnblogs.com/edeny/p/18657244

浙公网安备 33010602011771号

浙公网安备 33010602011771号