Installing ELK on CentOS 7

Elasticsearch

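Elasticsearch is an open-source, distributed search engine for storing, searching, and analyzing data. Its features include distributed operation, near-zero configuration, automatic node discovery, automatic index sharding, index replicas, a RESTful API, multiple data sources, and automatic load balancing of search requests.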

Logstash

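A data collection engine. It dynamically collects data from a variety of sources. It then filters, parses, enriches, and normalizes that data, and stores the result wherever the user specifies.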

Filebeat

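Filebeat is a newer member of the ELK stack: a lightweight, open-source log shipper built from the Logstash-Forwarder source code as its replacement. Install Filebeat on each server whose logs you want to collect and point it at the log directories or files. Filebeat then reads the data and forwards it quickly to Logstash for parsing, or sends it directly to Elasticsearch for centralized storage and analysis.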

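Filebeat is part of the Beats family, which currently includes six tools:

Packetbeat (collects network traffic data)

Metricbeat (collects system-, process-, and filesystem-level data such as CPU and memory usage)

Filebeat (collects data from files)

Winlogbeat (collects Windows event log data)

Auditbeat (lightweight audit log shipper)

Heartbeat (lightweight shipper for server health/uptime checks)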

Kibana

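Kibana provides a user-friendly web interface for analyzing the logs supplied by Logstash, Beats, and Elasticsearch. It helps you summarize, analyze, and search important log data.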

Preparing the environment

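1. Edit /etc/security/limits.conf

Open the file with `vim /etc/security/limits.conf`. If the following entries already exist, change their values to 65536. If they do not exist, add them:

```
* hard nofile 65536
* soft nofile 65536
* soft nproc 65536
* hard nproc 65536
```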
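The "change it if present, otherwise add it" rule can be scripted so that it is safe to re-run. The following is a minimal sketch of a hypothetical `set_limit` helper. It treats a line as matching when its first three fields (domain, type, item) are equal, leaves commented-out lines alone, and writes the updated entry with single spaces.

```shell
#!/usr/bin/env bash
# set_limit FILE DOMAIN TYPE ITEM VALUE  (hypothetical helper)
# Rewrites the value of every uncommented limits.conf entry whose first three
# fields equal DOMAIN TYPE ITEM; appends a new entry if none matched.
set_limit() {
  local file="$1" domain="$2" type="$3" item="$4" value="$5"
  local tmp status=0
  tmp=$(mktemp) || return 1
  awk -v d="$domain" -v t="$type" -v i="$item" -v v="$value" '
    # Matching entry: print it again with the new value
    $1 == d && $2 == t && $3 == i { print d, t, i, v; found = 1; next }
    # Any other line (including comments) is kept unchanged
    { print }
    # No matching entry anywhere in the file: append one
    END { if (!found) print d, t, i, v }
  ' "$file" > "$tmp" && cat "$tmp" > "$file" || status=$?
  rm -f "$tmp"
  return "$status"
}
```

For example, run `set_limit /etc/security/limits.conf '*' soft nofile 65536` as root, and repeat it for each of the four entries. These PAM limits apply to login sessions. Services started by systemd take their limits from the unit file instead; the Elasticsearch 6.x RPM unit sets `LimitNOFILE=65536`.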

2. Edit /etc/sysctl.conf

Open the file with `vim /etc/sysctl.conf` and add:

```
vm.max_map_count = 262144
net.core.somaxconn = 65535
net.ipv4.ip_forward = 1
```

3. Run `sysctl -p` to apply the settings above.
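It is worth confirming that the running kernel now has values at least as high as required. Elasticsearch's bootstrap checks enforce vm.max_map_count ≥ 262144 once `network.host` is bound to a non-loopback address, as it is later in this guide. The following is a sketch of a hypothetical `check_sysctl_min` helper that reads the live value from /proc/sys. The optional third argument, an alternate root directory, exists only to make the helper testable.

```shell
#!/usr/bin/env bash
# check_sysctl_min KEY MIN [PROC_SYS_ROOT]  (hypothetical helper)
# Reads the live value of a dotted sysctl KEY from /proc/sys (or from
# PROC_SYS_ROOT, e.g. a test directory) and fails if it is below MIN.
# Exit status: 0 = ok, 1 = too low, 2 = key could not be read.
check_sysctl_min() {
  local key="$1" min="$2" root="${3:-/proc/sys}"
  local path="${root}/${key//.//}"   # vm.max_map_count -> vm/max_map_count
  local current
  if ! current=$(cat "$path" 2>/dev/null); then
    echo "cannot read $path" >&2
    return 2
  fi
  if [ "$current" -lt "$min" ]; then
    echo "$key is $current, expected at least $min" >&2
    return 1
  fi
  echo "$key = $current (ok)"
}
```

Usage: `check_sysctl_min vm.max_map_count 262144 && check_sysctl_min net.core.somaxconn 65535`.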

Install JDK 8 for Linux

Follow this guide to install JDK 8: http://www.dyfblogs.com/post/centos_install_jdk8/

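Download version 6.2.4 of Elasticsearch, Logstash, and Kibana

Note: Elasticsearch, Kibana, and Filebeat must all use the same version.

Architecture: ELK + Filebeat. Filebeat runs on every node, collects that node's logs, and ships them to Logstash.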

```
wget https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-6.2.4.rpm
wget https://artifacts.elastic.co/downloads/kibana/kibana-6.2.4-x86_64.rpm
wget https://artifacts.elastic.co/downloads/logstash/logstash-6.2.4.rpm
```

The Linux nodes whose logs will be collected also need Filebeat:

```
wget https://artifacts.elastic.co/downloads/beats/filebeat/filebeat-6.2.4-x86_64.rpm
```

```
# ll
total 270628
-rw-r--r-- 1 root root 28992177 Dec 30 23:27 elasticsearch-6.2.4.rpm
-rw-r--r-- 1 root root 12699052 Dec 30 23:28 filebeat-6.2.4-x86_64.rpm
-rw-r--r-- 1 root root 87218216 Dec 30 23:27 kibana-6.2.4-x86_64.rpm
-rw-r--r-- 1 root root 148204622 Dec 30 23:28 logstash-6.2.4.rpm
```
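The `NOKEY` warnings printed by rpm during installation only mean that the Elastic signing key has not been imported. You can import it with `rpm --import https://artifacts.elastic.co/GPG-KEY-elasticsearch`. To also check that the downloads are intact, Elastic publishes a SHA-512 checksum file alongside each artifact: the download URL with `.sha512` appended. The following is a minimal sketch that assumes those files have been downloaded next to the packages. `verify_pkg` is a hypothetical name.

```shell
#!/usr/bin/env bash
# verify_pkg FILE  (hypothetical helper)
# Verifies FILE against FILE.sha512, a "<hash>  <filename>" checksum file
# downloaded from the same URL as FILE with ".sha512" appended.
verify_pkg() {
  local file="$1"
  # sha512sum -c resolves the file name inside the .sha512 file relative to
  # the current directory, so run it from the package's own directory.
  ( cd "$(dirname "$file")" && sha512sum -c "$(basename "$file").sha512" )
}
```

Typical use, after fetching each `.sha512` file with wget: `for f in elasticsearch-6.2.4.rpm kibana-6.2.4-x86_64.rpm logstash-6.2.4.rpm filebeat-6.2.4-x86_64.rpm; do verify_pkg "$f"; done`.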

Copy filebeat-6.2.4-x86_64.rpm to every node whose logs you want to collect.

Install ELK

1. Install Elasticsearch

```
# rpm -ivh elasticsearch-6.2.4.rpm
warning: elasticsearch-6.2.4.rpm: Header V4 RSA/SHA512 Signature, key ID d88e42b4: NOKEY
Preparing... ################################# [100%]
Creating elasticsearch group... OK
Creating elasticsearch user... OK
Updating / installing...
1:elasticsearch-0:6.2.4-1 ################################# [100%]
### NOT starting on installation,
### please execute the following statements to configure elasticsearch service to start automatically using systemd
sudo systemctl daemon-reload
sudo systemctl enable elasticsearch.service
### You can start elasticsearch service by executing
sudo systemctl start elasticsearch.service
```

2. Install Kibana

```
# rpm -ivh kibana-6.2.4-x86_64.rpm
warning: kibana-6.2.4-x86_64.rpm: Header V4 RSA/SHA512 Signature, key ID d88e42b4: NOKEY
Preparing... ################################# [100%]
Updating / installing...
1:kibana-6.2.4-1 ################################# [100%]
```

3. Install Logstash

```
# rpm -ivh logstash-6.2.4.rpm
warning: /home/elk_files/rpm_type/logstash-6.2.4.rpm: Header V4 RSA/SHA512 Signature, key ID d88e42b4: NOKEY
Preparing... ################################# [100%]
Updating / installing...
1:logstash-1:6.2.4-1 ################################# [100%]
Using provided startup.options file: /etc/logstash/startup.options
Successfully created system startup script for Logstash
```

4. Create the directories where ELK stores its data and logs

```
# mkdir -pv /home/data/elasticsearch/{data,logs}
mkdir: created directory ‘/home/data’
mkdir: created directory ‘/home/data/elasticsearch’
mkdir: created directory ‘/home/data/elasticsearch/data’
mkdir: created directory ‘/home/data/elasticsearch/logs’
# mkdir -pv /home/data/logstash/{data,logs}
mkdir: created directory ‘/home/data/logstash’
mkdir: created directory ‘/home/data/logstash/data’
mkdir: created directory ‘/home/data/logstash/logs’
```

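5. Give the service users ownership of /home/data/elasticsearch and /home/data/logstash

The RPM packages already create the `elasticsearch` and `logstash` users that the services run as (note the `Creating elasticsearch user... OK` line in the Elasticsearch install output). Hand the directories to those users:

```
chown -R elasticsearch:elasticsearch /home/data/elasticsearch
chown -R logstash:logstash /home/data/logstash
```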
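Before starting the services, confirm that nothing under the data directories is still owned by root. The services run as the `elasticsearch` and `logstash` users and need to write there. The following is a sketch of a hypothetical `check_owner` helper built on `find ! -user`.

```shell
#!/usr/bin/env bash
# check_owner DIR USER  (hypothetical helper)
# Lists every path under DIR (DIR included) not owned by USER.
# Exit status: 0 = all owned by USER, 1 = mismatches found, 2 = unknown user.
check_owner() {
  local dir="$1" user="$2" wrong
  # find -user errors out on unknown names, so check the user first
  if ! id -u "$user" >/dev/null 2>&1; then
    echo "no such user: $user" >&2
    return 2
  fi
  wrong=$(find "$dir" ! -user "$user" -print)
  if [ -n "$wrong" ]; then
    printf 'not owned by %s:\n%s\n' "$user" "$wrong" >&2
    return 1
  fi
}
```

Usage: `check_owner /home/data/elasticsearch elasticsearch && check_owner /home/data/logstash logstash`.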

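Edit the ELK configuration files

1. Edit the Elasticsearch configuration

Open `vi /etc/elasticsearch/elasticsearch.yml` and point the data and log paths at the directories created above:

```yaml
path.data: /home/data/elasticsearch/data
path.logs: /home/data/elasticsearch/logs
network.host: 0.0.0.0
http.port: 9200
```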

2. Edit the Logstash configuration

Open `vi /etc/logstash/logstash.yml` and set:

```yaml
path.data: /home/data/logstash/data
path.logs: /home/data/logstash/logs
path.config: /etc/logstash/conf.d
```

Create the pipeline file with `vim /etc/logstash/conf.d/logstash.conf` and add the following content:

```
input {
  beats {
    port => 5044
    codec => plain {
      charset => "UTF-8"
    }
  }
}

output {
  elasticsearch {
    hosts => "127.0.0.1:9200"
    manage_template => false
    index => "%{[@metadata][beat]}-%{+YYYY.MM.dd}"
    document_type => "%{[@metadata][type]}"
  }
}
```
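The `index` setting names each index after the shipping Beat plus the event date, so Filebeat events land in an index such as `filebeat-2018.12.30`. Logstash formats `%{+YYYY.MM.dd}` from the event's `@timestamp`, which is in UTC, so near midnight the index date can differ from the local date. The following is a small hypothetical helper, `expected_index`, that computes the name. It assumes GNU `date`, as shipped with CentOS.

```shell
#!/usr/bin/env bash
# expected_index BEAT [DATE]  (hypothetical helper)
# Prints the index name that index => "%{[@metadata][beat]}-%{+YYYY.MM.dd}"
# produces for events dated DATE (any GNU date string, default: now).
# UTC is used because Logstash formats @timestamp in UTC.
expected_index() {
  local beat="$1" when="${2:-now}"
  printf '%s-%s\n' "$beat" "$(date -u -d "$when" +%Y.%m.%d)"
}
```

Once data is flowing, `curl -s "http://127.0.0.1:9200/_cat/indices/$(expected_index filebeat)?v"` should list today's index. Before starting Logstash, you can syntax-check the pipeline file with `/usr/share/logstash/bin/logstash --path.settings /etc/logstash -t`, where `-t` is short for `--config.test_and_exit`.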

3. Edit the Kibana configuration

Open `vi /etc/kibana/kibana.yml` and set:

```yaml
server.port: 5601
server.host: "192.168.188.133"    # this server's IP address
elasticsearch.url: "http://localhost:9200"
```

Start ELK

1. Reload the systemd unit files

```
systemctl daemon-reload
```

2. Start Elasticsearch

```
systemctl start elasticsearch
```

3. Enable Elasticsearch at boot

```
systemctl enable elasticsearch
```

Note: the user Elasticsearch runs as must be the same user that owns /home/data/elasticsearch. For the RPM package, that user is `elasticsearch` by default.

4. Start Logstash

```
systemctl start logstash
```

5. Enable Logstash at boot

```
systemctl enable logstash
```

6. Start Kibana

```
systemctl start kibana
```

7. Enable Kibana at boot

```
systemctl enable kibana
```

Check that the ports are listening

```
ss -tnl
```
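The ports to look for are 9200 (Elasticsearch HTTP), 5044 (the Logstash beats input from logstash.conf), and 5601 (Kibana). Logstash in particular can take a while after `systemctl start` before it opens 5044. The following is a sketch of a hypothetical `missing_ports` helper. It reads `ss -tnl` output and prints whichever expected ports are not yet listening.

```shell
#!/usr/bin/env bash
# missing_ports PORT...  (hypothetical helper)
# Reads `ss -tnl` output on stdin and prints each PORT that has no listening
# socket. Exit status: 0 if every PORT is listening, 1 otherwise.
missing_ports() {
  local listening port status=0
  # Column 4 is "Local Address:Port"; keep only the text after the last ':'
  listening=$(awk 'NR > 1 { n = split($4, a, ":"); print a[n] }' | sort -u)
  for port in "$@"; do
    if ! grep -qx "$port" <<<"$listening"; then
      echo "$port"
      status=1
    fi
  done
  return "$status"
}
```

Usage: `ss -tnl | missing_ports 9200 5044 5601 && echo "all ELK ports are listening"`.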

查看elasticsearch状态

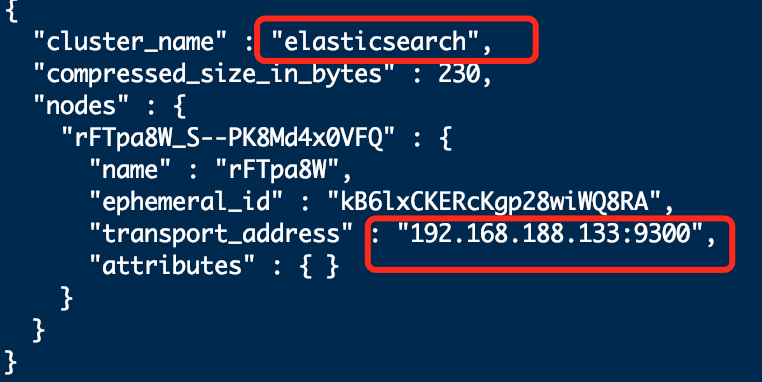

curl -XGET 'http://192.168.188.133:9200/_cluster/state/nodes?pretty'

查看elasticsearch的master

curl -XGET 'http://192.168.188.133:9200/_cluster/state/master_node?pretty'

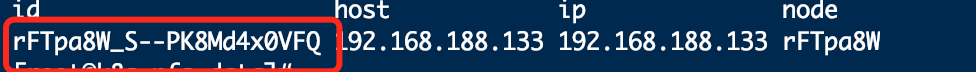

curl -XGET 'http://192.168.188.133:9200/_cat/master?v'

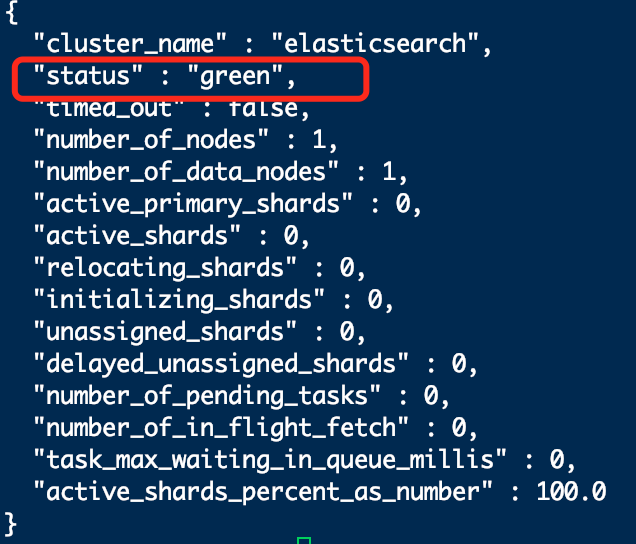

Check the cluster health

```
curl -XGET 'http://192.168.188.133:9200/_cat/health?v'
curl -XGET 'http://192.168.188.133:9200/_cluster/health?pretty'
```
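On a single node, health is typically `yellow` rather than `green` once indices exist. Elasticsearch 6.x defaults to one replica per shard and will not place a replica on the same node as its primary. The following is a sketch of a hypothetical `es_health_status` helper that extracts the `status` field from the `_cluster/health` response without needing jq.

```shell
#!/usr/bin/env bash
# es_health_status  (hypothetical helper)
# Reads _cluster/health JSON (pretty or compact) on stdin and prints the
# value of its "status" field: green, yellow, or red.
es_health_status() {
  # Join all lines first so the pretty-printed form is handled too
  tr -d '\n' | sed -n 's/.*"status"[[:space:]]*:[[:space:]]*"\([a-z]*\)".*/\1/p'
}
```

Usage: `curl -s 'http://192.168.188.133:9200/_cluster/health' | es_health_status`. The health API also accepts `wait_for_status=yellow&timeout=30s` to block until the cluster reaches at least that state.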

Install Filebeat on the nodes whose logs you want to collect

Change to the directory that contains filebeat-6.2.4-x86_64.rpm, then install it:

```
# rpm -ivh filebeat-6.2.4-x86_64.rpm
warning: filebeat-6.2.4-x86_64.rpm: Header V4 RSA/SHA512 Signature, key ID d88e42b4: NOKEY
Preparing... ################################# [100%]
Updating / installing...
1:filebeat-6.2.4-1 ################################# [100%]
```

Edit the Filebeat configuration

Open `vi /etc/filebeat/filebeat.yml` and change the following settings:

```yaml
filebeat.prospectors:
- type: log
  enabled: true
  paths:
    - /var/log/*.log
    - /var/log/messages
    - /var/log/containers/*.log

setup.kibana:
  host: "192.168.188.133:5601"

# Events go to Logstash, so comment out these two lines:
#output.elasticsearch:
  #hosts: ["localhost:9200"]

output.logstash:
  hosts: ["192.168.188.133:5044"]
```
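Filebeat accepts only one enabled output, which is why `output.elasticsearch` has to be commented out when `output.logstash` is used. The following is a sketch of a hypothetical `active_outputs` helper that lists the output sections still active in a filebeat.yml.

```shell
#!/usr/bin/env bash
# active_outputs FILE  (hypothetical helper)
# Prints each uncommented top-level output.<name> section in a filebeat.yml;
# commented-out lines such as "#output.elasticsearch:" are ignored.
active_outputs() {
  grep -oE '^output\.[A-Za-z_]+' "$1"
}
```

Usage: `[ "$(active_outputs /etc/filebeat/filebeat.yml | wc -l)" -eq 1 ]` should succeed. Filebeat 6.x also provides `filebeat test config` and `filebeat test output` subcommands for checking the file and the connection to Logstash.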

Start Filebeat and enable it at boot

```
systemctl start filebeat
systemctl enable filebeat
```

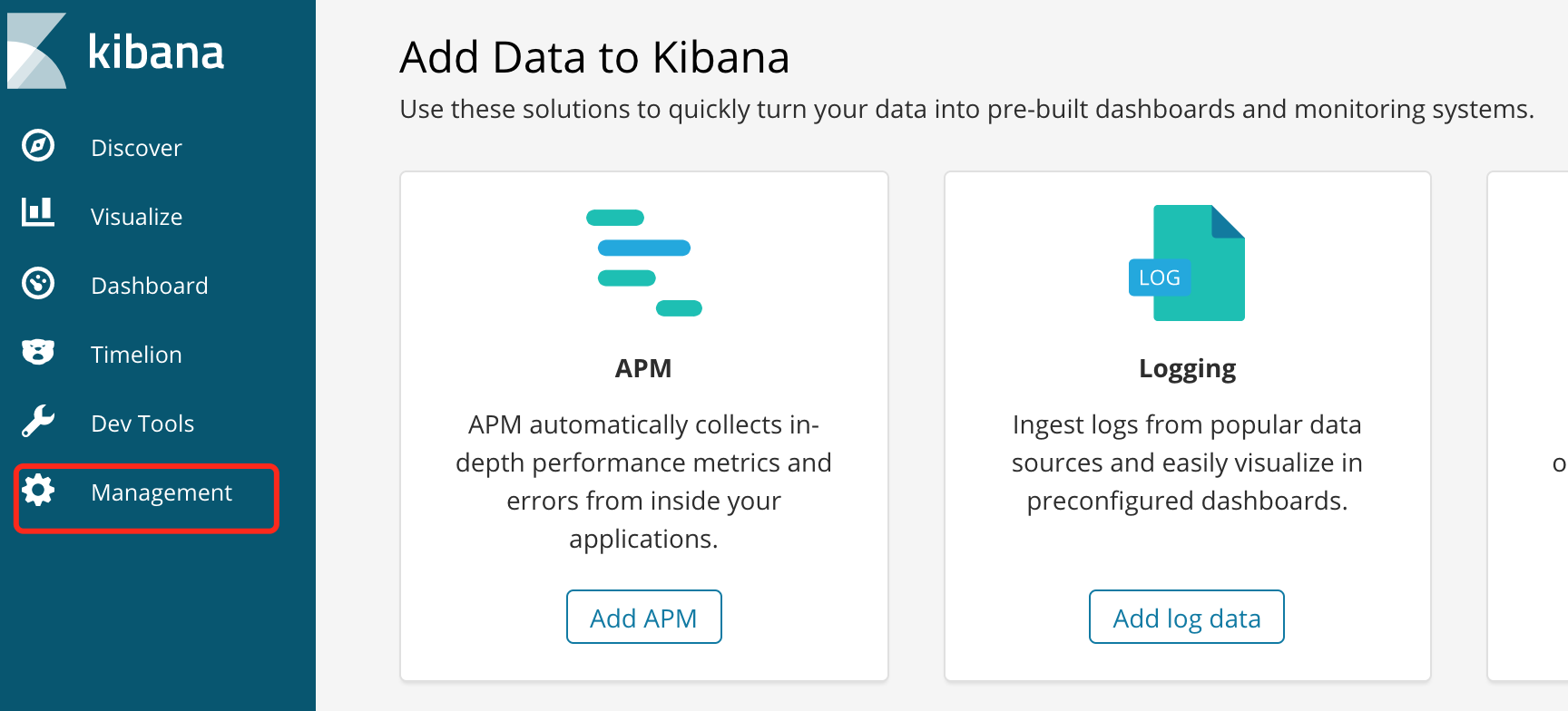

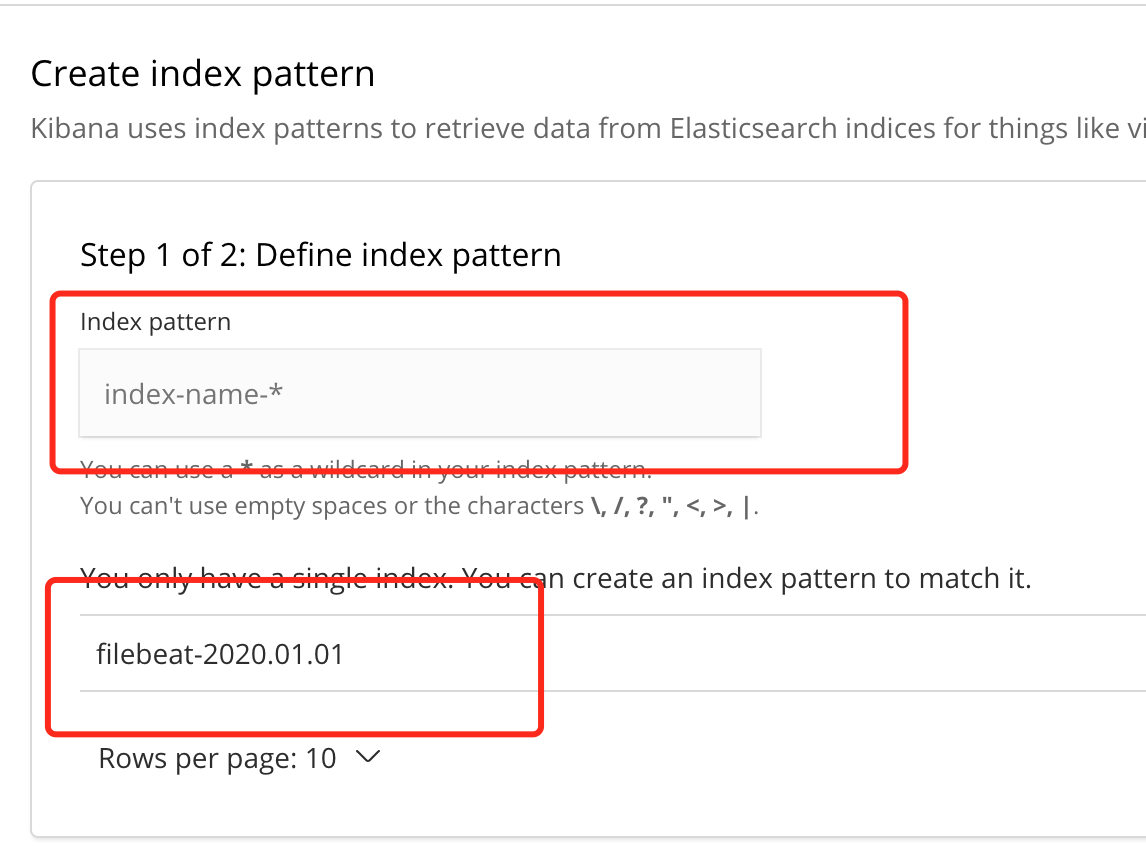

访问kibana: 192.168.188.133:5601

第一个框中输入filebeat-*, 然后点击Next step

然后选择@timestamp,点击create index pattern

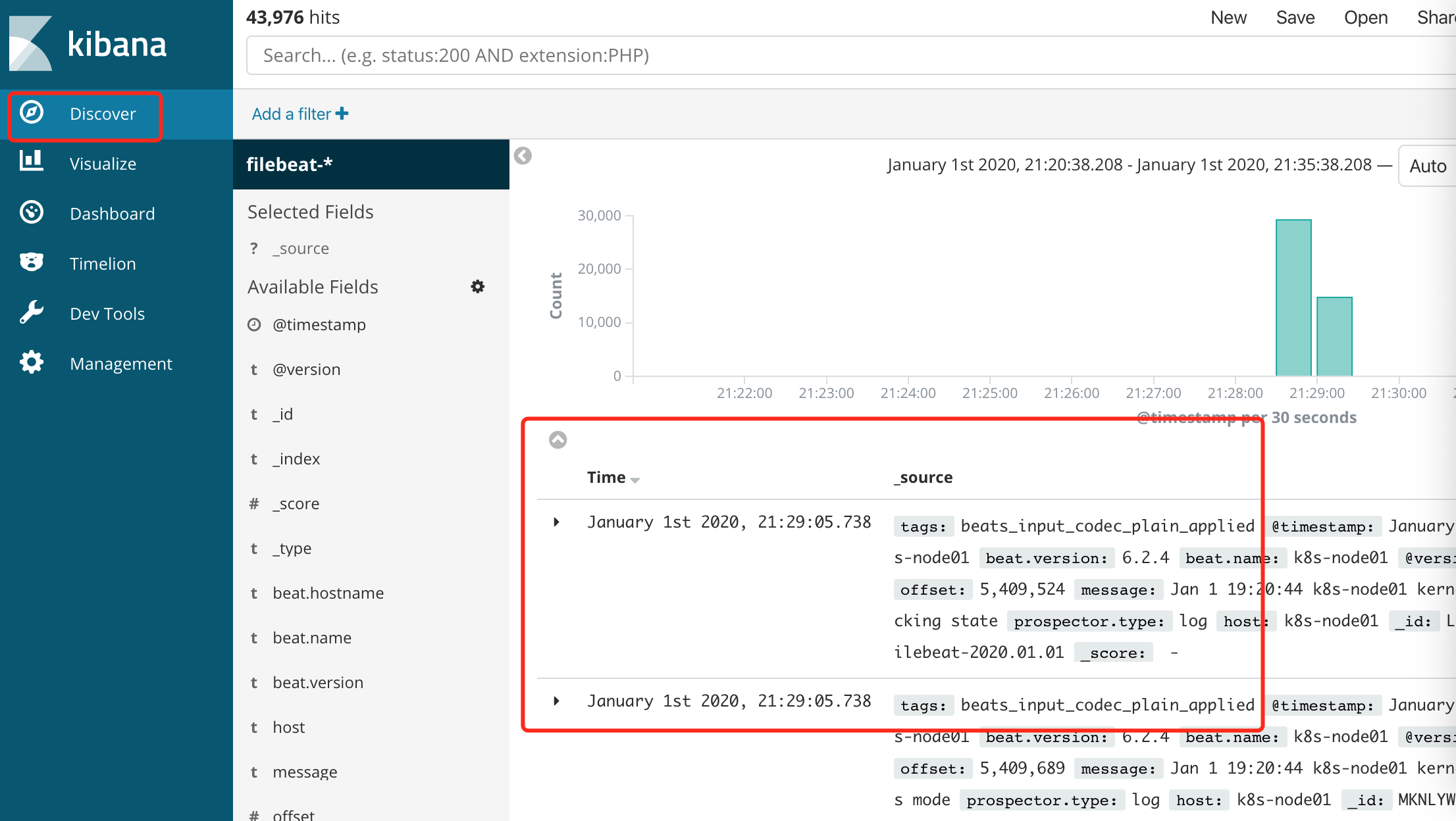

创建好后,点击左上角Discover,就可以看到如下图所示的日志内容了

Install and configure iptables

1. Install the iptables tools (skip this step if they are already installed)

```
yum -y install iptables iptables-services
```

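2. Edit /etc/sysconfig/iptables and add rules for the required ports

Open the file with `vim /etc/sysconfig/iptables` and add:

```
-A INPUT -p tcp -m state --state NEW -m tcp --dport 9200 -j ACCEPT
-A INPUT -p tcp -m state --state NEW -m tcp --dport 80 -j ACCEPT
-A INPUT -p tcp -m state --state NEW -m tcp --dport 5601 -j ACCEPT
-A INPUT -p tcp -m state --state NEW -m tcp --dport 5044 -j ACCEPT
```

Then restart the service so that the rules take effect: `systemctl restart iptables`.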
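The stock CentOS 7 /etc/sysconfig/iptables contains a catch-all `-A INPUT -j REJECT --reject-with icmp-host-prohibited` line. Because iptables evaluates rules top to bottom, ACCEPT lines placed after it never match. The following is a minimal sketch of a hypothetical `add_accept_rule` helper. It inserts a rule in the same form as above just before the first INPUT REJECT line, or before COMMIT if there is no such line, and skips rules that are already present. It assumes the file holds only the `*filter` table, as the default file does.

```shell
#!/usr/bin/env bash
# add_accept_rule FILE PORT  (hypothetical helper)
# Inserts an INPUT ACCEPT rule for TCP PORT before the first
# "-A INPUT -j REJECT" line (or before COMMIT if there is no such line).
# Assumes FILE holds only the *filter table, like the stock CentOS 7 file.
add_accept_rule() {
  local file="$1" port="$2"
  local rule="-A INPUT -p tcp -m state --state NEW -m tcp --dport ${port} -j ACCEPT"
  # Rule already present: nothing to do
  grep -qxF -- "$rule" "$file" && return 0
  local tmp status=0
  tmp=$(mktemp) || return 1
  awk -v rule="$rule" '
    !done && (/^-A INPUT -j REJECT/ || /^COMMIT/) { print rule; done = 1 }
    { print }
    END { if (!done) print rule }
  ' "$file" > "$tmp" && cat "$tmp" > "$file" || status=$?
  rm -f "$tmp"
  return "$status"
}
```

As root: `for p in 9200 5601 5044; do add_accept_rule /etc/sysconfig/iptables "$p"; done`, then restart the iptables service.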

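3. If the host uses firewalld instead of the iptables service, open the ELK ports in the public zone

```
firewall-cmd --zone=public --add-port=9200/tcp --permanent
firewall-cmd --zone=public --add-port=5601/tcp --permanent
firewall-cmd --zone=public --add-port=5044/tcp --permanent
```

4. Reload the firewall configuration

```
firewall-cmd --reload
```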
