TensorFlow 2.0: The ShortCut Structure, the BottleNeck Structure, and the ResNet50 Network

I. The ShortCut Structure

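ResNet uses a structure called the ShortCut Connection. A skip connection carries a block's input past a few stacked layers, which counters the degradation problem of very deep networks. At the end of the skip, the block input is added to the output of the stacked layers before that output is activated.

That pre-activation output y may have the same shape as the input, in which case the block is an identity block. It may also have a different shape, in which case the block is a conv block (written "cov block" / `cov_block` in this post). ResNet therefore has two kinds of shortcut.

When the shapes differ, a 1*1 convolution on the shortcut path reshapes the input so that the two tensors can be added. It changes the channel count, and also the height and width when it uses a stride. In this role the 1*1 convolution serves mainly to match shapes, not to learn spatial features.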

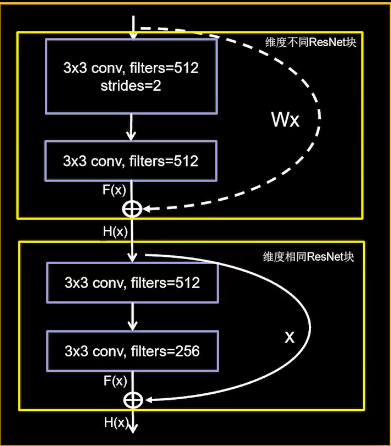

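In the figure, the input is a 256-channel feature map. Dashed lines denote shortcuts where the input and output shapes differ, and solid lines denote shortcuts where the shapes are the same.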
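The following sketch makes the 1*1 projection concrete. It is pure Python with no TensorFlow, and treats a feature map as nested lists of shape H×W×C. A 1*1 convolution is then the same C_in→C_out linear map applied independently at every pixel. The helper names (`conv1x1`, `add_maps`, `shape`) and the toy weights are hypothetical, chosen only for illustration. Height and width stay the same and only the channel count changes, which is what lets the projected input be added to a block output with more channels.

```python
def conv1x1(x, weights, bias):
    """1*1 convolution (stride 1) on an H x W x C_in map given as nested lists.

    weights[c_in][c_out] is the kernel and bias[c_out] the bias; the same
    linear map is applied independently at every pixel.
    """
    c_out = len(bias)
    out = []
    for row in x:
        out_row = []
        for pixel in row:
            out_row.append([
                sum(pixel[i] * weights[i][o] for i in range(len(pixel))) + bias[o]
                for o in range(c_out)
            ])
        out.append(out_row)
    return out


def add_maps(a, b):
    """Element-wise addition of two feature maps with identical shapes."""
    return [[[p + q for p, q in zip(pa, pb)] for pa, pb in zip(ra, rb)]
            for ra, rb in zip(a, b)]


def shape(x):
    """(H, W, C) of a nested-list feature map."""
    return (len(x), len(x[0]), len(x[0][0]))


# Block input: 2 x 2 map with 2 channels
block_input = [[[1.0, 2.0], [0.0, 1.0]],
               [[3.0, 0.0], [1.0, 1.0]]]
# Main-path output: 2 x 2 map with 3 channels, so a direct add is impossible
main_output = [[[0.5, 0.5, 0.5], [1.0, 1.0, 1.0]],
               [[0.0, 0.0, 0.0], [2.0, 2.0, 2.0]]]

# Toy 2 -> 3 projection (hypothetical weights, zero bias)
w = [[1.0, 0.0, 1.0],
     [0.0, 1.0, 1.0]]
b = [0.0, 0.0, 0.0]

projected = conv1x1(block_input, w, b)
out = add_maps(projected, main_output)
print(shape(block_input), shape(projected), shape(out))  # (2, 2, 2) (2, 2, 3) (2, 2, 3)
```

In a real network the projection weights are learned, and a BatchNormalization layer usually follows the 1*1 convolution.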

II. Implementing the ShortCut

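The implementation here uses input shapes from the CIFAR-10 dataset. The skip structure is simple: as in the figure in the previous section, it skips over two convolution layers.

How do we implement a skip connection whose dimensions differ? Compare the shape of the block input with the shape of the convolution output: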

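```python
# Inside ShortCut: compare the channel counts of the block input and the conv output
if block_input.shape[-1] == cov_output.shape[-1]:
    shortcut = block_input  # same shape: identity shortcut, add directly
else:
    # Different shape: a 1*1 convolution maps block_input to cov_output's channel count
    shortcut = Covunit(block_input, cov_output.shape[-1], (1, 1))
block_out = tf.keras.layers.Add()([shortcut, cov_output])
```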
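The check above compares only the channel count. If a convolution inside the block also uses a stride, height and width change too, and the 1*1 shortcut convolution then needs the same stride.

Below is a minimal pure-Python sketch of that decision, working on (H, W, C) shape tuples. `shortcut_config` is a hypothetical helper, and the CIFAR-10-style shapes (32×32×3) are example inputs only. It relies on the fact that a 1*1 convolution with stride s produces ceil(n / s) positions along an axis of length n.

```python
import math


def shortcut_config(input_shape, output_shape):
    """Decide how the shortcut must transform the block input.

    Shapes are (H, W, C) tuples. Returns None for an identity shortcut,
    otherwise (filters, strides) for a 1*1 projection convolution.
    """
    in_h, in_w, _ = input_shape
    out_h, out_w, out_c = output_shape
    if input_shape == output_shape:
        return None  # identity block: add directly
    strides = []
    for size_in, size_out in ((in_h, out_h), (in_w, out_w)):
        s = max(1, round(size_in / size_out))  # candidate stride
        # A 1*1 conv with stride s yields ceil(size_in / s) positions
        if math.ceil(size_in / s) != size_out:
            raise ValueError("spatial size cannot be matched by a strided 1*1 conv")
        strides.append(s)
    return out_c, tuple(strides)


# CIFAR-10 input into a block whose convs output 16 channels: channels only
print(shortcut_config((32, 32, 3), (32, 32, 16)))   # (16, (1, 1))
# Same-shape block: identity shortcut
print(shortcut_config((32, 32, 16), (32, 32, 16)))  # None
# A stride-2 block that also doubles the channels
print(shortcut_config((32, 32, 16), (16, 16, 32)))  # (32, (2, 2))
```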

Code example:

```python
import tensorflow as tf


# Builds one convolution unit: Conv2D -> BatchNormalization -> optional activation
def Covunit(unit_input, filters, kernel_size, activation=None):
    '''
    :param unit_input: input tensor
    :param filters: number of convolution kernels
    :param kernel_size: kernel size, e.g. (3, 3)
    :param activation: activation function name; if None, no activation is applied
    :return: output tensor of the unit
    '''
    # padding='same' keeps height and width unchanged
    unit_cov = tf.keras.layers.Conv2D(filters, kernel_size, padding='same')(unit_input)
    unit_bn = tf.keras.layers.BatchNormalization()(unit_cov)  # batch normalization
    if activation:
        return tf.keras.layers.Activation(activation)(unit_bn)
    return unit_bn


# ResNet shortcut block; a network may contain several of these blocks,
# so it is written as a reusable function
def ShortCut(block_input, filters=None, activations=None):
    '''
    :param block_input: input tensor of the block
    :param filters: list with the number of filters of each conv layer in the block
    :param activations: list with the activation of each conv layer;
                        the last layer usually needs none (None)
    :return: output tensor of the block
    '''
    if filters:
        if activations is None:
            activations = [None] * len(filters)
        layer_input = block_input  # input of the first conv layer
        # Stack the conv layers one after another
        for n_filters, activation in zip(filters, activations):
            layer_input = Covunit(unit_input=layer_input, filters=n_filters,
                                  kernel_size=(3, 3), activation=activation)
        cov_output = layer_input  # output of the conv layers
    else:
        cov_output = block_input  # no conv layers

    # Same channel count: add directly; otherwise project the input with a 1*1 conv
    if block_input.shape[-1] == cov_output.shape[-1]:
        shortcut = block_input
    else:
        shortcut = Covunit(block_input, cov_output.shape[-1], (1, 1))
    return tf.keras.layers.Add()([shortcut, cov_output])
```
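As a sanity check on the code above, the parameter count of one ShortCut block (trainable plus non-trainable) can be worked out by hand. This pure-Python sketch rests on a few assumptions:

- a hypothetical call `ShortCut(x, filters=[16, 32], activations=['relu', None])` on a CIFAR-10 input of shape (32, 32, 3);
- Keras defaults, i.e. every Conv2D has a bias, and BatchNormalization stores 4 values per channel (gamma, beta, moving mean, moving variance);
- the 1*1 projection is applied to the block input, as in the code above.

```python
def conv_params(kernel, c_in, c_out, bias=True):
    """Parameters of a Conv2D layer: kernel*kernel*c_in*c_out weights (+ c_out biases)."""
    return kernel * kernel * c_in * c_out + (c_out if bias else 0)


def bn_params(channels):
    """BatchNormalization keeps gamma, beta, moving mean and moving variance per channel."""
    return 4 * channels


def shortcut_block_params(c_in, filters):
    """Parameters of ShortCut(x, filters): 3*3 Covunits plus a 1*1 projection if needed."""
    total = 0
    channels = c_in
    for f in filters:
        total += conv_params(3, channels, f) + bn_params(f)  # one 3*3 Covunit
        channels = f
    if channels != c_in:  # projection shortcut: 1*1 Covunit on the block input
        total += conv_params(1, c_in, channels) + bn_params(channels)
    return total


print(shortcut_block_params(3, [16, 32]))   # 5536 (with projection)
print(shortcut_block_params(16, [16, 16]))  # 4768 (identity shortcut)
```

If those assumptions hold, `count_params()` on a Keras model wrapping just this block should report the same figure.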

III. The BottleNeck Structure

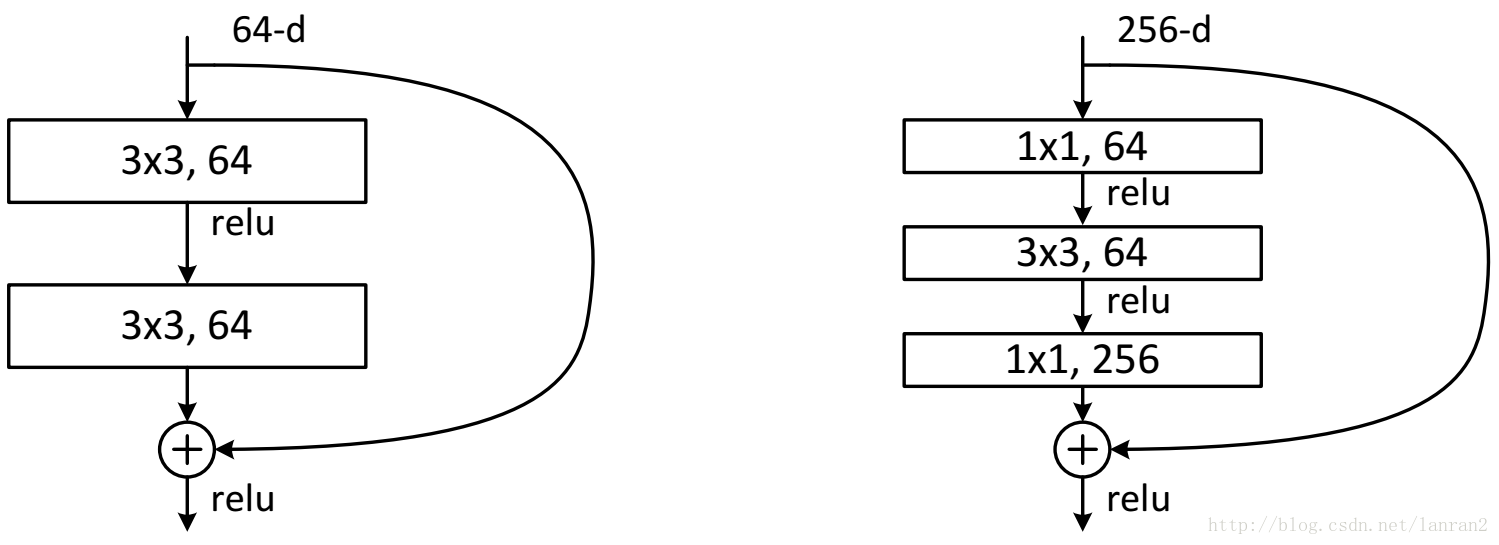

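A plain shortcut block (the left-hand figure) has a large parameter count and computational cost. A bottleneck structure (the right-hand figure) reduces the parameter count in three steps:

- a 1*1 convolution first reduces the number of channels;
- a 3*3 convolution then works on the reduced channels;
- a second 1*1 convolution restores the channel count.

The substitution is somewhat similar to replacing one 5*5 convolution with two 3*3 convolutions.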
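The saving can be shown with a little arithmetic. This sketch assumes a 256-channel block, as in the bottleneck figure, reduced to 64 channels inside the bottleneck, and ignores biases. It compares the bottleneck against a hypothetical plain block that keeps all 256 channels in both 3*3 convolutions. The last line checks the 5*5 analogy: two stacked 3*3 convolutions cover the same receptive field as one 5*5 convolution with fewer weights.

```python
def conv_weights(kernel, c_in, c_out):
    """Weight count of a convolution layer (biases ignored)."""
    return kernel * kernel * c_in * c_out


C = 256  # channels entering and leaving the block

plain = 2 * conv_weights(3, C, C)          # two 3*3 convs at full width
bottleneck = (conv_weights(1, C, 64)       # 1*1 reduce 256 -> 64
              + conv_weights(3, 64, 64)    # 3*3 on the reduced width
              + conv_weights(1, 64, C))    # 1*1 restore 64 -> 256
print(plain, bottleneck, round(plain / bottleneck, 1))  # 1179648 69632 16.9

# One 5*5 conv vs two stacked 3*3 convs at the same width
print(conv_weights(5, C, C), 2 * conv_weights(3, C, C))  # 1638400 1179648
```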

IV. The ResNet50 Network

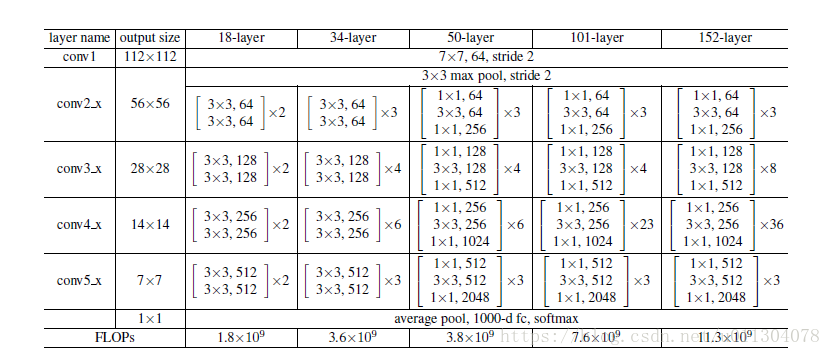

ResNet50 is a typical example of a deep network built from bottleneck blocks. Its architecture is shown in the figure.

Note the following points:

- Before the layer named COV1 (a 7*7 convolution with 64 filters and stride 2), the input is zero-padded by 3 pixels on each side.

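- The first bottleneck block of each stage should be a cov block, which adjusts the shape. The remaining bottleneck blocks should be identity blocks. For example, cov2_x has 3 bottleneck blocks: the first is a cov block and the other two are identity blocks.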
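Two quick checks follow from these notes: where the "50" comes from, and how the spatial size shrinks through the stages. This sketch makes three assumptions:

- a 224×224 input, the usual ImageNet size (the post does not state one);
- stride 2 in the first block of conv3_x, conv4_x and conv5_x, as in the ResNet paper;
- a 3*3 max pooling layer with stride 2 after conv1.

It uses the standard output-size formula floor((n + 2p - k) / s) + 1. Without padding in the max pooling layer, conv2_x runs at 55×55. Padding the pooling by 1 pixel gives the 56×56 listed in the paper's table.

```python
def out_size(size, kernel, stride, pad=0):
    """Output length of a conv/pool layer: floor((size + 2*pad - kernel) / stride) + 1."""
    return (size + 2 * pad - kernel) // stride + 1


blocks_per_stage = [3, 4, 6, 3]  # conv2_x .. conv5_x
depth = 1 + 3 * sum(blocks_per_stage) + 1  # conv1 + 3 convs per bottleneck + final fc
print(depth)  # 50


def stage_sizes(input_size, pool_pad):
    """Spatial size at the output of conv2_x .. conv5_x."""
    size = out_size(input_size, 7, 2, pad=3)     # conv1 after 3-pixel zero padding
    size = out_size(size, 3, 2, pad=pool_pad)    # 3*3 max pooling, stride 2
    sizes = [size]                               # conv2_x keeps stride 1
    for _ in range(3):                           # first block of conv3_x..conv5_x, stride 2
        size = out_size(size, 1, 2)
        sizes.append(size)
    return sizes


print(stage_sizes(224, pool_pad=0))  # [55, 28, 14, 7]
print(stage_sizes(224, pool_pad=1))  # [56, 28, 14, 7]
```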

V. Implementing ResNet50

Because there are two different kinds of skip connection, two functions are defined here: cov_block and identity_block.

Code example:

```python
import tensorflow as tf
from tensorflow.keras import layers


def cov_block(input_tensor, filters, kernel_size=(3, 3), strides=(1, 1), name=None):
    '''
    Bottleneck block whose input and output channel counts differ.
    input_tensor: input tensor
    filters: channel counts of the three convolutions, e.g. [64, 64, 256]
    strides: stride of the first 1*1 conv and of the shortcut conv; (2, 2) halves height and width
    '''
    filter1, filter2, filter3 = filters  # number of kernels in each conv layer

    # Bottleneck 1*1 conv: reduces the channels and carries the stride
    x = layers.Conv2D(filter1, (1, 1), strides=strides, name=name + 'bot_cov1')(input_tensor)
    x = layers.BatchNormalization(name=name + 'bottle_cov1_bn')(x)
    x = layers.Activation('relu')(x)

    # Bottleneck 3*3 conv: padding='same' keeps height and width
    x = layers.Conv2D(filter2, kernel_size, padding='same', name=name + 'bot_cov2')(x)
    x = layers.BatchNormalization(name=name + 'bottle_cov2_bn')(x)
    x = layers.Activation('relu')(x)

    # Bottleneck 1*1 conv: restores the channels
    x = layers.Conv2D(filter3, (1, 1), name=name + 'bot_cov3')(x)
    x = layers.BatchNormalization(name=name + 'bottle_cov3_bn')(x)

    # Shortcut: a 1*1 conv (same stride) gives the input the main path's output shape
    shortcut = layers.Conv2D(filter3, (1, 1), strides=strides, name=name + 'bot_shortcut')(input_tensor)
    shortcut = layers.BatchNormalization(name=name + 'bottle_shortcut_bn')(shortcut)

    # Add, then activate
    x = layers.add([x, shortcut])
    x = layers.Activation('relu')(x)
    return x


def identity_block(input_tensor, filters, kernel_size=(3, 3), name=None):
    '''
    Bottleneck block whose input and output channel counts are the same.
    input_tensor: input tensor
    filters: channel counts of the three convolutions, e.g. [64, 64, 256];
             filters[2] must equal the input's channel count
    '''
    filter1, filter2, filter3 = filters  # number of kernels in each conv layer

    # Bottleneck 1*1 conv
    x = layers.Conv2D(filter1, (1, 1), name=name + 'bot_cov1')(input_tensor)
    x = layers.BatchNormalization(name=name + 'bottle_cov1_bn')(x)
    x = layers.Activation('relu')(x)

    # Bottleneck 3*3 conv: padding='same' keeps height and width
    x = layers.Conv2D(filter2, kernel_size, padding='same', name=name + 'bot_cov2')(x)
    x = layers.BatchNormalization(name=name + 'bottle_cov2_bn')(x)
    x = layers.Activation('relu')(x)

    # Bottleneck 1*1 conv
    x = layers.Conv2D(filter3, (1, 1), name=name + 'bot_cov3')(x)
    x = layers.BatchNormalization(name=name + 'bottle_cov3_bn')(x)

    # Input and output shapes match. The input was already batch-normalized before
    # the previous activation, so it is added directly without another normalization
    x = layers.add([x, input_tensor])
    x = layers.Activation('relu')(x)
    return x


def resnet50(input_tensor):
    # resnet50-cov1: zero padding of 3, then 7*7 conv with 64 filters, stride 2
    x = layers.ZeroPadding2D((3, 3))(input_tensor)
    x = layers.Conv2D(64, (7, 7), strides=(2, 2), name='resnet_cov1')(x)
    x = layers.BatchNormalization(name='resnet_cov1_bn')(x)
    x = layers.Activation('relu')(x)
    # 3*3 max pooling, stride 2
    x = layers.MaxPooling2D((3, 3), (2, 2))(x)

    # resnet50-cov2: stride 1, since the max pooling has already downsampled
    x = cov_block(x, [64, 64, 256], (3, 3), (1, 1), 'resnet_cov2_bot1')
    x = identity_block(x, [64, 64, 256], (3, 3), 'resnet_cov2_bot2')
    x = identity_block(x, [64, 64, 256], (3, 3), 'resnet_cov2_bot3')
    # resnet50-cov3: the first block halves height and width
    x = cov_block(x, [128, 128, 512], (3, 3), (2, 2), 'resnet_cov3_bot1')
    x = identity_block(x, [128, 128, 512], (3, 3), 'resnet_cov3_bot2')
    x = identity_block(x, [128, 128, 512], (3, 3), 'resnet_cov3_bot3')
    x = identity_block(x, [128, 128, 512], (3, 3), 'resnet_cov3_bot4')
    # resnet50-cov4
    x = cov_block(x, [256, 256, 1024], (3, 3), (2, 2), 'resnet_cov4_bot1')
    x = identity_block(x, [256, 256, 1024], (3, 3), 'resnet_cov4_bot2')
    x = identity_block(x, [256, 256, 1024], (3, 3), 'resnet_cov4_bot3')
    x = identity_block(x, [256, 256, 1024], (3, 3), 'resnet_cov4_bot4')
    x = identity_block(x, [256, 256, 1024], (3, 3), 'resnet_cov4_bot5')
    x = identity_block(x, [256, 256, 1024], (3, 3), 'resnet_cov4_bot6')
    # resnet50-cov5
    x = cov_block(x, [512, 512, 2048], (3, 3), (2, 2), 'resnet_cov5_bot1')
    x = identity_block(x, [512, 512, 2048], (3, 3), 'resnet_cov5_bot2')
    x = identity_block(x, [512, 512, 2048], (3, 3), 'resnet_cov5_bot3')
    return x


# Build the backbone for 224*224 RGB images
inputs = tf.keras.Input(shape=(224, 224, 3))
model = tf.keras.Model(inputs, resnet50(inputs))
```
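The parameter count of the backbone can be totalled by hand, up to cov5 and without a classifier, to check that the network built above really has ResNet50's size. This pure-Python sketch assumes Keras defaults as in the code above: every Conv2D has a bias, and every BatchNormalization has 4 parameters per channel. It also assumes a 1*1 projection shortcut only in the first block of each stage.

The total, 23,587,712, matches the figure commonly reported for Keras's built-in `tf.keras.applications.ResNet50(include_top=False)`. Adding a 1000-class Dense head brings it to 25,636,712.

```python
def conv_bn(kernel, c_in, c_out):
    """Conv2D (with bias) followed by BatchNormalization (4 params per channel)."""
    return kernel * kernel * c_in * c_out + c_out + 4 * c_out


def bottleneck(c_in, filters, projection):
    """Parameters of one bottleneck block; projection=True for a cov_block."""
    f1, f2, f3 = filters
    total = conv_bn(1, c_in, f1) + conv_bn(3, f1, f2) + conv_bn(1, f2, f3)
    if projection:  # cov_block: 1*1 conv + BN on the shortcut
        total += conv_bn(1, c_in, f3)
    return total


# (filters, number of blocks) for cov2_x .. cov5_x
stages = [([64, 64, 256], 3), ([128, 128, 512], 4),
          ([256, 256, 1024], 6), ([512, 512, 2048], 3)]

total = conv_bn(7, 3, 64)  # resnet_cov1 + its BN
channels = 64
for filters, n_blocks in stages:
    total += bottleneck(channels, filters, projection=True)  # first block: cov_block
    channels = filters[2]
    total += (n_blocks - 1) * bottleneck(channels, filters, projection=False)  # identity blocks

print(total)                       # 23587712
print(total + 2048 * 1000 + 1000)  # 25636712 with a 1000-class Dense head
```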

浙公网安备 33010602011771号