TensorFlow 2.0: Classic Network Architectures LeNet-5, AlexNet, and VGGNet-16

I. LeNet-5

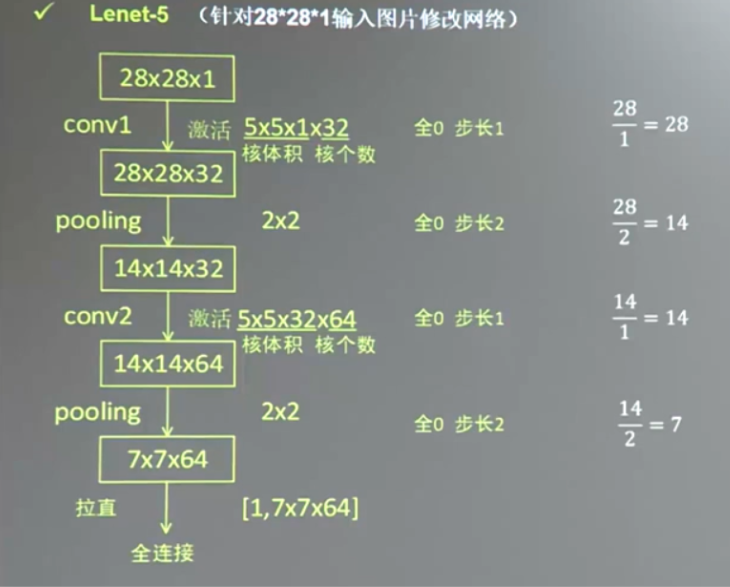

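LeNet-5 has a simple structure, yet it already contains the basic building blocks of a convolutional neural network: convolution, pooling, and fully connected layers. It uses 5*5 convolutions and average pooling, which makes it a good first exercise. Its structure is shown in the figure above.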
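To see where the numbers printed by `model.summary()` come from, the pure-Python sketch below traces the feature-map sizes and parameter counts of the 28*28 MNIST variant implemented in the code. The helper names `conv_out` and `lenet5_shapes_and_params` are hypothetical, introduced only for illustration.

```python
def conv_out(size, kernel, stride=1, padding=0):
    # Output side length of a convolution or pooling window: (W - K + 2P) // S + 1
    return (size - kernel + 2 * padding) // stride + 1

def lenet5_shapes_and_params(side=28, in_ch=1):
    """Trace the LeNet-5 variant below: valid 5*5 convs, 2*2 stride-2 average pooling."""
    params = {}
    s = conv_out(side, 5)                  # conv1: 6 kernels of 5*5
    params["conv1"] = 5 * 5 * in_ch * 6 + 6
    s = conv_out(s, 2, stride=2)           # average pooling 2*2, stride 2 (no parameters)
    s = conv_out(s, 5)                     # conv2: 16 kernels of 5*5 over 6 channels
    params["conv2"] = 5 * 5 * 6 * 16 + 16
    s = conv_out(s, 2, stride=2)           # average pooling 2*2, stride 2
    flat = s * s * 16                      # length of the flattened vector
    params["dense120"] = flat * 120 + 120
    params["dense84"] = 120 * 84 + 84
    params["output10"] = 84 * 10 + 10
    return s, flat, params

s, flat, params = lenet5_shapes_and_params()
print(s, flat, sum(params.values()))  # 4 256 44426
```

With the 32*32 input of the original LeNet-5, `lenet5_shapes_and_params(side=32)` gives a 5*5*16 = 400-element flattened vector instead of 256.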

Code:

```python
import tensorflow as tf

# Optional: restrict which GPUs are visible (the indices depend on your machine)
# gpus = tf.config.list_physical_devices('GPU')
# tf.config.experimental.set_visible_devices(devices=gpus[2:4], device_type='GPU')

# Load the dataset: 28*28 single-channel MNIST images, 10 classes
(train_images, train_labels), (test_images, test_labels) = tf.keras.datasets.mnist.load_data()

# Add a channel dimension, (N, 28, 28) -> (N, 28, 28, 1), and scale pixels to [0, 1] to speed up training.
# Only the images are normalized; the labels must stay integer class indices 0-9.
train_images = train_images.reshape(-1, 28, 28, 1).astype('float32') / 255.0
test_images = test_images.reshape(-1, 28, 28, 1).astype('float32') / 255.0
print(train_images.shape)  # (60000, 28, 28, 1)

# Build the model
net_input = tf.keras.Input(shape=(28, 28, 1))
l1_con = tf.keras.layers.Conv2D(6, (5, 5), padding='valid', activation='sigmoid')(net_input)  # 6 5*5 kernels, sigmoid
l1_pool = tf.keras.layers.AveragePooling2D((2, 2), (2, 2))(l1_con)  # 2*2 average pooling, stride 2
l2_con = tf.keras.layers.Conv2D(16, (5, 5), padding='valid', activation='sigmoid')(l1_pool)  # 16 5*5 kernels, sigmoid
l2_pool = tf.keras.layers.AveragePooling2D((2, 2), (2, 2))(l2_con)  # 2*2 average pooling, stride 2
flat = tf.keras.layers.Flatten()(l2_pool)
l3_dense = tf.keras.layers.Dense(120, activation='sigmoid')(flat)  # fully connected layer
l4_dense = tf.keras.layers.Dense(84, activation='sigmoid')(l3_dense)  # fully connected layer
net_output = tf.keras.layers.Dense(10, activation='softmax')(l4_dense)  # output layer

# Create the model and inspect its structure
model = tf.keras.Model(inputs=net_input, outputs=net_output)
model.summary()

# Compile: integer labels, so use sparse categorical cross-entropy
model.compile(optimizer=tf.keras.optimizers.SGD(learning_rate=0.01),
              loss="sparse_categorical_crossentropy",
              metrics=['acc'])

# Train
history = model.fit(train_images, train_labels, batch_size=50, epochs=5,
                    validation_split=0.1, verbose=1)

# Evaluate on the (equally normalized) test set
model.evaluate(test_images, test_labels)
```
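The LeNet-5 code keeps the labels as integer class indices (0-9) and uses `sparse_categorical_crossentropy`, while the AlexNet and VGG code below one-hot encode the labels and use `CategoricalCrossentropy`. Both losses compute the same value, as this NumPy sketch illustrates (the helper names `sparse_ce` and `categorical_ce` are hypothetical). It also shows why labels must not be divided by 255: the sparse form indexes the probability array with the label, so scaled labels are no longer valid class indices.

```python
import numpy as np

def sparse_ce(probs, labels):
    # labels are integer class indices; pick the predicted probability of the true class
    return -np.mean(np.log(probs[np.arange(len(labels)), labels]))

def categorical_ce(probs, one_hot):
    # one_hot has shape (batch, num_classes); only the true-class term is non-zero
    return -np.mean(np.sum(one_hot * np.log(probs), axis=1))

rng = np.random.default_rng(0)
logits = rng.normal(size=(4, 10))
probs = np.exp(logits) / np.exp(logits).sum(axis=1, keepdims=True)  # softmax
labels = np.array([3, 0, 7, 9])
one_hot = np.eye(10)[labels]  # what tf.one_hot(labels, depth=10) would produce
print(np.isclose(sparse_ce(probs, labels), categorical_ce(probs, one_hot)))  # True
```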

II. AlexNet

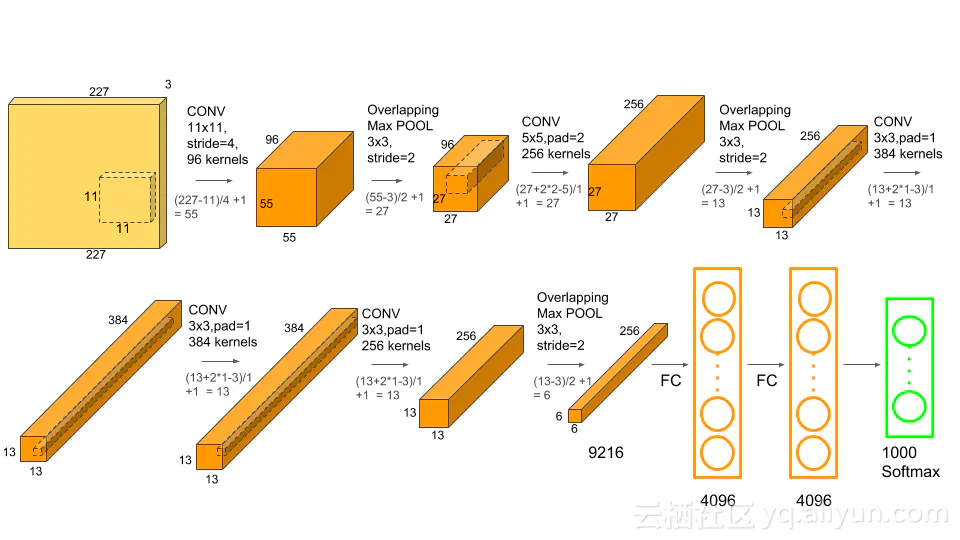

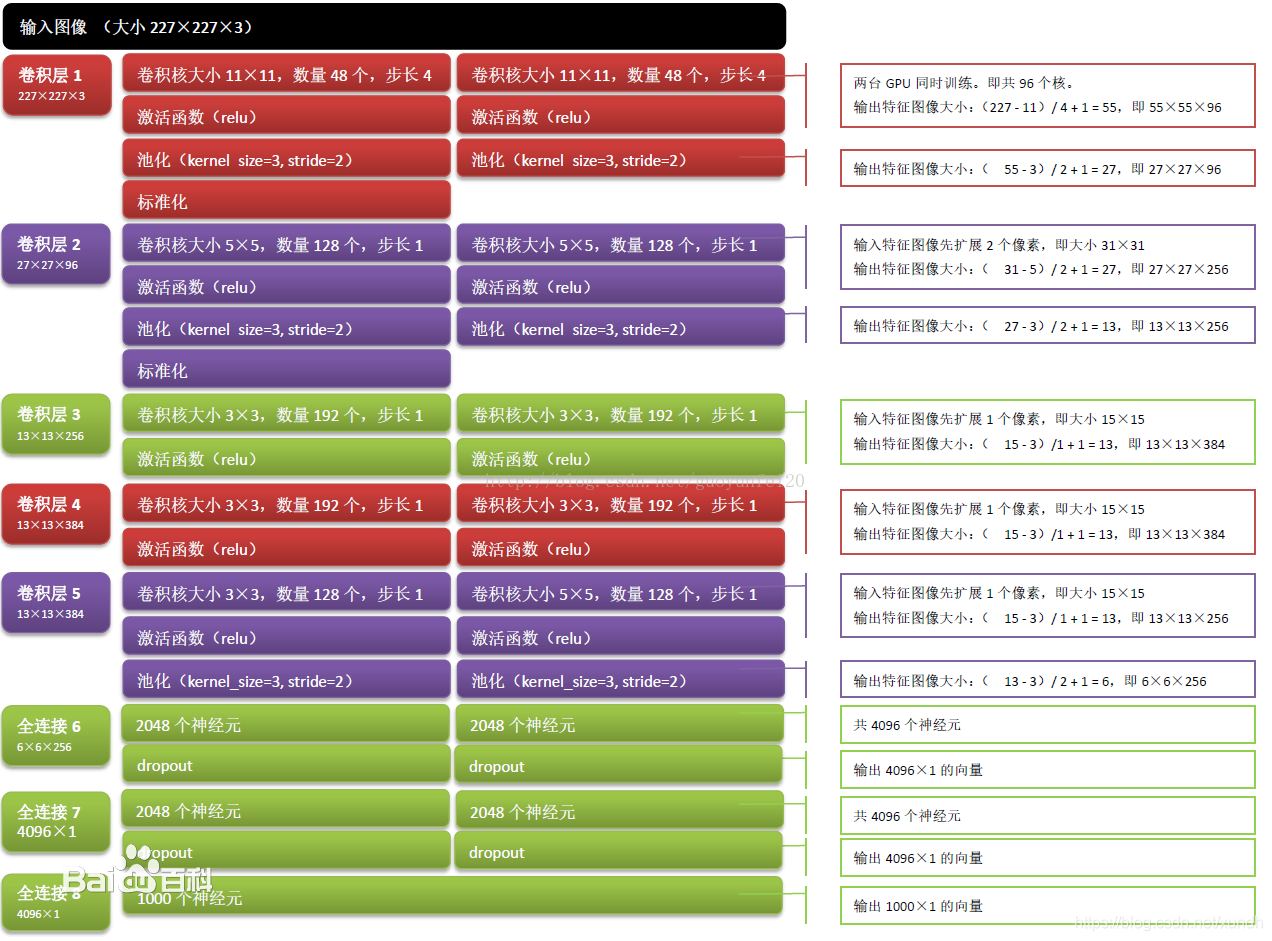

Compared with LeNet-5, AlexNet has several notable features:

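- AlexNet is much deeper and wider than LeNet-5: it has five convolutional layers and three fully connected layers, with large kernels in the first layers (11*11 with stride 4, then 5*5) and 3*3 kernels in the last three convolutional layers. (Replacing one 5*5 convolution with two stacked 3*3 convolutions, which keeps the receptive field while reducing the number of parameters, is the idea behind VGGNet, described below.)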

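- AlexNet uses the ReLU activation function instead of sigmoid, which trains faster and suffers less from vanishing gradients.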

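- AlexNet uses Local Response Normalization (LRN) layers, which are rarely used today because they bring little benefit; the code below uses BatchNormalization in their place.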

- AlexNet uses Dropout in the fully connected layers to reduce overfitting.

- AlexNet applies data augmentation to the training set, such as random crops and horizontal flips.
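The implementation below does not include data augmentation. As a sketch of the idea, this NumPy function applies a random crop and a random horizontal flip to one image, the two geometric augmentations described in the AlexNet paper. The 4-pixel zero padding for 32*32 CIFAR-10 images is an assumption (a common CIFAR recipe), not AlexNet's original scheme of cropping 224*224 patches from 256*256 images, and `augment` is a hypothetical helper.

```python
import numpy as np

def augment(image, rng, pad=4):
    """Random crop (after zero padding) plus random horizontal flip of one HWC image."""
    h, w, _ = image.shape
    # Zero-pad height and width so a same-size crop can shift by up to `pad` pixels
    padded = np.pad(image, ((pad, pad), (pad, pad), (0, 0)), mode="constant")
    top = rng.integers(0, 2 * pad + 1)
    left = rng.integers(0, 2 * pad + 1)
    crop = padded[top:top + h, left:left + w, :]
    if rng.random() < 0.5:
        crop = crop[:, ::-1, :]  # horizontal flip: reverse the width axis
    return crop

rng = np.random.default_rng(42)
img = rng.random((32, 32, 3)).astype(np.float32)
out = augment(img, rng)
print(out.shape)  # (32, 32, 3)
```

Because a new crop and flip are drawn every time an image is visited, each epoch sees slightly different versions of the training set, which acts as a regularizer in the same spirit as Dropout.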

The structure is shown in the figures above.

Implementation:

```python
import tensorflow as tf
from tensorflow import keras
import matplotlib.pyplot as plt
import numpy as np

# Load CIFAR-10: 32*32 RGB images, 10 classes
(x_train, y_train), (x_test, y_test) = tf.keras.datasets.cifar10.load_data()

# Zero-mean, unit-variance normalization using training-set statistics
def normalize(X_train, X_test):
    X_train = X_train.astype('float32') / 255.
    X_test = X_test.astype('float32') / 255.
    mean = np.mean(X_train, axis=(0, 1, 2, 3))  # mean
    std = np.std(X_train, axis=(0, 1, 2, 3))  # standard deviation
    print('mean:', mean, 'std:', std)
    X_train = (X_train - mean) / (std + 1e-7)
    X_test = (X_test - mean) / (std + 1e-7)
    return X_train, X_test

# Per-batch preprocessing
def preprocess(x, y):
    x = tf.image.resize(x, (227, 227))  # upscale the 32*32 images to 227*227
    x = tf.cast(x, tf.float32)
    y = tf.cast(y, tf.int32)
    y = tf.squeeze(y, axis=1)  # labels of shape (batch, 1) -> (batch,)
    y = tf.one_hot(y, depth=10)
    return x, y

x_train, x_test = normalize(x_train, x_test)

train_db = tf.data.Dataset.from_tensor_slices((x_train, y_train))
train_db = train_db.shuffle(50000).batch(128).map(preprocess)  # 128 training samples per batch
test_db = tf.data.Dataset.from_tensor_slices((x_test, y_test))
test_db = test_db.shuffle(10000).batch(128).map(preprocess)  # 128 test samples per batch

# Convolution layer with explicit, customizable padding (an alternative to padding='same')
class ConvWithPadding(tf.keras.layers.Layer):
    def __init__(self, filters, kernel_size, strides, padding):
        super().__init__()
        self.filters = filters  # number of kernels
        self.kernel_size = kernel_size  # kernel side length
        self.strides = strides
        self.padding = padding  # e.g. [[0, 0], [2, 2], [2, 2], [0, 0]]

    def build(self, input_shape):
        # Trainable kernel of shape [height, width, in_channels, out_channels]
        self.w = self.add_weight(
            shape=[self.kernel_size, self.kernel_size, input_shape[-1], self.filters],
            initializer='glorot_uniform', trainable=True)

    def call(self, inputs):
        return tf.nn.conv2d(inputs, filters=self.w, strides=self.strides, padding=self.padding)

batch = 32
alex_net = keras.Sequential([
    # Conv layer 1
    keras.layers.Conv2D(96, 11, 4),  # 227*227@3 input, 96 kernels of 11*11@3, stride 4, no padding -> 55*55@96
    keras.layers.ReLU(),  # ReLU activation
    keras.layers.MaxPooling2D((3, 3), 2),  # overlapping max pooling 3*3, stride 2 -> 27*27@96
    keras.layers.BatchNormalization(),  # used here in place of AlexNet's LRN
    # Conv layer 2
    # ConvWithPadding(filters=256, kernel_size=5, strides=1, padding=[[0, 0], [2, 2], [2, 2], [0, 0]]),
    keras.layers.Conv2D(256, 5, 1, padding='same'),  # 27*27@96 input, 256 kernels of 5*5@96, stride 1, padding 2 -> 27*27@256
    keras.layers.ReLU(),
    keras.layers.MaxPooling2D((3, 3), 2),  # overlapping max pooling 3*3, stride 2 -> 13*13@256
    keras.layers.BatchNormalization(),
    # Conv layer 3
    keras.layers.Conv2D(384, 3, 1, padding='same'),  # 13*13@256 input, 384 kernels of 3*3@256, padding 1 -> 13*13@384
    keras.layers.ReLU(),
    # Conv layer 4
    keras.layers.Conv2D(384, 3, 1, padding='same'),  # 13*13@384 input, 384 kernels of 3*3@384, padding 1 -> 13*13@384
    keras.layers.ReLU(),
    # Conv layer 5
    keras.layers.Conv2D(256, 3, 1, padding='same'),  # 13*13@384 input, 256 kernels of 3*3@384, padding 1 -> 13*13@256
    keras.layers.ReLU(),
    keras.layers.MaxPooling2D((3, 3), 2),  # overlapping max pooling 3*3, stride 2 -> 6*6@256
    # Fully connected layer 1
    keras.layers.Flatten(),  # flatten 6*6@256 into 9216 values
    keras.layers.Dense(4096),  # 9216*4096 fully connected
    keras.layers.ReLU(),
    keras.layers.Dropout(0.25),  # drop 25% of the units (the AlexNet paper used 0.5)
    # Fully connected layer 2
    keras.layers.Dense(4096),  # 4096*4096 fully connected
    keras.layers.ReLU(),
    keras.layers.Dropout(0.25),
    # Fully connected layer 3
    keras.layers.Dense(10, activation='softmax')  # 4096*10 fully connected, softmax over 10 classes
])
alex_net.build(input_shape=[batch, 227, 227, 3])
alex_net.summary()

# Compile: the last layer already applies softmax, so from_logits must be False (the default)
alex_net.compile(optimizer=keras.optimizers.Adam(0.00001),
                 loss=keras.losses.CategoricalCrossentropy(from_logits=False),
                 metrics=['accuracy'])

# Train
history = alex_net.fit(train_db, epochs=10)

# Loss curve
plt.plot(history.history['loss'])
plt.title('model loss')
plt.ylabel('loss')
plt.xlabel('epoch')

# Test
alex_net.evaluate(test_db)
plt.show()
```

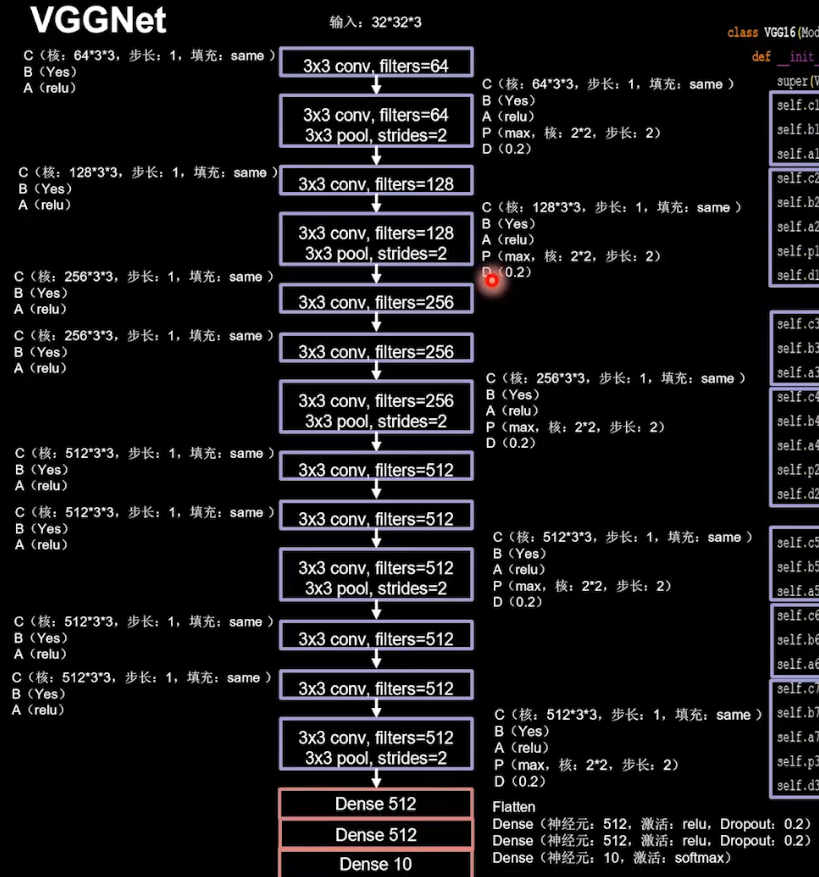
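The feature-map sizes in the AlexNet comments follow the output-size formula floor((W - K + 2P) / S) + 1. The pure-Python sketch below (hypothetical names `conv_out`, `ALEXNET_LAYERS`, `alexnet_sizes`) re-derives them; `padding='same'` with stride 1 is modeled as padding (K - 1) / 2. It also shows why the code resizes to 227*227: with a 224*224 input and no padding, the first layer would give 54*54, not 55*55.

```python
def conv_out(size, kernel, stride=1, padding=0):
    # Output side length: floor((W - K + 2P) / S) + 1
    return (size - kernel + 2 * padding) // stride + 1

# (name, kernel, stride, padding) for each spatial layer of the AlexNet code above
ALEXNET_LAYERS = [
    ("conv1", 11, 4, 0), ("pool1", 3, 2, 0),
    ("conv2", 5, 1, 2), ("pool2", 3, 2, 0),
    ("conv3", 3, 1, 1), ("conv4", 3, 1, 1), ("conv5", 3, 1, 1),
    ("pool5", 3, 2, 0),
]

def alexnet_sizes(side=227):
    sizes = []
    for name, k, s, p in ALEXNET_LAYERS:
        side = conv_out(side, k, s, p)
        sizes.append((name, side))
    return sizes

for name, side in alexnet_sizes():
    print(name, side)  # conv1 55, pool1 27, conv2 27, pool2 13, conv3..conv5 13, pool5 6
print(alexnet_sizes(224)[0])  # ('conv1', 54)
```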

III. VGGNet-16

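VGGNet builds convolutional networks 16 to 19 layers deep by repeatedly stacking small 3*3 convolution kernels and 2*2 max-pooling layers. It replaces one 5*5 convolution with two stacked 3*3 convolutions, and one 7*7 convolution with three stacked 3*3 convolutions: the receptive field stays the same, but the number of parameters drops considerably. The model also has limitations: it is still not very deep, since performance saturated at 19 layers; it did not explore how the width of the convolutional layers affects performance; and it has a very large number of parameters, over 130 million (about 138 million for VGG-16). The VGGNet-16 structure, adapted to 32*32 CIFAR-10 images, is implemented below.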
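The saving from stacking 3*3 kernels can be checked with a short calculation. Assuming every layer has C input and C output channels and ignoring biases, two 3*3 layers have 2·9·C² = 18C² weights versus 25C² for one 5*5 layer, and three 3*3 layers have 27C² versus 49C² for one 7*7 layer, while n stacked stride-1 3*3 layers see a (2n + 1)*(2n + 1) region. In the pure-Python sketch below the helper names are hypothetical and C = 256 is an arbitrary example value.

```python
def stacked_receptive_field(n_layers, kernel=3):
    # Each extra stride-1 kernel*kernel layer widens the receptive field by (kernel - 1)
    return 1 + n_layers * (kernel - 1)

def conv_weights(kernel, channels, n_layers=1):
    # Weights of n_layers stride-1 convolutions with `channels` inputs and outputs (biases ignored)
    return n_layers * kernel * kernel * channels * channels

C = 256  # example channel count (an assumption; any C gives the same ratios)
print(stacked_receptive_field(2), conv_weights(3, C, n_layers=2), conv_weights(5, C))
# 5 1179648 1638400
print(stacked_receptive_field(3), conv_weights(3, C, n_layers=3), conv_weights(7, C))
# 7 1769472 3211264
```

The stacks use about 28% and 45% fewer weights respectively, and each extra layer also adds another ReLU nonlinearity.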

Implementation:

```python
import tensorflow as tf
import numpy as np

# Optional: restrict which GPUs are visible (the indices depend on your machine)
# gpus = tf.config.list_physical_devices('GPU')
# tf.config.experimental.set_visible_devices(devices=gpus[2:8], device_type='GPU')

# Load CIFAR-10: 32*32 RGB images, 10 classes
(train_images, train_labels), (test_images, test_labels) = tf.keras.datasets.cifar10.load_data()

# Zero-mean, unit-variance normalization using training-set statistics
def normalize(X_train, X_test):
    X_train = X_train.astype('float32') / 255.
    X_test = X_test.astype('float32') / 255.
    mean = np.mean(X_train, axis=(0, 1, 2, 3))  # mean
    std = np.std(X_train, axis=(0, 1, 2, 3))  # standard deviation
    X_train = (X_train - mean) / (std + 1e-7)
    X_test = (X_test - mean) / (std + 1e-7)
    return X_train, X_test

# Per-batch preprocessing
def preprocess(x, y):
    x = tf.cast(x, tf.float32)
    y = tf.cast(y, tf.int32)
    y = tf.squeeze(y, axis=1)  # labels of shape (batch, 1) -> (batch,)
    y = tf.one_hot(y, depth=10)
    return x, y

train_images, test_images = normalize(train_images, test_images)

train_db = tf.data.Dataset.from_tensor_slices((train_images, train_labels))
train_db = train_db.shuffle(50000).batch(100).map(preprocess)  # 100 training samples per batch
test_db = tf.data.Dataset.from_tensor_slices((test_images, test_labels))
test_db = test_db.shuffle(10000).batch(100).map(preprocess)  # 100 test samples per batch

# One weight layer: 3*3 convolution + BatchNormalization + ReLU
def conv_bn_relu(x, filters):
    x = tf.keras.layers.Conv2D(filters, (3, 3), padding='same')(x)
    x = tf.keras.layers.BatchNormalization()(x)
    return tf.keras.layers.Activation('relu')(x)

# 2*2 max pooling with stride 2, followed by 20% dropout
def pool_dropout(x):
    x = tf.keras.layers.MaxPooling2D((2, 2), (2, 2))(x)
    return tf.keras.layers.Dropout(0.2)(x)

# Build the model
VGGNet_input = tf.keras.Input((32, 32, 3))
x = conv_bn_relu(VGGNet_input, 64)  # layer 1
x = conv_bn_relu(x, 64)  # layer 2
x = pool_dropout(x)  # 32*32 -> 16*16
x = conv_bn_relu(x, 128)  # layer 3
x = conv_bn_relu(x, 128)  # layer 4
x = pool_dropout(x)  # -> 8*8
x = conv_bn_relu(x, 256)  # layer 5
x = conv_bn_relu(x, 256)  # layer 6
x = conv_bn_relu(x, 256)  # layer 7
x = pool_dropout(x)  # -> 4*4
x = conv_bn_relu(x, 512)  # layer 8
x = conv_bn_relu(x, 512)  # layer 9
x = conv_bn_relu(x, 512)  # layer 10
x = pool_dropout(x)  # -> 2*2
x = conv_bn_relu(x, 512)  # layer 11
x = conv_bn_relu(x, 512)  # layer 12
x = conv_bn_relu(x, 512)  # layer 13
x = pool_dropout(x)  # -> 1*1
x = tf.keras.layers.Flatten()(x)
x = tf.keras.layers.Dense(512, 'relu')(x)  # layer 14
x = tf.keras.layers.Dropout(0.2)(x)
x = tf.keras.layers.Dense(512, 'relu')(x)  # layer 15
x = tf.keras.layers.Dropout(0.2)(x)
VGGNet_output = tf.keras.layers.Dense(10, 'softmax')(x)  # layer 16

VGGNet = tf.keras.Model(inputs=VGGNet_input, outputs=VGGNet_output)
VGGNet.summary()

# Compile: the output layer already applies softmax, so from_logits must be False (the default)
VGGNet.compile(
    optimizer=tf.keras.optimizers.Adam(0.00001),
    loss=tf.keras.losses.CategoricalCrossentropy(from_logits=False),
    metrics=['accuracy']
)
VGGNet.fit(train_db, epochs=10)  # fit() trains for a single epoch by default
VGGNet.evaluate(test_db)
```
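The VGG-16 parameter count quoted at the start of this section can be reproduced from the layer configuration. The pure-Python sketch below (hypothetical names `CFG`, `vgg16_params`) counts the ImageNet model (224*224 input, fully connected layers of 4096, 4096, and 1000 units) and the CIFAR-10 variant above, where five 2*2 poolings shrink 32*32 to 1*1 and the dense layers have only 512 units. BatchNormalization is counted as 4 values per channel (gamma, beta, moving mean, moving variance), which Keras includes in its total.

```python
# 3*3 conv output channels, with "M" marking a 2*2 stride-2 max pooling
CFG = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
       512, 512, 512, "M", 512, 512, 512, "M"]

def vgg16_params(side, fc_sizes, in_ch=3, batch_norm=False):
    total, ch = 0, in_ch
    for v in CFG:
        if v == "M":
            side //= 2  # pooling halves height and width, no parameters
            continue
        total += 3 * 3 * ch * v + v  # conv weights + biases
        if batch_norm:
            total += 4 * v  # gamma, beta, moving mean, moving variance
        ch = v
    prev = side * side * ch  # length of the flattened vector
    for units in fc_sizes:
        total += prev * units + units  # Dense weights + biases
        prev = units
    return total

print(vgg16_params(224, [4096, 4096, 1000]))              # 138357544
print(vgg16_params(32, [512, 512, 10], batch_norm=True))  # 15262026
```

Under these assumptions the second number should match the total reported by `VGGNet.summary()`. Most of the gap comes from the first fully connected layer of the ImageNet model, which alone has 25088*4096 + 4096 = 102,764,544 parameters.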