1. Configure ZooKeeper

3.1 Extract the archive and move it to the target directory

[root@bogon src]# tar xf zookeeper-3.4.10.tar.gz

[root@bogon src]# mv zookeeper-3.4.10 /usr/local/zookeeper

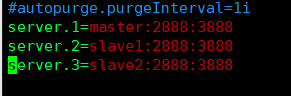

3.2 Create the zoo.cfg file

[root@master ~]# vim /usr/local/zookeeper/conf/zoo.cfg

Add the following:

tickTime=2000

dataDir=/opt/zookeeper

clientPort=2181

initLimit=5

syncLimit=2

server.1=master:2888:3888

server.2=slave1:2888:3888

server.3=slave2:2888:3888

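The three server.N entries define a three-node ZooKeeper ensemble. Electing a leader requires votes from a majority of the nodes, so an odd node count is recommended: a fourth node would add no fault tolerance over three.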
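
The majority rule can be checked with a little arithmetic. The sketch below is illustrative only; the function names `quorum_size` and `tolerated_failures` are made up for this example and are not part of ZooKeeper.

```shell
#!/usr/bin/env bash
# Majority quorum for an ensemble of n voting servers: floor(n/2) + 1.
quorum_size() {
    local n=$1
    echo $(( n / 2 + 1 ))
}

# Number of servers that can fail while a majority is still reachable.
tolerated_failures() {
    local n=$1
    echo $(( n - (n / 2 + 1) ))
}

# Compare ensemble sizes 3, 4 and 5.
for n in 3 4 5; do
    echo "nodes=$n quorum=$(quorum_size "$n") tolerated_failures=$(tolerated_failures "$n")"
done
```

Three and four nodes both tolerate a single failure, which is why the even size buys nothing.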

Create the dataDir directory specified in zoo.cfg on each ZooKeeper node:

[root@master ~]# mkdir /opt/zookeeper

[root@slave1 ~]# mkdir /opt/zookeeper

[root@slave2 ~]# mkdir /opt/zookeeper

3.2 Configure myid

[root@master ~]# echo 1 >/opt/zookeeper/myid

[root@slave1 ~]# echo 2 >/opt/zookeeper/myid

[root@slave2 ~]# echo 3 >/opt/zookeeper/myid

This sets the ID of each ZooKeeper node. The number in myid must match the N of that host's server.N entry in zoo.cfg.
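
To avoid typing the wrong ID on a node, the value can be derived from zoo.cfg itself. This is a minimal sketch, assuming the `server.N=host:port:port` layout shown above with no spaces around `=`; `myid_for_host` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Print the N of the server.N entry whose host part equals the given hostname.
# Usage: myid_for_host <path to zoo.cfg> <hostname>
myid_for_host() {
    local cfg=$1 host=$2
    awk -F= -v h="$host" '
        $1 ~ /^server\.[0-9]+$/ {
            split($2, addr, ":")          # addr[1] is the host part
            if (addr[1] == h) {
                sub(/^server\./, "", $1)  # keep only the numeric ID
                print $1
            }
        }' "$cfg"
}
```

On a node whose hostname matches its zoo.cfg entry, something like `myid_for_host /usr/local/zookeeper/conf/zoo.cfg "$(hostname)" > /opt/zookeeper/myid` would write the ID. The function prints nothing for an unknown host, so check the result before relying on it.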

3.3 Copy the ZooKeeper files

[root@master ~]# scp -rp /usr/local/zookeeper/ slave1:/usr/local/

[root@master ~]# scp -rp /usr/local/zookeeper slave2:/usr/local/

This copies the ZooKeeper installation to the other two ZooKeeper nodes.
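
With more than a couple of target hosts, a loop keeps the copy commands consistent. The sketch below only prints the commands (a dry run), so they can be reviewed first; `print_copy_commands` is a hypothetical helper, and the host names are the ones used in this guide.

```shell
#!/usr/bin/env bash
# Print one scp command per target host instead of running it (dry run).
# Usage: print_copy_commands <source dir> <destination parent dir> <host>...
print_copy_commands() {
    local src=$1 dest=$2
    shift 2
    local host
    for host in "$@"; do
        echo "scp -rp $src $host:$dest"
    done
}

print_copy_commands /usr/local/zookeeper /usr/local/ slave1 slave2
```

Piping the output to `sh` would run the copies once the list looks right.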

3.4 Configure environment variables on each ZooKeeper node

[root@master ~]# vim /etc/profile

Append the following:

#zookeeper

export PATH=$PATH:/usr/local/zookeeper/bin

This adds ZooKeeper's bin directory to PATH.

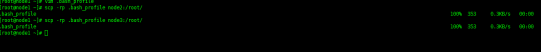

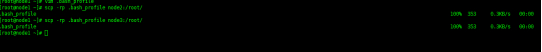
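
If the profile is edited by script rather than in vim, it helps to make the change idempotent so that repeated runs do not add duplicate lines. This is a minimal sketch; `append_line_once` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Append a line to a file only if that exact line is not already present.
# Usage: append_line_once <file> <line>
append_line_once() {
    local file=$1 line=$2
    grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}
```

For example, `append_line_once /etc/profile 'export PATH=$PATH:/usr/local/zookeeper/bin'` can be run any number of times and leaves a single copy of the line. The single quotes keep `$PATH` unexpanded until the profile is sourced.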

[root@master ~]# scp -rp /etc/profile slave1:/etc/

[root@master ~]# scp -rp /etc/profile slave2:/etc/

This syncs the profile to the other ZooKeeper nodes. Then reload it on every node:

[root@master ~]# source /etc/profile

[root@slave1 ~]# source /etc/profile

[root@slave2 ~]# source /etc/profile

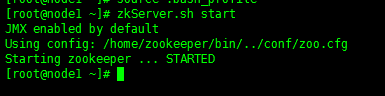

3.5 Start ZooKeeper

[root@master ~]# zkServer.sh start

[root@slave1 ~]# zkServer.sh start

[root@slave2 ~]# zkServer.sh start

Start the ZooKeeper service on all three nodes at about the same time. Each node writes its log file, zookeeper.out, to the directory from which zkServer.sh was run.

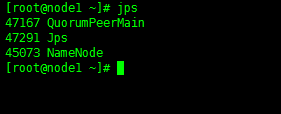

Check that the ZooKeeper process is running (jps should list QuorumPeerMain):

[root@master ~]# jps

[root@slave1 ~]# jps

[root@slave2 ~]# jps

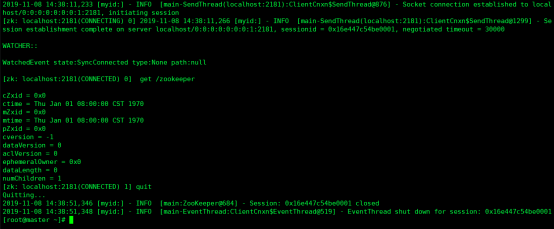
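
jps only shows that the JVM is alive. To see whether a node has actually joined the ensemble, `zkServer.sh status` reports its role on a `Mode:` line. The sketch below extracts that role; `zk_mode` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Extract the role from `zkServer.sh status` output read on stdin.
# Prints e.g. leader or follower; prints nothing if no Mode line is found.
zk_mode() {
    sed -n 's/^Mode: *//p'
}
```

Running `zkServer.sh status 2>&1 | zk_mode` on each node should show one leader and two followers once the ensemble has formed. An empty result suggests the node is not serving requests yet.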

3.6 Connect to ZooKeeper's in-memory data store

[root@master ~]# zkCli.sh

[zk: localhost:2181(CONNECTED) 2] get /zookeeper

[zk: localhost:2181(CONNECTED) 3] quit

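Explanation:

When ZooKeeper starts normally, it loads its in-memory data tree. Checking that the /zookeeper znode exists is a quick way to confirm that the installation works.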

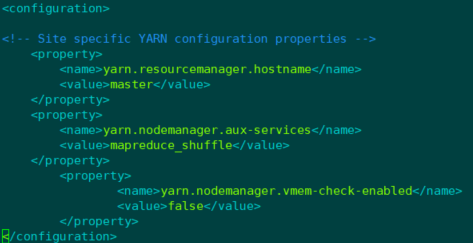

3.7 Configure Hadoop resource management (YARN)

3.7.1 Edit the yarn-site.xml file

<configuration>

<property>

<name>yarn.resourcemanager.hostname</name>

<value>master</value>

</property>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.vmem-check-enabled</name>

<value>false</value>

</property>

</configuration>

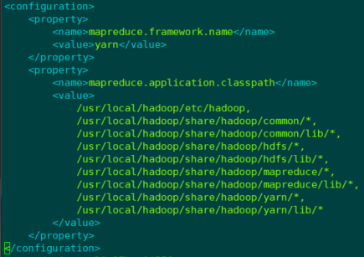
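
After editing, it can be useful to read a property back from the file to confirm what was saved. This is a rough sketch rather than a real XML parser: it assumes each `<name>` and `<value>` element sits on a single line, as in the file above, and `get_hadoop_property` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Print the value of one property from a Hadoop *-site.xml file.
# Assumes <name>...</name> and <value>...</value> each fit on one line.
# Usage: get_hadoop_property <file> <property name>
get_hadoop_property() {
    local file=$1 name=$2
    awk -v want="$name" '
        /<name>/ {
            n = $0
            sub(/.*<name>[ \t]*/, "", n); sub(/[ \t]*<\/name>.*/, "", n)
            found = (n == want)        # remember whether this is our property
        }
        found && /<value>.*<\/value>/ {
            v = $0
            sub(/.*<value>[ \t]*/, "", v); sub(/[ \t]*<\/value>.*/, "", v)
            print v
            exit
        }
    ' "$file"
}
```

For instance, `get_hadoop_property /usr/local/hadoop/etc/hadoop/yarn-site.xml yarn.resourcemanager.hostname` should print `master` for the configuration above.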

3.7.2 Create the mapred-site.xml file

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<property>

<name>mapreduce.application.classpath</name>

<value>

/usr/local/hadoop/etc/hadoop,

/usr/local/hadoop/share/hadoop/common/*,

/usr/local/hadoop/share/hadoop/common/lib/*,

/usr/local/hadoop/share/hadoop/hdfs/*,

/usr/local/hadoop/share/hadoop/hdfs/lib/*,

/usr/local/hadoop/share/hadoop/mapreduce/*,

/usr/local/hadoop/share/hadoop/mapreduce/lib/*,

/usr/local/hadoop/share/hadoop/yarn/*,

/usr/local/hadoop/share/hadoop/yarn/lib/*

</value>

</property>

</configuration>
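
A mistyped entry in mapreduce.application.classpath usually surfaces only when a job fails, so it may be worth checking that every listed directory exists. The sketch below reads the comma-separated list on stdin and prints the entries whose directory is missing; `missing_classpath_dirs` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Read a comma-separated classpath on stdin and print each entry whose
# directory does not exist. A trailing /* wildcard is stripped before the check.
missing_classpath_dirs() {
    tr ',' '\n' | while IFS= read -r entry || [ -n "$entry" ]; do
        # Trim surrounding whitespace left over from the XML layout.
        entry=$(printf '%s' "$entry" | sed 's/^[[:space:]]*//; s/[[:space:]]*$//')
        [ -z "$entry" ] && continue
        dir=${entry%/\*}
        [ -d "$dir" ] || echo "$entry"
    done
}
```

Paste the contents of the `<value>` element into the function's stdin. No output means every directory was found.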

3.7.3 Sync the configuration files

[root@master hadoop]# scp yarn-site.xml slave1:/usr/local/hadoop/etc/hadoop/

[root@master hadoop]# scp yarn-site.xml slave2:/usr/local/hadoop/etc/hadoop/

[root@master hadoop]# scp mapred-site.xml slave1:/usr/local/hadoop/etc/hadoop/

[root@master hadoop]# scp mapred-site.xml slave2:/usr/local/hadoop/etc/hadoop/

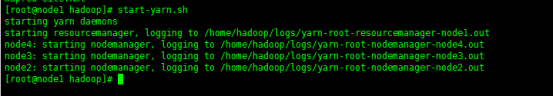

3.7.4 Start the YARN service and verify

[root@master hadoop]# start-yarn.sh

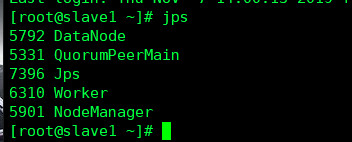

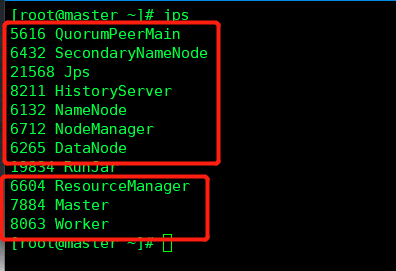
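
Besides the screenshots, the ResourceManager can be asked how many NodeManagers have registered by running `yarn node -list`. The sketch below pulls the count out of that output; it assumes the output contains a `Total Nodes:` line, and `yarn_total_nodes` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Extract the node count from `yarn node -list` output read on stdin.
# Prints nothing if no "Total Nodes:" line is present.
yarn_total_nodes() {
    sed -n 's/^Total Nodes:[[:space:]]*\([0-9][0-9]*\).*/\1/p'
}
```

Running `yarn node -list 2>/dev/null | yarn_total_nodes` on master should print the number of NodeManagers you expect to be running.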

3.7.5 Expected jps output

master node

slave nodes