hbase--迁移数据之snapshot (一)

1.目标

迁移测试环境的四张表到线上环境,名称发生变动。要求phoenix可以访问

2.实现原理

2.1 需要配置参数 hbase-site.xml

<property> <name>hbase.snapshot.enabled</name> <value>true</value> </property>

2.2 实现过程

相关命令

list_snapshot 显示备份

delete_snapshot 删除备份

clone_snapshot 克隆到一个新表,复用snapshot指定的数据文件

restore_snapshot 恢复备份,替换当前表的schema 和 数据

snapshot 制作备份文件

常规的方法如下:

先禁用旧表

disable 'temp:old_table'

再将旧表备份成快照

snapshot 'temp:old_table', 'tableSnapshot'

删除旧表数据

drop 'temp:old_table'

恢复旧表

restore_snapshot 'tableSnapshot'

那么跨集群怎么操作呢?

将快照数据从旧集群迁移到新集群

hbase org.apache.hadoop.hbase.snapshot.ExportSnapshot -snapshot 快照名 -copy-to hdfs://new-nn/hbase -copy-from hdfs://old-nn/hbase

new-nn和old-nn是两个集群上active namenode的IP,

如果hdfs的端口不是默认的,则还需加上端口

-copy-to是目录集群的hbase主目录, -copy-from是源集群的hbase主目录。

需要保证新旧集群中的所有节点能够相互连的通,这个命令的本质是一个MR任务

接下来就是将远程拷贝过来的快照文件,进行restore_snapshot ,然后创建phoenix的表进行映射,就可以在phoenix进行访问了。

但是 restore_snapshot 操作进行覆盖会造成数据的丢失,所以一般是 clone_snapshot 到一个新表。然后自行的进行数据整合。

3.实现步骤

简单展示下snapshot 指令

hbase(main):020:0> snapshot 'TEST','TEST_snapshot' #快照表TEST

hbase(main):021:0> list_snapshots #当前快照列表信息

SNAPSHOT TABLE + CREATION TIME

TEST_snapshot TEST (2021-07-30 15:13:47 +0800)

hbase(main):022:0> clone_snapshot 'TEST_snapshot','TESTAA' #克隆到新表 TESTAA (新表需要不存在,否则报错)

hbase(main):023:0> scan 'TESTAA' #查看下数据

ROW COLUMN+CELL

0 column=INFO:BROWER, timestamp=1627458022126, value=ie

0 column=INFO:DATA, timestamp=1627458022126, value=20190520164020

0 column=INFO:IP, timestamp=1627626495290, value=192.168.168.170

0 column=INFO:_0, timestamp=1627626495290, value=x

1 column=INFO:BROWER, timestamp=1627458022126, value=chorm

1 column=INFO:DATA, timestamp=1627458022126, value=20190520164020

1 column=INFO:IP, timestamp=1627458022126, value=139.203.75.112

1 column=INFO:_0, timestamp=1627458022126, value=

2 column=INFO:BROWER, timestamp=1627458022126, value=chorm

hbase(main):024:0> disable "TEST" 删除TEST表

hbase(main):025:0> drop "TEST" (如果不删除表,单纯的disable,然后restore操作,也是可以的。但后续需要enable 该表。) (同时,做了实验,实验结果:数据会覆盖)

hbase(main):029:0> restore_snapshot 'TEST_snapshot'

hbase(main):031:0> scan 'TEST'

ROW COLUMN+CELL 0 column=INFO:BROWER, timestamp=1627458022126, value=ie 0 column=INFO:DATA, timestamp=1627458022126, value=20190520164020 0 column=INFO:IP, timestamp=1627626495290, value=192.168.168.170 0 column=INFO:_0, timestamp=1627626495290, value=x 1 column=INFO:BROWER, timestamp=1627458022126, value=chorm 1 column=INFO:DATA, timestamp=1627458022126, value=20190520164020 1 column=INFO:IP, timestamp=1627458022126, value=139.203.75.112 1 column=INFO:_0, timestamp=1627458022126, value= 2 column=INFO:BROWER, timestamp=1627458022126, value=chorm

前戏结束正式开始正文

[root@master bin]# ./hbase shell

hbase(main):001:0> list

在hbase shell中执行如下:

snapshot "ECOMMERCE_ANALYSIS_SHOW:STORE_DSR_HISTORY","STORE_DSR_HISTORY_snapshot"

snapshot "ECOMMERCE_ANALYSIS_SHOW:STORE_EVALUATION_HISTORY","STORE_EVALUATION_HISTORY_snapshot"

snapshot "ECOMMERCE_ANALYSIS_SHOW:STORE_INFO_HISTORY","STORE_INFO_HISTORY_snapshot"

snapshot "ECOMMERCE_ANALYSIS_SHOW:STORE_ITEMS_HISTORY","STORE_ITEMS_HISTORY_snapshot"

snapshot "ECOMMERCE_ANALYSIS_SHOW:STORE_SKU_HISTORY","STORE_SKU_HISTORY_snapshot"

hbase(main):007:0> list_snapshots

SNAPSHOT TABLE + CREATION TIME

STORE_DSR_HISTORY_snapshot ECOMMERCE_ANALYSIS_SHOW:STORE_DSR_HISTORY (2022-01-18 11:17:26 +0800)

STORE_EVALUATION_HISTORY_snapshot ECOMMERCE_ANALYSIS_SHOW:STORE_EVALUATION_HISTORY (2022-01-18 11:19:02 +0800)

STORE_INFO_HISTORY_snapshot ECOMMERCE_ANALYSIS_SHOW:STORE_INFO_HISTORY (2022-01-18 11:19:02 +0800)

STORE_ITEMS_HISTORY_snapshot ECOMMERCE_ANALYSIS_SHOW:STORE_ITEMS_HISTORY (2022-01-18 11:19:03 +0800)

STORE_SKU_HISTORY_snapshot ECOMMERCE_ANALYSIS_SHOW:STORE_SKU_HISTORY (2022-01-18 11:19:05 +0800)

5 row(s)

Took 0.0437 seconds

跨集群拷贝快照

cd /usr/local/hbase

./hbase org.apache.hadoop.hbase.snapshot.ExportSnapshot -Dmapreduce.map.memory.mb=4096 -Dmapreduce.map.java.opts=-Xmx3686m -overwrite -snapshot STORE_DSR_HISTORY_snapshot -copy-from hdfs://192.168.88.126:9820/hbase -copy-to hdfs://192.168.188.10:9820/hbase -mappers 10 -bandwidth 30

./hbase org.apache.hadoop.hbase.snapshot.ExportSnapshot -Dmapreduce.map.memory.mb=4096 -Dmapreduce.map.java.opts=-Xmx3686m -overwrite -snapshot STORE_EVALUATION_HISTORY_snapshot -copy-from hdfs://192.168.88.126:9820/hbase -copy-to hdfs://192.168.188.10:9820/hbase -mappers 10 -bandwidth 30

./hbase org.apache.hadoop.hbase.snapshot.ExportSnapshot -Dmapreduce.map.memory.mb=4096 -Dmapreduce.map.java.opts=-Xmx3686m -overwrite -snapshot STORE_INFO_HISTORY_snapshot -copy-from hdfs://192.168.88.126:9820/hbase -copy-to hdfs://192.168.188.10:9820/hbase -mappers 10 -bandwidth 30

./hbase org.apache.hadoop.hbase.snapshot.ExportSnapshot -Dmapreduce.map.memory.mb=4096 -Dmapreduce.map.java.opts=-Xmx3686m -overwrite -snapshot STORE_ITEMS_HISTORY_snapshot -copy-from hdfs://192.168.88.126:9820/hbase -copy-to hdfs://192.168.188.10:9820/hbase -mappers 10 -bandwidth 30

./hbase org.apache.hadoop.hbase.snapshot.ExportSnapshot -Dmapreduce.map.memory.mb=4096 -Dmapreduce.map.java.opts=-Xmx3686m -overwrite -snapshot STORE_SKU_HISTORY_snapshot -copy-from hdfs://192.168.88.126:9820/hbase -copy-to hdfs://192.168.188.10:9820/hbase -mappers 10 -bandwidth 30

查看拷贝过来的快照

新的环境hbase shell下查看 或者直接hdfs去查看 。hdfs的目录:/hbase/.hbase-snapshot

hbase(main):007:0> list_snapshots

SNAPSHOT TABLE + CREATION TIME

STORE_DSR_HISTORY_snapshot ECOMMERCE_ANALYSIS_SHOW:STORE_DSR_HISTORY (2022-01-18 11:17:26 +0800)

STORE_EVALUATION_HISTORY_snapshot ECOMMERCE_ANALYSIS_SHOW:STORE_EVALUATION_HISTORY (2022-01-18 11:19:02 +0800)

STORE_INFO_HISTORY_snapshot ECOMMERCE_ANALYSIS_SHOW:STORE_INFO_HISTORY (2022-01-18 11:19:02 +0800)

STORE_ITEMS_HISTORY_snapshot ECOMMERCE_ANALYSIS_SHOW:STORE_ITEMS_HISTORY (2022-01-18 11:19:03 +0800)

STORE_SKU_HISTORY_snapshot ECOMMERCE_ANALYSIS_SHOW:STORE_SKU_HISTORY (2022-01-18 11:19:05 +0800)

5 row(s)

Took 0.0437 seconds

新的集群创建新的库,和原来不一样,这边采用clone

创建库

create_namespace "ECOMMERCE_ANALYSIS_LDH"

恢复快照到表

clone_snapshot 'STORE_DSR_HISTORY_snapshot',"ECOMMERCE_ANALYSIS_LDH:STORE_DSR_HISTORY"

clone_snapshot 'STORE_EVALUATION_HISTORY_snapshot',"ECOMMERCE_ANALYSIS_LDH:STORE_EVALUATION_HISTORY"

clone_snapshot 'STORE_INFO_HISTORY_snapshot',"ECOMMERCE_ANALYSIS_LDH:STORE_INFO_HISTORY"

clone_snapshot 'STORE_ITEMS_HISTORY_snapshot',"ECOMMERCE_ANALYSIS_LDH:STORE_ITEMS_HISTORY"

clone_snapshot 'STORE_SKU_HISTORY_snapshot',"ECOMMERCE_ANALYSIS_LDH:STORE_SKU_HISTORY"

进入phoenix,创建表,进行关联

CREATE TABLE "ECOMMERCE_ANALYSIS_LDH"."STORE_DSR_HISTORY" ("ID" BIGINT NOT NULL,"SHOP_ID" BIGINT,"DATA_JSON" VARCHAR,"START_DATE" DATE,"END_DATE" DATE,"CREATE_DATE" DATE CONSTRAINT PK PRIMARY KEY (ID))column_encoded_bytes=0;

CREATE TABLE "ECOMMERCE_ANALYSIS_LDH"."STORE_EVALUATION_HISTORY" ("ID" BIGINT NOT NULL,"SHOP_ID" BIGINT,"ITEM_ID" BIGINT,"DATA_JSON" VARCHAR,"CREATE_DATE" DATE CONSTRAINT PK PRIMARY KEY (ID))column_encoded_bytes=0;

CREATE TABLE "ECOMMERCE_ANALYSIS_LDH"."STORE_INFO_HISTORY" ("ID" BIGINT NOT NULL,"SHOP_ID" BIGINT,"DATA_JSON" VARCHAR,"CREATE_DATE" DATE CONSTRAINT PK PRIMARY KEY (ID))column_encoded_bytes=0;

CREATE TABLE "ECOMMERCE_ANALYSIS_LDH"."STORE_ITEMS_HISTORY" ("ID" BIGINT NOT NULL,"SHOP_ID" BIGINT,"DATA_JSON" VARCHAR,"CREATE_DATE" DATE,"END_DATE" DATE CONSTRAINT PK PRIMARY KEY (ID))column_encoded_bytes=0;

CREATE TABLE "ECOMMERCE_ANALYSIS_LDH"."STORE_SKU_HISTORY" ("ID" BIGINT NOT NULL,"SHOP_ID" BIGINT,"ITEM_ID" BIGINT,"DATA_JSON" VARCHAR,"CREATE_DATE" DATE,"END_DATE" DATE,"START_DATE" DATE CONSTRAINT PK PRIMARY KEY (ID))column_encoded_bytes=0;

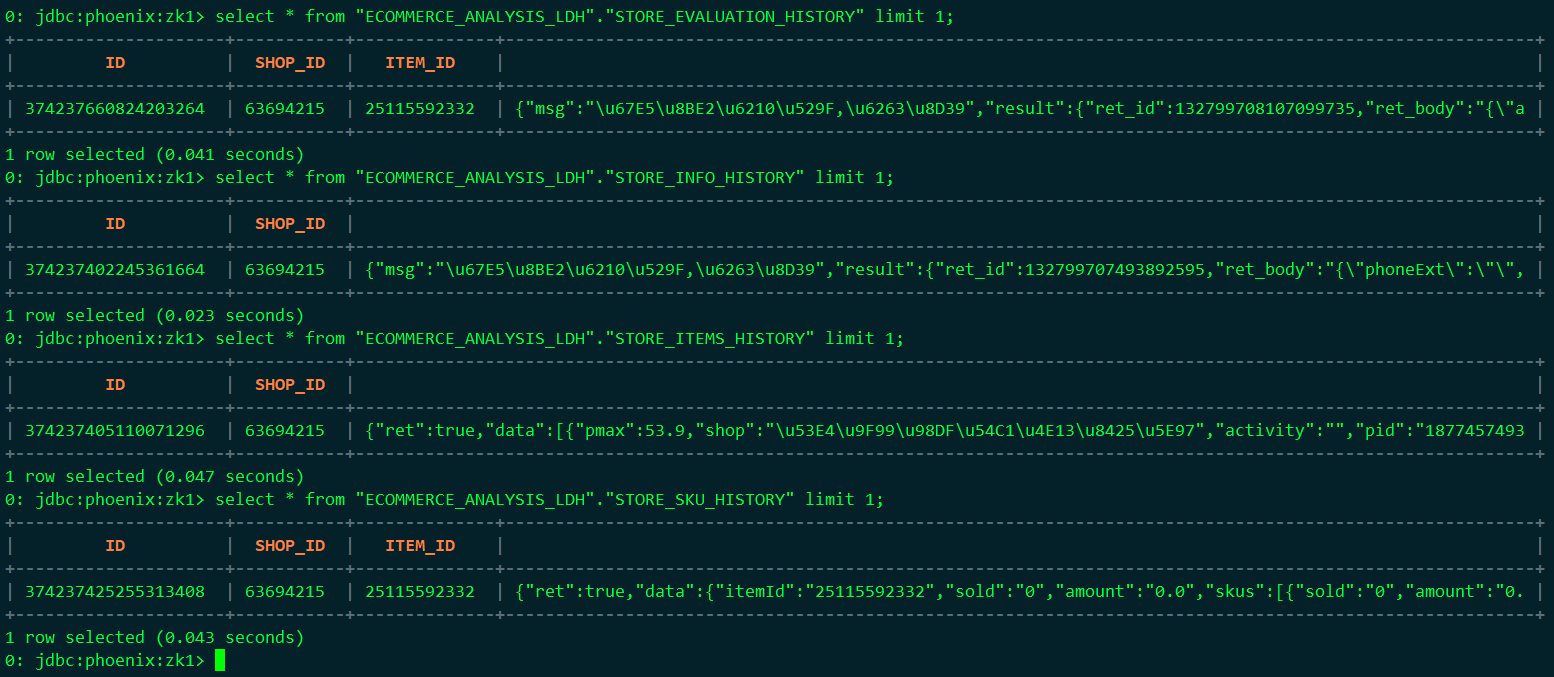

4.实现结果展示

查看数据

select * from "ECOMMERCE_ANALYSIS_LDH"."STORE_DSR_HISTORY" limit 1;

select * from "ECOMMERCE_ANALYSIS_LDH"."STORE_EVALUATION_HISTORY" limit 1;

select * from "ECOMMERCE_ANALYSIS_LDH"."STORE_INFO_HISTORY" limit 1;

select * from "ECOMMERCE_ANALYSIS_LDH"."STORE_ITEMS_HISTORY" limit 1;

select * from "ECOMMERCE_ANALYSIS_LDH"."STORE_SKU_HISTORY" limit 1;

5.问题探索

(1) 查询phoenix

select * 时会出现无法查询所有字段的情况。只是phoenix在终端界面显示问题而言,不用担心。不过可以添加字段。

0: jdbc:phoenix:zk1> select ID,SHOP_ID,ITEM_ID,CREATE_DATE,END_DATE,START_DATE from "ECOMMERCE_ANALYSIS_LDH"."STORE_SKU_HISTORY" limit 1;

+---------------------+-----------+--------------+--------------------------+--------------------------+--------------------------+

| ID | SHOP_ID | ITEM_ID | CREATE_DATE | END_DATE | START_DATE |

+---------------------+-----------+--------------+--------------------------+--------------------------+--------------------------+

| 374237425255313408 | 63694215 | 25115592332 | 2021-10-29 00:00:00.000 | 2021-10-28 00:00:00.000 | 2021-09-29 00:00:00.000 |

+---------------------+-----------+--------------+--------------------------+--------------------------+--------------------------+

1 row selected (0.044 seconds)

(2)phoenix表关联,查看不到导入的数据,但是新增数据可以看到。

4.x之后的phoenix的版本做映射的时候需要参数 column_encoded_bytes=0

1、如果只做查询,强烈建议使用 phoenix 视图方式映射,删除视图不影响 hbase 源数据,语法如下:

create view if not exists TEST(ID varchar primary key, INFO.DATA varchar, INFO.IP varchar, INFO.BROWER varchar)column_encoded_bytes=0;

2、必须要表映射,需要禁用列映射规则(会降低查询性能),如下:

create table if not exists TEST(ID varchar primary key, INFO.DATA varchar, INFO.IP varchar, INFO.BROWER varchar)column_encoded_bytes=0;

(3)那么错误的映射如何解决呢?删除Phoenix映射表的同时不删除hbase原表

(a)删除phoenix记录

DELETE from SYSTEM.CATALOG where TABLE_NAME ='TEST';

(b)更新hbase shell操作

hbase(main):003:0> describe 'TEST'

=> {coprocessor$1 =>

……

, coprocessor$5 =>

hbase(main):004:0> disable 'TEST'

执行

alter 'TEST',METHOD=>'table_att_unset',NAME=>'coprocessor$1'

alter 'TEST',METHOD=>'table_att_unset',NAME=>'coprocessor$2'

alter 'TEST',METHOD=>'table_att_unset',NAME=>'coprocessor$3'

alter 'TEST',METHOD=>'table_att_unset',NAME=>'coprocessor$4'

alter 'TEST',METHOD=>'table_att_unset',NAME=>'coprocessor$5'

hbase(main):009:0> alter 'TEST',METHOD=>'table_att_unset',NAME=>'coprocessor$5'

Updating all regions with the new schema...

All regions updated.

Done.

Took 1.4489 seconds

hbase(main):010:0> enable 'TEST'

hbase(main):011:0> scan 'TEST'

重启hbase phoenix

重新映射表--phoenix

create table if not exists TEST("ID" varchar primary key,"INFO"."DATA" varchar,"INFO"."IP" varchar,"INFO"."BROWER" varchar)column_encoded_bytes=0;

0: jdbc:phoenix:master> select * from "TEST";

+-----+-----------------+-----------------+----------+

| ID | DATA | IP | BROWER |

+-----+-----------------+-----------------+----------+

| 0 | 20190520164020 | 171.15.136.201 | ie |

| 1 | 20190520164020 | 139.203.75.112 | chorm |

| 2 | 20190520164020 | 121.77.62.91 | chorm |

| 3 | 20190520164020 | 139.213.175.14 | ie |

| 4 | 20190520164020 | 210.45.253.237 | chorm |

| 5 | 20190520164020 | 171.12.45.87 | chrome |

| 6 | 20190520164020 | 139.200.93.224 | firefox |

| 7 | 20190520164020 | 222.61.160.72 | chorm |

+-----+-----------------+-----------------+----------+

(4)数据量很大,不想影响业务

快照

导出操作不会对Region server造成额外的负担。因为它工作在HDFS层级,你仅需指定HDFS的位置即可。

也可以手动传输到指定位置。hdfs的目录:/hbase/.hbase-snapshot

或者跨集群拷贝时,限制mapreduce任务的资源

ExportSnapshot命令也可以限定mapper个数,如下:

$ bin/hbase org.apache.hadoop.hbase.snapshot.ExportSnapshot -snapshot test_table_snapshot -copy-to hdfs://follow_cluster_namenode:9820/hbase -mapers n

还可以限定拷贝的流量,如下:将拷贝的流量限定为200M。

$ bin/hbase org.apache.hadoop.hbase.snapshot.ExportSnapshot -snapshot test_table_snapshot -copy-to hdfs://follow_cluster_namenode:9820/hbase -mapers n -bandwidth 200

(5)表很大,迁移时间很长

hbase.master.hfilecleaner.ttl

修改下参数就行

迁移小表(耗时几分钟内)时没有遇到过错误,但迁移大表(耗时超过30分钟)时,一直报错“Can't find hfile”。

CleanerChore线程清理archive目录是通过配置项hbase.master.hfilecleaner.ttl控制的,默认是5分钟(单位:毫秒),大表的文件迁移远超5分钟。将hbase.master.hfilecleaner.ttl调到两小时的足够大值后,问题消失。

hbase.master.hfilecleaner.ttl

---------------------------------------采遍所有的坑,让别人无坑可踩---------------------------------------