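K8S -- Image acceleration -- Pulling images for deployment -- Removing and adding cluster nodes -- Image export/import

Screenshots are attached at the end.

Using the image downloads during an initial K8S deployment as the example, this post walks through solutions to the problems in the title. First, the servers: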

| IP | host | role |
|---|---|---|
| 192.168.101.86 | k8s1.dev.lczy.com | master |
| 192.168.100.17 | k8s2.dev.lczy.com | node |

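I had long meant to write up the image-pulling problems that come up when deploying k8s. Now that I need to redeploy a K8S environment anyway, here is a write-up to share.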

Images to download for version 1.18:

Control-plane components (API server and the rest):

k8s.gcr.io/kube-proxy:v1.18.0

k8s.gcr.io/kube-scheduler:v1.18.0

k8s.gcr.io/kube-controller-manager:v1.18.0

k8s.gcr.io/kube-apiserver:v1.18.0

k8s.gcr.io/pause:3.2

k8s.gcr.io/coredns:1.6.7

k8s.gcr.io/etcd:3.4.3-0

Network plugin (optional):

quay.io/coreos/flannel:v0.12.0-s390x

quay.io/coreos/flannel:v0.12.0-ppc64le

quay.io/coreos/flannel:v0.12.0-arm64

quay.io/coreos/flannel:v0.12.0-arm

quay.io/coreos/flannel:v0.12.0-amd64

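Pulling these images from registries outside China is throttled and very slow.

Solutions:

1. Push the images to an Alibaba Cloud Container Registry repository set to public, so anyone can pull them, e.g. this shared image I uploaded:

registry.cn-beijing.aliyuncs.com/yunweijia/etcd:3.4.3-0

2. Save the images locally to removable storage, copy them to the server when needed, and load them with docker.

3. Run a private container registry on the local network, push the images to it, and pull them over the intranet when needed.

Pros and cons:

Method 1: stored permanently on a trustworthy cloud; the first upload is slow, later downloads are moderately fast, and images must be re-tagged after pulling.

Method 2: local copies are easily lost, but loading is fast and no re-tagging is needed.

Method 3: two options, either point the image source at the private Harbor registry, or pull and re-tag manually. Faster than Alibaba Cloud, but you have to maintain the registry yourself.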

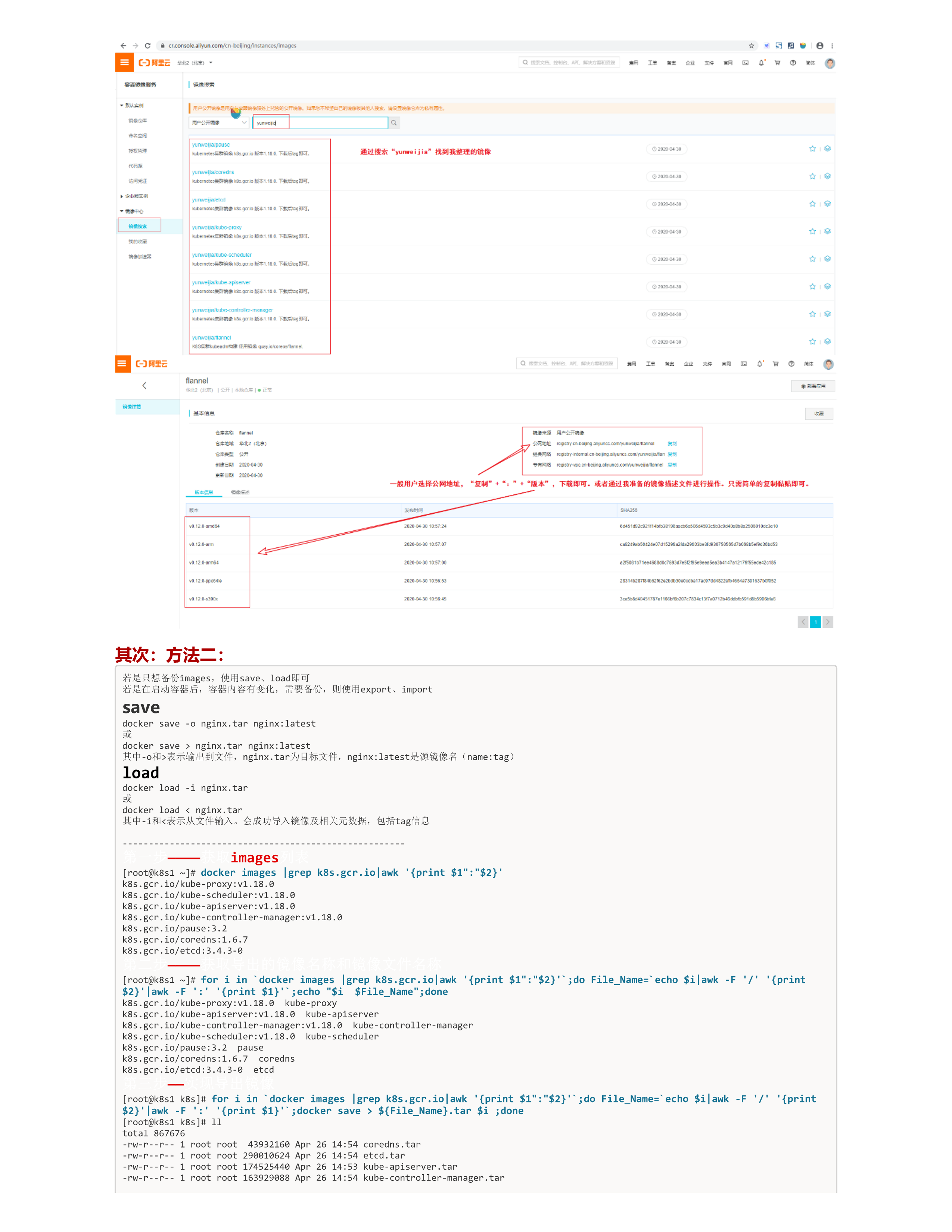

First: method 1

You can prepare and upload your own images using the registry address above. Here are the Alibaba Cloud images I have organized:

docker pull registry.cn-beijing.aliyuncs.com/yunweijia/etcd:3.4.3-0

docker pull registry.cn-beijing.aliyuncs.com/yunweijia/coredns:1.6.7

docker pull registry.cn-beijing.aliyuncs.com/yunweijia/pause:3.2

docker pull registry.cn-beijing.aliyuncs.com/yunweijia/kube-controller-manager:v1.18.0

docker pull registry.cn-beijing.aliyuncs.com/yunweijia/kube-scheduler:v1.18.0

docker pull registry.cn-beijing.aliyuncs.com/yunweijia/kube-apiserver:v1.18.0

docker pull registry.cn-beijing.aliyuncs.com/yunweijia/kube-proxy:v1.18.0

Re-tag them:

docker tag registry.cn-beijing.aliyuncs.com/yunweijia/kube-proxy:v1.18.0 k8s.gcr.io/kube-proxy:v1.18.0

docker tag registry.cn-beijing.aliyuncs.com/yunweijia/kube-apiserver:v1.18.0 k8s.gcr.io/kube-apiserver:v1.18.0

docker tag registry.cn-beijing.aliyuncs.com/yunweijia/kube-scheduler:v1.18.0 k8s.gcr.io/kube-scheduler:v1.18.0

docker tag registry.cn-beijing.aliyuncs.com/yunweijia/kube-controller-manager:v1.18.0 k8s.gcr.io/kube-controller-manager:v1.18.0

docker tag registry.cn-beijing.aliyuncs.com/yunweijia/pause:3.2 k8s.gcr.io/pause:3.2

docker tag registry.cn-beijing.aliyuncs.com/yunweijia/coredns:1.6.7 k8s.gcr.io/coredns:1.6.7

docker tag registry.cn-beijing.aliyuncs.com/yunweijia/etcd:3.4.3-0 k8s.gcr.io/etcd:3.4.3-0
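The fourteen commands above follow one pattern, so they can be generated instead of typed. A minimal sketch, assuming the yunweijia namespace still hosts these tags; `print_pull_and_tag`, `MIRROR`, `UPSTREAM` and `K8S_IMAGES` are names made up for this example. For other Kubernetes versions, `kubeadm config images list --kubernetes-version <version>` prints the required image list.

```shell
# Hypothetical helper, not from the original post: print a pull + tag command
# pair for each image so the output can be reviewed and then piped to sh.
MIRROR=registry.cn-beijing.aliyuncs.com/yunweijia   # the shared Aliyun namespace above
UPSTREAM=k8s.gcr.io                                 # the name kubeadm 1.18 looks for

print_pull_and_tag() {
  for img in "$@"; do
    echo "docker pull $MIRROR/$img"
    echo "docker tag $MIRROR/$img $UPSTREAM/$img"
  done
}

# The v1.18.0 control-plane list from above (space-separated name:tag).
K8S_IMAGES="kube-apiserver:v1.18.0 kube-controller-manager:v1.18.0 kube-scheduler:v1.18.0 kube-proxy:v1.18.0 pause:3.2 etcd:3.4.3-0 coredns:1.6.7"
```

Usage: `print_pull_and_tag $K8S_IMAGES | sh` (left unquoted on purpose so the list splits into separate arguments). Printing first and piping to sh mirrors the "print the commands, then run them" style used later in this post.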

The network plugin images work the same way:

docker pull registry.cn-beijing.aliyuncs.com/yunweijia/flannel:v0.12.0-s390x

docker pull registry.cn-beijing.aliyuncs.com/yunweijia/flannel:v0.12.0-ppc64le

docker pull registry.cn-beijing.aliyuncs.com/yunweijia/flannel:v0.12.0-arm64

docker pull registry.cn-beijing.aliyuncs.com/yunweijia/flannel:v0.12.0-arm

docker pull registry.cn-beijing.aliyuncs.com/yunweijia/flannel:v0.12.0-amd64

docker tag registry.cn-beijing.aliyuncs.com/yunweijia/flannel:v0.12.0-s390x quay.io/coreos/flannel:v0.12.0-s390x

docker tag registry.cn-beijing.aliyuncs.com/yunweijia/flannel:v0.12.0-ppc64le quay.io/coreos/flannel:v0.12.0-ppc64le

docker tag registry.cn-beijing.aliyuncs.com/yunweijia/flannel:v0.12.0-arm64 quay.io/coreos/flannel:v0.12.0-arm64

docker tag registry.cn-beijing.aliyuncs.com/yunweijia/flannel:v0.12.0-arm quay.io/coreos/flannel:v0.12.0-arm

docker tag registry.cn-beijing.aliyuncs.com/yunweijia/flannel:v0.12.0-amd64 quay.io/coreos/flannel:v0.12.0-amd64
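Each node only needs the flannel image for its own CPU architecture: the v0.12.0 manifest runs one DaemonSet per architecture (note the kube-flannel-ds-amd64 pod names at the end of this post). A sketch that picks the suffix from `uname -m`; `flannel_arch` and `print_flannel_cmds` are hypothetical helpers, and the machine-name mapping is an assumption covering the five published variants.

```shell
# Map `uname -m` output to flannel's image suffix (assumed mapping).
flannel_arch() {
  case "$1" in
    x86_64)        echo amd64 ;;
    aarch64)       echo arm64 ;;
    armv7l|armv6l) echo arm ;;
    ppc64le)       echo ppc64le ;;
    s390x)         echo s390x ;;
    *) echo "unsupported architecture: $1" >&2; return 1 ;;
  esac
}

# Print pull + tag commands for this host's flannel image only.
# An explicit machine name can be passed for testing; default is this host.
print_flannel_cmds() {
  arch=$(flannel_arch "${1:-$(uname -m)}") || return 1
  src="registry.cn-beijing.aliyuncs.com/yunweijia/flannel:v0.12.0-$arch"
  echo "docker pull $src"
  echo "docker tag $src quay.io/coreos/flannel:v0.12.0-$arch"
}
```

Usage: `print_flannel_cmds | sh` on each node.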

Then just run the initialization.

The screenshot is a bit blurry, but it should do! (o( ̄┰ ̄*)ゞ)

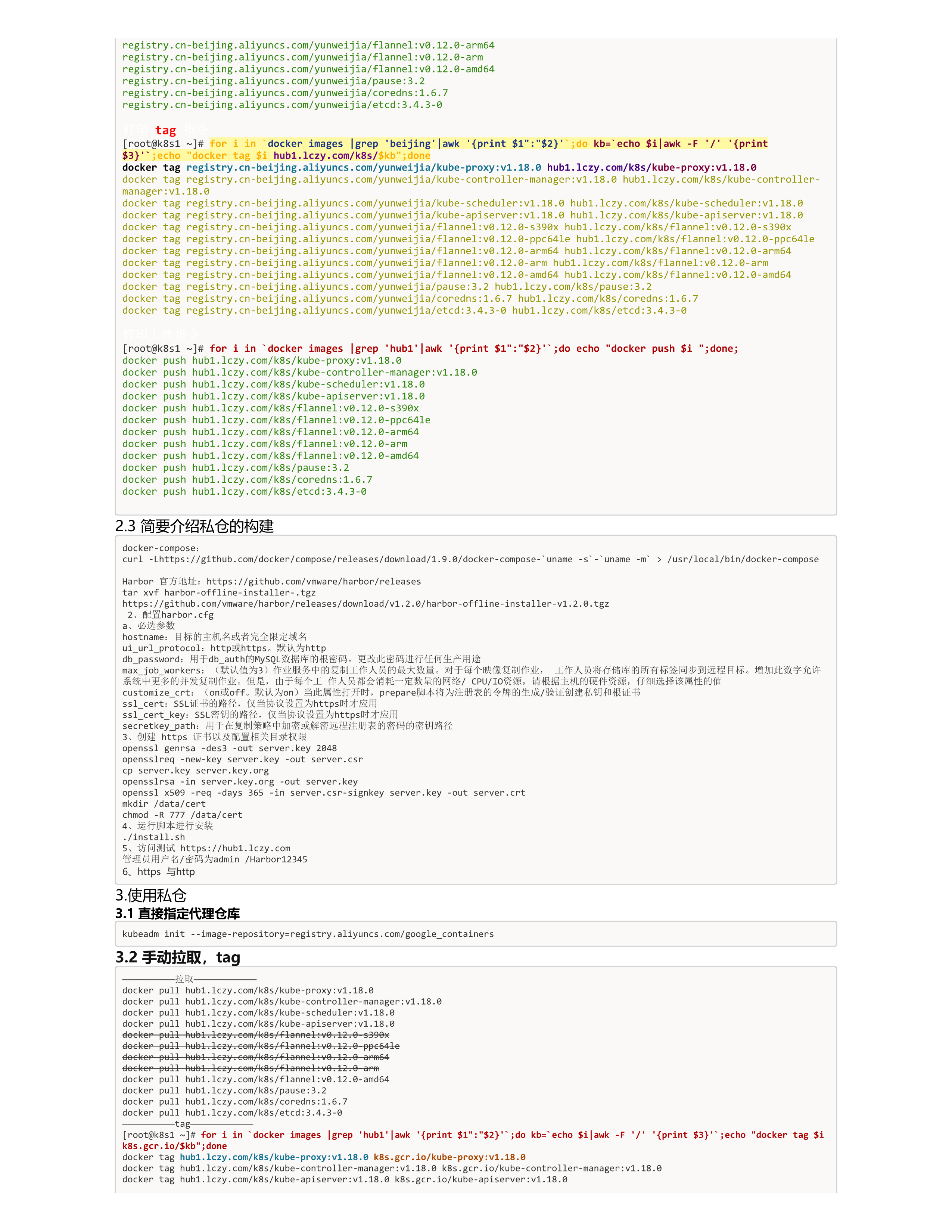

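Next: method 2

To back up images only, save and load are all you need.

If a container's contents changed after it started and the container itself needs backing up, use export and import instead.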

save

docker save -o nginx.tar nginx:latest

or

docker save > nginx.tar nginx:latest

Here -o and > both write to a file: nginx.tar is the output file and nginx:latest is the source image (name:tag).

load

docker load -i nginx.tar

or

docker load < nginx.tar

Here -i and < both read from a file. The image is imported together with its metadata, including tags.
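`docker save` accepts several images at once, so the whole set can go into one archive, and `docker load` reads gzip-compressed archives directly. A minimal sketch; `save_images` and `load_images` are hypothetical helper names, and the claim that shared layers are stored once per archive reflects how multi-image saves are expected to behave.

```shell
# Hypothetical helpers: bundle several images into one gzip-compressed
# archive and load it back. Layers shared between the images should be
# stored only once in the archive.
save_images() {
  out="$1"; shift                  # first argument: archive path; rest: images
  docker save "$@" | gzip > "$out"
}

load_images() {
  docker load -i "$1"              # docker load detects gzip compression itself
}
```

Usage: on the source host, `save_images k8s-v1.18.0.tar.gz $(docker images --format '{{.Repository}}:{{.Tag}}' | grep '^k8s.gcr.io/')`; on the target host, `load_images k8s-v1.18.0.tar.gz`.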

------------------------------------------------------

Step 1: list the images

[root@k8s1 ~]# docker images |grep k8s.gcr.io|awk '{print $1":"$2}'

k8s.gcr.io/kube-proxy:v1.18.0

k8s.gcr.io/kube-scheduler:v1.18.0

k8s.gcr.io/kube-apiserver:v1.18.0

k8s.gcr.io/kube-controller-manager:v1.18.0

k8s.gcr.io/pause:3.2

k8s.gcr.io/coredns:1.6.7

k8s.gcr.io/etcd:3.4.3-0

Step 2: derive each image name and its export file name

[root@k8s1 ~]# for i in `docker images |grep k8s.gcr.io|awk '{print $1":"$2}'`;do File_Name=`echo $i|awk -F '/' '{print $2}'|awk -F ':' '{print $1}'`;echo "$i $File_Name";done

k8s.gcr.io/kube-proxy:v1.18.0 kube-proxy

k8s.gcr.io/kube-apiserver:v1.18.0 kube-apiserver

k8s.gcr.io/kube-controller-manager:v1.18.0 kube-controller-manager

k8s.gcr.io/kube-scheduler:v1.18.0 kube-scheduler

k8s.gcr.io/pause:3.2 pause

k8s.gcr.io/coredns:1.6.7 coredns

k8s.gcr.io/etcd:3.4.3-0 etcd

Step 3: export the images

[root@k8s1 k8s]# for i in `docker images |grep k8s.gcr.io|awk '{print $1":"$2}'`;do File_Name=`echo $i|awk -F '/' '{print $2}'|awk -F ':' '{print $1}'`;docker save > ${File_Name}.tar $i ;done

[root@k8s1 k8s]# ll

total 867676

-rw-r--r-- 1 root root 43932160 Apr 26 14:54 coredns.tar

-rw-r--r-- 1 root root 290010624 Apr 26 14:54 etcd.tar

-rw-r--r-- 1 root root 174525440 Apr 26 14:53 kube-apiserver.tar

-rw-r--r-- 1 root root 163929088 Apr 26 14:54 kube-controller-manager.tar

-rw-r--r-- 1 root root 118543360 Apr 26 14:53 kube-proxy.tar

-rw-r--r-- 1 root root 96836608 Apr 26 14:53 kube-scheduler.tar

-rw-r--r-- 1 root root 692736 Apr 26 14:54 pause.tar
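The awk pipeline in steps 2 and 3 takes the second `/`-separated field, which works for `k8s.gcr.io/name:tag` but would yield `yunweijia` for every image under `registry.cn-beijing.aliyuncs.com/yunweijia/...`, and it drops the tag, so the five flannel tags would overwrite one another. A sketch using shell parameter expansion instead; `tar_name` and `print_save_cmds` are hypothetical names, and keeping the tag in the file name is a choice made for this example.

```shell
# Hypothetical helpers. tar_name drops everything up to the last '/', then
# turns the ':' before the tag into '_', so nested paths and multiple tags
# of one image map to distinct file names:
#   registry.cn-beijing.aliyuncs.com/yunweijia/etcd:3.4.3-0 -> etcd_3.4.3-0.tar
tar_name() {
  name="${1##*/}"                         # drop registry and namespace
  printf '%s.tar\n' "$name" | tr ':' '_'  # kube-proxy:v1.18.0 -> kube-proxy_v1.18.0.tar
}

# Read "repo:tag" lines on stdin and print one docker save command per image.
print_save_cmds() {
  while read -r img; do
    if [ -n "$img" ]; then
      echo "docker save -o $(tar_name "$img") $img"
    fi
  done
}
```

Usage: `docker images --format '{{.Repository}}:{{.Tag}}' | grep '^k8s.gcr.io/' | print_save_cmds | sh`.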

Step 4: import the images

for i in /opt/k8s/*.tar; do docker load -i "$i"; done

Be patient, this takes a while. Pick a directory and run the commands inside it, e.g. mkdir k8s_images; cd k8s_images
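Before running kubeadm init on a host that received images this way, it is worth checking that nothing is missing. A sketch, assuming two plain-text lists with one `repo:tag` per line; `missing_images` is a hypothetical name.

```shell
# Hypothetical helper: print every line of the "required" list (file 1) that
# has no exact match in the "present" list (file 2).
missing_images() {
  required="$1"; present="$2"
  # -F fixed strings, -x whole-line match, -v invert, -f read patterns from file
  grep -vxF -f "$present" "$required"
}
```

Usage: create required.txt with `kubeadm config images list --kubernetes-version v1.18.0 > required.txt` and present.txt with `docker images --format '{{.Repository}}:{{.Tag}}' > present.txt`, then run `missing_images required.txt present.txt`; empty output means nothing is missing.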

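Finally: method 3

1. Environment preparation

1.1 Docker: a brief setup

Install Docker (first configure journald for persistent, size-capped logs):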

mkdir /var/log/journal

mkdir /etc/systemd/journald.conf.d

cat > /etc/systemd/journald.conf.d/99-prophet.conf <<EOF

[Journal]

Storage=persistent

Compress=yes

SyncIntervalSec=5m

RateLimitInterval=30s

RateLimitBurst=1000

SystemMaxUse=10G

SystemMaxFileSize=200M

MaxRetentionSec=2week

ForwardToSyslog=no

EOF

systemctl restart systemd-journald

yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

yum install -y docker-ce

mkdir /etc/docker

cat > /etc/docker/daemon.json <<EOF

{

"exec-opts": ["native.cgroupdriver=systemd"],

"log-driver": "json-file",

"log-opts": {

"max-size": "100m"

},

"registry-mirrors": ["https://******.mirror.aliyuncs.com"],

"insecure-registries": [ "hub1.lczy.com" ]

}

EOF

mkdir -p /etc/systemd/system/docker.service.d

systemctl daemon-reload && systemctl restart docker && systemctl enable docker

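Notes:

https://******.mirror.aliyuncs.com: the Alibaba Cloud registry accelerator (each account gets its own address, masked here).

"hub1.lczy.com": the private registry, accessed over plain HTTP; "https://hub1.lczy.com" can also be used.

Mind the version: the newest Docker release validated for a K8S 1.18 cluster is 19.03.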
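The preflight warnings later in this post (Docker 20.10.6 against a validated 19.03) show why this matters. A sketch of a quick check; `check_docker_version` is a hypothetical helper, and treating any 19.03.x patch release as acceptable is an assumption.

```shell
# Hypothetical helper: warn when the Docker server is not on the 19.03 line.
# Pass a version string explicitly, or let it ask the local Docker daemon.
check_docker_version() {
  ver="${1:-$(docker version --format '{{.Server.Version}}')}"
  case "$ver" in
    19.03.*) echo "ok: docker $ver" ;;
    *) echo "warning: docker $ver is not 19.03.x (the latest validated for K8S 1.18)"
       return 1 ;;
  esac
}
```

If the check fails, Docker's install documentation describes pinning a release: list candidates with `yum list docker-ce --showduplicates | sort -r`, then install with `yum install docker-ce-<VERSION_STRING> docker-ce-cli-<VERSION_STRING> containerd.io`.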

1.2 Pull the images

docker pull registry.cn-beijing.aliyuncs.com/yunweijia/etcd:3.4.3-0

docker pull registry.cn-beijing.aliyuncs.com/yunweijia/coredns:1.6.7

docker pull registry.cn-beijing.aliyuncs.com/yunweijia/pause:3.2

docker pull registry.cn-beijing.aliyuncs.com/yunweijia/kube-controller-manager:v1.18.0

docker pull registry.cn-beijing.aliyuncs.com/yunweijia/kube-scheduler:v1.18.0

docker pull registry.cn-beijing.aliyuncs.com/yunweijia/kube-apiserver:v1.18.0

docker pull registry.cn-beijing.aliyuncs.com/yunweijia/kube-proxy:v1.18.0

docker pull registry.cn-beijing.aliyuncs.com/yunweijia/flannel:v0.12.0-s390x

docker pull registry.cn-beijing.aliyuncs.com/yunweijia/flannel:v0.12.0-ppc64le

docker pull registry.cn-beijing.aliyuncs.com/yunweijia/flannel:v0.12.0-arm64

docker pull registry.cn-beijing.aliyuncs.com/yunweijia/flannel:v0.12.0-arm

docker pull registry.cn-beijing.aliyuncs.com/yunweijia/flannel:v0.12.0-amd64

2. Build a private Harbor registry

2.1 Log in to the private registry

docker login hub1.lczy.com

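Username: admin

Password: Harbor12345

If HTTPS access fails with a certificate error, append the registry's certificate to the system CA bundle: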

echo -n | openssl s_client -showcerts -connect hub1.lczy.com:443 2>/dev/null | sed -ne '/-BEGIN CERTIFICATE-/,/-END CERTIFICATE-/p' >> /etc/ssl/certs/ca-certificates.crt
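Two hedged caveats: on CentOS 7 the system CA bundle is usually /etc/pki/tls/certs/ca-bundle.crt (with /etc/ssl/certs pointing into /etc/pki/tls/certs), so the Debian-style ca-certificates.crt path above may not be read at all; and Docker also supports trusting a certificate for one registry only, via /etc/docker/certs.d. A sketch of the latter; `certs_d_path` and `install_registry_cert` are hypothetical helper names.

```shell
# Hypothetical helpers: trust a registry's certificate for Docker only.
# Docker reads per-registry CA files from /etc/docker/certs.d/<host>/ca.crt.
certs_d_path() {
  echo "/etc/docker/certs.d/$1/ca.crt"
}

install_registry_cert() {
  host="$1"
  dest="$(certs_d_path "$host")"
  mkdir -p "$(dirname "$dest")"
  # Same extraction as the one-liner above, written to the per-registry file.
  openssl s_client -showcerts -connect "$host:443" </dev/null 2>/dev/null \
    | sed -ne '/-BEGIN CERTIFICATE-/,/-END CERTIFICATE-/p' > "$dest"
}
```

Usage (as root): `install_registry_cert hub1.lczy.com`, then retry `docker login hub1.lczy.com`.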

2.2 Push to the private registry

Re-tag locally, then push. First list the downloaded images:

[root@k8s1 ~]# docker images |grep 'registry.cn-beijing.aliyuncs.com'|awk '{print $1":"$2}'

registry.cn-beijing.aliyuncs.com/yunweijia/kube-proxy:v1.18.0

registry.cn-beijing.aliyuncs.com/yunweijia/kube-scheduler:v1.18.0

registry.cn-beijing.aliyuncs.com/yunweijia/kube-apiserver:v1.18.0

registry.cn-beijing.aliyuncs.com/yunweijia/kube-controller-manager:v1.18.0

registry.cn-beijing.aliyuncs.com/yunweijia/flannel:v0.12.0-s390x

registry.cn-beijing.aliyuncs.com/yunweijia/flannel:v0.12.0-ppc64le

registry.cn-beijing.aliyuncs.com/yunweijia/flannel:v0.12.0-arm64

registry.cn-beijing.aliyuncs.com/yunweijia/flannel:v0.12.0-arm

registry.cn-beijing.aliyuncs.com/yunweijia/flannel:v0.12.0-amd64

registry.cn-beijing.aliyuncs.com/yunweijia/pause:3.2

registry.cn-beijing.aliyuncs.com/yunweijia/coredns:1.6.7

registry.cn-beijing.aliyuncs.com/yunweijia/etcd:3.4.3-0

Print the tag commands:

[root@k8s1 ~]# for i in `docker images |grep 'beijing'|awk '{print $1":"$2}'`;do kb=`echo $i|awk -F '/' '{print $3}'`;echo "docker tag $i hub1.lczy.com/k8s/$kb";done

docker tag registry.cn-beijing.aliyuncs.com/yunweijia/kube-proxy:v1.18.0 hub1.lczy.com/k8s/kube-proxy:v1.18.0

docker tag registry.cn-beijing.aliyuncs.com/yunweijia/kube-controller-manager:v1.18.0 hub1.lczy.com/k8s/kube-controller-manager:v1.18.0

docker tag registry.cn-beijing.aliyuncs.com/yunweijia/kube-scheduler:v1.18.0 hub1.lczy.com/k8s/kube-scheduler:v1.18.0

docker tag registry.cn-beijing.aliyuncs.com/yunweijia/kube-apiserver:v1.18.0 hub1.lczy.com/k8s/kube-apiserver:v1.18.0

docker tag registry.cn-beijing.aliyuncs.com/yunweijia/flannel:v0.12.0-s390x hub1.lczy.com/k8s/flannel:v0.12.0-s390x

docker tag registry.cn-beijing.aliyuncs.com/yunweijia/flannel:v0.12.0-ppc64le hub1.lczy.com/k8s/flannel:v0.12.0-ppc64le

docker tag registry.cn-beijing.aliyuncs.com/yunweijia/flannel:v0.12.0-arm64 hub1.lczy.com/k8s/flannel:v0.12.0-arm64

docker tag registry.cn-beijing.aliyuncs.com/yunweijia/flannel:v0.12.0-arm hub1.lczy.com/k8s/flannel:v0.12.0-arm

docker tag registry.cn-beijing.aliyuncs.com/yunweijia/flannel:v0.12.0-amd64 hub1.lczy.com/k8s/flannel:v0.12.0-amd64

docker tag registry.cn-beijing.aliyuncs.com/yunweijia/pause:3.2 hub1.lczy.com/k8s/pause:3.2

docker tag registry.cn-beijing.aliyuncs.com/yunweijia/coredns:1.6.7 hub1.lczy.com/k8s/coredns:1.6.7

docker tag registry.cn-beijing.aliyuncs.com/yunweijia/etcd:3.4.3-0 hub1.lczy.com/k8s/etcd:3.4.3-0

Print the push commands:

[root@k8s1 ~]# for i in `docker images |grep 'hub1'|awk '{print $1":"$2}'`;do echo "docker push $i ";done;

docker push hub1.lczy.com/k8s/kube-proxy:v1.18.0

docker push hub1.lczy.com/k8s/kube-controller-manager:v1.18.0

docker push hub1.lczy.com/k8s/kube-scheduler:v1.18.0

docker push hub1.lczy.com/k8s/kube-apiserver:v1.18.0

docker push hub1.lczy.com/k8s/flannel:v0.12.0-s390x

docker push hub1.lczy.com/k8s/flannel:v0.12.0-ppc64le

docker push hub1.lczy.com/k8s/flannel:v0.12.0-arm64

docker push hub1.lczy.com/k8s/flannel:v0.12.0-arm

docker push hub1.lczy.com/k8s/flannel:v0.12.0-amd64

docker push hub1.lczy.com/k8s/pause:3.2

docker push hub1.lczy.com/k8s/coredns:1.6.7

docker push hub1.lczy.com/k8s/etcd:3.4.3-0
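The tag and push loops above can be merged into a single pass. A sketch using `${img##*/}` to keep only the final `name:tag`; `print_tag_and_push` and `HARBOR_PROJECT` are hypothetical names, and the `k8s` project presumably has to exist in Harbor before the pushes succeed.

```shell
# Hypothetical helper: read "repo:tag" lines on stdin and print tag + push
# commands that move each image into the Harbor project.
HARBOR_PROJECT=hub1.lczy.com/k8s

print_tag_and_push() {
  while read -r img; do
    if [ -n "$img" ]; then
      dst="$HARBOR_PROJECT/${img##*/}"   # keep only the final name:tag
      echo "docker tag $img $dst"
      echo "docker push $dst"
    fi
  done
}
```

Usage: `docker images --format '{{.Repository}}:{{.Tag}}' | grep '^registry.cn-beijing' | print_tag_and_push | sh`.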

2.3 Building the private registry, briefly

Install docker-compose first:

curl -L https://github.com/docker/compose/releases/download/1.9.0/docker-compose-`uname -s`-`uname -m` > /usr/local/bin/docker-compose

chmod +x /usr/local/bin/docker-compose

1. Download and extract the Harbor offline installer. Official releases: https://github.com/vmware/harbor/releases, for example:

https://github.com/vmware/harbor/releases/download/v1.2.0/harbor-offline-installer-v1.2.0.tgz

tar xvf harbor-offline-installer-*.tgz

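2. Configure harbor.cfg

a. Required parameters

hostname: host name or fully qualified domain name of the target host

ui_url_protocol: http or https; defaults to http

db_password: root password of the MySQL database used for db_auth. Change it for any production use

max_job_workers: (default 3) maximum number of replication workers in the job service. For each image replication job, a worker syncs all tags of a repository to the remote target. Raising this number allows more concurrent replication jobs, but each worker consumes network/CPU/IO resources, so choose the value carefully based on the host's hardware

customize_crt: (on or off; default on) when on, the prepare script creates a private key and root certificate used to generate/verify the registry's tokens

ssl_cert: path to the SSL certificate; only used when the protocol is https

ssl_cert_key: path to the SSL key; only used when the protocol is https

secretkey_path: path to the key used to encrypt/decrypt remote registries' passwords in replication policies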
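Editing harbor.cfg by hand works; for repeatable installs the same settings can be applied with sed. A sketch assuming Harbor 1.x's `key = value` format; `set_harbor_cfg` is a hypothetical helper, and the /data/cert paths in the usage note are assumptions matching the certificate directory in step 3 below.

```shell
# Hypothetical helper: replace the "key = value" line for one key in harbor.cfg.
# The pattern requires only spaces between the key and '=', so "hostname"
# will not accidentally match a longer key that merely starts with it.
set_harbor_cfg() {
  key="$1"; value="$2"; file="${3:-harbor.cfg}"
  sed -i "s|^$key *=.*|$key = $value|" "$file"
}
```

Usage, from the extracted installer directory:
`set_harbor_cfg hostname hub1.lczy.com`, `set_harbor_cfg ui_url_protocol https`, `set_harbor_cfg ssl_cert /data/cert/server.crt`, `set_harbor_cfg ssl_cert_key /data/cert/server.key`.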

3. Create the HTTPS certificate and set up its directory

openssl genrsa -des3 -out server.key 2048

openssl req -new -key server.key -out server.csr

cp server.key server.key.org

openssl rsa -in server.key.org -out server.key

openssl x509 -req -days 365 -in server.csr -signkey server.key -out server.crt

mkdir /data/cert

chmod -R 777 /data/cert
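The openssl commands above create a passphrase-protected key and then strip the passphrase. A non-interactive alternative, assuming OpenSSL 1.1.1 or newer for `-addext` (CentOS 7's stock OpenSSL 1.0.2 lacks it), writes the key and certificate straight into /data/cert and also sets a subjectAltName, which newer Go-based clients such as recent Docker releases may require. `gen_registry_cert` is a hypothetical name.

```shell
# Hypothetical helper: one unencrypted RSA key plus a self-signed certificate
# whose CN and subjectAltName are the registry host. Requires OpenSSL >= 1.1.1.
gen_registry_cert() {
  host="$1"; dir="${2:-/data/cert}"
  mkdir -p "$dir"
  openssl req -x509 -newkey rsa:2048 -nodes -days 365 \
    -keyout "$dir/server.key" -out "$dir/server.crt" \
    -subj "/CN=$host" -addext "subjectAltName=DNS:$host"
}
```

Usage: `gen_registry_cert hub1.lczy.com`, then point ssl_cert and ssl_cert_key in harbor.cfg at the two files.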

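4. Run the install script

./install.sh

5. Test access at https://hub1.lczy.com

The default admin username/password is admin / Harbor12345

6. HTTPS vs. HTTP

3. Using the private registry

3.1 Point kubeadm at a mirror repository directly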

kubeadm init --image-repository=registry.aliyuncs.com/google_containers
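Instead of pulling and tagging by hand, kubeadm can pre-pull the images from a mirror itself; the init log further down even suggests `kubeadm config images pull`. A sketch; `prepull_images` is a hypothetical wrapper and its defaults are this post's registry and version. Images pulled this way keep the mirror's name, so init must use the same repository (via --image-repository or imageRepository in the config file).

```shell
# Hypothetical wrapper: show, then pull, the control-plane images kubeadm
# would use for the given repository and Kubernetes version.
prepull_images() {
  repo="${1:-hub1.lczy.com/k8s}"
  ver="${2:-v1.18.0}"
  kubeadm config images list --image-repository "$repo" --kubernetes-version "$ver"
  kubeadm config images pull --image-repository "$repo" --kubernetes-version "$ver"
}
```

Usage: `prepull_images` for the private registry, or `prepull_images registry.aliyuncs.com/google_containers v1.18.0` for the public mirror.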

3.2 Pull and re-tag manually

Pull:

docker pull hub1.lczy.com/k8s/kube-proxy:v1.18.0

docker pull hub1.lczy.com/k8s/kube-controller-manager:v1.18.0

docker pull hub1.lczy.com/k8s/kube-scheduler:v1.18.0

docker pull hub1.lczy.com/k8s/kube-apiserver:v1.18.0

docker pull hub1.lczy.com/k8s/flannel:v0.12.0-s390x

docker pull hub1.lczy.com/k8s/flannel:v0.12.0-ppc64le

docker pull hub1.lczy.com/k8s/flannel:v0.12.0-arm64

docker pull hub1.lczy.com/k8s/flannel:v0.12.0-arm

docker pull hub1.lczy.com/k8s/flannel:v0.12.0-amd64

docker pull hub1.lczy.com/k8s/pause:3.2

docker pull hub1.lczy.com/k8s/coredns:1.6.7

docker pull hub1.lczy.com/k8s/etcd:3.4.3-0

Tag:

[root@k8s1 ~]# for i in `docker images |grep 'hub1'|awk '{print $1":"$2}'`;do kb=`echo $i|awk -F '/' '{print $3}'`;echo "docker tag $i k8s.gcr.io/$kb";done

docker tag hub1.lczy.com/k8s/kube-proxy:v1.18.0 k8s.gcr.io/kube-proxy:v1.18.0

docker tag hub1.lczy.com/k8s/kube-controller-manager:v1.18.0 k8s.gcr.io/kube-controller-manager:v1.18.0

docker tag hub1.lczy.com/k8s/kube-apiserver:v1.18.0 k8s.gcr.io/kube-apiserver:v1.18.0

docker tag hub1.lczy.com/k8s/kube-scheduler:v1.18.0 k8s.gcr.io/kube-scheduler:v1.18.0

docker tag hub1.lczy.com/k8s/flannel:v0.12.0-s390x k8s.gcr.io/flannel:v0.12.0-s390x

docker tag hub1.lczy.com/k8s/flannel:v0.12.0-ppc64le k8s.gcr.io/flannel:v0.12.0-ppc64le

docker tag hub1.lczy.com/k8s/flannel:v0.12.0-arm64 k8s.gcr.io/flannel:v0.12.0-arm64

docker tag hub1.lczy.com/k8s/flannel:v0.12.0-arm k8s.gcr.io/flannel:v0.12.0-arm

docker tag hub1.lczy.com/k8s/flannel:v0.12.0-amd64 k8s.gcr.io/flannel:v0.12.0-amd64

docker tag hub1.lczy.com/k8s/pause:3.2 k8s.gcr.io/pause:3.2

docker tag hub1.lczy.com/k8s/coredns:1.6.7 k8s.gcr.io/coredns:1.6.7

docker tag hub1.lczy.com/k8s/etcd:3.4.3-0 k8s.gcr.io/etcd:3.4.3-0
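One thing to watch in the tag commands above: the flannel images are re-tagged as k8s.gcr.io/flannel, but kube-flannel.yml references quay.io/coreos/flannel (see the grep of the manifest near the end), so those tags will not be used as-is. A sketch that maps each image back to the registry its manifest expects; `upstream_name` and `print_retag_cmds` are hypothetical names.

```shell
# Hypothetical helper: flannel images go back to quay.io/coreos,
# everything else to k8s.gcr.io.
upstream_name() {
  img="${1##*/}"                   # final name:tag only
  case "$img" in
    flannel:*) echo "quay.io/coreos/$img" ;;
    *)         echo "k8s.gcr.io/$img" ;;
  esac
}

# Read hub1 image names on stdin and print one docker tag command each.
print_retag_cmds() {
  while read -r src; do
    if [ -n "$src" ]; then
      echo "docker tag $src $(upstream_name "$src")"
    fi
  done
}
```

Usage: `docker images --format '{{.Repository}}:{{.Tag}}' | grep '^hub1' | print_retag_cmds | sh`.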

3.3 Test the initialization

[root@k8s1 ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

hub1.lczy.com/k8s/kube-proxy v1.18.0 43940c34f24f 13 months ago 117MB

hub1.lczy.com/k8s/kube-apiserver v1.18.0 74060cea7f70 13 months ago 173MB

hub1.lczy.com/k8s/kube-scheduler v1.18.0 a31f78c7c8ce 13 months ago 95.3MB

hub1.lczy.com/k8s/kube-controller-manager v1.18.0 d3e55153f52f 13 months ago 162MB

hub1.lczy.com/k8s/flannel v0.12.0-s390x 57eade024bfb 13 months ago 56.9MB

hub1.lczy.com/k8s/flannel v0.12.0-ppc64le 9225b871924d 13 months ago 70.3MB

hub1.lczy.com/k8s/flannel v0.12.0-arm64 7cf4a417daaa 13 months ago 53.6MB

hub1.lczy.com/k8s/flannel v0.12.0-arm 767c3d1f8cba 13 months ago 47.8MB

hub1.lczy.com/k8s/flannel v0.12.0-amd64 4e9f801d2217 13 months ago 52.8MB

hub1.lczy.com/k8s/pause 3.2 80d28bedfe5d 14 months ago 683kB

hub1.lczy.com/k8s/coredns 1.6.7 67da37a9a360 15 months ago 43.8MB

hub1.lczy.com/k8s/etcd 3.4.3-0 303ce5db0e90 18 months ago 288MB

The master node does not need the flannel plugin images pre-downloaded.

[root@k8s1 ~]# kubeadm init --image-repository=hub1.lczy.com/k8s --config=kubeadm-config.yaml --upload-certs | tee kubeadm-init.log

can not mix '--config' with arguments [image-repository]

To see the stack trace of this error execute with --v=5 or higher

It fails.

So set the repository in the config file instead:

cat kubeadm-config.yaml

Original: imageRepository: k8s.gcr.io

Changed to: imageRepository: hub1.lczy.com/k8s

Try again:

[root@k8s1 ~]# kubeadm init --config=kubeadm-config.yaml --upload-certs | tee kubeadm-init.log

W0426 15:07:06.869261 13847 strict.go:54] error unmarshaling configuration schema.GroupVersionKind{Group:"kubeproxy.config.k8s.io", Version:"v1alpha1", Kind:"KubeProxyConfiguration"}: error unmarshaling JSON: while decoding JSON: json: unknown field "SupportIPVSProxyMode"

W0426 15:07:06.870176 13847 configset.go:202] WARNING: kubeadm cannot validate component configs for API groups [kubelet.config.k8s.io kubeproxy.config.k8s.io]

[init] Using Kubernetes version: v1.18.0

[preflight] Running pre-flight checks

[WARNING SystemVerification]: this Docker version is not on the list of validated versions: 20.10.6. Latest validated version: 19.03

[WARNING Hostname]: hostname "k8s1.dev.lczy.com" could not be reached

[WARNING Hostname]: hostname "k8s1.dev.lczy.com": lookup k8s1.dev.lczy.com on 192.168.168.169:53: no such host

error execution phase wait-control-plane: couldn't initialize a Kubernetes cluster

Unfortunately, an error has occurred:

timed out waiting for the condition

This error is likely caused by:

- The kubelet is not running

- The kubelet is unhealthy due to a misconfiguration of the node in some way (required cgroups disabled)

If you are on a systemd-powered system, you can try to troubleshoot the error with the following commands:

- 'systemctl status kubelet'

- 'journalctl -xeu kubelet'

Additionally, a control plane component may have crashed or exited when started by the container runtime.

To troubleshoot, list all containers using your preferred container runtimes CLI.

Here is one example how you may list all Kubernetes containers running in docker:

- 'docker ps -a | grep kube | grep -v pause'

Once you have found the failing container, you can inspect its logs with:

- 'docker logs CONTAINERID'

It fails again.

Time to troubleshoot:

[root@k8s1 ~]# systemctl status kubelet

● kubelet.service - kubelet: The Kubernetes Node Agent

Loaded: loaded (/usr/lib/systemd/system/kubelet.service; enabled; vendor preset: disabled)

Drop-In: /usr/lib/systemd/system/kubelet.service.d

└─10-kubeadm.conf

Active: active (running) since Mon 2021-04-26 15:07:19 CST; 7min ago

Docs: https://kubernetes.io/docs/

[root@k8s1 ~]# docker ps -a | grep kube | grep -v pause

f9fd62134e6c 303ce5db0e90 "etcd --advertise-cl…" About a minute ago Exited (1) About a minute ago k8s_etcd_etcd-k8s1.dev.lczy.com_kube-system_7916363d00f9b5fe860b436551739261_6

d65cd6aac4e9 74060cea7f70 "kube-apiserver --ad…" 2 minutes ago Exited (2) 2 minutes ago k8s_kube-apiserver_kube-apiserver-k8s1.dev.lczy.com_kube-system_ed56c54b98d34e8731772586f127f86f_5

b56803458bff a31f78c7c8ce "kube-scheduler --au…" 7 minutes ago Up 7 minutes k8s_kube-scheduler_kube-scheduler-k8s1.dev.lczy.com_kube-system_58cabb9b5f97f8700654c0ffc7ec0696_0

a25255200f79 d3e55153f52f "kube-controller-man…" 7 minutes ago Up 7 minutes k8s_kube-controller-manager_kube-controller-manager-k8s1.dev.lczy.com_kube-system_9152e2708b669ca9a567d1606811fca2_0

[root@k8s1 ~]# docker logs f9fd62134e6c

[WARNING] Deprecated '--logger=capnslog' flag is set; use '--logger=zap' flag instead

2021-04-26 07:13:23.557913 I | etcdmain: etcd Version: 3.4.3

2021-04-26 07:13:23.557970 I | etcdmain: Git SHA: 3cf2f69b5

2021-04-26 07:13:23.557978 I | etcdmain: Go Version: go1.12.12

2021-04-26 07:13:23.557984 I | etcdmain: Go OS/Arch: linux/amd64

2021-04-26 07:13:23.557990 I | etcdmain: setting maximum number of CPUs to 2, total number of available CPUs is 2

[WARNING] Deprecated '--logger=capnslog' flag is set; use '--logger=zap' flag instead

2021-04-26 07:13:23.558112 I | embed: peerTLS: cert = /etc/kubernetes/pki/etcd/peer.crt, key = /etc/kubernetes/pki/etcd/peer.key, trusted-ca = /etc/kubernetes/pki/etcd/ca.crt, client-cert-auth = true, crl-file =

2021-04-26 07:13:23.558289 C | etcdmain: listen tcp 1.2.3.4:2380: bind: cannot assign requested address

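The IP in the init config file was never changed from the default placeholder 1.2.3.4... facepalm.

Regenerate the config file:

kubeadm config print init-defaults > kubeadm-config.yaml

Make three changes: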

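1. advertiseAddress: 192.168.101.86

2. imageRepository: hub1.lczy.com/k8s

3. Add podSubnet under networking and append a KubeProxyConfiguration section that switches kube-proxy to IPVS:

networking:
  dnsDomain: cluster.local
  podSubnet: "10.244.0.0/16"
  serviceSubnet: 10.96.0.0/12
scheduler: {}
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
featureGates:
  SupportIPVSProxyMode: true
mode: ipvs

podSubnet has to match flannel's default network, 10.244.0.0/16. The strict.go warning in the logs below reports SupportIPVSProxyMode as an unknown field, most likely because it was not indented under featureGates. The warning is non-fatal, and since IPVS mode has been GA since Kubernetes 1.11, mode: ipvs alone selects it; the featureGates entry can be dropped.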
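mode: ipvs only takes effect if the IPVS kernel modules are available; otherwise kube-proxy is expected to fall back to iptables mode. A sketch that lists missing modules from lsmod output; the module list is the set commonly loaded for kube-proxy IPVS mode on CentOS 7 kernels (on kernels 4.19 and newer, nf_conntrack replaces nf_conntrack_ipv4), and `missing_ipvs_modules` is a hypothetical name.

```shell
# Hypothetical helper: read `lsmod` output on stdin and print each
# IPVS-related module that is not loaded.
missing_ipvs_modules() {
  # First column of lsmod output, skipping the "Module Size Used by" header.
  loaded="$(awk 'NR > 1 { print $1 }')"
  for m in ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack_ipv4; do
    printf '%s\n' "$loaded" | grep -qxF "$m" || echo "$m"
  done
}
```

Usage (as root): `for m in $(lsmod | missing_ipvs_modules); do modprobe "$m"; done`. The ipvsadm and ipset packages are typically installed as well.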

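After changing the config, run kubeadm reset, and add the host names to /etc/hosts while you're at it (the rerun below no longer shows the hostname warnings):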

[root@k8s1 ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.101.86 k8s1.dev.lczy.com

192.168.100.17 k8s2.dev.lczy.com

[root@k8s1 ~]# kubeadm reset

[reset] Reading configuration from the cluster...

[reset] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

W0426 15:23:53.087893 22435 reset.go:99] [reset] Unable to fetch the kubeadm-config ConfigMap from cluster: failed to get config map: Get https://1.2.3.4:6443/api/v1/namespaces/kube-system/configmaps/kubeadm-config?timeout=10s: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)

[reset] WARNING: Changes made to this host by 'kubeadm init' or 'kubeadm join' will be reverted.

[reset] Are you sure you want to proceed? [y/N]: y

[preflight] Running pre-flight checks

W0426 15:24:40.494905 22435 removeetcdmember.go:79] [reset] No kubeadm config, using etcd pod spec to get data directory

[reset] Stopping the kubelet service

[reset] Unmounting mounted directories in "/var/lib/kubelet"

[reset] Deleting contents of config directories: [/etc/kubernetes/manifests /etc/kubernetes/pki]

[reset] Deleting files: [/etc/kubernetes/admin.conf /etc/kubernetes/kubelet.conf /etc/kubernetes/bootstrap-kubelet.conf /etc/kubernetes/controller-manager.conf /etc/kubernetes/scheduler.conf]

[reset] Deleting contents of stateful directories: [/var/lib/etcd /var/lib/kubelet /var/lib/dockershim /var/run/kubernetes /var/lib/cni]

The reset process does not clean CNI configuration. To do so, you must remove /etc/cni/net.d

The reset process does not reset or clean up iptables rules or IPVS tables.

If you wish to reset iptables, you must do so manually by using the "iptables" command.

If your cluster was setup to utilize IPVS, run ipvsadm --clear (or similar)

to reset your system's IPVS tables.

The reset process does not clean your kubeconfig files and you must remove them manually.

Please, check the contents of the $HOME/.kube/config file

kubeadm reset also cleaned up the containers:

[root@k8s1 ~]# docker ps -a | grep kube | grep -v pause

[root@k8s1 ~]# docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

Run the initialization again:

[root@k8s1 ~]# kubeadm init --config=kubeadm-config.yaml --upload-certs | tee kubeadm-init.log

W0426 15:27:33.169180 23145 strict.go:54] error unmarshaling configuration schema.GroupVersionKind{Group:"kubeproxy.config.k8s.io", Version:"v1alpha1", Kind:"KubeProxyConfiguration"}: error unmarshaling JSON: while decoding JSON: json: unknown field "SupportIPVSProxyMode"

W0426 15:27:33.170594 23145 configset.go:202] WARNING: kubeadm cannot validate component configs for API groups [kubelet.config.k8s.io kubeproxy.config.k8s.io]

[init] Using Kubernetes version: v1.18.0

[preflight] Running pre-flight checks (keep an eye on the Docker version here)

[WARNING SystemVerification]: this Docker version is not on the list of validated versions: 20.10.6. Latest validated version: 19.03

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [k8s1.dev.lczy.com kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 192.168.101.86]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [k8s1.dev.lczy.com localhost] and IPs [192.168.101.86 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [k8s1.dev.lczy.com localhost] and IPs [192.168.101.86 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

W0426 15:27:40.648222 23145 manifests.go:225] the default kube-apiserver authorization-mode is "Node,RBAC"; using "Node,RBAC"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

W0426 15:27:40.649970 23145 manifests.go:225] the default kube-apiserver authorization-mode is "Node,RBAC"; using "Node,RBAC"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 24.005147 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.18" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

[upload-certs] Using certificate key:

1fe7e1c89deec70c5c859fddec371694c381c1986485d6850cc8daec506de3cd

[mark-control-plane] Marking the node k8s1.dev.lczy.com as control-plane by adding the label "node-role.kubernetes.io/master=''"

[mark-control-plane] Marking the node k8s1.dev.lczy.com as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[bootstrap-token] Using token: abcdef.0123456789abcdef

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

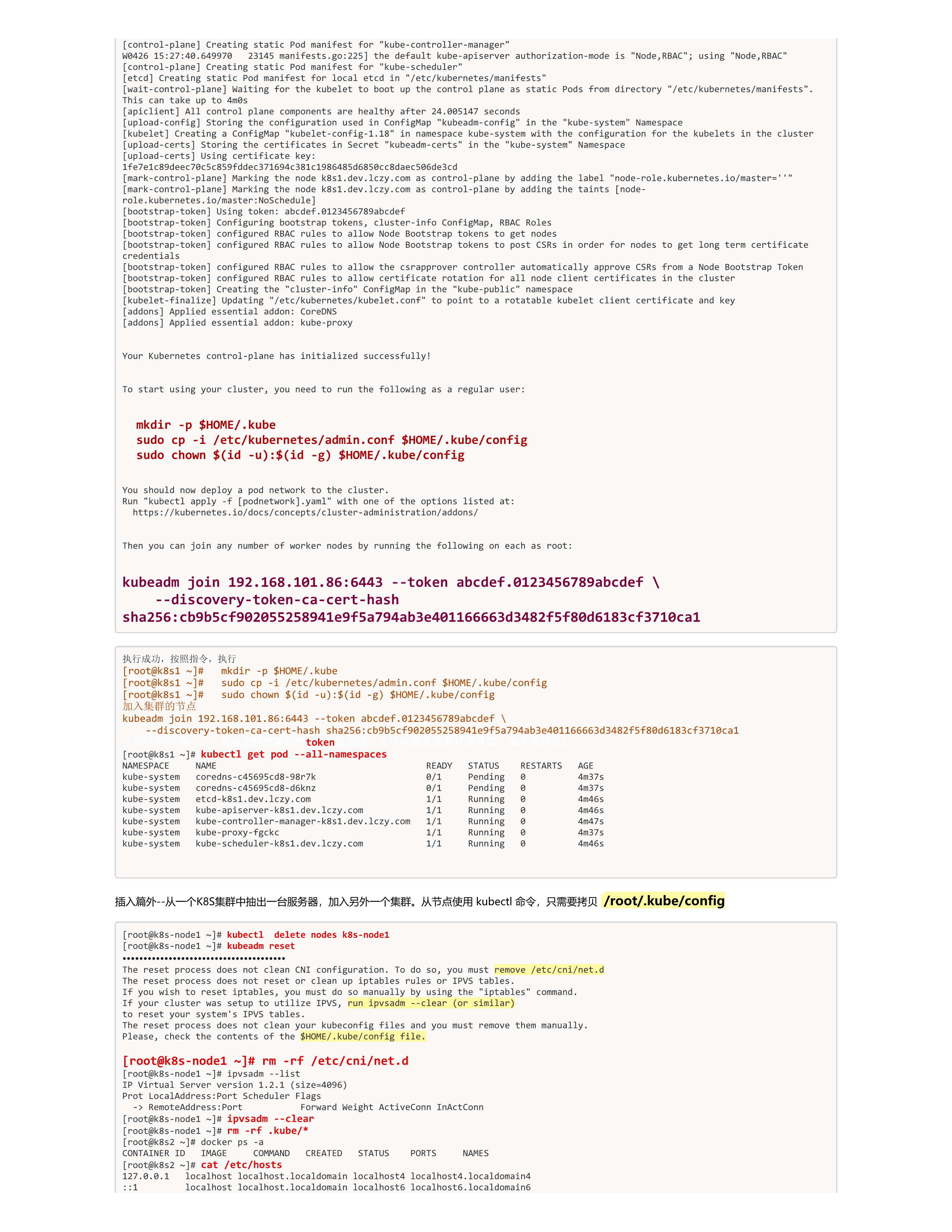

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.101.86:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:cb9b5cf902055258941e9f5a794ab3e401166663d3482f5f80d6183cf3710ca1

Initialization succeeded. Run the commands as instructed:

[root@k8s1 ~]# mkdir -p $HOME/.kube

[root@k8s1 ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@k8s1 ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

The join command for worker nodes:

kubeadm join 192.168.101.86:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:cb9b5cf902055258941e9f5a794ab3e401166663d3482f5f80d6183cf3710ca1

(The token expires, by default after 24 hours. The next post covers how to renew it, and a web search turns up plenty of answers.)
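When the token expires, `kubeadm token create --print-join-command` on the master prints a complete new join command. The --discovery-token-ca-cert-hash value does not change as long as the cluster CA stays the same; it can be recomputed from the CA certificate with the openssl pipeline given in the kubeadm documentation (for an RSA CA, which is kubeadm's default). A sketch wrapping that pipeline; `ca_cert_hash` is a hypothetical name.

```shell
# Hypothetical wrapper around the pipeline from the kubeadm join docs:
# SHA-256 of the DER-encoded public key of the cluster CA certificate.
ca_cert_hash() {
  ca="${1:-/etc/kubernetes/pki/ca.crt}"
  hash="$(openssl x509 -pubkey -in "$ca" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex | sed 's/^.* //')"
  echo "sha256:$hash"
}
```

Usage on the master: `ca_cert_hash` prints a value in the same sha256:... form as the join command above.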

[root@k8s1 ~]# kubectl get pod --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-c45695cd8-98r7k 0/1 Pending 0 4m37s

kube-system coredns-c45695cd8-d6knz 0/1 Pending 0 4m37s

kube-system etcd-k8s1.dev.lczy.com 1/1 Running 0 4m46s

kube-system kube-apiserver-k8s1.dev.lczy.com 1/1 Running 0 4m46s

kube-system kube-controller-manager-k8s1.dev.lczy.com 1/1 Running 0 4m47s

kube-system kube-proxy-fgckc 1/1 Running 0 4m37s

kube-system kube-scheduler-k8s1.dev.lczy.com 1/1 Running 0 4m46s

Aside: pulling a server out of one K8S cluster and joining it to another. (To use kubectl on a worker node, just copy /root/.kube/config over.)

[root@k8s-node1 ~]# kubectl delete nodes k8s-node1

[root@k8s-node1 ~]# kubeadm reset

(output omitted)

The reset process does not clean CNI configuration. To do so, you must remove /etc/cni/net.d

The reset process does not reset or clean up iptables rules or IPVS tables.

If you wish to reset iptables, you must do so manually by using the "iptables" command.

If your cluster was setup to utilize IPVS, run ipvsadm --clear (or similar)

to reset your system's IPVS tables.

The reset process does not clean your kubeconfig files and you must remove them manually.

Please, check the contents of the $HOME/.kube/config file.

[root@k8s-node1 ~]# rm -rf /etc/cni/net.d

[root@k8s-node1 ~]# ipvsadm --list

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

[root@k8s-node1 ~]# ipvsadm --clear

[root@k8s-node1 ~]# rm -rf .kube/*
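The aside deletes the node object directly. A gentler order is to drain the node first so its pods are evicted, then delete it, then reset the node itself. A sketch that prints that sequence; `print_node_removal` is a hypothetical helper, and --delete-local-data is the kubectl 1.18 spelling (renamed --delete-emptydir-data in 1.20).

```shell
# Hypothetical helper: print the removal steps for a worker node.
# The first half runs where kubectl works, the second half on the node.
print_node_removal() {
  node="$1"
  echo "# on the master (or any host with kubectl access):"
  echo "kubectl drain $node --ignore-daemonsets --delete-local-data"
  echo "kubectl delete node $node"
  echo "# on $node itself:"
  echo "kubeadm reset -f"
  echo "rm -rf /etc/cni/net.d \$HOME/.kube/config"
  echo "ipvsadm --clear"
}
```

Usage: `print_node_removal k8s2.dev.lczy.com`, then run each half on the host it names.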

[root@k8s2 ~]# docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

[root@k8s2 ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.168.168 hub.lczy.com

192.168.168.169 hub1.lczy.com

192.168.101.86 k8s1.dev.lczy.com

192.168.100.17 k8s2.dev.lczy.com

Join the cluster (when copying, leave out the "> " continuation prompt):

[root@k8s2 ~]# kubeadm join 192.168.101.86:6443 --token abcdef.0123456789abcdef \

> --discovery-token-ca-cert-hash sha256:cb9b5cf902055258941e9f5a794ab3e401166663d3482f5f80d6183cf3710ca1

W0426 15:52:57.501721 19344 join.go:346] [preflight] WARNING: JoinControlPane.controlPlane settings will be ignored when control-plane flag is not set.

[preflight] Running pre-flight checks

[WARNING SystemVerification]: this Docker version is not on the list of validated versions: 20.10.0. Latest validated version: 19.03

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[kubelet-start] Downloading configuration for the kubelet from the "kubelet-config-1.18" ConfigMap in the kube-system namespace

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

[root@k8s1 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s1.dev.lczy.com NotReady master 25m v1.18.0

k8s2.dev.lczy.com NotReady <none> 11s v1.18.0

Download the flannel manifest:

wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

[root@k8s1 ~]# cat kube-flannel.yml |grep image
image: quay.io/coreos/flannel:v0.12.0-amd64
image: quay.io/coreos/flannel:v0.12.0-amd64
image: quay.io/coreos/flannel:v0.12.0-arm64
image: quay.io/coreos/flannel:v0.12.0-arm64
image: quay.io/coreos/flannel:v0.12.0-arm
image: quay.io/coreos/flannel:v0.12.0-arm
image: quay.io/coreos/flannel:v0.12.0-ppc64le
image: quay.io/coreos/flannel:v0.12.0-ppc64le
image: quay.io/coreos/flannel:v0.12.0-s390x
image: quay.io/coreos/flannel:v0.12.0-s390x

Bulk-replace the registry prefix in vim (open the file with vim kube-flannel.yml, then run):

:%s/quay.io\/coreos/hub1.lczy.com\/k8s/g
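If you prefer not to edit the file interactively, the same substitution can be done with `sed`. A minimal sketch, assuming the manifest path is passed as the only argument; the helper name `rewrite_registry` is hypothetical. Writing from a `.bak` copy instead of using `sed -i` sidesteps the differences between GNU and BSD `sed`:

```shell
# rewrite_registry (hypothetical helper): non-interactive equivalent of
#   :%s/quay.io\/coreos/hub1.lczy.com\/k8s/g
# Keeps the original as <file>.bak, rewrites <file>, then prints the
# resulting image lines (like "cat kube-flannel.yml | grep image").
rewrite_registry() {
  file=$1
  cp "$file" "$file.bak"                                   # keep the original manifest
  sed 's#quay\.io/coreos#hub1.lczy.com/k8s#g' "$file.bak" > "$file"
  grep 'image:' "$file"                                    # show the rewritten images
}
```

Run it as `rewrite_registry kube-flannel.yml`; the printed `image:` lines should match the listing that follows.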

[root@k8s1 ~]# cat kube-flannel.yml |grep image
image: hub1.lczy.com/k8s/flannel:v0.12.0-amd64
image: hub1.lczy.com/k8s/flannel:v0.12.0-amd64
image: hub1.lczy.com/k8s/flannel:v0.12.0-arm64
image: hub1.lczy.com/k8s/flannel:v0.12.0-arm64
image: hub1.lczy.com/k8s/flannel:v0.12.0-arm
image: hub1.lczy.com/k8s/flannel:v0.12.0-arm
image: hub1.lczy.com/k8s/flannel:v0.12.0-ppc64le
image: hub1.lczy.com/k8s/flannel:v0.12.0-ppc64le
image: hub1.lczy.com/k8s/flannel:v0.12.0-s390x
image: hub1.lczy.com/k8s/flannel:v0.12.0-s390x

[root@k8s1 ~]# kubectl get pod --all-namespaces -o wide
NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
kube-system coredns-c45695cd8-98r7k 1/1 Running 0 41m 10.244.0.3 k8s1.dev.lczy.com <none> <none>
kube-system coredns-c45695cd8-d6knz 1/1 Running 0 41m 10.244.0.2 k8s1.dev.lczy.com <none> <none>
kube-system etcd-k8s1.dev.lczy.com 1/1 Running 0 42m 192.168.101.86 k8s1.dev.lczy.com <none> <none>
kube-system kube-apiserver-k8s1.dev.lczy.com 1/1 Running 0 42m 192.168.101.86 k8s1.dev.lczy.com <none> <none>
kube-system kube-controller-manager-k8s1.dev.lczy.com 1/1 Running 0 42m 192.168.101.86 k8s1.dev.lczy.com <none> <none>
kube-system kube-flannel-ds-amd64-n4g6d 1/1 Running 0 2m31s 192.168.101.86 k8s1.dev.lczy.com <none> <none>
kube-system kube-flannel-ds-amd64-xv4ms 0/1 Init:0/1 0 2m31s 192.168.100.17 k8s2.dev.lczy.com <none> <none>
kube-system kube-proxy-62v94 0/1 ContainerCreating 0 16m 192.168.100.17 k8s2.dev.lczy.com <none> <none>
kube-system kube-proxy-fgckc 1/1 Running 0 41m 192.168.101.86 k8s1.dev.lczy.com <none> <none>
kube-system kube-scheduler-k8s1.dev.lczy.com 1/1 Running 0 42m 192.168.101.86 k8s1.dev.lczy.com <none> <none>
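To pick out the stuck pods from a listing like the one above, a short `awk` filter is enough. This is a sketch that assumes the `-o wide` column layout shown here (STATUS is the 4th field, NODE the 8th); `not_running_pods` is a hypothetical name:

```shell
# not_running_pods (hypothetical helper): read the output of
#   kubectl get pod --all-namespaces -o wide
# on stdin and print "NAMESPACE/NAME STATUS NODE" for every pod whose
# STATUS is not "Running". Field positions assume the layout shown above.
not_running_pods() {
  awk 'NR > 1 && $4 != "Running" { print $1 "/" $2, $4, $8 }'
}
```

Piping `kubectl get pod --all-namespaces -o wide` into it would list kube-flannel-ds-amd64-xv4ms and kube-proxy-62v94, both on k8s2.dev.lczy.com. `kubectl describe pod -n kube-system kube-proxy-62v94` then shows that pod's Events, which is where image pull failures are reported.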

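The pods on k8s2.dev.lczy.com did not start, and checking the node showed that their images had not been pulled, so they have to be pulled manually.

In my environment the node does not pull the images by itself!!!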

[root@k8s2 ~]# docker pull hub1.lczy.com/k8s/coredns:1.6.7
Error response from daemon: pull access denied for hub1.lczy.com/k8s/coredns, repository does not exist or may require 'docker login': denied: requested access to the resource is denied
[root@k8s2 ~]# cat .docker/config.json
{
	"auths": {
		"hub1.lczy.com": {
			"auth": "cGFuaGFueGluOjEyM1FXRWFzZA=="
		}
	}
}
[root@k8s2 ~]# rm -rf .docker/config.json

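I had restricted access permissions on that repository, so I changed the account and password; after that the pull worked: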
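Deleting the whole `.docker/config.json` also throws away the logins for every other registry, and the `auth` value in it is only the Base64-encoded `username:password`, not an encrypted secret. A gentler alternative, sketched below using Docker's standard `docker logout` and `docker login` commands, replaces the credentials for one registry only. `run` and `relogin` are hypothetical helpers, and `DRY_RUN=1` only prints the commands:

```shell
# run (hypothetical helper): execute a command, or just print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

# relogin (hypothetical helper): refresh the stored credentials for a single
# registry instead of deleting ~/.docker/config.json entirely.
relogin() {
  registry=$1
  run docker logout "$registry"   # removes only this registry's stored login
  run docker login "$registry"    # prompts for the new username and password
}
```

On k8s2 this would be `relogin hub1.lczy.com`, then entering an account that has access to the project.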

[root@k8s2 ~]# docker pull hub1.lczy.com/k8s/coredns:1.6.7
1.6.7: Pulling from k8s/coredns
Digest: sha256:695a5e109604331f843d2c435f488bf3f239a88aec49112d452c1cbf87e88405
Status: Downloaded newer image for hub1.lczy.com/k8s/coredns:1.6.7
hub1.lczy.com/k8s/coredns:1.6.7

Then pull the remaining images the same way:

docker pull hub1.lczy.com/k8s/kube-proxy:v1.18.0
docker pull hub1.lczy.com/k8s/kube-controller-manager:v1.18.0
docker pull hub1.lczy.com/k8s/kube-scheduler:v1.18.0
docker pull hub1.lczy.com/k8s/kube-apiserver:v1.18.0
docker pull hub1.lczy.com/k8s/flannel:v0.12.0-s390x
docker pull hub1.lczy.com/k8s/flannel:v0.12.0-ppc64le
docker pull hub1.lczy.com/k8s/flannel:v0.12.0-arm64
docker pull hub1.lczy.com/k8s/flannel:v0.12.0-arm
docker pull hub1.lczy.com/k8s/flannel:v0.12.0-amd64
docker pull hub1.lczy.com/k8s/pause:3.2
docker pull hub1.lczy.com/k8s/coredns:1.6.7
docker pull hub1.lczy.com/k8s/etcd:3.4.3-0
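The manual pulls above can be collapsed into a loop. This sketch assumes the same `hub1.lczy.com/k8s` project; `REGISTRY`, `IMAGES` and `pull_images` are illustrative names. It lists only the amd64 flannel image, since the other architecture variants are used only on nodes of that architecture:

```shell
# Illustrative settings: the private Harbor project and the images to pull.
REGISTRY=hub1.lczy.com/k8s
IMAGES="kube-proxy:v1.18.0 kube-controller-manager:v1.18.0 kube-scheduler:v1.18.0
kube-apiserver:v1.18.0 pause:3.2 coredns:1.6.7 etcd:3.4.3-0 flannel:v0.12.0-amd64"

# pull_images (hypothetical helper): pull every image in IMAGES from REGISTRY,
# stopping at the first failure. With DRY_RUN=1 it only prints the commands.
pull_images() {
  for img in $IMAGES; do
    if [ "${DRY_RUN:-0}" = 1 ]; then
      echo "docker pull $REGISTRY/$img"
    else
      docker pull "$REGISTRY/$img" || return 1
    fi
  done
}
```

Running `DRY_RUN=1 pull_images` first is a cheap way to check the list before touching the node.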

[root@k8s1 ~]# kubectl get pod --all-namespaces -o wide
NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
kube-system coredns-c45695cd8-98r7k 1/1 Running 0 49m 10.244.0.3 k8s1.dev.lczy.com <none> <none>
kube-system coredns-c45695cd8-d6knz 1/1 Running 0 49m 10.244.0.2 k8s1.dev.lczy.com <none> <none>
kube-system etcd-k8s1.dev.lczy.com 1/1 Running 0 50m 192.168.101.86 k8s1.dev.lczy.com <none> <none>
kube-system kube-apiserver-k8s1.dev.lczy.com 1/1 Running 0 50m 192.168.101.86 k8s1.dev.lczy.com <none> <none>
kube-system kube-controller-manager-k8s1.dev.lczy.com 1/1 Running 0 50m 192.168.101.86 k8s1.dev.lczy.com <none> <none>
kube-system kube-flannel-ds-amd64-n4g6d 1/1 Running 0 10m 192.168.101.86 k8s1.dev.lczy.com <none> <none>
kube-system kube-flannel-ds-amd64-xv4ms 1/1 Running 2 10m 192.168.100.17 k8s2.dev.lczy.com <none> <none>
kube-system kube-proxy-62v94 1/1 Running 0 24m 192.168.100.17 k8s2.dev.lczy.com <none> <none>
kube-system kube-proxy-fgckc 1/1 Running 0 49m 192.168.101.86 k8s1.dev.lczy.com <none> <none>
kube-system kube-scheduler-k8s1.dev.lczy.com 1/1 Running 0 50m 192.168.101.86 k8s1.dev.lczy.com <none> <none>

[root@k8s1 ~]# kubectl get nodes
NAME                STATUS   ROLES    AGE    VERSION
k8s1.dev.lczy.com   Ready    master   110m   v1.18.0
k8s2.dev.lczy.com   Ready    <none>   84m    v1.18.0

------END--------------

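Note 1:

The file https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml changes over time, and the image names it references differ from one download to the next. Consider keeping a backup copy, or replacing its images with names you can conveniently pull.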
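One way to follow that advice is to keep a dated copy of every manifest you download. A minimal sketch; `backup_manifest` is a hypothetical helper:

```shell
# backup_manifest (hypothetical helper): copy a downloaded manifest to
# <file>.YYYY-MM-DD and print the copy's name, so a later redeployment can
# reuse exactly the same image list even after the upstream file changes.
backup_manifest() {
  src=$1
  dest="$src.$(date +%Y-%m-%d)"
  cp "$src" "$dest" && echo "$dest"
}
```

For example, `backup_manifest kube-flannel.yml` run on 2021-04-27 would create and print `kube-flannel.yml.2021-04-27`.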

Example:

Downloaded on 2021-04-27:

[root@k8s1 ~]# cat kube-flannel.yml |grep image
image: hub1.lczy.com/k8s/flannel:v0.14.0-rc1
image: hub1.lczy.com/k8s/flannel:v0.14.0-rc1

Downloaded in 2020:

[root@k8s1 ~]# cat kube-flannel.yml |grep image
image: quay.io/coreos/flannel:v0.12.0-amd64
image: quay.io/coreos/flannel:v0.12.0-amd64
image: quay.io/coreos/flannel:v0.12.0-arm64
image: quay.io/coreos/flannel:v0.12.0-arm64
image: quay.io/coreos/flannel:v0.12.0-arm
image: quay.io/coreos/flannel:v0.12.0-arm
image: quay.io/coreos/flannel:v0.12.0-ppc64le
image: quay.io/coreos/flannel:v0.12.0-ppc64le
image: quay.io/coreos/flannel:v0.12.0-s390x
image: quay.io/coreos/flannel:v0.12.0-s390x

Only flannel:v0.14.0-rc1 (newer file) or flannel:v0.12.0-amd64 (older file) actually needs to be replaced.
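Because each image typically appears twice per DaemonSet in these manifests (once for the `install-cni` init container and once for the `kube-flannel` container), `grep image` shows duplicates. A sketch that prints each distinct image once, i.e. the list that actually has to be mirrored; `list_images` is a hypothetical name:

```shell
# list_images (hypothetical helper): print every distinct image referenced
# in a manifest. Handles both "image: x" and "- image: x" line forms.
list_images() {
  sed -n 's/^[[:space:]]*-\{0,1\}[[:space:]]*image:[[:space:]]*//p' "$1" | sort -u
}
```

Run against the 2021 file above, `list_images kube-flannel.yml` would print the single line `hub1.lczy.com/k8s/flannel:v0.14.0-rc1`.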

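Note 2: In my environment, the worker node did not pull the images on its own, while the master node did.

docker pull hub1.lczy.com/k8s/kube-proxy:v1.18.0
docker pull hub1.lczy.com/k8s/flannel:v0.12.0-amd64
docker pull hub1.lczy.com/k8s/pause:3.2

The worker node only needs the three images above.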
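To check which of those three images a worker node still lacks, compare them with what `docker images` reports. A sketch; `REQUIRED` and `missing_images` are hypothetical names, and the image list is the one from this note:

```shell
# The three images a worker node needs, per Note 2.
REQUIRED="hub1.lczy.com/k8s/kube-proxy:v1.18.0
hub1.lczy.com/k8s/flannel:v0.12.0-amd64
hub1.lczy.com/k8s/pause:3.2"

# missing_images (hypothetical helper): read "repository:tag" lines on stdin
# and print every required image that is not among them.
missing_images() {
  present=$(cat)
  for img in $REQUIRED; do
    printf '%s\n' "$present" | grep -qxF "$img" || echo "$img"
  done
}
```

On the node, `docker images --format '{{.Repository}}:{{.Tag}}' | missing_images` prints nothing once all three images are present.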