Tech: return of nn.LSTM

The return value of torch.nn.LSTM is: output, (h_n, c_n)

Outputs: output, (h_n, c_n)
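A minimal sketch of calling the module and unpacking its result. The sizes (input_size=8, hidden_size=16, a 5-step sequence with batch 3) are arbitrary choices for illustration, and the default batch_first=False layout is assumed:

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Single-layer, unidirectional LSTM; batch_first defaults to False,
# so the input is laid out as (seq_len, batch, input_size).
lstm = nn.LSTM(input_size=8, hidden_size=16)
x = torch.randn(5, 3, 8)  # seq_len=5, batch=3, input_size=8

result = lstm(x)
output, (h_n, c_n) = result  # a 2-tuple whose second item is itself a 2-tuple

print(output.shape)  # torch.Size([5, 3, 16])
print(h_n.shape)     # torch.Size([1, 3, 16])
print(c_n.shape)     # torch.Size([1, 3, 16])
```

Writing `output, _ = lstm(x)` or `_, (h_n, _) = lstm(x)` is a common way to keep only the part you need.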

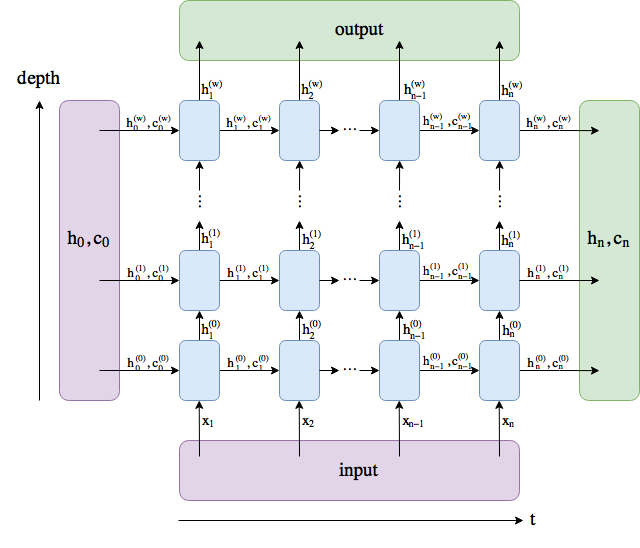

- output (seq_len, batch, hidden_size * num_directions): tensor containing the output features (h_t) from the last layer of the RNN, for each t. If a torch.nn.utils.rnn.PackedSequence has been given as the input, the output will also be a packed sequence.

- h_n (num_layers * num_directions, batch, hidden_size): tensor containing the hidden state for t=seq_len

- c_n (num_layers * num_directions, batch, hidden_size): tensor containing the cell state for t=seq_len
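The shapes above can be checked with a sketch of a 2-layer bidirectional LSTM. All sizes are arbitrary. The h_n.view(...) reshape follows the layer/direction split described in the PyTorch documentation, and the packed part assumes the batch is already sorted by decreasing length:

```python
import torch
import torch.nn as nn
from torch.nn.utils.rnn import pack_padded_sequence, pad_packed_sequence

torch.manual_seed(0)

seq_len, batch, input_size, hidden_size = 6, 4, 10, 20
num_layers, num_directions = 2, 2

lstm = nn.LSTM(input_size, hidden_size, num_layers=num_layers, bidirectional=True)
x = torch.randn(seq_len, batch, input_size)
output, (h_n, c_n) = lstm(x)

print(output.shape)  # (seq_len, batch, hidden_size * num_directions) -> torch.Size([6, 4, 40])
print(h_n.shape)     # (num_layers * num_directions, batch, hidden_size) -> torch.Size([4, 4, 20])

# Separate layers and directions: index [layer, direction].
h_split = h_n.view(num_layers, num_directions, batch, hidden_size)
last_layer_forward = h_split[-1, 0]   # forward pass, finished at t = seq_len
last_layer_backward = h_split[-1, 1]  # backward pass, finished at t = 1

# Packed input: sequences of different lengths (sorted in decreasing order).
lengths = torch.tensor([6, 5, 3, 2])
packed = pack_padded_sequence(x, lengths)
packed_out, (h_p, c_p) = lstm(packed)
print(type(packed_out).__name__)  # PackedSequence

# Convert back to a padded tensor plus the original lengths.
padded_out, out_lengths = pad_packed_sequence(packed_out)
print(padded_out.shape)  # torch.Size([6, 4, 40])
```

Note that the forward half of output at the last step and the backward half at the first step are the last layer's entries in h_n.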

output contains the hidden states h_t of the last layer for every timestep ("last" depth-wise, not time-wise). (h_n, c_n) contain the hidden and cell states of every layer after the last timestep, t = seq_len, so you could, for example, feed them into another LSTM as its initial state.
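A small sketch of both points, assuming a unidirectional 2-layer LSTM. The encoder/decoder names and sizes are hypothetical, chosen only to show that (h_n, c_n) has the layout nn.LSTM accepts as (h_0, c_0):

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

encoder = nn.LSTM(input_size=8, hidden_size=16, num_layers=2)  # hypothetical
decoder = nn.LSTM(input_size=8, hidden_size=16, num_layers=2)  # hypothetical

src = torch.randn(7, 3, 8)  # (seq_len, batch, input_size)
output, (h_n, c_n) = encoder(src)

# output: top layer only, every timestep -> (7, 3, 16)
# h_n:    every layer, final timestep only -> (2, 3, 16)
# They coincide at the top layer's final step.
print(torch.allclose(output[-1], h_n[-1]))  # True

# (h_n, c_n) is (num_layers, batch, hidden_size), the initial-state
# layout nn.LSTM expects, so it can seed the second LSTM directly.
tgt = torch.randn(4, 3, 8)
dec_out, (dec_h, dec_c) = decoder(tgt, (h_n, c_n))
print(dec_out.shape)  # torch.Size([4, 3, 16])
```

This only works when both modules agree on num_layers, bidirectional and hidden_size; otherwise the state must be projected or reshaped first.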

In a VAE, the latent variables are computed from h_n, while attention is computed from output.
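A rough sketch of that split, under several assumptions not in the original: the Gaussian heads to_mu/to_logvar, the random query vector and the dot-product attention scoring are all hypothetical stand-ins for whatever a real model uses. It also uses batch_first=True, which changes the layout of output but not of h_n:

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)

hidden_size, latent_size = 16, 4
lstm = nn.LSTM(input_size=8, hidden_size=hidden_size, batch_first=True)
# Hypothetical VAE heads mapping the final hidden state to Gaussian parameters.
to_mu = nn.Linear(hidden_size, latent_size)
to_logvar = nn.Linear(hidden_size, latent_size)

x = torch.randn(3, 5, 8)        # (batch, seq_len, input_size) because batch_first=True
output, (h_n, c_n) = lstm(x)    # output: (3, 5, 16); h_n stays (1, 3, 16)

# VAE: summarize the whole sequence with the top layer's final hidden state.
h_last = h_n[-1]                                          # (batch, hidden_size)
mu, logvar = to_mu(h_last), to_logvar(h_last)
z = mu + torch.randn_like(mu) * torch.exp(0.5 * logvar)   # reparameterization trick

# Attention: score every timestep of output against a query vector.
query = torch.randn(3, hidden_size)                        # hypothetical query, e.g. a decoder state
scores = torch.bmm(output, query.unsqueeze(2)).squeeze(2)  # (batch, seq_len)
weights = F.softmax(scores, dim=1)
context = torch.bmm(weights.unsqueeze(1), output).squeeze(1)  # (batch, hidden_size)

print(z.shape, weights.shape, context.shape)
```

The design difference is the point: the latent code needs one vector per sequence, which h_n provides, whereas attention needs one vector per timestep to weight, which only output provides.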

浙公网安备 33010602011771号