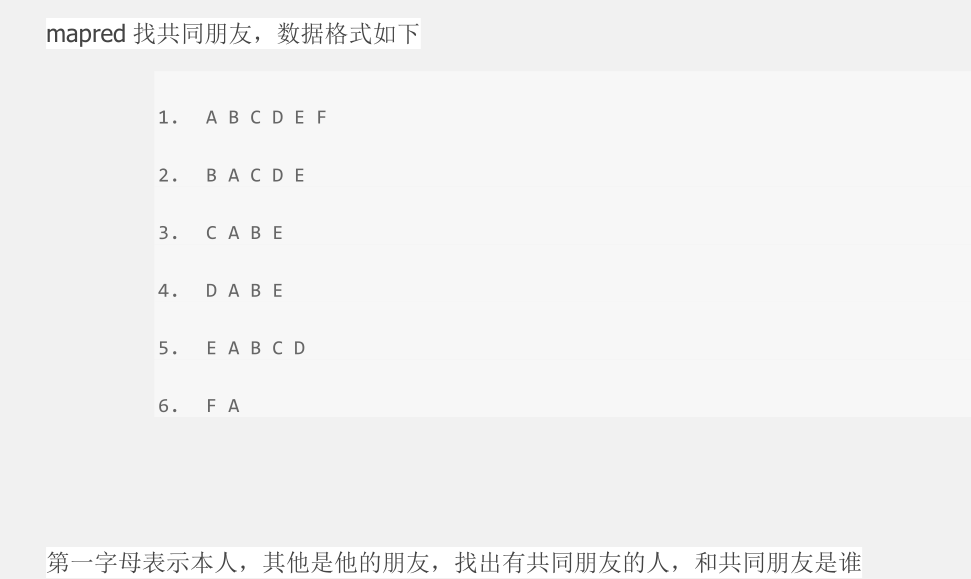

MapReduce实现共同朋友问题

答案:

package com.duking.mapreduce; import java.io.IOException; import java.util.Set; import java.util.StringTokenizer; import java.util.TreeSet; import org.apache.hadoop.conf.Configuration; import org.apache.hadoop.fs.Path; import org.apache.hadoop.io.Text; import org.apache.hadoop.mapreduce.Job; import org.apache.hadoop.mapreduce.Mapper; import org.apache.hadoop.mapreduce.Reducer; import org.apache.hadoop.mapreduce.lib.input.FileInputFormat; import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat; import org.apache.hadoop.util.GenericOptionsParser; public class FindFriends { /** * map方法 * @author duking * */ public static class Map extends Mapper<Object, Text, Text, Text> { /** * 实现map方法 */ public void map(Object key, Text value, Context context) throws IOException, InterruptedException { //将输入的每一行数据切分后存到persions中 StringTokenizer persions = new StringTokenizer(value.toString()); //定义一个Text 存放本人信息owner Text owner = new Text(); //定义一个Set集合,存放朋友信息 Set<String> set = new TreeSet<String>(); //将这一行的本人信息存入owner中 owner.set(persions.nextToken()); //将所有的朋友信息存放到Set集合中 while(persions.hasMoreTokens()){ set.add(persions.nextToken()); } //定义一个String数组存放朋友信息 String[] friends = new String[set.size()]; //将集合转换为数组,并将集合中的数据存放到friend friends = set.toArray(friends); //将朋友进行两两组合 for(int i=0;i<friends.length;i++){ for(int j=i+1;j<friends.length;j++){ String outputkey = friends[i]+friends[j]; context.write(new Text(outputkey), owner); } } } } /** * Reduce方法 * @author duking * */ public static class Reduce extends Reducer<Text, Text, Text, Text> { /** * 实现Reduce方法 */ public void reduce(Text key, Iterable<Text> values,Context context) throws IOException, InterruptedException { String commonfriends = ""; for (Text val : values){ if(commonfriends == ""){ commonfriends = val.toString(); }else{ commonfriends = commonfriends + ":" +val.toString(); } } context.write(key,new Text(commonfriends)); } } /** * main * @param args * @throws Exception */ public static void main(String[] args) throws Exception { Configuration conf = new Configuration(); conf.set("mapred.job.tracker", "192.168.60.129:9000"); //指定待运行参数的目录为输入输出目录 String[] otherArgs = new GenericOptionsParser(conf, args).getRemainingArgs(); /* 指定工程目录下的input output为输入输出目录 String[] ioArgs = new String[] {"input", "output" }; String[] otherArgs = new GenericOptionsParser(conf, ioArgs).getRemainingArgs(); */ if (otherArgs.length != 2) { //判断运行参数个数 System.err.println("Usage: Data Deduplication <in> <out>"); System.exit(2); } // set maprduce job name Job job = new Job(conf, "findfriends"); job.setJarByClass(FindFriends.class); // 设置map reduce处理类 job.setMapperClass(Map.class); job.setReducerClass(Reduce.class); // 设置输出类型 job.setOutputKeyClass(Text.class); job.setOutputValueClass(Text.class); //设置输入输出路径 FileInputFormat.addInputPath(job, new Path(otherArgs[0])); FileOutputFormat.setOutputPath(job, new Path(otherArgs[1])); System.exit(job.waitForCompletion(true) ? 0 : 1); } }

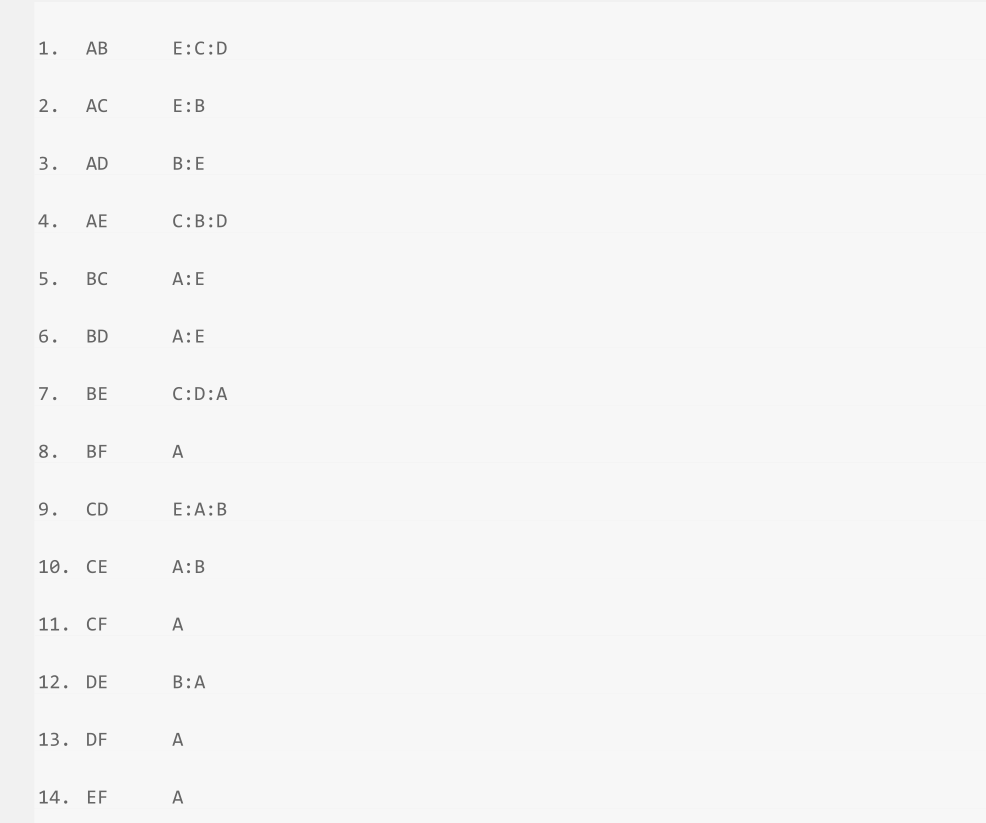

结果