Setting Up Hadoop in Pseudo-Distributed Mode

I. Install the JDK

Download the JDK: jdk-8u112-linux-i586.tar.gz

Extract the JDK: hadoop@ubuntu:/soft$ tar -zxvf jdk-8u112-linux-i586.tar.gz

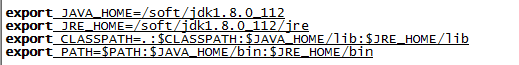

Configure the environment variables in /etc/profile
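The exact lines are not listed in this guide. As a minimal sketch, assuming the JDK was extracted to /soft/jdk1.8.0_112 (the directory shown in the prompts here), lines like the following could be appended to /etc/profile:

```shell
# Assumed additions to /etc/profile; the original guide does not show them.
# JAVA_HOME points at the directory created by extracting the JDK tarball.
export JAVA_HOME=/soft/jdk1.8.0_112
# Put the JDK's bin directory first so java and javac resolve to this JDK.
export PATH="$JAVA_HOME/bin:$PATH"
```

Adjust the path if the JDK was extracted somewhere else.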

Apply the configuration: hadoop@ubuntu:/soft/jdk1.8.0_112$ source /etc/profile

Verify the configuration: hadoop@ubuntu:/soft/jdk1.8.0_112$ java

Usage: java [-options] class [args...]

(to execute a class)

or java [-options] -jar jarfile [args...]

(to execute a jar file)

where options include:

-d32 use a 32-bit data model if available

-d64 use a 64-bit data model if available

-client to select the "client" VM

-server to select the "server" VM

-minimal to select the "minimal" VM

The default VM is client.

-cp <class search path of directories and zip/jar files>

-classpath <class search path of directories and zip/jar files>

A : separated list of directories, JAR archives,

and ZIP archives to search for class files.

-D<name>=<value>

set a system property

-verbose:[class|gc|jni]

enable verbose output

-version print product version and exit

-version:<value>

Warning: this feature is deprecated and will be removed

in a future release.

require the specified version to run

-showversion print product version and continue

-jre-restrict-search | -no-jre-restrict-search

Warning: this feature is deprecated and will be removed

in a future release.

include/exclude user private JREs in the version search

-? -help print this help message

-X print help on non-standard options

-ea[:<packagename>...|:<classname>]

-enableassertions[:<packagename>...|:<classname>]

enable assertions with specified granularity

-da[:<packagename>...|:<classname>]

-disableassertions[:<packagename>...|:<classname>]

disable assertions with specified granularity

-esa | -enablesystemassertions

enable system assertions

-dsa | -disablesystemassertions

disable system assertions

-agentlib:<libname>[=<options>]

load native agent library <libname>, e.g. -agentlib:hprof

see also, -agentlib:jdwp=help and -agentlib:hprof=help

-agentpath:<pathname>[=<options>]

load native agent library by full pathname

-javaagent:<jarpath>[=<options>]

load Java programming language agent, see java.lang.instrument

-splash:<imagepath>

show splash screen with specified image

See http://www.oracle.com/technetwork/java/javase/documentation/index.html for more details.

Verify the configuration: hadoop@ubuntu:/soft/jdk1.8.0_112$ javac

Usage: javac <options> <source files>

where possible options include:

-g Generate all debugging info

-g:none Generate no debugging info

-g:{lines,vars,source} Generate only some debugging info

-nowarn Generate no warnings

-verbose Output messages about what the compiler is doing

-deprecation Output source locations where deprecated APIs are used

-classpath <path> Specify where to find user class files and annotation processors

-cp <path> Specify where to find user class files and annotation processors

-sourcepath <path> Specify where to find input source files

-bootclasspath <path> Override location of bootstrap class files

-extdirs <dirs> Override location of installed extensions

-endorseddirs <dirs> Override location of endorsed standards path

-proc:{none,only} Control whether annotation processing and/or compilation is done.

-processor <class1>[,<class2>,<class3>...] Names of the annotation processors to run; bypasses default discovery process

-processorpath <path> Specify where to find annotation processors

-parameters Generate metadata for reflection on method parameters

-d <directory> Specify where to place generated class files

-s <directory> Specify where to place generated source files

-h <directory> Specify where to place generated native header files

-implicit:{none,class} Specify whether or not to generate class files for implicitly referenced files

-encoding <encoding> Specify character encoding used by source files

-source <release> Provide source compatibility with specified release

-target <release> Generate class files for specific VM version

-profile <profile> Check that API used is available in the specified profile

-version Version information

-help Print a synopsis of standard options

-Akey[=value] Options to pass to annotation processors

-X Print a synopsis of nonstandard options

-J<flag> Pass <flag> directly to the runtime system

-Werror Terminate compilation if warnings occur

@<filename> Read options and filenames from file

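II. Install SSH

Download and install: hadoop@ubuntu:/soft/jdk1.8.0_112$ sudo apt-get install ssh

Configure passwordless login to the local machine

Generate a key: hadoop@ubuntu:/soft/jdk1.8.0_112$ ssh-keygen -t rsa -P "" (press Enter to accept the default key file)

Append the public key to the authorized keys: hadoop@ubuntu:/soft/jdk1.8.0_112$ cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

Verify SSH

Run ssh localhost; if it logs in without asking for a password, SSH is set up correctly.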
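If ssh localhost still asks for a password, one common cause is overly permissive modes on ~/.ssh or authorized_keys, which sshd rejects when StrictModes is enabled. The sketch below is one possible way to tighten the permissions and to run the check non-interactively. The function names fix_ssh_perms and check_passwordless_ssh are illustrative and not part of the original guide.

```shell
# Restrict an SSH directory and its authorized_keys file to the owner.
# Argument: the SSH directory (defaults to ~/.ssh).
fix_ssh_perms() {
    ssh_dir="${1:-$HOME/.ssh}"
    chmod 700 "$ssh_dir" || return 1
    chmod 600 "$ssh_dir/authorized_keys" || return 1
}

# Non-interactive check: with BatchMode=yes ssh fails instead of
# prompting for a password, so the exit status reflects key-based login.
check_passwordless_ssh() {
    ssh -o BatchMode=yes -o ConnectTimeout=5 localhost true
}
```

A possible invocation is fix_ssh_perms && check_passwordless_ssh && echo "passwordless SSH works". Because BatchMode also suppresses the host-key confirmation prompt, run ssh localhost interactively once beforehand to accept the host key.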

III. Install and configure Hadoop

1 Download Hadoop: http://mirrors.tuna.tsinghua.edu.cn/apache/hadoop/common/hadoop-2.7.3/hadoop-2.7.3.tar.gz

2 Extract Hadoop: hadoop@ubuntu:/soft/hadoop$ tar -zxvf hadoop-2.7.3.tar.gz

3 Edit hadoop-env.sh and add the JAVA_HOME path

hadoop@ubuntu:/soft/hadoop/hadoop-2.7.3/etc/hadoop$ vim hadoop-env.sh

Add: export JAVA_HOME=/soft/jdk1.8.0_112

4 Edit core-site.xml (fs.default.name is the older, deprecated name of fs.defaultFS; Hadoop 2.x still accepts it)

<configuration>

<property>

<name>fs.default.name</name>

<value>hdfs://localhost:9000</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/soft/hadoop/hdfs-dir</value>

</property>

</configuration>

5 Edit hdfs-site.xml

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

</configuration>

6 Create mapred-site.xml

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>
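Hadoop 2.7.3 ships a mapred-site.xml.template in etc/hadoop rather than a ready-made mapred-site.xml, so the new file can be started from that template. A minimal sketch, assuming it is run from the Hadoop installation directory; the function name create_mapred_site is illustrative:

```shell
# Create mapred-site.xml from the bundled template without
# overwriting a file that already exists.
# Argument: the configuration directory (defaults to etc/hadoop).
create_mapred_site() {
    conf_dir="${1:-etc/hadoop}"
    if [ -f "$conf_dir/mapred-site.xml" ]; then
        echo "mapred-site.xml already exists, not overwriting"
        return 0
    fi
    cp "$conf_dir/mapred-site.xml.template" "$conf_dir/mapred-site.xml"
}
```

After calling create_mapred_site, edit the new file and place the property inside its configuration element.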

7 Configure yarn-site.xml

<configuration>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>

<value>org.apache.hadoop.mapred.ShuffleHandler</value>

</property>

</configuration>

8 Format the HDFS file system

hadoop@ubuntu:/soft/hadoop/hadoop-2.7.3$ bin/hadoop namenode -format

9 Start the daemons

hadoop@ubuntu:/soft/hadoop/hadoop-2.7.3$ sbin/start-all.sh

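10 Check that the processes started (a working setup also lists a NameNode process, which is missing from the output below)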

hadoop@ubuntu:/soft/hadoop/hadoop-2.7.3$ jps

6373 SecondaryNameNode

6859 Jps

6523 ResourceManager

6766 NodeManager

6206 DataNode
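Reading the jps listing by eye makes it easy to overlook a daemon that failed to start. The sketch below is one possible way to automate the check. The function name missing_daemons and the list of five expected daemons are assumptions for a pseudo-distributed Hadoop 2.x setup started with start-all.sh.

```shell
# Read `jps` output on stdin and print each expected Hadoop daemon
# that is not listed. Returns non-zero if any daemon is missing.
missing_daemons() {
    jps_output=$(cat)
    rc=0
    for daemon in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
        # Compare against the whole second field so that
        # "SecondaryNameNode" is not mistaken for "NameNode".
        if ! printf '%s\n' "$jps_output" |
            awk -v d="$daemon" '$2 == d { found = 1 } END { exit !found }'; then
            echo "$daemon"
            rc=1
        fi
    done
    return $rc
}
```

Typical usage is jps | missing_daemons. Applied to the listing above, it prints NameNode, because that listing has no NameNode line.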

IV. Verify the installation

Open a browser:

- Go to http://localhost:8088 to reach the ResourceManager management page
- Go to http://localhost:50070 to reach the HDFS page

V. Test run

1 Create the HDFS directories

$ bin/hadoop dfs -mkdir /user

$ bin/hadoop dfs -mkdir /user/hadoop

$ bin/hadoop dfs -mkdir /user/hadoop/input

2 Load data into the HDFS input directory

$ bin/hadoop dfs -put /etc/protocols /user/hadoop/input

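3 Run the Hadoop WordCount application (word frequency count)

# If an output directory from a previous test run exists, the job will likely fail, because Hadoop refuses to overwrite existing output as a safety measure. Delete the old output directory manually first.

$ bin/hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.3.jar wordcount input output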
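Deleting the old output directory before a rerun can be scripted. The following is a rough sketch, assuming it is run from the Hadoop installation directory. The function name remove_old_output and the HDFS_CMD override variable are hypothetical helpers, not part of Hadoop.

```shell
# Delete a previous job's output directory from HDFS if it exists.
# Argument: the HDFS directory (defaults to "output").
# HDFS_CMD (hypothetical override) selects the hdfs executable.
remove_old_output() {
    out_dir="${1:-output}"
    hdfs_cmd="${HDFS_CMD:-bin/hdfs}"
    # `dfs -test -d` exits 0 only when the path is an existing directory.
    if "$hdfs_cmd" dfs -test -d "$out_dir"; then
        "$hdfs_cmd" dfs -rm -r "$out_dir"
    fi
}
```

Calling remove_old_output before the job leaves HDFS untouched when no output directory exists, so it is safe on a first run as well.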

4 View the results

hadoop@ubuntu:/soft/hadoop/hadoop-2.7.3$ bin/hadoop dfs -cat output/part-r-00000