Mounting Ceph RBD in Kubernetes

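If the cluster was installed with kubeadm, you must install rbd-provisioner. The kube-controller-manager container, which runs under Docker by default, does not include the ceph commands, so it cannot provision RBD volumes on its own. For background, see https://github.com/kubernetes-retired/external-storage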

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: rbd-provisioner
      namespace: kube-system
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: rbd-provisioner
      strategy:
        type: Recreate
      template:
        metadata:
          labels:
            app: rbd-provisioner
        spec:
          containers:
          - name: rbd-provisioner
            image: "quay.io/external_storage/rbd-provisioner:latest"
            env:
            - name: PROVISIONER_NAME
              value: ceph.com/rbd
          serviceAccountName: persistent-volume-binder

Apply the manifest above to the cluster.
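As a rough sketch of that step, the helper below applies a manifest file and then waits for the rbd-provisioner Deployment to become ready. The function name apply_and_wait and the file name rbd-provisioner.yaml are made up for illustration; the original post does not say which file the manifest was saved to.

```shell
# Hypothetical helper: apply a manifest, then wait for the provisioner rollout.
# Assumes kubectl is already configured to talk to the target cluster.
apply_and_wait() {
  manifest="$1"   # path to the saved manifest (assumed name, see below)
  kubectl apply -f "$manifest" &&
    kubectl -n kube-system rollout status deployment/rbd-provisioner --timeout=120s
}

# Example call (file name is an assumption):
#   apply_and_wait rbd-provisioner.yaml
```

If the rollout does not finish within the timeout, kubectl -n kube-system logs deployment/rbd-provisioner is usually the next place to look.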

    apiVersion: v1
    kind: Namespace
    metadata:
      name: ceph
    ---
    apiVersion: v1
    kind: Secret
    metadata:
      name: ceph-storageclass-secret
      namespace: ceph
    data:
      # Output of: ceph auth get-key client.admin | base64
      # This is the Ceph client key; Kubernetes uses it to authenticate to Ceph and create RBD images.
      key: QVFCZ3pjNWh1RHVOT2hBQWVDa2xxTWtlQ3g2bGtJdU5uNFpWOVE9PQ==
    type: kubernetes.io/rbd
    ---
    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: ceph
      namespace: ceph  # StorageClass is cluster-scoped, so this field has no effect
      annotations:
        # Make this the default StorageClass so PVCs do not need to name one explicitly
        storageclass.kubernetes.io/is-default-class: "true"
    provisioner: ceph.com/rbd
    parameters:
      monitors: 192.168.3.80
      adminId: admin
      adminSecretName: ceph-storageclass-secret
      adminSecretNamespace: ceph
      pool: k8s
      userId: admin
      userSecretName: ceph-storageclass-secret
      userSecretNamespace: ceph
      imageFormat: "2"
      imageFeatures: "layering"

Apply the manifest above to the cluster.
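The key field of the Secret holds the base64-encoded Ceph admin key. A minimal sketch of producing that value is shown here; encode_key is a hypothetical helper name, and it assumes the ceph CLI and the client.admin keyring are available on the machine where it runs.

```shell
# Hypothetical helper: base64-encode a Ceph key for the Secret's data.key field.
encode_key() {
  # printf '%s' avoids appending a newline to the key before encoding;
  # tr removes line wraps that some base64 implementations insert.
  printf '%s' "$1" | base64 | tr -d '\n'
}

# On a Ceph admin node (assumption: ceph CLI is installed and authorized):
#   encode_key "$(ceph auth get-key client.admin)"
```

Passing the key through command substitution strips any trailing newline, so the encoded value matches the raw key exactly.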

    kind: PersistentVolumeClaim
    apiVersion: v1
    metadata:
      name: ceph-pvc-test2
      namespace: default
    spec:
      accessModes:
      - ReadWriteOnce
      resources:
        requests:
          storage: 100Gi

Run kubectl get pvc to check the PVC and whether a volume was provisioned for it automatically.
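For a quicker check than reading the full table, the sketch below prints only the phase of one PVC (Pending, Bound, or Lost). The function name pvc_phase is made up for illustration; the PVC name and namespace come from the manifest above.

```shell
# Hypothetical helper: print the phase of a PVC.
# $1 = PVC name, $2 = namespace (defaults to "default").
pvc_phase() {
  kubectl get pvc "$1" -n "${2:-default}" -o jsonpath='{.status.phase}'
}

# Example calls:
#   pvc_phase ceph-pvc-test2
#   kubectl describe pvc ceph-pvc-test2 -n default   # events are listed at the bottom
```

When the phase stays Pending, the Events section of kubectl describe pvc is where provisioning messages show up.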

Pitfall:

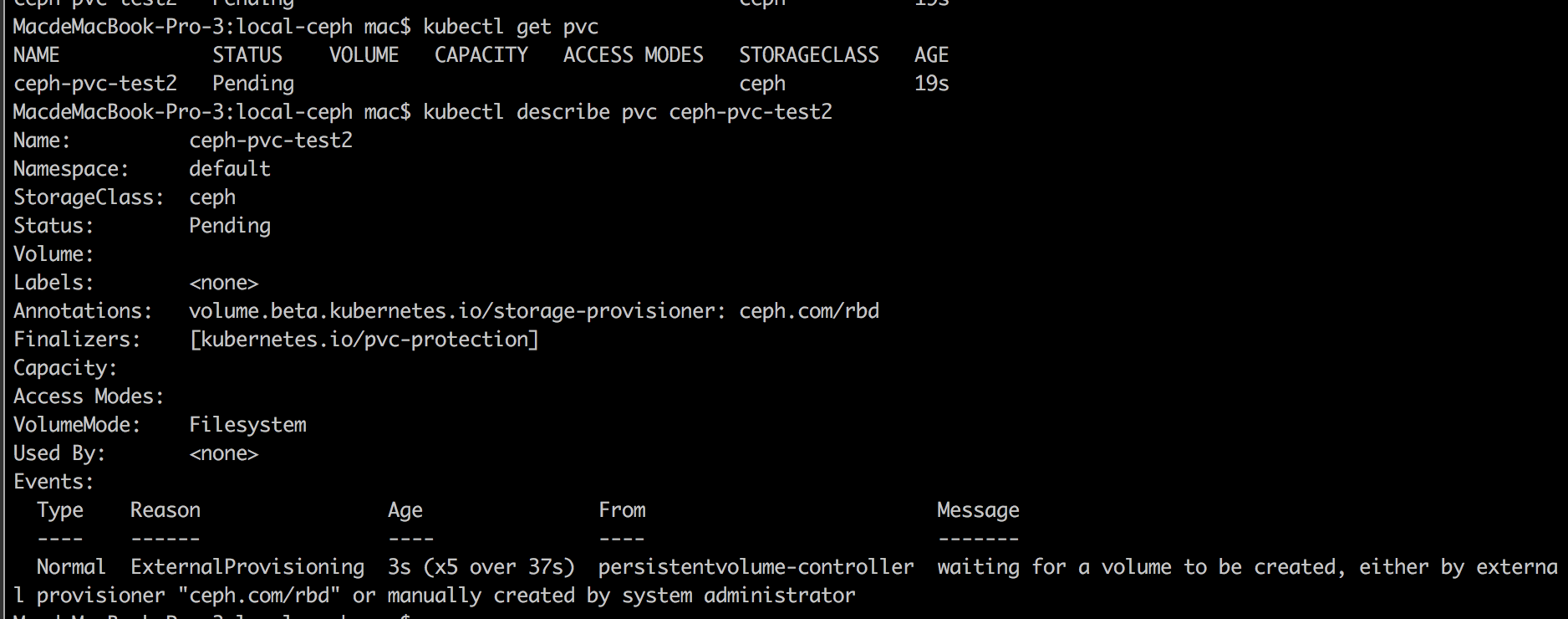

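Right after rbd-provisioner is installed for the first time, creating a PVC on its own leaves it stuck in Pending, with this event:

    Normal ExternalProvisioning 3s (x5 over 37s) persistentvolume-controller waiting for a volume to be created, either by external provisioner "ceph.com/rbd" or manually created by system administrator

Some tutorials online say to add the flag --feature-gates=RemoveSelfLink=false to the Kubernetes API server and restart it, but that alone made no difference. I later found that, in addition to the flag, a pod has to reference the PVC immediately after the PVC is defined; only then is the volume created. I do not know why. It has to be written like this: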

    kind: PersistentVolumeClaim
    apiVersion: v1
    metadata:
      name: ceph-pvc-test2
      namespace: default
    spec:
      accessModes:
      - ReadWriteOnce
      resources:
        requests:
          storage: 100Gi
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: test
    spec:
      selector:
        matchLabels:
          app: test
      replicas: 1
      template:
        metadata:
          labels:
            app: test
        spec:
          containers:
          - name: test
            image: reg.xthklocal.cn/xthk-library/basic-config-center-bms:1.1.0.2
            ports:
            - containerPort: 80
              name: http
              protocol: TCP
            volumeMounts:
            - name: mysql-data
              mountPath: /var/lib/mysql
          volumes:
          - name: mysql-data
            persistentVolumeClaim:
              claimName: ceph-pvc-test2

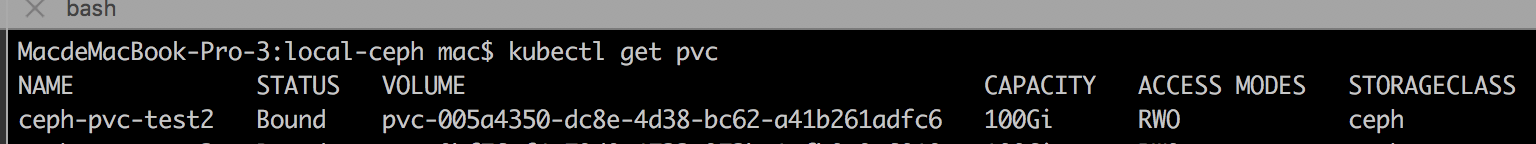

Check the PVC status again:

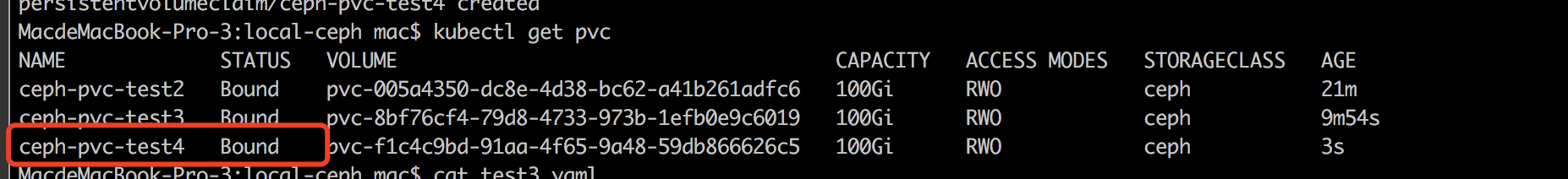

The volume has now been provisioned successfully. Next, let's try once more to create only a PVC:

    kind: PersistentVolumeClaim
    apiVersion: v1
    metadata:
      name: ceph-pvc-test4
      namespace: default
    spec:
      accessModes:
      - ReadWriteOnce
      resources:
        requests:
          storage: 100Gi

Check the status:

Since it works now, it is probably best to leave it alone.
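For the --feature-gates=RemoveSelfLink=false flag mentioned in the pitfall, here is a small sketch for checking whether it is actually present in the API server manifest. It assumes a kubeadm cluster, where the kube-apiserver static pod manifest normally lives at /etc/kubernetes/manifests/kube-apiserver.yaml; the function name has_selflink_gate is made up for illustration.

```shell
# Hypothetical helper: succeed if the API server manifest sets RemoveSelfLink=false.
# $1 = manifest path (defaults to the usual kubeadm location, an assumption here).
has_selflink_gate() {
  grep -q -e '--feature-gates=.*RemoveSelfLink=false' \
    "${1:-/etc/kubernetes/manifests/kube-apiserver.yaml}"
}

# Example call on a control-plane node:
#   has_selflink_gate && echo "flag is set" || echo "flag is missing"
```

On kubeadm clusters the kubelet recreates the kube-apiserver pod when this manifest file changes, so editing the file is what triggers the restart.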

posted on 2022-01-04 20:02 it_man_xiangge