Kubernetes (k8s) volume data management

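Files on a container's disk are ephemeral, which causes two problems. First, when a container crashes, the kubelet restarts it, but the data is lost together with the old container. Second, containers running together in the same Pod often need to share data. Kubernetes Volumes solve both problems.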

This article covers four kinds of Kubernetes volume:

- emptyDir

- hostPath

- NFS

- pv/pvc

https://www.kubernetes.org.cn/kubernetes-volumes
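To make the difference between the four kinds concrete, here is a minimal Python sketch that builds one entry of a Pod's `spec.volumes` list for each kind, as a plain dict. The field names (`emptyDir`, `hostPath.path`, `nfs.server`/`nfs.path`, `persistentVolumeClaim.claimName`) are the ones used in the manifests below; the helper `volume_entry` and its `kind` strings are hypothetical, chosen only for this illustration.

```python
import json


def volume_entry(name, kind, **opts):
    """Build one item of a Pod's spec.volumes list as a plain dict.

    Hypothetical helper for illustration; `kind` is one of
    "emptyDir", "hostPath", "nfs" or "pvc".
    """
    if kind == "emptyDir":
        source = {"emptyDir": {}}                      # scratch space tied to the Pod
    elif kind == "hostPath":
        source = {"hostPath": {"path": opts["path"]}}  # a directory on the node
    elif kind == "nfs":
        source = {"nfs": {"server": opts["server"], "path": opts["path"]}}
    elif kind == "pvc":
        source = {"persistentVolumeClaim": {"claimName": opts["claim_name"]}}
    else:
        raise ValueError(f"unsupported volume kind: {kind}")
    return {"name": name, **source}


if __name__ == "__main__":
    # The four variants used in this article, all named "du" as in the manifests.
    print(json.dumps(volume_entry("du", "emptyDir")))
    print(json.dumps(volume_entry("du", "hostPath", path="/tmp")))
    print(json.dumps(volume_entry("du", "nfs", server="192.168.137.3", path="/tmp")))
    print(json.dumps(volume_entry("du", "pvc", claim_name="mypvc1")))
```

Only the volume source changes between the four kinds; the container side (`volumeMounts`) stays the same in every example below.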

emptyDir

Step 1: write the YAML file

╭─root@node1 ~

╰─➤ vim nginx-empty.yml

apiVersion: v1
kind: Pod
metadata:
  name: nginx
spec:
  containers:
  - name: nginx
    image: nginx
    imagePullPolicy: IfNotPresent
    volumeMounts:
    - name: du    # must match the volume name below
      mountPath: /usr/share/nginx/html
  volumes:
  - name: du      # must match the volumeMounts name above
    emptyDir: {}
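The comments on the two `du` lines mark a rule: every `volumeMounts[].name` must refer to an entry in `spec.volumes`, otherwise the API server rejects the Pod. As an illustration, here is a small Python check over the same manifest written as a dict; `unmatched_mounts` is a hypothetical helper, not part of kubectl.

```python
def unmatched_mounts(pod):
    """Return (container, mount name) pairs that have no matching spec.volumes entry."""
    spec = pod["spec"]
    declared = {v["name"] for v in spec.get("volumes", [])}
    missing = []
    for container in spec["containers"]:
        for mount in container.get("volumeMounts", []):
            if mount["name"] not in declared:
                missing.append((container["name"], mount["name"]))
    return missing


# The emptyDir Pod from Step 1, written as a Python dict.
nginx_empty = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "nginx"},
    "spec": {
        "containers": [{
            "name": "nginx",
            "image": "nginx",
            "imagePullPolicy": "IfNotPresent",
            "volumeMounts": [{"name": "du", "mountPath": "/usr/share/nginx/html"}],
        }],
        "volumes": [{"name": "du", "emptyDir": {}}],
    },
}

if __name__ == "__main__":
    print(unmatched_mounts(nginx_empty))  # []
```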

Step 2: apply the YAML file

╭─root@node1 ~

╰─➤ kubectl apply -f nginx-empty.yml

Step 3: check the Pod

╭─root@node1 ~

╰─➤ kubectl get pod -o wide

NAME    READY   STATUS    RESTARTS   AGE     IP            NODE    NOMINATED NODE   READINESS GATES
nginx   1/1     Running   0          7m18s   10.244.2.14   node3   <none>           <none>

Step 4: on node3, inspect the container's mounts (run docker ps first to find the nginx container's ID)

╭─root@node3 ~

╰─➤ docker inspect 9c3ed074fb29 | grep "Mounts" -A 8

"Mounts": [
    {
        "Type": "bind",
        "Source": "/var/lib/kubelet/pods/2ab6183c-eddd-44eb-9e62-ded5106d1d1a/volumes/kubernetes.io~empty-dir/du",
        "Destination": "/usr/share/nginx/html",
        "Mode": "Z",
        "RW": true,
        "Propagation": "rprivate"
    },
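The `Source` field shows where the kubelet keeps an emptyDir on the node: under the Pod's UID, then an escaped plugin name, then the volume name. A minimal sketch that rebuilds that path, assuming the kubelet's default root directory `/var/lib/kubelet` (it can be changed with the kubelet's `--root-dir` flag); `empty_dir_host_path` is a hypothetical helper.

```python
from pathlib import PurePosixPath


def empty_dir_host_path(pod_uid, volume_name, kubelet_root="/var/lib/kubelet"):
    """Host-side directory backing an emptyDir volume (default kubelet layout assumed)."""
    # The plugin name "kubernetes.io/empty-dir" is stored with "/" escaped as "~".
    plugin_dir = "kubernetes.io/empty-dir".replace("/", "~")
    return str(PurePosixPath(kubelet_root, "pods", pod_uid, "volumes", plugin_dir, volume_name))


if __name__ == "__main__":
    print(empty_dir_host_path("2ab6183c-eddd-44eb-9e62-ded5106d1d1a", "du"))
```

The printed path matches the `Source` above. The Pod UID itself can be read with `kubectl get pod nginx -o jsonpath='{.metadata.uid}'`.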

Step 5: write content into the emptyDir directory on node3

╭─root@node3 ~

╰─➤ cd /var/lib/kubelet/pods/2ab6183c-eddd-44eb-9e62-ded5106d1d1a/volumes/kubernetes.io~empty-dir/du

╭─root@node3 /var/lib/kubelet/pods/2ab6183c-eddd-44eb-9e62-ded5106d1d1a/volumes/kubernetes.io~empty-dir/du

╰─➤ ls

╭─root@node3 /var/lib/kubelet/pods/2ab6183c-eddd-44eb-9e62-ded5106d1d1a/volumes/kubernetes.io~empty-dir/du

╰─➤ echo "empty test" >> index.html

Step 6: access the page

╭─root@node1 ~

╰─➤ curl 10.244.2.14

empty test

Step 7: stop the container

╭─root@node3 ~

╰─➤ docker stop 9c3ed074fb29

9c3ed074fb29

Step 8: check the new container the kubelet started

╭─root@node3 ~

╰─➤ docker ps

CONTAINER ID   IMAGE          COMMAND                  CREATED              STATUS              PORTS   NAMES
14ca410ad737   5a3221f0137b   "nginx -g 'daemon of…"   About a minute ago   Up About a minute           k8s_nginx_nginx_default_2ab6183c-eddd-44eb-9e62-ded5106d1d1a_1

Step 9: check the Pod (RESTARTS is now 1) and access it again

╭─root@node1 ~

╰─➤ kubectl get pod -o wide

NAME    READY   STATUS    RESTARTS   AGE   IP            NODE    NOMINATED NODE   READINESS GATES
nginx   1/1     Running   1          40m   10.244.2.14   node3   <none>           <none>

╭─root@node1 ~

╰─➤ curl 10.244.2.14

empty test

Step 10: delete the Pod

╭─root@node1 ~

╰─➤ kubectl delete pod nginx

pod "nginx" deleted

Step 11: check the emptyDir directory on node3

╭─root@node3 ~

╰─➤ ls /var/lib/kubelet/pods/2ab6183c-eddd-44eb-9e62-ded5106d1d1a/volumes/kubernetes.io~empty-dir/du

ls: cannot access /var/lib/kubelet/pods/2ab6183c-eddd-44eb-9e62-ded5106d1d1a/volumes/kubernetes.io~empty-dir/du: No such file or directory

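emptyDir experiment summary

- The file written on the host was served immediately, because the host directory is bind-mounted into the container (same data, no copying).

- After the original container was stopped, the kubelet created a new container in the same Pod.

- Access still succeeded, so the data was not lost when the container died.

- An emptyDir lives exactly as long as its Pod: deleting the Pod deletes the directory.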
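The lifetimes observed in the stop, restart and delete steps above can be summarized as a toy Python model. This is only an illustration of the behaviour seen in the experiment, not how the kubelet is implemented; the class `ToyPod` and its fields are hypothetical.

```python
class ToyPod:
    """Toy model of emptyDir lifetime versus container lifetime (illustrative only)."""

    def __init__(self):
        self.empty_dir = {}     # emptyDir contents: live as long as the Pod
        self.container_fs = {}  # container's own writable layer: lost on restart
        self.restarts = 0
        self.deleted = False

    def restart_container(self):
        # A replacement container starts with a fresh writable layer,
        # but the Pod, and therefore its emptyDir, is unchanged.
        self.container_fs = {}
        self.restarts += 1

    def delete(self):
        # Deleting the Pod removes its emptyDir as well.
        self.empty_dir = None
        self.deleted = True


if __name__ == "__main__":
    pod = ToyPod()
    pod.empty_dir["index.html"] = "empty test"
    pod.container_fs["scratch.txt"] = "lost on restart"
    pod.restart_container()
    print(pod.empty_dir, pod.container_fs, pod.restarts)  # {'index.html': 'empty test'} {} 1
    pod.delete()
    print(pod.empty_dir)  # None
```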

hostPath

The effect is roughly equivalent to running: docker run -v /tmp:/usr/share/nginx/html nginx

Step 1: write the YAML file

apiVersion: v1
kind: Pod
metadata:
  name: nginx2
spec:
  containers:
  - name: nginx
    image: nginx
    imagePullPolicy: IfNotPresent
    volumeMounts:
    - name: du    # must match the volume name below
      mountPath: /usr/share/nginx/html
  volumes:
  - name: du      # must match the volumeMounts name above
    hostPath:
      path: /tmp
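The comparison with `docker run -v` can be made concrete with a small Python sketch that turns a Pod's hostPath volumes and mounts into `-v host:container` arguments. It is illustrative only: the kubelet does not literally call `docker run`, and `docker_volume_args` is a hypothetical helper that ignores options such as `subPath`.

```python
def docker_volume_args(pod):
    """Translate a Pod's hostPath mounts into docker run -v arguments (illustrative)."""
    spec = pod["spec"]
    host_paths = {v["name"]: v["hostPath"]["path"]
                  for v in spec.get("volumes", []) if "hostPath" in v}
    args = []
    for container in spec["containers"]:
        for mount in container.get("volumeMounts", []):
            host = host_paths.get(mount["name"])
            if host is None:
                continue  # not backed by a hostPath volume
            bind = f"{host}:{mount['mountPath']}"
            if mount.get("readOnly"):
                bind += ":ro"
            args += ["-v", bind]
    return args


# The spec of the hostPath Pod above, written as a Python dict.
hostpath_pod = {
    "spec": {
        "containers": [{
            "name": "nginx",
            "image": "nginx",
            "volumeMounts": [{"name": "du", "mountPath": "/usr/share/nginx/html"}],
        }],
        "volumes": [{"name": "du", "hostPath": {"path": "/tmp"}}],
    },
}

if __name__ == "__main__":
    print(" ".join(["docker", "run", *docker_volume_args(hostpath_pod), "nginx"]))
```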

Step 2: apply the YAML file

╭─root@node1 ~

╰─➤ kubectl apply -f nginx-hostP.yml

pod/nginx2 created

Step 3: check the Pod

╭─root@node1 ~

╰─➤ kubectl get pod -o wide

NAME     READY   STATUS    RESTARTS   AGE   IP            NODE    NOMINATED NODE   READINESS GATES
nginx2   1/1     Running   0          55s   10.244.2.15   node3   <none>           <none>

Step 4: on the node running the Pod (node3), write a test file

╭─root@node3 ~

╰─➤ echo "hostPath test " >> /tmp/index.html

Step 5: access the page

╭─root@node1 ~

╰─➤ curl 10.244.2.15

hostPath test

Step 6: delete the Pod

╭─root@node1 ~

╰─➤ kubectl delete -f nginx-hostP.yml

pod "nginx2" deleted

Step 7: check the test file

╭─root@node3 ~

╰─➤ cat /tmp/index.html

hostPath test

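hostPath experiment summary

- A hostPath volume mounts a file or directory from the host node's filesystem into the Pod.

- Deleting the Pod does not remove the data: /tmp/index.html was still on node3 afterwards.

- Note: Pods created from the same template can behave differently on different nodes, because the mounted directory belongs to whichever node the Pod is scheduled on, and its contents may differ from node to node.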

NFS

Step 1: deploy NFS (install nfs-utils on every node; node1 also acts as the NFS server)

╭─root@node1 ~

╰─➤ yum install nfs-utils rpcbind -y

╭─root@node1 ~

╰─➤ cat /etc/exports

/tmp *(rw)

╭─root@node1 ~

╰─➤ chown -R nfsnobody: /tmp

╭─root@node1 ~

╰─➤ systemctl restart nfs rpcbind

----------------------------------------------

╭─root@node2 ~

╰─➤ yum install nfs-utils -y

----------------------------------------------

╭─root@node3 ~

╰─➤ yum install nfs-utils -y
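The export line `/tmp *(rw)` means: export `/tmp` to any client (`*`) with the option `rw`. Because NFS squashes root by default (`root_squash`), root on the client nodes is mapped to the anonymous user (`nfsnobody` on CentOS 7), which is presumably why `/tmp` is chowned to `nfsnobody` above. A minimal Python sketch that splits such a line into its parts; `parse_exports_line` is hypothetical and ignores comments, quoted paths, line continuations and the default options exportfs adds.

```python
def parse_exports_line(line):
    """Split a simple /etc/exports line into (path, [(client, [options])])."""
    path, *clients = line.split()
    parsed = []
    for client in clients:
        host, _, opts = client.partition("(")
        options = opts.rstrip(")").split(",") if opts else []
        parsed.append((host, options))
    return path, parsed


if __name__ == "__main__":
    print(parse_exports_line("/tmp *(rw)"))  # ('/tmp', [('*', ['rw'])])
```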

Step 2: write the YAML file

apiVersion: v1
kind: Pod
metadata:
  name: nginx2
spec:
  containers:
  - name: nginx
    image: nginx
    imagePullPolicy: IfNotPresent
    volumeMounts:
    - name: du    # must match the volume name below
      mountPath: /usr/share/nginx/html
  volumes:
  - name: du      # must match the volumeMounts name above
    nfs:
      path: /tmp
      server: 192.168.137.3

Step 3: apply the YAML file

╭─root@node1 ~

╰─➤ kubectl apply -f nginx-nfs.yml

pod/nginx2 created

Step 4: check the Pod

╭─root@node1 ~

╰─➤ kubectl get po -o wide

NAME     READY   STATUS    RESTARTS   AGE     IP            NODE    NOMINATED NODE   READINESS GATES
nginx2   1/1     Running   0          5m46s   10.244.2.16   node3   <none>           <none>

Step 5: write a test file into the exported directory on the NFS server (node1)

╭─root@node1 ~

╰─➤ echo "nfs-test" >> /tmp/index.html

Step 6: access the page

╭─root@node1 ~

╰─➤ curl 10.244.2.16

nfs-test

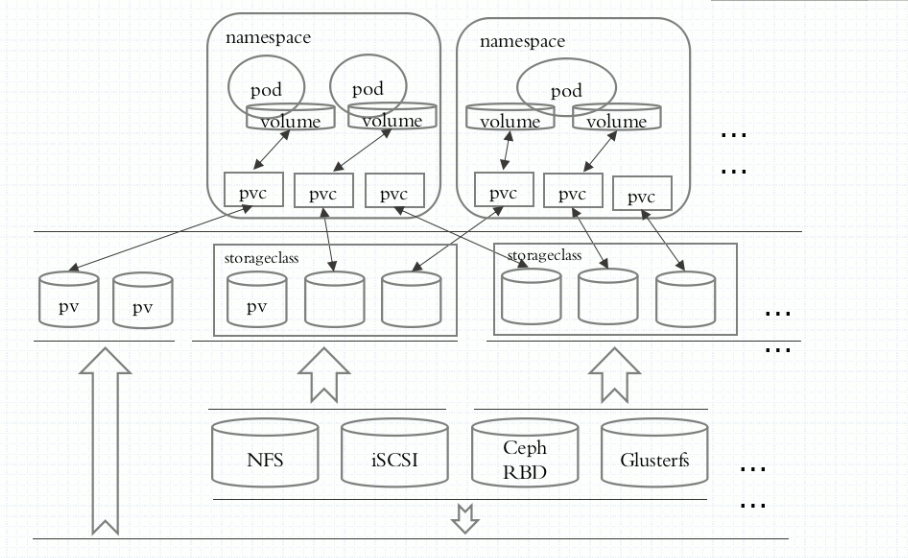

pv/pvc

Step 1: deploy NFS

Omitted (same setup as in the NFS section above).

Step 2: write the YAML file defining the PV and the PVC

apiVersion: v1
kind: PersistentVolume
metadata:
  name: mypv
spec:
  capacity:
    storage: 1Gi
  accessModes:
  - ReadWriteMany
  # persistentVolumeReclaimPolicy is not set, so this manually created PV uses Retain
  nfs:
    path: /tmp
    server: 192.168.137.3
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: mypvc1
spec:
  accessModes:
  - ReadWriteMany
  volumeName: mypv    # bind explicitly to the PV above
  resources:
    requests:
      storage: 1Gi
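Whether `mypvc1` can bind to `mypv` comes down to a few checks. A simplified Python sketch, assuming only three criteria: the name requested in `volumeName`, access modes (the PV must offer every mode the claim asks for), and capacity. The real controller also considers storage classes, label selectors, volume modes and the PV's phase; `can_bind` and `to_bytes` are hypothetical helpers, and only binary suffixes such as `Gi` are parsed.

```python
# Binary suffixes only; other Kubernetes quantity formats are not handled here.
UNITS = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}


def to_bytes(quantity):
    """Convert a quantity such as "1Gi" to bytes."""
    for suffix, factor in UNITS.items():
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    return int(quantity)


def can_bind(pv, pvc):
    """Simplified PV/PVC match on name, access modes and capacity."""
    pv_spec, pvc_spec = pv["spec"], pvc["spec"]
    wanted = pvc_spec.get("volumeName")
    if wanted and wanted != pv["metadata"]["name"]:
        return False
    # The PV must offer every access mode the claim requests.
    if not set(pvc_spec["accessModes"]) <= set(pv_spec["accessModes"]):
        return False
    # The PV must be at least as large as the request.
    requested = pvc_spec["resources"]["requests"]["storage"]
    return to_bytes(pv_spec["capacity"]["storage"]) >= to_bytes(requested)


mypv = {
    "metadata": {"name": "mypv"},
    "spec": {"capacity": {"storage": "1Gi"}, "accessModes": ["ReadWriteMany"],
             "nfs": {"path": "/tmp", "server": "192.168.137.3"}},
}
mypvc1 = {
    "metadata": {"name": "mypvc1"},
    "spec": {"accessModes": ["ReadWriteMany"], "volumeName": "mypv",
             "resources": {"requests": {"storage": "1Gi"}}},
}

if __name__ == "__main__":
    print(can_bind(mypv, mypvc1))  # True
```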

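The three classic access modes are:

- ReadWriteOnce (RWO): the volume can be mounted read-write by a single node

- ReadOnlyMany (ROX): the volume can be mounted read-only by many nodes

- ReadWriteMany (RWX): the volume can be mounted read-write by many nodes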
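`kubectl get pv` and `kubectl get pvc` print access modes in abbreviated form, which is why the output further down shows `RWX` for `ReadWriteMany`. A small Python lookup of those abbreviations; newer Kubernetes releases also define `ReadWriteOncePod` (RWOP, read-write by a single Pod). The names `ACCESS_MODE_SHORT` and `short_modes` are hypothetical.

```python
# Abbreviations shown in the ACCESS MODES column of kubectl get pv / get pvc.
ACCESS_MODE_SHORT = {
    "ReadWriteOnce": "RWO",      # read-write by a single node
    "ReadOnlyMany": "ROX",       # read-only by many nodes
    "ReadWriteMany": "RWX",      # read-write by many nodes
    "ReadWriteOncePod": "RWOP",  # read-write by a single Pod (newer releases)
}


def short_modes(modes):
    """Join the abbreviations of the given access modes with commas."""
    return ",".join(ACCESS_MODE_SHORT[m] for m in modes)


if __name__ == "__main__":
    print(short_modes(["ReadWriteMany"]))  # RWX
```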

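There are three reclaim policies (set with persistentVolumeReclaimPolicy; a manually created PV such as mypv defaults to Retain):

- Retain: after the PVC is deleted, the PV and its data are kept; the PV becomes Released and must be cleaned up and reclaimed manually

- Recycle: after the PVC is deleted, the data is scrubbed and the PV becomes available again (deprecated)

- Delete: after the PVC is deleted, the PV and its backing storage are deleted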
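The three policies differ in what is left behind once the bound PVC is deleted. A simplified Python summary of that outcome, as (PV phase, data kept); a phase of `None` means the PV object itself is gone. The function name is hypothetical, and the `Delete` case assumes the volume plugin or provisioner actually supports deletion.

```python
def pv_after_claim_deleted(policy):
    """Return (PV phase or None if the PV is removed, whether the data remains)."""
    if policy == "Retain":
        return "Released", True    # an admin must clean up and reclaim it by hand
    if policy == "Recycle":
        return "Available", False  # basic scrub, then reusable (deprecated)
    if policy == "Delete":
        return None, False         # PV object and backing storage are removed
    raise ValueError(f"unknown reclaim policy: {policy}")


if __name__ == "__main__":
    for policy in ("Retain", "Recycle", "Delete"):
        print(policy, pv_after_claim_deleted(policy))
```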

Step 3: apply the YAML file to create the PV and PVC

╭─root@node1 ~

╰─➤ vim pv-pvc.yml

╭─root@node1 ~

╰─➤ kubectl apply -f pv-pvc.yml

persistentvolume/mypv created

persistentvolumeclaim/mypvc1 created

Step 4: check the PV and PVC

╭─root@node1 ~

╰─➤ kubectl get pv

NAME   CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM            STORAGECLASS   REASON   AGE
mypv   1Gi        RWX            Retain           Bound    default/mypvc1                           68s

╭─root@node1 ~

╰─➤ kubectl get pvc

NAME     STATUS   VOLUME   CAPACITY   ACCESS MODES   STORAGECLASS   AGE
mypvc1   Bound    mypv     1Gi        RWX                           78s

Using the PVC

Step 5: write the YAML file for an nginx Pod that uses the PVC

apiVersion: v1
kind: Pod
metadata:
  name: nginx3
spec:
  containers:
  - name: nginx
    image: nginx
    imagePullPolicy: IfNotPresent
    volumeMounts:
    - name: du    # must match the volume name below
      mountPath: /usr/share/nginx/html
  volumes:
  - name: du      # must match the volumeMounts name above
    persistentVolumeClaim:
      claimName: mypvc1
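The Pod never names the NFS server directly: it points at `mypvc1`, which is bound to `mypv`, which points at the export. A Python sketch that follows that chain, assuming the objects are available as dicts keyed by name (for example parsed from `kubectl get ... -o json`) and that the claim is already Bound, so its `spec.volumeName` is set. `backing_storage` is a hypothetical helper that only handles NFS-backed PVs.

```python
def backing_storage(pod, pvcs, pvs):
    """Map each PVC-backed volume of a Pod to the NFS export behind it."""
    result = {}
    for volume in pod["spec"].get("volumes", []):
        claim = volume.get("persistentVolumeClaim")
        if claim is None:
            continue  # not a PVC volume
        pvc = pvcs[claim["claimName"]]
        pv = pvs[pvc["spec"]["volumeName"]]  # set once the claim is Bound
        result[volume["name"]] = pv["spec"]["nfs"]
    return result


nginx3 = {"spec": {"volumes": [{"name": "du", "persistentVolumeClaim": {"claimName": "mypvc1"}}]}}
pvcs = {"mypvc1": {"spec": {"volumeName": "mypv"}}}
pvs = {"mypv": {"spec": {"nfs": {"server": "192.168.137.3", "path": "/tmp"}}}}

if __name__ == "__main__":
    print(backing_storage(nginx3, pvcs, pvs))
```

This chain is why a file written to /tmp on the NFS server is what nginx3 serves.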

Step 6: apply the YAML file

╭─root@node1 ~

╰─➤ kubectl apply -f nginx-pv.yml

pod/nginx3 created

Step 7: check the Pod

╭─root@node1 ~

╰─➤ kubectl get pod -o wide

NAME     READY   STATUS    RESTARTS   AGE     IP            NODE    NOMINATED NODE   READINESS GATES
nginx3   1/1     Running   0          3m45s   10.244.2.17   node3   <none>           <none>

Step 8: write the page on the NFS server (node1) and access it

╭─root@node1 ~

╰─➤ echo "pv test" > /tmp/index.html

╭─root@node1 ~

╰─➤ curl 10.244.2.17

pv test

Deleting a Pod stuck in the Terminating state

In my case the Pod used NFS: a problem with the NFS mount broke the Pod, and after I ran the delete command the Pod stayed in the Terminating state.

In this situation you can force-delete it:

kubectl delete pod [pod name] --force --grace-period=0 -n [namespace]

# Note: if the Pod is not in your current namespace, name it with -n; otherwise kubectl reports that the pod is not found.
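When this cleanup is scripted, it helps to build the command as an argument list rather than a string, so Pod and namespace names are never reinterpreted by a shell. A minimal Python sketch; `force_delete_args` is a hypothetical helper that only assembles the same kubectl flags shown above, and nothing is executed unless you pass the list to `subprocess.run`.

```python
import shlex


def force_delete_args(pod_name, namespace):
    """Build the kubectl argument list for a forced, immediate Pod deletion."""
    return ["kubectl", "delete", "pod", pod_name,
            "--force", "--grace-period=0", "-n", namespace]


if __name__ == "__main__":
    # Only prints the command; use subprocess.run(args, check=True) to execute it.
    print(shlex.join(force_delete_args("nginx3", "default")))
```

As the warning in the demo shows, a forced deletion removes the Pod object immediately without waiting for the node to confirm the container has stopped, so use it only when the Pod is genuinely stuck.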

Demo:

╭─root@node1 ~

╰─➤ kubectl get pod

NAME     READY   STATUS        RESTARTS   AGE
nginx3   0/1     Terminating   0          7d17h

╭─root@node1 ~

╰─➤ kubectl delete pod nginx3 --force --grace-period=0 -n default

warning: Immediate deletion does not wait for confirmation that the running resource has been terminated. The resource may continue to run on the cluster indefinitely.

pod "nginx3" force deleted