openresty

    ## start server cafe.example.com
    server {
        server_name cafe.example.com ;

        listen 80 ;
        listen 443 ssl http2 ;

        set $proxy_upstream_name "-";

        ssl_certificate_by_lua_block {
            certificate.call()
        }

        location /coffee/ {
            set $namespace "default";
            set $ingress_name "cafe-k8s-ingress";
            set $service_name "coffee-svc";
            set $service_port "80";
            set $location_path "/coffee";
            set $global_rate_limit_exceeding n;

            rewrite_by_lua_block {
                lua_ingress.rewrite({
                    force_ssl_redirect = false,
                    ssl_redirect = true,
                    force_no_ssl_redirect = false,
                    preserve_trailing_slash = false,
                    use_port_in_redirects = false,
                    global_throttle = { namespace = "", limit = 0, window_size = 0, key = { }, ignored_cidrs = { } },
                })
                balancer.rewrite()
                plugins.run()
            }

            # be careful with `access_by_lua_block` and `satisfy any` directives as satisfy any
            # will always succeed when there's `access_by_lua_block` that does not have any lua code doing `ngx.exit(ngx.DECLINED)`
            # other authentication method such as basic auth or external auth useless - all requests will be allowed.
            #access_by_lua_block {
            #}

            header_filter_by_lua_block {
                lua_ingress.header()
                plugins.run()
            }

            body_filter_by_lua_block {
                plugins.run()
            }

            log_by_lua_block {
                balancer.log()
                monitor.call()
                plugins.run()
            }

            port_in_redirect off;

            set $balancer_ewma_score -1;
            set $proxy_upstream_name "default-coffee-svc-80";
            set $proxy_host $proxy_upstream_name;
            set $pass_access_scheme $scheme;
            set $pass_server_port $server_port;
            set $best_http_host $http_host;
            set $pass_port $pass_server_port;
            set $proxy_alternative_upstream_name "";

            client_max_body_size 1m;

            proxy_set_header Host $best_http_host;

            # Pass the extracted client certificate to the backend

            # Allow websocket connections
            proxy_set_header Upgrade $http_upgrade;
            proxy_set_header Connection $connection_upgrade;

            proxy_set_header X-Request-ID $req_id;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Forwarded-For $remote_addr;
            proxy_set_header X-Forwarded-Host $best_http_host;
            proxy_set_header X-Forwarded-Port $pass_port;
            proxy_set_header X-Forwarded-Proto $pass_access_scheme;
            proxy_set_header X-Forwarded-Scheme $pass_access_scheme;
            proxy_set_header X-Scheme $pass_access_scheme;

            # Pass the original X-Forwarded-For
            proxy_set_header X-Original-Forwarded-For $http_x_forwarded_for;

            # mitigate HTTPoxy Vulnerability
            # https://www.nginx.com/blog/mitigating-the-httpoxy-vulnerability-with-nginx/
            proxy_set_header Proxy "";

            # Custom headers to proxied server

            proxy_connect_timeout 5s;
            proxy_send_timeout 60s;
            proxy_read_timeout 60s;

            proxy_buffering off;
            proxy_buffer_size 4k;
            proxy_buffers 4 4k;
            proxy_max_temp_file_size 1024m;
            proxy_request_buffering on;
            proxy_http_version 1.1;

            proxy_cookie_domain off;
            proxy_cookie_path off;

            # In case of errors try the next upstream server before returning an error
            proxy_next_upstream error timeout;
            proxy_next_upstream_timeout 0;
            proxy_next_upstream_tries 3;

            proxy_pass http://upstream_balancer;

            proxy_redirect off;
        }

        location = /coffee {
            set $namespace "default";
            set $ingress_name "cafe-k8s-ingress";
            set $service_name "coffee-svc";
            set $service_port "80";
            set $location_path "/coffee";
            set $global_rate_limit_exceeding n;

            rewrite_by_lua_block {
                lua_ingress.rewrite({
                    force_ssl_redirect = false,
                    ssl_redirect = true,
                    force_no_ssl_redirect = false,
                    preserve_trailing_slash = false,
                    use_port_in_redirects = false,
                    global_throttle = { namespace = "", limit = 0, window_size = 0, key = { }, ignored_cidrs = { } },
                })
                balancer.rewrite()
                plugins.run()
            }

            # be careful with `access_by_lua_block` and `satisfy any` directives as satisfy any
            # will always succeed when there's `access_by_lua_block` that does not have any lua code doing `ngx.exit(ngx.DECLINED)`
            # other authentication method such as basic auth or external auth useless - all requests will be allowed.
            #access_by_lua_block {
            #}

            header_filter_by_lua_block {
                lua_ingress.header()
                plugins.run()
            }

            body_filter_by_lua_block {
                plugins.run()
            }

            log_by_lua_block {
                balancer.log()
                monitor.call()
                plugins.run()
            }

            port_in_redirect off;

            set $balancer_ewma_score -1;
            set $proxy_upstream_name "default-coffee-svc-80";
            set $proxy_host $proxy_upstream_name;
            set $pass_access_scheme $scheme;
            set $pass_server_port $server_port;
            set $best_http_host $http_host;
            set $pass_port $pass_server_port;
            set $proxy_alternative_upstream_name "";

            client_max_body_size 1m;

            proxy_set_header Host $best_http_host;

            # Pass the extracted client certificate to the backend

            # Allow websocket connections
            proxy_set_header Upgrade $http_upgrade;
            proxy_set_header Connection $connection_upgrade;

            proxy_set_header X-Request-ID $req_id;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Forwarded-For $remote_addr;
            proxy_set_header X-Forwarded-Host $best_http_host;
            proxy_set_header X-Forwarded-Port $pass_port;
            proxy_set_header X-Forwarded-Proto $pass_access_scheme;
            proxy_set_header X-Forwarded-Scheme $pass_access_scheme;
            proxy_set_header X-Scheme $pass_access_scheme;

            # Pass the original X-Forwarded-For
            proxy_set_header X-Original-Forwarded-For $http_x_forwarded_for;

            # mitigate HTTPoxy Vulnerability
            # https://www.nginx.com/blog/mitigating-the-httpoxy-vulnerability-with-nginx/
            proxy_set_header Proxy "";

            # Custom headers to proxied server

            proxy_connect_timeout 5s;
            proxy_send_timeout 60s;
            proxy_read_timeout 60s;

            proxy_buffering off;
            proxy_buffer_size 4k;
            proxy_buffers 4 4k;
            proxy_max_temp_file_size 1024m;
            proxy_request_buffering on;
            proxy_http_version 1.1;

            proxy_cookie_domain off;
            proxy_cookie_path off;

            # In case of errors try the next upstream server before returning an error
            proxy_next_upstream error timeout;
            proxy_next_upstream_timeout 0;
            proxy_next_upstream_tries 3;

            proxy_pass http://upstream_balancer;

            proxy_redirect off;
        }

upstream_balancer

    upstream upstream_balancer {
        ### Attention!!!
        #
        # We no longer create "upstream" section for every backend.
        # Backends are handled dynamically using Lua. If you would like to debug
        # and see what backends ingress-nginx has in its memory you can
        # install our kubectl plugin https://kubernetes.github.io/ingress-nginx/kubectl-plugin.
        # Once you have the plugin you can use "kubectl ingress-nginx backends" command to
        # inspect current backends.
        #
        ###

        server 0.0.0.1; # placeholder

        balancer_by_lua_block {
            balancer.balance()
        }

        keepalive 320;
        keepalive_timeout 60s;
        keepalive_requests 10000;
    }
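The comment in this block says backends are kept in Lua memory and chosen at request time. As a rough illustration of that idea only, here is a plain-Lua round-robin picker. The `backends` table, the peer addresses, and the `pick_peer` function are all hypothetical; this is not the actual ingress-nginx `balancer.balance()` implementation, which keeps its own data structures and supports several algorithms.

```lua
-- Hypothetical in-memory backend table, keyed by upstream name.
-- The peer addresses are made up for illustration.
local backends = {
  ["default-coffee-svc-80"] = {
    peers = { "10.0.0.1:80", "10.0.0.2:80" },
    next_index = 1,
  },
}

-- Return the next peer for an upstream name, cycling through the list.
-- Returns nil plus an error message when the upstream has no peers.
local function pick_peer(upstream_name)
  local backend = backends[upstream_name]
  if not backend or #backend.peers == 0 then
    return nil, "no peers for " .. tostring(upstream_name)
  end
  local peer = backend.peers[backend.next_index]
  backend.next_index = backend.next_index % #backend.peers + 1
  return peer
end
```

Successive calls to `pick_peer("default-coffee-svc-80")` walk through the peer list and wrap around, while an unknown upstream name yields `nil` and an error string.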

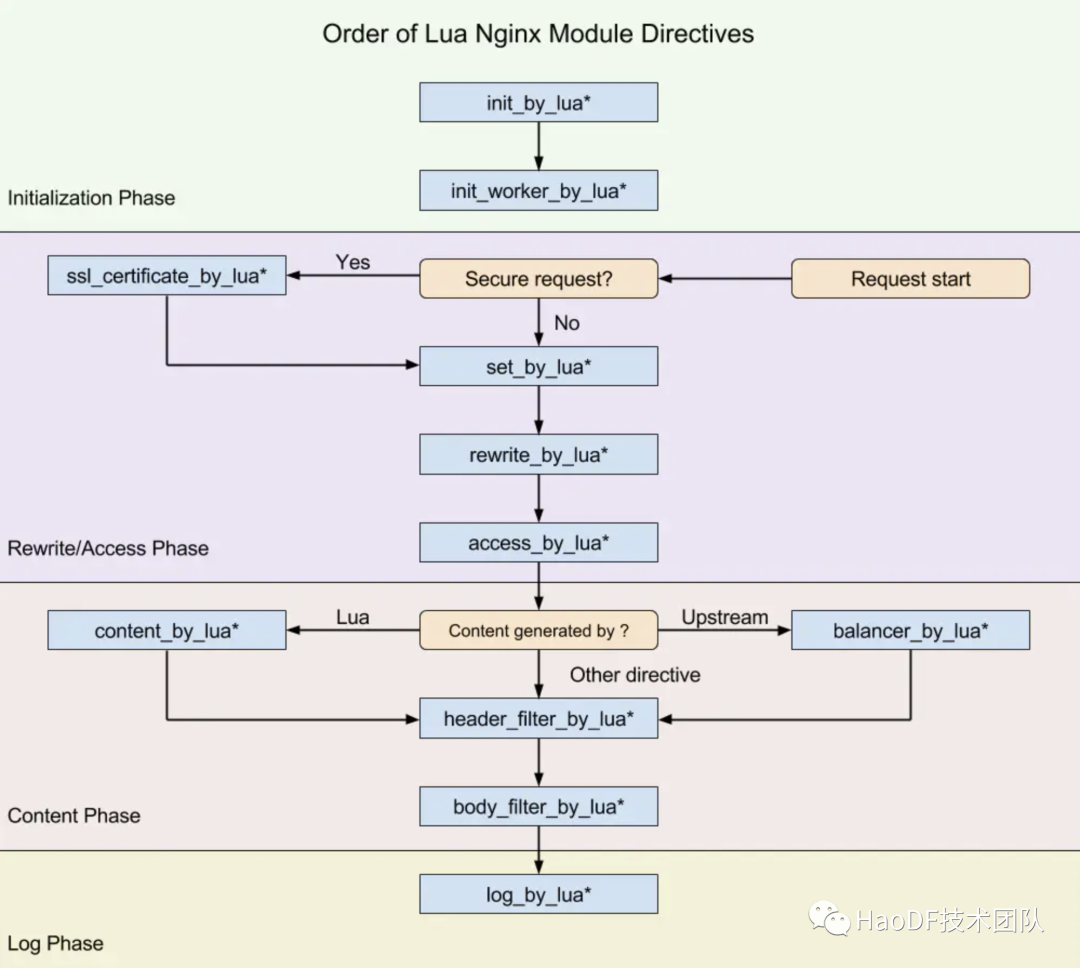

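init_by_lua loads configuration in the nginx master process, and init_worker_by_lua loads configuration in each nginx worker process. rewrite_by_lua is the phase for rewriting URLs and using caches; access_by_lua handles access control, rate limiting, and similar features; header_filter_by_lua processes the response headers; body_filter_by_lua rewrites the response body; and log_by_lua records request logs.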
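The per-request phases can be written down as ordered data, which makes the execution order easy to look up. The sketch below is plain Lua, not OpenResty API: the `PHASES` table and both helper functions are illustrative names, and content_by_lua is added to the list as an assumption because the paragraph above does not mention it.

```lua
-- Per-request phases in the order nginx runs them.
-- init_by_lua and init_worker_by_lua are omitted: they run at startup.
local PHASES = {
  { name = "rewrite_by_lua",       role = "rewrite URLs, use caches" },
  { name = "access_by_lua",        role = "access control, rate limiting" },
  { name = "content_by_lua",       role = "generate the response" },
  { name = "header_filter_by_lua", role = "process response headers" },
  { name = "body_filter_by_lua",   role = "rewrite the response body" },
  { name = "log_by_lua",           role = "record request logs" },
}

-- Return the 1-based position of a phase, or nil if the name is unknown.
local function phase_index(name)
  for i, phase in ipairs(PHASES) do
    if phase.name == name then
      return i
    end
  end
  return nil
end

-- True when phase `a` runs earlier in a request than phase `b`.
local function runs_before(a, b)
  local ia, ib = phase_index(a), phase_index(b)
  return ia ~= nil and ib ~= nil and ia < ib
end

for i, phase in ipairs(PHASES) do
  print(i, phase.name, phase.role)
end
```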

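ssl_certificate /etc/nginx/secrets/default-cafe-secret serves a purpose similar to ssl_certificate_by_lua_block: the former loads the certificate from a file, while the latter lets Lua code supply the certificate during the TLS handshake.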

demo1 rewrite_by_lua_block

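The most common use of nginx is as a reverse proxy, for example dispatching requests under the same domain to different backend clusters according to features of the URL. For example:

http://example.com/user/1 and http://example.com/product/1

are two requests under the same domain, one for users and one for products. The backend clusters that serve them are likely to be separate, and nginx can split this traffic easily.

But if the distinguishing feature is not in a header or the URL, for example when it sits in the body of a POST request, stock nginx cannot route on it. In that case an OpenResty Lua script can do the job.

I ran into exactly this requirement: the same request had to be routed to different clusters, but nothing in the headers or the URL told the cases apart, because the original design never needed the distinction. The endpoint handles both single and batch requests; batch performance had become unsatisfactory, so a new cluster was needed to handle it. Abstracted, the request looks like this: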

curl -X "POST" -d '{"uids":[1,2]}' -H "Content-Type:application/json" 'http://127.0.0.1:6699/post'

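The goal is to separate requests by whether uids in the body holds one entry or several: when uids contains a single uid the request is routed to backend A, and when it contains more than one uid it is routed to backend B.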
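Stripped of the nginx plumbing, the routing rule is a small pure function over the decoded JSON body. The sketch below is plain Lua; `pick_backend` and the placeholder names "backend_a" and "backend_b" are made up for illustration, and sending malformed bodies to backend A is an assumption, not something the requirement states.

```lua
-- Decide which backend a decoded JSON body should go to.
-- "backend_a" / "backend_b" are placeholder names, not real upstreams.
local function pick_backend(data)
  local uids = type(data) == "table" and data.uids or nil
  if type(uids) ~= "table" then
    -- Assumption: a missing or malformed uids field falls back to backend A.
    return "backend_a"
  end
  if #uids > 1 then
    return "backend_b"  -- batch request
  end
  return "backend_a"    -- single uid
end

print(pick_backend({ uids = { 1 } }))     -- backend_a
print(pick_backend({ uids = { 1, 2 } }))  -- backend_b
```

Inside OpenResty the same decision would be made on the table returned by the JSON decoder, with the result written to an nginx variable that proxy_pass reads.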

Modify the earlier nginx.conf as follows:

    [root@centos7 work]# cat conf/nginx.conf
    worker_processes 1;
    daemon off;
    error_log logs/error.log info;

    events {
        worker_connections 1024;
    }

    http {
        upstream apache {
            server 10.100.156.75:80;
        }

        upstream nginx {
            server 10.108.206.182:80;
        }

        server {
            listen 6699;

            location / {
                default_type application/json;

                # default upstream
                set $upstream_name 'apache';

                rewrite_by_lua_block {
                    local cjson = require 'cjson.safe'
                    ngx.req.read_body()
                    local body = ngx.req.get_body_data()
                    if body then
                        ngx.log(ngx.INFO, "body=" .. body)
                        local data = cjson.decode(body)
                        if type(data) == "table" and type(data["uids"]) == "table" then
                            local count = 0
                            for k, v in pairs(data["uids"]) do
                                count = count + 1
                            end
                            ngx.log(ngx.INFO, "count = " .. count)
                            if count == 1 then
                                ngx.var.upstream_name = "nginx"
                            end
                        end
                    end
                }

                proxy_pass http://$upstream_name;
            }
        }
    }
    [root@centos7 work]# kubectl get svc
    NAME         TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)   AGE
    apache-svc   ClusterIP   10.100.156.75    <none>        80/TCP    166m
    kubernetes   ClusterIP   10.96.0.1        <none>        443/TCP   7d23h
    nginx-svc    ClusterIP   10.108.206.182   <none>        80/TCP    166m
    [root@centos7 work]#

    [root@centos7 logs]# curl -X "POST" -d '{"uids":[1,2]}' -H "Content-Type: application/json" 'http://127.0.0.1:6699'
    <html><body><h1>It works!</h1></body></html>
    [root@centos7 logs]# curl -X "POST" -d '{"uids":[1,2,3]}' -H "Content-Type: application/json" 'http://127.0.0.1:6699'
    <html><body><h1>It works!</h1></body></html>
    [root@centos7 logs]#

    [root@centos7 work]# tail -n 10 logs/error.log
    2021/08/27 02:30:18 [info] 23668#23668: *20 client 127.0.0.1 closed keepalive connection
    2021/08/27 02:33:41 [info] 23668#23668: *22 [lua] rewrite_by_lua(nginx.conf:45):7: body={"uids":[1]}, client: 127.0.0.1, server: , request: "POST / HTTP/1.1", host: "127.0.0.1:6699"
    2021/08/27 02:33:41 [info] 23668#23668: *22 [lua] rewrite_by_lua(nginx.conf:45):16: count = 1, client: 127.0.0.1, server: , request: "POST / HTTP/1.1", host: "127.0.0.1:6699"
    2021/08/27 02:33:41 [info] 23668#23668: *22 client 127.0.0.1 closed keepalive connection
    2021/08/27 02:33:54 [info] 23668#23668: *24 [lua] rewrite_by_lua(nginx.conf:45):7: body={"uids":[1,2]}, client: 127.0.0.1, server: , request: "POST / HTTP/1.1", host: "127.0.0.1:6699"
    2021/08/27 02:33:54 [info] 23668#23668: *24 [lua] rewrite_by_lua(nginx.conf:45):16: count = 2, client: 127.0.0.1, server: , request: "POST / HTTP/1.1", host: "127.0.0.1:6699"
    2021/08/27 02:33:54 [info] 23668#23668: *24 client 127.0.0.1 closed keepalive connection
    2021/08/27 02:34:04 [info] 23668#23668: *26 [lua] rewrite_by_lua(nginx.conf:45):7: body={"uids":[1,2,3]}, client: 127.0.0.1, server: , request: "POST / HTTP/1.1", host: "127.0.0.1:6699"
    2021/08/27 02:34:04 [info] 23668#23668: *26 [lua] rewrite_by_lua(nginx.conf:45):16: count = 3, client: 127.0.0.1, server: , request: "POST / HTTP/1.1", host: "127.0.0.1:6699"
    2021/08/27 02:34:04 [info] 23668#23668: *26 client 127.0.0.1 closed keepalive connection
    [root@centos7 work]#

demo2 content_by_lua_block

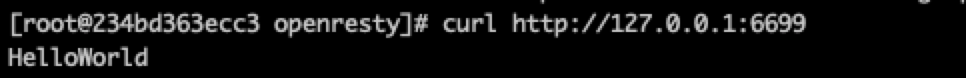

    worker_processes 1;
    error_log logs/error.log;

    events {
        worker_connections 1024;
    }

    http {
        server {
            listen 6699;

            location / {
                default_type text/html;

                content_by_lua_block {
                    ngx.say("HelloWorld")
                }
            }
        }
    }

curl http://127.0.0.1:6699

demo3

    location / {
        set $proxy_host "";

        rewrite_by_lua_block {
            local ld = require("launchdarkly_server_sdk")
            local client = require("shared")

            local user = ld.makeUser({
                key = "abc"
            })

            ngx.var.proxy_host = client:stringVariation(user, "YOUR_FLAG_KEY", "10.0.0.0")
        }

        proxy_pass https://$proxy_host$uri;
        proxy_set_header Host $proxy_host;
        proxy_set_header X-Forwarded-For $remote_addr;
    }

demo4 header_filter_by_lua

    worker_processes 1;
    daemon off;
    error_log logs/error.log;

    events {
        worker_connections 1024;
    }

    http {
        upstream remote_world {
            server 127.0.0.1:8080;
        }

        server {
            listen 80;

            location /exec {
                content_by_lua '
                    local cjson = require "cjson"
                    local headers = {
                        ["Etag"] = "663b92165e216225df78fbbd47c9c5ba",
                        ["Last-Modified"] = "Fri, 12 May 2016 18:53:33 GMT",
                    }
                    ngx.var.my_headers = cjson.encode(headers)
                    ngx.var.my_upstream = "remote_world"
                    ngx.var.my_uri = "/world"
                    ngx.exec("/upstream")
                ';
            }

            location /upstream {
                internal;

                set $my_headers $my_headers;
                set $my_upstream $my_upstream;
                set $my_uri $my_uri;
                proxy_pass http://$my_upstream$my_uri;

                header_filter_by_lua '
                    local cjson = require "cjson"
                    -- declared local so it does not leak into the global environment
                    local headers = cjson.decode(ngx.var.my_headers)
                    for k, v in pairs(headers) do
                        ngx.header[k] = v
                    end
                ';
            }
        }

        server {
            listen 8080;

            location /world {
                echo "hello world";
            }
        }
    }

    [root@centos7 conf]# curl -i http://127.0.0.1/exec
    HTTP/1.1 200 OK
    Server: openresty/1.19.3.2
    Date: Fri, 27 Aug 2021 07:39:41 GMT
    Content-Type: text/plain
    Transfer-Encoding: chunked
    Connection: keep-alive
    Etag: 663b92165e216225df78fbbd47c9c5ba
    Last-Modified: Fri, 12 May 2016 18:53:33 GMT

    hello world
    [root@centos7 conf]# curl -i http://127.0.0.1:8080/world
    HTTP/1.1 200 OK
    Server: openresty/1.19.3.2
    Date: Fri, 27 Aug 2021 07:40:27 GMT
    Content-Type: text/plain
    Transfer-Encoding: chunked
    Connection: keep-alive

    hello world

demo5

ngx.exec("/upstream") is not executed:

    [root@centos7 conf]# cat nginx.conf
    worker_processes 1;
    daemon off;
    error_log logs/error.log;

    events {
        worker_connections 1024;
    }

    http {
        access_log logs/access.log ;

        upstream remote_world {
            server 127.0.0.1:8080;
        }

        server {
            listen 80;

            location /exec {
                content_by_lua '
                    local cjson = require "cjson"
                    local headers = {
                        ["Etag"] = "663b92165e216225df78fbbd47c9c5ba",
                        ["Last-Modified"] = "Fri, 12 May 2016 18:53:33 GMT",
                    }
                    ngx.var.my_headers = cjson.encode(headers)
                    ngx.var.my_upstream = "remote_world"
                    ngx.var.my_uri = "/world"
                ';
            }

            location /upstream {
                internal;

                set $my_headers $my_headers;
                set $my_upstream $my_upstream;
                set $my_uri $my_uri;
                proxy_pass http://$my_upstream$my_uri;

                header_filter_by_lua '
                    local cjson = require "cjson"
                    local headers = cjson.decode(ngx.var.my_headers)
                    for k, v in pairs(headers) do
                        ngx.header[k] = v
                    end
                ';
            }
        }

        server {
            listen 8080;

            location /world {
                echo "hello world";
            }
        }
    }

    [root@centos7 conf]# curl -i http://127.0.0.1/exec
    HTTP/1.1 200 OK
    Server: openresty/1.19.3.2
    Date: Fri, 27 Aug 2021 07:45:33 GMT
    Content-Type: text/plain
    Transfer-Encoding: chunked
    Connection: keep-alive

demo6

Without header_filter_by_lua:

    [root@centos7 conf]# cat nginx.conf
    worker_processes 1;
    daemon off;
    error_log logs/error.log;

    events {
        worker_connections 1024;
    }

    http {
        access_log logs/access.log ;

        upstream remote_world {
            server 127.0.0.1:8080;
        }

        server {
            listen 80;

            location /exec {
                content_by_lua '
                    local cjson = require "cjson"
                    local headers = {
                        ["Etag"] = "663b92165e216225df78fbbd47c9c5ba",
                        ["Last-Modified"] = "Fri, 12 May 2016 18:53:33 GMT",
                    }
                    ngx.var.my_headers = cjson.encode(headers)
                    ngx.var.my_upstream = "remote_world"
                    ngx.var.my_uri = "/world"
                    ngx.exec("/upstream")
                ';
            }

            location /upstream {
                internal;

                set $my_headers $my_headers;
                set $my_upstream $my_upstream;
                set $my_uri $my_uri;
                proxy_pass http://$my_upstream$my_uri;
            }
        }

        server {
            listen 8080;

            location /world {
                echo "hello world";
            }
        }
    }

    [root@centos7 conf]# curl -i http://127.0.0.1/exec
    HTTP/1.1 200 OK
    Server: openresty/1.19.3.2
    Date: Fri, 27 Aug 2021 07:49:32 GMT
    Content-Type: text/plain
    Transfer-Encoding: chunked
    Connection: keep-alive

    hello world
    [root@centos7 conf]#

Changing the header and the body

    [root@centos7 conf]# cat nginx.conf
    worker_processes 1;
    daemon off;
    error_log logs/error.log;

    events {
        worker_connections 1024;
    }

    http {
        access_log logs/access.log ;

        upstream remote_world {
            server 127.0.0.1:8080;
        }

        server {
            listen 80;

            location /exec {
                content_by_lua '
                    local cjson = require "cjson"
                    local headers = {
                        ["Etag"] = "663b92165e216225df78fbbd47c9c5ba",
                        ["Last-Modified"] = "Fri, 12 May 2016 18:53:33 GMT",
                    }
                    ngx.var.my_headers = cjson.encode(headers)
                    ngx.var.my_upstream = "remote_world"
                    ngx.var.my_uri = "/world"
                    ngx.exec("/upstream")
                ';
            }

            location /upstream {
                internal;

                set $my_headers $my_headers;
                set $my_upstream $my_upstream;
                set $my_uri $my_uri;
                proxy_pass http://$my_upstream$my_uri;

                header_filter_by_lua_block {
                    ngx.header["my_header"] = "customized header";
                }

                content_by_lua_block {
                    ngx.say("Hello*****World")
                }
            }
        }

        server {
            listen 8080;

            location /world {
                echo "hello world";
            }
        }
    }

    [root@centos7 conf]# curl -i http://127.0.0.1/exec
    HTTP/1.1 200 OK
    Server: openresty/1.19.3.2
    Date: Fri, 27 Aug 2021 08:03:58 GMT
    Content-Type: text/plain
    Transfer-Encoding: chunked
    Connection: keep-alive
    my-header: customized header

    Hello*****World
    [root@centos7 conf]#

log_by_lua_block

    [root@centos7 conf]# cat nginx.conf
    worker_processes 1;
    daemon off;
    error_log logs/error.log info;

    events {
        worker_connections 1024;
    }

    http {
        server {
            listen 6699;

            location /foo {
                content_by_lua_block {
                    ngx.say([[I am foo]])
                }

                log_by_lua_block {
                    -- json was used without being required in the original listing
                    local json = require "cjson"

                    local data = {response={}, request={}}

                    local req = ngx.req.get_headers()
                    req.accessTime = os.date("%Y-%m-%d %H:%M:%S")
                    data.request = req

                    local resp = data.response
                    resp.headers = ngx.resp.get_headers()
                    resp.status = ngx.status
                    resp.duration = ngx.var.upstream_response_time
                    resp.body = ngx.var.resp_body

                    ngx.log(ngx.NOTICE, "from log phase:", json.encode(data));
                }
            }

            location / {
                rewrite_by_lua_block {
                    return ngx.redirect('/foo');
                }
            }
        }
    }

ngx.redirect returns a redirect status code (302 in the output below):

    [root@centos7 conf]# curl -i http://127.0.0.1:6699/
    HTTP/1.1 302 Moved Temporarily
    Server: openresty/1.19.3.2
    Date: Fri, 27 Aug 2021 08:11:29 GMT
    Content-Type: text/html
    Content-Length: 151
    Connection: keep-alive
    Location: /foo

    <html>
    <head><title>302 Found</title></head>
    <body>
    <center><h1>302 Found</h1></center>
    <hr><center>openresty/1.19.3.2</center>
    </body>
    </html>
    [root@centos7 conf]# curl -i http://127.0.0.1:6699/foo
    HTTP/1.1 200 OK
    Server: openresty/1.19.3.2
    Date: Fri, 27 Aug 2021 08:12:08 GMT
    Content-Type: text/plain
    Transfer-Encoding: chunked
    Connection: keep-alive

    I am foo
    [root@centos7 conf]#

body_filter_by_lua_block

    [root@centos7 conf]# cat nginx.conf
    worker_processes 1;
    daemon off;
    error_log logs/error.log info;

    events {
        worker_connections 256;
    }

    http {
        #lua_package_path '/Users/sensoro/bynf/test/SENSORO/openresty/?.lua;;';

        server {
            listen 8815;
            server_name localhost;

            location /test {
                # curl "127.0.0.1:8815/test"

                # initialize variables used for flow branching
                set_by_lua $a 'ngx.log(ngx.INFO, "set_by_lua=== 111")';
                # forwarding, redirects, caching, etc. (e.g. proxying specific requests to an external network)
                rewrite_by_lua 'ngx.log(ngx.INFO, "rewrite_by_lua===222")';
                # centralized handling of IP admission, API permissions, etc. (e.g. a simple firewall together with iptables)
                access_by_lua 'ngx.log(ngx.INFO, "access_by_lua=== 333")';
                # content generation
                content_by_lua 'ngx.log(ngx.INFO, "content_by_lua=== 444")';
                # response header filtering (e.g. adding headers)
                header_filter_by_lua 'ngx.log(ngx.INFO, "header_filter_by_lua=== 555")';
                # response body filtering (e.g. upper-casing the whole response)
                body_filter_by_lua 'ngx.log(ngx.INFO, "body_filter_by_lua=== 666")';
                # logging done locally and asynchronously after the request completes
                # (logs can be kept locally or shipped to other machines)
                log_by_lua 'ngx.log(ngx.INFO, "log_by_lua=== 777")';
            }

            location /test2 {
                # curl "127.0.0.1:8815/test2"
                content_by_lua_block {
                    ngx.say("aaa");
                    ngx.say("bbb");
                }
                body_filter_by_lua_block {
                    ngx.arg[1] = string.upper(ngx.arg[1]);
                }
            }

            location /test3 {
                # curl "127.0.0.1:8815/test3"
                content_by_lua_block {
                    ngx.say("aaa");
                    ngx.print("bbb");
                }
                body_filter_by_lua_block {
                    if ngx.arg[1] == "aaa\n" then -- note: ngx.say appends a \n newline to its output
                        ngx.arg[1] = string.upper(ngx.arg[1]);
                    end
                    if ngx.arg[1] == "bbb" then -- note: ngx.print emits the content as-is
                        ngx.arg[1] = string.upper(ngx.arg[1]);
                    end
                }
            }

            location /test4 {
                # curl "127.0.0.1:8815/test4"
                content_by_lua_block {
                    ngx.say("aaa");
                    ngx.say("bbb");
                }
                body_filter_by_lua_block {
                    if ngx.arg[1] == "aaa\n" then
                        ngx.arg[1] = string.upper(ngx.arg[1]);
                        ngx.arg[2] = true; -- set eof to truncate the output; only AAA is sent
                        return;
                    end
                }
            }

            location /test5 {
                # curl "127.0.0.1:8815/test5"
                content_by_lua_block {
                    local json = require "cjson";
                    ngx.print(json.encode({a="aaa", b=123}));
                }
                body_filter_by_lua_block {
                    local json = require "cjson";
                    local t = json.decode(ngx.arg[1]); -- note: the decoded table itself cannot be assigned back to ngx.arg[1]
                    t.a = string.upper(t.a);
                    t.b = t.b * 2;
                    t.c = {1,2,3};
                    ngx.arg[1] = json.encode(t) .. "\n";
                    ngx.arg[2] = true; -- note: omitting this line causes an error
                }
            }
        }
    }

curl "127.0.0.1:8815/test" -i

    2021/08/27 04:19:36 [info] 71836#71836: *1 [lua] set_by_lua:1: set_by_lua=== 111, client: 127.0.0.1, server: localhost, request: "GET /test HTTP/1.1", host: "127.0.0.1:8815"
    2021/08/27 04:19:36 [info] 71836#71836: *1 [lua] rewrite_by_lua(nginx.conf:19):1: rewrite_by_lua===222, client: 127.0.0.1, server: localhost, request: "GET /test HTTP/1.1", host: "127.0.0.1:8815"
    2021/08/27 04:19:36 [info] 71836#71836: *1 [lua] access_by_lua(nginx.conf:21):1: access_by_lua=== 333, client: 127.0.0.1, server: localhost, request: "GET /test HTTP/1.1", host: "127.0.0.1:8815"
    2021/08/27 04:19:36 [info] 71836#71836: *1 [lua] content_by_lua(nginx.conf:23):1: content_by_lua=== 444, client: 127.0.0.1, server: localhost, request: "GET /test HTTP/1.1", host: "127.0.0.1:8815"
    2021/08/27 04:19:36 [info] 71836#71836: *1 [lua] header_filter_by_lua:1: header_filter_by_lua=== 555, client: 127.0.0.1, server: localhost, request: "GET /test HTTP/1.1", host: "127.0.0.1:8815"
    2021/08/27 04:19:36 [info] 71836#71836: *1 [lua] body_filter_by_lua:1: body_filter_by_lua=== 666, client: 127.0.0.1, server: localhost, request: "GET /test HTTP/1.1", host: "127.0.0.1:8815"
    2021/08/27 04:19:36 [info] 71836#71836: *1 [lua] log_by_lua(nginx.conf:29):1: log_by_lua=== 777 while logging request, client: 127.0.0.1, server: localhost, request: "GET /test HTTP/1.1", host: "127.0.0.1:8815"
    2021/08/27 04:19:36 [info] 71836#71836: *1 client 127.0.0.1 closed keepalive connection
    2021/08/27 04:19:39 [info] 71836#71836: *2 [lua] set_by_lua:1: set_by_lua=== 111, client: 127.0.0.1, server: localhost, request: "GET /test HTTP/1.1", host: "127.0.0.1:8815"
    2021/08/27 04:19:39 [info] 71836#71836: *2 [lua] rewrite_by_lua(nginx.conf:19):1: rewrite_by_lua===222, client: 127.0.0.1, server: localhost, request: "GET /test HTTP/1.1", host: "127.0.0.1:8815"
    2021/08/27 04:19:39 [info] 71836#71836: *2 [lua] access_by_lua(nginx.conf:21):1: access_by_lua=== 333, client: 127.0.0.1, server: localhost, request: "GET /test HTTP/1.1", host: "127.0.0.1:8815"
    2021/08/27 04:19:39 [info] 71836#71836: *2 [lua] content_by_lua(nginx.conf:23):1: content_by_lua=== 444, client: 127.0.0.1, server: localhost, request: "GET /test HTTP/1.1", host: "127.0.0.1:8815"
    2021/08/27 04:19:39 [info] 71836#71836: *2 [lua] header_filter_by_lua:1: header_filter_by_lua=== 555, client: 127.0.0.1, server: localhost, request: "GET /test HTTP/1.1", host: "127.0.0.1:8815"
    2021/08/27 04:19:39 [info] 71836#71836: *2 [lua] body_filter_by_lua:1: body_filter_by_lua=== 666, client: 127.0.0.1, server: localhost, request: "GET /test HTTP/1.1", host: "127.0.0.1:8815"
    2021/08/27 04:19:39 [info] 71836#71836: *2 [lua] log_by_lua(nginx.conf:29):1: log_by_lua=== 777 while logging request, client: 127.0.0.1, server: localhost, request: "GET /test HTTP/1.1", host: "127.0.0.1:8815"
    2021/08/27 04:19:39 [info] 71836#71836: *2 client 127.0.0.1 closed keepalive connection

    [root@centos7 conf]# curl -i "127.0.0.1:8815/test2"
    HTTP/1.1 200 OK
    Server: openresty/1.19.3.2
    Date: Fri, 27 Aug 2021 08:20:35 GMT
    Content-Type: text/plain
    Transfer-Encoding: chunked
    Connection: keep-alive

    AAA
    BBB
    [root@centos7 conf]# curl -i "127.0.0.1:8815/test3"
    HTTP/1.1 200 OK
    Server: openresty/1.19.3.2
    Date: Fri, 27 Aug 2021 08:21:06 GMT
    Content-Type: text/plain
    Transfer-Encoding: chunked
    Connection: keep-alive

    AAA
    BBB[root@centos7 conf]# curl -i "127.0.0.1:8815/test4"
    HTTP/1.1 200 OK
    Server: openresty/1.19.3.2
    Date: Fri, 27 Aug 2021 08:21:10 GMT
    Content-Type: text/plain
    Transfer-Encoding: chunked
    Connection: keep-alive

    AAA
    [root@centos7 conf]# curl -i "127.0.0.1:8815/test5"
    HTTP/1.1 200 OK
    Server: openresty/1.19.3.2
    Date: Fri, 27 Aug 2021 08:21:15 GMT
    Content-Type: text/plain
    Transfer-Encoding: chunked
    Connection: keep-alive

    {"c":[1,2,3],"b":246,"a":"AAA"}
    [root@centos7 conf]#
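The /test2 and /test4 results follow from the body filter being invoked once per output chunk. The plain-Lua sketch below is a simplified model of that behaviour, not the real nginx machinery: `run_body_filter` and the two filter functions are made-up names, a filter returns its chunk and eof flag instead of writing ngx.arg[1] and ngx.arg[2], and the model assumes nginx makes one final call with an empty chunk and eof set.

```lua
-- Feed chunks to a filter one at a time, the way a body filter sees them.
-- filter(chunk, eof) returns the (possibly rewritten) chunk and eof flag.
local function run_body_filter(chunks, filter)
  local out = {}
  for _, chunk in ipairs(chunks) do
    local data, eof = filter(chunk, false)
    out[#out + 1] = data
    if eof then
      -- eof set early: everything after this chunk is dropped
      return table.concat(out)
    end
  end
  -- Assumed final call: empty chunk with the eof flag set.
  local data = filter("", true)
  out[#out + 1] = data
  return table.concat(out)
end

-- Model of /test2: upper-case every chunk.
local function upper_all(chunk, eof)
  return string.upper(chunk), eof
end

-- Model of /test4: upper-case "aaa\n" and end the response there.
local function truncate_after_aaa(chunk, eof)
  if chunk == "aaa\n" then
    return string.upper(chunk), true
  end
  return chunk, eof
end

local chunks = { "aaa\n", "bbb\n" }
io.write(run_body_filter(chunks, upper_all))          -- AAA, then BBB
io.write(run_body_filter(chunks, truncate_after_aaa)) -- AAA only
```

The final empty call in this model is also a plausible reason why /test5 sets the eof flag itself: without it the filter would run again on an empty chunk and try to decode an empty string as JSON.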

body_filter_by_lua_block

    [root@centos7 conf]# cat nginx.conf
    worker_processes 1;
    daemon off;
    error_log logs/error.log;

    events {
        worker_connections 1024;
    }

    http {
        access_log logs/access.log ;

        upstream remote_world {
            server 127.0.0.1:8080;
        }

        server {
            listen 80;

            location /exec {
                content_by_lua '
                    local cjson = require "cjson"
                    local headers = {
                        ["Etag"] = "663b92165e216225df78fbbd47c9c5ba",
                        ["Last-Modified"] = "Fri, 12 May 2016 18:53:33 GMT",
                    }
                    ngx.var.my_headers = cjson.encode(headers)
                    ngx.var.my_upstream = "remote_world"
                    ngx.var.my_uri = "/world"
                    ngx.exec("/upstream")
                ';
            }

            location /upstream {
                internal;

                set $my_headers $my_headers;
                set $my_upstream $my_upstream;
                set $my_uri $my_uri;
                proxy_pass http://$my_upstream$my_uri;

                header_filter_by_lua_block {
                    ngx.header["my_header"] = "customized header";
                }

                content_by_lua_block {
                    ngx.say("Hello*****World")
                }

                body_filter_by_lua_block {
                    ngx.arg[1] = string.upper(ngx.arg[1]);
                }
            }
        }

        server {
            listen 8080;

            location /world {
                echo "hello world";
            }
        }
    }

    [root@centos7 conf]# curl -i http://127.0.0.1:80/exec
    HTTP/1.1 200 OK
    Server: openresty/1.19.3.2
    Date: Fri, 27 Aug 2021 08:29:45 GMT
    Content-Type: text/plain
    Transfer-Encoding: chunked
    Connection: keep-alive
    my-header: customized header

    HELLO*****WORLD

proxy_set_header X-Request-ID

    [root@centos7 conf]# cat nginx.conf
    worker_processes 1;
    daemon off;
    error_log logs/error.log;

    events {
        worker_connections 1024;
    }

    http {
        access_log logs/access.log ;

        upstream remote_world {
            server 127.0.0.1:8080;
        }

        server {
            listen 80;

            location /exec {
                content_by_lua '
                    local cjson = require "cjson"
                    local headers = {
                        ["Etag"] = "663b92165e216225df78fbbd47c9c5ba",
                        ["Last-Modified"] = "Fri, 12 May 2016 18:53:33 GMT",
                    }
                    ngx.var.my_headers = cjson.encode(headers)
                    ngx.var.my_upstream = "remote_world"
                    ngx.var.my_uri = "/world"
                    ngx.exec("/upstream")
                ';
            }

            location /upstream {
                internal;

                set $my_headers $my_headers;
                set $my_upstream $my_upstream;
                set $my_uri $my_uri;
                proxy_set_header X-Request-ID "64e7be5ff2fd71f2b1ec8452a8705242";
                proxy_pass http://$my_upstream$my_uri;

                rewrite_by_lua_block {
                    ngx.req.set_header("x-request-id", "60e7be5ff2fd71f2b1ec8452a8705242");
                }

                header_filter_by_lua_block {
                    ngx.header["myHeader"] = "customized header";
                    ngx.header["requestId"] = ngx.var.http_x_request_id;
                }

                body_filter_by_lua_block {
                    ngx.arg[1] = string.upper(ngx.arg[1]);
                }
            }
        }

        server {
            listen 8080;

            location /world {
                echo "hello world";

                rewrite_by_lua_block {
                    ngx.header["requestProxyId"] = ngx.var.http_x_request_id;
                }
            }
        }
    }

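location /world and location /upstream handle two separate requests, so ngx.var.http_x_request_id is stored separately for each and can hold a different value in each: /world sees the value set by proxy_set_header, while /upstream sees the value set by ngx.req.set_header.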

    [root@centos7 conf]# curl http://127.0.0.1:80/exec -i
    HTTP/1.1 200 OK
    Server: openresty/1.19.3.2
    Date: Fri, 27 Aug 2021 09:04:53 GMT
    Content-Type: text/plain
    Transfer-Encoding: chunked
    Connection: keep-alive
    requestProxyId: 64e7be5ff2fd71f2b1ec8452a8705242
    myHeader: customized header
    requestId: 60e7be5ff2fd71f2b1ec8452a8705242

    HELLO WORLD

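Using ngx.ctx

ngx.ctx

Context: init_worker_by_lua*, set_by_lua*, rewrite_by_lua*, access_by_lua*, content_by_lua*, header_filter_by_lua*, body_filter_by_lua*, log_by_lua*, ngx.timer.*, balancer_by_lua*

Meaning: ngx.ctx is a Lua table used to cache Lua-side data for the current request. The cache is cleared when the request ends, which is similar in effect to variables created with the Nginx set directive.

Example: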

    location / {
        rewrite_by_lua_block {
            -- set a test value
            ngx.ctx.test = 'nginx'
            ngx.ctx.test_1 = {a=1,b=2}
        }
        access_by_lua_block {
            -- modify test
            ngx.ctx.test = ngx.ctx.test .. ' hello'
        }
        content_by_lua_block {
            -- output test and the value of element a in test_1
            ngx.say(ngx.ctx.test)
            ngx.say("a: ",ngx.ctx.test_1["a"])
        }
        header_filter_by_lua_block {
            -- emit as a response header
            ngx.header["test"] = ngx.ctx.test .. ' world!'
        }
    }

The configuration above produces the following result:

    # curl -i 'http://testnginx.com/'
    HTTP/1.1 200 OK
    Server: nginx/1.12.2
    Date: Mon, 18 Jun 2018 05:07:15 GMT
    Content-Type: application/octet-stream
    Transfer-Encoding: chunked
    Connection: keep-alive
    test: nginx hello world!

    nginx hello
    a: 1

The result shows that ngx.ctx data can be read and written in every Lua execution phase, and that it can store table values.
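The flow can be mimicked in plain Lua by treating ngx.ctx as one ordinary table that lives for a single request and is handed from phase to phase. This is only a model: `handle_request` and the `response` table are illustrative names, not OpenResty API.

```lua
-- One plain table per request stands in for ngx.ctx.
local function handle_request()
  local ctx = {}
  local response = { headers = {}, body = {} }

  -- rewrite phase: store a string and a table
  ctx.test = "nginx"
  ctx.test_1 = { a = 1, b = 2 }

  -- access phase: modify the stored string
  ctx.test = ctx.test .. " hello"

  -- content phase: read both values back
  response.body[#response.body + 1] = ctx.test
  response.body[#response.body + 1] = "a: " .. ctx.test_1.a

  -- header filter phase: reuse the same value in a header
  response.headers.test = ctx.test .. " world!"

  return response
end

local res = handle_request()
print(res.headers.test)  -- nginx hello world!
print(res.body[1])       -- nginx hello
print(res.body[2])       -- a: 1
```

Because `ctx` is created inside `handle_request`, a second call starts from an empty table again, which mirrors ngx.ctx being cleared at the end of each request.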

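How the cache differs between subrequests and internal redirects

ngx.ctx behaves differently in subrequests and in internal redirects. Modifying ngx.ctx.* in a subrequest does not affect the corresponding ngx.ctx.* data in the main request; the two keep separate versions. This applies when subrequests are issued with ngx.location.capture, ngx.location.capture_multi, echo_location, and similar directives. An internal redirect, by contrast, destroys the ngx.ctx.* data of the original request, and the new request starts with an empty ngx.ctx table. This happens, for example, with ngx.exec or with rewrite used together with last/break.

An example of ngx.ctx in an internal redirect: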

    location /subq {
        header_filter_by_lua_block {
            -- if ngx.ctx.test does not exist, assign 'not test' to a
            local a = ngx.ctx.test or 'not test'
            -- emit as a response header
            ngx.header["test"] = a .. ' world!'
        }
        content_by_lua_block {
            ngx.say(ngx.ctx.test)
        }
    }

    location / {
        header_filter_by_lua_block {
            ngx.header["test"] = ' world!'
        }
        content_by_lua_block {
            ngx.ctx.test = "nginx"
            -- perform an internal redirect
            ngx.exec("/subq")
        }
    }

The result is as follows:

    # curl -i 'http://testnginx.com/'
    HTTP/1.1 200 OK
    Server: nginx/1.12.2
    Date: Mon, 18 Jun 2018 05:36:38 GMT
    Content-Type: application/octet-stream
    Transfer-Encoding: chunked
    Connection: keep-alive
    test: not test world!

    nil
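The two behaviours can be contrasted with a small plain-Lua model. Everything here is illustrative: `new_request`, `capture`, and `exec` are made-up stand-ins that only imitate how ngx.ctx is scoped, not how ngx.location.capture or ngx.exec are implemented.

```lua
-- A "request" is modelled as a table that owns its ctx.
local function new_request()
  return { ctx = {} }
end

-- Subrequest model: the handler runs against a fresh request,
-- so the parent's ctx is left untouched.
local function capture(parent, handler)
  local sub = new_request()
  handler(sub)
  return sub
end

-- Internal redirect model: the same request continues,
-- but its ctx is replaced with an empty table first.
local function exec(req, handler)
  req.ctx = {}
  return handler(req)
end

local main = new_request()
main.ctx.test = "nginx"

capture(main, function(sub) sub.ctx.test = "changed in subrequest" end)
print(main.ctx.test)  -- nginx

print(exec(main, function(req) return req.ctx.test end))  -- nil
```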

Differences between ngx.var and ngx.ctx

https://blog.csdn.net/u011944141/article/details/89145362

    location /sub {
        content_by_lua_block {
            ngx.say("sub pre: ", ngx.ctx.blah)
            ngx.ctx.blah = 32
            ngx.say("sub post: ", ngx.ctx.blah)
        }
    }

    location /main {
        content_by_lua_block {
            ngx.ctx.blah = 73
            ngx.say("main pre: ", ngx.ctx.blah)
            local res = ngx.location.capture("/sub")
            ngx.print(res.body)
            ngx.say("main post: ", ngx.ctx.blah)
        }
    }

Then GET /main will give the output

    main pre: 73
    sub pre: nil
    sub post: 32
    main post: 73

    location /new {
        content_by_lua_block {
            ngx.say(ngx.ctx.foo)
        }
    }

    location /orig {
        content_by_lua_block {
            ngx.ctx.foo = "hello"
            ngx.exec("/new")
        }
    }

Then GET /orig will give

    nil

demo11

    [root@centos7 conf]# cat nginx.conf
    worker_processes 1;
    daemon off;

    events {
        worker_connections 1024;
    }

    error_log logs/error_lua.log info;

    http {
        default_type application/octet-stream;
        sendfile on;
        keepalive_timeout 65;
        lua_code_cache on;

        # Note: limit_conn_store must be large enough to hold the keys needed for rate limiting.
        # Each $binary_remote_addr is at most 16 bytes (the IPv6 case); including the
        # lua_shared_dict node overhead, the total is under 64 bytes.
        # 100M can hold 1.6M key-value pairs
        lua_shared_dict limit_conn_store 100M;

        server {
            listen 80;

            location = /sum {
                # internal calls only
                internal;

                # The sum here is only an example; database or cache-server operations
                # could be done here to separate base modules from business logic
                content_by_lua_block {
                    local args = ngx.req.get_uri_args()
                    ngx.say(tonumber(args.a) + tonumber(args.b))
                }
            }

            location = /app/test {
                content_by_lua_block {
                    local res = ngx.location.capture(
                        "/sum", {args={a=3, b=8}}
                    )
                    ngx.say("status:", res.status, " response:", res.body)
                }
            }
        }
    }
    [root@centos7 conf]# curl 127.0.0.1/sum
    <html>
    <head><title>404 Not Found</title></head>
    <body>
    <center><h1>404 Not Found</h1></center>
    <hr><center>openresty/1.19.3.2</center>
    </body>
    </html>
    [root@centos7 conf]# curl 127.0.0.1/app/test
    status:200 response:11
    [root@centos7 conf]#
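The /sum handler adds `tonumber(args.a)` and `tonumber(args.b)` directly, so a missing or non-numeric argument makes `tonumber` return nil and the addition raise a Lua error. A hedged sketch of a more defensive version of that logic, written as a plain Lua function: `sum_handler` is a hypothetical name, and answering with status 400 is an assumption about what a caller would want.

```lua
-- Return an HTTP-like status and body for the given query arguments.
-- `args` has the shape of a decoded query string, e.g. { a = "3", b = "8" }.
local function sum_handler(args)
  local a, b = tonumber(args.a), tonumber(args.b)
  if not a or not b then
    -- Assumption: a bad argument is reported as a client error.
    return 400, "a and b must be numbers"
  end
  return 200, tostring(a + b)
end

print(sum_handler({ a = "3", b = "8" }))  -- 200  11
print(sum_handler({ a = "3" }))           -- 400  a and b must be numbers
```

Inside the location, the returned status could be assigned to ngx.status before the body is written with ngx.say.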

demo12

    worker_processes 1;
    daemon off;

    events {
        worker_connections 1024;
    }

    error_log logs/error_lua.log info;

    http {
        default_type application/octet-stream;
        sendfile on;
        keepalive_timeout 65;
        lua_code_cache on;

        # Note: limit_conn_store must be large enough to hold the keys needed for rate limiting.
        # Each $binary_remote_addr is at most 16 bytes (the IPv6 case); including the
        # lua_shared_dict node overhead, the total is under 64 bytes.
        # 100M can hold 1.6M key-value pairs
        lua_shared_dict limit_conn_store 100M;

        server {
            listen 80;

            location = /sum {
                internal;
                content_by_lua_block {
                    ngx.sleep(0.1)
                    local args = ngx.req.get_uri_args()
                    ngx.print(tonumber(args.a) + tonumber(args.b))
                }
            }

            location = /subduction {
                internal;
                content_by_lua_block {
                    ngx.sleep(0.1)
                    local args = ngx.req.get_uri_args()
                    ngx.print(tonumber(args.a) - tonumber(args.b))
                }
            }

            location = /app/test_parallels {
                content_by_lua_block {
                    local start_time = ngx.now()
                    local res1, res2 = ngx.location.capture_multi( {
                        {"/sum", {args={a=3, b=8}}},
                        {"/subduction", {args={a=3, b=8}}}
                    })
                    ngx.say("status:", res1.status, " response:", res1.body)
                    ngx.say("status:", res2.status, " response:", res2.body)
                    ngx.say("time used:", ngx.now() - start_time)
                }
            }

            location = /app/test_queue {
                content_by_lua_block {
                    local start_time = ngx.now()
                    local res1 = ngx.location.capture_multi( {
                        {"/sum", {args={a=3, b=8}}}
                    })
                    local res2 = ngx.location.capture_multi( {
                        {"/subduction", {args={a=3, b=8}}}
                    })
                    ngx.say("status:", res1.status, " response:", res1.body)
                    ngx.say("status:", res2.status, " response:", res2.body)
                    ngx.say("time used:", ngx.now() - start_time)
                }
            }
        }
    }

    [root@centos7 conf]# curl 127.0.0.1/app/test_parallels
    status:200 response:11
    status:200 response:-5
    time used:0.099999904632568
    [root@centos7 conf]# curl 127.0.0.1/app/test_queue
    status:200 response:11
    status:200 response:-5
    time used:0.20099997520447
    [root@centos7 conf]#

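With ngx.location.capture_multi the two subrequests run in parallel. When two requests do not depend on each other, this can greatly improve query efficiency. Two independent requests of 100 ms each take 200 ms when executed one after the other, but finish in about 100 ms when executed in parallel. Query times in production are rarely this regular, but the idea is the same, and the feature is very useful.

The approach applies widely to advertising systems (a 1:N model, where for one request the backend fetches the best-matching ad from N suppliers) and to high-concurrency front-end page rendering (independent page sections fetched in parallel, degradation switches, and so on).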
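The timing argument is simple arithmetic: sequential execution costs the sum of the individual latencies, while parallel execution costs roughly the slowest one. A small plain-Lua sketch of that estimate follows; the function names are illustrative and the model ignores scheduling overhead.

```lua
-- Total time when requests run one after another: the sum of latencies.
local function sequential_ms(latencies)
  local total = 0
  for _, ms in ipairs(latencies) do
    total = total + ms
  end
  return total
end

-- Total time when independent requests run in parallel: the slowest one.
local function parallel_ms(latencies)
  local worst = 0
  for _, ms in ipairs(latencies) do
    if ms > worst then
      worst = ms
    end
  end
  return worst
end

print(sequential_ms({ 100, 100 }))  -- 200
print(parallel_ms({ 100, 100 }))    -- 100
```

With uneven latencies such as 30 ms, 80 ms and 120 ms, the same functions give 230 ms sequentially against 120 ms in parallel, so the gain depends on how dominant the slowest request is.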

Nginx balancer_by_lua

https://qiita.com/toritori0318/items/a9305d528b52936c0573

nginx + Lua: three very useful cases put into practice at my company

OpenResty on CentOS 7: from installation to getting started

https://wiki.jikexueyuan.com/project/openresty/openresty/work_with_location.html