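An introduction to NIC VXLAN offload

1.1 Overview of offload techniques

Start with the term itself. Offload means taking a function that was originally implemented in software and implementing it in hardware instead. Packet processing that used to run in the operating system (segmentation, reassembly, and so on) moves onto the NIC, which lowers CPU consumption and improves processing performance. In Neutron, VXLAN-based network virtualization puts extra load on the server CPU for encapsulation, decapsulation, and checksumming, and VXLAN encapsulation and decapsulation are normally done by OVS. With VXLAN offload, this work is handed to the NIC or to a hardware switch. NIC VXLAN offload, then, means using the NIC to carry out the VXLAN encapsulation and decapsulation work.

Next, the categories. Several techniques are used to implement offload: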

-

LSO(Large Segment Offload):协议栈直接传递打包给网卡,由网卡负责分割

-

LRO(Large Receive Offload):网卡对零散的小包进行拼装,返回给协议栈一个大包

-

GSO(Generic Segmentation Offload):LSO需要用户区分网卡是否支持该功能,GSO则会自动判断,如果支持则启用LSO,否则不启用

-

GRO(Generic Receive Offload):LRO需要用户区分网卡是否支持该功能,GRO则会自动判断,如果支持则启用LRO,否则不启用

-

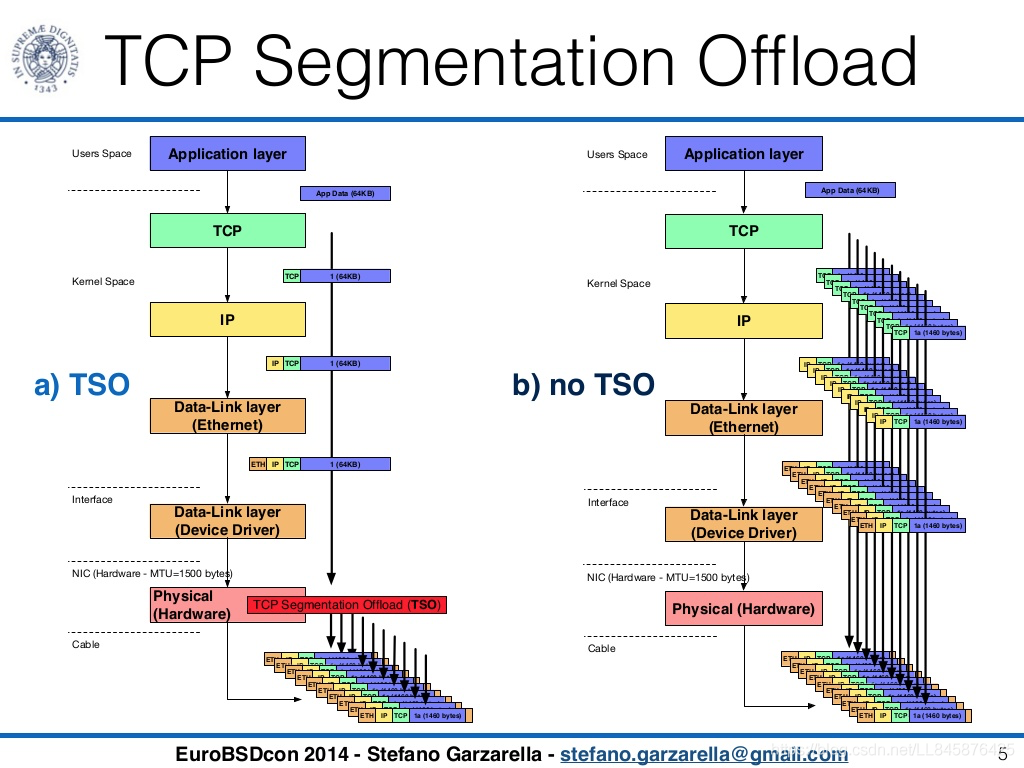

TSO(TCP Segmentation Offload):针对TCP的分片的offload。类似LSO、GSO,但这里明确是针对TCP

-

USO(UDP Segmentation offload):正对UDP的offload,一般是IP层面的分片处理

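Comparing the techniques

(1) LSO vs LRO

These two techniques cover the send side and the receive side respectively. Data on a network travels as discrete packets, and every packet is limited by the MTU. When a large amount of data is sent, the OS protocol stack has to split it into packets no larger than the MTU, and doing this on the CPU drives utilization up. With LSO, when the data to send exceeds the MTU, the OS submits a single request to the NIC; the NIC fetches the data, splits it, and encapsulates it so that no packet on the wire exceeds the MTU. LRO works the other way: when the NIC receives many small packets at once, it merges them into one larger packet and hands that to the OS in a single operation. Both techniques mainly target TCP traffic.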

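(2) TSO vs UFO

These correspond to TCP and UDP traffic respectively. TSO pushes part of the TCP processing down to the NIC to reduce the CPU load of the protocol stack. The Ethernet MTU is typically 1500 bytes; subtracting the IP header (20 bytes in the standard case) and the TCP header (20 bytes in the standard case) leaves a TCP MSS (Maximum Segment Size) of 1460 bytes. When the application hands down more data than the MSS, the protocol stack segments the payload so that no resulting packet exceeds the MTU. With a NIC that supports TSO/GSO this is unnecessary: a payload of up to 64K can be passed straight down the protocol stack, the IP layer does not fragment it, and it goes all the way to the NIC driver. The NIC then generates the TCP/IP headers and frame headers itself. This offloads many memory operations in the protocol stack, and work such as checksum calculation that used to run on the CPU moves to the NIC.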
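The arithmetic in this paragraph can be checked with a short sketch. It assumes 20-byte IP and TCP headers without options and treats "64K" as 65536 bytes; the function names are illustrative only.

```python
import math

def tcp_mss(mtu: int = 1500, ip_header: int = 20, tcp_header: int = 20) -> int:
    """Largest TCP payload that fits in one packet of the given MTU."""
    return mtu - ip_header - tcp_header

def segments_needed(payload_bytes: int, mss: int) -> int:
    """Number of MSS-sized segments required to carry the payload."""
    return math.ceil(payload_bytes / mss)

mss = tcp_mss()
print(mss)                              # 1460
print(segments_needed(64 * 1024, mss))  # 45
```

Without TSO the protocol stack would build all 45 packets itself; with TSO it hands one 64K buffer to the NIC and the NIC produces them.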

1.2 NIC VXLAN offload

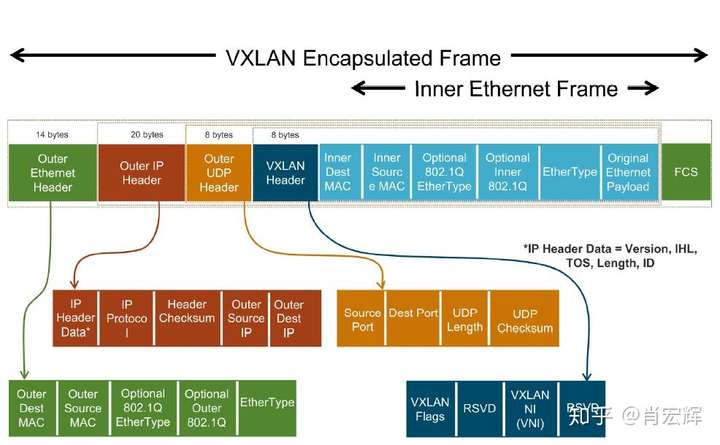

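The previous section covered the basic concepts of offload; this section looks at how VXLAN offload works. Overlay networking in virtualized environments uses several tunneling technologies, mainly VXLAN, NVGRE, and STT. Taking VXLAN as the example, it uses MAC-in-UDP encapsulation to carry packets. As the figure above shows, besides the UDP header VXLAN introduces additional packet processing, and adding or removing these headers means the CPU has to do more work per packet. Linux drivers now meet the needs of hardware VXLAN offload, and the following ethtool commands configure a NIC's VXLAN offload:

ethtool -k ethX // lists the offloads of ethX and their current state

ethtool -K ethX tx-udp_tnl-segmentation [off|on] // turns this offload on or off in Linux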

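With NIC VXLAN offload, virtual network performance in overlay scenarios improves substantially. In essence, the VXLAN encapsulation format resembles an L2VPN technique: a layer-2 Ethernet frame is encapsulated in a UDP packet so that it can cross the underlay L3 network and interconnect different servers or different data centers.

Once VXLAN is in use, packets sent or received by a VM travel inside an outer UDP packet. As a result, features such as TCP segmentation offload and TCP checksum offload no longer apply to the VM's inner TCP traffic, which noticeably hurts VM-to-VM performance and degrades the experience for end users. Vendors introduced NIC VXLAN offload to solve this problem.

NIC VXLAN offload enhances the NIC's capabilities and works together with the NIC driver so that the NIC knows where the inner Ethernet frame sits inside a VXLAN packet. TSO and TCP checksum offload can then take effect on the inner frame, which improves TCP performance.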

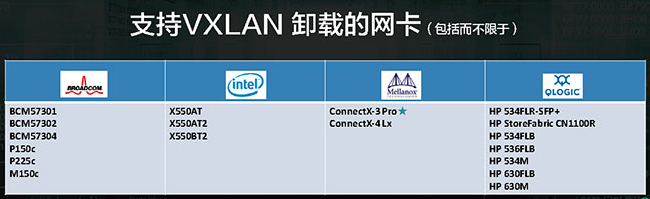

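VXLAN is currently the mainstream choice for deploying virtual networks. Doing its encapsulation and decapsulation on the CPU consumes a large share of CPU and other system resources: on general-purpose x86 hardware, VXLAN encapsulation and decapsulation can take CPU usage to around 50%. A NIC that supports VXLAN offload is one way to reduce this cost. Broadcom, Intel, Mellanox, QLogic, and other NIC vendors currently support VXLAN offload. Although these are products from different vendors, the industry already has a standard VXLAN offload interface, so the feature can be enabled without code changes and adds no work at the code level.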

tx-udp_tnl-segmentation

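Overlay networks such as VxLAN are increasingly widely used. An overlay network frees users from the constraints of the physical network, letting them create, configure, and manage the virtual network connections they need. It also lets multiple tenants share one physical network, improving utilization. There are many kinds of overlay networks, but VxLAN is the most representative. VxLAN is a MAC-in-UDP design; its format is shown below.

The VxLAN format shows that overlay networks of this kind have two performance problems. The first is added overhead: VxLAN wraps the original Ethernet frame in another layer of Ethernet+IP+UDP+VXLAN, so every Ethernet frame carries 50 more bytes than before. It follows that an overlay network is necessarily less efficient than the underlay. The second problem is more serious than transmitting the 50 bytes: they have to be processed. The 50 bytes comprise four headers, and each header involves copying and computation, all of which consume CPU. And the pressing issue today is that the CPU has less and less time available to process each network packet.

The 50 bytes of VxLAN overhead cannot be avoided, so the only option is to reduce their impact. The idea behind jumbo frames applies here as well: because the 50 bytes are fixed, the larger the network packet, the smaller their relative impact.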
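To make the jumbo-frame argument concrete, the sketch below computes what share of each encapsulated frame is taken up by the fixed 50-byte overhead. The two inner frame sizes (1500 and 9000 bytes) are illustrative assumptions, not measurements from this article, and the function name is hypothetical.

```python
VXLAN_OVERHEAD_BYTES = 50  # outer Ethernet + IP + UDP + VXLAN headers

def overhead_share(inner_frame_bytes: int) -> float:
    """Fraction of the encapsulated frame occupied by the VXLAN overhead."""
    return VXLAN_OVERHEAD_BYTES / (inner_frame_bytes + VXLAN_OVERHEAD_BYTES)

for size in (1500, 9000):
    print(f"{size}-byte inner frame: {overhead_share(size):.2%} overhead")
```

The share drops by roughly a factor of six between the two sizes, which is the effect the paragraph describes. It says nothing about the per-header CPU cost, which is the problem the offload itself addresses.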

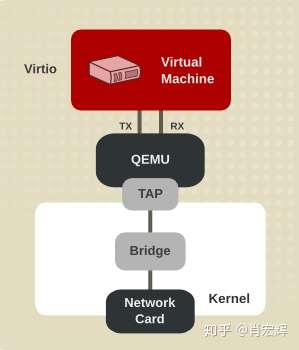

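First, look at how a virtual machine is connected to the network. The VM connects through QEMU to a TAP device on the host, then passes through the virtual switch to the VTEP (VxLAN Tunnel EndPoint), where the VxLAN encapsulation is applied before the packet is sent to the host NIC.

Ideally, one large chunk of VxLAN data is handed straight to the NIC, and the NIC does the rest: fragmenting or segmenting it, wrapping each resulting small packet in VxLAN, computing checksums, and so on. That would keep the impact of VxLAN on VM networking to a minimum. This is achievable in practice, but only under a series of preconditions.

First, the VM has to send large packets to the host. The VM runs its own operating system with its own TCP/IP stack, so it is perfectly capable of splitting a large packet into small ones itself. From the earlier discussion, only TSO actually delivers a large packet to the NIC; GSO has already split it into several small packets at the moment just before the driver is entered. The requirements are therefore: the VM's NIC supports TSO (virtio does by default), TSO is turned on (it is by default), and the VM is sending TCP data.

Next, after QEMU, forwarding by the virtual switch, and encapsulation by the VTEP, this large TCP payload has been wrapped in VxLAN format, with 50 bytes of VxLAN headers added to it. Here is the problem: this was TCP data, but after VxLAN encapsulation it looks like UDP data. If the operating system does nothing special, then per the earlier discussion it goes through GSO for IP fragmentation and is split into small packets just before being sent to the NIC. The host NIC's TSO support then goes unused. Worse, TCP segmentation has still not been done: the TCP segmentation whose necessity the previous article explained at length is lost here.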

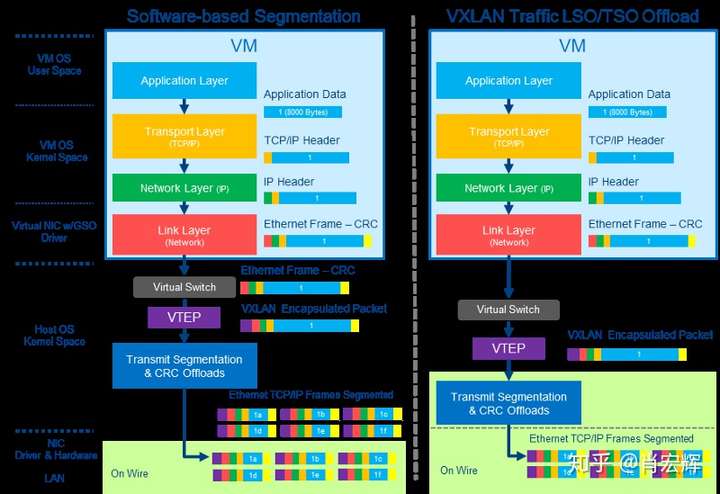

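Besides TSO, GSO, and similar offload options, modern NICs have one more: tx-udp_tnl-segmentation. When it is on, the operating system recognizes that the VxLAN-encapsulated UDP data is tunnel data and hands the whole large chunk of VxLAN data to the NIC. Inside the NIC, TCP segmentation is performed on the inner TCP data, and each TCP segment is then given its own VxLAN encapsulation (50 bytes), as shown on the right of the figure below. This keeps the impact of VxLAN encapsulation on VM networking to a minimum.

As described above, this requires the host NIC to support both TSO and tx-udp_tnl-segmentation. If either or both are unsupported, the kernel invokes GSO: before handing the data to the NIC driver, it performs TCP segmentation on the large VxLAN-encapsulated TCP payload and adds VxLAN encapsulation to each TCP segment, as shown on the left of the figure below.

If TSO is turned off inside the VM, or the VM is sending UDP data, the VM's TCP/IP stack invokes GSO and completes segmentation or fragmentation just before the data reaches the VM's NIC driver. What the VM finally sends to QEMU is then a series of small packets. In that case, however the host is configured, it has to process many small packets and apply VxLAN encapsulation to each of them.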
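The three cases in this section can be summarized as a small decision function. This is only a model of the prose, not kernel logic; the function name, parameter names, and return strings are hypothetical.

```python
def segmentation_point(guest_tso: bool, inner_is_tcp: bool,
                       host_tso: bool, host_udp_tnl_seg: bool) -> str:
    """Return where a large guest payload gets split into MTU-sized packets."""
    if not (guest_tso and inner_is_tcp):
        # The guest's own stack runs GSO, so only small packets reach QEMU.
        return "guest GSO"
    if host_tso and host_udp_tnl_seg:
        # The NIC segments the inner TCP data, then adds VxLAN headers per segment.
        return "host NIC"
    # The host kernel segments and encapsulates just before the NIC driver.
    return "host GSO"

print(segmentation_point(True, True, True, True))    # host NIC
print(segmentation_point(True, True, True, False))   # host GSO
print(segmentation_point(False, True, True, True))   # guest GSO
```

Only the first combination keeps the per-packet work off the CPU; every other combination leaves segmentation in software, either in the guest or on the host.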

VXLAN hardware offload

The Intel X540 supports VXLAN offload by default:

    tx-udp_tnl-segmentation: on

    static int __devinit ixgbe_probe(struct pci_dev *pdev,
                                     const struct pci_device_id __always_unused *ent)
    {
        ...
    #ifdef HAVE_ENCAP_TSO_OFFLOAD
        netdev->features |= NETIF_F_GSO_UDP_TUNNEL; /* UDP tunnel offload */
    #endif

    static const char netdev_features_strings[NETDEV_FEATURE_COUNT][ETH_GSTRING_LEN] = {
        ...
        [NETIF_F_GSO_UDP_TUNNEL_BIT] = "tx-udp_tnl-segmentation",

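On a NIC that does not support the feature, it is reported as off [fixed], meaning the driver does not allow it to be changed. Note that the first two commands in this session fail for a different reason: lowercase -k only queries features, and changing one requires uppercase -K.

    [root@bogon ~]# ethtool -k enahisic2i0 tx-udp_tnl-segmentation on
    ethtool: bad command line argument(s)
    For more information run ethtool -h
    [root@bogon ~]# ethtool -k enahisic2i3 tx-udp_tnl-segmentation on
    ethtool: bad command line argument(s)
    For more information run ethtool -h
    [root@bogon ~]# ethtool -k enahisic2i0 | grep tx-udp
    tx-udp_tnl-segmentation: off [fixed]
    tx-udp_tnl-csum-segmentation: off [fixed]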

    static const struct net_device_ops vxlan_netdev_ops = {
        .ndo_init            = vxlan_init,
        .ndo_uninit          = vxlan_uninit,
        .ndo_open            = vxlan_open,
        .ndo_stop            = vxlan_stop,
        .ndo_start_xmit      = vxlan_xmit,
        .ndo_get_stats64     = ip_tunnel_get_stats64,
        .ndo_set_rx_mode     = vxlan_set_multicast_list,
        .ndo_change_mtu      = vxlan_change_mtu,
        .ndo_validate_addr   = eth_validate_addr,
        .ndo_set_mac_address = eth_mac_addr,
        .ndo_fdb_add         = vxlan_fdb_add,
        .ndo_fdb_del         = vxlan_fdb_delete,
        .ndo_fdb_dump        = vxlan_fdb_dump,
    };

vxlan_xmit-->vxlan_xmit_one-->vxlan_xmit_skb

    int vxlan_xmit_skb(struct vxlan_sock *vs,
                       struct rtable *rt, struct sk_buff *skb,
                       __be32 src, __be32 dst, __u8 tos, __u8 ttl, __be16 df,
                       __be16 src_port, __be16 dst_port, __be32 vni)
    {
        struct vxlanhdr *vxh;
        struct udphdr *uh;
        int min_headroom;
        int err;

        if (!skb->encapsulation) {
            skb_reset_inner_headers(skb);
            skb->encapsulation = 1;
        }

        min_headroom = LL_RESERVED_SPACE(rt->dst.dev) + rt->dst.header_len
                + VXLAN_HLEN + sizeof(struct iphdr)
                + (vlan_tx_tag_present(skb) ? VLAN_HLEN : 0);

        /* Need space for new headers (invalidates iph ptr) */
        err = skb_cow_head(skb, min_headroom);
        if (unlikely(err))
            return err;

        if (vlan_tx_tag_present(skb)) {
            if (WARN_ON(!__vlan_put_tag(skb,
                                        skb->vlan_proto,
                                        vlan_tx_tag_get(skb))))
                return -ENOMEM;

            skb->vlan_tci = 0;
        }

        vxh = (struct vxlanhdr *) __skb_push(skb, sizeof(*vxh));
        vxh->vx_flags = htonl(VXLAN_FLAGS);
        vxh->vx_vni = vni;

        __skb_push(skb, sizeof(*uh));
        skb_reset_transport_header(skb);
        uh = udp_hdr(skb);

        uh->dest = dst_port;
        uh->source = src_port;

        uh->len = htons(skb->len);
        uh->check = 0;

        err = handle_offloads(skb);
        if (err)
            return err;

        return iptunnel_xmit(rt, skb, src, dst, IPPROTO_UDP, tos, ttl, df,
                             false);
    }
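For readers who want to see the 8-byte header that vxlan_xmit_skb pushes in front of the inner frame, here is a user-space sketch in Python. It follows the layout in RFC 7348 (8 flag bits with the I bit set, 24 reserved bits, a 24-bit VNI, 8 reserved bits). The helper names are hypothetical, and this is an illustration of the wire format rather than a port of the kernel code.

```python
import struct

VXLAN_FLAG_VNI_VALID = 0x08  # the "I" flag defined by RFC 7348

def build_vxlan_header(vni: int) -> bytes:
    """Pack the 8-byte VXLAN header for a 24-bit VNI, in network byte order."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    return struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)

def parse_vni(header: bytes) -> int:
    """Extract the VNI from a VXLAN header, checking that the I flag is set."""
    flags_word, vni_word = struct.unpack("!II", header[:8])
    if not (flags_word >> 24) & VXLAN_FLAG_VNI_VALID:
        raise ValueError("VNI flag not set")
    return vni_word >> 8

hdr = build_vxlan_header(10)
print(hdr.hex())       # 0800000000000a00
print(parse_vni(hdr))  # 10
```

These 8 bytes, together with the outer UDP, IP, and Ethernet headers, make up the 50 bytes of overhead discussed earlier.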

SKB_GSO_UDP_TUNNEL

When a VXLAN device sends data, it sets SKB_GSO_UDP_TUNNEL:

    static int handle_offloads(struct sk_buff *skb)
    {
        if (skb_is_gso(skb)) {
            int err = skb_unclone(skb, GFP_ATOMIC);
            if (unlikely(err))
                return err;

            skb_shinfo(skb)->gso_type |= SKB_GSO_UDP_TUNNEL;
        } else if (skb->ip_summed != CHECKSUM_PARTIAL)
            skb->ip_summed = CHECKSUM_NONE;

        return 0;
    }

Note that this feature is only meaningful when the inner packet is TCP. As discussed earlier, ixgbe does not support UFO, so a UDP packet ends up going through software GSO when it is pushed to the physical NIC (dev_hard_start_xmit).

    static struct sk_buff *udp4_ufo_fragment(struct sk_buff *skb,
                                             netdev_features_t features)
    {
        struct sk_buff *segs = ERR_PTR(-EINVAL);
        unsigned int mss;
        __wsum csum;
        struct udphdr *uh;
        struct iphdr *iph;

        if (skb->encapsulation &&
            (skb_shinfo(skb)->gso_type &
             (SKB_GSO_UDP_TUNNEL|SKB_GSO_UDP_TUNNEL_CSUM))) {
            segs = skb_udp_tunnel_segment(skb, features, false); /* perform segmentation */
            goto out;
        }
        ...
    }

Testing

VXLAN OFFLOAD

It’s been a while since I last posted due to work and life in general. I’ve been working on several NFV projects and thought I’d share some recent testing that I’ve been doing…so here we go :)

Let me offload that for ya!

In a multi-tenancy environment (OpenStack, Docker, LXC, etc), VXLAN solves the limitation of 4094 VLANs/networks, but introduces a few caveats:

- Performance impact on the data path due to encapsulation overhead

- Inability to apply hardware offload capabilities to the inner packet

These extra packet processing workloads are handled by the host operating system in software, which can result in increased overall CPU utilization and reduced packet throughput.

Network interface cards like the Intel X710 and Emulex OneConnect can relieve some of this CPU load by processing the workload in the physical NIC.

Below is a simple lab setup to test VXLAN offload data path with offload hardware. The main focus is to compare the effect of VXLAN offloading and how it performs directly over a physical or bridge interface.

Test Configuration

The lab topology consists of the following:

- Nexus 9372 (tenant leaf) w/ jumbo frames enabled

- Cisco UCS 220 M4S (client)

- Cisco UCS 240 M4X (server)

- Emulex OneConnect NICs

Specs:

Lab topology

Four types of traffic flows have been used to compare the impact of Emulex’s VXLAN offload when the feature has been enabled or disabled:

ethtool -K <eth0/eth1> tx-udp_tnl-segmentation <on/off>

Tools

Netperf was used to generate TCP traffic between client and server. It is a light user-level process that is widely used for networking measurement. The tool consists of two binaries:

- netperf - user-level process that connects to the server and generates traffic

- netserver - user-level process that listens and accepts connection requests

**MTU considerations:** VXLAN tunneling adds 50 bytes (14-eth + 20-ip + 8-udp + 8-vxlan) to the VM Ethernet frame. You should make sure that the MTU of the NIC that sends the packets takes into account the tunneling overhead (the configuration below shows the MTU adjustment).
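The MTU adjustment can be derived from the header breakdown in this note. The sketch below assumes a 9000-byte jumbo MTU on the physical network, which is an assumption (the note only says jumbo frames are enabled); the dictionary and function names are illustrative.

```python
# Header sizes in bytes, as listed in the MTU note.
VXLAN_OVERHEAD = {"eth": 14, "ip": 20, "udp": 8, "vxlan": 8}

def inner_mtu(underlay_mtu: int) -> int:
    """MTU to configure on the tenant-side interfaces for a given underlay MTU."""
    return underlay_mtu - sum(VXLAN_OVERHEAD.values())

print(sum(VXLAN_OVERHEAD.values()))  # 50
print(inner_mtu(9000))               # 8950
```

Under that assumption the result matches the 8950 used for the veth interfaces in the configuration that follows.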

Client Configuration

# Update system

yum update -y

# Install and start OpenvSwitch

yum install -y openvswitch

service openvswitch start

# Create bridge

ovs-vsctl add-br br-int

# Create VXLAN interface and set destination VTEP

ovs-vsctl add-port br-int vxlan0 -- set interface vxlan0 type=vxlan options:remote_ip=<server ip> options:key=10 options:dst_port=4789

# Create tenant namespaces

ip netns add tenant1

# Create veth pairs

ip link add host-veth0 type veth peer name host-veth1

ip link add tenant1-veth0 type veth peer name tenant1-veth1

# Link primary veth interfaces to namespaces

ip link set tenant1-veth0 netns tenant1

# Add IP addresses

ip a add dev host-veth0 192.168.0.10/24

ip netns exec tenant1 ip a add dev tenant1-veth0 192.168.10.10/24

# Bring up loopback interfaces

ip netns exec tenant1 ip link set dev lo up

# Set MTU to account for VXLAN overhead

ip link set dev host-veth0 mtu 8950

ip netns exec tenant1 ip link set dev tenant1-veth0 mtu 8950

# Bring up veth interfaces

ip link set dev host-veth0 up

ip netns exec tenant1 ip link set dev tenant1-veth0 up

# Bring up host interfaces and set MTU

ip link set dev host-veth1 up

ip link set dev host-veth1 mtu 8950

ip link set dev tenant1-veth1 up

ip link set dev tenant1-veth1 mtu 8950

# Attach ports to OpenvSwitch

ovs-vsctl add-port br-int host-veth1

ovs-vsctl add-port br-int tenant1-veth1

# Enable VXLAN offload

ethtool -K eth0 tx-udp_tnl-segmentation on

ethtool -K eth1 tx-udp_tnl-segmentation on

Server Configuration

# Update system

yum update -y

# Install and start OpenvSwitch

yum install -y openvswitch

service openvswitch start

# Create bridge

ovs-vsctl add-br br-int

# Create VXLAN interface and set destination VTEP

ovs-vsctl add-port br-int vxlan0 -- set interface vxlan0 type=vxlan options:remote_ip=<client ip> options:key=10 options:dst_port=4789

# Create tenant namespaces

ip netns add tenant1

# Create veth pairs

ip link add host-veth0 type veth peer name host-veth1

ip link add tenant1-veth0 type veth peer name tenant1-veth1

# Link primary veth interfaces to namespaces

ip link set tenant1-veth0 netns tenant1

# Add IP addresses

ip a add dev host-veth0 192.168.0.20/24

ip netns exec tenant1 ip a add dev tenant1-veth0 192.168.10.20/24

# Bring up loopback interfaces

ip netns exec tenant1 ip link set dev lo up

# Set MTU to account for VXLAN overhead

ip link set dev host-veth0 mtu 8950

ip netns exec tenant1 ip link set dev tenant1-veth0 mtu 8950

# Bring up veth interfaces

ip link set dev host-veth0 up

ip netns exec tenant1 ip link set dev tenant1-veth0 up

# Bring up host interfaces and set MTU

ip link set dev host-veth1 up

ip link set dev host-veth1 mtu 8950

ip link set dev tenant1-veth1 up

ip link set dev tenant1-veth1 mtu 8950

# Attach ports to OpenvSwitch

ovs-vsctl add-port br-int host-veth1

ovs-vsctl add-port br-int tenant1-veth1

# Enable VXLAN offload

ethtool -K eth0 tx-udp_tnl-segmentation on

ethtool -K eth1 tx-udp_tnl-segmentation on

Offload verification

[root@client ~]# dmesg | grep VxLAN

[ 6829.318535] be2net 0000:05:00.0: Enabled VxLAN offloads for UDP port 4789

[ 6829.324162] be2net 0000:05:00.1: Enabled VxLAN offloads for UDP port 4789

[ 6829.329787] be2net 0000:05:00.2: Enabled VxLAN offloads for UDP port 4789

[ 6829.335418] be2net 0000:05:00.3: Enabled VxLAN offloads for UDP port 4789

[root@client ~]# ethtool -k eth0 | grep tx-udp

tx-udp_tnl-segmentation: on

[root@server ~]# dmesg | grep VxLAN

[ 6829.318535] be2net 0000:05:00.0: Enabled VxLAN offloads for UDP port 4789

[ 6829.324162] be2net 0000:05:00.1: Enabled VxLAN offloads for UDP port 4789

[ 6829.329787] be2net 0000:05:00.2: Enabled VxLAN offloads for UDP port 4789

[ 6829.335418] be2net 0000:05:00.3: Enabled VxLAN offloads for UDP port 4789

[root@server ~]# ethtool -k eth0 | grep tx-udp

tx-udp_tnl-segmentation: on
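When this check has to be repeated across many hosts, the `ethtool -k` text can be parsed instead of read by eye. The following is a minimal sketch that works on captured output; the function name is hypothetical, and the sample text is the `off [fixed]` output shown earlier in this article rather than output from the Emulex NICs.

```python
def parse_features(ethtool_output: str) -> dict:
    """Map feature name -> (enabled, fixed) from `ethtool -k` style text."""
    features = {}
    for line in ethtool_output.splitlines():
        name, sep, value = line.strip().partition(":")
        if not sep or not value.strip():
            continue  # skip blank lines and headers such as "Features for eth0:"
        tokens = value.split()
        if tokens[0] in ("on", "off"):
            features[name] = (tokens[0] == "on", "[fixed]" in tokens)
    return features

sample = """\
Features for enahisic2i0:
tx-udp_tnl-segmentation: off [fixed]
tx-udp_tnl-csum-segmentation: off [fixed]
"""
enabled, fixed = parse_features(sample)["tx-udp_tnl-segmentation"]
print(enabled, fixed)  # False True
```

On a live host the input could come from `subprocess.run(["ethtool", "-k", "eth0"], capture_output=True, text=True).stdout`. A feature that is both off and fixed cannot be turned on with `ethtool -K`.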

Testing

As stated before, Netperf was used for getting the throughput and the CPU utilization for the server and the client side. The test was run over the bridged interface in the Tenant1 namespace with VXLAN Offload off and Offload on.

Copies of the netperf scripts can be found here:

TCP stream testing UDP stream testing

[Figure: Throughput]

[Figure: % CPU Utilization (Server side)]

[Figure: % CPU Utilization (Client side)]

I conducted several TCP stream tests and saw the following results with different buffer/socket sizes:

[Figure: Socket size of 128K (sender and receiver)]

[Figure: Socket size of 32K (sender and receiver)]

[Figure: Socket size of 4K (sender and receiver)]

NETPERF Raw Results:

Offload Off:

MIGRATED TCP STREAM TEST from 0.0.0.0 (0.0.0.0) port 0 AF_INET to 192.168.10.20 () port 0 AF_INET : +/-2.500% @ 99% conf.

Every run printed the same netperf warning: "Desired confidence was not achieved within the specified iterations. This implies that there was variability in the test environment that must be investigated before going further." The confidence intervals reported with each warning are listed in the last three columns.

| Recv socket size (bytes) | Send socket size (bytes) | Send message size (bytes) | Elapsed time (secs.) | Throughput (10^6 bits/s) | Utilization send local (% S) | Utilization recv remote (% S) | Service demand send local (us/KB) | Service demand recv remote (us/KB) | Conf. interval: throughput | Conf. interval: local CPU util | Conf. interval: remote CPU util |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 87380 | 16384 | 4096 | 10.00 | 9591.78 | 1.18 | 0.93 | 0.483 | 0.383 | 6.663% | 14.049% | 13.944% |
| 87380 | 16384 | 8192 | 10.00 | 9200.11 | 0.94 | 0.90 | 0.402 | 0.386 | 4.763% | 7.529% | 10.146% |
| 87380 | 16384 | 32768 | 10.00 | 9590.11 | 0.65 | 0.90 | 0.268 | 0.367 | 4.469% | 8.006% | 8.229% |
| 87380 | 16384 | 16384 | 10.00 | 9412.99 | 0.76 | 0.85 | 0.316 | 0.357 | 7.053% | 12.213% | 13.209% |
| 87380 | 16384 | 65536 | 10.00 | 9106.93 | 0.59 | 0.85 | 0.253 | 0.369 | 1.537% | 12.137% | 15.495% |

Offload ON:

MIGRATED TCP STREAM TEST from 0.0.0.0 (0.0.0.0) port 0 AF_INET to 192.168.10.20 () port 0 AF_INET : +/-2.500% @ 99% conf.

Every run printed the same netperf warning: "Desired confidence was not achieved within the specified iterations. This implies that there was variability in the test environment that must be investigated before going further." The confidence intervals reported with each warning are listed in the last three columns.

| Recv socket size (bytes) | Send socket size (bytes) | Send message size (bytes) | Elapsed time (secs.) | Throughput (10^6 bits/s) | Utilization send local (% S) | Utilization recv remote (% S) | Service demand send local (us/KB) | Service demand recv remote (us/KB) | Conf. interval: throughput | Conf. interval: local CPU util | Conf. interval: remote CPU util |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 87380 | 16384 | 4096 | 10.00 | 9632.98 | 1.08 | 0.91 | 0.440 | 0.371 | 5.995% | 8.044% | 7.965% |
| 87380 | 16384 | 8192 | 10.00 | 9837.25 | 0.91 | 0.91 | 0.362 | 0.363 | 0.031% | 6.747% | 5.451% |
| 87380 | 16384 | 16384 | 10.00 | 9837.17 | 0.65 | 0.89 | 0.261 | 0.354 | 0.099% | 7.835% | 13.783% |
| 87380 | 16384 | 32768 | 10.00 | 9834.57 | 0.53 | 0.88 | 0.212 | 0.353 | 0.092% | 7.445% | 8.866% |
| 87380 | 16384 | 65536 | 10.00 | 9465.12 | 0.52 | 0.90 | 0.214 | 0.375 | 5.255% | 7.245% | 8.528% |
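The raw runs are easier to compare once averaged. The sketch below copies the message sizes and throughput figures from the netperf output in this section and computes the mean for each mode; the variable and function names are illustrative. Because netperf warned on every run that the desired confidence was not reached, the difference should be read as indicative only.

```python
from statistics import mean

# (send message size in bytes, throughput in 10^6 bits/s), copied from the raw results
offload_off = [(4096, 9591.78), (8192, 9200.11), (32768, 9590.11),
               (16384, 9412.99), (65536, 9106.93)]
offload_on = [(4096, 9632.98), (8192, 9837.25), (16384, 9837.17),
              (32768, 9834.57), (65536, 9465.12)]

def mean_throughput(runs):
    """Average throughput across runs, ignoring message size."""
    return mean(throughput for _, throughput in runs)

off_avg = mean_throughput(offload_off)
on_avg = mean_throughput(offload_on)
print(f"offload off: {off_avg:.2f} x 10^6 bits/s")
print(f"offload on:  {on_avg:.2f} x 10^6 bits/s")
print(f"difference:  {100 * (on_avg - off_avg) / off_avg:.1f}%")
```

Throughput is close to line rate in both modes, so the CPU utilization and service demand columns are the more telling comparison for this setup.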

Test results

Line rate speeds were achieved in almost all traffic flow type tests with the exception of VXLAN over bridge. For the VXLAN over physical flow test, the CPU utilization was much the same as in the baseline physical flow test, as no encapsulation took place. When offload was disabled, the CPU usage increased by over 50%.

Given that Netperf is single-threaded, and forwarding pins to one CPU core only, the CPU utilization shown was extrapolated from what the tool reported, which was N*8 cores. This also showed how throughput was affected by CPU resources, as seen in the VXLAN over bridge test. Also, reducing the socket size produced higher CPU utilization with offload on, due to the smaller packets and the additional overhead of handling them.

These tests were completed with the standard supported kernel in RHEL 7.1. There have been added networking improvements in the 4.x kernel that in separate testing increased performance by over 3x, although existing results are very promising.

Overall, VXLAN offloading will be useful in getting past specific network limitations and achieving scalable east-west expansions.

Code

https://github.com/therandomsecurityguy/benchmarking-tools/tree/main/netperf

Packet fragmentation and segmentation offload in UDP and VXLAN

https://hustcat.github.io/udp-and-vxlan-fragment/

Network segmentation offload techniques in Linux: GSO/TSO/UFO/LRO/GRO

https://rtoax.blog.csdn.net/article/details/108748689

A brief look at common network acceleration techniques (part 2)

https://zhuanlan.zhihu.com/p/44683790