mlx5 dpdk ovs offload

https://github.com/Mellanox/OVS/blob/master/Documentation/topics/dpdk/phy.rst

https://developer.nvidia.com/zh-cn/blog/improving-performance-for-nfv-infrastructure-and-agile-cloud-data-centers/

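At Red Hat Summit 2018, NVIDIA Mellanox announced an open network functions virtualization infrastructure (NFVI) and cloud data center solution. The solution combines Red Hat Enterprise Linux cloud software with in-box support for NVIDIA Mellanox NIC hardware. Close collaboration and joint validation with Red Hat produced a fully integrated solution that delivers high performance and efficiency and is easy to deploy. It includes open source datapath acceleration technologies: the Data Plane Development Kit (DPDK) and Open vSwitch (OvS) acceleration.

Private cloud and communication service providers are transforming their infrastructure to achieve the agility and efficiency of hyperscale public cloud providers. This transformation rests on two fundamental principles: disaggregation and virtualization.

Disaggregation decouples network software from the underlying hardware. Server and network virtualization improve efficiency by sharing industry-standard servers and network equipment through hypervisors and overlay networks. These disruptive capabilities bring benefits such as flexibility, agility, and software programmability. However, they also impose a severe network performance penalty, because kernel-based hypervisors and virtual switching both consume host CPU cycles inefficiently for network packet processing. Over-provisioning CPU cores to compensate for the degraded network performance raises capital expenditure and defeats the goal of gaining hardware efficiency through server virtualization.

To address these challenges, Red Hat and NVIDIA Mellanox brought to market a highly efficient, hardware-accelerated, tightly integrated NFVI and cloud data center solution. It combines the Red Hat Enterprise Linux operating system with NVIDIA Mellanox ConnectX-5 network adapters running DPDK and the Accelerated Switching and Packet Processing (ASAP²) OvS offload technology.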

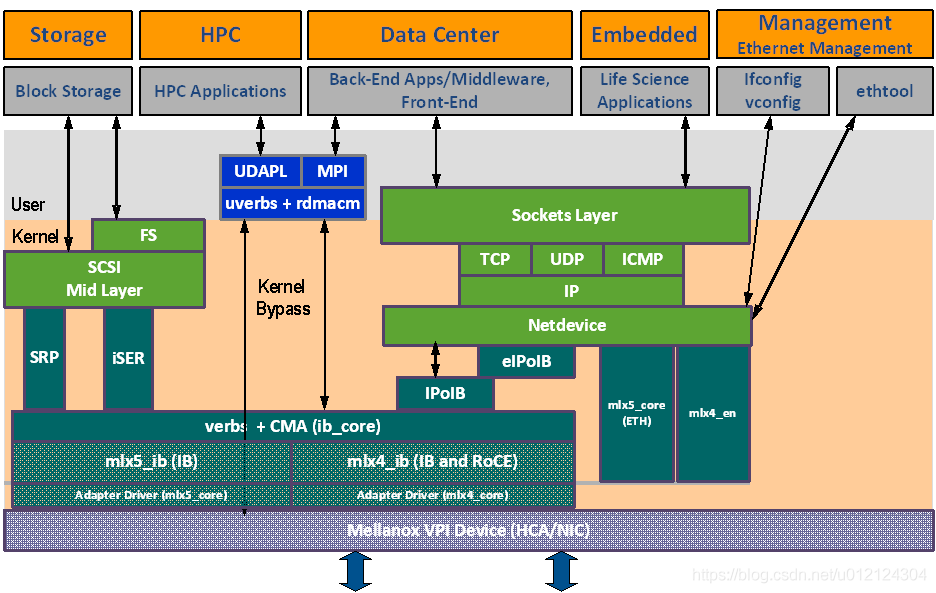

Architecture of the Mellanox OFED stack

mlx4 VPI Driver

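ConnectX®-3 can operate as an InfiniBand (IB) adapter or as an Ethernet NIC. mlx4 is the low-level driver implementation for the ConnectX® family of adapters. The OFED driver supports both IB and Ethernet configurations; to accommodate them, the driver is split into the following modules:

mlx4_core: handles low-level functions such as device initialization and firmware handling. It also controls resource allocation so that the IB and Ethernet functions can share the device without interfering with each other.

mlx4_ib: handles IB-specific functions and plugs into the IB mid-layer.

mlx4_en: a 10/40GigE driver under drivers/net/ethernet/mellanox/mlx4 that handles Ethernet-specific functions.

libmlx4 is a userspace driver for Mellanox ConnectX InfiniBand HCAs. It is a plug-in module for libibverbs that allows programs to use Mellanox hardware directly from userspace.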

mlx5 Driver

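mlx5 is the low-level driver implementation for the Connect-IB® and ConnectX®-4 adapters. Connect-IB operates as an IB adapter, while ConnectX-4 operates as a VPI adapter (IB and Ethernet). mlx5 includes the following kernel modules:

mlx5_core: acts as a library of common functions (for example, initializing the device after reset) required by the Connect-IB® and ConnectX®-4 adapter cards. mlx5_core also implements the Ethernet interfaces for ConnectX®-4. Unlike mlx4_en/core, the mlx5 driver does not need a separate mlx5_en module, because the Ethernet functionality is built into mlx5_core.

mlx5_ib: handles IB-specific functions.

libmlx5: implements the hardware-specific user-space functionality. If the firmware and the driver are incompatible, the driver does not load and prints a message to dmesg. libmlx5 recognizes the following environment variables: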

MLX5_FREEZE_ON_ERROR_CQE

MLX5_POST_SEND_PREFER_BF

MLX5_SHUT_UP_BF

MLX5_SINGLE_THREADED

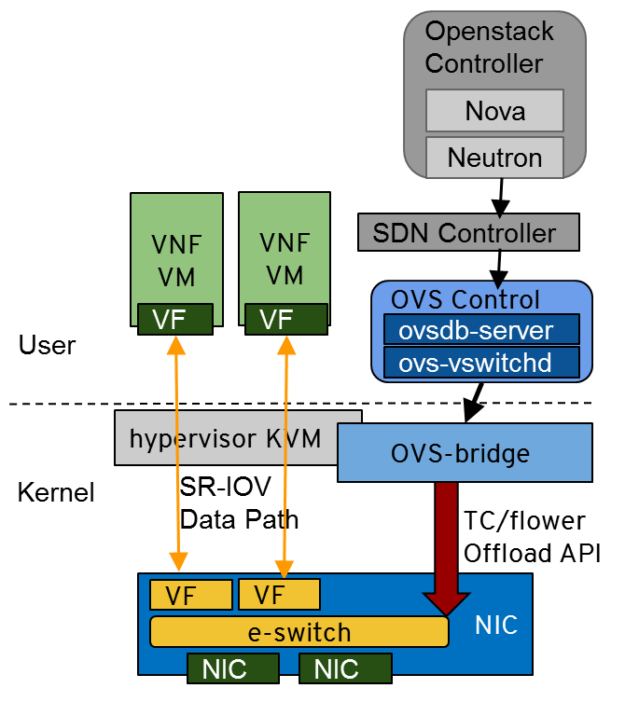

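ASAP² OvS offload acceleration

The OvS hardware offload solution improves the slow packet performance of software-based virtual switches by an order of magnitude. In essence, OvS hardware offload offers the best of both worlds: hardware acceleration of the data path together with an unmodified OvS control path, which preserves the flexibility and programmability of match-action rules. NVIDIA Mellanox pioneered this technology and has led the open architecture work needed to support it in the OvS, Linux kernel, DPDK, and OpenStack open source communities.

Figure 1. ASAP² OvS offload solution.

Figure 1 shows the NVIDIA Mellanox open ASAP² OvS offload technology. It fully and transparently offloads virtual switch and router datapath processing to the NIC embedded switch (e-switch). NVIDIA Mellanox contributed to the upstream development of the core frameworks and APIs, such as TC Flower, so that they are available in the Linux kernel and in OvS releases. These APIs greatly accelerate network functions such as overlays, switching, routing, security, and load balancing.

As confirmed by performance tests conducted in Red Hat labs, NVIDIA Mellanox ASAP² technology delivers near 100 Gb/s line-rate throughput for large Virtual Extensible LAN (VXLAN) packets without consuming any CPU cycles. For small packets, ASAP² raises the OvS VXLAN packet rate by roughly 10x, from 5 million packets per second using 12 CPU cores to 55 million packets per second using zero CPU cores.

Cloud and communication service providers and enterprises can achieve overall infrastructure efficiency with an ASAP²-based high-performance solution while freeing CPU cores to pack more virtual network functions (VNFs) and cloud-native applications onto the same server. This helps reduce server footprint and yields substantial capital expenditure savings. ASAP² has been available as a technology preview since OSP 13 and RHEL 7.5, and generally available since OSP 16.1 and RHEL 8.2.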

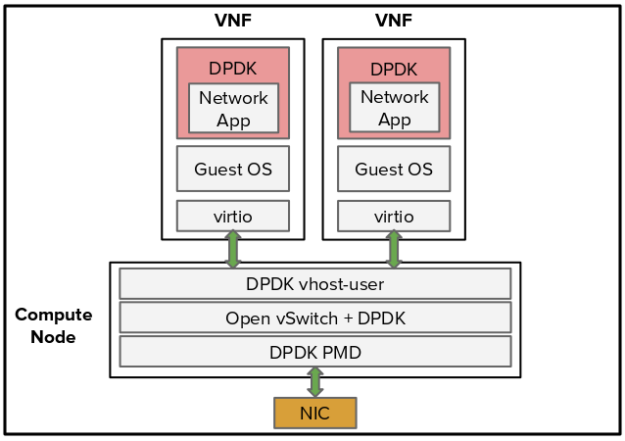

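OVS-DPDK acceleration

If you want to keep the existing, slower OvS virtio data path but still need some acceleration, you can use the NVIDIA Mellanox DPDK solution to improve OvS performance. Figure 2 shows the OvS over DPDK solution, which uses the DPDK software libraries and poll mode driver (PMD) to raise the packet rate substantially at the cost of consuming CPU cores.

Figure 2. OVS-DPDK solution diagram.

Using open source DPDK technology, the NVIDIA Mellanox ConnectX-5 NIC delivers the industry's best bare-metal packet rate, 139 million packets per second, for running OvS, VNFs, or cloud applications over DPDK. It is fully supported by Red Hat in RHEL 7.5.

Network architects often face many options when choosing the technology best suited to their IT infrastructure needs. When deciding between ASAP² and DPDK, the decision is made easier by the substantial advantages of ASAP² over DPDK.

Thanks to its SR-IOV data path, ASAP² OvS offload achieves significantly higher performance than DPDK, which uses the traditional, slower virtio data path. Furthermore, ASAP² saves CPU cores by offloading flows to the NIC, whereas DPDK consumes CPU cores to process packets in a suboptimal way. Like DPDK, ASAP² OvS offload is an open source technology that is fully supported in the open source community and widely adopted in the industry.

For more information, see the following resources:

- Mellanox ConnectX-5 NIC

- DPDK with Mellanox (video)

- Accelerated Switching and Packet Processing (video)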

https://isrc.iscas.ac.cn/gitlab/mirrors/git.yoctoproject.org/linux-yocto/commit/f8e8fa0262eaf544490a11746c524333158ef0b6

drivers/infiniband/hw/mlx5/ib_rep.c

Kernel: https://elixir.bootlin.com/linux/latest/source/drivers/net/ethernet/mellanox/mlx5/core/eswitch.c

drivers/net/ethernet/mellanox/mlx5/core/en_tc.c:2664: const struct flow_action_entry *act;


dpdk

mlx5_pci_probe mlx5_init_once static int if (mlx5_init_shared_data())

Kernel: mlx5_init_once

kernel

Issuing commands

    err = parse_tc_fdb_actions(priv, &rule->action, flow, extack);
    err = mlx5e_tc_add_fdb_flow(priv, flow, extack);

    static int parse_tc_fdb_actions(struct mlx5e_priv *priv,
                                    struct flow_action *flow_action,
                                    struct mlx5e_tc_flow *flow,
                                    struct netlink_ext_ack *extack)
    {
        case FLOW_ACTION_VLAN_PUSH:
        case FLOW_ACTION_VLAN_POP:
            if (act->id == FLOW_ACTION_VLAN_PUSH &&
                (action & MLX5_FLOW_CONTEXT_ACTION_VLAN_POP)) {
                /* Replace vlan pop+push with vlan modify */
                action &= ~MLX5_FLOW_CONTEXT_ACTION_VLAN_POP;
                err = add_vlan_rewrite_action(priv,
                                              MLX5_FLOW_NAMESPACE_FDB,
                                              act, parse_attr, hdrs,
                                              &action, extack);
            } else {
                err = parse_tc_vlan_action(priv, act, attr, &action);
            }
            if (err)
                return err;
            attr->split_count = attr->out_count;
            break;
    }

    mlx5e_tc_add_fdb_flow
    {
        struct mlx5_esw_flow_attr *attr = flow->esw_attr;
        err = mlx5_eswitch_add_vlan_action(esw, attr);
        flow_flag_set(flow, OFFLOADED);
    }

    flow_flag_set(flow, OFFLOADED);
    MLX5E_TC_FLOW_FLAG_OFFLOADED

    static bool mlx5e_is_offloaded_flow(struct mlx5e_tc_flow *flow)
    {
        return flow_flag_test(flow, OFFLOADED);
    }

    int mlx5_eswitch_add_vlan_action(struct mlx5_eswitch *esw,
                                     struct mlx5_esw_flow_attr *attr)
    {
        struct offloads_fdb *offloads = &esw->fdb_table.offloads;
        struct mlx5_eswitch_rep *vport = NULL;
        bool push, pop, fwd;
        int err = 0;

        /* nop if we're on the vlan push/pop non emulation mode */
        if (mlx5_eswitch_vlan_actions_supported(esw->dev, 1))
            return 0;

        vport = esw_vlan_action_get_vport(attr, push, pop);

        if (push) {
            if (vport->vlan_refcount)
                goto skip_set_push;

            err = __mlx5_eswitch_set_vport_vlan(esw, vport->vport,
                                                attr->vlan_vid[0], 0,
                                                SET_VLAN_INSERT | SET_VLAN_STRIP);
            if (err)
                goto out;
            vport->vlan = attr->vlan_vid[0];
    skip_set_push:
            vport->vlan_refcount++;
        }
    }

mlx5_eswitch_add_vlan_action --> __mlx5_eswitch_set_vport_vlan --> modify_esw_vport_cvlan --> MLX5_SET --> modify_esw_vport_context_cmd --> mlx5_cmd_exec --> mlx5_cmd_invoke

    int mlx5_cmd_exec(struct mlx5_core_dev *dev, void *in, int in_size,
                      void *out, int out_size)
    {
        int err;

        err = cmd_exec(dev, in, in_size, out, out_size, NULL, NULL, false);
        return err ? : mlx5_cmd_check(dev, in, out);
    }
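The `parse_tc_fdb_actions` excerpt folds a VLAN pop followed by a VLAN push into a single rewrite. A minimal sketch of that flag manipulation is given here, assuming made-up action bits (`ACT_VLAN_POP`, `ACT_VLAN_PUSH`, `ACT_VLAN_MODIFY`) and a hypothetical helper `add_vlan_op`; the real driver works on `MLX5_FLOW_CONTEXT_ACTION_*` bits and builds a header-rewrite action rather than setting one flag.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical action bits standing in for MLX5_FLOW_CONTEXT_ACTION_*. */
enum {
    ACT_VLAN_POP    = 1 << 0,
    ACT_VLAN_PUSH   = 1 << 1,
    ACT_VLAN_MODIFY = 1 << 2, /* stands in for the header-rewrite action */
};

enum vlan_op { OP_VLAN_POP, OP_VLAN_PUSH };

/* Fold one more VLAN operation into the accumulated action mask. */
uint32_t add_vlan_op(uint32_t action, enum vlan_op op)
{
    assert(op == OP_VLAN_POP || op == OP_VLAN_PUSH);

    if (op == OP_VLAN_PUSH && (action & ACT_VLAN_POP)) {
        /* pop followed by push: rewrite the tag in place instead */
        action &= ~(uint32_t)ACT_VLAN_POP;
        return action | ACT_VLAN_MODIFY;
    }
    return action | (op == OP_VLAN_PUSH ? ACT_VLAN_PUSH : ACT_VLAN_POP);
}
```

Calling `add_vlan_op(add_vlan_op(0, OP_VLAN_POP), OP_VLAN_PUSH)` leaves only the modify bit set, which mirrors the "Replace vlan pop+push with vlan modify" comment in the kernel code.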

    static int query_esw_vport_context_cmd(struct mlx5_core_dev *dev, u16 vport,
                                           void *out, int outlen)
    {
        u32 in[MLX5_ST_SZ_DW(query_esw_vport_context_in)] = {};

        MLX5_SET(query_esw_vport_context_in, in, opcode,
                 MLX5_CMD_OP_QUERY_ESW_VPORT_CONTEXT);
        MLX5_SET(modify_esw_vport_context_in, in, vport_number, vport);
        MLX5_SET(modify_esw_vport_context_in, in, other_vport, 1);
        return mlx5_cmd_exec(dev, in, sizeof(in), out, outlen);
    }

dpdk

flow_list_create-->

flow_drv_translate-->fops->translate

__flow_dv_translate --> flow_dv_matcher_register

cache_matcher->matcher_object = mlx5_glue->dv_create_flow_matcher(sh->ctx, &dv_attr, tbl->obj);

    static inline int
    flow_drv_translate(struct rte_eth_dev *dev, struct mlx5_flow *dev_flow,
                       const struct rte_flow_attr *attr,
                       const struct rte_flow_item items[],
                       const struct rte_flow_action actions[],
                       struct rte_flow_error *error)
    {
        const struct mlx5_flow_driver_ops *fops;
        enum mlx5_flow_drv_type type = dev_flow->flow->drv_type;

        assert(type > MLX5_FLOW_TYPE_MIN && type < MLX5_FLOW_TYPE_MAX);
        fops = flow_get_drv_ops(type);
        return fops->translate(dev, dev_flow, attr, items, actions, error);
    }

    const struct mlx5_flow_driver_ops mlx5_flow_dv_drv_ops = {
        .validate = flow_dv_validate,
        .prepare = flow_dv_prepare,
        .translate = flow_dv_translate,
        .apply = flow_dv_apply,
        .remove = flow_dv_remove,
        .destroy = flow_dv_destroy,
        .query = flow_dv_query,
        .create_mtr_tbls = flow_dv_create_mtr_tbl,
        .destroy_mtr_tbls = flow_dv_destroy_mtr_tbl,
        .create_policer_rules = flow_dv_create_policer_rules,
        .destroy_policer_rules = flow_dv_destroy_policer_rules,
        .counter_alloc = flow_dv_counter_allocate,
        .counter_free = flow_dv_counter_free,
        .counter_query = flow_dv_counter_query,
    };
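`flow_drv_translate` picks an ops structure by driver type and calls through a function pointer. The dispatch pattern can be sketched in isolation as follows; every name here (`drv_type`, `drv_ops`, `ops_table`, `drv_translate`) is invented for illustration and is not the PMD's actual layout.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical driver types, mirroring the idea of MLX5_FLOW_TYPE_*. */
enum drv_type { DRV_MIN, DRV_DV, DRV_VERBS, DRV_MAX };

struct flow {
    enum drv_type type;
    int translated_by; /* records which backend handled the flow */
};

struct drv_ops {
    int (*translate)(struct flow *f);
};

static int dv_translate(struct flow *f)
{
    f->translated_by = DRV_DV;
    return 0;
}

static int verbs_translate(struct flow *f)
{
    f->translated_by = DRV_VERBS;
    return 0;
}

static const struct drv_ops dv_ops = { .translate = dv_translate };
static const struct drv_ops verbs_ops = { .translate = verbs_translate };

/* One slot per driver type; the DRV_MIN slot stays NULL. */
static const struct drv_ops *const ops_table[DRV_MAX] = {
    [DRV_DV] = &dv_ops,
    [DRV_VERBS] = &verbs_ops,
};

/* Look up the backend for this flow and forward the call. */
int drv_translate(struct flow *f)
{
    assert(f->type > DRV_MIN && f->type < DRV_MAX);
    return ops_table[f->type]->translate(f);
}
```

The generic layer never names a backend directly, so adding another flow engine only means adding one more table entry.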

query_nic_vport

    enum {
        MLX5_GET_HCA_CAP_OP_MOD_GENERAL_DEVICE = 0x0 << 1,
        MLX5_GET_HCA_CAP_OP_MOD_ETHERNET_OFFLOAD_CAPS = 0x1 << 1,
        MLX5_GET_HCA_CAP_OP_MOD_QOS_CAP = 0xc << 1,
    };

    enum {
        MLX5_HCA_CAP_OPMOD_GET_MAX = 0,
        MLX5_HCA_CAP_OPMOD_GET_CUR = 1,
    };

    enum {
        MLX5_CAP_INLINE_MODE_L2,
        MLX5_CAP_INLINE_MODE_VPORT_CONTEXT,
        MLX5_CAP_INLINE_MODE_NOT_REQUIRED,
    };

    enum {
        MLX5_INLINE_MODE_NONE,
        MLX5_INLINE_MODE_L2,
        MLX5_INLINE_MODE_IP,
        MLX5_INLINE_MODE_TCP_UDP,
        MLX5_INLINE_MODE_RESERVED4,
        MLX5_INLINE_MODE_INNER_L2,
        MLX5_INLINE_MODE_INNER_IP,
        MLX5_INLINE_MODE_INNER_TCP_UDP,
    };

    /* HCA bit masks indicating which Flex parser protocols are already enabled. */
    #define MLX5_HCA_FLEX_IPV4_OVER_VXLAN_ENABLED (1UL << 0)
    #define MLX5_HCA_FLEX_IPV6_OVER_VXLAN_ENABLED (1UL << 1)
    #define MLX5_HCA_FLEX_IPV6_OVER_IP_ENABLED (1UL << 2)
    #define MLX5_HCA_FLEX_GENEVE_ENABLED (1UL << 3)
    #define MLX5_HCA_FLEX_CW_MPLS_OVER_GRE_ENABLED (1UL << 4)
    #define MLX5_HCA_FLEX_CW_MPLS_OVER_UDP_ENABLED (1UL << 5)
    #define MLX5_HCA_FLEX_P_BIT_VXLAN_GPE_ENABLED (1UL << 6)
    #define MLX5_HCA_FLEX_VXLAN_GPE_ENABLED (1UL << 7)

mlx5_dev_spawn --> mlx5_devx_cmd_query_hca_attr (MLX5_GET_HCA_CAP_OP_MOD_GENERAL_DEVICE)

    mlx5_devx_cmd_query_hca_attr(struct ibv_context *ctx,
                                 struct mlx5_hca_attr *attr)
    {
        uint32_t in[MLX5_ST_SZ_DW(query_hca_cap_in)] = {0};
        uint32_t out[MLX5_ST_SZ_DW(query_hca_cap_out)] = {0};
        void *hcattr;
        int status, syndrome, rc;

        MLX5_SET(query_hca_cap_in, in, opcode, MLX5_CMD_OP_QUERY_HCA_CAP);
        MLX5_SET(query_hca_cap_in, in, op_mod,
                 MLX5_GET_HCA_CAP_OP_MOD_GENERAL_DEVICE |
                 MLX5_HCA_CAP_OPMOD_GET_CUR);
        rc = mlx5_glue->devx_general_cmd(ctx, in, sizeof(in), out, sizeof(out));
    }

    .devx_general_cmd = mlx5_glue_devx_general_cmd

    mlx5_glue_devx_general_cmd(struct ibv_context *ctx,
                               const void *in, size_t inlen,
                               void *out, size_t outlen)
    {
    #ifdef HAVE_IBV_DEVX_OBJ
        return mlx5dv_devx_general_cmd(ctx, in, inlen, out, outlen);
    #else
        (void)ctx;
        (void)in;
        (void)inlen;
        (void)out;
        (void)outlen;
        return -ENOTSUP;
    #endif
    }

    static int
    mlx5_devx_cmd_query_nic_vport_context(struct ibv_context *ctx,
                                          unsigned int vport,
                                          struct mlx5_hca_attr *attr)
    {
        uint32_t in[MLX5_ST_SZ_DW(query_nic_vport_context_in)] = {0};
        uint32_t out[MLX5_ST_SZ_DW(query_nic_vport_context_out)] = {0};
        void *vctx;
        int status, syndrome, rc;

        /* Query NIC vport context to determine inline mode. */
        MLX5_SET(query_nic_vport_context_in, in, opcode,
                 MLX5_CMD_OP_QUERY_NIC_VPORT_CONTEXT);
        MLX5_SET(query_nic_vport_context_in, in, vport_number, vport);
        if (vport)
            MLX5_SET(query_nic_vport_context_in, in, other_vport, 1);
        rc = mlx5_glue->devx_general_cmd(ctx, in, sizeof(in), out, sizeof(out));
        if (rc)
            goto error;
        status = MLX5_GET(query_nic_vport_context_out, out, status);
        syndrome = MLX5_GET(query_nic_vport_context_out, out, syndrome);
        if (status) {
            DRV_LOG(DEBUG, "Failed to query NIC vport context, "
                    "status %x, syndrome = %x", status, syndrome);
            return -1;
        }
        vctx = MLX5_ADDR_OF(query_nic_vport_context_out, out,
                            nic_vport_context);
        attr->vport_inline_mode = MLX5_GET(nic_vport_context, vctx,
                                           min_wqe_inline_mode);
        return 0;
    error:
        rc = (rc > 0) ? -rc : rc;
        return rc;
    }
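Both the kernel and the DPDK code build firmware commands with `MLX5_SET` and read replies with `MLX5_GET`. The sketch below is a simplified stand-in for that idea: a field is addressed by a bit offset and width inside a buffer of big-endian dwords. The helpers `field_set` and `field_get` are hypothetical, and the offsets in the usage note are assumptions for illustration; the real macros derive offsets and widths from the mlx5 interface structure definitions.

```c
#include <assert.h>
#include <stdint.h>

/* Load one big-endian 32-bit dword from a byte buffer. */
static uint32_t be32_load(const uint8_t *p)
{
    return ((uint32_t)p[0] << 24) | ((uint32_t)p[1] << 16) |
           ((uint32_t)p[2] << 8) | (uint32_t)p[3];
}

/* Store one 32-bit value as a big-endian dword. */
static void be32_store(uint8_t *p, uint32_t v)
{
    p[0] = (uint8_t)(v >> 24);
    p[1] = (uint8_t)(v >> 16);
    p[2] = (uint8_t)(v >> 8);
    p[3] = (uint8_t)v;
}

static uint32_t field_mask(unsigned size)
{
    return size == 32 ? 0xffffffffu : (1u << size) - 1u;
}

/*
 * Set a field of `size` bits that starts `bit_off` bits into the buffer
 * (bit 0 is the most significant bit of byte 0). The field must not
 * cross a dword boundary; values wider than the field are truncated.
 */
void field_set(uint8_t *buf, unsigned bit_off, unsigned size, uint32_t val)
{
    unsigned in_dw = bit_off % 32;

    assert(size >= 1 && in_dw + size <= 32);
    uint8_t *dw = buf + (bit_off / 32) * 4;
    unsigned shift = 32 - in_dw - size;
    uint32_t mask = field_mask(size) << shift;

    be32_store(dw, (be32_load(dw) & ~mask) | ((val << shift) & mask));
}

/* Read back a field written with field_set(). */
uint32_t field_get(const uint8_t *buf, unsigned bit_off, unsigned size)
{
    unsigned in_dw = bit_off % 32;

    assert(size >= 1 && in_dw + size <= 32);
    unsigned shift = 32 - in_dw - size;

    return (be32_load(buf + (bit_off / 32) * 4) >> shift) & field_mask(size);
}
```

With an assumed layout of a 16-bit opcode at bit 0 and a 16-bit op_mod at bit 48, `field_set(cmd, 0, 16, opcode)` followed by `field_set(cmd, 48, 16, op_mod)` fills a command header in the same spirit as the two `MLX5_SET` calls in `mlx5_devx_cmd_query_hca_attr`.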

Issuing commands: flow_dv_create_action_push_vlan

flow_dv_create_action_push_vlan --> flow_dv_push_vlan_action_resource_register --> mlx5_glue->dr_create_flow_action_push_vlan

.dr_create_flow_action_push_vlan = mlx5_glue_dr_create_flow_action_push_vlan,

mlx5dv_dr_action_create_push_vlan

    $Q sh -- '$<' '$@' \
        HAVE_MLX5DV_DR_VLAN \
        infiniband/mlx5dv.h \
        func mlx5dv_dr_action_create_push_vlan \
        $(AUTOCONF_OUTPUT)

mlx5dv_dr_action_create_push_vlan

https://github.com/linux-rdma/rdma-core/blob/364dbcc7c04afce7d3085a9b420bb59bc9d99d95/providers/mlx5/dr_action.c

    struct mlx5dv_dr_action *
    mlx5dv_dr_action_create_push_vlan(struct mlx5dv_dr_domain *dmn,
                                      __be32 vlan_hdr)
    {
        uint32_t vlan_hdr_h = be32toh(vlan_hdr);
        uint16_t ethertype = vlan_hdr_h >> 16;
        struct mlx5dv_dr_action *action;

        if (ethertype != SVLAN_ETHERTYPE && ethertype != CVLAN_ETHERTYPE) {
            dr_dbg(dmn, "Invalid vlan ethertype\n");
            errno = EINVAL;
            return NULL;
        }

        action = dr_action_create_generic(DR_ACTION_TYP_PUSH_VLAN);
        if (!action)
            return NULL;

        action->push_vlan.vlan_hdr = vlan_hdr_h;
        return action;
    }
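`mlx5dv_dr_action_create_push_vlan` takes the whole 4-byte VLAN header as one 32-bit value, with the ethertype in the upper 16 bits, and rejects anything that is not a C-VLAN or S-VLAN tag. A small sketch of how such a header could be packed and checked is shown here, working in host byte order for simplicity (the real function receives a big-endian value). The helpers `vlan_hdr_pack` and `vlan_hdr_valid` are hypothetical; the two ethertype values are the standard IEEE 802.1Q and 802.1ad ones.

```c
#include <assert.h>
#include <stdint.h>

#define CVLAN_ETHERTYPE 0x8100u /* IEEE 802.1Q customer tag */
#define SVLAN_ETHERTYPE 0x88a8u /* IEEE 802.1ad service tag */

/* Pack ethertype + PCP/DEI/VID into a host-order 32-bit VLAN header. */
uint32_t vlan_hdr_pack(uint16_t ethertype, uint8_t pcp, uint8_t dei,
                       uint16_t vid)
{
    assert(pcp <= 7 && dei <= 1 && vid <= 0x0fff);
    uint32_t tci = ((uint32_t)pcp << 13) | ((uint32_t)dei << 12) | vid;

    return ((uint32_t)ethertype << 16) | tci;
}

/* Accept only C-VLAN and S-VLAN ethertypes, as the excerpt above does. */
int vlan_hdr_valid(uint32_t hdr)
{
    uint16_t ethertype = (uint16_t)(hdr >> 16);

    return ethertype == CVLAN_ETHERTYPE || ethertype == SVLAN_ETHERTYPE;
}
```

A caller targeting the real API would convert the packed value to big-endian before passing it on.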


    case RTE_FLOW_ACTION_TYPE_OF_PUSH_VLAN:
        flow_dev_get_vlan_info_from_items(items, &vlan);
        vlan.eth_proto = rte_be_to_cpu_16
            ((((const struct rte_flow_action_of_push_vlan *)
               actions->conf)->ethertype));
        found_action = mlx5_flow_find_action
            (actions + 1, RTE_FLOW_ACTION_TYPE_OF_SET_VLAN_VID);
        if (found_action)
            mlx5_update_vlan_vid_pcp(found_action, &vlan);
        found_action = mlx5_flow_find_action
            (actions + 1, RTE_FLOW_ACTION_TYPE_OF_SET_VLAN_PCP);
        if (found_action)
            mlx5_update_vlan_vid_pcp(found_action, &vlan);
        if (flow_dv_create_action_push_vlan
                (dev, attr, &vlan, dev_flow, error))
            return -rte_errno;
        dev_flow->dv.actions[actions_n++] =
            dev_flow->dv.push_vlan_res->action;
        action_flags |= MLX5_FLOW_ACTION_OF_PUSH_VLAN;
        break;

    if (flow_dv_matcher_register(dev, &matcher, &tbl_key, dev_flow, error))
        return -rte_errno;
    return 0;

flow_dv_matcher_register --> mlx5_glue->dv_create_flow_matcher( --> mlx5_glue_dv_create_flow_matcher -->mlx5dv_create_flow_matcher -->mlx5dv_dr_matcher_create

https://www.mellanox.com/related-docs/user_manuals/Ethernet_Adapters_Programming_Manual.pdf

RDMA Mellanox VPI verbs API + DV APIs

mlx5: Introduce DEVX APIs

ibv_create_flow

    drivers/net/mlx5/mlx5_flow_dv.c:7341: mlx5_glue->dv_create_flow(dv->matcher->matcher_object,
    drivers/net/mlx5/mlx5_flow_dv.c:7909: mlx5_glue->dv_create_flow(dtb->any_matcher,
    drivers/net/mlx5/mlx5_flow_dv.c:8098: mlx5_glue->dv_create_flow(dtb->color_matcher,
    drivers/net/mlx5/mlx5_flow_verbs.c:1722: verbs->flow = mlx5_glue->create_flow(verbs->hrxq->qp,
    drivers/net/mlx4/mlx4_flow.c:1117: flow->ibv_flow = mlx4_glue->create_flow(qp, flow->ibv_attr);
    drivers/net/mlx5/mlx5_flow.c:511: flow = mlx5_glue->create_flow(drop->qp, &flow_attr.attr);

    mlx4_glue_create_flow(struct ibv_qp *qp, struct ibv_flow_attr *flow)
    {
        return ibv_create_flow(qp, flow);
    }

drivers/net/mlx5/mlx5_flow.c:511 mlx5_flow_discover_priorities --> mlx5_glue->create_flow

    const struct mlx4_glue *mlx4_glue = &(const struct mlx4_glue){
        .create_flow = mlx4_glue_create_flow,

Prerequisites

This driver relies on external libraries and kernel drivers for resource allocation and initialization. The following dependencies are not part of DPDK and must be installed separately:

- libibverbs: User space Verbs framework used by librte_pmd_mlx5. This library provides a generic interface between the kernel and low-level user space drivers such as libmlx5. It allows slow and privileged operations (context initialization, hardware resource allocation) to be managed by the kernel and fast operations to never leave user space.

- libmlx5: Low-level user space driver library for Mellanox ConnectX-4 devices. It is automatically loaded by libibverbs. This library basically implements send/receive calls to the hardware queues.

- Kernel modules (mlnx-ofed-kernel): They provide the kernel-side Verbs API and the low-level device drivers that manage actual hardware initialization and resource sharing with user space processes. Unlike most other PMDs, these modules must remain loaded and bound to their devices:

  - mlx5_core: hardware driver managing Mellanox ConnectX-4 devices and related Ethernet kernel network devices.

  - mlx5_ib: InfiniBand device driver.

  - ib_uverbs: user space driver for Verbs (entry point for libibverbs).

- Firmware update: Mellanox OFED releases include firmware updates for ConnectX-4 adapters. Because each release provides new features, these updates must be applied to match the kernel modules and libraries they come with.

Load the kernel modules:

modprobe -a ib_uverbs mlx5_core mlx5_ib

Alternatively if MLNX_OFED is fully installed, the following script can be run:

/etc/init.d/openibd restart

MLX5_SET + flow_dv_matcher_register

    __flow_dv_translate
    {
        flow_dv_translate_item_vlan
        case RTE_FLOW_ITEM_TYPE_VXLAN:
            flow_dv_translate_item_vxlan(match_mask, match_value,
                                         items, tunnel);
            last_item = MLX5_FLOW_LAYER_VXLAN;
            break;
        tbl_key.domain = attr->transfer;
        tbl_key.direction = attr->egress;
        tbl_key.table_id = dev_flow->group;
        if (flow_dv_matcher_register(dev, &matcher, &tbl_key, dev_flow, error))
            return -rte_errno;
        return 0;
    }

    flow_dv_translate_item_vlan(struct mlx5_flow *dev_flow,
                                void *matcher, void *key,
                                const struct rte_flow_item *item,
                                int inner)
    {
        const struct rte_flow_item_vlan *vlan_m = item->mask;
        const struct rte_flow_item_vlan *vlan_v = item->spec;
        void *headers_m;
        void *headers_v;
        uint16_t tci_m;
        uint16_t tci_v;

        if (!vlan_v)
            return;
        if (!vlan_m)
            vlan_m = &rte_flow_item_vlan_mask;
        if (inner) {
            headers_m = MLX5_ADDR_OF(fte_match_param, matcher,
                                     inner_headers);
            headers_v = MLX5_ADDR_OF(fte_match_param, key, inner_headers);
        } else {
            headers_m = MLX5_ADDR_OF(fte_match_param, matcher,
                                     outer_headers);
            headers_v = MLX5_ADDR_OF(fte_match_param, key, outer_headers);
            /*
             * This is workaround, masks are not supported,
             * and pre-validated.
             */
            dev_flow->dv.vf_vlan.tag =
                rte_be_to_cpu_16(vlan_v->tci) & 0x0fff;
        }
        tci_m = rte_be_to_cpu_16(vlan_m->tci);
        tci_v = rte_be_to_cpu_16(vlan_m->tci & vlan_v->tci);
        MLX5_SET(fte_match_set_lyr_2_4, headers_m, cvlan_tag, 1);
        MLX5_SET(fte_match_set_lyr_2_4, headers_v, cvlan_tag, 1);
        MLX5_SET(fte_match_set_lyr_2_4, headers_m, first_vid, tci_m);
        MLX5_SET(fte_match_set_lyr_2_4, headers_v, first_vid, tci_v);
        MLX5_SET(fte_match_set_lyr_2_4, headers_m, first_cfi, tci_m >> 12);
        MLX5_SET(fte_match_set_lyr_2_4, headers_v, first_cfi, tci_v >> 12);
        MLX5_SET(fte_match_set_lyr_2_4, headers_m, first_prio, tci_m >> 13);
        MLX5_SET(fte_match_set_lyr_2_4, headers_v, first_prio, tci_v >> 13);
        MLX5_SET(fte_match_set_lyr_2_4, headers_m, ethertype,
                 rte_be_to_cpu_16(vlan_m->inner_type));
        MLX5_SET(fte_match_set_lyr_2_4, headers_v, ethertype,
                 rte_be_to_cpu_16(vlan_m->inner_type & vlan_v->inner_type));
    }
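`flow_dv_translate_item_vlan` splits the 16-bit VLAN TCI into VID, CFI and priority, and always ANDs the spec with the mask before writing the value side. The sketch below spells out those shifts and masks explicitly, assuming (as the shifts in the excerpt suggest) that the hardware fields are 12, 1 and 3 bits wide and that the setter truncates to the field width. `tci_split`, `tci_value` and `tci_matches` are hypothetical helper names.

```c
#include <assert.h>
#include <stdint.h>

struct vlan_match {
    uint16_t vid;  /* 12-bit VLAN ID */
    uint8_t cfi;   /* 1-bit CFI/DEI */
    uint8_t prio;  /* 3-bit priority (PCP) */
};

/* Split a host-order TCI into its three fields. */
struct vlan_match tci_split(uint16_t tci)
{
    struct vlan_match m;

    m.vid = tci & 0x0fff;
    m.cfi = (tci >> 12) & 0x1;
    m.prio = (tci >> 13) & 0x7;
    return m;
}

/* The value side of a match is the spec restricted to the masked bits. */
uint16_t tci_value(uint16_t spec, uint16_t mask)
{
    return spec & mask;
}

/* A packet matches when its masked bits equal the masked spec. */
int tci_matches(uint16_t pkt_tci, uint16_t spec, uint16_t mask)
{
    uint16_t value = tci_value(spec, mask);

    assert((value & (uint16_t)~mask) == 0); /* no value bits outside mask */
    return (pkt_tci & mask) == value;
}
```

With a mask of `0x0fff`, two packets that differ only in priority match the same rule, which is the behavior a VID-only match is meant to have.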

Port information

    static int __flow_dv_translate
    {
        case RTE_FLOW_ACTION_TYPE_PORT_ID:
            if (flow_dv_translate_action_port_id(dev, action,
                                                 &port_id, error))
                return -rte_errno;
            port_id_resource.port_id = port_id;
            if (flow_dv_port_id_action_resource_register
                    (dev, &port_id_resource, dev_flow, error))
                return -rte_errno;
            dev_flow->dv.actions[actions_n++] =
                dev_flow->dv.port_id_action->action;
            action_flags |= MLX5_FLOW_ACTION_PORT_ID;
            break;

    flow_dv_translate_action_port_id(struct rte_eth_dev *dev,
                                     const struct rte_flow_action *action,
                                     uint32_t *dst_port_id,
                                     struct rte_flow_error *error)
    {
        uint32_t port;
        struct mlx5_priv *priv;
        const struct rte_flow_action_port_id *conf =
            (const struct rte_flow_action_port_id *)action->conf;

        port = conf->original ? dev->data->port_id : conf->id;
        priv = mlx5_port_to_eswitch_info(port, false);
        if (!priv)
            return rte_flow_error_set(error, -rte_errno,
                                      RTE_FLOW_ERROR_TYPE_ACTION,
                                      NULL,
                                      "No eswitch info was found for port");
    #ifdef HAVE_MLX5DV_DR_DEVX_PORT
        /*
         * This parameter is transferred to
         * mlx5dv_dr_action_create_dest_ib_port().
         */
        *dst_port_id = priv->ibv_port;
    #else
        /*
         * Legacy mode, no LAG configurations is supported.
         * This parameter is transferred to
         * mlx5dv_dr_action_create_dest_vport().
         */
        *dst_port_id = priv->vport_id;
    #endif
        return 0;
    }

    struct mlx5_priv *
    mlx5_port_to_eswitch_info(uint16_t port, bool valid)
    {
        struct rte_eth_dev *dev;
        struct mlx5_priv *priv;

        if (port >= RTE_MAX_ETHPORTS) {
            rte_errno = EINVAL;
            return NULL;
        }
        if (!valid && !rte_eth_dev_is_valid_port(port)) {
            rte_errno = ENODEV;
            return NULL;
        }
        dev = &rte_eth_devices[port];
        priv = dev->data->dev_private;
        if (!(priv->representor || priv->master)) {
            rte_errno = EINVAL;
            return NULL;
        }
        return priv;
    }

fops->apply

mlx5_flow_start->flow_drv_apply -->fops->apply(dev, flow, error)

--> __flow_dv_apply

old version

    static int
    flow_dv_translate(struct rte_eth_dev *dev,
                      struct mlx5_flow *dev_flow,
                      const struct rte_flow_attr *attr,
                      const struct rte_flow_item items[],
                      const struct rte_flow_action actions[] __rte_unused,
                      struct rte_flow_error *error)
    {
        struct priv *priv = dev->data->dev_private;
        uint64_t priority = attr->priority;
        struct mlx5_flow_dv_matcher matcher = {
            .mask = {
                .size = sizeof(matcher.mask.buf),
            },
        };
        void *match_value = dev_flow->dv.value.buf;
        uint8_t inner = 0;

        if (priority == MLX5_FLOW_PRIO_RSVD)
            priority = priv->config.flow_prio - 1;
        for (; items->type != RTE_FLOW_ITEM_TYPE_END; items++)
            flow_dv_create_item(&matcher, match_value, items, dev_flow,
                                inner);
        matcher.crc = rte_raw_cksum((const void *)matcher.mask.buf,
                                    matcher.mask.size);
        if (priority == MLX5_FLOW_PRIO_RSVD)
            priority = priv->config.flow_prio - 1;
        matcher.priority = mlx5_flow_adjust_priority(dev, priority,
                                                     matcher.priority);
        matcher.egress = attr->egress;
        if (flow_dv_matcher_register(dev, &matcher, dev_flow, error))
            return -rte_errno;
        for (; actions->type != RTE_FLOW_ACTION_TYPE_END; actions++)
            flow_dv_create_action(actions, dev_flow);
        return 0;
    }

    flow_dv_create_item(void *matcher, void *key,
                        const struct rte_flow_item *item,
                        struct mlx5_flow *dev_flow,
                        int inner)
    {
        struct mlx5_flow_dv_matcher *tmatcher = matcher;

        switch (item->type) {
        case RTE_FLOW_ITEM_TYPE_VOID:
        case RTE_FLOW_ITEM_TYPE_END:
            break;
        case RTE_FLOW_ITEM_TYPE_ETH:
            flow_dv_translate_item_eth(tmatcher->mask.buf, key, item, inner);
            tmatcher->priority = MLX5_PRIORITY_MAP_L2;
            break;
        case RTE_FLOW_ITEM_TYPE_VLAN:
            flow_dv_translate_item_vlan(tmatcher->mask.buf, key, item, inner);
            break;
        case RTE_FLOW_ITEM_TYPE_IPV4:
            flow_dv_translate_item_ipv4(tmatcher->mask.buf, key, item, inner);
            tmatcher->priority = MLX5_PRIORITY_MAP_L3;
            dev_flow->dv.hash_fields |=
                mlx5_flow_hashfields_adjust(dev_flow, inner,
                                            MLX5_IPV4_LAYER_TYPES,
                                            MLX5_IPV4_IBV_RX_HASH);
            break;
        case RTE_FLOW_ITEM_TYPE_IPV6:
            flow_dv_translate_item_ipv6(tmatcher->mask.buf, key, item, inner);
            tmatcher->priority = MLX5_PRIORITY_MAP_L3;
            dev_flow->dv.hash_fields |=
                mlx5_flow_hashfields_adjust(dev_flow, inner,
                                            MLX5_IPV6_LAYER_TYPES,
                                            MLX5_IPV6_IBV_RX_HASH);
            break;
        case RTE_FLOW_ITEM_TYPE_TCP:
            flow_dv_translate_item_tcp(tmatcher->mask.buf, key, item, inner);
            tmatcher->priority = MLX5_PRIORITY_MAP_L4;
            dev_flow->dv.hash_fields |=
                mlx5_flow_hashfields_adjust(dev_flow, inner, ETH_RSS_TCP,
                                            (IBV_RX_HASH_SRC_PORT_TCP |
                                             IBV_RX_HASH_DST_PORT_TCP));
            break;
        case RTE_FLOW_ITEM_TYPE_UDP:
            flow_dv_translate_item_udp(tmatcher->mask.buf, key, item, inner);
            tmatcher->priority = MLX5_PRIORITY_MAP_L4;
            dev_flow->verbs.hash_fields |=
                mlx5_flow_hashfields_adjust(dev_flow, inner, ETH_RSS_TCP,
                                            (IBV_RX_HASH_SRC_PORT_TCP |
                                             IBV_RX_HASH_DST_PORT_TCP));
            break;
        case RTE_FLOW_ITEM_TYPE_NVGRE:
            flow_dv_translate_item_nvgre(tmatcher->mask.buf, key, item, inner);
            break;
        case RTE_FLOW_ITEM_TYPE_GRE:
            flow_dv_translate_item_gre(tmatcher->mask.buf, key, item, inner);
            break;
        case RTE_FLOW_ITEM_TYPE_VXLAN:
        case RTE_FLOW_ITEM_TYPE_VXLAN_GPE:
            flow_dv_translate_item_vxlan(tmatcher->mask.buf, key, item, inner);
            break;
        default:
            break;
        }
    }

     flow_dv_create_action(struct rte_eth_dev *dev,
                           const struct rte_flow_action *action,
                           struct mlx5_flow *dev_flow,
    +                      const struct rte_flow_attr *attr,
                           struct rte_flow_error *error)
     {
         const struct rte_flow_action_queue *queue;
         const struct rte_flow_action_rss *rss;
         int actions_n = dev_flow->dv.actions_n;
         struct rte_flow *flow = dev_flow->flow;
    +    const struct rte_flow_action *action_ptr = action;

         switch (action->type) {
         case RTE_FLOW_ACTION_TYPE_VOID:
             actions_n++;
             break;
    +    case RTE_FLOW_ACTION_TYPE_RAW_ENCAP:
    +        /* Handle encap action with preceding decap */
    +        if (flow->actions & MLX5_FLOW_ACTION_RAW_DECAP) {
    +            dev_flow->dv.actions[actions_n].type =
    +                MLX5DV_FLOW_ACTION_IBV_FLOW_ACTION;
    +            dev_flow->dv.actions[actions_n].action =
    +                flow_dv_create_action_raw_encap
    +                    (dev, action,
    +                     attr, error);
    +            if (!(dev_flow->dv.actions[actions_n].action))
    +                return -rte_errno;
    +            dev_flow->dv.encap_decap_verbs_action =
    +                dev_flow->dv.actions[actions_n].action;
    +        } else {
    +            /* Handle encap action without preceding decap */
    +            dev_flow->dv.actions[actions_n].type =
    +                MLX5DV_FLOW_ACTION_IBV_FLOW_ACTION;
    +            dev_flow->dv.actions[actions_n].action =
    +                flow_dv_create_action_l2_encap
    +                    (dev, action, error);
    +            if (!(dev_flow->dv.actions[actions_n].action))
    +                return -rte_errno;
    +            dev_flow->dv.encap_decap_verbs_action =
    +                dev_flow->dv.actions[actions_n].action;
    +        }
    +        flow->actions |= MLX5_FLOW_ACTION_RAW_ENCAP;
    +        actions_n++;
    +        break;
    +    case RTE_FLOW_ACTION_TYPE_RAW_DECAP:
    +        /* Check if this decap action is followed by encap. */
    +        for (; action_ptr->type != RTE_FLOW_ACTION_TYPE_END &&
    +               action_ptr->type != RTE_FLOW_ACTION_TYPE_RAW_ENCAP;
    +               action_ptr++) {
    +        }
    +        /* Handle decap action only if it isn't followed by encap */
    +        if (action_ptr->type != RTE_FLOW_ACTION_TYPE_RAW_ENCAP) {
    +            dev_flow->dv.actions[actions_n].type =
    +                MLX5DV_FLOW_ACTION_IBV_FLOW_ACTION;
    +            dev_flow->dv.actions[actions_n].action =
    +                flow_dv_create_action_l2_decap(dev,
    +                                               error);
    +            if (!(dev_flow->dv.actions[actions_n].action))
    +                return -rte_errno;
    +            dev_flow->dv.encap_decap_verbs_action =
    +                dev_flow->dv.actions[actions_n].action;
    +            actions_n++;
    +        }
    +        /* If decap is followed by encap, handle it at encap case. */
    +        break;
    +        flow->actions |= MLX5_FLOW_ACTION_RAW_DECAP;
         default:
             break;
         }

===========================================================================================================================

    /** Switch information returned by mlx5_nl_switch_info(). */
    struct mlx5_switch_info {
        uint32_t master:1; /**< Master device. */
        uint32_t representor:1; /**< Representor device. */
        enum mlx5_phys_port_name_type name_type; /**< Port name type. */
        int32_t pf_num; /**< PF number (valid for pfxvfx format only). */
        int32_t port_name; /**< Representor port name. */
        uint64_t switch_id; /**< Switch identifier. */
    };

    static const struct rte_flow_ops mlx5_flow_ops = {
        .validate = mlx5_flow_validate,
        .create = mlx5_flow_create,
        .destroy = mlx5_flow_destroy,
        .flush = mlx5_flow_flush,
        .isolate = mlx5_flow_isolate,
        .query = mlx5_flow_query,
    };

rte_flow_create

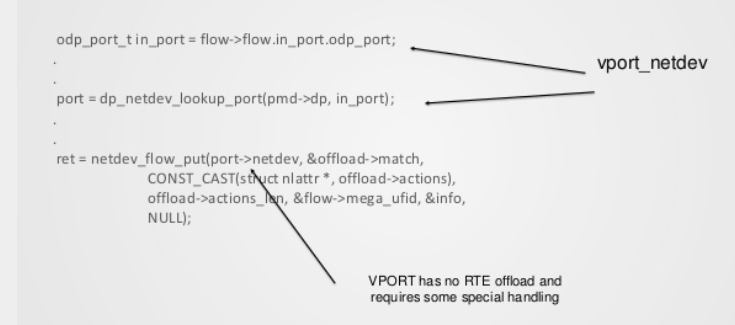

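dp_netdev_flow_offload_put --> netdev_flow_put --> flow_api->flow_put --> netdev_offload_dpdk_flow_put --> netdev_offload_dpdk_add_flow --> netdev_offload_dpdk_action --> netdev_offload_dpdk_flow_create --> netdev_dpdk_rte_flow_create --> rte_flow_create --> mlx5_flow_create --> flow_list_create

OVS already supports a basic hardware offload framework. The internal OVS flow representation is converted into a DPDK rte_flow description, and the rule is then pushed to hardware through the rte_flow_create() API. Packets that OVS receives afterwards carry a flow mark set by the hardware, so OVS can quickly look up the corresponding flow by its mark and execute that flow's actions.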
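The flow mark mechanism can be pictured as a table keyed by the mark value: software associates a mark with a flow when it offloads the rule, and the receive path uses the mark carried by the packet to skip the full classification. The following is a minimal sketch of that idea only. The flat array, the `sw_flow` type and the `mark_*` function names are simplifications invented here and do not reflect the OVS data structures.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define MAX_MARKS 1024u /* arbitrary table size for the sketch */

/* Stand-in for a datapath flow; a real flow carries a list of actions. */
struct sw_flow {
    int out_port;
};

static struct sw_flow *mark_to_flow[MAX_MARKS];

/* Bind a mark to a flow when its rule is offloaded. Returns 0 on success. */
int mark_associate(uint32_t mark, struct sw_flow *flow)
{
    assert(flow != NULL);
    if (mark >= MAX_MARKS || mark_to_flow[mark] != NULL)
        return -1;
    mark_to_flow[mark] = flow;
    return 0;
}

/* Receive path: map the mark reported by the NIC back to a flow. */
struct sw_flow *mark_lookup(uint32_t mark)
{
    return mark < MAX_MARKS ? mark_to_flow[mark] : NULL;
}

/* Drop the binding when the offloaded rule is removed. */
void mark_release(uint32_t mark)
{
    if (mark < MAX_MARKS)
        mark_to_flow[mark] = NULL;
}
```

A lookup that returns NULL means the packet has to go through the normal software classification path.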

    /* Create a flow rule on a given port. */
    struct rte_flow *
    rte_flow_create(uint16_t port_id,
                    const struct rte_flow_attr *attr,
                    const struct rte_flow_item pattern[],
                    const struct rte_flow_action actions[],
                    struct rte_flow_error *error)
    {
        struct rte_eth_dev *dev = &rte_eth_devices[port_id];
        struct rte_flow *flow;
        const struct rte_flow_ops *ops = rte_flow_ops_get(port_id, error);

        if (unlikely(!ops))
            return NULL;
        if (likely(!!ops->create)) {
            flow = ops->create(dev, attr, pattern, actions, error);
            if (flow == NULL)
                flow_err(port_id, -rte_errno, error);
            return flow;
        }
        rte_flow_error_set(error, ENOSYS, RTE_FLOW_ERROR_TYPE_UNSPECIFIED,
                           NULL, rte_strerror(ENOSYS));
        return NULL;
    }

    /* Get generic flow operations structure from a port. */
    const struct rte_flow_ops *
    rte_flow_ops_get(uint16_t port_id, struct rte_flow_error *error)
    {
        struct rte_eth_dev *dev = &rte_eth_devices[port_id];
        const struct rte_flow_ops *ops;
        int code;

        if (unlikely(!rte_eth_dev_is_valid_port(port_id)))
            code = ENODEV;
        else if (unlikely(!dev->dev_ops->filter_ctrl ||
                          dev->dev_ops->filter_ctrl(dev,
                                                    RTE_ETH_FILTER_GENERIC,
                                                    RTE_ETH_FILTER_GET,
                                                    &ops) ||
                          !ops))
            code = ENOSYS;
        else
            return ops;
        rte_flow_error_set(error, code, RTE_FLOW_ERROR_TYPE_UNSPECIFIED,
                           NULL, rte_strerror(code));
        return NULL;
    }

mlx5_dev_filter_ctrl

    mlx5_dev_filter_ctrl(struct rte_eth_dev *dev,
                         enum rte_filter_type filter_type,
                         enum rte_filter_op filter_op,
                         void *arg)
    {
        switch (filter_type) {
        case RTE_ETH_FILTER_GENERIC:
            if (filter_op != RTE_ETH_FILTER_GET) {
                rte_errno = EINVAL;
                return -rte_errno;
            }
            *(const void **)arg = &mlx5_flow_ops;
            return 0;
        case RTE_ETH_FILTER_FDIR:
            return flow_fdir_ctrl_func(dev, filter_op, arg);
        default:
            DRV_LOG(ERR, "port %u filter type (%d) not supported",
                    dev->data->port_id, filter_type);
            rte_errno = ENOTSUP;
            return -rte_errno;
        }
        return 0;
    }

    .filter_ctrl = mlx5_dev_filter_ctrl,

mlx5_pci_probe --> mlx5_sysfs_switch_info

    mlx5_sysfs_switch_info(unsigned int ifindex, struct mlx5_switch_info *info)
    {
        char ifname[IF_NAMESIZE];
        char port_name[IF_NAMESIZE];
        FILE *file;
        struct mlx5_switch_info data = {
            .master = 0,
            .representor = 0,
            .name_type = MLX5_PHYS_PORT_NAME_TYPE_NOTSET,
            .port_name = 0,
            .switch_id = 0,
        };
        DIR *dir;
        bool port_switch_id_set = false;
        bool device_dir = false;
        char c;
        int ret;

        if (!if_indextoname(ifindex, ifname)) {
            rte_errno = errno;
            return -rte_errno;
        }

        MKSTR(phys_port_name, "/sys/class/net/%s/phys_port_name", ifname);
        MKSTR(phys_switch_id, "/sys/class/net/%s/phys_switch_id", ifname);
        MKSTR(pci_device, "/sys/class/net/%s/device", ifname);

        file = fopen(phys_port_name, "rb");
        if (file != NULL) {
            ret = fscanf(file, "%s", port_name);
            fclose(file);
            if (ret == 1)
                mlx5_translate_port_name(port_name, &data);
        }
        file = fopen(phys_switch_id, "rb");
        if (file == NULL) {
            rte_errno = errno;
            return -rte_errno;
        }
        port_switch_id_set =
            fscanf(file, "%" SCNx64 "%c", &data.switch_id, &c) == 2 &&
            c == '\n';
        fclose(file);
        dir = opendir(pci_device);
        if (dir != NULL) {
            closedir(dir);
            device_dir = true;
        }
        if (port_switch_id_set) {
            /* We have some E-Switch configuration. */
            mlx5_sysfs_check_switch_info(device_dir, &data);
        }
        *info = data;
        assert(!(data.master && data.representor));
        if (data.master && data.representor) {
            DRV_LOG(ERR, "ifindex %u device is recognized as master"
                    " and as representor", ifindex);
            rte_errno = ENODEV;
            return -rte_errno;
        }
        return 0;
    }
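`mlx5_sysfs_switch_info` reads two sysfs strings: `phys_port_name`, which it hands to `mlx5_translate_port_name`, and `phys_switch_id`, which it parses as a hexadecimal number followed by a newline. The sketch below shows one way such strings could be classified with `sscanf`. The accepted name formats (`pf0vf1`, `p0`, a bare number) and the `port_kind` enum are assumptions made for this example, suggested by the "pfxvfx format" comment in `struct mlx5_switch_info`; it is not a copy of the PMD's parser.

```c
#include <assert.h>
#include <inttypes.h>
#include <stdio.h>

enum port_kind { PORT_UNKNOWN, PORT_UPLINK, PORT_PFVF, PORT_LEGACY };

struct port_info {
    enum port_kind kind;
    int pf;   /* PF number, -1 when not applicable */
    int port; /* VF, uplink or legacy port number, -1 when unknown */
};

/* Classify a phys_port_name string such as "pf0vf1", "p0" or "2". */
struct port_info parse_port_name(const char *name)
{
    struct port_info info = { PORT_UNKNOWN, -1, -1 };
    char tail; /* catches trailing garbage after the expected format */

    assert(name != NULL);
    if (sscanf(name, "pf%dvf%d%c", &info.pf, &info.port, &tail) == 2) {
        info.kind = PORT_PFVF;
    } else if (sscanf(name, "p%d%c", &info.port, &tail) == 1) {
        info.pf = -1;
        info.kind = PORT_UPLINK;
    } else if (sscanf(name, "%d%c", &info.port, &tail) == 1) {
        info.pf = -1;
        info.kind = PORT_LEGACY;
    } else {
        /* a partial match may have written fields; reset them */
        info.pf = -1;
        info.port = -1;
    }
    return info;
}

/* Parse a phys_switch_id line: hex digits terminated by a newline. */
int parse_switch_id(const char *text, uint64_t *id)
{
    char c;

    return sscanf(text, "%" SCNx64 "%c", id, &c) == 2 && c == '\n';
}
```

The trailing `%c` in each format is the same trick the excerpt uses for `phys_switch_id`: the conversion count reveals whether anything unexpected follows the part that was parsed.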

struct mlx5_priv

Each vport corresponds to one mlx5_priv.

    struct mlx5_priv {
        struct rte_eth_dev_data *dev_data; /* Pointer to device data. */
        struct mlx5_ibv_shared *sh; /* Shared IB device context. */
        uint32_t ibv_port; /* IB device port number. */
        struct rte_pci_device *pci_dev; /* Backend PCI device. */
        struct rte_ether_addr mac[MLX5_MAX_MAC_ADDRESSES]; /* MAC addresses. */
        BITFIELD_DECLARE(mac_own, uint64_t, MLX5_MAX_MAC_ADDRESSES);
        /* Bit-field of MAC addresses owned by the PMD. */
        uint16_t vlan_filter[MLX5_MAX_VLAN_IDS]; /* VLAN filters table. */
        unsigned int vlan_filter_n; /* Number of configured VLAN filters. */
        /* Device properties. */
        uint16_t mtu; /* Configured MTU. */
        unsigned int isolated:1; /* Whether isolated mode is enabled. */
        unsigned int representor:1; /* Device is a port representor. */
        unsigned int master:1; /* Device is a E-Switch master. */
        unsigned int dr_shared:1; /* DV/DR data is shared. */
        unsigned int counter_fallback:1; /* Use counter fallback management. */
        unsigned int mtr_en:1; /* Whether support meter. */
        uint16_t domain_id; /* Switch domain identifier. */
        uint16_t vport_id; /* Associated VF vport index (if any). */
        uint32_t vport_meta_tag; /* Used for vport index match over VF LAG. */
        uint32_t vport_meta_mask; /* Used for vport index field match mask. */
        int32_t representor_id; /* Port representor identifier. */
        int32_t pf_bond; /* >=0 means PF index in bonding configuration. */
    };

    struct mlx5_priv *
    mlx5_dev_to_eswitch_info(struct rte_eth_dev *dev)
    {
        struct mlx5_priv *priv;

        priv = dev->data->dev_private;
        if (!(priv->representor || priv->master)) {
            rte_errno = EINVAL;
            return NULL;
        }
        return priv;
    }

static struct rte_eth_dev * mlx5_dev_spawn(struct rte_device *dpdk_dev, struct mlx5_dev_spawn_data *spawn, struct mlx5_dev_config config) { const struct mlx5_switch_info *switch_info = &spawn->info; struct mlx5_ibv_shared *sh = NULL; struct ibv_port_attr port_attr; struct mlx5dv_context dv_attr = { .comp_mask = 0 }; struct rte_eth_dev *eth_dev = NULL; struct mlx5_priv *priv = NULL; int err = 0; unsigned int hw_padding = 0; unsigned int mps; unsigned int cqe_comp; unsigned int cqe_pad = 0; unsigned int tunnel_en = 0; unsigned int mpls_en = 0; unsigned int swp = 0; unsigned int mprq = 0; unsigned int mprq_min_stride_size_n = 0; unsigned int mprq_max_stride_size_n = 0; unsigned int mprq_min_stride_num_n = 0; unsigned int mprq_max_stride_num_n = 0; struct rte_ether_addr mac; char name[RTE_ETH_NAME_MAX_LEN]; int own_domain_id = 0; uint16_t port_id; unsigned int i; /* Determine if this port representor is supposed to be spawned. */ if (switch_info->representor && dpdk_dev->devargs) { struct rte_eth_devargs eth_da; err = rte_eth_devargs_parse(dpdk_dev->devargs->args, &eth_da); if (err) { rte_errno = -err; DRV_LOG(ERR, "failed to process device arguments: %s", strerror(rte_errno)); return NULL; } for (i = 0; i < eth_da.nb_representor_ports; ++i) if (eth_da.representor_ports[i] == (uint16_t)switch_info->port_name) break; if (i == eth_da.nb_representor_ports) { rte_errno = EBUSY; return NULL; } } /* Build device name. 
*/ if (!switch_info->representor) strlcpy(name, dpdk_dev->name, sizeof(name)); else snprintf(name, sizeof(name), "%s_representor_%u", dpdk_dev->name, switch_info->port_name); /* check if the device is already spawned */ if (rte_eth_dev_get_port_by_name(name, &port_id) == 0) { rte_errno = EEXIST; return NULL; } DRV_LOG(DEBUG, "naming Ethernet device \"%s\"", name); if (rte_eal_process_type() == RTE_PROC_SECONDARY) { eth_dev = rte_eth_dev_attach_secondary(name); if (eth_dev == NULL) { DRV_LOG(ERR, "can not attach rte ethdev"); rte_errno = ENOMEM; return NULL; } eth_dev->device = dpdk_dev; eth_dev->dev_ops = &mlx5_dev_sec_ops; err = mlx5_proc_priv_init(eth_dev); if (err) return NULL; /* Receive command fd from primary process */ err = mlx5_mp_req_verbs_cmd_fd(eth_dev); if (err < 0) return NULL; /* Remap UAR for Tx queues. */ err = mlx5_tx_uar_init_secondary(eth_dev, err); if (err) return NULL; /* * Ethdev pointer is still required as input since * the primary device is not accessible from the * secondary process. */ eth_dev->rx_pkt_burst = mlx5_select_rx_function(eth_dev); eth_dev->tx_pkt_burst = mlx5_select_tx_function(eth_dev); return eth_dev; } sh = mlx5_alloc_shared_ibctx(spawn); if (!sh) return NULL; config.devx = sh->devx; #ifdef HAVE_IBV_MLX5_MOD_SWP dv_attr.comp_mask |= MLX5DV_CONTEXT_MASK_SWP; #endif /* * Multi-packet send is supported by ConnectX-4 Lx PF as well * as all ConnectX-5 devices. 
*/ #ifdef HAVE_IBV_DEVICE_TUNNEL_SUPPORT dv_attr.comp_mask |= MLX5DV_CONTEXT_MASK_TUNNEL_OFFLOADS; #endif #ifdef HAVE_IBV_DEVICE_STRIDING_RQ_SUPPORT dv_attr.comp_mask |= MLX5DV_CONTEXT_MASK_STRIDING_RQ; #endif mlx5_glue->dv_query_device(sh->ctx, &dv_attr); if (dv_attr.flags & MLX5DV_CONTEXT_FLAGS_MPW_ALLOWED) { if (dv_attr.flags & MLX5DV_CONTEXT_FLAGS_ENHANCED_MPW) { DRV_LOG(DEBUG, "enhanced MPW is supported"); mps = MLX5_MPW_ENHANCED; } else { DRV_LOG(DEBUG, "MPW is supported"); mps = MLX5_MPW; } } else { DRV_LOG(DEBUG, "MPW isn't supported"); mps = MLX5_MPW_DISABLED; } #ifdef HAVE_IBV_MLX5_MOD_SWP if (dv_attr.comp_mask & MLX5DV_CONTEXT_MASK_SWP) swp = dv_attr.sw_parsing_caps.sw_parsing_offloads; DRV_LOG(DEBUG, "SWP support: %u", swp); #endif config.swp = !!swp; #ifdef HAVE_IBV_DEVICE_STRIDING_RQ_SUPPORT if (dv_attr.comp_mask & MLX5DV_CONTEXT_MASK_STRIDING_RQ) { struct mlx5dv_striding_rq_caps mprq_caps = dv_attr.striding_rq_caps; DRV_LOG(DEBUG, "\tmin_single_stride_log_num_of_bytes: %d", mprq_caps.min_single_stride_log_num_of_bytes); DRV_LOG(DEBUG, "\tmax_single_stride_log_num_of_bytes: %d", mprq_caps.max_single_stride_log_num_of_bytes); DRV_LOG(DEBUG, "\tmin_single_wqe_log_num_of_strides: %d", mprq_caps.min_single_wqe_log_num_of_strides); DRV_LOG(DEBUG, "\tmax_single_wqe_log_num_of_strides: %d", mprq_caps.max_single_wqe_log_num_of_strides); DRV_LOG(DEBUG, "\tsupported_qpts: %d", mprq_caps.supported_qpts); DRV_LOG(DEBUG, "device supports Multi-Packet RQ"); mprq = 1; mprq_min_stride_size_n = mprq_caps.min_single_stride_log_num_of_bytes; mprq_max_stride_size_n = mprq_caps.max_single_stride_log_num_of_bytes; mprq_min_stride_num_n = mprq_caps.min_single_wqe_log_num_of_strides; mprq_max_stride_num_n = mprq_caps.max_single_wqe_log_num_of_strides; config.mprq.stride_num_n = RTE_MAX(MLX5_MPRQ_STRIDE_NUM_N, mprq_min_stride_num_n); } #endif if (RTE_CACHE_LINE_SIZE == 128 && !(dv_attr.flags & MLX5DV_CONTEXT_FLAGS_CQE_128B_COMP)) cqe_comp = 0; else cqe_comp = 1; 
config.cqe_comp = cqe_comp; #ifdef HAVE_IBV_MLX5_MOD_CQE_128B_PAD /* Whether device supports 128B Rx CQE padding. */ cqe_pad = RTE_CACHE_LINE_SIZE == 128 && (dv_attr.flags & MLX5DV_CONTEXT_FLAGS_CQE_128B_PAD); #endif #ifdef HAVE_IBV_DEVICE_TUNNEL_SUPPORT if (dv_attr.comp_mask & MLX5DV_CONTEXT_MASK_TUNNEL_OFFLOADS) { tunnel_en = ((dv_attr.tunnel_offloads_caps & MLX5DV_RAW_PACKET_CAP_TUNNELED_OFFLOAD_VXLAN) && (dv_attr.tunnel_offloads_caps & MLX5DV_RAW_PACKET_CAP_TUNNELED_OFFLOAD_GRE)); } DRV_LOG(DEBUG, "tunnel offloading is %ssupported", tunnel_en ? "" : "not "); #else DRV_LOG(WARNING, "tunnel offloading disabled due to old OFED/rdma-core version"); #endif config.tunnel_en = tunnel_en; #ifdef HAVE_IBV_DEVICE_MPLS_SUPPORT mpls_en = ((dv_attr.tunnel_offloads_caps & MLX5DV_RAW_PACKET_CAP_TUNNELED_OFFLOAD_CW_MPLS_OVER_GRE) && (dv_attr.tunnel_offloads_caps & MLX5DV_RAW_PACKET_CAP_TUNNELED_OFFLOAD_CW_MPLS_OVER_UDP)); DRV_LOG(DEBUG, "MPLS over GRE/UDP tunnel offloading is %ssupported", mpls_en ? "" : "not "); #else DRV_LOG(WARNING, "MPLS over GRE/UDP tunnel offloading disabled due to" " old OFED/rdma-core version or firmware configuration"); #endif config.mpls_en = mpls_en; /* Check port status. */ err = mlx5_glue->query_port(sh->ctx, spawn->ibv_port, &port_attr); if (err) { DRV_LOG(ERR, "port query failed: %s", strerror(err)); goto error; } if (port_attr.link_layer != IBV_LINK_LAYER_ETHERNET) { DRV_LOG(ERR, "port is not configured in Ethernet mode"); err = EINVAL; goto error; } if (port_attr.state != IBV_PORT_ACTIVE) DRV_LOG(DEBUG, "port is not active: \"%s\" (%d)", mlx5_glue->port_state_str(port_attr.state), port_attr.state); /* Allocate private eth device data. 
*/ priv = rte_zmalloc("ethdev private structure", sizeof(*priv), RTE_CACHE_LINE_SIZE); if (priv == NULL) { DRV_LOG(ERR, "priv allocation failure"); err = ENOMEM; goto error; } priv->sh = sh; priv->ibv_port = spawn->ibv_port; priv->mtu = RTE_ETHER_MTU; #ifndef RTE_ARCH_64 /* Initialize UAR access locks for 32bit implementations. */ rte_spinlock_init(&priv->uar_lock_cq); for (i = 0; i < MLX5_UAR_PAGE_NUM_MAX; i++) rte_spinlock_init(&priv->uar_lock[i]); #endif /* Some internal functions rely on Netlink sockets, open them now. */ priv->nl_socket_rdma = mlx5_nl_init(NETLINK_RDMA); priv->nl_socket_route = mlx5_nl_init(NETLINK_ROUTE); priv->nl_sn = 0; priv->representor = !!switch_info->representor; priv->master = !!switch_info->master; priv->domain_id = RTE_ETH_DEV_SWITCH_DOMAIN_ID_INVALID; /* * Currently we support single E-Switch per PF configurations * only and vport_id field contains the vport index for * associated VF, which is deduced from representor port name. * For example, let's have the IB device port 10, it has * attached network device eth0, which has port name attribute * pf0vf2, we can deduce the VF number as 2, and set vport index * as 3 (2+1). This assigning schema should be changed if the * multiple E-Switch instances per PF configurations or/and PCI * subfunctions are added. */ priv->vport_id = switch_info->representor ? switch_info->port_name + 1 : -1; /* representor_id field keeps the unmodified port/VF index. */ priv->representor_id = switch_info->representor ? switch_info->port_name : -1; /* * Look for sibling devices in order to reuse their switch domain * if any, otherwise allocate one. 
*/ RTE_ETH_FOREACH_DEV_OF(port_id, dpdk_dev) { const struct mlx5_priv *opriv = rte_eth_devices[port_id].data->dev_private; if (!opriv || opriv->domain_id == RTE_ETH_DEV_SWITCH_DOMAIN_ID_INVALID) continue; priv->domain_id = opriv->domain_id; break; } if (priv->domain_id == RTE_ETH_DEV_SWITCH_DOMAIN_ID_INVALID) { err = rte_eth_switch_domain_alloc(&priv->domain_id); if (err) { err = rte_errno; DRV_LOG(ERR, "unable to allocate switch domain: %s", strerror(rte_errno)); goto error; } own_domain_id = 1; } err = mlx5_args(&config, dpdk_dev->devargs); if (err) { err = rte_errno; DRV_LOG(ERR, "failed to process device arguments: %s", strerror(rte_errno)); goto error; } config.hw_csum = !!(sh->device_attr.device_cap_flags_ex & IBV_DEVICE_RAW_IP_CSUM); DRV_LOG(DEBUG, "checksum offloading is %ssupported", (config.hw_csum ? "" : "not ")); #if !defined(HAVE_IBV_DEVICE_COUNTERS_SET_V42) && \ !defined(HAVE_IBV_DEVICE_COUNTERS_SET_V45) DRV_LOG(DEBUG, "counters are not supported"); #endif #ifndef HAVE_IBV_FLOW_DV_SUPPORT if (config.dv_flow_en) { DRV_LOG(WARNING, "DV flow is not supported"); config.dv_flow_en = 0; } #endif config.ind_table_max_size = sh->device_attr.rss_caps.max_rwq_indirection_table_size; /* * Remove this check once DPDK supports larger/variable * indirection tables. */ if (config.ind_table_max_size > (unsigned int)ETH_RSS_RETA_SIZE_512) config.ind_table_max_size = ETH_RSS_RETA_SIZE_512; DRV_LOG(DEBUG, "maximum Rx indirection table size is %u", config.ind_table_max_size); config.hw_vlan_strip = !!(sh->device_attr.raw_packet_caps & IBV_RAW_PACKET_CAP_CVLAN_STRIPPING); DRV_LOG(DEBUG, "VLAN stripping is %ssupported", (config.hw_vlan_strip ? "" : "not ")); config.hw_fcs_strip = !!(sh->device_attr.raw_packet_caps & IBV_RAW_PACKET_CAP_SCATTER_FCS); DRV_LOG(DEBUG, "FCS stripping configuration is %ssupported", (config.hw_fcs_strip ? 
"" : "not ")); #if defined(HAVE_IBV_WQ_FLAG_RX_END_PADDING) hw_padding = !!sh->device_attr.rx_pad_end_addr_align; #elif defined(HAVE_IBV_WQ_FLAGS_PCI_WRITE_END_PADDING) hw_padding = !!(sh->device_attr.device_cap_flags_ex & IBV_DEVICE_PCI_WRITE_END_PADDING); #endif if (config.hw_padding && !hw_padding) { DRV_LOG(DEBUG, "Rx end alignment padding isn't supported"); config.hw_padding = 0; } else if (config.hw_padding) { DRV_LOG(DEBUG, "Rx end alignment padding is enabled"); } config.tso = (sh->device_attr.tso_caps.max_tso > 0 && (sh->device_attr.tso_caps.supported_qpts & (1 << IBV_QPT_RAW_PACKET))); if (config.tso) config.tso_max_payload_sz = sh->device_attr.tso_caps.max_tso; /* * MPW is disabled by default, while the Enhanced MPW is enabled * by default. */ if (config.mps == MLX5_ARG_UNSET) config.mps = (mps == MLX5_MPW_ENHANCED) ? MLX5_MPW_ENHANCED : MLX5_MPW_DISABLED; else config.mps = config.mps ? mps : MLX5_MPW_DISABLED; DRV_LOG(INFO, "%sMPS is %s", config.mps == MLX5_MPW_ENHANCED ? "enhanced " : "", config.mps != MLX5_MPW_DISABLED ? 
"enabled" : "disabled"); if (config.cqe_comp && !cqe_comp) { DRV_LOG(WARNING, "Rx CQE compression isn't supported"); config.cqe_comp = 0; } if (config.cqe_pad && !cqe_pad) { DRV_LOG(WARNING, "Rx CQE padding isn't supported"); config.cqe_pad = 0; } else if (config.cqe_pad) { DRV_LOG(INFO, "Rx CQE padding is enabled"); } if (config.mprq.enabled && mprq) { if (config.mprq.stride_num_n > mprq_max_stride_num_n || config.mprq.stride_num_n < mprq_min_stride_num_n) { config.mprq.stride_num_n = RTE_MAX(MLX5_MPRQ_STRIDE_NUM_N, mprq_min_stride_num_n); DRV_LOG(WARNING, "the number of strides" " for Multi-Packet RQ is out of range," " setting default value (%u)", 1 << config.mprq.stride_num_n); } config.mprq.min_stride_size_n = mprq_min_stride_size_n; config.mprq.max_stride_size_n = mprq_max_stride_size_n; } else if (config.mprq.enabled && !mprq) { DRV_LOG(WARNING, "Multi-Packet RQ isn't supported"); config.mprq.enabled = 0; } if (config.max_dump_files_num == 0) config.max_dump_files_num = 128; eth_dev = rte_eth_dev_allocate(name); if (eth_dev == NULL) { DRV_LOG(ERR, "can not allocate rte ethdev"); err = ENOMEM; goto error; } /* Flag to call rte_eth_dev_release_port() in rte_eth_dev_close(). */ eth_dev->data->dev_flags |= RTE_ETH_DEV_CLOSE_REMOVE; if (priv->representor) { eth_dev->data->dev_flags |= RTE_ETH_DEV_REPRESENTOR; eth_dev->data->representor_id = priv->representor_id; } eth_dev->data->dev_private = priv; priv->dev_data = eth_dev->data; eth_dev->data->mac_addrs = priv->mac; eth_dev->device = dpdk_dev; /* Configure the first MAC address by default. */ if (mlx5_get_mac(eth_dev, &mac.addr_bytes)) { DRV_LOG(ERR, "port %u cannot get MAC address, is mlx5_en" " loaded? 
(errno: %s)", eth_dev->data->port_id, strerror(rte_errno)); err = ENODEV; goto error; } DRV_LOG(INFO, "port %u MAC address is %02x:%02x:%02x:%02x:%02x:%02x", eth_dev->data->port_id, mac.addr_bytes[0], mac.addr_bytes[1], mac.addr_bytes[2], mac.addr_bytes[3], mac.addr_bytes[4], mac.addr_bytes[5]); #ifndef NDEBUG { char ifname[IF_NAMESIZE]; if (mlx5_get_ifname(eth_dev, &ifname) == 0) DRV_LOG(DEBUG, "port %u ifname is \"%s\"", eth_dev->data->port_id, ifname); else DRV_LOG(DEBUG, "port %u ifname is unknown", eth_dev->data->port_id); } #endif /* Get actual MTU if possible. */ err = mlx5_get_mtu(eth_dev, &priv->mtu); if (err) { err = rte_errno; goto error; } DRV_LOG(DEBUG, "port %u MTU is %u", eth_dev->data->port_id, priv->mtu); /* Initialize burst functions to prevent crashes before link-up. */ eth_dev->rx_pkt_burst = removed_rx_burst; eth_dev->tx_pkt_burst = removed_tx_burst; eth_dev->dev_ops = &mlx5_dev_ops; /* Register MAC address. */ claim_zero(mlx5_mac_addr_add(eth_dev, &mac, 0, 0)); if (config.vf && config.vf_nl_en) mlx5_nl_mac_addr_sync(eth_dev); priv->tcf_context = mlx5_flow_tcf_context_create(); if (!priv->tcf_context) { err = -rte_errno; DRV_LOG(WARNING, "flow rules relying on switch offloads will not be" " supported: cannot open libmnl socket: %s", strerror(rte_errno)); } else { struct rte_flow_error error; unsigned int ifindex = mlx5_ifindex(eth_dev); if (!ifindex) { err = -rte_errno; error.message = "cannot retrieve network interface index"; } else { err = mlx5_flow_tcf_init(priv->tcf_context, ifindex, &error); } if (err) { DRV_LOG(WARNING, "flow rules relying on switch offloads will" " not be supported: %s: %s", error.message, strerror(rte_errno)); mlx5_flow_tcf_context_destroy(priv->tcf_context); priv->tcf_context = NULL; } } TAILQ_INIT(&priv->flows); TAILQ_INIT(&priv->ctrl_flows); /* Hint libmlx5 to use PMD allocator for data plane resources */ struct mlx5dv_ctx_allocators alctr = { .alloc = &mlx5_alloc_verbs_buf, .free = &mlx5_free_verbs_buf, .data = 
priv, }; mlx5_glue->dv_set_context_attr(sh->ctx, MLX5DV_CTX_ATTR_BUF_ALLOCATORS, (void *)((uintptr_t)&alctr)); /* Bring Ethernet device up. */ DRV_LOG(DEBUG, "port %u forcing Ethernet interface up", eth_dev->data->port_id); mlx5_set_link_up(eth_dev); /* * Even though the interrupt handler is not installed yet, * interrupts will still trigger on the async_fd from * Verbs context returned by ibv_open_device(). */ mlx5_link_update(eth_dev, 0); #ifdef HAVE_IBV_DEVX_OBJ if (config.devx) { err = mlx5_devx_cmd_query_hca_attr(sh->ctx, &config.hca_attr); if (err) { err = -err; goto error; } } #endif #ifdef HAVE_MLX5DV_DR_ESWITCH if (!(config.hca_attr.eswitch_manager && config.dv_flow_en && (switch_info->representor || switch_info->master))) config.dv_esw_en = 0; #else config.dv_esw_en = 0; #endif /* Store device configuration on private structure. */ priv->config = config; if (config.dv_flow_en) { err = mlx5_alloc_shared_dr(priv); if (err) goto error; } /* Supported Verbs flow priority number detection. */ err = mlx5_flow_discover_priorities(eth_dev); if (err < 0) { err = -err; goto error; } priv->config.flow_prio = err; /* Add device to memory callback list. 
*/ rte_rwlock_write_lock(&mlx5_shared_data->mem_event_rwlock); LIST_INSERT_HEAD(&mlx5_shared_data->mem_event_cb_list, sh, mem_event_cb); rte_rwlock_write_unlock(&mlx5_shared_data->mem_event_rwlock); return eth_dev; error: if (priv) { if (priv->sh) mlx5_free_shared_dr(priv); if (priv->nl_socket_route >= 0) close(priv->nl_socket_route); if (priv->nl_socket_rdma >= 0) close(priv->nl_socket_rdma); if (priv->tcf_context) mlx5_flow_tcf_context_destroy(priv->tcf_context); if (own_domain_id) claim_zero(rte_eth_switch_domain_free(priv->domain_id)); rte_free(priv); if (eth_dev != NULL) eth_dev->data->dev_private = NULL; } if (eth_dev != NULL) { /* mac_addrs must not be freed alone because part of dev_private */ eth_dev->data->mac_addrs = NULL; rte_eth_dev_release_port(eth_dev); } if (sh) mlx5_free_shared_ibctx(sh); assert(err > 0); rte_errno = err; return NULL; }
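The comment in mlx5_dev_spawn() about vport_id (port name pf0vf2, VF number 2, vport index 3) can be worked through in a few lines. The sketch below is not PMD code: vport_index_from_port_name() is a hypothetical helper written for illustration, and it assumes the port name has exactly the pf<N>vf<M> form quoted in that comment. In the driver itself the VF number arrives already parsed in switch_info->port_name, and only the +1 offset is applied.

```c
#include <assert.h>
#include <stdio.h>

/*
 * Hypothetical helper, for illustration only (not part of the mlx5 PMD).
 * Assumes a representor port name of the form "pf<N>vf<M>" and returns
 * the vport index M + 1, or -1 if the name does not have that form.
 */
int
vport_index_from_port_name(const char *port_name)
{
    int pf;
    int vf;
    char trailing;

    assert(port_name != NULL);
    /* Exactly two conversions must succeed; a third means trailing junk. */
    if (sscanf(port_name, "pf%dvf%d%c", &pf, &vf, &trailing) != 2)
        return -1;
    if (pf < 0 || vf < 0)
        return -1;
    /* Same offset as priv->vport_id = switch_info->port_name + 1. */
    return vf + 1;
}
```

Calling vport_index_from_port_name("pf0vf2") gives 3, matching the example in the comment; a name such as "eth0" gives -1.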

#0  mlx5dv_dr_table_create (dmn=0x555556c641b0, level=65534)
    at ../providers/mlx5/dr_table.c:183
#1  0x0000555555dfaeaa in flow_dv_tbl_resource_get (dev=<optimized out>, table_id=65534, egress=<optimized out>, transfer=<optimized out>, error=0x7fffffffdca0)
    at /home/bganne/src/dpdk/drivers/net/mlx5/mlx5_flow_dv.c:6746
#2  0x0000555555e02b28 in __flow_dv_translate (dev=dev@entry=0x555556bbcdc0 <rte_eth_devices>, dev_flow=0x100388300, attr=<optimized out>, items=<optimized out>, actions=<optimized out>, error=<optimized out>)
    at /home/bganne/src/dpdk/drivers/net/mlx5/mlx5_flow_dv.c:7503
#3  0x0000555555e04954 in flow_dv_translate (dev=0x555556bbcdc0 <rte_eth_devices>, dev_flow=<optimized out>, attr=<optimized out>, items=<optimized out>, actions=<optimized out>, error=<optimized out>)
    at /home/bganne/src/dpdk/drivers/net/mlx5/mlx5_flow_dv.c:8841
#4  0x0000555555df152f in flow_drv_translate (error=0x7fffffffdca0, actions=0x7fffffffdce0, items=0x7fffffffdcc0, attr=0x7fffffffbb88, dev_flow=<optimized out>, dev=0x555556bbcdc0 <rte_eth_devices>)
    at /home/bganne/src/dpdk/drivers/net/mlx5/mlx5_flow.c:2571
#5  flow_create_split_inner (error=0x7fffffffdca0, external=false, actions=0x7fffffffdce0, items=0x7fffffffdcc0, attr=0x7fffffffbb88, prefix_layers=0, sub_flow=0x0, flow=0x1003885c0, dev=0x555556bbcdc0 <rte_eth_devices>)
    at /home/bganne/src/dpdk/drivers/net/mlx5/mlx5_flow.c:3490
#6  flow_create_split_metadata (error=0x7fffffffdca0, external=false, actions=0x7fffffffdce0, items=0x7fffffffdcc0, attr=0x7fffffffbb88, prefix_layers=0, flow=0x1003885c0, dev=0x555556bbcdc0 <rte_eth_devices>)
    at /home/bganne/src/dpdk/drivers/net/mlx5/mlx5_flow.c:3865
#7  flow_create_split_meter (error=0x7fffffffdca0, external=false, actions=0x7fffffffdce0, items=<optimized out>, attr=0x7fffffffdc94, flow=0x1003885c0, dev=0x555556bbcdc0 <rte_eth_devices>)
    at /home/bganne/src/dpdk/drivers/net/mlx5/mlx5_flow.c:4121
#8  flow_create_split_outer (error=0x7fffffffdca0, external=false, actions=0x7fffffffdce0, items=<optimized out>, attr=0x7fffffffdc94, flow=0x1003885c0, dev=0x555556bbcdc0 <rte_eth_devices>)
    at /home/bganne/src/dpdk/drivers/net/mlx5/mlx5_flow.c:4178
#9  flow_list_create (dev=dev@entry=0x555556bbcdc0 <rte_eth_devices>, list=list@entry=0x0, attr=attr@entry=0x7fffffffdc94, items=items@entry=0x7fffffffdcc0, actions=actions@entry=0x7fffffffdce0, external=external@entry=false, error=0x7fffffffdca0)
    at /home/bganne/src/dpdk/drivers/net/mlx5/mlx5_flow.c:4306
#10 0x0000555555df8587 in mlx5_flow_discover_mreg_c (dev=dev@entry=0x555556bbcdc0 <rte_eth_devices>)
    at /home/bganne/src/dpdk/drivers/net/mlx5/mlx5_flow.c:5747
#11 0x0000555555d692a6 in mlx5_dev_spawn (config=..., spawn=0x1003e9e00, dpdk_dev=0x555556dd6fe0)
    at /home/bganne/src/dpdk/drivers/net/mlx5/mlx5.c:2763
#12 mlx5_pci_probe (pci_drv=<optimized out>, pci_dev=<optimized out>)
    at /home/bganne/src/dpdk/drivers/net/mlx5/mlx5.c:3363
#13 0x0000555555a411c8 in pci_probe_all_drivers ()
#14 0x0000555555a412f8 in rte_pci_probe ()
#15 0x0000555555a083da in rte_bus_probe ()
#16 0x00005555559f204d in rte_eal_init ()
#17 0x00005555556a0d45 in main ()

netdev-offload-dpdk

https://github.com/Mellanox/OVS/blob/master/lib/netdev-offload-dpdk.c

const struct netdev_flow_api netdev_offload_dpdk = {
    .type = "dpdk_flow_api",
    .flow_put = netdev_offload_dpdk_flow_put,
    .flow_del = netdev_offload_dpdk_flow_del,
    .init_flow_api = netdev_offload_dpdk_init_flow_api,
};

netdev_offload_tc

const struct netdev_flow_api netdev_offload_tc = {
    .type = "linux_tc",
    .flow_flush = netdev_tc_flow_flush,
    .flow_dump_create = netdev_tc_flow_dump_create,
    .flow_dump_destroy = netdev_tc_flow_dump_destroy,
    .flow_dump_next = netdev_tc_flow_dump_next,
    .flow_put = netdev_tc_flow_put,
    .flow_get = netdev_tc_flow_get,
    .flow_del = netdev_tc_flow_del,
    .init_flow_api = netdev_tc_init_flow_api,
};

Call chain from flow_put down to the offload-policy flag:

netdev_tc_flow_put
    tc_replace_flower
        nl_msg_put_flower_options
            tc_get_tc_cls_policy

static int
tc_get_tc_cls_policy(enum tc_offload_policy policy)
{
    if (policy == TC_POLICY_SKIP_HW) {
        return TCA_CLS_FLAGS_SKIP_HW;
    } else if (policy == TC_POLICY_SKIP_SW) {
        return TCA_CLS_FLAGS_SKIP_SW;
    }

    return 0;
}

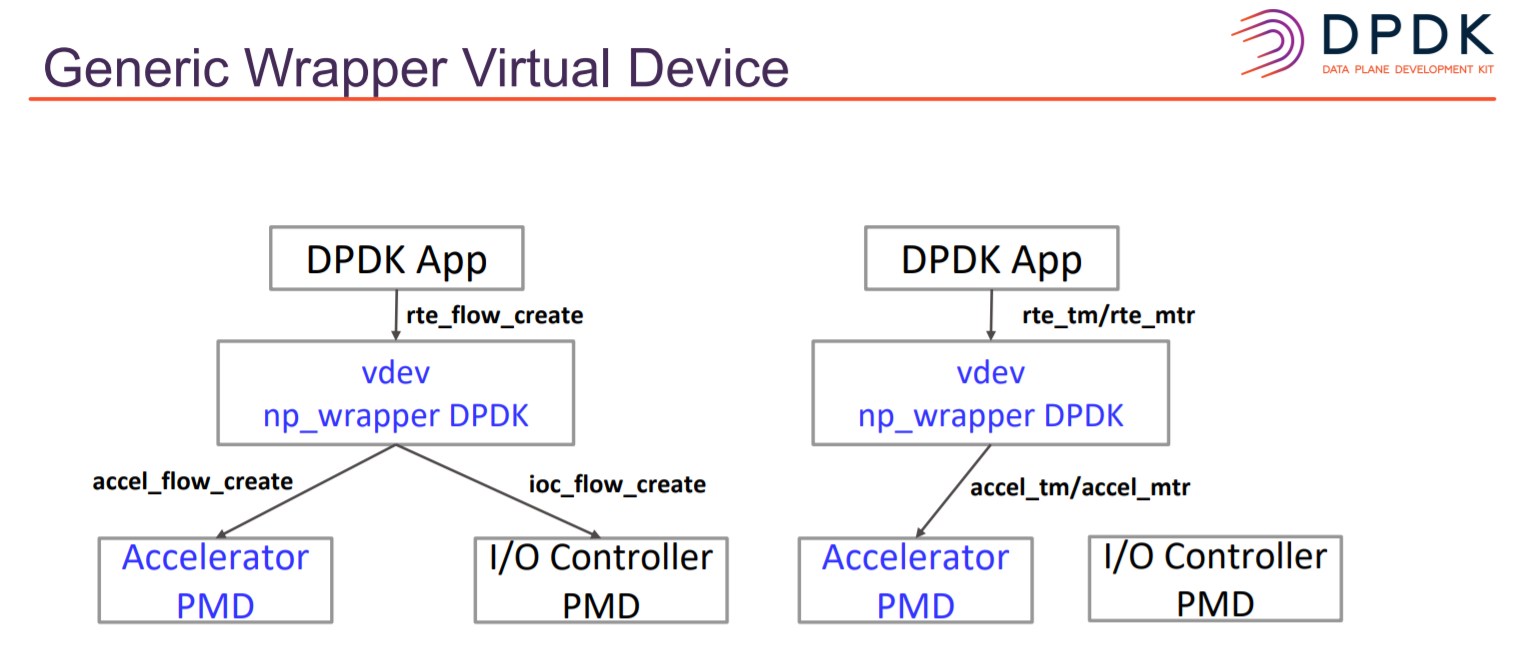

Introduction to the Generic Flow API

Classification

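Classification means that, on receive, the NIC steers packets matching a given rule into a specified queue. A NIC usually supports one or more classification features. The Intel 700 series, for example, supports MAC/VLAN filters, Ethertype filters, cloud filters, flow director, and others. Different NICs may support different filter types: the Intel 82599 series supports n-tuple, L2_tunnel, flow director, and so on. (The source article tabulates filter support per DPDK driver at this point; the table is not reproduced here.) Even though both the Intel 82599 series and the Intel 700 series support flow director, they implement it differently. So how does DPDK support the filters of such different NICs?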

The existing flow API

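DPDK defines the filter types of all NICs together with the basic attributes of each filter, and exposes matching interfaces to applications, so users are expected to understand the filter attributes. Taking flow director as an example, two of its data structures are: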

/**
 * A structure used to contain extend input of flow
 */
struct rte_eth_fdir_flow_ext {
    uint16_t vlan_tci;
    uint8_t flexbytes[RTE_ETH_FDIR_MAX_FLEXLEN];
    /**< It is filled by the flexible payload to match. */
    uint8_t is_vf;   /**< 1 for VF, 0 for port dev */
    uint16_t dst_id; /**< VF ID, available when is_vf is 1*/
};

/**
 * A structure used to define the input for a flow director filter entry
 */
struct rte_eth_fdir_input {
    uint16_t flow_type;
    union rte_eth_fdir_flow flow;
    /**< Flow fields to match, dependent on flow_type */
    struct rte_eth_fdir_flow_ext flow_ext;
    /**< Additional fields to match */
};

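When adding a flow director rule, the user has to fill in the information above. However, not every NIC cares about flow_type and is_vf; the Intel 82599 series, for instance, needs neither. Other filter types have similar issues.

As this shows, the existing scheme has several shortcomings. First, DPDK abstracts one unified set of attributes for all NICs, yet some attributes are meaningful for only one NIC. Second, as DPDK supports more NICs it has to define more filter types, and every upgrade to a NIC's filter capabilities requires matching changes in DPDK, which easily breaks the API/ABI.

From the application's point of view the existing scheme is also inconvenient, which makes the API hard to use: optional or defaultable attributes confuse users; attributes specific to a single NIC are often inserted ad hoc into a filter type; and the design is complex and lacks detailed documentation.

For these reasons, a generic flow API is essential.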

Generic flow API

DPDK v17.02 introduced the generic flow API, which abstracts a flow rule into two parts: a pattern and actions.

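A pattern consists of a number of items. Items are mostly protocol related and cover ETH, IPV4, IPV6, UDP, TCP, VXLAN, and so on; they also include marker items such as PF, VF, and END. The item types DPDK currently supports are defined in enum rte_flow_item_type in rte_flow.h. When describing an item you can attach a spec and a mask to tell the driver which fields need to match. For example, in a flow rule for Ethernet packets, an ETH item with a full mask describes an exact match on the layer-2 destination address 11:22:33:44:55:66.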
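To make the spec/mask idea concrete, here is a minimal sketch in plain C. It deliberately does not use the DPDK headers: struct eth_item, eth_exact_dst, and eth_dst_matches() are hypothetical stand-ins (in DPDK the corresponding types are struct rte_flow_item and struct rte_flow_item_eth). The sketch assumes the usual mask semantics, where only the bits set in the mask are compared, so an all-ones mask yields an exact match on 11:22:33:44:55:66.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define MAC_LEN 6

/* Hypothetical stand-in for an ETH pattern item: a spec plus a mask. */
struct eth_item {
    uint8_t dst_spec[MAC_LEN]; /* Value to match. */
    uint8_t dst_mask[MAC_LEN]; /* Bits of the value that matter. */
};

/* Exact match on destination MAC 11:22:33:44:55:66 (mask is all ones). */
const struct eth_item eth_exact_dst = {
    .dst_spec = { 0x11, 0x22, 0x33, 0x44, 0x55, 0x66 },
    .dst_mask = { 0xff, 0xff, 0xff, 0xff, 0xff, 0xff },
};

/* Return true when every masked bit of dst equals the masked spec. */
bool
eth_dst_matches(const struct eth_item *item, const uint8_t dst[MAC_LEN])
{
    assert(item != NULL && dst != NULL);
    for (size_t i = 0; i < MAC_LEN; i++) {
        if ((dst[i] & item->dst_mask[i]) !=
            (item->dst_spec[i] & item->dst_mask[i]))
            return false;
    }
    return true;
}
```

Clearing bytes of dst_mask would turn the same item into a prefix match, for example on the first three bytes of the address only.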

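Actions describe what a flow rule does, for example QUEUE, DROP, PF, VF, and END. The action types DPDK supports are defined in enum rte_flow_action_type in rte_flow.h. For example, a QUEUE action with index 3 places packets that match the pattern into queue 3.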
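The "send matching packets to queue 3" action can be sketched the same way. Again this is plain C with hypothetical stand-in types (enum action_type, struct action, struct action_queue) rather than DPDK's own struct rte_flow_action and struct rte_flow_action_queue; what it is meant to show is that an action list is an array terminated by an END entry, and that each action carries an optional type-specific configuration.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical stand-ins for enum rte_flow_action_type and friends. */
enum action_type { ACTION_END = 0, ACTION_QUEUE, ACTION_DROP };

struct action_queue {
    uint16_t index; /* Rx queue that receives matching packets. */
};

struct action {
    enum action_type type;
    const void *conf; /* Type-specific configuration, may be NULL. */
};

const struct action_queue queue3 = { .index = 3 };

/* "Put matching packets into queue 3", terminated by an END entry. */
const struct action actions_to_queue3[] = {
    { .type = ACTION_QUEUE, .conf = &queue3 },
    { .type = ACTION_END, .conf = NULL },
};

/* Walk the list up to END; return the first QUEUE index, or -1. */
int
first_queue_index(const struct action *actions)
{
    assert(actions != NULL);
    for (; actions->type != ACTION_END; actions++) {
        if (actions->type == ACTION_QUEUE && actions->conf != NULL)
            return ((const struct action_queue *)actions->conf)->index;
    }
    return -1;
}
```

A list containing only a DROP action followed by END has no queue, so first_queue_index() returns -1 for it.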

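This scheme leaves the complicated job of telling filter types apart to the driver. Users no longer need to know which hardware filter implements a rule, so applications can add or remove flow rules easily.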

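To add a flow rule, the application only needs to call rte_flow_create() with the pattern and actions filled in; to delete one, it only needs to call rte_flow_destroy(). Taking flow director as the example again, the rule is written as a pattern plus actions, which is easier for users to work with.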
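The rule handed to rte_flow_create() boils down to two END-terminated arrays: a pattern and a list of actions. The sketch below models only that shape in plain C. struct item, struct action, udp_pattern, udp_actions, pattern_len(), and actions_len() are all hypothetical names, not DPDK API; the real call additionally takes a port id, rule attributes, and an error structure, and uses the types from rte_flow.h.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical stand-ins; DPDK's real types live in rte_flow.h. */
enum item_type { ITEM_END = 0, ITEM_ETH, ITEM_IPV4, ITEM_UDP };
enum action_type { ACTION_END = 0, ACTION_QUEUE };

struct item {
    enum item_type type;
    const void *spec; /* Values to match; NULL matches anything. */
    const void *mask; /* Bits of spec that matter. */
};

struct action {
    enum action_type type;
    const void *conf; /* Type-specific configuration, may be NULL. */
};

/* A UDP-over-IPv4 rule, in the spirit of a flow director entry. */
const struct item udp_pattern[] = {
    { .type = ITEM_ETH },
    { .type = ITEM_IPV4 },
    { .type = ITEM_UDP },
    { .type = ITEM_END },
};

const struct action udp_actions[] = {
    { .type = ACTION_QUEUE },
    { .type = ACTION_END },
};

/* Count pattern items before the END terminator. */
size_t
pattern_len(const struct item *pattern)
{
    size_t n = 0;

    assert(pattern != NULL);
    while (pattern[n].type != ITEM_END)
        n++;
    return n;
}

/* Count actions before the END terminator. */
size_t
actions_len(const struct action *actions)
{
    size_t n = 0;

    assert(actions != NULL);
    while (actions[n].type != ACTION_END)
        n++;
    return n;
}
```

The items are stacked from the outermost header inwards (ETH, then IPV4, then UDP), and the application never names a hardware filter type; choosing one is left to the driver.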

In summary, the generic flow API is clearly much more convenient.

https://www.sdnlab.com/24697.html

Walkthrough of the DPDK flow filtering sample: a look at NIC flow processing

https://www.sdnlab.com/23216.html

[SPDK/NVMe storage technology analysis] 012 - Source code analysis of user-space ibv_post_send()

https://www.cnblogs.com/vlhn/p/7997457.html

Installing a DPDK development environment for Mellanox NICs on CentOS

https://blog.csdn.net/botao_li/article/details/108075832?utm_medium=distribute.pc_relevant.none-task-blog-baidujs_title-2&spm=1001.2101.3001.4242