gvisor task

https://terassyi.net/posts/2020/04/14/gvisor.html

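The goroutine concurrency model is built on the GMP model. Briefly, G, M and P mean the following:

G: a goroutine. Each goroutine has its own stack and timers. The initial stack is about 2 KB and grows on demand.

M: an abstraction of a kernel thread. It records the kernel thread's stack information; when a goroutine is scheduled onto the thread, the thread runs on that goroutine's own stack.

M.stack→G.stack: the M's PC register points at the function supplied by the G, and execution continues from there.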

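    type m struct {
        /*
            1. The goroutine that holds the scheduling stack; this is a special goroutine.
            2. An ordinary goroutine's stack is a growable stack allocated on the heap, whereas g0's stack is the thread stack of the corresponding M.
            3. All scheduling-related code switches to this goroutine's stack before it runs.
        */
        g0 *g

        curg *g // the G currently bound to this M

        // SP and PC registers, used to save and restore the execution context
        vdsoSP uintptr
        vdsoPC uintptr

        // omitted…
    }

P: the processor, which acts as the scheduler. It schedules goroutines and maintains a local goroutine queue; an M takes goroutines from a P and runs them. The P is also responsible for part of memory management.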
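To make the G/M/P split concrete, here is a minimal sketch that starts many goroutines (Gs) while capping the number of Ps with `runtime.GOMAXPROCS`. The helper name `runAll` is made up for this note; how many Ms the runtime actually creates is up to the scheduler and is not shown.

```go
package main

import (
	"fmt"
	"runtime"
	"sync"
)

// runAll starts n goroutines (Gs) and waits for all of them to finish.
// The runtime multiplexes them onto at most procs Ps.
func runAll(procs, n int) int {
	prev := runtime.GOMAXPROCS(procs) // set the number of Ps
	defer runtime.GOMAXPROCS(prev)    // restore the previous setting

	var (
		wg   sync.WaitGroup
		mu   sync.Mutex
		done int
	)
	for i := 0; i < n; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			mu.Lock()
			done++
			mu.Unlock()
		}()
	}
	wg.Wait()
	return done
}

func main() {
	fmt.Println("goroutines completed:", runAll(2, 1000))
}
```

Far more Gs than Ps can exist at once; each G only needs its own small stack, which is why the sentry can afford one goroutine per task.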

Fork

pkg/sentry/syscalls/linux/sys_thread.go

    pkg/sentry/kernel/task_exec.go:203: t.fdTable = t.fdTable.Fork(t)
    pkg/sentry/kernel/task_clone.go:229: image, err := t.image.Fork(t, t.k, !opts.NewAddressSpace)
    pkg/sentry/kernel/task_clone.go:257: fdTable = t.fdTable.Fork(t)
    pkg/sentry/kernel/task_clone.go:531: t.fdTable = oldFDTable.Fork(t)
    pkg/sentry/syscalls/linux/sys_thread.go:232:func Fork(t *kernel.Task, args arch.SyscallArguments) (uintptr, *kernel.SyscallControl, error) {

    // Fork implements Linux syscall fork(2).
    func Fork(t *kernel.Task, args arch.SyscallArguments) (uintptr, *kernel.SyscallControl, error) {
        // "A call to fork() is equivalent to a call to clone(2) specifying flags
        // as just SIGCHLD." - fork(2)
        return clone(t, int(syscall.SIGCHLD), 0, 0, 0, 0)
    }

    // clone is used by Clone, Fork, and VFork.
    func clone(t *kernel.Task, flags int, stack usermem.Addr, parentTID usermem.Addr, childTID usermem.Addr, tls usermem.Addr) (uintptr, *kernel.SyscallControl, error) {
        opts := kernel.CloneOptions{
            SharingOptions: kernel.SharingOptions{
                NewAddressSpace:     flags&linux.CLONE_VM == 0,
                NewSignalHandlers:   flags&linux.CLONE_SIGHAND == 0,
                NewThreadGroup:      flags&linux.CLONE_THREAD == 0,
                TerminationSignal:   linux.Signal(flags & exitSignalMask),
                NewPIDNamespace:     flags&linux.CLONE_NEWPID == linux.CLONE_NEWPID,
                NewUserNamespace:    flags&linux.CLONE_NEWUSER == linux.CLONE_NEWUSER,
                NewNetworkNamespace: flags&linux.CLONE_NEWNET == linux.CLONE_NEWNET,
                NewFiles:            flags&linux.CLONE_FILES == 0,
                NewFSContext:        flags&linux.CLONE_FS == 0,
            },
        }
    }

The clone excerpt above is cut short. The fragment below appears to be the tail of the execve path, which loads the new TaskImage and then calls t.Execve:

        if wd != nil {
            defer wd.DecRef(t)
        }

        // Load the new TaskImage.
        remainingTraversals := uint(linux.MaxSymlinkTraversals)
        loadArgs := loader.LoadArgs{
            Opener:              fsbridge.NewFSLookup(t.MountNamespace(), root, wd),
            RemainingTraversals: &remainingTraversals,
            ResolveFinal:        resolveFinal,
            Filename:            pathname,
            File:                executable,
            CloseOnExec:         closeOnExec,
            Argv:                argv,
            Envv:                envv,
            Features:            t.Arch().FeatureSet(),
        }

        image, se := t.Kernel().LoadTaskImage(t, loadArgs)
        if se != nil {
            return 0, nil, se.ToError()
        }

        ctrl, err := t.Execve(image)
        return 0, ctrl, err
    }
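The flag decoding done by clone can be tried out in isolation. The sketch below copies the `flags&X == 0` tests from the excerpt into a standalone program. The constant values are the usual Linux ones from `<linux/sched.h>` (SIGCHLD is 17 on x86-64 and arm64); the `sharing` struct and `decode` function are hypothetical stand-ins, not gVisor types.

```go
package main

import "fmt"

// Linux clone(2) flag values.
const (
	CLONE_VM      = 0x00000100
	CLONE_FS      = 0x00000200
	CLONE_FILES   = 0x00000400
	CLONE_SIGHAND = 0x00000800
	CLONE_THREAD  = 0x00010000
	CLONE_NEWPID  = 0x20000000

	SIGCHLD        = 17   // x86-64 and arm64
	exitSignalMask = 0xff // low byte of flags carries the exit signal
)

// sharing is a hypothetical, reduced stand-in for kernel.SharingOptions.
type sharing struct {
	NewAddressSpace   bool
	NewSignalHandlers bool
	NewThreadGroup    bool
	NewFiles          bool
	NewFSContext      bool
	NewPIDNamespace   bool
	TerminationSignal int
}

// decode applies the same bit tests as the clone excerpt: a resource is
// "new" for the child when the corresponding sharing flag is absent.
func decode(flags int) sharing {
	return sharing{
		NewAddressSpace:   flags&CLONE_VM == 0,
		NewSignalHandlers: flags&CLONE_SIGHAND == 0,
		NewThreadGroup:    flags&CLONE_THREAD == 0,
		NewFiles:          flags&CLONE_FILES == 0,
		NewFSContext:      flags&CLONE_FS == 0,
		NewPIDNamespace:   flags&CLONE_NEWPID == CLONE_NEWPID,
		TerminationSignal: flags & exitSignalMask,
	}
}

func main() {
	// fork(2): flags are just SIGCHLD, so everything is copied.
	fmt.Printf("fork:   %+v\n", decode(SIGCHLD))
	// A typical thread creation shares everything with the parent.
	threadFlags := CLONE_VM | CLONE_FS | CLONE_FILES | CLONE_SIGHAND | CLONE_THREAD
	fmt.Printf("thread: %+v\n", decode(threadFlags))
}
```

With only SIGCHLD set, every `New*` field except `NewPIDNamespace` comes out true, which is why fork gives the child its own address space and file table.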

task Start

Data structures

Task is like task_struct.

    type Task struct {
        ...
        p platform.Context `state:"nosave"`
        k *Kernel
        tc TaskContext
        ...
    }

TaskContext contains MemoryManager, which is like mm_struct. MemoryManager contains platform.AddressSpace, an interface implemented by kvm.addressSpace, which in turn contains pagetables.PageTables.

There are two kernel structs, kernel.Kernel and ring0.Kernel. kernel.Kernel contains most of the kernel data structures, while ring0.Kernel contains only PageTables *pagetables.PageTables and globalIDT idt64.
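The containment chain described above can be sketched with toy types. Every type and field here is a simplified, hypothetical stand-in named after the gVisor type it imitates; the only point is the walk from a task down to its page tables and the type assertion on the interface, the same move as `as.(*addressSpace)` in the KVM context code.

```go
package main

import "fmt"

// AddressSpace stands in for the platform.AddressSpace interface.
type AddressSpace interface {
	Name() string
}

// pageTables stands in for pagetables.PageTables.
type pageTables struct{ root uintptr }

// kvmAddressSpace stands in for kvm.addressSpace.
type kvmAddressSpace struct{ pageTables *pageTables }

func (*kvmAddressSpace) Name() string { return "kvm" }

// otherAddressSpace stands in for any non-KVM implementation.
type otherAddressSpace struct{}

func (otherAddressSpace) Name() string { return "other" }

// MemoryManager, TaskContext and Task mirror the nesting in the notes.
type MemoryManager struct{ as AddressSpace }
type TaskContext struct{ mm *MemoryManager }
type Task struct{ tc TaskContext }

// PageTableRoot walks Task -> TaskContext -> MemoryManager -> AddressSpace
// and returns the page table root if the address space is the KVM one.
func PageTableRoot(t *Task) (uintptr, bool) {
	as, ok := t.tc.mm.as.(*kvmAddressSpace)
	if !ok {
		return 0, false
	}
	return as.pageTables.root, true
}

func main() {
	t := &Task{tc: TaskContext{mm: &MemoryManager{
		as: &kvmAddressSpace{pageTables: &pageTables{root: 0x1000}},
	}}}
	root, ok := PageTableRoot(t)
	fmt.Printf("root=%#x ok=%v\n", root, ok)
}
```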

    // Start starts the task goroutine. Start must be called exactly once for each
    // task returned by NewTask.
    //
    // 'tid' must be the task's TID in the root PID namespace and it's used for
    // debugging purposes only (set as parameter to Task.run to make it visible
    // in stack dumps).
    func (t *Task) Start(tid ThreadID) {
        // If the task was restored, it may be "starting" after having already exited.
        if t.runState == nil {
            return
        }
        t.goroutineStopped.Add(1)
        t.tg.liveGoroutines.Add(1)
        t.tg.pidns.owner.liveGoroutines.Add(1)
        t.tg.pidns.owner.runningGoroutines.Add(1)

        // Task is now running in system mode.
        t.accountTaskGoroutineLeave(TaskGoroutineNonexistent)

        // Use the task's TID in the root PID namespace to make it visible in stack dumps.
        go t.run(uintptr(tid)) // S/R-SAFE: synchronizes with saving through stops
    }
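Task.Start follows a common Go pattern: skip tasks that have nothing to run, bump the live-goroutine counters, then launch one goroutine per task. A minimal sketch of that shape follows, with hypothetical `task` and `kernel` types and a single `sync.WaitGroup` standing in for the several counters in the real code.

```go
package main

import (
	"fmt"
	"sync"
)

// task is a hypothetical stand-in for kernel.Task.
type task struct {
	tid      int
	runState func(tid int) // nil means the task has already exited
}

// kernel is a hypothetical stand-in that only tracks live task goroutines.
type kernel struct {
	live sync.WaitGroup
	mu   sync.Mutex
	ran  []int
}

// start mirrors the shape of Task.Start: skip exited tasks, account for
// the new goroutine, then launch it.
func (k *kernel) start(t *task) bool {
	if t.runState == nil {
		return false
	}
	k.live.Add(1)
	go func() {
		defer k.live.Done()
		t.runState(t.tid)
	}()
	return true
}

// record notes that a task body ran.
func (k *kernel) record(tid int) {
	k.mu.Lock()
	k.ran = append(k.ran, tid)
	k.mu.Unlock()
}

func main() {
	k := &kernel{}
	tasks := []*task{
		{tid: 1, runState: k.record},
		{tid: 2, runState: nil}, // restored after exit: never started
		{tid: 3, runState: k.record},
	}
	started := 0
	for _, t := range tasks {
		if k.start(t) {
			started++
		}
	}
	k.live.Wait()
	fmt.Println("started:", started, "ran:", len(k.ran))
}
```

Incrementing the counter before the `go` statement, as Start does, means a waiter can never observe a started task that is not yet counted.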

    // StartProcess starts running a process that was created with CreateProcess.
    func (k *Kernel) StartProcess(tg *ThreadGroup) {
        t := tg.Leader()
        tid := k.tasks.Root.IDOfTask(t)
        t.Start(tid)
    }

    // Start starts execution of all tasks in k.
    //
    // Preconditions: Start may be called exactly once.
    func (k *Kernel) Start() error {
        k.extMu.Lock()
        defer k.extMu.Unlock()

        if k.globalInit == nil {
            return fmt.Errorf("kernel contains no tasks")
        }
        if k.started {
            return fmt.Errorf("kernel already started")
        }

        k.started = true
        k.cpuClockTicker = ktime.NewTimer(k.monotonicClock, newKernelCPUClockTicker(k))
        k.cpuClockTicker.Swap(ktime.Setting{
            Enabled: true,
            Period:  linux.ClockTick,
        })
        // If k was created by LoadKernelFrom, timers were stopped during
        // Kernel.SaveTo and need to be resumed. If k was created by NewKernel,
        // this is a no-op.
        k.resumeTimeLocked(k.SupervisorContext())
        // Start task goroutines.
        k.tasks.mu.RLock()
        defer k.tasks.mu.RUnlock()
        for t, tid := range k.tasks.Root.tids {
            t.Start(tid)
        }
        return nil
    }

startContainer

    // startContainer starts a child container. It returns the thread group ID of
    // the newly created process. Used FDs are either closed or released. It's safe
    // for the caller to close any remaining files upon return.
    func (l *Loader) startContainer(spec *specs.Spec, conf *config.Config, cid string, stdioFDs, goferFDs []*fd.FD) error {
        // Create capabilities.
        caps, err := specutils.Capabilities(conf.EnableRaw, spec.Process.Capabilities)
        if err != nil {
            return fmt.Errorf("creating capabilities: %v", err)
        }

        l.mu.Lock()
        defer l.mu.Unlock()

        ep := l.processes[execID{cid: cid}]
        if ep == nil {
            return fmt.Errorf("trying to start a deleted container %q", cid)
        }

        // Convert the spec's additional GIDs to KGIDs.
        extraKGIDs := make([]auth.KGID, 0, len(spec.Process.User.AdditionalGids))
        for _, GID := range spec.Process.User.AdditionalGids {
            extraKGIDs = append(extraKGIDs, auth.KGID(GID))
        }

        // Create credentials. We reuse the root user namespace because the
        // sentry currently supports only 1 mount namespace, which is tied to a
        // single user namespace. Thus we must run in the same user namespace
        // to access mounts.
        creds := auth.NewUserCredentials(
            auth.KUID(spec.Process.User.UID),
            auth.KGID(spec.Process.User.GID),
            extraKGIDs,
            caps,
            l.k.RootUserNamespace())

        var pidns *kernel.PIDNamespace
        if ns, ok := specutils.GetNS(specs.PIDNamespace, spec); ok {
            if ns.Path != "" {
                for _, p := range l.processes {
                    if ns.Path == p.pidnsPath {
                        pidns = p.tg.PIDNamespace()
                        break
                    }
                }
            }
            if pidns == nil {
                pidns = l.k.RootPIDNamespace().NewChild(l.k.RootUserNamespace())
            }
            ep.pidnsPath = ns.Path
        } else {
            pidns = l.k.RootPIDNamespace()
        }

        info := &containerInfo{
            conf:     conf,
            spec:     spec,
            goferFDs: goferFDs,
        }
        info.procArgs, err = createProcessArgs(cid, spec, creds, l.k, pidns)
        if err != nil {
            return fmt.Errorf("creating new process: %v", err)
        }

        // Use stdios or TTY depending on the spec configuration.
        if spec.Process.Terminal {
            if len(stdioFDs) > 0 {
                return fmt.Errorf("using TTY, stdios not expected: %v", stdioFDs)
            }
            if ep.hostTTY == nil {
                return fmt.Errorf("terminal enabled but no TTY provided. Did you set --console-socket on create?")
            }
            info.stdioFDs = []*fd.FD{ep.hostTTY, ep.hostTTY, ep.hostTTY}
            ep.hostTTY = nil
        } else {
            info.stdioFDs = stdioFDs
        }

        ep.tg, ep.tty, ep.ttyVFS2, err = l.createContainerProcess(false, cid, info)
        if err != nil {
            return err
        }
        l.k.StartProcess(ep.tg)
        return nil
    }

    //go:nosplit
    func (c *CPU) SwitchToUser(switchOpts SwitchOpts) (vector Vector) {
        userCR3 := switchOpts.PageTables.CR3(!switchOpts.Flush, switchOpts.UserPCID)
        c.kernelCR3 = uintptr(c.kernel.PageTables.CR3(true, switchOpts.KernelPCID))

        // Sanitize registers.
        regs := switchOpts.Registers
        regs.Eflags &= ^uint64(UserFlagsClear)
        regs.Eflags |= UserFlagsSet
        regs.Cs = uint64(Ucode64) // Required for iret.
        regs.Ss = uint64(Udata)   // Ditto.

        // Perform the switch.
        swapgs()                                         // GS will be swapped on return.
        WriteFS(uintptr(regs.Fs_base))                   // escapes: no. Set application FS.
        WriteGS(uintptr(regs.Gs_base))                   // escapes: no. Set application GS.
        LoadFloatingPoint(switchOpts.FloatingPointState) // escapes: no. Copy in floating point.
        if switchOpts.FullRestore {
            vector = iret(c, regs, uintptr(userCR3))
        } else {
            vector = sysret(c, regs, uintptr(userCR3))
        }
        SaveFloatingPoint(switchOpts.FloatingPointState) // escapes: no. Copy out floating point.
        WriteFS(uintptr(c.registers.Fs_base))            // escapes: no. Restore kernel FS.
        return
    }
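The "sanitize registers" step is plain bit masking on RFLAGS. The sketch below uses the architectural bit positions (reserved bit 1, IF bit 9, IOPL bits 12-13, NT bit 14). Which bits gVisor actually puts into `UserFlagsSet` and `UserFlagsClear` is an assumption here, chosen only to illustrate the two-step clear-then-set.

```go
package main

import "fmt"

// Architectural RFLAGS bits on x86-64.
const (
	flagCF       = 1 << 0  // carry flag, left alone by sanitizing
	flagReserved = 1 << 1  // always reads as 1
	flagIF       = 1 << 9  // interrupt enable
	flagIOPL     = 3 << 12 // I/O privilege level
	flagNT       = 1 << 14 // nested task
)

// Assumed mask contents; the real values live in the ring0 package.
const (
	userFlagsSet   = flagReserved | flagIF
	userFlagsClear = flagNT | flagIOPL
)

// sanitize applies the same two steps as SwitchToUser: clear the bits
// user code must never hold, then force the bits it must always hold.
func sanitize(eflags uint64) uint64 {
	eflags &= ^uint64(userFlagsClear)
	eflags |= userFlagsSet
	return eflags
}

func main() {
	fmt.Printf("%#x\n", sanitize(flagIOPL|flagNT|flagCF))
}
```

Whatever the application put in its saved flags, it can never re-enter user mode with a raised I/O privilege level or with interrupts disabled.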

pkg/sentry/kernel/task_run.go:271: info, at, err := t.p.Switch(t, t.MemoryManager(), t.Arch(), t.rseqCPU)

        info, at, err := t.p.Switch(t, t.MemoryManager(), t.Arch(), t.rseqCPU)
        t.accountTaskGoroutineLeave(TaskGoroutineRunningApp)
        region.End()

        if clearSinglestep {
            t.Arch().ClearSingleStep()
        }

        switch err {
        case nil:
            // Handle application system call.
            return t.doSyscall()
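The `return t.doSyscall()` above hands back the next state of the task loop: each state's execute method runs and returns its successor, and the task goroutine loops until it gets nil. The sketch below shows that pattern with hypothetical types (`runState`, `runApp`, `runSyscall`, and a toy `task` with a queue of pending syscalls); it is not gVisor's actual state set.

```go
package main

import (
	"fmt"
	"strings"
)

// runState is one state of the task loop; execute returns the next
// state, or nil when the task should stop.
type runState interface {
	execute(t *task) runState
}

// task is a toy stand-in: pending is the list of syscalls the
// "application" will issue, trace records what the loop did.
type task struct {
	pending []string
	trace   []string
}

type runApp struct{}
type runSyscall struct{ name string }

// runApp "runs the application" until it makes its next syscall.
func (runApp) execute(t *task) runState {
	if len(t.pending) == 0 {
		t.trace = append(t.trace, "exit")
		return nil
	}
	name := t.pending[0]
	t.pending = t.pending[1:]
	t.trace = append(t.trace, "app")
	return runSyscall{name: name}
}

// runSyscall handles one syscall and goes back to running the app.
func (s runSyscall) execute(t *task) runState {
	t.trace = append(t.trace, "syscall:"+s.name)
	return runApp{}
}

// run drives the state machine until a state returns nil.
func (t *task) run() {
	var st runState = runApp{}
	for st != nil {
		st = st.execute(t)
	}
}

func main() {
	t := &task{pending: []string{"getpid", "write"}}
	t.run()
	fmt.Println(strings.Join(t.trace, " -> "))
}
```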

pkg/sentry/platform/kvm/context.go Switch

    // Switch runs the provided context in the given address space.
    func (c *context) Switch(ctx pkgcontext.Context, mm platform.MemoryManager, ac arch.Context, _ int32) (*arch.SignalInfo, usermem.AccessType, error) {
        as := mm.AddressSpace()
        localAS := as.(*addressSpace)

        // Grab a vCPU.
        cpu := c.machine.Get()

        // Enable interrupts (i.e. calls to vCPU.Notify).
        if !c.interrupt.Enable(cpu) {
            c.machine.Put(cpu) // Already preempted.
            return nil, usermem.NoAccess, platform.ErrContextInterrupt
        }

        // Set the active address space.
        //
        // This must be done prior to the call to Touch below. If the address
        // space is invalidated between this line and the call below, we will
        // flag on entry anyways. When the active address space below is
        // cleared, it indicates that we don't need an explicit interrupt and
        // that the flush can occur naturally on the next user entry.
        cpu.active.set(localAS)

        // Prepare switch options.
        switchOpts := ring0.SwitchOpts{
            Registers:          &ac.StateData().Regs,
            FloatingPointState: (*byte)(ac.FloatingPointData()),
            PageTables:         localAS.pageTables,
            Flush:              localAS.Touch(cpu),
            FullRestore:        ac.FullRestore(),
        }

        // Take the blue pill.
        at, err := cpu.SwitchToUser(switchOpts, &c.info)

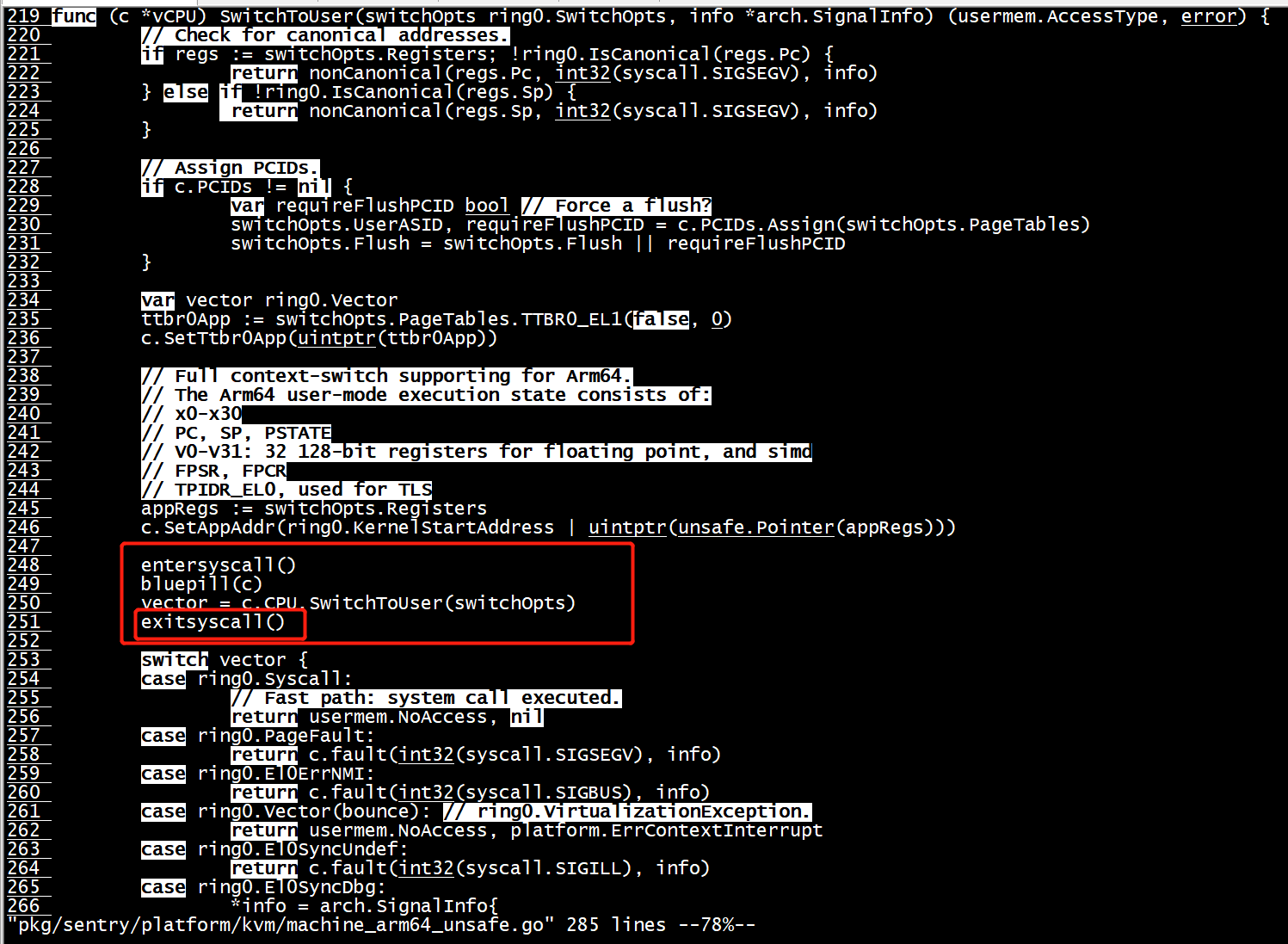

SwitchToUser

pkg/sentry/platform/kvm/machine_amd64.go

    // SwitchToUser unpacks architectural-details.
    func (c *vCPU) SwitchToUser(switchOpts ring0.SwitchOpts, info *arch.SignalInfo) (usermem.AccessType, error) {
        // Check for canonical addresses.
        if regs := switchOpts.Registers; !ring0.IsCanonical(regs.Rip) {
            return nonCanonical(regs.Rip, int32(syscall.SIGSEGV), info)
        } else if !ring0.IsCanonical(regs.Rsp) {
            return nonCanonical(regs.Rsp, int32(syscall.SIGBUS), info)
        } else if !ring0.IsCanonical(regs.Fs_base) {
            return nonCanonical(regs.Fs_base, int32(syscall.SIGBUS), info)
        } else if !ring0.IsCanonical(regs.Gs_base) {
            return nonCanonical(regs.Gs_base, int32(syscall.SIGBUS), info)
        }

        // Assign PCIDs.
        if c.PCIDs != nil {
            var requireFlushPCID bool // Force a flush?
            switchOpts.UserPCID, requireFlushPCID = c.PCIDs.Assign(switchOpts.PageTables)
            switchOpts.KernelPCID = fixedKernelPCID
            switchOpts.Flush = switchOpts.Flush || requireFlushPCID
        }

        // See below.
        var vector ring0.Vector

        // Past this point, stack growth can cause system calls (and a break
        // from guest mode). So we need to ensure that between the bluepill
        // call here and the switch call immediately below, no additional
        // allocations occur.
        entersyscall()
        bluepill(c)
        vector = c.CPU.SwitchToUser(switchOpts)
        exitsyscall()
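The canonical-address check at the top of this function guards the registers that would fault if loaded with a bad value. A minimal sketch of such a check, assuming 48-bit virtual addresses (4-level paging), where bits 63..47 must all be equal; `isCanonical` is a local helper written for this note, not the ring0 function itself.

```go
package main

import "fmt"

// isCanonical reports whether addr is canonical under 48-bit virtual
// addressing: either in the lower half (bits 63..47 all zero) or in the
// upper half (bits 63..47 all one).
func isCanonical(addr uint64) bool {
	return addr <= 0x00007fffffffffff || addr >= 0xffff800000000000
}

func main() {
	for _, a := range []uint64{
		0x0000000000400000, // typical user text address
		0x00007fffffffffff, // top of the lower half
		0x0000800000000000, // first non-canonical address
		0xffff800000000000, // bottom of the upper half
	} {
		fmt.Printf("%#018x canonical=%v\n", a, isCanonical(a))
	}
}
```

Rejecting such values before entering guest mode lets the sentry turn them into a signal for the application (SIGSEGV or SIGBUS in the code above) rather than taking a fault inside ring 0.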

    gvisor.googlesource.com/gvisor/pkg/sentry/platform/kvm.(*vCPU).SwitchToUser(0xc0003c6000, 0xc000690a20, 0xc0008d5900, 0xc000ada090, 0x100070000, 0xc000512848, 0xe4, 0x1, 0x7f931efc4860)
        pkg/sentry/platform/kvm/machine_amd64.go:235 +0xbe fp=0xc000715da8 sp=0xc000715d38 pc=0x95c94e
    gvisor.googlesource.com/gvisor/pkg/sentry/platform/kvm.(*context).Switch(0xc000512840, 0xd1f260, 0xc000578480, 0xd2d6a0, 0xc000690a20, 0x7f93ffffffff, 0xc000512848, 0x0, 0x0, 0x0)
        pkg/sentry/platform/kvm/context.go:71 +0x1fd fp=0xc000715e68 sp=0xc000715da8 pc=0x951fdd
    gvisor.googlesource.com/gvisor/pkg/sentry/kernel.(*runApp).execute(0x0, 0xc000aeca80, 0xd12980, 0x0)
        pkg/sentry/kernel/task_run.go:205 +0x348 fp=0xc000715f88 sp=0xc000715e68 pc=0x738d68
    gvisor.googlesource.com/gvisor/pkg/sentry/kernel.(*Task).run(0xc000aeca80, 0x39)
        pkg/sentry/kernel/task_run.go:91 +0x149 fp=0xc000715fd0 sp=0xc000715f88 pc=0x7386a9
    runtime.goexit()
        bazel-out/k8-fastbuild/bin/external/io_bazel_rules_go/linux_amd64_pure_stripped/stdlib%/src/runtime/asm_amd64.s:1333 +0x1 fp=0xc000715fd8 sp=0xc000715fd0 pc=0x457e31
    created by gvisor.googlesource.com/gvisor/pkg/sentry/kernel.(*Task).Start
        pkg/sentry/kernel/task_start.go:279 +0xfe

Almost, except in guest mode, the sentry always executes in ring 0.

You can see the core flow here:

https://github.com/google/gvisor/blob/master/pkg/sentry/platform/ring0/kernel_amd64.go#L215-L231

The sentry is normally mapped at a normal userspace address which cannot be mapped into application address spaces (since it would conflict with application mappings). So there is a sentry page table with the normal mappings, plus a mirror of relevant sentry mappings in the kernel range (bit 63 set) in all application page tables. This mirrored copy is what executes between jumpToKernel() and jumpToUser().

iret()/sysret() save RSP/RBP so that the syscall handler (sysenter()) can restore them and then "return" to the call site in SwitchToUser.

The full execution path looks like:

kernel.runApp.execute -> kernel.Task.p.Switch (kvm.context.Switch) -> kvm.vCPU.SwitchToUser -> ring0.CPU.SwitchToUser

kernel.runApp is part of the core task lifecycle state machine which handles application syscalls (eventually calling one of the handlers

pkg/sentry/platform/ring0/kernel_arm64.go

    func (c *CPU) SwitchToUser(switchOpts SwitchOpts) (vector Vector) {
        storeAppASID(uintptr(switchOpts.UserASID))
        if switchOpts.Flush {
            FlushTlbByASID(uintptr(switchOpts.UserASID))
        }

        regs := switchOpts.Registers

        regs.Pstate &= ^uint64(PsrFlagsClear)
        regs.Pstate |= UserFlagsSet

        EnableVFP()
        LoadFloatingPoint(switchOpts.FloatingPointState)

        kernelExitToEl0()

        SaveFloatingPoint(switchOpts.FloatingPointState)
        DisableVFP()

        vector = c.vecCode

        return
    }

MSR_LSTAR

    //go:nosplit
    func start(c *CPU) {
        // Save per-cpu & FS segment.
        WriteGS(kernelAddr(c.kernelEntry))
        WriteFS(uintptr(c.registers.Fs_base))

        // Initialize floating point.
        //
        // Note that on skylake, the valid XCR0 mask reported seems to be 0xff.
        // This breaks down as:
        //
        //  bit0   - x87
        //  bit1   - SSE
        //  bit2   - AVX
        //  bit3-4 - MPX
        //  bit5-7 - AVX512
        //
        // For some reason, enabled MPX & AVX512 on platforms that report them
        // seems to be cause a general protection fault. (Maybe there are some
        // virtualization issues and these aren't exported to the guest cpuid.)
        // This needs further investigation, but we can limit the floating
        // point operations to x87, SSE & AVX for now.
        fninit()
        xsetbv(0, validXCR0Mask&0x7)

        // Set the syscall target.
        wrmsr(_MSR_LSTAR, kernelFunc(sysenter))
        wrmsr(_MSR_SYSCALL_MASK, KernelFlagsClear|_RFLAGS_DF)

        // NOTE: This depends on having the 64-bit segments immediately
        // following the 32-bit user segments. This is simply the way the
        // sysret instruction is designed to work (it assumes they follow).
        wrmsr(_MSR_STAR, uintptr(uint64(Kcode)<<32|uint64(Ucode32)<<48))
        wrmsr(_MSR_CSTAR, kernelFunc(sysenter))
    }
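The NOTE about segment ordering follows from how the CPU derives selectors from the STAR MSR: SYSCALL takes CS from bits 47:32 (with the low two bits masked off) and SS from that field plus 8, while 64-bit SYSRET takes SS from bits 63:48 plus 8 and CS from bits 63:48 plus 16, both forced to RPL 3. The sketch below packs STAR the same way as the `wrmsr` call and then unpacks it. The selector values (0x08 for kernel code, 0x1b for 32-bit user code) are hypothetical, picked to match a GDT laid out as kernel code, kernel data, user code32, user data, user code64.

```go
package main

import "fmt"

// starValue packs the two selector bases as in
// wrmsr(_MSR_STAR, uint64(Kcode)<<32 | uint64(Ucode32)<<48).
func starValue(kcode, ucode32 uint16) uint64 {
	return uint64(kcode)<<32 | uint64(ucode32)<<48
}

// syscallSelectors returns the CS and SS the CPU loads on SYSCALL.
func syscallSelectors(star uint64) (cs, ss uint16) {
	base := uint16(star >> 32)
	return base &^ 3, base + 8
}

// sysretSelectors returns the CS and SS the CPU loads on a 64-bit SYSRET.
func sysretSelectors(star uint64) (cs, ss uint16) {
	base := uint16(star >> 48)
	return (base + 16) | 3, (base + 8) | 3
}

func main() {
	// Hypothetical selectors: GDT index 1 for kernel code (RPL 0) and
	// GDT index 3 for 32-bit user code (RPL 3).
	star := starValue(0x08, 0x1b)
	kcs, kss := syscallSelectors(star)
	ucs, uss := sysretSelectors(star)
	fmt.Printf("STAR=%#018x\n", star)
	fmt.Printf("syscall: CS=%#x SS=%#x\n", kcs, kss)
	fmt.Printf("sysret:  CS=%#x SS=%#x\n", ucs, uss)
}
```

With this layout the user data segment must sit 8 bytes after the 32-bit user code segment and the 64-bit user code segment 16 bytes after it, which is the constraint the comment in start describes.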

Debugging bluepillHandler with dlv

    root@cloud:/mycontainer# dlv attach 926926
    Type 'help' for list of commands.
    (dlv) b bluepillHandler
    Breakpoint 1 set at 0x87b300 for gvisor.dev/gvisor/pkg/sentry/platform/kvm.bluepillHandler() pkg/sentry/platform/kvm/bluepill_unsafe.go:91
    (dlv) continue
    > gvisor.dev/gvisor/pkg/sentry/platform/kvm.bluepillHandler() pkg/sentry/platform/kvm/bluepill_unsafe.go:91 (hits goroutine(219):1 total:1) (PC: 0x87b300)
    Warning: debugging optimized function
    (dlv) bt
    0  0x000000000087b300 in gvisor.dev/gvisor/pkg/sentry/platform/kvm.bluepillHandler
       at pkg/sentry/platform/kvm/bluepill_unsafe.go:91
    1  0x0000000000881bec in ???
       at ?:-1
    2  0x0000ffff90bcf598 in ???
       at ?:-1
    3  0x000000000087f514 in gvisor.dev/gvisor/pkg/sentry/platform/kvm.(*vCPU).SwitchToUser
       at pkg/sentry/platform/kvm/machine_arm64_unsafe.go:249
    (dlv) clear 1
    Breakpoint 1 cleared at 0x87b300 for gvisor.dev/gvisor/pkg/sentry/platform/kvm.bluepillHandler() pkg/sentry/platform/kvm/bluepill_unsafe.go:91
    (dlv) quit
    Would you like to kill the process? [Y/n] n

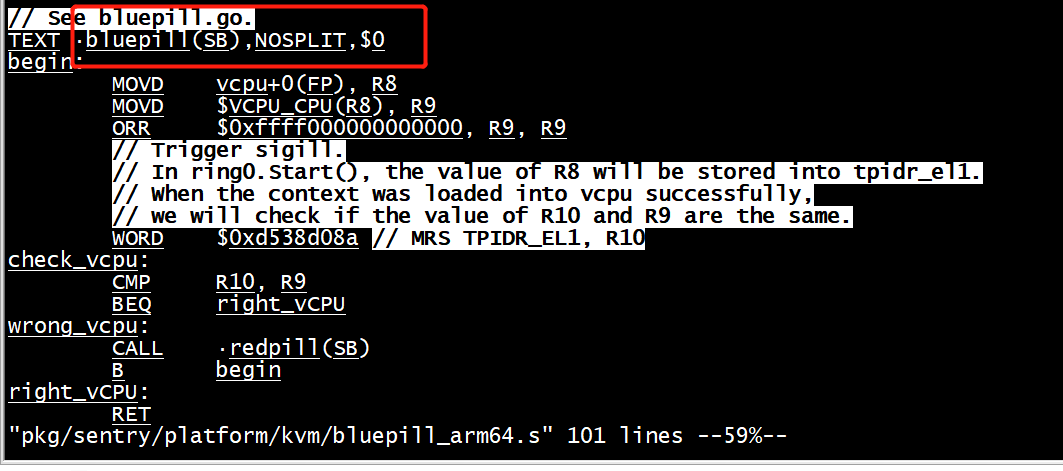

bluepill

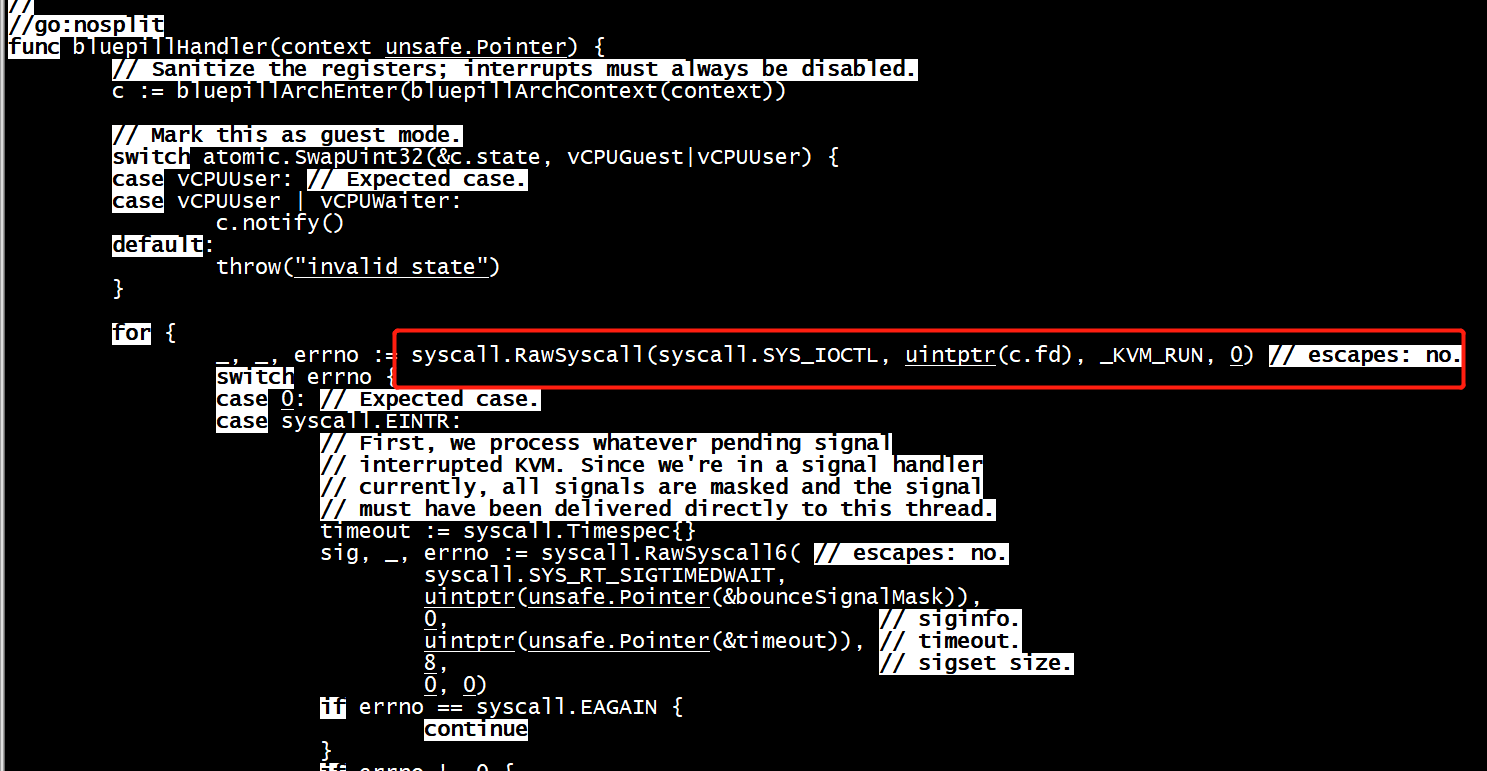

bluepillHandler KVM_RUN

Debugging SwitchToUser with dlv

    root@cloud:/mycontainer# dlv attach 926926
    Type 'help' for list of commands.
    (dlv) b SwitchToUser
    Command failed: Location "SwitchToUser" ambiguous: gvisor.dev/gvisor/pkg/sentry/platform/ring0.(*CPU).SwitchToUser, gvisor.dev/gvisor/pkg/sentry/platform/kvm.(*vCPU).SwitchToUser…
    (dlv) b ring0.SwitchToUser
    Breakpoint 1 set at 0x877cf0 for gvisor.dev/gvisor/pkg/sentry/platform/ring0.(*CPU).SwitchToUser() pkg/sentry/platform/ring0/kernel_arm64.go:63
    (dlv) b kvm.SwitchToUser
    Breakpoint 2 set at 0x87f490 for gvisor.dev/gvisor/pkg/sentry/platform/kvm.(*vCPU).SwitchToUser() pkg/sentry/platform/kvm/machine_arm64_unsafe.go:219
    (dlv) c
    > gvisor.dev/gvisor/pkg/sentry/platform/kvm.(*vCPU).SwitchToUser() pkg/sentry/platform/kvm/machine_arm64_unsafe.go:219 (hits goroutine(372):1 total:1) (PC: 0x87f490)
    Warning: debugging optimized function
    (dlv) bt
    0  0x000000000087f490 in gvisor.dev/gvisor/pkg/sentry/platform/kvm.(*vCPU).SwitchToUser
       at pkg/sentry/platform/kvm/machine_arm64_unsafe.go:219
    1  0x000000000087bb1c in gvisor.dev/gvisor/pkg/sentry/platform/kvm.(*context).Switch
       at pkg/sentry/platform/kvm/context.go:75
    2  0x00000000005186d0 in gvisor.dev/gvisor/pkg/sentry/kernel.(*runApp).execute
       at pkg/sentry/kernel/task_run.go:271
    3  0x0000000000517d9c in gvisor.dev/gvisor/pkg/sentry/kernel.(*Task).run
       at pkg/sentry/kernel/task_run.go:97
    4  0x0000000000077c84 in runtime.goexit
       at src/runtime/asm_arm64.s:1136
    (dlv) c
    > gvisor.dev/gvisor/pkg/sentry/platform/kvm.(*vCPU).SwitchToUser() pkg/sentry/platform/kvm/machine_arm64_unsafe.go:219 (hits goroutine(257):1 total:2) (PC: 0x87f490)
    Warning: debugging optimized function
    (dlv) bt
    0  0x000000000087f490 in gvisor.dev/gvisor/pkg/sentry/platform/kvm.(*vCPU).SwitchToUser
       at pkg/sentry/platform/kvm/machine_arm64_unsafe.go:219
    1  0x000000000087bb1c in gvisor.dev/gvisor/pkg/sentry/platform/kvm.(*context).Switch
       at pkg/sentry/platform/kvm/context.go:75
    2  0x00000000005186d0 in gvisor.dev/gvisor/pkg/sentry/kernel.(*runApp).execute
       at pkg/sentry/kernel/task_run.go:271
    3  0x0000000000517d9c in gvisor.dev/gvisor/pkg/sentry/kernel.(*Task).run
       at pkg/sentry/kernel/task_run.go:97
    4  0x0000000000077c84 in runtime.goexit
       at src/runtime/asm_arm64.s:1136
    (dlv) clearall
    Breakpoint 1 cleared at 0x877cf0 for gvisor.dev/gvisor/pkg/sentry/platform/ring0.(*CPU).SwitchToUser() pkg/sentry/platform/ring0/kernel_arm64.go:63
    Breakpoint 2 cleared at 0x87f490 for gvisor.dev/gvisor/pkg/sentry/platform/kvm.(*vCPU).SwitchToUser() pkg/sentry/platform/kvm/machine_arm64_unsafe.go:219
    (dlv) b ring0.SwitchToUser
    Breakpoint 3 set at 0x877cf0 for gvisor.dev/gvisor/pkg/sentry/platform/ring0.(*CPU).SwitchToUser() pkg/sentry/platform/ring0/kernel_arm64.go:63
    (dlv) c
    > gvisor.dev/gvisor/pkg/sentry/platform/ring0.(*CPU).SwitchToUser() pkg/sentry/platform/ring0/kernel_arm64.go:63 (hits goroutine(372):1 total:1) (PC: 0x877cf0)
    Warning: debugging optimized function
    (dlv) bt
    0  0x0000000000877cf0 in gvisor.dev/gvisor/pkg/sentry/platform/ring0.(*CPU).SwitchToUser
       at pkg/sentry/platform/ring0/kernel_arm64.go:63
    1  0x000000000087f53c in gvisor.dev/gvisor/pkg/sentry/platform/kvm.(*vCPU).SwitchToUser
       at pkg/sentry/platform/kvm/machine_arm64_unsafe.go:250
    2  0x000000000087bb1c in gvisor.dev/gvisor/pkg/sentry/platform/kvm.(*context).Switch
       at pkg/sentry/platform/kvm/context.go:75
    3  0x00000000005186d0 in gvisor.dev/gvisor/pkg/sentry/kernel.(*runApp).execute
       at pkg/sentry/kernel/task_run.go:271
    4  0x0000000000517d9c in gvisor.dev/gvisor/pkg/sentry/kernel.(*Task).run
       at pkg/sentry/kernel/task_run.go:97
    5  0x0000000000077c84 in runtime.goexit
       at src/runtime/asm_arm64.s:1136
    (dlv) c
    > gvisor.dev/gvisor/pkg/sentry/platform/ring0.(*CPU).SwitchToUser() pkg/sentry/platform/ring0/kernel_arm64.go:63 (hits goroutine(257):1 total:2) (PC: 0x877cf0)
    Warning: debugging optimized function
    (dlv) bt
    0  0x0000000000877cf0 in gvisor.dev/gvisor/pkg/sentry/platform/ring0.(*CPU).SwitchToUser
       at pkg/sentry/platform/ring0/kernel_arm64.go:63
    1  0x000000000087f53c in gvisor.dev/gvisor/pkg/sentry/platform/kvm.(*vCPU).SwitchToUser
       at pkg/sentry/platform/kvm/machine_arm64_unsafe.go:250
    2  0x000000000087bb1c in gvisor.dev/gvisor/pkg/sentry/platform/kvm.(*context).Switch
       at pkg/sentry/platform/kvm/context.go:75
    3  0x00000000005186d0 in gvisor.dev/gvisor/pkg/sentry/kernel.(*runApp).execute
       at pkg/sentry/kernel/task_run.go:271
    4  0x0000000000517d9c in gvisor.dev/gvisor/pkg/sentry/kernel.(*Task).run
       at pkg/sentry/kernel/task_run.go:97
    5  0x0000000000077c84 in runtime.goexit
       at src/runtime/asm_arm64.s:1136
    (dlv) bt
    0  0x0000000000877cf0 in gvisor.dev/gvisor/pkg/sentry/platform/ring0.(*CPU).SwitchToUser
       at pkg/sentry/platform/ring0/kernel_arm64.go:63
    1  0x000000000087f53c in gvisor.dev/gvisor/pkg/sentry/platform/kvm.(*vCPU).SwitchToUser
       at pkg/sentry/platform/kvm/machine_arm64_unsafe.go:250
    2  0x000000000087bb1c in gvisor.dev/gvisor/pkg/sentry/platform/kvm.(*context).Switch
       at pkg/sentry/platform/kvm/context.go:75
    3  0x00000000005186d0 in gvisor.dev/gvisor/pkg/sentry/kernel.(*runApp).execute
       at pkg/sentry/kernel/task_run.go:271
    4  0x0000000000517d9c in gvisor.dev/gvisor/pkg/sentry/kernel.(*Task).run
       at pkg/sentry/kernel/task_run.go:97
    5  0x0000000000077c84 in runtime.goexit
       at src/runtime/asm_arm64.s:1136
    (dlv) quit
    Would you like to kill the process? [Y/n] n
    root@cloud:/mycontainer#

bluepill + c.CPU.SwitchToUser