qemu + dpdk vq->callfd

qemu-kvm segfault in kvm packstack aio + ovs-dpdk

https://bugzilla.redhat.com/show_bug.cgi?id=1380703

    (gdb) bt
    #0 0x00007fb6c573c0b8 in kvm_virtio_pci_irqfd_use (proxy=proxy@entry=0x7fb6d17e2000, queue_no=queue_no@entry=0, vector=vector@entry=1) at hw/virtio/virtio-pci.c:498
    #1 0x00007fb6c573d2de in virtio_pci_vq_vector_unmask (msg=..., vector=1, queue_no=0, proxy=0x7fb6d17e2000) at hw/virtio/virtio-pci.c:624
    #2 virtio_pci_vector_unmask (dev=0x7fb6d17e2000, vector=1, msg=...) at hw/virtio/virtio-pci.c:660
    #3 0x00007fb6c570d7ca in msix_set_notifier_for_vector (vector=1, dev=0x7fb6d17e2000) at hw/pci/msix.c:513
    #4 msix_set_vector_notifiers (dev=dev@entry=0x7fb6d17e2000, use_notifier=use_notifier@entry=0x7fb6c573d130 <virtio_pci_vector_unmask>, release_notifier=release_notifier@entry=0x7fb6c573d080 <virtio_pci_vector_mask>, poll_notifier=poll_notifier@entry=0x7fb6c573bf40 <virtio_pci_vector_poll>) at hw/pci/msix.c:540
    #5 0x00007fb6c573d82d in virtio_pci_set_guest_notifiers (d=0x7fb6d17e2000, nvqs=2, assign=<optimized out>) at hw/virtio/virtio-pci.c:821
    #6 0x00007fb6c55ed1c0 in vhost_net_start (dev=dev@entry=0x7fb6d17e9f40, ncs=0x7fb6c8601da0, total_queues=total_queues@entry=1) at /usr/src/debug/qemu-2.3.0/hw/net/vhost_net.c:353
    #7 0x00007fb6c55e91e4 in virtio_net_vhost_status (status=<optimized out>, n=0x7fb6d17e9f40) at /usr/src/debug/qemu-2.3.0/hw/net/virtio-net.c:143
    #8 virtio_net_set_status (vdev=<optimized out>, status=7 '\a') at /usr/src/debug/qemu-2.3.0/hw/net/virtio-net.c:162
    #9 0x00007fb6c55f97dc in virtio_set_status (vdev=vdev@entry=0x7fb6d17e9f40, val=val@entry=7 '\a') at /usr/src/debug/qemu-2.3.0/hw/virtio/virtio.c:609
    #10 0x00007fb6c573ca4e in virtio_ioport_write (val=7, addr=18, opaque=0x7fb6d17e2000) at hw/virtio/virtio-pci.c:283
    #11 virtio_pci_config_write (opaque=0x7fb6d17e2000, addr=18, val=7, size=<optimized out>) at hw/virtio/virtio-pci.c:409
    #12 0x00007fb6c55ca3d7 in memory_region_write_accessor (mr=0x7fb6d17e2880, addr=<optimized out>, value=0x7fb6ac21a338, size=1, shift=<optimized out>, mask=<optimized out>, attrs=...) at /usr/src/debug/qemu-2.3.0/memory.c:457
    #13 0x00007fb6c55ca0e9 in access_with_adjusted_size (addr=addr@entry=18, value=value@entry=0x7fb6ac21a338, size=size@entry=1, access_size_min=<optimized out>, access_size_max=<optimized out>, access=access@entry=0x7fb6c55ca380 <memory_region_write_accessor>, mr=mr@entry=0x7fb6d17e2880, attrs=attrs@entry=...) at /usr/src/debug/qemu-2.3.0/memory.c:516
    #14 0x00007fb6c55cbb51 in memory_region_dispatch_write (mr=mr@entry=0x7fb6d17e2880, addr=18, data=7, size=1, attrs=...) at /usr/src/debug/qemu-2.3.0/memory.c:1161
    #15 0x00007fb6c55976e0 in address_space_rw (as=0x7fb6c5c51cc0 <address_space_io>, addr=49266, attrs=..., buf=buf@entry=0x7fb6ac21a40c "\a\177", len=len@entry=1, is_write=is_write@entry=true) at /usr/src/debug/qemu-2.3.0/exec.c:2353
    #16 0x00007fb6c559794b in address_space_write (as=<optimized out>, addr=<optimized out>, attrs=..., attrs@entry=..., buf=buf@entry=0x7fb6ac21a40c "\a\177", len=len@entry=1) at /usr/src/debug/qemu-2.3.0/exec.c:2415
    #17 0x00007fb6c55c3a4c in cpu_outb (addr=<optimized out>, val=7 '\a') at /usr/src/debug/qemu-2.3.0/ioport.c:67
    #18 0x00007fb6ad7020b0 in ?? ()
    #19 0x00007fb6ac21a500 in ?? ()
    #20 0x0000000000000000 in ?? ()

https://bugzilla.redhat.com/show_bug.cgi?id=1410716

Qemu segfault when using TCG acceleration with vhost-user netdev:

    #0 0x00005624dd5b2cee in kvm_virtio_pci_irqfd_use (proxy=proxy@entry=0x5624e62f8000, queue_no=queue_no@entry=0, vector=vector@entry=1) at /usr/src/debug/qemu-2.7.0/hw/virtio/virtio-pci.c:735
    #1 0x00005624dd5b4556 in virtio_pci_vq_vector_unmask (msg=..., vector=1, queue_no=0, proxy=0x5624e62f8000) at /usr/src/debug/qemu-2.7.0/hw/virtio/virtio-pci.c:860
    #2 0x00005624dd5b4556 in virtio_pci_vector_unmask (dev=0x5624e62f8000, vector=1, msg=...) at /usr/src/debug/qemu-2.7.0/hw/virtio/virtio-pci.c:896
    #3 0x00005624dd55eb06 in msix_set_notifier_for_vector (vector=1, dev=0x5624e62f8000) at /usr/src/debug/qemu-2.7.0/hw/pci/msix.c:525
    #4 0x00005624dd55eb06 in msix_set_vector_notifiers (dev=dev@entry=0x5624e62f8000, use_notifier=use_notifier@entry=0x5624dd5b4320 <virtio_pci_vector_unmask>, release_notifier=release_notifier@entry=0x5624dd5b4280 <virtio_pci_vector_mask>, poll_notifier=poll_notifier@entry=0x5624dd5b2b80 <virtio_pci_vector_poll>) at /usr/src/debug/qemu-2.7.0/hw/pci/msix.c:552
    #5 0x00005624dd5b4829 in virtio_pci_set_guest_notifiers (d=0x5624e62f8000, nvqs=2, assign=true) at /usr/src/debug/qemu-2.7.0/hw/virtio/virtio-pci.c:1057
    #6 0x00005624dd3e037b in vhost_net_start (dev=dev@entry=0x5624e6300340, ncs=0x5624df8d7f00, total_queues=total_queues@entry=1) at /usr/src/debug/qemu-2.7.0/hw/net/vhost_net.c:317
    #7 0x00005624dd3dce73 in virtio_net_vhost_status (status=15 '\017', n=0x5624e6300340) at /usr/src/debug/qemu-2.7.0/hw/net/virtio-net.c:151
    #8 0x00005624dd3dce73 in virtio_net_set_status (vdev=<optimized out>, status=<optimized out>) at /usr/src/debug/qemu-2.7.0/hw/net/virtio-net.c:224
    #9 0x00005624dd3f08b9 in virtio_set_status (vdev=vdev@entry=0x5624e6300340, val=<optimized out>) at /usr/src/debug/qemu-2.7.0/hw/virtio/virtio.c:765
    #10 0x00005624dd5b28c4 in virtio_pci_common_write (opaque=0x5624e62f8000, addr=<optimized out>, val=<optimized out>, size=<optimized out>) at /usr/src/debug/qemu-2.7.0/hw/virtio/virtio-pci.c:1298
    #11 0x00005624dd3af086 in memory_region_write_accessor (mr=<optimized out>, addr=<optimized out>, value=<optimized out>, size=<optimized out>, shift=<optimized out>, mask=<optimized out>, attrs=...) at /usr/src/debug/qemu-2.7.0/memory.c:525
    #12 0x00005624dd3ad97d in access_with_adjusted_size (addr=addr@entry=20, value=value@entry=0x7fb9583fa0d8, size=size@entry=1, access_size_min=<optimized out>, access_size_max=<optimized out>, access=0x5624dd3af040 <memory_region_write_accessor>, mr=0x5624e62f8988, attrs=...) at /usr/src/debug/qemu-2.7.0/memory.c:591
    #13 0x00005624dd3b0dac in memory_region_dispatch_write (mr=<optimized out>, addr=20, data=<optimized out>, size=<optimized out>, attrs=...) at /usr/src/debug/qemu-2.7.0/memory.c:1275
    #14 0x00007fb962c730a4 in code_gen_buffer ()
    #15 0x00005624dd35f805 in cpu_tb_exec (cpu=cpu@entry=0x5624e51d6000, itb=<optimized out>, itb=<optimized out>) at /usr/src/debug/qemu-2.7.0/cpu-exec.c:166
    #16 0x00005624dd374f22 in cpu_loop_exec_tb (sc=0x7fb9583fa640, tb_exit=<synthetic pointer>, last_tb=0x7fb9583fa630, tb=<optimized out>, cpu=0x5624e51d6000) at /usr/src/debug/qemu-2.7.0/cpu-exec.c:530
    #17 0x00005624dd374f22 in cpu_exec (cpu=<optimized out>) at /usr/src/debug/qemu-2.7.0/cpu-exec.c:625
    #18 0x00005624dd39aa8f in qemu_tcg_cpu_thread_fn (arg=<optimized out>) at /usr/src/debug/qemu-2.7.0/cpus.c:1541
    #19 0x00007fb9b8d9b6ca in start_thread (arg=0x7fb9583fd700) at pthread_create.c:333
    #20 0x00007fb9b8ad5f6f in clone () at ../sysdeps/unix/sysv/linux/x86_64/clone.S:105

Breakpoint 2, vhost_user_set_vring_call (pdev=0xffffbc3ecfa8, msg=0xffffbc3ecd00, main_fd=45) at /data1/dpdk-19.11/lib/librte_vhost/vhost_user.c:1563

1563 struct virtio_net *dev = *pdev;

(gdb) bt

#0 vhost_user_set_vring_call (pdev=0xffffbc3ecfa8, msg=0xffffbc3ecd00, main_fd=45) at /data1/dpdk-19.11/lib/librte_vhost/vhost_user.c:1563

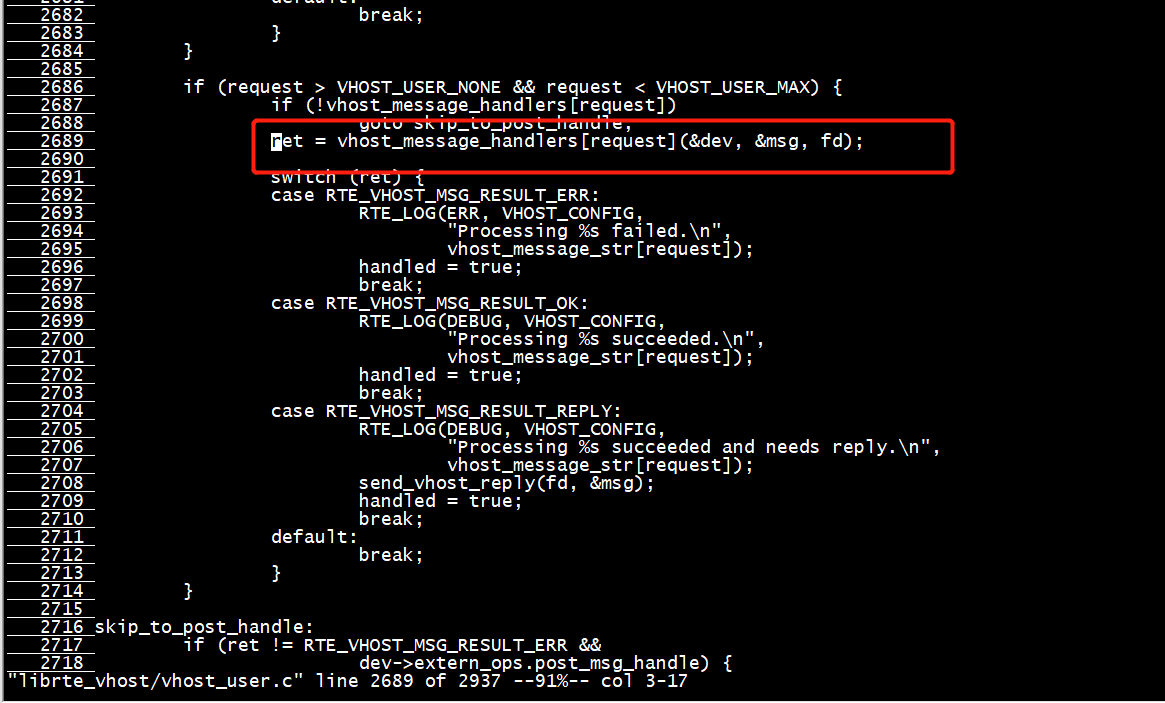

#1 0x0000000000511e24 in vhost_user_msg_handler (vid=0, fd=45) at /data1/dpdk-19.11/lib/librte_vhost/vhost_user.c:2689 ----------消息处理

#2 0x0000000000504eb0 in vhost_user_read_cb (connfd=45, dat=0x15a3de0, remove=0xffffbc3ed064) at /data1/dpdk-19.11/lib/librte_vhost/socket.c:306

#3 0x0000000000502198 in fdset_event_dispatch (arg=0x11f37f8 <vhost_user+8192>) at /data1/dpdk-19.11/lib/librte_vhost/fd_man.c:286

#4 0x00000000005a05a4 in rte_thread_init (arg=0x15a42c0) at /data1/dpdk-19.11/lib/librte_eal/common/eal_common_thread.c:165

#5 0x0000ffffbe617d38 in start_thread (arg=0xffffbc3ed910) at pthread_create.c:309

#6 0x0000ffffbe55f5f0 in thread_start () at ../sysdeps/unix/sysv/linux/aarch64/clone.S:91

(gdb)

    Breakpoint 1, vhost_user_set_vring_kick (pdev=0xffffbc3ecfa8, msg=0xffffbc3ecd00, main_fd=45) at /data1/dpdk-19.11/lib/librte_vhost/vhost_user.c:1795
    1795 struct virtio_net *dev = *pdev;
    (gdb) bt
    #0 vhost_user_set_vring_kick (pdev=0xffffbc3ecfa8, msg=0xffffbc3ecd00, main_fd=45) at /data1/dpdk-19.11/lib/librte_vhost/vhost_user.c:1795
    #1 0x0000000000511e24 in vhost_user_msg_handler (vid=0, fd=45) at /data1/dpdk-19.11/lib/librte_vhost/vhost_user.c:2689   <-- message handling
    #2 0x0000000000504eb0 in vhost_user_read_cb (connfd=45, dat=0x15a3de0, remove=0xffffbc3ed064) at /data1/dpdk-19.11/lib/librte_vhost/socket.c:306
    #3 0x0000000000502198 in fdset_event_dispatch (arg=0x11f37f8 <vhost_user+8192>) at /data1/dpdk-19.11/lib/librte_vhost/fd_man.c:286
    #4 0x00000000005a05a4 in rte_thread_init (arg=0x15a42c0) at /data1/dpdk-19.11/lib/librte_eal/common/eal_common_thread.c:165
    #5 0x0000ffffbe617d38 in start_thread (arg=0xffffbc3ed910) at pthread_create.c:309
    #6 0x0000ffffbe55f5f0 in thread_start () at ../sysdeps/unix/sysv/linux/aarch64/clone.S:91
    (gdb)

rte_vhost_driver_register

    int rte_vhost_driver_register(const char *path, uint64_t flags)
    {
        int ret = -1;
        struct vhost_user_socket *vsocket;

        if (!path)
            return -1;

        pthread_mutex_lock(&vhost_user.mutex);

        if (vhost_user.vsocket_cnt == MAX_VHOST_SOCKET) {
            RTE_LOG(ERR, VHOST_CONFIG,
                "error: the number of vhost sockets reaches maximum\n");
            goto out;
        }

        vsocket = malloc(sizeof(struct vhost_user_socket));
        if (!vsocket)
            goto out;
        memset(vsocket, 0, sizeof(struct vhost_user_socket));
        vsocket->path = strdup(path);
        if (vsocket->path == NULL) {
            RTE_LOG(ERR, VHOST_CONFIG,
                "error: failed to copy socket path string\n");
            vhost_user_socket_mem_free(vsocket);
            goto out;
        }
        TAILQ_INIT(&vsocket->conn_list);
        ret = pthread_mutex_init(&vsocket->conn_mutex, NULL);
        if (ret) {
            RTE_LOG(ERR, VHOST_CONFIG,
                "error: failed to init connection mutex\n");
            goto out_free;
        }
        vsocket->dequeue_zero_copy = flags & RTE_VHOST_USER_DEQUEUE_ZERO_COPY;

        /*
         * Set the supported features correctly for the builtin vhost-user
         * net driver.
         *
         * Applications know nothing about features the builtin virtio net
         * driver (virtio_net.c) supports, thus it's not possible for them
         * to invoke rte_vhost_driver_set_features(). To workaround it, here
         * we set it unconditionally. If the application want to implement
         * another vhost-user driver (say SCSI), it should call the
         * rte_vhost_driver_set_features(), which will overwrite following
         * two values.
         */
        vsocket->use_builtin_virtio_net = true;
        vsocket->supported_features = VIRTIO_NET_SUPPORTED_FEATURES;
        vsocket->features           = VIRTIO_NET_SUPPORTED_FEATURES;
        vsocket->protocol_features  = VHOST_USER_PROTOCOL_FEATURES;

        /*
         * Dequeue zero copy can't assure descriptors returned in order.
         * Also, it requires that the guest memory is populated, which is
         * not compatible with postcopy.
         */
        if (vsocket->dequeue_zero_copy) {
            vsocket->supported_features &= ~(1ULL << VIRTIO_F_IN_ORDER);
            vsocket->features &= ~(1ULL << VIRTIO_F_IN_ORDER);

            RTE_LOG(INFO, VHOST_CONFIG,
                "Dequeue zero copy requested, disabling postcopy support\n");
            vsocket->protocol_features &=
                ~(1ULL << VHOST_USER_PROTOCOL_F_PAGEFAULT);
        }

        if (!(flags & RTE_VHOST_USER_IOMMU_SUPPORT)) {
            vsocket->supported_features &= ~(1ULL << VIRTIO_F_IOMMU_PLATFORM);
            vsocket->features &= ~(1ULL << VIRTIO_F_IOMMU_PLATFORM);
        }

        if (!(flags & RTE_VHOST_USER_POSTCOPY_SUPPORT)) {
            vsocket->protocol_features &=
                ~(1ULL << VHOST_USER_PROTOCOL_F_PAGEFAULT);
        } else {
    #ifndef RTE_LIBRTE_VHOST_POSTCOPY
            RTE_LOG(ERR, VHOST_CONFIG,
                "Postcopy requested but not compiled\n");
            ret = -1;
            goto out_mutex;
    #endif
        }

        if ((flags & RTE_VHOST_USER_CLIENT) != 0) {
            vsocket->reconnect = !(flags & RTE_VHOST_USER_NO_RECONNECT);
            if (vsocket->reconnect && reconn_tid == 0) {
                if (vhost_user_reconnect_init() != 0)
                    goto out_mutex;
            }
        } else {
            vsocket->is_server = true;
        }
        ret = create_unix_socket(vsocket);
        if (ret < 0) {
            goto out_mutex;
        }

        vhost_user.vsockets[vhost_user.vsocket_cnt++] = vsocket;

        pthread_mutex_unlock(&vhost_user.mutex);
        return ret;

    out_mutex:
        if (pthread_mutex_destroy(&vsocket->conn_mutex)) {
            RTE_LOG(ERR, VHOST_CONFIG,
                "error: failed to destroy connection mutex\n");
        }
    out_free:
        vhost_user_socket_mem_free(vsocket);
    out:
        pthread_mutex_unlock(&vhost_user.mutex);

        return ret;
    }
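The feature handling in rte_vhost_driver_register() is plain bit masking on a 64-bit feature word. Here is a minimal standalone sketch of the same idea. It assumes the virtio 1.1 bit numbers VIRTIO_F_IOMMU_PLATFORM = 33 and VIRTIO_F_IN_ORDER = 35. The SKETCH_* flags and the trim_features()/has_feature() helpers are hypothetical stand-ins, not DPDK API.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Feature bit numbers as defined by the virtio 1.1 specification. */
#define VIRTIO_F_IOMMU_PLATFORM 33
#define VIRTIO_F_IN_ORDER       35

/* Hypothetical registration flags standing in for RTE_VHOST_USER_*. */
#define SKETCH_DEQUEUE_ZERO_COPY (1u << 0)
#define SKETCH_IOMMU_SUPPORT     (1u << 1)

/* Drop the feature bits that the requested mode cannot honour. */
static uint64_t trim_features(uint64_t features, unsigned flags)
{
    if (flags & SKETCH_DEQUEUE_ZERO_COPY)
        features &= ~(1ULL << VIRTIO_F_IN_ORDER);       /* no in-order guarantee */
    if (!(flags & SKETCH_IOMMU_SUPPORT))
        features &= ~(1ULL << VIRTIO_F_IOMMU_PLATFORM); /* IOMMU not requested */
    return features;
}

/* Test a single feature bit. */
static bool has_feature(uint64_t features, unsigned bit)
{
    return (features >> bit) & 1ULL;
}
```

Clearing bits with `&= ~(1ULL << bit)` only ever removes features. Whatever the guest later negotiates is therefore a subset of what the socket advertised.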

rte_vhost_driver_start(Socket_files)

    int rte_vhost_driver_start(const char *path)
    {
        struct vhost_user_socket *vsocket;
        static pthread_t fdset_tid;

        pthread_mutex_lock(&vhost_user.mutex);
        vsocket = find_vhost_user_socket(path);
        pthread_mutex_unlock(&vhost_user.mutex);

        if (!vsocket)
            return -1;

        if (fdset_tid == 0) {
            /**
             * create a pipe which will be waited by poll and notified to
             * rebuild the wait list of poll.
             */
            if (fdset_pipe_init(&vhost_user.fdset) < 0) {
                RTE_LOG(ERR, VHOST_CONFIG,
                    "failed to create pipe for vhost fdset\n");
                return -1;
            }

            int ret = rte_ctrl_thread_create(&fdset_tid,
                "vhost-events", NULL, fdset_event_dispatch,
                &vhost_user.fdset);
            if (ret != 0) {
                RTE_LOG(ERR, VHOST_CONFIG,
                    "failed to create fdset handling thread");

                fdset_pipe_uninit(&vhost_user.fdset);
                return -1;
            }
        }

        if (vsocket->is_server)
            return vhost_user_start_server(vsocket);
        else
            return vhost_user_start_client(vsocket);
    }

vhost_user_msg_handler

    static void
    vhost_user_read_cb(int connfd, void *dat, int *remove)
    {
        struct vhost_user_connection *conn = dat;
        struct vhost_user_socket *vsocket = conn->vsocket;
        int ret;

        ret = vhost_user_msg_handler(conn->vid, connfd);
        if (ret < 0) {
            close(connfd);
            *remove = 1;
            vhost_destroy_device(conn->vid);

            if (vsocket->notify_ops->destroy_connection)
                vsocket->notify_ops->destroy_connection(conn->vid);

            pthread_mutex_lock(&vsocket->conn_mutex);
            TAILQ_REMOVE(&vsocket->conn_list, conn, next);
            pthread_mutex_unlock(&vsocket->conn_mutex);

            free(conn);

            if (vsocket->reconnect) {
                create_unix_socket(vsocket);
                vhost_user_start_client(vsocket);
            }
        }
    }

    int
    vhost_user_msg_handler(int vid, int fd)
    {
        struct virtio_net *dev;
        struct VhostUserMsg msg;
        struct rte_vdpa_device *vdpa_dev;
        int did = -1;
        int ret;
        int unlock_required = 0;
        uint32_t skip_master = 0;
        int request;

        dev = get_device(vid);
        if (dev == NULL)
            return -1;

        if (!dev->notify_ops) {
            dev->notify_ops = vhost_driver_callback_get(dev->ifname);
            if (!dev->notify_ops) {
                RTE_LOG(ERR, VHOST_CONFIG,
                    "failed to get callback ops for driver %s\n",
                    dev->ifname);
                return -1;
            }
        }

        ret = read_vhost_message(fd, &msg);
        if (ret <= 0 || msg.request.master >= VHOST_USER_MAX) {
            if (ret < 0)
                RTE_LOG(ERR, VHOST_CONFIG,
                    "vhost read message failed\n");
            else if (ret == 0)
                RTE_LOG(INFO, VHOST_CONFIG,
                    "vhost peer closed\n");
            else
                RTE_LOG(ERR, VHOST_CONFIG,
                    "vhost read incorrect message\n");

            return -1;
        }

        ret = 0;
        if (msg.request.master != VHOST_USER_IOTLB_MSG)
            RTE_LOG(INFO, VHOST_CONFIG, "read message %s\n",
                vhost_message_str[msg.request.master]);
        else
            RTE_LOG(DEBUG, VHOST_CONFIG, "read message %s\n",
                vhost_message_str[msg.request.master]);

        ret = vhost_user_check_and_alloc_queue_pair(dev, &msg);
        if (ret < 0) {
            RTE_LOG(ERR, VHOST_CONFIG,
                "failed to alloc queue\n");
            return -1;
        }

        /*
         * Note: we don't lock all queues on VHOST_USER_GET_VRING_BASE
         * and VHOST_USER_RESET_OWNER, since it is sent when virtio stops
         * and device is destroyed. destroy_device waits for queues to be
         * inactive, so it is safe. Otherwise taking the access_lock
         * would cause a dead lock.
         */
        switch (msg.request.master) {
        case VHOST_USER_SET_FEATURES:
        case VHOST_USER_SET_PROTOCOL_FEATURES:
        case VHOST_USER_SET_OWNER:
        case VHOST_USER_SET_MEM_TABLE:
        case VHOST_USER_SET_LOG_BASE:
        case VHOST_USER_SET_LOG_FD:
        case VHOST_USER_SET_VRING_NUM:
        case VHOST_USER_SET_VRING_ADDR:
        case VHOST_USER_SET_VRING_BASE:
        case VHOST_USER_SET_VRING_KICK:
        case VHOST_USER_SET_VRING_CALL:
        case VHOST_USER_SET_VRING_ERR:
        case VHOST_USER_SET_VRING_ENABLE:
        case VHOST_USER_SEND_RARP:
        case VHOST_USER_NET_SET_MTU:
        case VHOST_USER_SET_SLAVE_REQ_FD:
            vhost_user_lock_all_queue_pairs(dev);
            unlock_required = 1;
            break;
        default:
            break;
        }

        if (dev->extern_ops.pre_msg_handle) {
            ret = (*dev->extern_ops.pre_msg_handle)(dev->vid,
                    (void *)&msg, &skip_master);
            if (ret == VH_RESULT_ERR)
                goto skip_to_reply;
            else if (ret == VH_RESULT_REPLY)
                send_vhost_reply(fd, &msg);

            if (skip_master)
                goto skip_to_post_handle;
        }

        request = msg.request.master;
        if (request > VHOST_USER_NONE && request < VHOST_USER_MAX) {
            if (!vhost_message_handlers[request])
                goto skip_to_post_handle;
            ret = vhost_message_handlers[request](&dev, &msg, fd);

            switch (ret) {
            case VH_RESULT_ERR:
                RTE_LOG(ERR, VHOST_CONFIG,
                    "Processing %s failed.\n",
                    vhost_message_str[request]);
                break;
            case VH_RESULT_OK:
                RTE_LOG(DEBUG, VHOST_CONFIG,
                    "Processing %s succeeded.\n",
                    vhost_message_str[request]);
                break;
            case VH_RESULT_REPLY:
                RTE_LOG(DEBUG, VHOST_CONFIG,
                    "Processing %s succeeded and needs reply.\n",
                    vhost_message_str[request]);
                send_vhost_reply(fd, &msg);
                break;
            }
        } else {
            RTE_LOG(ERR, VHOST_CONFIG,
                "Requested invalid message type %d.\n", request);
            ret = VH_RESULT_ERR;
        }

    skip_to_post_handle:
        if (ret != VH_RESULT_ERR && dev->extern_ops.post_msg_handle) {
            ret = (*dev->extern_ops.post_msg_handle)(
                    dev->vid, (void *)&msg);
            if (ret == VH_RESULT_ERR)
                goto skip_to_reply;
            else if (ret == VH_RESULT_REPLY)
                send_vhost_reply(fd, &msg);
        }

    skip_to_reply:
        if (unlock_required)
            vhost_user_unlock_all_queue_pairs(dev);

        /*
         * If the request required a reply that was already sent,
         * this optional reply-ack won't be sent as the
         * VHOST_USER_NEED_REPLY was cleared in send_vhost_reply().
         */
        if (msg.flags & VHOST_USER_NEED_REPLY) {
            msg.payload.u64 = ret == VH_RESULT_ERR;
            msg.size = sizeof(msg.payload.u64);
            msg.fd_num = 0;
            send_vhost_reply(fd, &msg);
        } else if (ret == VH_RESULT_ERR) {
            RTE_LOG(ERR, VHOST_CONFIG,
                "vhost message handling failed.\n");
            return -1;
        }

        if (!(dev->flags & VIRTIO_DEV_RUNNING) && virtio_is_ready(dev)) {
            dev->flags |= VIRTIO_DEV_READY;

            if (!(dev->flags & VIRTIO_DEV_RUNNING)) {
                if (dev->dequeue_zero_copy) {
                    RTE_LOG(INFO, VHOST_CONFIG,
                        "dequeue zero copy is enabled\n");
                }

                if (dev->notify_ops->new_device(dev->vid) == 0)
                    dev->flags |= VIRTIO_DEV_RUNNING;
            }
        }

        did = dev->vdpa_dev_id;
        vdpa_dev = rte_vdpa_get_device(did);
        if (vdpa_dev && virtio_is_ready(dev) &&
                !(dev->flags & VIRTIO_DEV_VDPA_CONFIGURED) &&
                msg.request.master == VHOST_USER_SET_VRING_ENABLE) {
            if (vdpa_dev->ops->dev_conf)
                vdpa_dev->ops->dev_conf(dev->vid);
            dev->flags |= VIRTIO_DEV_VDPA_CONFIGURED;
            if (vhost_user_host_notifier_ctrl(dev->vid, true) != 0) {
                RTE_LOG(INFO, VHOST_CONFIG,
                    "(%d) software relay is used for vDPA, performance may be low.\n",
                    dev->vid);
            }
        }

        return 0;
    }
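At its core, vhost_user_msg_handler() looks up a handler in the vhost_message_handlers[] table, indexed by the request code, and turns the result into OK / ERR / REPLY. The following is a stripped-down sketch of that dispatch pattern. All sketch_* and REQ_* names are made up for illustration. Only the request codes 12 (SET_VRING_KICK) and 13 (SET_VRING_CALL) are taken from the vhost-user protocol.

```c
#include <assert.h>
#include <stddef.h>

/* Request codes 12 and 13 follow the vhost-user spec; the rest is made up. */
enum sketch_request {
    REQ_NONE = 0,
    REQ_SET_VRING_KICK = 12,
    REQ_SET_VRING_CALL = 13,
    REQ_SKETCH_MAX = 14,            /* size of this sketch's table only */
};

enum sketch_result { RESULT_ERR = -1, RESULT_OK = 0, RESULT_REPLY = 1 };

/* Hypothetical per-device state: just the two eventfds. */
struct sketch_dev { int kickfd; int callfd; };

typedef enum sketch_result (*sketch_handler)(struct sketch_dev *dev, int fd);

static enum sketch_result set_kick(struct sketch_dev *dev, int fd)
{
    dev->kickfd = fd;
    return RESULT_OK;
}

static enum sketch_result set_call(struct sketch_dev *dev, int fd)
{
    dev->callfd = fd;
    return RESULT_OK;
}

/* Table indexed by request code, in the style of vhost_message_handlers[]. */
static const sketch_handler handlers[REQ_SKETCH_MAX] = {
    [REQ_SET_VRING_KICK] = set_kick,
    [REQ_SET_VRING_CALL] = set_call,
};

static enum sketch_result dispatch(struct sketch_dev *dev, int request, int fd)
{
    assert(dev != NULL);
    if (request <= REQ_NONE || request >= REQ_SKETCH_MAX)
        return RESULT_ERR;           /* "Requested invalid message type" */
    if (handlers[request] == NULL)
        return RESULT_OK;            /* no handler registered: skip it */
    return handlers[request](dev, fd);
}
```

A table keeps the hot path to one bounds check and one indirect call. In the real handler, the lock/unlock and reply logic wraps around that call.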

In fact, QEMU also has a message-handling function similar to DPDK's message handler.

qemu-3.0.0/contrib/libvhost-user/libvhost-user.c

    static bool
    vu_process_message(VuDev *dev, VhostUserMsg *vmsg)
    {
        (...)
        switch (vmsg->request) {
        (...)
        case VHOST_USER_SET_VRING_ADDR:
            return vu_set_vring_addr_exec(dev, vmsg);
        (...)

Naturally, QEMU must also have a function that sends the vring address information to DPDK over the UNIX socket:

qemu-3.0.0/hw/virtio/vhost-user.c

    static int vhost_user_set_vring_addr(struct vhost_dev *dev,
                                         struct vhost_vring_addr *addr)
    {
        VhostUserMsg msg = {
            .hdr.request = VHOST_USER_SET_VRING_ADDR,
            .hdr.flags = VHOST_USER_VERSION,
            .payload.addr = *addr,
            .hdr.size = sizeof(msg.payload.addr),
        };

        if (vhost_user_write(dev, &msg, NULL, 0) < 0) {
            return -1;
        }

        return 0;
    }
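On the wire, a vhost-user message is a 12-byte header followed by the payload. The header holds three 32-bit fields: request, flags and size. For SET_VRING_ADDR the payload has the layout of struct vhost_vring_addr: index, flags and four 64-bit addresses, 40 bytes in total.

The sketch below serializes such a message roughly the way vhost_user_write() has to. It makes these assumptions:
- The sketch_* types are hypothetical.
- The host is little-endian, as the protocol expects.
- Request code 9 and version flag 0x1 come from the vhost-user spec.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define VHOST_USER_SET_VRING_ADDR 9
#define VHOST_USER_VERSION        0x1

/* Message header: three 32-bit fields, no padding. */
struct sketch_hdr {
    uint32_t request;
    uint32_t flags;
    uint32_t size;          /* payload size in bytes */
};

/* Payload of SET_VRING_ADDR, same layout as struct vhost_vring_addr. */
struct sketch_vring_addr {
    uint32_t index;
    uint32_t flags;
    uint64_t desc_user_addr;
    uint64_t used_user_addr;
    uint64_t avail_user_addr;
    uint64_t log_guest_addr;
};

/* Copy header + payload into buf (host byte order); return bytes used. */
static size_t pack_set_vring_addr(uint8_t *buf, const struct sketch_vring_addr *addr)
{
    struct sketch_hdr hdr = {
        .request = VHOST_USER_SET_VRING_ADDR,
        .flags = VHOST_USER_VERSION,
        .size = sizeof(*addr),
    };
    memcpy(buf, &hdr, sizeof(hdr));
    memcpy(buf + sizeof(hdr), addr, sizeof(*addr));
    return sizeof(hdr) + sizeof(*addr);
}
```

The size field lets the receiver (read_vhost_message() in DPDK) know how many payload bytes follow the fixed header.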

drivers/crypto/virtio/virtio_ring.h:28:#define VRING_AVAIL_F_NO_INTERRUPT 1

The most important data structure inside vring_virtqueue is vring:

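    struct vring {
        unsigned int num;
        struct vring_desc *desc;
        struct vring_avail *avail;
        struct vring_used *used;
    };

As you can see, vring contains three very important data structures: vring_desc, vring_avail and vring_used. (Note: the front end and the back end access these three structures through shared memory.) First, let's look at vring_avail: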

    struct vring_avail {
        __virtio16 flags;
        __virtio16 idx;
        __virtio16 ring[];
    };

The flags field of vring_avail tells the backend whether it should send interrupts. The backend code looks like this, taking DPDK's vhost_user as an example:

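    if (!(vq->avail->flags & VRING_AVAIL_F_NO_INTERRUPT))
        eventfd_write((int)vq->callfd, 1);

In other words, as long as the VRING_AVAIL_F_NO_INTERRUPT bit is clear in avail->flags, the backend sends an interrupt into the guest by writing to vq->callfd.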
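callfd is an ordinary Linux eventfd. Each eventfd_write() adds to a 64-bit counter, and a read returns the accumulated count and resets it. The reader is KVM's irqfd or QEMU's guest-notifier handler.

The following is a small sketch of the backend-side decision. It assumes a hypothetical two-field sketch_avail struct in place of the real shared-memory vring_avail, and a maybe_call_guest() helper that is not DPDK code.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>
#include <sys/eventfd.h>
#include <unistd.h>

#define VRING_AVAIL_F_NO_INTERRUPT 1

/* Hypothetical stand-in for the start of the shared vring_avail. */
struct sketch_avail { uint16_t flags; uint16_t idx; };

/* Backend side: signal callfd unless the guest suppressed interrupts.
 * Returns true if the eventfd counter was actually incremented. */
static bool maybe_call_guest(const struct sketch_avail *avail, int callfd)
{
    if ((avail->flags & VRING_AVAIL_F_NO_INTERRUPT) || callfd < 0)
        return false;
    return eventfd_write(callfd, (eventfd_t)1) == 0;
}
```

Two writes before the reader wakes up collapse into a single read of value 2. This is why several completed buffers can be reported with one interrupt.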

How DPDK sets vq->callfd

    static int
    vhost_user_set_vring_call(struct virtio_net **pdev, struct VhostUserMsg *msg,
                int main_fd __rte_unused)
    {
        struct virtio_net *dev = *pdev;
        struct vhost_vring_file file;
        struct vhost_virtqueue *vq;
        int expected_fds;

        expected_fds = (msg->payload.u64 & VHOST_USER_VRING_NOFD_MASK) ? 0 : 1;
        if (validate_msg_fds(msg, expected_fds) != 0)
            return RTE_VHOST_MSG_RESULT_ERR;

        file.index = msg->payload.u64 & VHOST_USER_VRING_IDX_MASK;
        if (msg->payload.u64 & VHOST_USER_VRING_NOFD_MASK)
            file.fd = VIRTIO_INVALID_EVENTFD;
        else
            file.fd = msg->fds[0];
        RTE_LOG(INFO, VHOST_CONFIG,
            "vring call idx:%d file:%d\n", file.index, file.fd);

        vq = dev->virtqueue[file.index];
        if (vq->callfd >= 0)
            close(vq->callfd);

        vq->callfd = file.fd;

        return RTE_VHOST_MSG_RESULT_OK;
    }
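The 64-bit payload of VHOST_USER_SET_VRING_CALL packs two things:
- the queue index in bits 0-7 (VHOST_USER_VRING_IDX_MASK = 0xff);
- a "no fd attached" flag in bit 8 (VHOST_USER_VRING_NOFD_MASK).

This is also how a `file.fd = -1` from QEMU (as in vhost_virtqueue_start) is expected to arrive: as the NOFD bit rather than as an fd. The sketch below mirrors the decode-and-swap logic. It uses a hypothetical sketch_vq type and assumes -1 for VIRTIO_INVALID_EVENTFD.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>
#include <unistd.h>

#define VHOST_USER_VRING_IDX_MASK  0xffULL
#define VHOST_USER_VRING_NOFD_MASK (0x1ULL << 8)
#define SKETCH_INVALID_FD          (-1)   /* assumed value of VIRTIO_INVALID_EVENTFD */

struct sketch_vq { int callfd; };

/* Decode the payload, close the old call fd, install the new one.
 * Returns the queue index that was updated. */
static unsigned apply_set_vring_call(struct sketch_vq *vqs, unsigned nvq,
                                     uint64_t payload, int received_fd)
{
    unsigned index = (unsigned)(payload & VHOST_USER_VRING_IDX_MASK);
    bool nofd = (payload & VHOST_USER_VRING_NOFD_MASK) != 0;
    int fd = nofd ? SKETCH_INVALID_FD : received_fd;

    /* DPDK allocates the queue earlier (vhost_user_check_and_alloc_queue_pair). */
    assert(index < nvq);
    if (vqs[index].callfd >= 0)
        close(vqs[index].callfd);   /* drop the previous call eventfd */
    vqs[index].callfd = fd;
    return index;
}
```

Closing the old fd before overwriting it matters, because QEMU may resend SET_VRING_CALL (for example when it switches between irqfd and its own masked notifier). Otherwise the backend would leak one fd per resend.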

    VHOST_CONFIG: reallocate vq from 0 to 3 node
    VHOST_CONFIG: reallocate dev from 0 to 3 node
    VHOST_CONFIG: read message VHOST_USER_SET_VRING_KICK
    VHOST_CONFIG: vring kick idx:0 file:48
    VHOST_CONFIG: read message VHOST_USER_SET_VRING_CALL
    VHOST_CONFIG: vring call idx:0 file:50
    VHOST_CONFIG: read message VHOST_USER_SET_VRING_NUM
    VHOST_CONFIG: read message VHOST_USER_SET_VRING_BASE
    VHOST_CONFIG: read message VHOST_USER_SET_VRING_ADDR
    VHOST_CONFIG: reallocate vq from 0 to 3 node
    VHOST_CONFIG: read message VHOST_USER_SET_VRING_KICK
    VHOST_CONFIG: vring kick idx:1 file:45
    VHOST_CONFIG: virtio is now ready for processing.
    LSWITCH_CONFIG: Virtual device 0 is added to the device list
    VHOST_CONFIG: read message VHOST_USER_SET_VRING_CALL
    VHOST_CONFIG: vring call idx:1 file:51
    VHOST_CONFIG: read message VHOST_USER_SET_VRING_ENABLE
    VHOST_CONFIG: set queue enable: 1 to qp idx: 0
    VHOST_CONFIG: read message VHOST_USER_SET_VRING_ENABLE
    VHOST_CONFIG: set queue enable: 1 to qp idx: 1

    static __rte_always_inline void
    vhost_vring_call_split(struct virtio_net *dev, struct vhost_virtqueue *vq)
    {
        /* Flush used->idx update before we read avail->flags. */
        rte_smp_mb();

        /* Don't kick guest if we don't reach index specified by guest. */
        if (dev->features & (1ULL << VIRTIO_RING_F_EVENT_IDX)) {
            uint16_t old = vq->signalled_used;
            uint16_t new = vq->last_used_idx;
            bool signalled_used_valid = vq->signalled_used_valid;

            vq->signalled_used = new;
            vq->signalled_used_valid = true;

            VHOST_LOG_DEBUG(VHOST_DATA, "%s: used_event_idx=%d, old=%d, new=%d\n",
                __func__,
                vhost_used_event(vq),
                old, new);

            if ((vhost_need_event(vhost_used_event(vq), new, old) &&
                        (vq->callfd >= 0)) ||
                    unlikely(!signalled_used_valid)) {
                eventfd_write(vq->callfd, (eventfd_t) 1);
                if (dev->notify_ops->guest_notified)
                    dev->notify_ops->guest_notified(dev->vid);
            }
        } else {
            /* Kick the guest if necessary. */
            if (!(vq->avail->flags & VRING_AVAIL_F_NO_INTERRUPT)
                    && (vq->callfd >= 0)) {
                eventfd_write(vq->callfd, (eventfd_t)1);
                if (dev->notify_ops->guest_notified)
                    dev->notify_ops->guest_notified(dev->vid);
            }
        }
    }
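When VIRTIO_RING_F_EVENT_IDX is negotiated, vhost_need_event() decides whether the guest's used_event index was crossed since the last notification. The sketch below reproduces the vring_need_event() arithmetic from the virtio specification, including uint16_t wrap-around. need_event() is a hypothetical name, and the sketch assumes DPDK's vhost_need_event() uses the same formula.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Notify iff event_idx lies in [old, new_idx) modulo 2^16, i.e. the guest's
 * requested used index was passed by the entries published since "old". */
static bool need_event(uint16_t event_idx, uint16_t new_idx, uint16_t old)
{
    return (uint16_t)(new_idx - event_idx - 1) < (uint16_t)(new_idx - old);
}
```

For example:
- `need_event(5, 6, 5)` is true, because the guest asked to be told once entry 5 is used, and it just was.
- `need_event(10, 6, 5)` is false.

The casts to uint16_t make the comparison work across index wrap-around, such as old = 65535 and new = 1.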

    Breakpoint 1, virtio_dev_tx_split (dev=0x423fffba00, vq=0x423ffcf000, mbuf_pool=0x13f9aac00, pkts=0xffffbd40cea0, count=1) at /data1/dpdk-19.11/lib/librte_vhost/virtio_net.c:1795
    1795            vhost_vring_call_split(dev, vq);
    Missing separate debuginfos, use: debuginfo-install libgcc-4.8.5-44.el7.aarch64
    (gdb) bt
    #0 virtio_dev_tx_split (dev=0x423fffba00, vq=0x423ffcf000, mbuf_pool=0x13f9aac00, pkts=0xffffbd40cea0, count=1) at /data1/dpdk-19.11/lib/librte_vhost/virtio_net.c:1795
    #1 0x000000000052312c in rte_vhost_dequeue_burst (vid=0, queue_id=1, mbuf_pool=0x13f9aac00, pkts=0xffffbd40cea0, count=32) at /data1/dpdk-19.11/lib/librte_vhost/virtio_net.c:2255
    #2 0x00000000004822b0 in learning_switch_main (arg=<optimized out>) at /data1/ovs/LearningSwitch-DPDK/main.c:503
    #3 0x0000000000585fb4 in eal_thread_loop (arg=0x0) at /data1/dpdk-19.11/lib/librte_eal/linux/eal/eal_thread.c:153
    #4 0x0000ffffbe617d38 in start_thread (arg=0xffffbd40d910) at pthread_create.c:309
    #5 0x0000ffffbe55f5f0 in thread_start () at ../sysdeps/unix/sysv/linux/aarch64/clone.S:91
    (gdb) s
    vhost_vring_call_split (vq=0x423ffcf000, dev=0x423fffba00) at /data1/dpdk-19.11/lib/librte_vhost/vhost.h:656
    656             rte_smp_mb();
    (gdb) list
    651
    652     static __rte_always_inline void
    653     vhost_vring_call_split(struct virtio_net *dev, struct vhost_virtqueue *vq)
    654     {
    655             /* Flush used->idx update before we read avail->flags. */
    656             rte_smp_mb();
    657
    658             /* Don't kick guest if we don't reach index specified by guest. */
    659             if (dev->features & (1ULL << VIRTIO_RING_F_EVENT_IDX)) {
    660                     uint16_t old = vq->signalled_used;
    (gdb) n
    659             if (dev->features & (1ULL << VIRTIO_RING_F_EVENT_IDX)) {
    (gdb) n
    660                     uint16_t old = vq->signalled_used;
    (gdb) n
    661                     uint16_t new = vq->last_used_idx;
    (gdb) n
    662                     bool signalled_used_valid = vq->signalled_used_valid;
    (gdb) n
    664                     vq->signalled_used = new;
    (gdb) n
    665                     vq->signalled_used_valid = true;
    (gdb) n
    672                     if ((vhost_need_event(vhost_used_event(vq), new, old) &&
    (gdb) n
    674                             unlikely(!signalled_used_valid)) {
    (gdb) n
    673                                     (vq->callfd >= 0)) ||
    (gdb) n
    675                             eventfd_write(vq->callfd, (eventfd_t) 1);
    (gdb) n
    676                             if (dev->notify_ops->guest_notified)
    (gdb) n

QEMU side

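In vhost_virtqueue_start, QEMU takes the fd (rfd) of the VirtQueue's host_notifier and hands it to the vhost backend with VHOST_SET_VRING_KICK. For vhost-user, this becomes the VHOST_USER_SET_VRING_KICK message to DPDK. The same eventfd is also registered with kvm.ko as an ioeventfd. When the guest kicks the queue, kvm.ko signals this fd with eventfd_signal and the backend wakes up.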

    static int vhost_virtqueue_start(struct vhost_dev *dev,
                                    struct VirtIODevice *vdev,
                                    struct vhost_virtqueue *vq,
                                    unsigned idx)
    {
        BusState *qbus = BUS(qdev_get_parent_bus(DEVICE(vdev)));
        VirtioBusState *vbus = VIRTIO_BUS(qbus);
        VirtioBusClass *k = VIRTIO_BUS_GET_CLASS(vbus);
        hwaddr s, l, a;
        int r;
        int vhost_vq_index = dev->vhost_ops->vhost_get_vq_index(dev, idx);
        struct vhost_vring_file file = {
            .index = vhost_vq_index
        };
        struct vhost_vring_state state = {
            .index = vhost_vq_index
        };
        struct VirtQueue *vvq = virtio_get_queue(vdev, idx);

        a = virtio_queue_get_desc_addr(vdev, idx);
        if (a == 0) {
            /* Queue might not be ready for start */
            return 0;
        }

        vq->num = state.num = virtio_queue_get_num(vdev, idx);
        r = dev->vhost_ops->vhost_set_vring_num(dev, &state);
        if (r) {
            VHOST_OPS_DEBUG("vhost_set_vring_num failed");
            return -errno;
        }

        state.num = virtio_queue_get_last_avail_idx(vdev, idx);
        r = dev->vhost_ops->vhost_set_vring_base(dev, &state);
        if (r) {
            VHOST_OPS_DEBUG("vhost_set_vring_base failed");
            return -errno;
        }

        if (vhost_needs_vring_endian(vdev)) {
            r = vhost_virtqueue_set_vring_endian_legacy(dev,
                                                        virtio_is_big_endian(vdev),
                                                        vhost_vq_index);
            if (r) {
                return -errno;
            }
        }

        vq->desc_size = s = l = virtio_queue_get_desc_size(vdev, idx);
        vq->desc_phys = a;
        vq->desc = vhost_memory_map(dev, a, &l, false);
        if (!vq->desc || l != s) {
            r = -ENOMEM;
            goto fail_alloc_desc;
        }
        vq->avail_size = s = l = virtio_queue_get_avail_size(vdev, idx);
        vq->avail_phys = a = virtio_queue_get_avail_addr(vdev, idx);
        vq->avail = vhost_memory_map(dev, a, &l, false);
        if (!vq->avail || l != s) {
            r = -ENOMEM;
            goto fail_alloc_avail;
        }
        vq->used_size = s = l = virtio_queue_get_used_size(vdev, idx);
        vq->used_phys = a = virtio_queue_get_used_addr(vdev, idx);
        vq->used = vhost_memory_map(dev, a, &l, true);
        if (!vq->used || l != s) {
            r = -ENOMEM;
            goto fail_alloc_used;
        }

        r = vhost_virtqueue_set_addr(dev, vq, vhost_vq_index, dev->log_enabled);
        if (r < 0) {
            r = -errno;
            goto fail_alloc;
        }

        file.fd = event_notifier_get_fd(virtio_queue_get_host_notifier(vvq)); /* <-- the kick fd is passed here */
        r = dev->vhost_ops->vhost_set_vring_kick(dev, &file);
        if (r) {
            VHOST_OPS_DEBUG("vhost_set_vring_kick failed");
            r = -errno;
            goto fail_kick;
        }

        /* Clear and discard previous events if any. */
        event_notifier_test_and_clear(&vq->masked_notifier);

        /* Init vring in unmasked state, unless guest_notifier_mask
         * will do it later.
         */
        if (!vdev->use_guest_notifier_mask) {
            /* TODO: check and handle errors. */
            vhost_virtqueue_mask(dev, vdev, idx, false);
        }

        if (k->query_guest_notifiers &&
            k->query_guest_notifiers(qbus->parent) &&
            virtio_queue_vector(vdev, idx) == VIRTIO_NO_VECTOR) {
            file.fd = -1; /* <-- note: -1, i.e. no call fd */
            r = dev->vhost_ops->vhost_set_vring_call(dev, &file);
            if (r) {
                goto fail_vector;
            }
        }

        return 0;

    fail_vector:
    fail_kick:
    fail_alloc:
        vhost_memory_unmap(dev, vq->used, virtio_queue_get_used_size(vdev, idx),
                           0, 0);
    fail_alloc_used:
        vhost_memory_unmap(dev, vq->avail, virtio_queue_get_avail_size(vdev, idx),
                           0, 0);
    fail_alloc_avail:
        vhost_memory_unmap(dev, vq->desc, virtio_queue_get_desc_size(vdev, idx),
                           0, 0);
    fail_alloc_desc:
        return r;
    }
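With vhost-user, the kick and call eventfds cannot be sent as plain integers. They travel as SCM_RIGHTS ancillary data on the UNIX socket, which is why the DPDK side picks them up from msg->fds[0]. Below is a self-contained POSIX sketch of that fd passing. send_fd() and recv_fd() are hypothetical helpers, not QEMU or DPDK functions. The test exercises them with socketpair() and a pipe instead of an eventfd.

```c
#include <assert.h>
#include <string.h>
#include <sys/socket.h>
#include <sys/uio.h>
#include <unistd.h>

/* Send len bytes of data with one fd attached as SCM_RIGHTS. */
static int send_fd(int sock, int fd_to_send, const void *data, size_t len)
{
    struct iovec iov = { .iov_base = (void *)data, .iov_len = len };
    union { struct cmsghdr align; char buf[CMSG_SPACE(sizeof(int))]; } ctl;
    struct msghdr msg = { 0 };
    struct cmsghdr *cmsg;

    memset(&ctl, 0, sizeof(ctl));
    msg.msg_iov = &iov;
    msg.msg_iovlen = 1;
    msg.msg_control = ctl.buf;
    msg.msg_controllen = sizeof(ctl.buf);

    cmsg = CMSG_FIRSTHDR(&msg);
    cmsg->cmsg_level = SOL_SOCKET;
    cmsg->cmsg_type = SCM_RIGHTS;
    cmsg->cmsg_len = CMSG_LEN(sizeof(int));
    memcpy(CMSG_DATA(cmsg), &fd_to_send, sizeof(int));

    return sendmsg(sock, &msg, 0) == (ssize_t)len ? 0 : -1;
}

/* Receive the data and return the attached fd (a new descriptor), or -1. */
static int recv_fd(int sock, void *data, size_t len)
{
    struct iovec iov = { .iov_base = data, .iov_len = len };
    union { struct cmsghdr align; char buf[CMSG_SPACE(sizeof(int))]; } ctl;
    struct msghdr msg = { 0 };
    struct cmsghdr *cmsg;
    int fd = -1;

    msg.msg_iov = &iov;
    msg.msg_iovlen = 1;
    msg.msg_control = ctl.buf;
    msg.msg_controllen = sizeof(ctl.buf);

    if (recvmsg(sock, &msg, 0) <= 0)
        return -1;
    for (cmsg = CMSG_FIRSTHDR(&msg); cmsg; cmsg = CMSG_NXTHDR(&msg, cmsg))
        if (cmsg->cmsg_level == SOL_SOCKET && cmsg->cmsg_type == SCM_RIGHTS)
            memcpy(&fd, CMSG_DATA(cmsg), sizeof(int));
    return fd;
}
```

The received descriptor has a different number in the receiving process, which is why the fd values in the DPDK log (48, 50, ...) say nothing about QEMU's own fd numbers. It refers to the same kernel object, so a write on one side is seen on the other.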

vhost_net_start

    int vhost_net_start(VirtIODevice *dev, NetClientState *ncs,
                        int total_queues)
    {
        BusState *qbus = BUS(qdev_get_parent_bus(DEVICE(dev)));
        VirtioBusState *vbus = VIRTIO_BUS(qbus);
        VirtioBusClass *k = VIRTIO_BUS_GET_CLASS(vbus);
        struct vhost_net *net;
        int r, e, i;
        NetClientState *peer;

        if (!k->set_guest_notifiers) {
            error_report("binding does not support guest notifiers");
            return -ENOSYS;
        }

        for (i = 0; i < total_queues; i++) {

            peer = qemu_get_peer(ncs, i);
            net = get_vhost_net(peer);
            vhost_net_set_vq_index(net, i * 2);

            /* Suppress the masking guest notifiers on vhost user
             * because vhost user doesn't interrupt masking/unmasking
             * properly.
             */
            if (net->nc->info->type == NET_CLIENT_DRIVER_VHOST_USER) {
                dev->use_guest_notifier_mask = false;
            }
        }

        r = k->set_guest_notifiers(qbus->parent, total_queues * 2, true);
        if (r < 0) {
            error_report("Error binding guest notifier: %d", -r);
            goto err;
        }

        for (i = 0; i < total_queues; i++) {
            peer = qemu_get_peer(ncs, i);
            r = vhost_net_start_one(get_vhost_net(peer), dev);

            if (r < 0) {
                goto err_start;
            }

            if (peer->vring_enable) {
                /* restore vring enable state */
                r = vhost_set_vring_enable(peer, peer->vring_enable);

                if (r < 0) {
                    goto err_start;
                }
            }
        }

        return 0;
        (...)
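vhost_net_start() gives queue pair i the vhost vq index i * 2 and requests total_queues * 2 guest notifiers. This matches the virtio-net numbering, in which virtqueue 2i is receiveq and 2i+1 is transmitq (a control queue, if present, is not counted here). That is consistent with the backtraces above, where nvqs=2 for total_queues=1 and DPDK dequeues guest TX traffic from queue_id=1. The helper names below are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>

/* virtio-net numbering: queue pair qp -> receiveq 2*qp, transmitq 2*qp+1. */
static int rx_vq(int qp) { return 2 * qp; }
static int tx_vq(int qp) { return 2 * qp + 1; }

/* Guest notifiers requested by vhost_net_start() (data queues only). */
static int guest_notifiers_needed(int total_queues) { return total_queues * 2; }

/* Odd indexes are the guest's TX queues, i.e. what the backend dequeues from. */
static bool is_guest_tx(int vq_index) { return vq_index % 2 == 1; }
```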

    void vhost_user_backend_start(VhostUserBackend *b)
    {
        BusState *qbus = BUS(qdev_get_parent_bus(DEVICE(b->vdev)));
        VirtioBusClass *k = VIRTIO_BUS_GET_CLASS(qbus);
        int ret, i;

        if (b->started) {
            return;
        }

        if (!k->set_guest_notifiers) {
            error_report("binding does not support guest notifiers");
            return;
        }

        ret = vhost_dev_enable_notifiers(&b->dev, b->vdev);
        if (ret < 0) {
            return;
        }

        /* -> virtio_pci_set_guest_notifiers: set up the interrupt (guest) notifiers */
        ret = k->set_guest_notifiers(qbus->parent, b->dev.nvqs, true);
        if (ret < 0) {
            error_report("Error binding guest notifier");
            goto err_host_notifiers;
        }

        b->dev.acked_features = b->vdev->guest_features;
        ret = vhost_dev_start(&b->dev, b->vdev);
        if (ret < 0) {
            error_report("Error start vhost dev");
            goto err_guest_notifiers;
        }

        /* guest_notifier_mask/pending not used yet, so just unmask
         * everything here.  virtio-pci will do the right thing by
         * enabling/disabling irqfd.
         */
        for (i = 0; i < b->dev.nvqs; i++) {
            vhost_virtqueue_mask(&b->dev, b->vdev,
                                 b->dev.vq_index + i, false);
        }

        b->started = true;
        return;

    err_guest_notifiers:
        k->set_guest_notifiers(qbus->parent, b->dev.nvqs, false);
    err_host_notifiers:
        vhost_dev_disable_notifiers(&b->dev, b->vdev);
    }

    /* vhost_user_backend_dev_init(): */
    b->dev.nvqs = nvqs;

    k->set_guest_notifiers(qbus->parent, b->dev.nvqs, true); // set up interrupts (guest notifiers)

    static int virtio_pci_set_guest_notifier(DeviceState *d, int n, bool assign,
                                             bool with_irqfd)
    {
        VirtIOPCIProxy *proxy = to_virtio_pci_proxy(d);
        VirtIODevice *vdev = virtio_bus_get_device(&proxy->bus);
        VirtioDeviceClass *vdc = VIRTIO_DEVICE_GET_CLASS(vdev);
        VirtQueue *vq = virtio_get_queue(vdev, n);
        EventNotifier *notifier = virtio_queue_get_guest_notifier(vq);

        if (assign) {
            int r = event_notifier_init(notifier, 0); // initialize the guest notifier
            if (r < 0) {
                return r;
            }
            virtio_queue_set_guest_notifier_fd_handler(vq, true, with_irqfd);
        } else {
            virtio_queue_set_guest_notifier_fd_handler(vq, false, with_irqfd);
            event_notifier_cleanup(notifier);
        }

        if (!msix_enabled(&proxy->pci_dev) &&
            vdev->use_guest_notifier_mask &&
            vdc->guest_notifier_mask) {
            vdc->guest_notifier_mask(vdev, n, !assign);
        }

        return 0;
    }

    EventNotifier *virtio_queue_get_guest_notifier(VirtQueue *vq)
    {
        return &vq->guest_notifier;
    }

    void virtio_queue_set_guest_notifier_fd_handler(VirtQueue *vq, bool assign,
                                                    bool with_irqfd)
    {
        if (assign && !with_irqfd) {
            event_notifier_set_handler(&vq->guest_notifier,
                                       virtio_queue_guest_notifier_read);
        } else {
            event_notifier_set_handler(&vq->guest_notifier, NULL);
        }
        if (!assign) {
            /* Test and clear notifier before closing it,
             * in case poll callback didn't have time to run. */
            virtio_queue_guest_notifier_read(&vq->guest_notifier);
        }
    }

    void event_notifier_init_fd(EventNotifier *e, int fd)
    {
        e->rfd = fd;
        e->wfd = fd;
    }

    int event_notifier_init(EventNotifier *e, int active)
    {
        int fds[2];
        int ret;

    #ifdef CONFIG_EVENTFD
        ret = eventfd(0, EFD_NONBLOCK | EFD_CLOEXEC);
    #else
        ret = -1;
        errno = ENOSYS;
    #endif
        if (ret >= 0) {
            e->rfd = e->wfd = ret;
        } else {
            if (errno != ENOSYS) {
                return -errno;
            }
            if (qemu_pipe(fds) < 0) {
                return -errno;
            }
            ret = fcntl_setfl(fds[0], O_NONBLOCK);
            if (ret < 0) {
                ret = -errno;
                goto fail;
            }
            ret = fcntl_setfl(fds[1], O_NONBLOCK);
            if (ret < 0) {
                ret = -errno;
                goto fail;
            }
            e->rfd = fds[0];
            e->wfd = fds[1];
        }
        if (active) {
            event_notifier_set(e);
        }
        return 0;

    fail:
        close(fds[0]);
        close(fds[1]);
        return ret;
    }
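QEMU's EventNotifier is just an (rfd, wfd) pair. It uses a single eventfd for both ends when available, and otherwise falls back to a non-blocking pipe. Below is a self-contained re-creation of that idea. The notifier_* names are hypothetical, not QEMU's API. notifier_test_and_clear() drains the fd in the same spirit as event_notifier_test_and_clear() and reports whether anything was pending.

```c
#include <assert.h>
#include <errno.h>
#include <fcntl.h>
#include <stdbool.h>
#include <stdint.h>
#include <sys/eventfd.h>
#include <unistd.h>

struct notifier { int rfd; int wfd; };

static int notifier_init(struct notifier *e)
{
    int fd = eventfd(0, EFD_NONBLOCK | EFD_CLOEXEC);
    if (fd >= 0) {
        e->rfd = e->wfd = fd;             /* eventfd: one fd serves both ends */
        return 0;
    }
    if (errno != ENOSYS)
        return -errno;

    int fds[2];                           /* fallback: non-blocking pipe */
    if (pipe(fds) < 0)
        return -errno;
    if (fcntl(fds[0], F_SETFL, O_NONBLOCK) < 0 ||
        fcntl(fds[1], F_SETFL, O_NONBLOCK) < 0) {
        int err = errno;
        close(fds[0]);
        close(fds[1]);
        return -err;
    }
    e->rfd = fds[0];
    e->wfd = fds[1];
    return 0;
}

static int notifier_set(struct notifier *e)
{
    uint64_t one = 1;                     /* 8 bytes, as eventfd requires */
    return write(e->wfd, &one, sizeof(one)) == (ssize_t)sizeof(one) ? 0 : -1;
}

/* Drain everything pending; true if at least one event had been set. */
static bool notifier_test_and_clear(struct notifier *e)
{
    char buf[512];
    bool pending = false;
    while (read(e->rfd, buf, sizeof(buf)) > 0)
        pending = true;
    return pending;
}
```

Because reads are non-blocking, the drain loop stops with EAGAIN once the counter (or pipe) is empty. This lets a poll handler call it safely even on a spurious wakeup.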

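If KVM_IRQFD is set up successfully, the KVM module injects the interrupt into the guest through the irqfd. QEMU configures the KVM irqfd along this call path:

virtio_pci_set_guest_notifiers -> kvm_virtio_pci_vector_use -> kvm_virtio_pci_irqfd_use -> kvm_irqchip_add_irqfd_notifier -> kvm_irqchip_assign_irqfd

kvm_irqchip_assign_irqfd finally calls kvm_vm_ioctl. The registration carries the eventfd that the backend writes to, plus an optional resample fd (rfd). When irqfd is not used, QEMU instead watches the guest notifier in user space and injects the interrupt itself: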

    static void virtio_queue_guest_notifier_read(EventNotifier *n)
    {
        VirtQueue *vq = container_of(n, VirtQueue, guest_notifier);
        if (event_notifier_test_and_clear(n)) {
            virtio_irq(vq);
        }
    }

    void virtio_irq(VirtQueue *vq)
    {
        trace_virtio_irq(vq);
        vq->vdev->isr |= 0x01;
        virtio_notify_vector(vq->vdev, vq->vector);
    }

    static void virtio_notify_vector(VirtIODevice *vdev, uint16_t vector)
    {
        BusState *qbus = qdev_get_parent_bus(DEVICE(vdev));
        VirtioBusClass *k = VIRTIO_BUS_GET_CLASS(qbus);

        if (k->notify) {
            k->notify(qbus->parent, vector);
        }
    }

    static void virtio_pci_notify(DeviceState *d, uint16_t vector)
    {
        VirtIOPCIProxy *proxy = to_virtio_pci_proxy_fast(d);

        if (msix_enabled(&proxy->pci_dev))
            msix_notify(&proxy->pci_dev, vector);
        else {
            VirtIODevice *vdev = virtio_bus_get_device(&proxy->bus);
            pci_set_irq(&proxy->pci_dev, vdev->isr & 1);
        }
    }
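Without irqfd, the whole path from the call eventfd to the guest runs in QEMU's main loop. The following model captures only the branching: set ISR bit 0, then raise either the queue's MSI-X vector or the legacy INTx line from isr & 1. The model_* names and fields are hypothetical, and msix_notify()/pci_set_irq() are replaced by plain field updates.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Simplified model of virtio_queue_guest_notifier_read -> virtio_irq -> virtio_pci_notify. */
struct model_dev {
    uint8_t isr;           /* virtio ISR status; bit 0 = queue interrupt */
    bool msix_enabled;
    int last_msix_vector;  /* vector "passed to msix_notify", -1 if none */
    int intx_level;        /* level "passed to pci_set_irq", -1 if none */
};

static void model_pci_notify(struct model_dev *d, uint16_t vector)
{
    if (d->msix_enabled)
        d->last_msix_vector = vector;   /* stands in for msix_notify() */
    else
        d->intx_level = d->isr & 1;     /* stands in for pci_set_irq() */
}

static void model_virtio_irq(struct model_dev *d, uint16_t vector)
{
    d->isr |= 0x01;
    model_pci_notify(d, vector);
}

/* Runs when the guest-notifier fd is readable; event_pending plays the role
 * of event_notifier_test_and_clear()'s result. */
static void model_guest_notifier_read(struct model_dev *d, bool event_pending,
                                      uint16_t vector)
{
    if (event_pending)
        model_virtio_irq(d, vector);
}
```

Every interrupt on this path costs a wakeup of the QEMU thread. That is the reason vhost setups prefer irqfd, which lets kvm.ko inject directly when the backend writes callfd.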

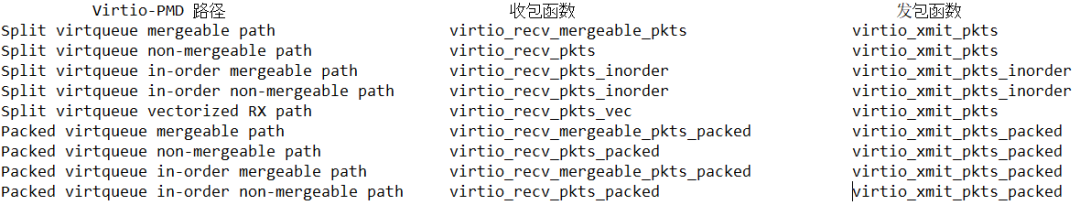

virtio pmd