kvm + qemu + kvm ioctl

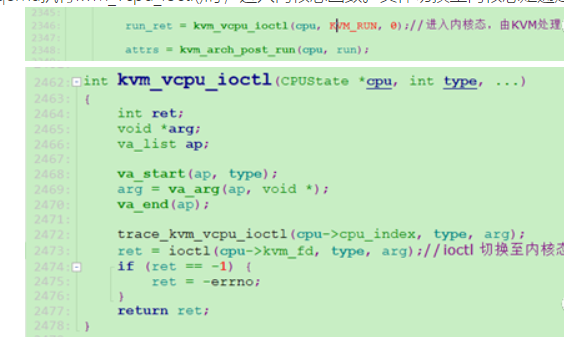

kvm_cpu_exec --> kvm_vcpu_ioctl(cpu, KVM_RUN, 0)

```c
static void *kvm_vcpu_thread_fn(void *arg)
{
    CPUState *cpu = arg;
    int r;

    rcu_register_thread();

    qemu_mutex_lock_iothread();
    qemu_thread_get_self(cpu->thread);
    cpu->thread_id = qemu_get_thread_id();
    cpu->can_do_io = 1;
    current_cpu = cpu;

    r = kvm_init_vcpu(cpu, &error_fatal);
    kvm_init_cpu_signals(cpu);

    /* signal CPU creation */
    cpu_thread_signal_created(cpu);
    qemu_guest_random_seed_thread_part2(cpu->random_seed);

    do {
        if (cpu_can_run(cpu)) {
            r = kvm_cpu_exec(cpu);
            if (r == EXCP_DEBUG) {
                cpu_handle_guest_debug(cpu);
            }
        }
        qemu_wait_io_event(cpu);
    } while (!cpu->unplug || cpu_can_run(cpu));

    kvm_destroy_vcpu(cpu);
    cpu_thread_signal_destroyed(cpu);
    qemu_mutex_unlock_iothread();
    rcu_unregister_thread();
    return NULL;
}
```
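QEMU's kvm_cpu_exec, called from the loop above, does not treat every failed KVM_RUN as fatal: when the vCPU thread is kicked by a signal (what kvm_init_cpu_signals prepares for), KVM_RUN returns -1 with errno set to EINTR, and QEMU handles EINTR and EAGAIN as an ordinary way back into its loop. A minimal sketch of that classification; `vcpu_run_once` and its enum are hypothetical names, and `vcpu_fd` is assumed to be a vCPU fd from KVM_CREATE_VCPU whose kvm_run area is already mmap'ed:

```c
#include <assert.h>
#include <errno.h>
#include <stddef.h>
#include <sys/ioctl.h>
#include <linux/kvm.h>

/* Hypothetical classification of one KVM_RUN call. */
enum vcpu_run_result {
    VCPU_EXITED,      /* KVM_RUN returned 0: inspect kvm_run->exit_reason */
    VCPU_INTERRUPTED, /* EINTR/EAGAIN: kicked out (e.g. by a signal), loop again */
    VCPU_FAILED,      /* any other errno: a real error */
};

static enum vcpu_run_result vcpu_run_once(int vcpu_fd, int *err)
{
    assert(err != NULL);
    *err = 0;

    if (ioctl(vcpu_fd, KVM_RUN, 0) == 0)
        return VCPU_EXITED;

    *err = errno;  /* save errno before anything else can change it */
    if (*err == EINTR || *err == EAGAIN)
        return VCPU_INTERRUPTED;
    return VCPU_FAILED;
}
```

In the kvm_cpu_thread loop that follows, VCPU_INTERRUPTED would simply mean "run again"; only VCPU_FAILED would justify its `exit(1)`.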

For comparison, a minimal standalone KVM program (with its own `struct kvm` wrapper, unrelated to QEMU's KVMState) runs the vCPU the same way: it issues KVM_RUN in a loop and switches on `kvm_run->exit_reason`:

```c
void *kvm_cpu_thread(void *data)
{
    struct kvm *kvm = (struct kvm *)data;
    int ret = 0;

    kvm_reset_vcpu(kvm->vcpus);

    while (1) {
        printf("KVM start run\n");
        ret = ioctl(kvm->vcpus->vcpu_fd, KVM_RUN, 0);

        if (ret < 0) {
            fprintf(stderr, "KVM_RUN failed\n");
            exit(1);
        }

        switch (kvm->vcpus->kvm_run->exit_reason) {
        case KVM_EXIT_UNKNOWN:
            printf("KVM_EXIT_UNKNOWN\n");
            break;
        case KVM_EXIT_DEBUG:
            printf("KVM_EXIT_DEBUG\n");
            break;
        case KVM_EXIT_IO:
            printf("KVM_EXIT_IO\n");
            printf("out port: %d, data: %d\n",
                   kvm->vcpus->kvm_run->io.port,
                   *(int *)((char *)(kvm->vcpus->kvm_run) +
                            kvm->vcpus->kvm_run->io.data_offset));
            sleep(1);
            break;
        case KVM_EXIT_MMIO:
            printf("KVM_EXIT_MMIO\n");
            break;
        case KVM_EXIT_INTR:
            printf("KVM_EXIT_INTR\n");
            break;
        case KVM_EXIT_SHUTDOWN:
            printf("KVM_EXIT_SHUTDOWN\n");
            goto exit_kvm;
            break;
        default:
            printf("KVM PANIC\n");
            goto exit_kvm;
        }
    }

exit_kvm:
    return 0;
}
```
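One detail in the KVM_EXIT_IO branch above: the payload sits inside the mmap'ed kvm_run area at `io.data_offset` and consists of `io.count` elements of `io.size` bytes each, so `*(int *)` reads four bytes even for a one-byte `out`. A minimal sketch of a size-aware read; the helper name `kvm_io_value` is hypothetical, and only the `struct kvm_run` fields come from <linux/kvm.h>:

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>
#include <linux/kvm.h>

/* Hypothetical helper: return element `index` of a KVM_EXIT_IO payload.
 * The payload lives inside the mmap'ed kvm_run area, io.data_offset bytes
 * from its start, as io.count elements of io.size bytes each. */
static uint64_t kvm_io_value(const struct kvm_run *run, uint32_t index)
{
    assert(run->exit_reason == KVM_EXIT_IO);
    assert(index < run->io.count);

    const unsigned char *data = (const unsigned char *)run +
                                run->io.data_offset +
                                (size_t)index * run->io.size;

    /* Copy exactly io.size bytes into a matching type; memcpy also avoids
     * unaligned or type-punned loads. */
    switch (run->io.size) {
    case 1: { uint8_t v;  memcpy(&v, data, sizeof(v)); return v; }
    case 2: { uint16_t v; memcpy(&v, data, sizeof(v)); return v; }
    case 4: { uint32_t v; memcpy(&v, data, sizeof(v)); return v; }
    default: return 0;  /* port I/O is 1, 2 or 4 bytes wide */
    }
}
```

In the loop, the KVM_EXIT_IO branch could then print `(unsigned long long)kvm_io_value(kvm->vcpus->kvm_run, 0)` with `%llu` instead of dereferencing `*(int *)`.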

/dev/kvm

```
[root@localhost cloud_images]# lsof /dev/kvm
COMMAND     PID USER   FD   TYPE DEVICE SIZE/OFF NODE NAME
qemu-syst 50066 root   14u   CHR 10,232      0t0 1103 /dev/kvm
[root@localhost cloud_images]# ps -elf | grep 50066
3 S root     50066     1 40  80   0 - 83896 poll_s 02:53 ?        00:00:07 qemu-system-aarch64 -name vm2 -daemonize -enable-kvm -M virt -cpu host -smp 2 -m 4096 -global virtio-blk-device.scsi=off -device virtio-scsi-device,id=scsi -kernel vmlinuz-4.18 --append console=ttyAMA0 root=UUID=6a09973e-e8fd-4a6d-a8c0-1deb9556f477 -initrd initramfs-4.18 -drive file=vhuser-test1.qcow2 -netdev user,id=unet,hostfwd=tcp:127.0.0.1:1122-:22 -device virtio-net-device,netdev=unet -vnc :10
0 S root     50093 48588  0  80   0 -  1729 pipe_w 02:54 pts/1    00:00:00 grep --color=auto 50066
[root@localhost cloud_images]#
```

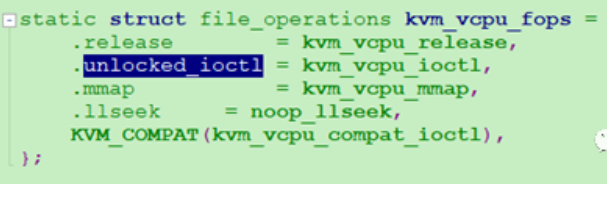

The ioctl system call path: sys_ioctl -> ksys_ioctl -> do_vfs_ioctl -> vfs_ioctl -> unlocked_ioctl -> kvm_vcpu_ioctl (for a vCPU fd, the file's unlocked_ioctl handler is kvm_vcpu_ioctl).

kvm_vm_ioctl (QEMU side)

```
accel/kvm/kvm-all.c:2623:int kvm_vm_ioctl(KVMState *s, int type, ...)
include/sysemu/kvm.h:276:int kvm_vm_ioctl(KVMState *s, int type, ...);
```
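The QEMU wrapper forwards a single variadic argument to ioctl() on the VM fd and turns a failure into a negative errno, which is why kvm_irqchip_assign_irqfd below can return its result directly. A minimal sketch of that pattern with a plain `vm_fd` instead of KVMState; the name `vm_ioctl` is hypothetical, and QEMU's version also traces the call:

```c
#include <assert.h>
#include <errno.h>
#include <stdarg.h>
#include <sys/ioctl.h>
#include <linux/kvm.h>

/* Hypothetical sketch of a kvm_vm_ioctl-style wrapper. Callers always pass
 * exactly one argument: a pointer, or an integer cast to void *. */
static int vm_ioctl(int vm_fd, unsigned long type, ...)
{
    va_list ap;
    void *arg;
    int ret;

    assert(_IOC_TYPE(type) == KVMIO);  /* only meant for KVM requests */

    va_start(ap, type);
    arg = va_arg(ap, void *);
    va_end(ap);

    ret = ioctl(vm_fd, type, arg);
    return ret == -1 ? -errno : ret;   /* failure -> negative errno */
}
```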

KVM_IOEVENTFD

QEMU's call path for setting up an ioeventfd:

```
virtio_pci_config_write
|-->virtio_ioport_write
|-->virtio_pci_start_ioeventfd
|-->virtio_pci_set_host_notifier_internal
|-->virtio_queue_set_host_notifier_fd_handler
|-->memory_region_add_eventfd
|-->memory_region_transaction_commit
|-->address_space_update_ioeventfds
|-->address_space_add_del_ioeventfds
|-->eventfd_add[kvm_mem_ioeventfd_add]
|-->kvm_set_ioeventfd_mmio
|-->kvm_vm_ioctl(...,KVM_IOEVENTFD,...)
```
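kvm_set_ioeventfd_mmio, the last QEMU step before the ioctl, fills a struct kvm_ioeventfd and passes it to KVM_IOEVENTFD. Once it is registered, a matching guest write to that MMIO address is completed inside the kernel, which signals the eventfd instead of returning to QEMU with KVM_EXIT_MMIO. A minimal sketch of building and issuing that request, assuming the caller already has a VM fd and an eventfd; `make_mmio_ioeventfd` and `set_mmio_ioeventfd` are hypothetical names, and only the struct, its flags and the ioctl come from <linux/kvm.h>:

```c
#include <assert.h>
#include <errno.h>
#include <stdbool.h>
#include <stdint.h>
#include <string.h>
#include <sys/ioctl.h>
#include <linux/kvm.h>

/* Hypothetical helper: describe an MMIO ioeventfd (no KVM_IOEVENTFD_FLAG_PIO,
 * so addr is a guest-physical MMIO address). */
static struct kvm_ioeventfd make_mmio_ioeventfd(int efd, uint64_t addr,
                                                uint32_t len, bool datamatch,
                                                uint64_t val, bool assign)
{
    struct kvm_ioeventfd io;

    /* The uapi header documents 1, 2, 4 or 8 bytes, or 0 to ignore length. */
    assert(len == 0 || len == 1 || len == 2 || len == 4 || len == 8);

    memset(&io, 0, sizeof(io));       /* zero datamatch, flags and pad[] */
    io.addr = addr;
    io.len = len;
    io.fd = efd;
    if (datamatch) {
        /* Fire only when the guest writes exactly `val`. */
        io.flags |= KVM_IOEVENTFD_FLAG_DATAMATCH;
        io.datamatch = val;
    }
    if (!assign)
        io.flags |= KVM_IOEVENTFD_FLAG_DEASSIGN;
    return io;
}

/* Register (or remove) it on the VM fd; returns 0 or a negative errno. */
static int set_mmio_ioeventfd(int vm_fd, const struct kvm_ioeventfd *io)
{
    return ioctl(vm_fd, KVM_IOEVENTFD, io) < 0 ? -errno : 0;
}
```

Legacy virtio-pci uses datamatch in this way: one eventfd per virtqueue, matched on the queue index the guest writes to the notify register.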

KVM_IRQFD

```c
static int kvm_irqchip_assign_irqfd(KVMState *s, EventNotifier *event,
                                    EventNotifier *resample, int virq,
                                    bool assign)
{
    int fd = event_notifier_get_fd(event);
    int rfd = resample ? event_notifier_get_fd(resample) : -1;

    struct kvm_irqfd irqfd = {
        .fd = fd,
        .gsi = virq,
        .flags = assign ? 0 : KVM_IRQFD_FLAG_DEASSIGN,
    };

    if (rfd != -1) {
        assert(assign);
        if (kvm_irqchip_is_split()) {
            /*
             * When the slow irqchip (e.g. IOAPIC) is in the
             * userspace, KVM kernel resamplefd will not work because
             * the EOI of the interrupt will be delivered to userspace
             * instead, so the KVM kernel resamplefd kick will be
             * skipped. The userspace here mimics what the kernel
             * provides with resamplefd, remember the resamplefd and
             * kick it when we receive EOI of this IRQ.
             *
             * This is hackery because IOAPIC is mostly bypassed
             * (except EOI broadcasts) when irqfd is used. However
             * this can bring much performance back for split irqchip
             * with INTx IRQs (for VFIO, this gives 93% perf of the
             * full fast path, which is 46% perf boost comparing to
             * the INTx slow path).
             */
            kvm_resample_fd_insert(virq, resample);
        } else {
            irqfd.flags |= KVM_IRQFD_FLAG_RESAMPLE;
            irqfd.resamplefd = rfd;
        }
    } else if (!assign) {
        if (kvm_irqchip_is_split()) {
            kvm_resample_fd_remove(virq);
        }
    }

    if (!kvm_irqfds_enabled()) {
        return -ENOSYS;
    }

    return kvm_vm_ioctl(s, KVM_IRQFD, &irqfd);
}
```
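The object passed as `irqfd.fd` is an ordinary eventfd, so anything that can write to it (a vhost worker, a VFIO interrupt, or QEMU itself) raises the GSI without going through a KVM_IRQ_LINE ioctl. When KVM owns the fd it consumes the counter itself; the counter semantics can be tried without KVM, with a userspace reader playing that role. The two helpers are hypothetical stand-ins that roughly mirror QEMU's event_notifier_set and event_notifier_test_and_clear, assuming a non-blocking eventfd:

```c
#include <assert.h>
#include <errno.h>
#include <stdint.h>
#include <unistd.h>
#include <sys/eventfd.h>

/* Signal: add 1 to the eventfd counter (what a device backend does to
 * raise an irqfd). Returns 0 or a negative errno. */
static int notifier_set(int efd)
{
    uint64_t one = 1;

    assert(efd >= 0);
    return write(efd, &one, sizeof(one)) == (ssize_t)sizeof(one) ? 0 : -errno;
}

/* Consume: read and reset the counter. Signals that arrive before the read
 * are coalesced into one count. Returns 0 if nothing was pending (the fd
 * must be EFD_NONBLOCK for this not to block). */
static uint64_t notifier_test_and_clear(int efd)
{
    uint64_t count = 0;

    assert(efd >= 0);
    if (read(efd, &count, sizeof(count)) != (ssize_t)sizeof(count))
        return 0;
    return count;
}
```

Coalescing is why two back-to-back signals can show up as a single interrupt injection: the reader only learns that the counter was non-zero.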

kvm_vm_ioctl (kernel side, virt/kvm/kvm_main.c)

```c
static long kvm_vm_ioctl(struct file *filp,
			 unsigned int ioctl, unsigned long arg)
{
	struct kvm *kvm = filp->private_data;
	void __user *argp = (void __user *)arg;
	int r;

	if (kvm->mm != current->mm)
		return -EIO;
	switch (ioctl) {
	case KVM_CREATE_VCPU:
		r = kvm_vm_ioctl_create_vcpu(kvm, arg);
		break;
	case KVM_ENABLE_CAP: {
		struct kvm_enable_cap cap;

		r = -EFAULT;
		if (copy_from_user(&cap, argp, sizeof(cap)))
			goto out;
		r = kvm_vm_ioctl_enable_cap_generic(kvm, &cap);
		break;
	}
	case KVM_SET_USER_MEMORY_REGION: {
		struct kvm_userspace_memory_region kvm_userspace_mem;

		r = -EFAULT;
		if (copy_from_user(&kvm_userspace_mem, argp,
				   sizeof(kvm_userspace_mem)))
			goto out;

		r = kvm_vm_ioctl_set_memory_region(kvm, &kvm_userspace_mem);
		break;
	}
	case KVM_GET_DIRTY_LOG: {
		struct kvm_dirty_log log;

		r = -EFAULT;
		if (copy_from_user(&log, argp, sizeof(log)))
			goto out;
		r = kvm_vm_ioctl_get_dirty_log(kvm, &log);
		break;
	}
#ifdef CONFIG_KVM_GENERIC_DIRTYLOG_READ_PROTECT
	case KVM_CLEAR_DIRTY_LOG: {
		struct kvm_clear_dirty_log log;

		r = -EFAULT;
		if (copy_from_user(&log, argp, sizeof(log)))
			goto out;
		r = kvm_vm_ioctl_clear_dirty_log(kvm, &log);
		break;
	}
#endif
#ifdef CONFIG_KVM_MMIO
	case KVM_REGISTER_COALESCED_MMIO: {
		struct kvm_coalesced_mmio_zone zone;

		r = -EFAULT;
		if (copy_from_user(&zone, argp, sizeof(zone)))
			goto out;
		r = kvm_vm_ioctl_register_coalesced_mmio(kvm, &zone);
		break;
	}
	case KVM_UNREGISTER_COALESCED_MMIO: {
		struct kvm_coalesced_mmio_zone zone;

		r = -EFAULT;
		if (copy_from_user(&zone, argp, sizeof(zone)))
			goto out;
		r = kvm_vm_ioctl_unregister_coalesced_mmio(kvm, &zone);
		break;
	}
#endif
	case KVM_IRQFD: {
		struct kvm_irqfd data;

		r = -EFAULT;
		if (copy_from_user(&data, argp, sizeof(data)))
			goto out;
		r = kvm_irqfd(kvm, &data);
		break;
	}
	case KVM_IOEVENTFD: {
		struct kvm_ioeventfd data;

		r = -EFAULT;
		if (copy_from_user(&data, argp, sizeof(data)))
			goto out;
		r = kvm_ioeventfd(kvm, &data);
		break;
	}
#ifdef CONFIG_HAVE_KVM_MSI
	case KVM_SIGNAL_MSI: {
		struct kvm_msi msi;

		r = -EFAULT;
		if (copy_from_user(&msi, argp, sizeof(msi)))
			goto out;
		r = kvm_send_userspace_msi(kvm, &msi);
		break;
	}
#endif
#ifdef __KVM_HAVE_IRQ_LINE
	case KVM_IRQ_LINE_STATUS:
	case KVM_IRQ_LINE: {
		struct kvm_irq_level irq_event;

		r = -EFAULT;
		if (copy_from_user(&irq_event, argp, sizeof(irq_event)))
			goto out;

		r = kvm_vm_ioctl_irq_line(kvm, &irq_event,
					  ioctl == KVM_IRQ_LINE_STATUS);
		if (r)
			goto out;

		r = -EFAULT;
		if (ioctl == KVM_IRQ_LINE_STATUS) {
			if (copy_to_user(argp, &irq_event, sizeof(irq_event)))
				goto out;
		}

		r = 0;
		break;
	}
#endif
#ifdef CONFIG_HAVE_KVM_IRQ_ROUTING
	case KVM_SET_GSI_ROUTING: {
		struct kvm_irq_routing routing;
		struct kvm_irq_routing __user *urouting;
		struct kvm_irq_routing_entry *entries = NULL;

		r = -EFAULT;
		if (copy_from_user(&routing, argp, sizeof(routing)))
			goto out;
		r = -EINVAL;
		if (!kvm_arch_can_set_irq_routing(kvm))
			goto out;
		if (routing.nr > KVM_MAX_IRQ_ROUTES)
			goto out;
		if (routing.flags)
			goto out;
		if (routing.nr) {
			urouting = argp;
			entries = vmemdup_user(urouting->entries,
					       array_size(sizeof(*entries),
							  routing.nr));
			if (IS_ERR(entries)) {
				r = PTR_ERR(entries);
				goto out;
			}
		}
		r = kvm_set_irq_routing(kvm, entries, routing.nr,
					routing.flags);
		kvfree(entries);
		break;
	}
#endif /* CONFIG_HAVE_KVM_IRQ_ROUTING */
	case KVM_CREATE_DEVICE: {
		struct kvm_create_device cd;

		r = -EFAULT;
		if (copy_from_user(&cd, argp, sizeof(cd)))
			goto out;

		r = kvm_ioctl_create_device(kvm, &cd);
		if (r)
			goto out;

		r = -EFAULT;
		if (copy_to_user(argp, &cd, sizeof(cd)))
			goto out;

		r = 0;
		break;
	}
	case KVM_CHECK_EXTENSION:
		r = kvm_vm_ioctl_check_extension_generic(kvm, arg);
		break;
	default:
		r = kvm_arch_vm_ioctl(filp, ioctl, arg);
	}
out:
	return r;
}
```
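Every case above copies exactly the size of its argument struct, and that size, together with the transfer direction, is already encoded in the request number by the `_IOW`/`_IOWR` macros that define these ioctls in <linux/kvm.h>; numbers the generic switch does not handle fall through to kvm_arch_vm_ioctl. A small userspace sketch that decodes them with the standard `_IOC_*` macros; `struct ioc_fields` and `ioc_decode` are hypothetical names:

```c
#include <assert.h>
#include <linux/kvm.h>   /* pulls in the _IOC_* helpers via <linux/ioctl.h> */

/* The kernel's copy_from_user(&data, argp, sizeof(data)) matches the size
 * field of the request number; check that at compile time. */
static_assert(_IOC_SIZE(KVM_IRQFD) == sizeof(struct kvm_irqfd), "KVM_IRQFD size");
static_assert(_IOC_SIZE(KVM_IOEVENTFD) == sizeof(struct kvm_ioeventfd), "KVM_IOEVENTFD size");

/* Hypothetical helper: split a request number into its _IOC fields. */
struct ioc_fields {
    unsigned int dir;   /* _IOC_NONE, _IOC_WRITE, _IOC_READ or READ|WRITE */
    unsigned int type;  /* KVMIO for every KVM ioctl */
    unsigned int nr;    /* command number within the type */
    unsigned int size;  /* size of the argument struct, 0 for _IO() */
};

static struct ioc_fields ioc_decode(unsigned long req)
{
    struct ioc_fields f = {
        .dir  = (unsigned int)_IOC_DIR(req),
        .type = (unsigned int)_IOC_TYPE(req),
        .nr   = (unsigned int)_IOC_NR(req),
        .size = (unsigned int)_IOC_SIZE(req),
    };
    return f;
}
```

For example, KVM_CREATE_DEVICE decodes as read+write, matching the copy_to_user that returns the new device fd in `cd`, while KVM_RUN is a plain `_IO()` request with no argument struct.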

浙公网安备 33010602011771号
