DPDK + VFIO: disabling and enabling interrupts

1. request_irq ends up writing the MSI-X message
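To make "writing the MSI-X message" concrete, here is a minimal sketch of what ends up in one MSI-X table entry on x86. The struct layout follows the PCI MSI-X table format (16 bytes per vector) and the address uses the x86 `0xFEE` window with the destination APIC ID in bits 19:12. The helper `msix_write_msg` and the struct name are hypothetical, written for illustration only; the kernel does this inside its MSI core, not with this function.

```c
#include <assert.h>
#include <stdint.h>

/* One MSI-X table entry: address low/high, data, vector control. */
struct msix_table_entry {
    uint32_t msg_addr_lo;
    uint32_t msg_addr_hi;
    uint32_t msg_data;
    uint32_t vector_ctrl;            /* bit 0 = per-vector mask bit */
};

#define MSI_ADDR_BASE      0xFEE00000u   /* x86 MSI address window */
#define MSIX_CTRL_MASKBIT  0x1u

/* Hypothetical helper: program one entry for a target APIC ID and vector. */
static void msix_write_msg(struct msix_table_entry *e,
                           uint8_t dest_apic_id, uint8_t vector)
{
    assert(e);
    e->msg_addr_hi = 0;
    e->msg_addr_lo = MSI_ADDR_BASE | ((uint32_t)dest_apic_id << 12);
    e->msg_data    = vector;                  /* fixed delivery, edge triggered */
    e->vector_ctrl &= ~MSIX_CTRL_MASKBIT;     /* unmask this vector */
}
```

When the device later raises this vector, it performs a memory write of `msg_data` to the programmed address, which the interrupt controller turns into an interrupt on the chosen CPU.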

How an ordinary kernel NIC driver requests its interrupts

    int ixgbe_init_interrupt_scheme(struct ixgbe_adapter *adapter)
    {
        /* ... */
        err = ixgbe_alloc_q_vectors(adapter);
        /* ... */
    }

    static int ixgbe_alloc_q_vectors(struct ixgbe_adapter *adapter)
    {
        /* ... */
        if (q_vectors >= (rxr_remaining + txr_remaining)) {
            for (; rxr_remaining; v_idx++) {
                err = ixgbe_alloc_q_vector(adapter, q_vectors, v_idx,
                                           0, 0, 1, rxr_idx);
                if (err)
                    goto err_out;

                /* update counts and index */
                rxr_remaining--;
                rxr_idx++;
            }
        }
        /* ... */
    }

    static int ixgbe_alloc_q_vector(struct ixgbe_adapter *adapter,
                                    int v_count, int v_idx,
                                    int txr_count, int txr_idx,
                                    int rxr_count, int rxr_idx)
    {
        /* ... */

        /* setup affinity mask and node */
        if (cpu != -1)
            cpumask_set_cpu(cpu, &q_vector->affinity_mask);
        q_vector->numa_node = node;

    #ifdef CONFIG_IXGBE_DCA
        /* initialize CPU for DCA */
        q_vector->cpu = -1;
    #endif

        /* initialize NAPI */
        netif_napi_add(adapter->netdev, &q_vector->napi, ixgbe_poll, 64);
        napi_hash_add(&q_vector->napi);
        /* ... */
    }

    static int ixgbe_open(struct net_device *netdev)
    {
        /* ... */

        /* allocate transmit descriptors */
        err = ixgbe_setup_all_tx_resources(adapter);
        if (err)
            goto err_setup_tx;

        /* allocate receive descriptors */
        err = ixgbe_setup_all_rx_resources(adapter);

        /* register the interrupt handlers */
        err = ixgbe_request_irq(adapter);
        /* ... */
    }

    static int ixgbe_request_irq(struct ixgbe_adapter *adapter)
    {
        struct net_device *netdev = adapter->netdev;
        int err;

        if (adapter->flags & IXGBE_FLAG_MSIX_ENABLED)
            err = ixgbe_request_msix_irqs(adapter);
        else if (adapter->flags & IXGBE_FLAG_MSI_ENABLED)
            err = request_irq(adapter->pdev->irq, ixgbe_intr, 0,
                              netdev->name, adapter);
        else
            err = request_irq(adapter->pdev->irq, ixgbe_intr, IRQF_SHARED,
                              netdev->name, adapter);

        if (err)
            e_err(probe, "request_irq failed, Error %d\n", err);

        return err;
    }

    static int ixgbe_request_msix_irqs(struct ixgbe_adapter *adapter)
    {
        /* ... */
        for (vector = 0; vector < adapter->num_q_vectors; vector++) {
            struct ixgbe_q_vector *q_vector = adapter->q_vector[vector];
            struct msix_entry *entry = &adapter->msix_entries[vector];

            err = request_irq(entry->vector, &ixgbe_msix_clean_rings, 0,
                              q_vector->name, q_vector);
        }
        /* ... */
    }

Enabling interrupts

    int igb_up(struct igb_adapter *adapter)
    {
        struct e1000_hw *hw = &adapter->hw;
        int i;

        /* hardware has been reset, we need to reload some things */
        igb_configure(adapter);

        clear_bit(__IGB_DOWN, &adapter->state);

        for (i = 0; i < adapter->num_q_vectors; i++)
            napi_enable(&(adapter->q_vector[i]->napi));

        if (adapter->msix_entries)
            igb_configure_msix(adapter);
        else
            igb_assign_vector(adapter->q_vector[0], 0);

        /* Clear any pending interrupts. */
        rd32(E1000_ICR);
        igb_irq_enable(adapter);

        /* notify VFs that reset has been completed */
        if (adapter->vfs_allocated_count) {
            u32 reg_data = rd32(E1000_CTRL_EXT);

            reg_data |= E1000_CTRL_EXT_PFRSTD;
            wr32(E1000_CTRL_EXT, reg_data);
        }

        netif_tx_start_all_queues(adapter->netdev);

        /* start the watchdog. */
        hw->mac.get_link_status = 1;
        schedule_work(&adapter->watchdog_task);

        return 0;
    }

    static void igb_irq_enable(struct igb_adapter *adapter)
    {
        struct e1000_hw *hw = &adapter->hw;

        if (adapter->msix_entries) {
            u32 ims = E1000_IMS_LSC | E1000_IMS_DOUTSYNC | E1000_IMS_DRSTA;
            u32 regval = rd32(E1000_EIAC);

            wr32(E1000_EIAC, regval | adapter->eims_enable_mask);
            regval = rd32(E1000_EIAM);
            wr32(E1000_EIAM, regval | adapter->eims_enable_mask);
            wr32(E1000_EIMS, adapter->eims_enable_mask);
            if (adapter->vfs_allocated_count) {
                wr32(E1000_MBVFIMR, 0xFF);
                ims |= E1000_IMS_VMMB;
            }
            wr32(E1000_IMS, ims);
        } else {
            wr32(E1000_IMS, IMS_ENABLE_MASK | E1000_IMS_DRSTA);
            wr32(E1000_IAM, IMS_ENABLE_MASK | E1000_IMS_DRSTA);
        }
    }

At this point almost all of the preparation is done. The only thing left is to enable hardware interrupts and wait for packets to arrive. How interrupts are enabled is hardware specific; the igb driver does it in __igb_open by calling the helper igb_irq_enable.

Interrupts are enabled by writing registers:

    static void igb_irq_enable(struct igb_adapter *adapter)
    {
        /* ... */
        wr32(E1000_IMS, IMS_ENABLE_MASK | E1000_IMS_DRSTA);
        wr32(E1000_IAM, IMS_ENABLE_MASK | E1000_IMS_DRSTA);
        /* ... */
    }

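The NIC is now up. The driver may still do some extra work, such as starting timers or work queues, or other hardware-specific setup. Once that is done, the NIC can receive packets.

Disabling interrupts in an ordinary kernel driver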
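The register writes above rely on the Intel NICs exposing the interrupt mask through separate "set" and "clear" ports rather than one read-modify-write register. The following toy model sketches that behaviour, assuming the usual semantics (writing a 1 to the set port enables a cause, writing a 1 to the clear port disables it, and 0 bits are ignored). `struct nic_model`, `write_ims` and `write_imc` are made-up names, not driver APIs.

```c
#include <assert.h>
#include <stdint.h>

/* Toy model: one mask word in the device, reached through two write ports. */
struct nic_model {
    uint32_t mask;               /* bit set = interrupt cause enabled */
};

/* "Mask set" port: 1 bits enable causes, 0 bits leave the mask untouched. */
static void write_ims(struct nic_model *nic, uint32_t val)
{
    assert(nic);
    nic->mask |= val;
}

/* "Mask clear" port: 1 bits disable causes, 0 bits leave the mask untouched. */
static void write_imc(struct nic_model *nic, uint32_t val)
{
    assert(nic);
    nic->mask &= ~val;
}
```

Because 0 bits are ignored on both ports, a driver can enable or disable a single cause with one write and no prior read, which is why the kernel code writes a plain bit mask to the set or clear register.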

    /* disable interrupts on this vector only */
    ixgbe_irq_disable_queues(adapter, ((u64)1 << q_vector->v_idx));
    napi_schedule(&q_vector->napi);    /* schedule NAPI polling */

    static inline void ixgbe_irq_disable_queues(struct ixgbe_adapter *adapter,
                                                u64 qmask)
    {
        u32 mask;
        struct ixgbe_hw *hw = &adapter->hw;

        switch (hw->mac.type) {
        case ixgbe_mac_82598EB:
            mask = (IXGBE_EIMS_RTX_QUEUE & qmask);
            IXGBE_WRITE_REG(hw, IXGBE_EIMC, mask);
            break;
        case ixgbe_mac_82599EB:
        case ixgbe_mac_X540:
        case ixgbe_mac_X550:
        case ixgbe_mac_X550EM_x:
        case ixgbe_mac_x550em_a:
            mask = (qmask & 0xFFFFFFFF);
            if (mask)
                IXGBE_WRITE_REG(hw, IXGBE_EIMC_EX(0), mask);
            mask = (qmask >> 32);
            if (mask)
                IXGBE_WRITE_REG(hw, IXGBE_EIMC_EX(1), mask);
            break;
        default:
            break;
        }
        /* skip the flush */
    }
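The 82599-and-later branch above splits a 64-bit per-vector mask across two 32-bit clear registers and skips a register whose half is empty. A standalone sketch of just that arithmetic follows; `struct eimc_writes`, `vector_to_qmask` and `split_qmask` are hypothetical names that record what would be written instead of touching hardware.

```c
#include <assert.h>
#include <stdint.h>

/* Records the values that would go to EIMC_EX(0) and EIMC_EX(1). */
struct eimc_writes {
    uint32_t ex0, ex1;
    int wrote_ex0, wrote_ex1;    /* 1 if the register would be written */
};

/* Same expression the driver uses: one bit per vector index. */
static uint64_t vector_to_qmask(unsigned v_idx)
{
    assert(v_idx < 64);
    return (uint64_t)1 << v_idx;
}

/* Split the 64-bit mask; an all-zero half produces no write at all. */
static struct eimc_writes split_qmask(uint64_t qmask)
{
    struct eimc_writes w = {0, 0, 0, 0};
    uint32_t mask;

    mask = (uint32_t)(qmask & 0xFFFFFFFFu);
    if (mask) {
        w.ex0 = mask;
        w.wrote_ex0 = 1;
    }
    mask = (uint32_t)(qmask >> 32);
    if (mask) {
        w.ex1 = mask;
        w.wrote_ex1 = 1;
    }
    return w;
}
```

For vector 35, only the upper register is written, with bit 3 set; vectors 0 to 31 touch only the lower register.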

https://blog.csdn.net/yhb1047818384/article/details/106676560

ixgbe_set_ivar_map

    ixgbe_dev_start()
        /* configure msix for sleep until rx interrupt */
        ixgbe_configure_msix(dev);

    static int vfio_msi_enable(struct vfio_pci_device *vdev, int nvec, bool msix)
    {
        struct pci_dev *pdev = vdev->pdev;
        unsigned int flag = msix ? PCI_IRQ_MSIX : PCI_IRQ_MSI;
        int ret;

        if (!is_irq_none(vdev))
            return -EINVAL;

        vdev->ctx = kcalloc(nvec, sizeof(struct vfio_pci_irq_ctx), GFP_KERNEL);
        if (!vdev->ctx)
            return -ENOMEM;

        /* return the number of supported vectors if we can't get all: */
        ret = pci_alloc_irq_vectors(pdev, 1, nvec, flag);
        if (ret < nvec) {
            if (ret > 0)
                pci_free_irq_vectors(pdev);
            kfree(vdev->ctx);
            return ret;
        }

        vdev->num_ctx = nvec;
        vdev->irq_type = msix ? VFIO_PCI_MSIX_IRQ_INDEX :
                                VFIO_PCI_MSI_IRQ_INDEX;

        if (!msix) {
            /*
             * Compute the virtual hardware field for max msi vectors -
             * it is the log base 2 of the number of vectors.
             */
            vdev->msi_qmax = fls(nvec * 2 - 1) - 1;
        }

        return 0;
    }
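The `msi_qmax` expression at the end of `vfio_msi_enable` is a rounded-up log base 2 of the vector count. A small user-space sketch makes the arithmetic easy to check; `fls_u32` here is a portable stand-in for the kernel's `fls()` (1-based index of the highest set bit, 0 for input 0), and the 1..32 range check reflects the MSI limit of 32 vectors.

```c
#include <assert.h>
#include <stdint.h>

/* Portable stand-in for the kernel's fls(): position of the highest set bit. */
static int fls_u32(uint32_t x)
{
    int pos = 0;

    while (x) {
        pos++;
        x >>= 1;
    }
    return pos;
}

/* Same expression as in vfio_msi_enable(): ceil(log2(nvec)). */
static int msi_qmax(uint32_t nvec)
{
    assert(nvec >= 1 && nvec <= 32);
    return fls_u32(nvec * 2 - 1) - 1;
}
```

So 1 vector gives 0, 2 gives 1, 3 and 4 both give 2, and 32 gives 5, matching the power-of-two encoding MSI uses for its vector count field.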

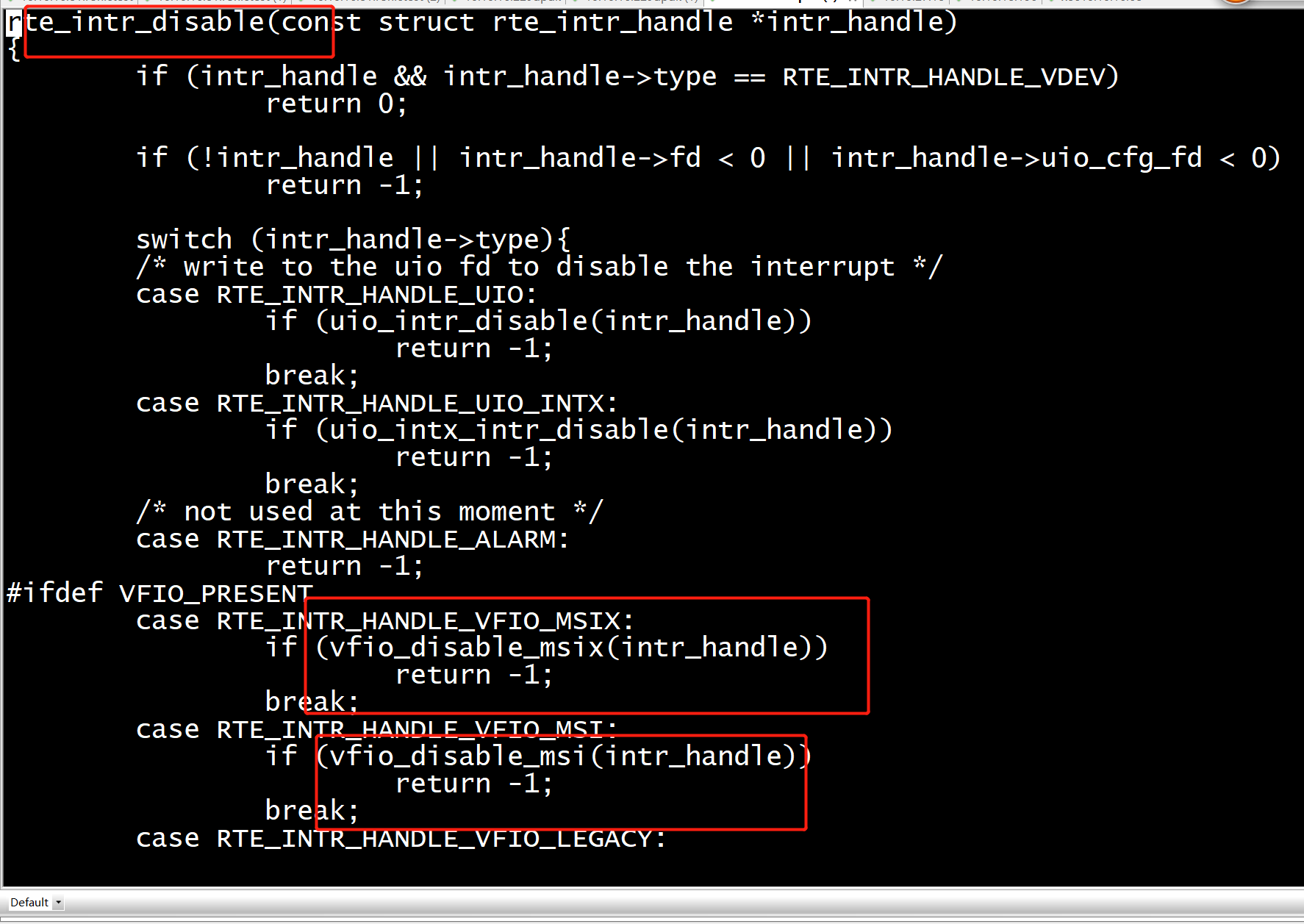

Disabling interrupts in DPDK

    static void
    ixgbe_disable_intr(struct ixgbe_hw *hw)
    {
        PMD_INIT_FUNC_TRACE();

        if (hw->mac.type == ixgbe_mac_82598EB) {
            IXGBE_WRITE_REG(hw, IXGBE_EIMC, ~0);
        } else {
            IXGBE_WRITE_REG(hw, IXGBE_EIMC, 0xFFFF0000);
            IXGBE_WRITE_REG(hw, IXGBE_EIMC_EX(0), ~0);
            IXGBE_WRITE_REG(hw, IXGBE_EIMC_EX(1), ~0);
        }
        IXGBE_WRITE_FLUSH(hw);
    }

    [root@localhost ixgbe]# python
    Python 2.7.5 (default, Oct 31 2018, 18:48:32)
    [GCC 4.8.5 20150623 (Red Hat 4.8.5-36)] on linux2
    Type "help", "copyright", "credits" or "license" for more information.
    >>> ~0
    -1
    >>> 0xFF&~0
    255
    >>> 0xF&~0
    15
    >>>
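The Python session shows that `~0` is "all ones", and that the register width decides how many of those ones survive. The same check in C, as a sketch: `write_all_ones` is a hypothetical helper that models a register of a given width receiving `~0`.

```c
#include <assert.h>
#include <stdint.h>

/* Value a `width`-bit register (1..32 bits) would hold after writing ~0. */
static uint32_t write_all_ones(unsigned width)
{
    assert(width >= 1 && width <= 32);

    uint32_t value = (uint32_t)~0;   /* int -1 converts to 0xFFFFFFFF */
    uint32_t keep  = (width == 32) ? 0xFFFFFFFFu : ((1u << width) - 1u);

    return value & keep;
}
```

For the 32-bit EIMC registers this means `IXGBE_WRITE_REG(hw, IXGBE_EIMC, ~0)` writes a 1 to every bit position, that is, it asks the hardware to mask every cause in that register.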

    static int
    ixgbe_dev_rx_queue_intr_disable(struct rte_eth_dev *dev, uint16_t queue_id)
    {
        uint32_t mask;
        struct ixgbe_hw *hw =
            IXGBE_DEV_PRIVATE_TO_HW(dev->data->dev_private);
        struct ixgbe_interrupt *intr =
            IXGBE_DEV_PRIVATE_TO_INTR(dev->data->dev_private);

        if (queue_id < 16) {
            ixgbe_disable_intr(hw);
            intr->mask &= ~(1 << queue_id);    /* a 0 bit means disabled */
            ixgbe_enable_intr(dev);
        } else if (queue_id < 32) {
            mask = IXGBE_READ_REG(hw, IXGBE_EIMS_EX(0));
            mask &= ~(1 << queue_id);
            IXGBE_WRITE_REG(hw, IXGBE_EIMS_EX(0), mask);
        } else if (queue_id < 64) {
            mask = IXGBE_READ_REG(hw, IXGBE_EIMS_EX(1));
            mask &= ~(1 << (queue_id - 32));
            IXGBE_WRITE_REG(hw, IXGBE_EIMS_EX(1), mask);
        }

        return 0;
    }

    +static int
    +ixgbe_dev_rx_queue_intr_enable(struct rte_eth_dev *dev, uint16_t queue_id)
    +{
    +	u32 mask;
    +	struct ixgbe_hw *hw =
    +		IXGBE_DEV_PRIVATE_TO_HW(dev->data->dev_private);
    +	struct ixgbe_interrupt *intr =
    +		IXGBE_DEV_PRIVATE_TO_INTR(dev->data->dev_private);
    +
    +	if (queue_id < 16) {
    +		ixgbe_disable_intr(hw);
    +		intr->mask |= (1 << queue_id);
    +		ixgbe_enable_intr(dev);
    +	} else if (queue_id < 32) {
    +		mask = IXGBE_READ_REG(hw, IXGBE_EIMS_EX(0));
    +		mask &= (1 << queue_id);
    +		IXGBE_WRITE_REG(hw, IXGBE_EIMS_EX(0), mask);
    +	} else if (queue_id < 64) {
    +		mask = IXGBE_READ_REG(hw, IXGBE_EIMS_EX(1));
    +		mask &= (1 << (queue_id - 32));
    +		IXGBE_WRITE_REG(hw, IXGBE_EIMS_EX(1), mask);	/* a 1 bit means enabled */
    +	}
    +
    +	return 0;
    +}

    >>> 1 << 2
    4
    >>> ~(1 << 2)
    -5
    >>>

    ixgbe_ethdev.c:289:static int ixgbe_dev_rx_queue_intr_disable(struct rte_eth_dev *dev,
    ixgbe_ethdev.c:556:	.rx_queue_intr_disable = ixgbe_dev_rx_queue_intr_disable,
    ixgbe_ethdev.c:5891:ixgbe_dev_rx_queue_intr_disable(struct rte_eth_dev *dev, uint16_t queue_id)
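The two Python expressions correspond to the bit operations the DPDK functions apply to the software copy of the mask (`intr->mask`) for queues 0 to 15. A sketch of the same operations in C, using unsigned arithmetic so that shifting into bit 31 stays well defined; the helper names are made up for this example.

```c
#include <assert.h>
#include <stdint.h>

/* Bit that represents queue q in a 32-bit interrupt mask. */
static uint32_t queue_bit(unsigned q)
{
    assert(q < 32);
    return 1u << q;
}

/* Set the queue's bit: 1 means the queue interrupt is enabled. */
static uint32_t mask_enable_queue(uint32_t mask, unsigned q)
{
    return mask | queue_bit(q);
}

/* Clear the queue's bit: 0 means the queue interrupt is disabled. */
static uint32_t mask_disable_queue(uint32_t mask, unsigned q)
{
    return mask & ~queue_bit(q);
}
```

Python prints `~(1 << 2)` as -5 because its integers are unbounded and signed; in a 32-bit register the same bit pattern is `0xFFFFFFFB`, that is, every bit set except bit 2.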

    int
    rte_eth_dev_rx_intr_disable(uint16_t port_id, uint16_t queue_id)
    {
        struct rte_eth_dev *dev;

        RTE_ETH_VALID_PORTID_OR_ERR_RET(port_id, -ENODEV);

        dev = &rte_eth_devices[port_id];

        RTE_FUNC_PTR_OR_ERR_RET(*dev->dev_ops->rx_queue_intr_disable, -ENOTSUP);
        return eth_err(port_id,
                       (*dev->dev_ops->rx_queue_intr_disable)(dev, queue_id));
    }

DPDK NIC initialization: setting up interrupts
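`rte_eth_dev_rx_intr_disable` is a thin dispatcher: validate the port, look up the device, and call the PMD's callback if it provides one. The pattern can be sketched without DPDK as below. Every `toy_` name is hypothetical; only the control flow and the `-ENODEV` / `-ENOTSUP` return codes mirror the function above.

```c
#include <assert.h>
#include <errno.h>
#include <stddef.h>
#include <stdint.h>

struct toy_dev;

/* Per-driver callback table, standing in for dev_ops. */
struct toy_dev_ops {
    int (*rx_queue_intr_disable)(struct toy_dev *dev, uint16_t queue_id);
};

struct toy_dev {
    const struct toy_dev_ops *ops;
    uint32_t intr_mask;          /* software copy of the queue mask */
};

#define TOY_MAX_PORTS 4
static struct toy_dev toy_devices[TOY_MAX_PORTS];

/* Generic entry point: validate, then dispatch to the driver callback. */
static int toy_rx_intr_disable(uint16_t port_id, uint16_t queue_id)
{
    struct toy_dev *dev;

    if (port_id >= TOY_MAX_PORTS)
        return -ENODEV;
    dev = &toy_devices[port_id];
    if (dev->ops == NULL || dev->ops->rx_queue_intr_disable == NULL)
        return -ENOTSUP;
    return dev->ops->rx_queue_intr_disable(dev, queue_id);
}

/* Example driver callback: clear the queue's bit in the mask. */
static int toy_ixgbe_intr_disable(struct toy_dev *dev, uint16_t queue_id)
{
    assert(queue_id < 32);
    dev->intr_mask &= ~(1u << queue_id);
    return 0;
}

static const struct toy_dev_ops toy_ixgbe_ops = {
    .rx_queue_intr_disable = toy_ixgbe_intr_disable,
};
```

The grep output earlier shows the real counterpart of `toy_ixgbe_ops`: the ixgbe PMD registers `ixgbe_dev_rx_queue_intr_disable` in its ops table under `.rx_queue_intr_disable`.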

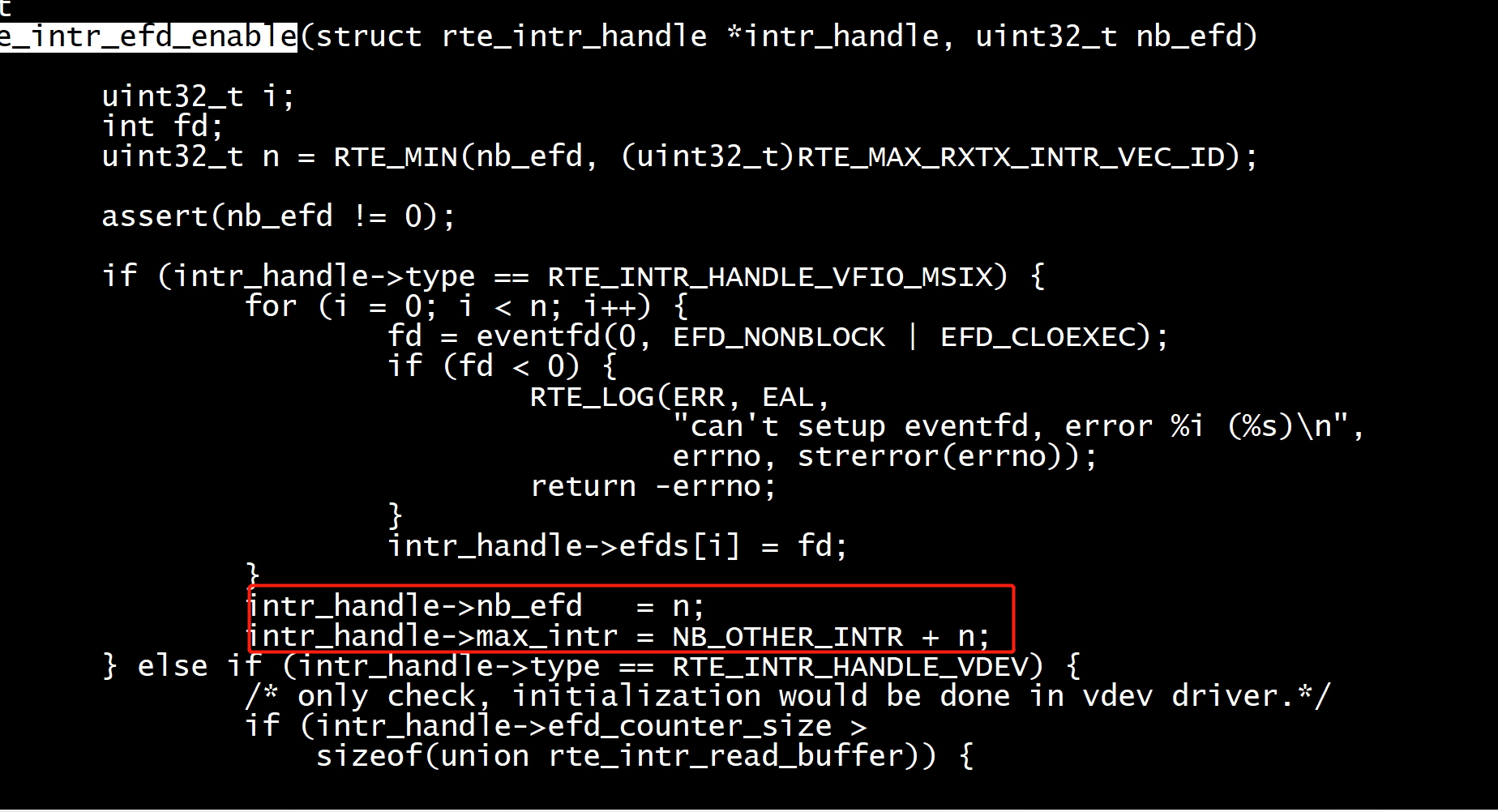

    /*
     * Configure device link speed and setup link.
     * It returns 0 on success.
     */
    static int
    ixgbe_dev_start(struct rte_eth_dev *dev)
    {
        struct ixgbe_hw *hw =
            IXGBE_DEV_PRIVATE_TO_HW(dev->data->dev_private);
        struct ixgbe_vf_info *vfinfo =
            *IXGBE_DEV_PRIVATE_TO_P_VFDATA(dev->data->dev_private);
        struct rte_pci_device *pci_dev = RTE_ETH_DEV_TO_PCI(dev);
        struct rte_intr_handle *intr_handle = &pci_dev->intr_handle;
        uint32_t intr_vector = 0;
        /* ... */

        /* disable uio/vfio intr/eventfd mapping */
        rte_intr_disable(intr_handle);

        /* check and configure queue intr-vector mapping */
        if ((rte_intr_cap_multiple(intr_handle) ||
             !RTE_ETH_DEV_SRIOV(dev).active) &&
            dev->data->dev_conf.intr_conf.rxq != 0) {    /* rx interrupt requested in the port config */
            intr_vector = dev->data->nb_rx_queues;
            if (intr_vector > IXGBE_MAX_INTR_QUEUE_NUM) {
                PMD_INIT_LOG(ERR, "At most %d intr queues supported",
                             IXGBE_MAX_INTR_QUEUE_NUM);
                return -ENOTSUP;
            }
            if (rte_intr_efd_enable(intr_handle, intr_vector))
                return -1;
        }

        if (rte_intr_dp_is_en(intr_handle) && !intr_handle->intr_vec) {
            intr_handle->intr_vec =
                rte_zmalloc("intr_vec",
                            dev->data->nb_rx_queues * sizeof(int), 0);
            if (intr_handle->intr_vec == NULL) {
                PMD_INIT_LOG(ERR, "Failed to allocate %d rx_queues"
                             " intr_vec", dev->data->nb_rx_queues);
                return -ENOMEM;
            }
        }

        /* configure msix for sleep until rx interrupt */
        ixgbe_configure_msix(dev);

        /* initialize transmission unit */
        ixgbe_dev_tx_init(dev);

        /* This can fail when allocating mbufs for descriptor rings */
        err = ixgbe_dev_rx_init(dev);
        if (err) {
            PMD_INIT_LOG(ERR, "Unable to initialize RX hardware");
            goto error;
        }
        /* ... */
    }

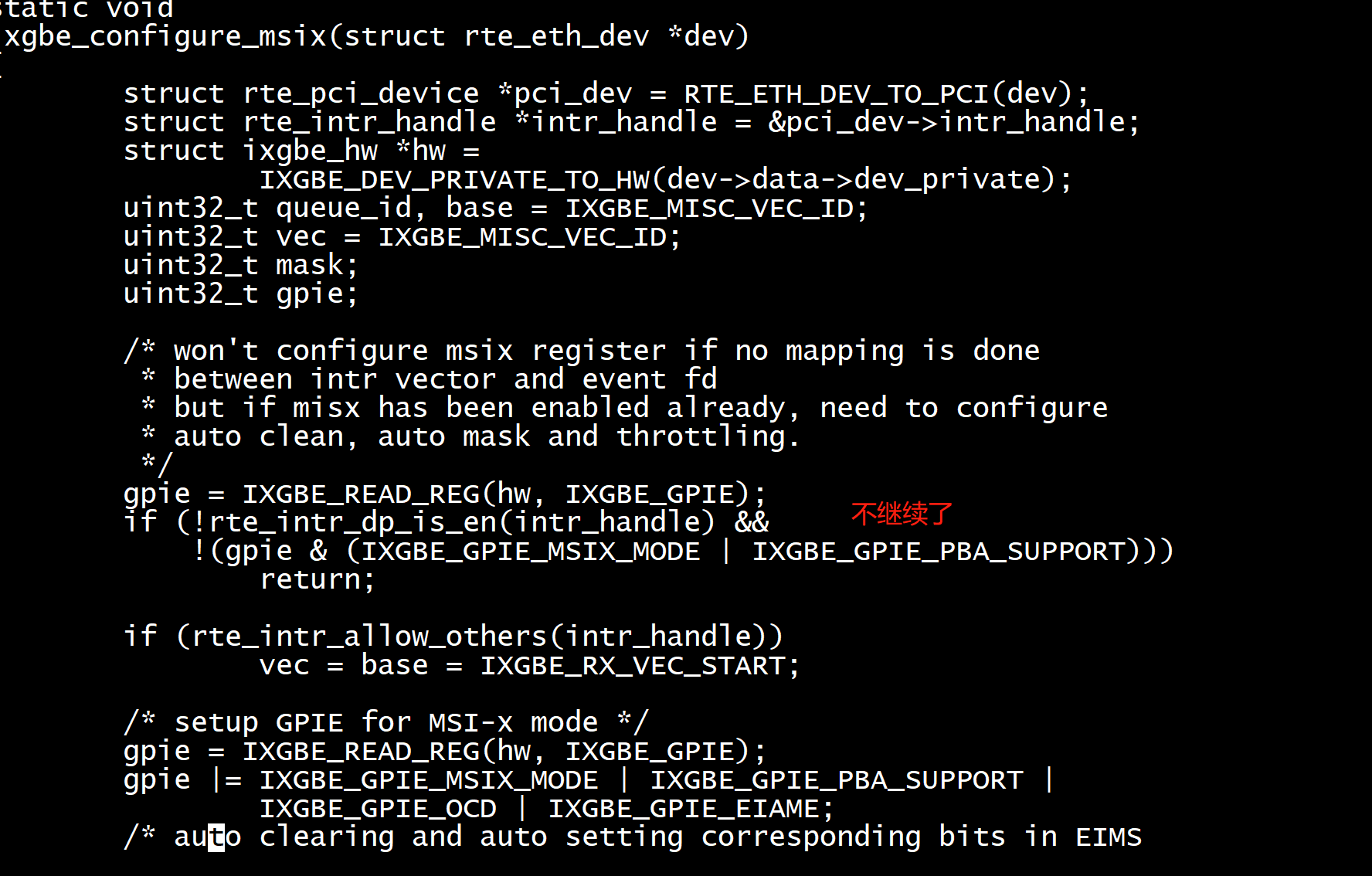

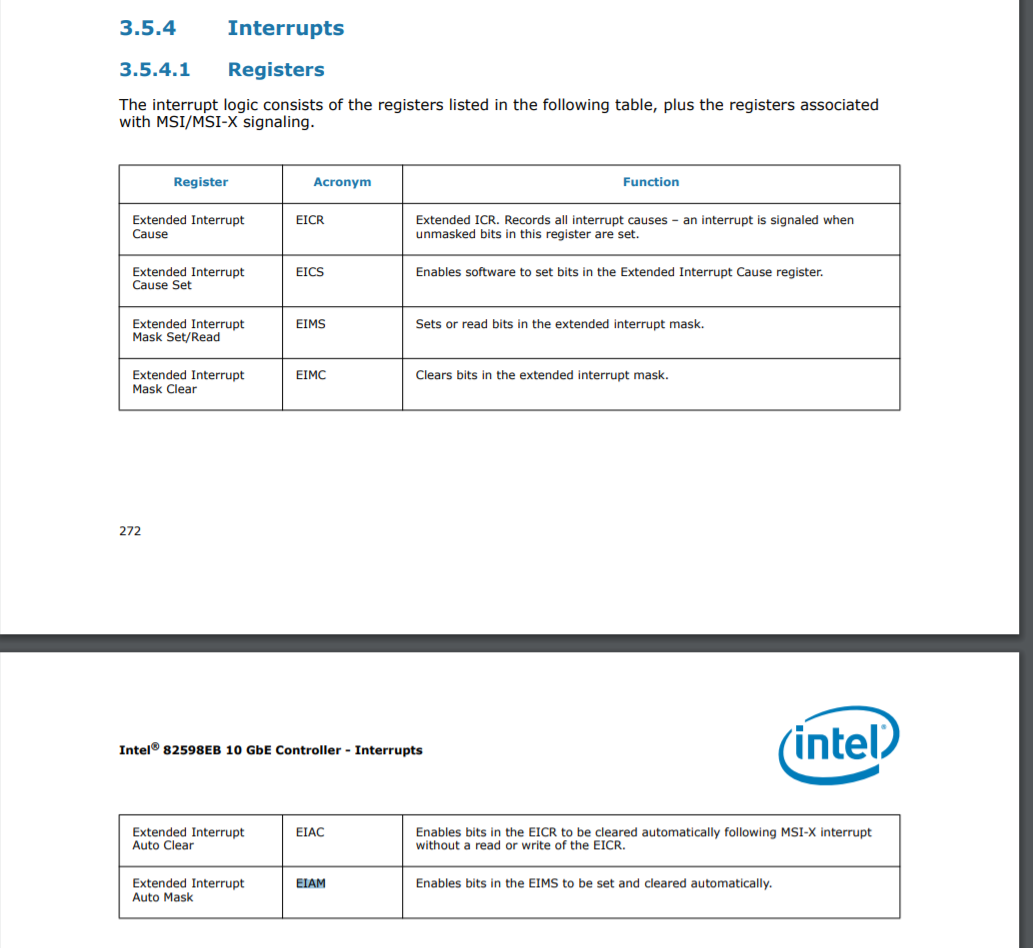

    /**
     * Sets up the hardware to properly generate MSI-X interrupts
     * @hw
     *  board private structure
     */
    static void
    ixgbe_configure_msix(struct rte_eth_dev *dev)
    {
        struct rte_pci_device *pci_dev = RTE_ETH_DEV_TO_PCI(dev);
        struct rte_intr_handle *intr_handle = &pci_dev->intr_handle;
        struct ixgbe_hw *hw =
            IXGBE_DEV_PRIVATE_TO_HW(dev->data->dev_private);
        uint32_t queue_id, base = IXGBE_MISC_VEC_ID;
        uint32_t vec = IXGBE_MISC_VEC_ID;
        uint32_t mask;
        uint32_t gpie;

        /* won't configure msix register if no mapping is done
         * between intr vector and event fd
         * but if msix has been enabled already, need to configure
         * auto clean, auto mask and throttling.
         */
        gpie = IXGBE_READ_REG(hw, IXGBE_GPIE);
        if (!rte_intr_dp_is_en(intr_handle) &&
            !(gpie & (IXGBE_GPIE_MSIX_MODE | IXGBE_GPIE_PBA_SUPPORT)))
            return;

        if (rte_intr_allow_others(intr_handle))
            vec = base = IXGBE_RX_VEC_START;

        /* setup GPIE for MSI-x mode */
        gpie = IXGBE_READ_REG(hw, IXGBE_GPIE);
        gpie |= IXGBE_GPIE_MSIX_MODE | IXGBE_GPIE_PBA_SUPPORT |
                IXGBE_GPIE_OCD | IXGBE_GPIE_EIAME;
        /* auto clearing and auto setting corresponding bits in EIMS
         * when MSI-X interrupt is triggered
         */
        if (hw->mac.type == ixgbe_mac_82598EB) {
            IXGBE_WRITE_REG(hw, IXGBE_EIAM, IXGBE_EICS_RTX_QUEUE);
        } else {
            IXGBE_WRITE_REG(hw, IXGBE_EIAM_EX(0), 0xFFFFFFFF);
            IXGBE_WRITE_REG(hw, IXGBE_EIAM_EX(1), 0xFFFFFFFF);
        }
        IXGBE_WRITE_REG(hw, IXGBE_GPIE, gpie);

        /* Populate the IVAR table and set the ITR values to the
         * corresponding register.
         */
        if (rte_intr_dp_is_en(intr_handle)) {
            for (queue_id = 0; queue_id < dev->data->nb_rx_queues;
                 queue_id++) {
                /* by default, 1:1 mapping */
                ixgbe_set_ivar_map(hw, 0, queue_id, vec);
                intr_handle->intr_vec[queue_id] = vec;
                if (vec < base + intr_handle->nb_efd - 1)
                    vec++;
            }

            switch (hw->mac.type) {
            case ixgbe_mac_82598EB:
                ixgbe_set_ivar_map(hw, -1,
                                   IXGBE_IVAR_OTHER_CAUSES_INDEX,
                                   IXGBE_MISC_VEC_ID);
                break;
            case ixgbe_mac_82599EB:
            case ixgbe_mac_X540:
            case ixgbe_mac_X550:
            case ixgbe_mac_X550EM_x:
                ixgbe_set_ivar_map(hw, -1, 1, IXGBE_MISC_VEC_ID);
                break;
            default:
                break;
            }
        }
        IXGBE_WRITE_REG(hw, IXGBE_EITR(IXGBE_MISC_VEC_ID),
                        IXGBE_EITR_INTERVAL_US(IXGBE_QUEUE_ITR_INTERVAL_DEFAULT)
                        | IXGBE_EITR_CNT_WDIS);

        /* set up to autoclear timer, and the vectors */
        /* note: only a subset of the causes is turned on here */
        mask = IXGBE_EIMS_ENABLE_MASK;
        mask &= ~(IXGBE_EIMS_OTHER |
                  IXGBE_EIMS_MAILBOX |
                  IXGBE_EIMS_LSC);

        IXGBE_WRITE_REG(hw, IXGBE_EIAC, mask);
    }
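The queue-to-vector loop in `ixgbe_configure_msix` maps queues 1:1 to vectors until it runs out of event fds, after which all remaining queues share the last vector. A standalone sketch of that loop follows. The constants `TOY_MISC_VEC_ID = 0` and `TOY_RX_VEC_START = 1` are assumptions standing in for `IXGBE_MISC_VEC_ID` and `IXGBE_RX_VEC_START`, and `map_queues_to_vectors` is a hypothetical name.

```c
#include <assert.h>
#include <stdint.h>

#define TOY_MISC_VEC_ID   0u     /* assumed: vector used for non-queue causes */
#define TOY_RX_VEC_START  1u     /* assumed: first vector used for rx queues */

/* Fill intr_vec[q] with the vector assigned to rx queue q. */
static void map_queues_to_vectors(unsigned nb_rx_queues, unsigned nb_efd,
                                  int allow_others, uint32_t *intr_vec)
{
    uint32_t base = allow_others ? TOY_RX_VEC_START : TOY_MISC_VEC_ID;
    uint32_t vec = base;
    unsigned q;

    assert(intr_vec && nb_efd >= 1);

    for (q = 0; q < nb_rx_queues; q++) {
        intr_vec[q] = vec;                  /* by default, 1:1 mapping */
        if (vec < base + nb_efd - 1)        /* stop at the last event fd */
            vec++;
    }
}
```

With 4 queues and only 2 event fds, for example, the queues get vectors 1, 2, 2, 2: the first queue has its own vector and the other three share one.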

Note that ixgbe_configure_msix writes the IXGBE_EIAC register, not the IXGBE_EIMS_EX registers.
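To see which bits that final EIAC write actually carries, the mask can be worked out by hand. The sketch below redefines the relevant bits locally; the numeric values are as I recall them from `ixgbe_type.h` and should be treated as assumptions to verify against the header. `eiac_autoclear_mask` is a hypothetical helper that repeats the two lines from the driver.

```c
#include <assert.h>
#include <stdint.h>

/* Assumed bit values (check against ixgbe_type.h before relying on them). */
#define EICR_RTX_QUEUE  0x0000FFFFu   /* RTx queue interrupts */
#define EICR_MAILBOX    0x00080000u   /* VF to PF mailbox */
#define EICR_LSC        0x00100000u   /* link status change */
#define EICR_TCP_TIMER  0x40000000u   /* TCP timer */
#define EICR_OTHER      0x80000000u   /* "other cause" summary bit */

#define EIMS_ENABLE_MASK \
    (EICR_RTX_QUEUE | EICR_LSC | EICR_TCP_TIMER | EICR_OTHER)

/* Same computation as the tail of ixgbe_configure_msix(). */
static uint32_t eiac_autoclear_mask(uint32_t enable_mask)
{
    assert(enable_mask != 0);
    return enable_mask & ~(EICR_OTHER | EICR_MAILBOX | EICR_LSC);
}
```

Under these assumed values the result is `0x4000FFFF`: the 16 queue bits plus the TCP timer are auto-cleared, while link-status, mailbox and "other" causes are left for software to acknowledge.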

https://pdf.ic37.com/INTEL_CN/82598EB_datasheet_13491422/82598EB_289.html


    #define IXGBE_EIMS_OTHER        IXGBE_EICR_OTHER     /* INT Cause Active */

    /* Extended Interrupt Mask Clear */
    #define IXGBE_EIMC_RTX_QUEUE    IXGBE_EICR_RTX_QUEUE /* RTx Queue Interrupt */
    #define IXGBE_EIMC_FLOW_DIR     IXGBE_EICR_FLOW_DIR  /* FDir Exception */
    #define IXGBE_EIMC_RX_MISS      IXGBE_EICR_RX_MISS   /* Packet Buffer Overrun */
    #define IXGBE_EIMC_PCI          IXGBE_EICR_PCI       /* PCI Exception */
    #define IXGBE_EIMC_MAILBOX      IXGBE_EICR_MAILBOX   /* VF to PF Mailbox Int */
    #define IXGBE_EIMC_LSC          IXGBE_EICR_LSC       /* Link Status Change */
    #define IXGBE_EIMC_MNG          IXGBE_EICR_MNG       /* MNG Event Interrupt */
    #define IXGBE_EIMC_TIMESYNC     IXGBE_EICR_TIMESYNC  /* Timesync Event */
    #define IXGBE_EIMC_GPI_SDP0     IXGBE_EICR_GPI_SDP0  /* SDP0 Gen Purpose Int */
    #define IXGBE_EIMC_GPI_SDP1     IXGBE_EICR_GPI_SDP1  /* SDP1 Gen Purpose Int */
    #define IXGBE_EIMC_GPI_SDP2     IXGBE_EICR_GPI_SDP2  /* SDP2 Gen Purpose Int */
    #define IXGBE_EIMC_ECC          IXGBE_EICR_ECC       /* ECC Error */
    #define IXGBE_EIMC_GPI_SDP0_BY_MAC(_hw) IXGBE_EICR_GPI_SDP0_BY_MAC(_hw)
    #define IXGBE_EIMC_GPI_SDP1_BY_MAC(_hw) IXGBE_EICR_GPI_SDP1_BY_MAC(_hw)
    #define IXGBE_EIMC_GPI_SDP2_BY_MAC(_hw) IXGBE_EICR_GPI_SDP2_BY_MAC(_hw)
    #define IXGBE_EIMC_PBUR         IXGBE_EICR_PBUR      /* Pkt Buf Handler Err */
    #define IXGBE_EIMC_DHER         IXGBE_EICR_DHER      /* Desc Handler Err */
    #define IXGBE_EIMC_TCP_TIMER    IXGBE_EICR_TCP_TIMER /* TCP Timer */
    #define IXGBE_EIMC_OTHER        IXGBE_EICR_OTHER     /* INT Cause Active */

    #define IXGBE_EIMS_ENABLE_MASK ( \
                    IXGBE_EIMS_RTX_QUEUE | \
                    IXGBE_EIMS_LSC | \
                    IXGBE_EIMS_TCP_TIMER | \
                    IXGBE_EIMS_OTHER)

