rte_memseg

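The memseg array tracks physical memory. As described above, struct hugepage records, for every physical hugepage, the virtual address at which that page is mapped in the process. The memseg array groups hugepages that are contiguous in both physical and virtual address space, sit on the same socket, and have the same page size into a single memseg structure. The benefit is more efficient memory management, because each such run of pages can be handled as one contiguous region instead of page by page.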

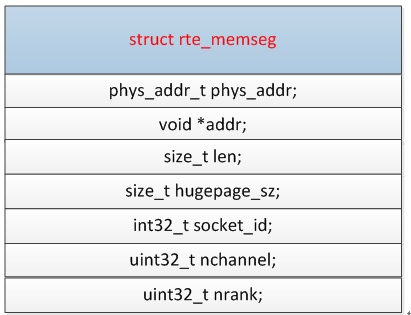

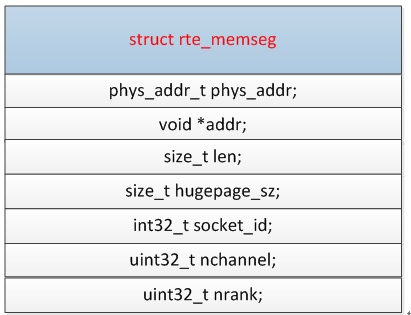

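The rte_memseg structure itself is simple:

1) phys_addr: the starting physical address of the hugepages covered by this memseg;

2) addr: the starting virtual address of those hugepages;

3) len: the total size of the memory covered by this memseg;

4) hugepage_sz: the size of these pages, e.g. 2 MB or 1 GB.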
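
The grouping rule described above can be sketched in a few lines of C. This is only an illustration under stated assumptions: `struct page`, `struct seg`, `extends` and `build_segs` are hypothetical names invented for this example and are not the DPDK definitions. The input pages are assumed to be sorted by address already.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical, simplified stand-ins; NOT the real DPDK structures. */
struct page {
    uint64_t phys_addr; /* physical address of the page */
    uint64_t virt_addr; /* virtual address the page is mapped at */
    uint64_t page_sz;   /* page size in bytes */
    int socket_id;      /* NUMA node the page belongs to */
};

struct seg {
    uint64_t phys_addr; /* physical address of the first page */
    uint64_t virt_addr; /* virtual address of the first page */
    uint64_t len;       /* total bytes covered by the segment */
    uint64_t page_sz;   /* size of each page in the segment */
    int socket_id;      /* NUMA node shared by all pages */
};

/* Nonzero if page p starts exactly where segment s ends (both physically
 * and virtually) and has the same socket and page size. */
static int extends(const struct seg *s, const struct page *p)
{
    return p->socket_id == s->socket_id &&
           p->page_sz == s->page_sz &&
           p->phys_addr == s->phys_addr + s->len &&
           p->virt_addr == s->virt_addr + s->len;
}

/* Coalesce n pages (assumed sorted by address) into segments.
 * out must have room for n entries; returns the number of segments. */
static size_t build_segs(const struct page *pages, size_t n, struct seg *out)
{
    size_t n_segs = 0;

    assert(pages != NULL && out != NULL);
    for (size_t i = 0; i < n; i++) {
        if (n_segs > 0 && extends(&out[n_segs - 1], &pages[i])) {
            /* the page continues the current segment: just grow it */
            out[n_segs - 1].len += pages[i].page_sz;
            continue;
        }
        /* otherwise the page opens a new segment */
        out[n_segs].phys_addr = pages[i].phys_addr;
        out[n_segs].virt_addr = pages[i].virt_addr;
        out[n_segs].len = pages[i].page_sz;
        out[n_segs].page_sz = pages[i].page_sz;
        out[n_segs].socket_id = pages[i].socket_id;
        n_segs++;
    }
    return n_segs;
}
```

With three 2 MB pages where only the first two are physically adjacent, `build_segs` would produce two segments, the first one 4 MB long.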

rte_eal_malloc_heap_init

rte_memseg_contig_walk walks the runs of contiguous memsegs in the memseg lists, and the malloc_add_seg callback hands each such region of memory over to the heap for management:

rte_memseg_contig_walk(malloc_add_seg, NULL);

malloc_add_seg:

malloc_heap_add_memory(heap, found_msl, ms->addr, len);
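
To make the walk-plus-callback pattern concrete, here is a minimal stand-alone sketch. It does not use the DPDK API: `struct mini_seg`, `struct mini_heap`, `contig_walk` and `add_region` are hypothetical names, an `addr` of 0 is assumed to mark an unused slot, and the heap pointer is passed through `arg` for simplicity (the call in the source passes NULL instead).

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical page-sized segment; addr == 0 marks an unused slot. */
struct mini_seg {
    uint64_t addr; /* virtual address of the segment */
    uint64_t len;  /* length in bytes */
};

/* Hypothetical heap that only counts what was added to it. */
struct mini_heap {
    uint64_t total_size;
    unsigned int n_regions;
};

/* Callback type: first segment of a contiguous run plus the run length. */
typedef int (*contig_cb)(const struct mini_seg *first, uint64_t len, void *arg);

/* Merge adjacent used slots whose virtual addresses are contiguous and
 * call cb once per run. A nonzero return from cb stops the walk. */
static int contig_walk(const struct mini_seg *segs, size_t n,
                       contig_cb cb, void *arg)
{
    size_t i = 0;

    while (i < n) {
        if (segs[i].addr == 0) { /* skip unused slots */
            i++;
            continue;
        }
        size_t start = i;
        uint64_t total = segs[i].len;

        i++;
        while (i < n && segs[i].addr != 0 &&
               segs[i].addr == segs[start].addr + total) {
            total += segs[i].len;
            i++;
        }
        int ret = cb(&segs[start], total, arg);
        if (ret != 0)
            return ret;
    }
    return 0;
}

/* Plays the role of the "add this region to the heap" callback. */
static int add_region(const struct mini_seg *first, uint64_t len, void *arg)
{
    struct mini_heap *heap = arg;

    assert(first != NULL && len > 0);
    heap->total_size += len;
    heap->n_regions++;
    return 0;
}
```

The point of the design is that the heap receives a few large regions rather than one entry per page, which matches the motivation given for memsegs earlier.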

void *rte_malloc_socket(const char *type, size_t size, unsigned align, int socket_arg)

{

struct rte_mem_config *mcfg = rte_eal_get_configuration()->mem_config;

int socket, i;

void *ret;

/* Return NULL if the requested size is 0 or the alignment is not a power of two */

if (size == 0 || (align && !rte_is_power_of_2(align)))

return NULL;

/* If hugepages are not in use, force the requested NUMA node to SOCKET_ID_ANY */

if (!rte_eal_has_hugepages())

socket_arg = SOCKET_ID_ANY;

/* If the requested NUMA node is SOCKET_ID_ANY, use the node the current thread is running on */

if (socket_arg == SOCKET_ID_ANY)

socket = malloc_get_numa_socket();

else

socket = socket_arg;

/* Check socket parameter */

if (socket >= RTE_MAX_NUMA_NODES)

return NULL;

/* Allocate the requested size from the malloc_heap that belongs to the chosen NUMA node */

ret = malloc_heap_alloc(&mcfg->malloc_heaps[socket], type, size, 0, align == 0 ? 1 : align, 0);

if (ret != NULL || socket_arg != SOCKET_ID_ANY)

return ret;

/* If allocation on the current NUMA node failed, try the other NUMA nodes */

for (i = 0; i < RTE_MAX_NUMA_NODES; i++) {

/* we already tried this one */

if (i == socket)

continue;

ret = malloc_heap_alloc(&mcfg->malloc_heaps[i], type, size, 0, align == 0 ? 1 : align, 0);

if (ret != NULL)

return ret;

}

return NULL;

}
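
The node-selection and fallback policy of the function above can be isolated in a small, self-contained sketch. Everything here is an assumption made for illustration: `N_NODES`, `NODE_ANY`, `struct node_heap`, `heap_take` and `alloc_on_node` are hypothetical, each heap is reduced to a free-byte counter, and the current thread's node is passed in as a parameter instead of being queried.

```c
#include <assert.h>
#include <stddef.h>

#define N_NODES 4     /* hypothetical number of NUMA nodes */
#define NODE_ANY (-1) /* stands in for SOCKET_ID_ANY */

/* Hypothetical per-node heap that only tracks free bytes. */
struct node_heap {
    size_t free_bytes;
};

static int is_power_of_2(size_t x)
{
    return x != 0 && (x & (x - 1)) == 0;
}

/* Take size bytes from the heap; returns 1 on success, 0 on failure. */
static int heap_take(struct node_heap *h, size_t size)
{
    assert(h != NULL);
    if (h->free_bytes < size)
        return 0;
    h->free_bytes -= size;
    return 1;
}

/* Returns the index of the node that satisfied the request, or -1. */
static int alloc_on_node(struct node_heap heaps[N_NODES], size_t size,
                         size_t align, int node_arg, int current_node)
{
    /* reject zero-sized requests and non-power-of-two alignments */
    if (size == 0 || (align && !is_power_of_2(align)))
        return -1;

    int node = (node_arg == NODE_ANY) ? current_node : node_arg;
    if (node < 0 || node >= N_NODES)
        return -1;

    if (heap_take(&heaps[node], size))
        return node;

    /* an explicitly requested node is never silently replaced */
    if (node_arg != NODE_ANY)
        return -1;

    /* caller accepted any node: try every other node in turn */
    for (int i = 0; i < N_NODES; i++) {
        if (i == node)
            continue;
        if (heap_take(&heaps[i], size))
            return i;
    }
    return -1;
}
```

The key design choice mirrored here is that the fallback loop only runs when the caller passed the "any node" value; a caller that names a specific node gets a failure rather than memory from a remote node.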

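The following gdb backtrace, captured during rte_eal_init in an SPDK perf run, shows how an rte_calloc call travels down through the malloc heap to alloc_seg when the heap has to be expanded with new pages:

#0 0x00005555555a4211 in alloc_seg (ms=0x20000002e000, addr=0x200000200000, socket_id=0, hi=0x5555558831d8 <internal_config+248>, list_idx=0, seg_idx=0) at /spdk/dpdk/lib/librte_eal/linuxapp/eal/eal_memalloc.c:722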

#1 0x00005555555a4a41 in alloc_seg_walk (msl=0x555555805f9c <early_mem_config+124>, arg=0x7fffffffbdc0) at /spdk/dpdk/lib/librte_eal/linuxapp/eal/eal_memalloc.c:926

#2 0x00005555555ae930 in rte_memseg_list_walk_thread_unsafe (func=0x5555555a47d1 <alloc_seg_walk>, arg=0x7fffffffbdc0) at /spdk/dpdk/lib/librte_eal/common/eal_common_memory.c:658

#3 0x00005555555a4fa3 in eal_memalloc_alloc_seg_bulk (ms=0x55555588ec40, n_segs=1, page_sz=2097152, socket=0, exact=true) at /spdk/dpdk/lib/librte_eal/linuxapp/eal/eal_memalloc.c:1086

#4 0x00005555555c28c6 in alloc_pages_on_heap (heap=0x55555580879c <early_mem_config+10364>, pg_sz=2097152, elt_size=16384, socket=0, flags=0, align=64, bound=0, contig=false, ms=0x55555588ec40, n_segs=1) at /spdk/dpdk/lib/librte_eal/common/malloc_heap.c:307

#5 0x00005555555c2b1a in try_expand_heap_primary (heap=0x55555580879c <early_mem_config+10364>, pg_sz=2097152, elt_size=16384, socket=0, flags=0, align=64, bound=0, contig=false) at /spdk/dpdk/lib/librte_eal/common/malloc_heap.c:403

#6 0x00005555555c2d7a in try_expand_heap (heap=0x55555580879c <early_mem_config+10364>, pg_sz=2097152, elt_size=16384, socket=0, flags=0, align=64, bound=0, contig=false) at /spdk/dpdk/lib/librte_eal/common/malloc_heap.c:494

#7 0x00005555555c32e7 in alloc_more_mem_on_socket (heap=0x55555580879c <early_mem_config+10364>, size=16384, socket=0, flags=0, align=64, bound=0, contig=false) at /spdk/dpdk/lib/librte_eal/common/malloc_heap.c:622

#8 0x00005555555c3474 in malloc_heap_alloc_on_heap_id (type=0x5555555e94e5 "rte_services", size=16384, heap_id=0, flags=0, align=64, bound=0, contig=false) at /spdk/dpdk/lib/librte_eal/common/malloc_heap.c:676

#9 0x00005555555c35a4 in malloc_heap_alloc (type=0x5555555e94e5 "rte_services", size=16384, socket_arg=-1, flags=0, align=64, bound=0, contig=false) at /spdk/dpdk/lib/librte_eal/common/malloc_heap.c:714

#10 0x00005555555be9a7 in rte_malloc_socket (type=0x5555555e94e5 "rte_services", size=16384, align=64, socket_arg=-1) at /spdk/dpdk/lib/librte_eal/common/rte_malloc.c:58

#11 0x00005555555bea06 in rte_zmalloc_socket (type=0x5555555e94e5 "rte_services", size=16384, align=64, socket=-1) at /spdk/dpdk/lib/librte_eal/common/rte_malloc.c:77

#12 0x00005555555bea33 in rte_zmalloc (type=0x5555555e94e5 "rte_services", size=16384, align=64) at /spdk/dpdk/lib/librte_eal/common/rte_malloc.c:86

#13 0x00005555555beaa5 in rte_calloc (type=0x5555555e94e5 "rte_services", num=64, size=256, align=64) at /spdk/dpdk/lib/librte_eal/common/rte_malloc.c:104

#14 0x00005555555c684a in rte_service_init () at /spdk/dpdk/lib/librte_eal/common/rte_service.c:82

#15 0x0000555555597677 in rte_eal_init (argc=5, argv=0x55555588ebb0) at /spdk/dpdk/lib/librte_eal/linuxapp/eal/eal.c:1070

#16 0x0000555555595226 in spdk_env_init (opts=0x7fffffffcbe0) at init.c:397

#17 0x000055555555f074 in main (argc=11, argv=0x7fffffffcd18) at perf.c:1743

static int

alloc_seg_walk(const struct rte_memseg_list *msl, void *arg)

{

struct rte_mem_config *mcfg = rte_eal_get_configuration()->mem_config;

struct alloc_walk_param *wa = arg;

struct rte_memseg_list *cur_msl;

size_t page_sz;

int cur_idx, start_idx, j, dir_fd = -1;

unsigned int msl_idx, need, i;

if (msl->page_sz != wa->page_sz)

return 0;

if (msl->socket_id != wa->socket)

return 0;

page_sz = (size_t)msl->page_sz;

msl_idx = msl - mcfg->memsegs;

cur_msl = &mcfg->memsegs[msl_idx];

need = wa->n_segs;

/* try finding space in memseg list */

if (wa->exact) {

/* if we require exact number of pages in a list, find them */

cur_idx = rte_fbarray_find_next_n_free(&cur_msl->memseg_arr, 0,

need);

if (cur_idx < 0)

return 0;

start_idx = cur_idx;

} else {

int cur_len;

/* we don't require exact number of pages, so we're going to go

* for best-effort allocation. that means finding the biggest

* unused block, and going with that.

*/

cur_idx = rte_fbarray_find_biggest_free(&cur_msl->memseg_arr,

0);

if (cur_idx < 0)

return 0;

start_idx = cur_idx;

/* adjust the size to possibly be smaller than original

* request, but do not allow it to be bigger.

*/

cur_len = rte_fbarray_find_contig_free(&cur_msl->memseg_arr,

cur_idx);

need = RTE_MIN(need, (unsigned int)cur_len);

}

/* do not allow any page allocations during the time we're allocating,

* because file creation and locking operations are not atomic,

* and we might be the first or the last ones to use a particular page,

* so we need to ensure atomicity of every operation.

*

* during init, we already hold a write lock, so don't try to take out

* another one.

*/

if (wa->hi->lock_descriptor == -1 && !internal_config.in_memory) {

dir_fd = open(wa->hi->hugedir, O_RDONLY);

if (dir_fd < 0) {

RTE_LOG(ERR, EAL, "%s(): Cannot open '%s': %s\n",

__func__, wa->hi->hugedir, strerror(errno));

return -1;

}

/* blocking writelock */

if (flock(dir_fd, LOCK_EX)) {

RTE_LOG(ERR, EAL, "%s(): Cannot lock '%s': %s\n",

__func__, wa->hi->hugedir, strerror(errno));

close(dir_fd);

return -1;

}

}

for (i = 0; i < need; i++, cur_idx++) {

struct rte_memseg *cur;

void *map_addr;

cur = rte_fbarray_get(&cur_msl->memseg_arr, cur_idx);

map_addr = RTE_PTR_ADD(cur_msl->base_va,

cur_idx * page_sz);

//#define RTE_PTR_ADD(ptr, x) ((void*)((uintptr_t)(ptr) + (x)))

if (alloc_seg(cur, map_addr, wa->socket, wa->hi,

msl_idx, cur_idx)) {

RTE_LOG(DEBUG, EAL, "attempted to allocate %i segments, but only %i were allocated\n",

need, i);

/* if exact number wasn't requested, stop */

if (!wa->exact)

goto out;

/* clean up */

for (j = start_idx; j < cur_idx; j++) {

struct rte_memseg *tmp;

struct rte_fbarray *arr =

&cur_msl->memseg_arr;

tmp = rte_fbarray_get(arr, j);

rte_fbarray_set_free(arr, j);

/* free_seg may attempt to create a file, which

* may fail.

*/

if (free_seg(tmp, wa->hi, msl_idx, j))

RTE_LOG(DEBUG, EAL, "Cannot free page\n");

}

/* clear the list */

if (wa->ms)

memset(wa->ms, 0, sizeof(*wa->ms) * wa->n_segs);

if (dir_fd >= 0)

close(dir_fd);

return -1;

}

if (wa->ms)

wa->ms[i] = cur;

rte_fbarray_set_used(&cur_msl->memseg_arr, cur_idx);

}

out:

wa->segs_allocated = i;

if (i > 0)

cur_msl->version++;

if (dir_fd >= 0)

close(dir_fd);

/* if we didn't allocate any segments, move on to the next list */

return i > 0;

}
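
The two search modes used by alloc_seg_walk (an exact run of free slots versus the biggest free run) and the address computation for a slot can be sketched without DPDK. The helpers below are simplified stand-ins written for this note, not the rte_fbarray implementation: occupancy is kept in a plain byte array where nonzero means used, and `find_next_n_free`, `find_biggest_free` and `slot_addr` are hypothetical names.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Index of the first run of n consecutive free slots, or -1 if none. */
static int find_next_n_free(const unsigned char *used, size_t len, size_t n)
{
    size_t run = 0;

    if (n == 0)
        return -1;
    for (size_t i = 0; i < len; i++) {
        run = used[i] ? 0 : run + 1;
        if (run == n)
            return (int)(i + 1 - n);
    }
    return -1;
}

/* Start index of the longest free run (first one wins on ties), or -1.
 * The run length is stored in *out_len. */
static int find_biggest_free(const unsigned char *used, size_t len,
                             size_t *out_len)
{
    int best = -1;
    size_t best_len = 0, run = 0;

    assert(out_len != NULL);
    for (size_t i = 0; i < len; i++) {
        run = used[i] ? 0 : run + 1;
        if (run > best_len) {
            best_len = run;
            best = (int)(i + 1 - run);
        }
    }
    *out_len = best_len;
    return best;
}

/* Virtual address of slot idx: base address plus idx pages, the same
 * arithmetic as RTE_PTR_ADD(base_va, cur_idx * page_sz) in the source. */
static uint64_t slot_addr(uint64_t base_va, size_t idx, uint64_t page_sz)
{
    return base_va + (uint64_t)idx * page_sz;
}
```

In the exact case a missing run means the list is skipped; in the best-effort case the request is clamped to the length of the biggest run, which corresponds to the `need = RTE_MIN(need, cur_len)` step in the source.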

static int

alloc_more_mem_on_socket(struct malloc_heap *heap, size_t size, int socket,

unsigned int flags, size_t align, size_t bound, bool contig)

{

struct rte_mem_config *mcfg = rte_eal_get_configuration()->mem_config;

struct rte_memseg_list *requested_msls[RTE_MAX_MEMSEG_LISTS];

struct rte_memseg_list *other_msls[RTE_MAX_MEMSEG_LISTS];

uint64_t requested_pg_sz[RTE_MAX_MEMSEG_LISTS];

uint64_t other_pg_sz[RTE_MAX_MEMSEG_LISTS];

uint64_t prev_pg_sz;

int i, n_other_msls, n_other_pg_sz, n_requested_msls, n_requested_pg_sz;

bool size_hint = (flags & RTE_MEMZONE_SIZE_HINT_ONLY) > 0;

unsigned int size_flags = flags & ~RTE_MEMZONE_SIZE_HINT_ONLY;

void *ret;

memset(requested_msls, 0, sizeof(requested_msls));

memset(other_msls, 0, sizeof(other_msls));

memset(requested_pg_sz, 0, sizeof(requested_pg_sz));

memset(other_pg_sz, 0, sizeof(other_pg_sz));

/*

* go through memseg list and take note of all the page sizes available,

* and if any of them were specifically requested by the user.

*/

n_requested_msls = 0;

n_other_msls = 0;

for (i = 0; i < RTE_MAX_MEMSEG_LISTS; i++) {

struct rte_memseg_list *msl = &mcfg->memsegs[i];

if (msl->socket_id != socket)

continue;

if (msl->base_va == NULL)

continue;

/* if pages of specific size were requested */

if (size_flags != 0 && check_hugepage_sz(size_flags,

msl->page_sz))

requested_msls[n_requested_msls++] = msl;

else if (size_flags == 0 || size_hint)

other_msls[n_other_msls++] = msl;

}

/* sort the lists, smallest first */

qsort(requested_msls, n_requested_msls, sizeof(requested_msls[0]),

compare_pagesz);

qsort(other_msls, n_other_msls, sizeof(other_msls[0]),

compare_pagesz);

/* now, extract page sizes we are supposed to try */

prev_pg_sz = 0;

n_requested_pg_sz = 0;

for (i = 0; i < n_requested_msls; i++) {

uint64_t pg_sz = requested_msls[i]->page_sz;

if (prev_pg_sz != pg_sz) {

requested_pg_sz[n_requested_pg_sz++] = pg_sz;

prev_pg_sz = pg_sz;

}

}

prev_pg_sz = 0;

n_other_pg_sz = 0;

for (i = 0; i < n_other_msls; i++) {

uint64_t pg_sz = other_msls[i]->page_sz;

if (prev_pg_sz != pg_sz) {

other_pg_sz[n_other_pg_sz++] = pg_sz;

prev_pg_sz = pg_sz;

}

}

/* finally, try allocating memory of specified page sizes, starting from

* the smallest sizes

*/

for (i = 0; i < n_requested_pg_sz; i++) {

uint64_t pg_sz = requested_pg_sz[i];

/*

* do not pass the size hint here, as user expects other page

* sizes first, before resorting to best effort allocation.

*/

if (!try_expand_heap(heap, pg_sz, size, socket, size_flags,

align, bound, contig))

return 0;

}

if (n_other_pg_sz == 0)

return -1;

/* now, check if we can reserve anything with size hint */

ret = find_suitable_element(heap, size, flags, align, bound, contig);

if (ret != NULL)

return 0;

/*

* we still couldn't reserve memory, so try expanding heap with other

* page sizes, if there are any

*/

for (i = 0; i < n_other_pg_sz; i++) {

uint64_t pg_sz = other_pg_sz[i];

if (!try_expand_heap(heap, pg_sz, size, socket, flags,

align, bound, contig))

return 0;

}

return -1;

}
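
The "sort, then extract distinct page sizes, smallest first" step in the function above can be shown in isolation. This is a sketch operating on a plain array of sizes rather than on memseg lists; `cmp_u64` and `unique_page_sizes` are hypothetical helpers, and, as in the source, 0 is assumed never to be a valid page size because it serves as the "no previous size" sentinel.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>

/* qsort comparator for ascending uint64_t values. */
static int cmp_u64(const void *a, const void *b)
{
    uint64_t x = *(const uint64_t *)a;
    uint64_t y = *(const uint64_t *)b;

    return (x > y) - (x < y);
}

/* Sort sizes in place (smallest first) and copy each distinct value to
 * out, which must have room for n entries. Returns the distinct count. */
static size_t unique_page_sizes(uint64_t *sizes, size_t n, uint64_t *out)
{
    uint64_t prev = 0; /* 0 is assumed never to be a real page size */
    size_t n_out = 0;

    assert(sizes != NULL && out != NULL);
    qsort(sizes, n, sizeof(sizes[0]), cmp_u64);
    for (size_t i = 0; i < n; i++) {
        if (sizes[i] != prev) {
            out[n_out++] = sizes[i];
            prev = sizes[i];
        }
    }
    return n_out;
}
```

Trying the smallest page size first means a small request is less likely to consume a 1 GB page when a 2 MB page would do.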