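Reading notes on 《数据分析实战》 (Practical Data Analysis Cookbook) by Tomasz Drabas, Chapter 9: Natural language processing with NLTK (analyzing text, part-of-speech tagging, topic extraction, classifying text data)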

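Chapter 9 describes several techniques for analyzing streams of textual information: part-of-speech tagging, topic extraction, and classification of text data.

In this chapter, you will learn the following recipes:

·Reading raw text from the Web

·Tokenization and normalization

·Identifying parts of speech, handling n-grams, and recognizing named entities

·Identifying the topic of an article

·Identifying the structure of a sentence

·Classifying movies based on their reviews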

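9.1 Introduction

Modeling structured data collected in a controlled environment (as in the previous chapter) is relatively straightforward. In the real world, however, we rarely deal with structured data. This is especially true when we try to understand people's feedback or analyze newspaper articles.

NLP (Natural Language Processing) is a discipline that combines computer science, statistics, and linguistics. Its goal is to process human language (I deliberately avoided the word "understand") and to extract features that can be used for modeling. Using NLP concepts we can, among other tasks:

- find the most frequent words in a text to roughly identify its topic;
- recognize the names of people and places;
- find the subject and object of a sentence;
- analyze the sentiment of someone's feedback.

These recipes use two datasets. The first is an article by Josh Lederman from The Seattle Times website about Obama's push for background checks on more gun sales (http://www.seattletimes.com/nation-world/obama-starts-2016-with-a-fight-over-gun-control/, accessed January 4, 2016).

The second is a set of 50,000 movie reviews prepared by A. L. Maas et al.; the full dataset is available at http://ai.stanford.edu/~amaas/data/sentiment/. It was published in "Andrew L. Maas, Raymond E. Daly, Peter T. Pham, Dan Huang, Andrew Y. Ng, and Christopher Potts (2011), Learning Word Vectors for Sentiment Analysis, The 49th Annual Meeting of the Association for Computational Linguistics (ACL 2011)".

From these 50,000 reviews, we selected 2,000 positive and 2,000 negative reviews from each of the training and test batches.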

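9.2 Reading raw text from the Web

Most of the time, plain text can be found in text files. We will not show how to read those in this recipe, since we have already done so (see Chapter 1 of the book).

The next recipe discusses a way of reading files that we have not covered yet.

Often, however, we need to read raw text directly from the Web: we may want to analyze a blog post, an article, or a post on Facebook or Twitter. Facebook and Twitter provide APIs (Application Programming Interfaces) that usually return data in XML or JSON format. Processing HTML files is not that straightforward.

In this recipe, you will learn how to process a web page: read its content and extract the text.

Getting ready: You need urllib, html5lib, and Beautiful Soup.

Python 3 ships with urllib (https://docs.python.org/3/library/urllib.html). If Beautiful Soup is not part of your setup, it is easy to install.

In addition, to parse HTML files with Beautiful Soup, we need html5lib. Both can be installed with pip install beautifulsoup4 html5lib.

How to do it:

Accessing a website with urllib works slightly differently in Python 2.x and Python 3.x. In Python 3, the functionality of Python 2's urllib and urllib2 modules was reorganized into the urllib package: urllib.request, urllib.error, urllib.parse, and urllib.robotparser.

For more information, see https://docs.python.org/2/library/urllib2.html.

In this recipe, we use urllib.request (the nlp_read.py file):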

```python
import urllib.request as u
import bs4 as bs

# link to the article at The Seattle Times
st_url = 'http://www.seattletimes.com/nation-world/obama-starts-2016-with-a-fight-over-gun-control/'

# read the contents of the webpage
with u.urlopen(st_url) as response:
    html = response.read()

# using beautiful soup -- let's parse the content of the HTML
read = bs.BeautifulSoup(html, 'html5lib')

# find the article tag
article = read.find('article')

# find all the paragraphs of the article
all_ps = article.find_all('p')

# object to hold the body of text
body_of_text = []

# get the title
body_of_text.append(read.title.get_text())
print(read.title)

# put all the paragraphs to the body of text list
for p in all_ps:
    body_of_text.append(p.get_text())

# we don't need some of the parts at the bottom of the page
body_of_text = body_of_text[:24]

# let's see what we got
print('\n'.join(body_of_text))

# and save it to a file
with open('../../Data/Chapter09/ST_gunLaws.txt', 'w') as f:
    f.write('\n'.join(body_of_text))
```

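How it works: As always, we start by importing the modules we need; in this case, urllib and Beautiful Soup.

The link to the Seattle Times article is stored in the st_url object. The .urlopen(...) method of urllib.request opens that URL.

We use the with (...) as ... construct, which we are already familiar with, because it closes the connection as soon as we no longer need it. Alternatively, you could download the page to a local file and close the file yourself: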

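```python
import urllib.request

# st_url as defined in nlp_read.py above;
# urlretrieve() saves the page to a temporary file and returns a (filename, headers) tuple
local_filename, headers = urllib.request.urlretrieve(st_url)
html = open(local_filename)
content = html.read()
html.close()
```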

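The .read() method of the response object reads the entire content of the web page. Printed out, it looks like this (abbreviated, of course):

This is the raw content of the web page as plain text. It is not what we want to analyze.

Beautiful Soup to the rescue! The BeautifulSoup(...) constructor takes HTML or XML text as its first argument. The second argument specifies the parser to use.

For a list of all available parsers, see http://www.crummy.com/software/BeautifulSoup/bs4/doc/#specifying-the-parser-to-use.

After parsing, the content is (somewhat) more readable:

However, we do not use Beautiful Soup just to print the result to the screen. Internally, a BeautifulSoup object represents the document as a nested hierarchy of tags.

You can think of a BeautifulSoup object as a tree.

Given the output above, the good news is that you can find all the tags in the HTML/XML file. Newer (HTML5-compliant) web pages have some new tags that make it easier to lay out content on a page.

For all the new elements, see: http://www.w3.org/TR/html5-diff/#new-elements.

In our example, we first find and extract the article tag; this narrows the scope of our search:

Now we focus only on the content of the article and ignore the parts used to build the page. If you look at the web page yourself, you will see some familiar sentences: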

Obama moves to require background checks for more gun sales.

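Originally published January 4, 2016 at 12:50 am.

We are on the right track. Scrolling down a bit, we can see more familiar sentences from the article:

Clearly, the paragraphs of the article are enclosed in <p> tags, so we extract all of them with .find_all('p').

Then we add the title to the body_of_text list. We use the .get_text() method to extract the text between the opening and closing tags; otherwise, the content would include the tags themselves: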

/* <title>Obama moves to require background checks for more gun sales | The Seattle Times</title> */

We strip the tags from all the paragraphs in the same way. You will see the following on the screen (abbreviated):

/* Obama moves to require background checks for more gun sales | The Seattle Times Although Obama can't unilaterally change gun laws, the president is hoping that beefing up enforcement of existing laws can prevent at least some gun deaths in a country rife with them. WASHINGTON (AP) — President Barack Obama moved Monday to expand background checks to cover more firearms sold at gun shows, online and anywhere else, aiming to curb a scourge of gun violence despite unyielding opposition to new laws in Congress. Obama’s plan to broaden background checks forms the centerpiece of a broader package of gun control measures the president plans to take on his own in his final year in office. Although Obama can’t unilaterally change gun laws, the president is hoping that beefing up enforcement of existing laws can prevent at least some gun deaths in a country rife with them. Washington state voters last fall passed Initiative 594 that expanded background checks for gun buyers to include private sales and transfers, such as those conducted online or at gun shows. Gun-store owner moving out of Seattle because of new tax “This is not going to solve every violent crime in this country,” Obama said. Still, he added, “It will potentially save lives and spare families the pain of these extraordinary losses.” Under current law, only federally licensed gun dealers must conduct background checks on buyers, but many who sell guns at flea markets, on websites or in other informal settings don’t register as dealers. Gun control advocates say that loophole is exploited to skirt the background check requirement. Now, the Justice Department’s Bureau of Alcohol, Tobacco, Firearms and Explosives will issue updated guidance that says the government should deem anyone “in the business” of selling guns to be a dealer, regardless of where he or she sells the guns. 
To that end, the government will consider other factors, including how many guns a person sells and how frequently, and whether those guns are sold for a profit. The executive actions on gun control fall far short of what Obama and likeminded lawmakers attempted to accomplish with legislation in 2013, after a massacre at a Connecticut elementary school that shook the nation’s conscience. Even still, the more modest measures were sure to spark legal challenges from those who oppose any new impediments to buying guns. “We’re very comfortable that the president can legally take these actions,” said Attorney General Loretta Lynch. Obama’s announcement was hailed by Democratic lawmakers and gun control groups like the Brady Campaign to Prevent Gun Violence, which claimed Obama was making history with “bold and meaningful action” that would make all Americans safer. Hillary Clinton, at a rally in Iowa, said she was “so proud” of Obama but warned that the next president could easily undo his changes. “I won’t wipe it away,” Clinton said. But Republicans were quick to accuse Obama of gross overreach. Sen Bob Corker, R-Tenn., denounced Obama’s steps as “divisive and detrimental to real solutions.” “I will work with my colleagues to respond appropriately to ensure the Constitution is respected,” Corker said. Far from mandating background checks for all gun sales, the new guidance still exempts collectors and gun hobbyists, and the exact definition of who must register as a dealer and conduct background checks remains exceedingly vague. The administration did not issue a number for how many guns someone must sell to be considered a dealer, instead saying it planned to remind people that courts have deemed people to be dealers in some cases even if they only sell a handful of guns. And the background check provision rests in the murky realm of agency guidelines, which have less force than full-fledged federal regulations and can easily be rescinded. 
Many of the Republican presidential candidates running to succeed Obama have vowed to rip up his new gun restrictions upon taking office. In an attempt to prevent gun purchases from falling through the cracks, the FBI will hire 230 more examiners to process background checks, the White House said, an increase of about 50 percent. Many of the roughly 63,000 background check requests each day are processed within seconds. But if the system kicks back a request for further review, the government only has three days before federal law says the buyer can return and buy the gun without being cleared. That weak spot in the system came under scrutiny last summer when the FBI revealed that Dylann Roof, the accused gunman in the Charleston, S.C., church massacre, was improperly allowed to buy a gun because incomplete record-keeping and miscommunication among authorities delayed processing of his background check beyond the three-day limit. The White House also said it planned to ask Congress for $500 million to improve mental health care, and Obama issued a memorandum directing federal agencies to conduct or sponsor research into smart gun technology that reduces the risk of accidental gun discharges. The Obama administration also plans to complete a rule, already in the works, to close another loophole that allows trusts or corporations to purchase sawed-off shotguns, machine guns and similar weapons without background checks. Obama planned to announce the new measures at an event at the White House on Tuesday as he continued a weeklong push to promote the gun effort and push back on its critics. He met at the White House on Monday with Democratic lawmakers who have supported stricter gun control, and planned to take his argument to prime time Thursday with a televised town hall discussion. The initiative also promised to be prominent in Obama’s final State of the Union address next week. Whether the new steps will effectively prevent future gun deaths remained unclear. 
Philip Cook, a Duke University professor who researches gun violence and policy, said surveys of prisoners don’t show gun shows to be a major direct source of weapons used in violent crime. The attorney general, asked how many dealers would be newly forced to register, declined to give a number. “It’s just impossible to predict,” Lynch said. */

This looks more like something we can work with. Finally, we save the text to a file.
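The find('article'), find_all('p') and get_text() steps boil down to a small amount of logic. The rough sketch below shows it using the standard library's html.parser instead of Beautiful Soup. This is a swapped-in technique, not what nlp_read.py does. The ArticleParagraphs class and the tiny HTML string are made up for illustration. Real pages are far messier, which is why the recipe relies on Beautiful Soup with html5lib.

```python
from html.parser import HTMLParser


class ArticleParagraphs(HTMLParser):
    """Collect the text of every <p> nested inside an <article> element."""

    def __init__(self):
        super().__init__()
        self.in_article = 0   # depth of currently open <article> tags
        self.in_p = False     # are we inside a <p> right now?
        self.paragraphs = []  # finished paragraph strings
        self._buffer = []     # text fragments of the current <p>

    def handle_starttag(self, tag, attrs):
        if tag == 'article':
            self.in_article += 1
        elif tag == 'p' and self.in_article:
            self.in_p = True
            self._buffer = []

    def handle_endtag(self, tag):
        if tag == 'article' and self.in_article:
            self.in_article -= 1
        elif tag == 'p' and self.in_p:
            self.in_p = False
            self.paragraphs.append(''.join(self._buffer).strip())

    def handle_data(self, data):
        # text inside nested tags such as <em> is kept; the tags themselves are dropped
        if self.in_p:
            self._buffer.append(data)


html = '''<html><head><title>Demo</title></head><body>
<nav><p>Menu</p></nav>
<article><h1>Headline</h1>
<p>First <em>paragraph</em>.</p>
<p>Second paragraph.</p>
</article></body></html>'''

parser = ArticleParagraphs()
parser.feed(html)
print(parser.paragraphs)  # ['First paragraph.', 'Second paragraph.']
```

The <p> inside <nav> is ignored because it is not inside <article>. This is the same narrowing effect that read.find('article') has in the recipe.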

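9.3 Tokenization and normalization

Extracting content from a page is only the first step. Before analyzing the article, we need to split it into sentences and then into words.

Then we face another problem: in any text, we will see different tenses, the passive voice, or uncommon grammatical constructions. To extract topics or analyze sentiment, we do not need to distinguish between said and says; say alone is enough. In other words, we also need to normalize the text, converting the different forms of a word into a common form.

Getting ready: You need NLTK (Natural Language Toolkit). Before you start, make sure the NLTK module is installed on your machine (for example, with pip install nltk):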

/* pip install nltk Looking in indexes: https://pypi.tuna.tsinghua.edu.cn/simple Collecting nltk Downloading https://pypi.tuna.tsinghua.edu.cn/packages/f6/1d/d925cfb4f324ede997f6d47bea4d9babba51b49e87a767c170b77005889d/nltk-3.4.5.zip (1.5MB) Requirement already satisfied: six in d:\tools\python37\lib\site-packages (from nltk) (1.12.0) Building wheels for collected packages: nltk Building wheel for nltk (setup.py): started Building wheel for nltk (setup.py): finished with status 'done' Created wheel for nltk: filename=nltk-3.4.5-cp37-none-any.whl size=1449914 sha256=68a8454c9bd939fc1fb5cb853896d16b4e3f5a099db051de73100ceb6d485d06 Stored in directory: C:\Users\tony zhang\AppData\Local\pip\Cache\wheels\bd\e1\05\dc3530d70790416315b9e4f889ec29e59130a01ac9ce6fab29 Successfully built nltk Installing collected packages: nltk Successfully installed nltk-3.4.5 FINISHED */

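However, this only ensures that NLTK itself is on your machine. To use it, we also need to download the data that some parts of the module rely on. Running the following program does that (the nlp_download.py file):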

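```python
import nltk
from nltk import data

# replace this path with the folder you downloaded the NLTK data to
data.path.append(r'D:\download\nltk_data')

# opens the NLTK downloader window
nltk.download()

# alternatively, download only the punkt sentence tokenizer models
# nltk.download('punkt')
```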

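Once the program starts, a downloader window opens. Click the Download button.

By default, the all collection is highlighted, and I suggest keeping it that way. The other options download only the parts needed to follow the NLTK book. The full download is about 900 MB.

The NLTK book is available at http://www.nltk.org/book/. If you are interested in NLP, the author strongly recommends reading it.

The download starts, and for a while you will be looking at a progress window. Downloading everything took 45 to 50 minutes, so be patient.

If the download fails, you can install only the punkt models: switch to the All Packages tab and select punkt.

If the download speed is unbearably slow, here is the workaround from Yaoyue (邀月, the author of these notes):

Clone the repository listed on https://github.com/nltk/nltk_data/tree/gh-pages: https://github.com/nltk/nltk_data.git

or download the nltk_data-gh-pages.zip archive from

https://codeload.github.com/nltk/nltk_data/zip/gh-pages.

After downloading, remember to change the path in your .py code to the download location, as shown in the code sample above.

How to do it: With everything in place, let's tokenize and normalize the text (the nlp_tokenize.py file):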

```python
import nltk

from nltk import data
data.path.append(r'D:\download\nltk_data')  # replace with the path you downloaded the NLTK data to

# read the text
guns_laws = '../../Data/Chapter09/ST_gunLaws.txt'

with open(guns_laws, 'r') as f:
    article = f.read()

# load NLTK modules
sentencer = nltk.data.load('tokenizers/punkt/english.pickle')
tokenizer = nltk.RegexpTokenizer(r'\w+')
stemmer = nltk.PorterStemmer()
lemmatizer = nltk.WordNetLemmatizer()

# split the text into sentences
sentences = sentencer.tokenize(article)

words = []
stemmed_words = []
lemmatized_words = []

# and for each sentence
for sentence in sentences:
    # split the sentence into words
    words.append(tokenizer.tokenize(sentence))

    # stem the words
    stemmed_words.append([stemmer.stem(word)
        for word in words[-1]])

    # and lemmatize them
    lemmatized_words.append([lemmatizer.lemmatize(word)
        for word in words[-1]])

# and save the results to files
file_words = '../../Data/Chapter09/ST_gunLaws_words.txt'
file_stems = '../../Data/Chapter09/ST_gunLaws_stems.txt'
file_lemmas = '../../Data/Chapter09/ST_gunLaws_lemmas.txt'

with open(file_words, 'w') as f:
    for w in words:
        for word in w:
            f.write(word + '\n')

with open(file_stems, 'w') as f:
    for w in stemmed_words:
        for word in w:
            f.write(word + '\n')

with open(file_lemmas, 'w') as f:
    for w in lemmatized_words:
        for word in w:
            f.write(word + '\n')
```

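How it works: First, we read the text from the ST_gunLaws.txt file. Our text file has almost no structure (apart from paragraphs separated by \n), so we can read it in one go; .read() does exactly that.

Next, we load all the NLTK modules we need. The sentencer object is a punkt sentence tokenizer, which uses unsupervised learning to find where sentences begin and end. A naive approach would be to look for periods. However, that approach cannot handle abbreviations inside a sentence, such as Dr., or names such as Michael D. Brown.

You can learn more about the punkt sentence tokenizer in the NLTK documentation: http://www.nltk.org/api/nltk.tokenize.html#module-nltk.tokenize.punkt.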
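The sketch below shows why a period-based rule is not enough, using only Python's re module. The sample sentence is made up. The pattern splits after every period followed by whitespace, so the abbreviation Dr. and the initial D. wrongly end sentences. These are exactly the cases punkt is trained to recognize.

```python
import re

text = "Dr. Smith met Michael D. Brown. They talked about guns."

# Naive rule: a sentence ends at a period that is followed by whitespace.
naive = re.split(r'(?<=\.)\s+', text)
print(naive)
# ['Dr.', 'Smith met Michael D.', 'Brown.', 'They talked about guns.']
```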

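In this recipe, we use a regular expression as the word tokenizer (so we do not have to deal with punctuation). .RegexpTokenizer(...) takes a regular expression as its argument. We are only interested in individual words, so we use '\w+'. The downside of this pattern is that it does not handle contractions correctly; for example, don't is tokenized as ['don', 't'].

For the regular expression syntax available in Python, see https://docs.python.org/3/library/re.html.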
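A quick way to check what '\w+' keeps is to run it through re.findall. By default, RegexpTokenizer(r'\w+') returns essentially the same non-overlapping matches. The sample sentence and the second, contraction-friendly pattern are illustrative assumptions, not part of the recipe. The second pattern also accepts the curly apostrophe (’) that appears in the scraped article.

```python
import re

sentence = "Obama can't change gun laws, he said."

# '\w+' keeps runs of letters/digits/underscore and drops everything else.
simple_tokens = re.findall(r'\w+', sentence)
print(simple_tokens)
# ['Obama', 'can', 't', 'change', 'gun', 'laws', 'he', 'said']

# Hypothetical alternative: allow one apostrophe-joined suffix per word.
contraction_tokens = re.findall(r"\w+(?:['’]\w+)?", sentence)
print(contraction_tokens)
# ['Obama', "can't", 'change', 'gun', 'laws', 'he', 'said']
```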

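The stemmer object removes word endings according to a specific algorithm (or set of rules). We use .PorterStemmer() to normalize words; for example, the stemmer removes ing from reading to get read. For some words, however, the stemmer is too aggressive. president, for instance, becomes presid, while its linguistic stem is preside, which the stemmer also reduces to presid.

The Porter Stemmer was ported from ANSI C. The stemmer was originally proposed by C. J. van Rijsbergen, S. E. Robertson, and M. F. Porter, and was developed as part of an IR project. M. F. Porter published the paper An algorithm for suffix stripping in the journal Program in 1980. If you are interested, see http://tartarus.org/~martin/PorterStemmer/.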
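Here is a minimal check of the behavior described above, using NLTK's PorterStemmer directly (it needs no downloaded data). The word list is chosen for illustration. The stems shown follow from the Porter suffix rules, and NLTK lowercases its output.

```python
from nltk.stem import PorterStemmer

stemmer = PorterStemmer()

words = ['reading', 'moves', 'checks', 'require', 'president', 'preside']
stems = {word: stemmer.stem(word) for word in words}

for word, stem in stems.items():
    print(word, '->', stem)
# reading -> read
# moves -> move
# checks -> check
# require -> requir
# president -> presid
# preside -> presid
```

Note that president and preside collapse to the same stem. This is the over-aggressive behavior the paragraph above warns about.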

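Lemmatization is related to stemming: it also aims to normalize text. For example, both are and is are normalized to be (when lemmatized as verbs). The difference is in how they work. Stemming (explained above) uses an algorithm to strip word endings, in an aggressive way. Lemmatization uses a large lexicon to find the linguistic base form of a word.

In this recipe, we use WordNet. For more, see http://www.nltk.org/api/nltk.stem.html#module-nltk.stem.wordnet.

With the required objects created, let's extract the sentences from the text. The punkt sentence tokenizer gives us a list of 35 sentences:

Next, we loop through all the sentences, tokenize each into words, and add the result to the words list. This list is actually a list of lists:

Note that the word can't was split into can and t, as expected with .RegexpTokenizer(...).

Now that the sentences are split into words, let's stem them.

A reminder: the words[-1] syntax selects the last element of the list (that is, the one just appended).

The stemmed list is:

You can see how crude stemming is: require became requir, and Seattle became Seattl. Still, moves and checks were correctly stemmed to move and check. Let's look at the list produced by lemmatization:

This time require is left intact, and Seattle is handled correctly. However, past-tense forms are not handled: we still see words such as moved and sold, which ideally should become move and sell. This is because WordNetLemmatizer.lemmatize(...) treats every word as a noun unless you pass a part of speech (for example, pos='v'). As the code stands, neither stemming nor lemmatization handles sold correctly.

Which processing your analysis needs is entirely up to you. Keep in mind that lemmatization is slower than stemming, because it has to look words up in a dictionary.

As the last step, we save all the words to files so that you can compare the two methods; there is not enough space to compare them here.

See also: You can see how others approach tokenization here: http://textminingonline.com/dive-into-nltk-part-ii-sentence-tokenize-and-word-tokenize.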

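9.4 Identifying parts of speech, handling n-grams, and recognizing named entities

You may want to know how to identify the part of speech of a word. To understand what a word means in a sentence, you first need to know whether it is a verb or a noun.

Parts of speech do not help much with bigrams (or, more generally, n-grams), though. These are groups of words that would not be understood correctly if analyzed separately. For example, consider an article about machine learning that contains the phrase neural networks. More specifically, suppose the article is about using neural networks to control packet scheduling and routing in a local network. In the same article, the words neural and networks on their own may mean something different from what they mean in the phrase.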
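Counting bigrams needs nothing more than a sliding window over the tokens. The sketch below is a plain-Python version. The helper name ngrams and the sample text are made up; NLTK itself ships nltk.ngrams and nltk.bigrams for the same purpose. The sketch shows that the pair ('neural', 'networks') can be counted as a single unit.

```python
from collections import Counter


def ngrams(tokens, n):
    """Return consecutive n-word tuples (a sliding window over the tokens)."""
    return list(zip(*(tokens[i:] for i in range(n))))


tokens = 'we train neural networks because neural networks route packets'.split()

bigrams = ngrams(tokens, 2)
print(bigrams[:3])  # [('we', 'train'), ('train', 'neural'), ('neural', 'networks')]

counts = Counter(bigrams)
print(counts[('neural', 'networks')])  # 2
```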

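Finally, when reading a political article about a recent summit, we may often come across the word President. It is more interesting to know how many times President Obama and President Putin appear in the text. We will show how to solve this problem in the recipes that follow.

Getting ready: You need NLTK and the regular expression module (re).

How to do it: With NLTK, part-of-speech tagging is simple. Recognizing named entities takes a bit more work (the nlp_pos.py file):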

```python
import nltk
from nltk import data
data.path.append(r'D:\download\nltk_data')  # replace with the path you downloaded the NLTK data to
import re

def preprocess_data(text):
    global sentences, tokenized
    tokenizer = nltk.RegexpTokenizer(r'\w+')

    sentences = nltk.sent_tokenize(text)
    tokenized = [tokenizer.tokenize(s) for s in sentences]

# import the data
guns_laws = '../../Data/Chapter09/ST_gunLaws.txt'

with open(guns_laws, 'r') as f:
    article = f.read()

# chunk into sentences and tokenize
sentences = []
tokenized = []
words = []

preprocess_data(article)

# part-of-speech tagging
tagged_sentences = [nltk.pos_tag(s) for s in tokenized]

# extract named entities -- naive approach
named_entities = []

for sentence in tagged_sentences:
    for word in sentence:
        if word[1] == 'NNP' or word[1] == 'NNPS':
            named_entities.append(word)

named_entities = list(set(named_entities))

print('Named entities -- simplistic approach:')
print(named_entities)

# extract names entities -- regular expressions approach
named_entities = []
tagged = []

pattern = 'ENT: {<DT>?(<NNP|NNPS>)+}'

# use regular expressions parser
tokenizer = nltk.RegexpParser(pattern)

for sent in tagged_sentences:
    tagged.append(tokenizer.parse(sent))

for sentence in tagged:
    for pos in sentence:
        if type(pos) == nltk.tree.Tree:
            named_entities.append(pos)

named_entities = list(set([tuple(e) for e in named_entities]))

print('\nNamed entities using regular expressions:')
print(named_entities)
```

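How it works: As usual, we start by importing the modules we need and reading in the text to analyze.

We prepared the preprocess_data(...) method to automate sentence and word tokenization. This time we do not load the punkt sentence tokenizer ourselves. Instead, we call NLTK's .sent_tokenize(...), which uses the punkt sentence tokenizer under the hood. For words, we still use the regular expression tokenizer.

With the text split into sentences and the sentences split into words, we can now get the part of speech of each word. The .pos_tag(...) method looks at the whole sentence (an ordered list of tokenized words) and returns a list of tuples. Each tuple holds the word itself and its part-of-speech tag.

Tagging words at the sentence level lets the tagger infer each tag from context. For example, the word moves has a different part of speech in each of the following two sentences: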

“He moves effortlessly.”

“His dancing moves are effortless.”

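In the first sentence it is a verb; in the second, it is a plural noun.

Let's look at the results:

The tagger labels each word as follows:

- Obama: singular proper noun (NNP);
- moves: verb, third-person singular present (VBZ);
- to: its own special tag (TO), used for the preposition or infinitive marker;
- require: verb, base form (VB);

and so on for the rest of the sentence.

For the list of part-of-speech tags, see: https://www.ling.upenn.edu/courses/Fall_2003/ling001/penn_treebank_pos.html.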

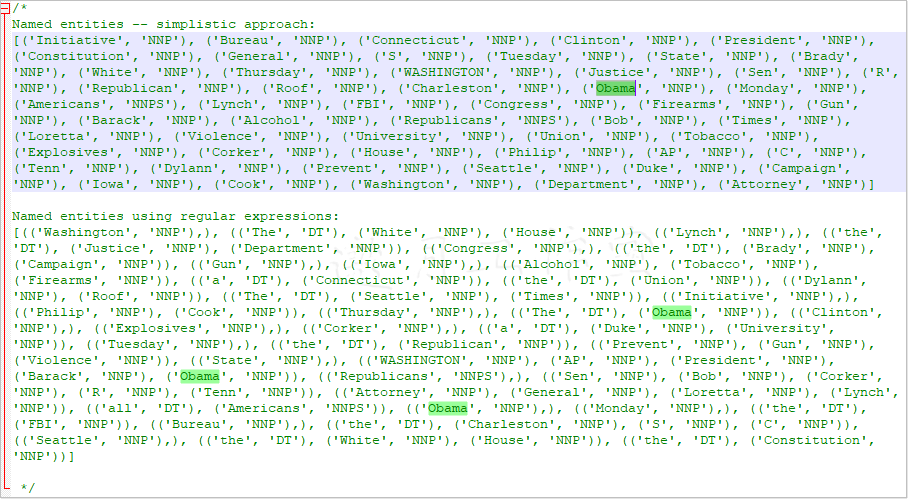

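Knowing the part of speech helps distinguish homonyms, and it also helps identify n-grams and named entities. Named entities usually start with a capital letter, so our first idea might be to list all the words tagged NNP and NNPS. Let's see what that gives us:

(See the combined screenshot above.)

For some words the results are good, especially for single-word entities such as Republicans or Thursday. However, we cannot tell whether Philip's last name is Lynch or Cook.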
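The following sketch reproduces the naive NNP/NNPS filter on a few tokens, so it runs without NLTK's tagger models. The tags are typed in by hand and are assumptions about what nltk.pos_tag would return; the sentences are shortened from the article. Each proper noun is collected on its own, so nothing records that Philip goes with Cook rather than with Lynch.

```python
# Two shortened sentences, tagged by hand in the (word, tag) format
# that nltk.pos_tag returns (the tags are assumptions).
tagged_sentences = [
    [('Philip', 'NNP'), ('Cook', 'NNP'), ('a', 'DT'), ('professor', 'NN'),
     ('said', 'VBD')],
    [('It', 'PRP'), ('is', 'VBZ'), ('impossible', 'JJ'), ('Lynch', 'NNP'),
     ('said', 'VBD')],
]

# Naive approach: keep every word tagged as a proper noun (deduplicated).
named_entities = sorted({word for sentence in tagged_sentences
                         for word, tag in sentence
                         if tag in ('NNP', 'NNPS')})
print(named_entities)  # ['Cook', 'Lynch', 'Philip']
```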

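To improve on this, we use NLTK's .RegexpParser(...) method. This method applies a regular expression to the part-of-speech tags rather than to the words themselves. We use the following pattern: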

pattern = 'ENT: {<DT>?(<NNP|NNPS>)+}'

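The pattern defined here marks (with the ENT label) entities made of one or more proper nouns, such as Thursday or Justice Department. The proper nouns can be:

- singular (NNP, such as the FBI) or plural (NNPS, such as Republicans);
- preceded by an optional determiner (DT, as in The White House) or not (as in Attorney General Loretta Lynch).

Extracting the named entities gives the following list (the order you see may differ):

(See the combined screenshot above.)

Now we know that Philip's last name is Cook. We can also recognize The Seattle Times, and we correctly tag Constitution and Justice Department. However, we still cannot cope with the Bureau of Alcohol, Tobacco, Firearms and Explosives, and we run into some Connecticut slang.

Apart from these shortcomings, I think the named entities are recognized quite well. We have a list we can work with and refine later.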
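Here is a small, self-contained run of the same ENT grammar. The input tokens are tagged by hand; the tags are assumptions rather than nltk.pos_tag output, so no models need to be downloaded. The sketch also shows one way (not the book's) of turning each ENT subtree back into a string with Tree.subtrees() and Tree.leaves().

```python
import nltk

# Hand-tagged tokens (assumed tags; nltk.pos_tag would normally supply them).
sentence = [('Attorney', 'NNP'), ('General', 'NNP'), ('Loretta', 'NNP'),
            ('Lynch', 'NNP'), ('said', 'VBD'), ('the', 'DT'), ('FBI', 'NNP'),
            ('will', 'MD'), ('hire', 'VB'), ('examiners', 'NNS')]

# Same grammar as nlp_pos.py: optional determiner + one or more proper nouns.
chunker = nltk.RegexpParser('ENT: {<DT>?(<NNP|NNPS>)+}')
tree = chunker.parse(sentence)

# Join the words of every ENT chunk into one string.
entities = [' '.join(word for word, tag in subtree.leaves())
            for subtree in tree.subtrees(filter=lambda t: t.label() == 'ENT')]
print(entities)  # ['Attorney General Loretta Lynch', 'the FBI']
```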

There's more:

We can also use the .ne_chunk_sents(...) method provided by NLTK. The beginning of the code is almost identical to the previous one (the nls_pos_alternative.py file):

```python
# (continues nlp_pos.py: tagged_sentences comes from nltk.pos_tag as above)

# extract named entities -- the Named Entity Chunker
ne = nltk.ne_chunk_sents(tagged_sentences)

# get a distinct list
named_entities = []

for s in ne:
    for chunk in s:
        if type(chunk) == nltk.tree.Tree:
            named_entities.append((chunk.label(), tuple(chunk)))

named_entities = list(set(named_entities))
named_entities = sorted(named_entities)

# and print out the list
for t, entity in named_entities:
    print(t, entity)
```

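The .ne_chunk_sents(...) method takes a list of lists (sentences made of part-of-speech-tagged words) and returns the sentences with the named entities identified. Each named entity is an nltk.tree.Tree object.

Then we build a list of tuples of the identified named entities and sort it.

Without a sort key, the tuples are sorted by their first element, and ties are broken by the following elements.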
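Here is a quick illustration of that default ordering in plain Python. The (label, entity) pairs are made-up values shaped like the ones built from .ne_chunk_sents(...); they are not actual chunker output.

```python
named_entities = [('PERSON', ('Loretta', 'Lynch')),
                  ('GPE', ('Washington',)),
                  ('ORGANIZATION', ('FBI',)),
                  ('GPE', ('Connecticut',))]

# No key: tuples are compared element by element, so the label decides
# first and the entity tuple breaks ties between equal labels.
by_label = sorted(named_entities)
for label, entity in by_label:
    print(label, ' '.join(entity))
# GPE Connecticut
# GPE Washington
# ORGANIZATION FBI
# PERSON Loretta Lynch

# To order by the entity text instead, pass a key.
by_entity = sorted(named_entities, key=lambda pair: pair[1])
```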

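Finally, we print the list (omitted here).

/* nltk.download('maxent_ne_chunker') # then move the files from the default location C:\Users\tony zhang\AppData\Roaming\nltk_data\ to D:\download\nltk_data\ */

This method works well, but it still cannot recognize harder entities such as Philip Cook or the Bureau of Alcohol, Tobacco, Firearms and Explosives. It also makes two mistakes: it treats Bureau as a person and Obama as a GPE (geopolitical entity).

9.5 Identifying the topic of an article

Counting words is a common and simple technique for identifying the topic of a text, yet it usually gives good results. In this recipe, we show how to count the words of the Seattle Times article to identify its topic.

Getting ready: You need NLTK, the Python regular expression module, NumPy, and Matplotlib.

How to do it: The beginning of the code is very similar to the previous recipe, so we only discuss the relevant parts (the nlp_countWords.py file):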

```python
import nltk
from nltk import data
data.path.append(r'D:\download\nltk_data')  # replace with the path you downloaded the NLTK data to
import re
import numpy as np
import matplotlib.pyplot as plt

def preprocess_data(text):
    global sentences, tokenized
    tokenizer = nltk.RegexpTokenizer(r'\w+')

    sentences = nltk.sent_tokenize(text)
    tokenized = [tokenizer.tokenize(s) for s in sentences]

# import the data
guns_laws = '../../Data/Chapter09/ST_gunLaws.txt'

with open(guns_laws, 'r') as f:
    article = f.read()

# chunk into sentences and tokenize
sentences = []
tokenized = []

preprocess_data(article)

# part-of-speech tagging
tagged_sentences = [nltk.pos_tag(w) for w in tokenized]

# extract names entities -- regular expressions approach
tagged = []

pattern = '''
    ENT: {<DT>?(<NNP|NNPS>)+}
'''

tokenizer = nltk.RegexpParser(pattern)

for sent in tagged_sentences:
    tagged.append(tokenizer.parse(sent))

# keep named entities together
words = []
lemmatizer = nltk.WordNetLemmatizer()

for sentence in tagged:
    for pos in sentence:
        if type(pos) == nltk.tree.Tree:
            words.append(' '.join([w[0] for w in pos]))
        else:
            words.append(lemmatizer.lemmatize(pos[0]))

# remove stopwords
stopwords = nltk.corpus.stopwords.words('english')
words = [w for w in words if w.lower() not in stopwords]

# and calculate frequencies
freq = nltk.FreqDist(words)

# sort descending on frequency
f = sorted(freq.items(), key=lambda x: x[1], reverse=True)

# print top words
top_words = [w for w in f if w[1] > 1]
print(top_words, len(top_words))

# plot 10 top words
top_words_transposed = list(zip(*top_words))
y_pos = np.arange(len(top_words_transposed[0][:10]))[::-1]

plt.barh(y_pos, top_words_transposed[1][:10],
    align='center', alpha=0.5)
plt.yticks(y_pos, top_words_transposed[0][:10])
plt.xlabel('Frequency')
plt.ylabel('Top words')

plt.savefig('../../Data/Chapter09/charts/word_frequency.png',
    dpi=300)
```

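How it works: As in the previous recipes, we start by importing the necessary modules and reading the data. We then do some processing: splitting the text into sentences and tokenizing the words.

Then we tag each word with its part of speech and identify the named entities.

For counting, we need to normalize the text a bit and treat each named entity as a single phrase. We normalize words with the .WordNetLemmatizer() introduced in section 9.3. For named entities, we simply join the words of each chunk with spaces: ' '.join([w[0] for w in pos]).

The most common words in English are stop words, such as the, a, and, and in. Counting them tells us nothing about the topic of the article. So we remove these irrelevant words by checking against the stop-word list that NLTK provides. In the NLTK version used here, this list has about 130 words (newer NLTK releases ship a somewhat longer list):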

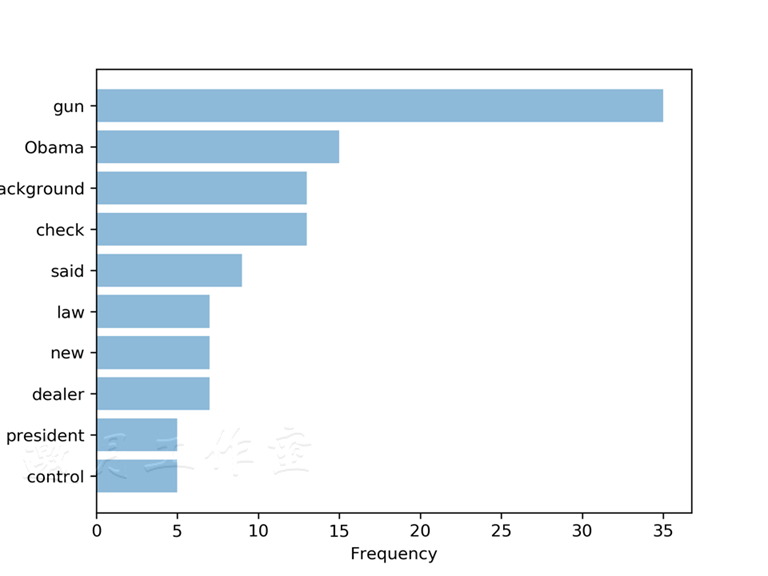

/* [('gun', 35), ('Obama', 15), ('background', 13), ('check', 13), ('said', 9), ('law', 7), ('new', 7), ('dealer', 7),

('president', 5), ('control', 5), ('sell', 5), ('prevent', 4), ('show', 4), ('many', 4), ('wa', 4), ('planned', 4),

('sale', 3), ('change', 3), ('death', 3), ('country', 3), ('plan', 3), ('measure', 3), ('take', 3), ('buyer', 3),

('must', 3), ('conduct', 3), ('register', 3), ('say', 3), ('government', 3), ('action', 3), ('lawmaker', 3),

('federal', 3), ('the White House', 3), ('day', 3), ('also', 3), ('Although', 2), ('unilaterally', 2), ('hoping', 2),

('beefing', 2), ('enforcement', 2), ('existing', 2), ('least', 2), ('rife', 2), ('Monday', 2), ('sold', 2), ('online', 2),

('violence', 2), ('Congress', 2), ('final', 2), ('office', 2), ('last', 2), ('fall', 2), ('Gun', 2), ('violent', 2),

('crime', 2), ('loophole', 2), ('issue', 2), ('guidance', 2), ('massacre', 2), ('still', 2), ('Democratic', 2),

('would', 2), ('Clinton', 2), ('next', 2), ('easily', 2), ('step', 2), ('work', 2), ('administration', 2), ('number', 2),

('people', 2), ('agency', 2), ('Many', 2), ('purchase', 2), ('the FBI', 2), ('request', 2), ('system', 2), ('back', 2),

('three', 2), ('buy', 2), ('without', 2), ('research', 2), ('weapon', 2), ('push', 2)] 83 */

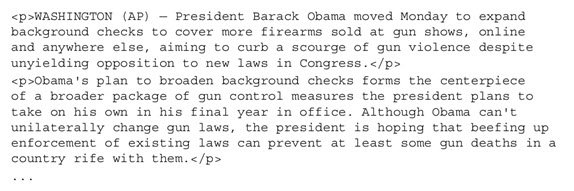

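Finally, we count the words with the .FreqDist(...) method, which takes the list of all words and simply counts how many times each one occurs. We then sort the list and print only the words that occur more than once. This list contains 83 words, and the five most frequent are (unsurprisingly) gun, Obama, background, check, and said. The entry wa comes from lemmatizing was as a noun: the lemmatizer strips the trailing s.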
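The counting step can be reproduced with collections.Counter, since nltk.FreqDist is a subclass of it. In the sketch below, the token list and the five-word stop-word set are made-up stand-ins. The recipe itself uses the lemmatized article and nltk.corpus.stopwords.words('english'). Multi-word named entities such as White House are assumed to be already joined into single strings, as nlp_countWords.py does.

```python
from collections import Counter

# Made-up, already lemmatized tokens; 'White House' stands for a named
# entity that was joined into one string with ' '.join(...).
words = ['The', 'president', 'said', 'the', 'White House', 'would',
         'expand', 'background', 'check', 'and', 'the', 'White House',
         'said', 'check', 'gun', 'show', 'gun']

# Tiny stand-in for nltk.corpus.stopwords.words('english').
stopwords = {'the', 'and', 'would', 'a', 'in'}

# Compare in lowercase, as the recipe does.
content_words = [w for w in words if w.lower() not in stopwords]

# nltk.FreqDist subclasses collections.Counter, so Counter counts the same way.
freq = Counter(content_words)

# Sort by count (descending); the sort is stable, so ties keep first-seen order.
ranked = sorted(freq.items(), key=lambda x: x[1], reverse=True)

# Keep only the words that occur more than once.
top_words = [pair for pair in ranked if pair[1] > 1]
print(top_words)
# [('said', 2), ('White House', 2), ('check', 2), ('gun', 2)]
```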

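The distribution of the 10 most frequent words looks like this:

Looking at the word distribution, you can infer that the article is about President Obama, gun control, background checks, and dealers. We may be biased here: since we already know what the article is about, it is easy for us to pick out the relevant words and place them in context. Still, you can see that even the top 10 words alone give a fair chance of guessing the topic.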

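9.6 Identifying the structure of a sentence

Another important aspect of understanding free-flowing text is sentence structure. We may know the parts of speech, but without knowing how the words relate to each other, we cannot understand the context.

In this recipe, you will learn how to identify three basic parts of a sentence: NP (noun phrases), VP (verb phrases), and PP (prepositional phrases).

A noun phrase consists of a noun and its modifiers (or adjectives). An NP can identify the subject of a sentence, but it can also identify other parts of the sentence. For example, the sentence My dog is lying on the carpet has two NPs: my dog and the carpet. The former is the subject; the latter is the object of the preposition on.

A verb phrase consists of at least one verb, plus its objects, complements, and modifiers. It usually describes the action the subject performs on the object. In the sentence above, the VP is lying on the carpet.

A prepositional phrase starts with a preposition (at, in, from, with, and so on) followed by a noun, pronoun, gerund, or clause. Prepositional phrases play a role similar to adverbs or adjectives. For example, in A dog with white tail is lying on the carpet, one prepositional phrase is with white tail: a preposition followed by an NP.

Getting ready: You need NLTK.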

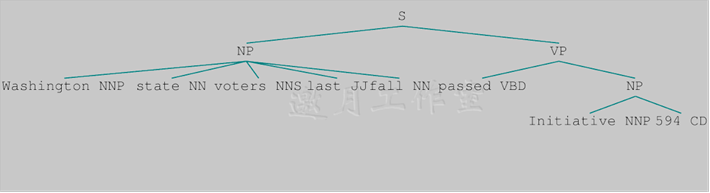

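How to do it: This recipe focuses on explaining the mechanism, so we take only two sentences from the Seattle Times article as samples: one simple, the other slightly more complex (the nlp_sentence.py file):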

```python
import nltk
from nltk import data
data.path.append(r'D:\download\nltk_data')  # replace with the path you downloaded the NLTK data to

def print_tree(tree, filename):
    '''
        A method to save the parsed NLTK tree to a PS file
    '''
    # create the canvas
    canvasFrame = nltk.draw.util.CanvasFrame()

    # create tree widget
    widget = nltk.draw.TreeWidget(canvasFrame.canvas(), tree)

    # add the widget to canvas
    canvasFrame.add_widget(widget, 10, 10)

    # save the file
    canvasFrame.print_to_file(filename)

    # release the object
    canvasFrame.destroy()

# two sentences from the article
sentences = ['Washington state voters last fall passed Initiative 594',
    'The White House also said it planned to ask Congress for $500 million to improve mental health care, and Obama issued a memorandum directing federal agencies to conduct or sponsor research into smart gun technology that reduces the risk of accidental gun discharges.']

# the simplest possible word tokenizer
sentences = [s.split() for s in sentences]

# part-of-speech tagging
sentences = [nltk.pos_tag(s) for s in sentences]

# pattern for recognizing structures of the sentence
pattern = '''
  NP: {<DT|JJ|NN.*|CD>+}   # Chunk sequences of DT, JJ, NN
  VP: {<VB.*><NP|PP>+}     # Chunk verbs and their arguments
  PP: {<IN><NP>}           # Chunk prepositions followed by NP
'''

# identify the chunks
NPChunker = nltk.RegexpParser(pattern)
chunks = [NPChunker.parse(s) for s in sentences]

# save to file
print_tree(chunks[0], '../../Data/Chapter09/charts/sent1.ps')
print_tree(chunks[1], '../../Data/Chapter09/charts/sent2.ps')
```

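How it works: We store the sentences in a list. The sentences are simple, so we use the simplest tokenizer imaginable: we break the text at every whitespace character. Then comes the familiar part-of-speech tagging.

We have used the .RegexpParser(...) method before; here the pattern is different.

As mentioned in the introduction to this recipe, an NP consists of a noun and its modifiers. The pattern here is more precise. An NP is a sequence of DT (determiners, such as the or a), JJ (adjectives), CD (cardinal numbers), or noun variants. NN.* matches NN (singular or mass noun), NNS (plural noun), NNP (singular proper noun), and NNPS (plural proper noun).

A VP consists of a verb in any of its forms, followed by one or more NPs or PPs. The verb forms are:

- VB: base form;
- VBD: past tense;
- VBG: gerund or present participle;
- VBN: past participle;
- VBP: non-third-person singular present;
- VBZ: third-person singular present.

The rules run as stages in the order listed, so the PP rule runs after the VP rule. As a result, in this grammar a VP can only absorb NPs.

As mentioned before, a PP is a preposition combined with an NP.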
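The sketch below shows what the three-stage grammar produces, without downloading a tagger model. It uses the first sentence with hand-assigned Penn Treebank tags; these tags are an assumption, and nltk.pos_tag may tag some of the words differently. The stages run in order, so the NP chunks already exist when the VP rule looks for <NP>.

```python
import nltk

# First sentence of nlp_sentence.py with hand-assigned tags (an assumption).
sentence = [('Washington', 'NNP'), ('state', 'NN'), ('voters', 'NNS'),
            ('last', 'JJ'), ('fall', 'NN'), ('passed', 'VBD'),
            ('Initiative', 'NNP'), ('594', 'CD')]

grammar = r'''
  NP: {<DT|JJ|NN.*|CD>+}   # determiners, adjectives, nouns, numbers
  VP: {<VB.*><NP|PP>+}     # a verb followed by its NP/PP arguments
  PP: {<IN><NP>}           # a preposition followed by an NP
'''
tree = nltk.RegexpParser(grammar).parse(sentence)
print(tree)

subject, verb_phrase = tree[0], tree[1]
print([w for w, t in subject.leaves()])         # the subject NP
print([w for w, t in verb_phrase[1].leaves()])  # the object NP inside the VP
```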

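The parsed sentence trees can be saved to files. We use the print_tree(...) method, which takes the tree as its first argument and the filename as its second.

In this method, we first create a canvasFrame and add a tree widget to it. The .TreeWidget(...) method takes the canvas we just created as its first argument and the tree as its second. We then add the widget to canvasFrame and save it to a file. Finally, we release the memory used by canvasFrame.

However, the only file format supported for saving the tree is PostScript. To convert it to PDF, you can use an online converter such as https://online2pdf.com/convert-ps-to-pdf. On a Unix-like machine, you can instead use ImageMagick's convert command; the following produces a PNG (assuming you are in the Codes/Chapter09 folder):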

convert -density 300 ../../data/Chapter09/charts/sent1.ps Chapter9/Charts/sent1.png

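The resulting tree (for the first sentence) looks like this:

The subject is an NP made up of Washington, state, voters, last, and fall. It is followed by the verb passed and the object (another NP): Initiative 594.

See also: Here is another example: https://www.eecis.udel.edu/~trnka/CISC889-11S/lectures/dongqing-chunking.pdf.

9.7 Classifying movies based on their reviews

With everything in place, we can now take on a more advanced task: classifying movies based on their reviews. In this recipe, we use a sentiment analyzer and a Naive Bayes classifier to classify the movies.

Getting ready: You need NLTK and the json module (part of the Python standard library).

How to do it:

It takes some effort, but the final code is quite easy to understand (the nlp_classify.py file):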

```python
# this is needed to load helper from the parent folder
import sys
sys.path.append('..')

# the rest of the imports
import helper as hlp
import nltk
from nltk import data
data.path.append(r'D:\download\nltk_data')  # replace with the path you downloaded the NLTK data to
import nltk.sentiment as sent
import json

@hlp.timeit
def classify_movies(train, sentim_analyzer):
    '''
        Method to estimate a Naive Bayes classifier
        to classify movies based on their reviews
    '''
    nb_classifier = nltk.classify.NaiveBayesClassifier.train
    classifier = sentim_analyzer.train(nb_classifier, train)

    return classifier

@hlp.timeit
def evaluate_classifier(test, sentim_analyzer):
    '''
        Method to evaluate the trained classifier
        on the test set and print the metrics
    '''
    for key, value in sorted(sentim_analyzer.evaluate(test).items()):
        print('{0}: {1}'.format(key, value))

# read in the files
f_training = '../../Data/Chapter09/movie_reviews_train.json'
f_testing = '../../Data/Chapter09/movie_reviews_test.json'

with open(f_training, 'r') as f:
    read = f.read()
    train = json.loads(read)

with open(f_testing, 'r') as f:
    read = f.read()
    test = json.loads(read)

# tokenize the words
tokenizer = nltk.tokenize.TreebankWordTokenizer()

train = [(tokenizer.tokenize(r['review']), r['sentiment'])
    for r in train]

test = [(tokenizer.tokenize(r['review']), r['sentiment'])
    for r in test]

# analyze the sentiment of reviews
sentim_analyzer = sent.SentimentAnalyzer()
all_words_neg_flagged = sentim_analyzer.all_words(
    [sent.util.mark_negation(doc) for doc in train])

# get most frequent words
unigram_feats = sentim_analyzer.unigram_word_feats(
    all_words_neg_flagged, min_freq=4)

# add feature extractor
sentim_analyzer.add_feat_extractor(
    sent.util.extract_unigram_feats, unigrams=unigram_feats)

# and create the training and testing using the newly created
# features
train = sentim_analyzer.apply_features(train)
test = sentim_analyzer.apply_features(test)

# what is left is to classify the movies and then evaluate
# the performance of the classifier
classify_movies(train, sentim_analyzer)
evaluate_classifier(test, sentim_analyzer)
```

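How it works: As usual, we start by importing the necessary modules.

The prepared training and test sets are stored in JSON format in the Data/Chapter09 folder. You should know how to read JSON files by now; if you need a refresher, see section 1.3 of the book.

After reading in the files, we tokenize the reviews with .TreebankWordTokenizer(). Note that we do not split the reviews into sentences. The idea of this recipe is to identify the words in each review that usually carry a negative meaning, and use them to decide whether the review is positive or negative.

After tokenization, train and test are both lists of tuples. The first element of each tuple is the list of tokenized words of a review; the second is its sentiment label, pos or neg.

With the datasets prepared, we can create the features for training the Naive Bayes classifier. We use NLTK's .SentimentAnalyzer().

See the NLTK documentation on sentiment analysis: http://www.nltk.org/api/nltk.sentiment.html.

.SentimentAnalyzer() ties the feature-extraction and classification tasks together for us.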

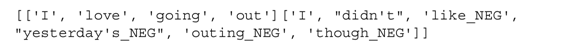

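First, we need to mark the words whose meaning changes because they follow a negation. Take this example: I love going out. I didn't like yesterday's outing, though. Clearly, like, following didn't, is not meant positively here. The .util.mark_negation(...) method processes a list of words and marks those that follow a negation. For this example, we get the following result:

Now that the words are marked, we can create the features. We want to use only words that occur with some minimum frequency: a like_NEG that appears only once would not make a good predictor. We use the .unigram_word_feats(...) method. It returns either the most common words (when the top_n parameter is given) or all the words whose frequency is higher than min_freq.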
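Here is a small run of mark_negation on the example sentence, followed by a Counter-based imitation of the min_freq filter. The second review is made up. The filtering line is my reading of how unigram_word_feats treats min_freq: a word must occur strictly more than min_freq times. Treat it as a sketch rather than a copy of the NLTK method.

```python
from collections import Counter
from nltk.sentiment.util import mark_negation

review = "I love going out . I didn't like yesterday's outing , though .".split()

# Tokens after a negation word ("didn't") get a _NEG suffix until the next
# . : ; ! or ? token; the comma does not close the negation scope.
marked = mark_negation(review)
print(marked)
# ['I', 'love', 'going', 'out', '.', 'I', "didn't", 'like_NEG',
#  "yesterday's_NEG", 'outing_NEG', ',_NEG', 'though_NEG', '.']

# A second, made-up review so that some marked words repeat.
other = "I didn't like the plot .".split()
all_words = marked + mark_negation(other)

# Imitation of the min_freq rule: keep words seen more than min_freq times.
min_freq = 1
freq = Counter(all_words)
unigram_feats = [w for w, c in freq.most_common() if c > min_freq]
print(unigram_feats)  # ['I', '.', "didn't", 'like_NEG']
```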

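The .add_feat_extractor(...) method registers a new way of extracting features from documents with the SentimentAnalyzer() object. We are only interested in unigrams here, so we pass .util.extract_unigram_feats as the first argument to .add_feat_extractor(...). The unigrams keyword argument is the list of unigram features.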
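You can picture the feature sets built by apply_features(...) as one dictionary per review. The sketch below builds them by hand. extract_unigram_features is a hypothetical helper written to mimic the shape of nltk.sentiment.util.extract_unigram_feats, whose keys, as far as I know, have the form contains(<word>). The reviews and the unigram list are made up.

```python
def extract_unigram_features(document, unigrams):
    """Hypothetical helper: one True/False entry per candidate unigram.

    Mimics the dictionary shape of nltk.sentiment.util.extract_unigram_feats.
    """
    words = set(document)
    return {'contains({0})'.format(word): word in words for word in unigrams}


unigram_feats = ['great', 'boring', 'like_NEG']
train = [(['a', 'great', 'cast'], 'pos'),
         (['boring', 'and', 'I', "didn't", 'like_NEG', 'it_NEG'], 'neg')]

# Same (features, label) pairing that the classifier is trained on.
featuresets = [(extract_unigram_features(doc, unigram_feats), label)
               for doc, label in train]
for features, label in featuresets:
    print(label, features)
# pos {'contains(great)': True, 'contains(boring)': False, 'contains(like_NEG)': False}
# neg {'contains(great)': False, 'contains(boring)': True, 'contains(like_NEG)': True}
```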

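With the feature extractor ready, we can transform the training and test sets. The .apply_features(...) method converts each list of words into a feature set. Each entry in a feature set indicates whether the review contains a particular word/feature. Printed out, it looks like this (simplified):

Next comes training and evaluating the classifier. We pass the training set and the sentim_analyzer object to the classify_movies(...) method. The .train(...) method accepts various trainers; here we use the .classify.NaiveBayesClassifier.train method. The .timeit decorator (from the book's helper module) measures how long it takes to estimate the model: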

/* Training classifier The method classify_movies took 501.62 sec to run. */

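That is, training the classifier took a little over 8 minutes (501.62 seconds). What matters more, of course, is how well it performs, since Naive Bayes is a simple classification method (see section 3.3 of the book).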
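To make the Naive Bayes step concrete, here is a toy run of nltk.classify.NaiveBayesClassifier.train. It uses four hand-made feature sets in the same contains(...) shape. The data is invented; the real recipe trains on 4,000 reviews through SentimentAnalyzer.train.

```python
import nltk

# Toy feature sets in the {'contains(word)': bool} shape (made-up data).
train = [
    ({'contains(great)': True, 'contains(awful)': False}, 'pos'),
    ({'contains(great)': True, 'contains(awful)': False}, 'pos'),
    ({'contains(great)': False, 'contains(awful)': True}, 'neg'),
    ({'contains(great)': False, 'contains(awful)': True}, 'neg'),
]

classifier = nltk.classify.NaiveBayesClassifier.train(train)

# Naive Bayes combines P(label) with P(feature value | label) for every
# feature, assuming the features are independent given the label.
print(classifier.classify({'contains(great)': True, 'contains(awful)': False}))  # pos
print(classifier.classify({'contains(great)': False, 'contains(awful)': True}))  # neg

dist = classifier.prob_classify({'contains(great)': True, 'contains(awful)': False})
print(dist.prob('pos'))
```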

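The evaluate_classifier(...) method takes the test set and the sentim_analyzer object (which now holds the trained classifier), runs the evaluation, and prints the results. The overall accuracy is not bad at all: close to 82%. This suggests that our training set represents the problem well: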

/*

Training classifier

The method classify_movies took 501.62 sec to run.

Evaluating NaiveBayesClassifier results...

Accuracy: 0.81625

F-measure [neg]: 0.8206004393458629

F-measure [pos]: 0.8116833205226749

Precision [neg]: 0.8016213638531235

Precision [pos]: 0.8323699421965318

Recall [neg]: 0.8405

Recall [pos]: 0.792

The method evaluate_classifier took 1905.59 sec to run.

*/

For a simple classifier, these precision and recall values are quite good. However, evaluating the model took more than 30 minutes (1905.59 seconds).
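As a sanity check on the reported numbers, you can recompute the metrics from a confusion matrix. The recipe does not print the counts below. They are back-calculated from the reported recall values, assuming (from section 9.1) that the test set holds 2,000 positive and 2,000 negative reviews. With those counts, accuracy, precision, and the F-measure (the harmonic mean of precision and recall) all match the output above.

```python
# Assumption: 2,000 positive and 2,000 negative test reviews (section 9.1).
n_pos = n_neg = 2000

tp = 1584        # positive reviews classified pos: 0.792  * 2000
tn = 1681        # negative reviews classified neg: 0.8405 * 2000
fn = n_pos - tp  # positive reviews classified neg
fp = n_neg - tn  # negative reviews classified pos


def f_measure(precision, recall):
    """Harmonic mean of precision and recall (F1)."""
    return 2 * precision * recall / (precision + recall)


accuracy = (tp + tn) / (n_pos + n_neg)
precision_pos, recall_pos = tp / (tp + fp), tp / n_pos
precision_neg, recall_neg = tn / (tn + fn), tn / n_neg

print('Accuracy:', accuracy)                                               # 0.81625
print('Precision [pos]:', round(precision_pos, 4))                         # 0.8324
print('Precision [neg]:', round(precision_neg, 4))                         # 0.8016
print('F-measure [pos]:', round(f_measure(precision_pos, recall_pos), 4))  # 0.8117
print('F-measure [neg]:', round(f_measure(precision_neg, recall_neg), 4))  # 0.8206
```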

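End of Chapter 9.

Official download of the book's source code:

http://www.hzcourse.com/web/refbook/detail/7821/92

Tomasz Drabas's 《数据分析实战》 (Practical Data Analysis Cookbook) was published in June 2018. This series contains my reading notes on it, written mainly to organize the material systematically and reinforce my memory. Chapter 9 describes several techniques for analyzing streams of textual information: part-of-speech tagging, topic extraction, and classification of text data.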


Zhejiang Public Security Network Registration No. 33010602011771
