phoenix 二级索引使用实践

一、参考的博客

phoenix的基本安装和使用,功能介绍等

https://www.cnblogs.com/kekukekro/p/6339587.html

phoenix全局索引和本地索引 的详细对比和测试

https://blog.csdn.net/dante_003/article/details/76439021

phoenix索引的详细使用

http://www.cnblogs.com/haoxinyue/p/6724365.html

一致性、事物和索引调优

http://www.cnblogs.com/haoxinyue/p/6747948.html

phoenix索引使用,强制使用索引,查看执行计划

https://blog.csdn.net/liyong1115/article/details/70332102

二、phoenix需要python2.7环境,如果安装之后少组件,请执行下面语句进行安装。

yum install python-argparse

scan "logs:rad",{LIMIT=>15}

三、安装位置(安装请参见我之前的博客)

192.168.180.225

cd /usr/local/apps/phoenix/bin/

./sqlline.py 192.168.180.228:2181

四、特别注意事项:

1、用命令行建表时:表名、字段名如果不是默认大写,一定要用""扩起来。

2、表名一定要大写,否则会有各种组件不兼容小写的bug。

3、hbase的原始表名一般是(logs:rad),冒号前面是命名空间名。phoenix不支持表名当中有冒号。

虽然它也有命名空间的概念,但是需要客户端、服务端一起设置之后才能使用。

4、本地索引和全局索引,都可以异步构建,且是同样的操作。

5、表分可修改表和不可修改表(指的是表内的数据只能增加,不能修改),表的类型换了,表的全局索引和本地索引也跟着变为可修改或不可修改。

6、全局索引适合读多写少,本地索引适合写多读少的场景。不可修改索引优化更好,相对性能更高。

五、=========采坑纪实======================================================================

5.1、创建原始表

CREATE TABLE "logsrad" (

id VARCHAR NOT NULL PRIMARY KEY ,

"info"."receiveTime" VARCHAR ,

"info"."sourceIp" VARCHAR ,

"info"."destinationIp" VARCHAR ,

"info"."destinationPort" VARCHAR ,

"info"."natIp" VARCHAR ,

"info"."deviceIp" VARCHAR ,

"info"."alarmLevel" VARCHAR ,

"info"."startTime" VARCHAR ,

"info"."endTime" VARCHAR ,

"info"."interfaceIp" VARCHAR ,

"info"."protocol" VARCHAR ,

"info"."natType" VARCHAR ,

"info"."messageBytes" VARCHAR

)

5.2、可修改表、不可修改表

如果你有一个已经存在的表,想把不可变索引修改成可变索引的话,可以用如下语句实现:

alter table "logsrad" set IMMUTABLE_ROWS = false;

修改成不可变表

alter table "logsrad" set IMMUTABLE_ROWS = true;

#CREATE LOCAL INDEX MYINDEX ON "logsrad"("destinationIp");

5.3、异步创建索引例子

#首先在phoenix中建立索引表信息

create index car_index_datehphm on "car"("f1"."date","f1"."hphm") include ("f1"."coorid","f1"."cx","f1"."ys") async;

#这里的建立索引语句加了async,异步建立索引。另外f1是hbase中原始的列族名,这张表是原始hbase表转过来的,为什么这么写就不解释了,"f1"."date"就代表一个字段。include是什么后面再解释

#下面启动批量建立索引的mr任务

${HBASE_HOME}/bin/hbase org.apache.phoenix.mapreduce.index.IndexTool \

--data-table "car" --index-table CAR_INDEX_DATEHPHM \

--output-path ASYNC_IDX_HFILES

5.4、本地索引

CREATE LOCAL INDEX INDEX_LOGSRAD_DESIP ON "logsrad"("info"."destinationIp") async;

cd /opt/cloudera/parcels/CDH-5.11.1-1.cdh5.11.1.p0.4/lib/hbase/bin/

#执行异步创建索引的mr任务

hbase org.apache.phoenix.mapreduce.index.IndexTool --data-table "logsrad" --index-table INDEX_LOGSRAD_DESIP --output-path ASYNC_IDX_HFILES

DROP INDEX MYINDEX ON "logsrad" ;

DROP INDEX INDEX_LOGSRAD_DESIP ON "logsrad" ;

count 'INDEX_LOGSRAD_DESIP'

5.5、全局索引

CREATE INDEX INDEX_LOGSRAD_SOURCEIP ON "logsrad"("info"."sourceIp" DESC) include("info"."deviceIp","info"."natType") async;

cd /opt/cloudera/parcels/CDH-5.11.1-1.cdh5.11.1.p0.4/lib/hbase/bin/

#执行异步创建索引的mr任务

hbase org.apache.phoenix.mapreduce.index.IndexTool --data-table "logsrad" --index-table INDEX_LOGSRAD_SOURCEIP --output-path ASYNC_IDX_HFILES

===================================================================================================================

六、========成功案例==={(数据记录)不可修改表、(数据记录)不可修改索引}========================================================================================================

报错,可能是org.apache.phoenix.mapreduce.index.IndexTool这个工具,默认表名是大写了。 我们这个表是小写的表名,所以对应不上。

(下面的实践证明,表名还是要大写,否则上面这个工具会对应不上索引是哪张表的索引,报错)

2、表名一定要大写,否则会有各种组件不兼容小写的bug。

6.1、--------修改原始hbase表名------------------------------------------------------------

请参见本人其他博客,或者搜索

6.2、创建phoenix里面的表(表名需要和hbase表名一致)

1、用命令行建表时:表名、字段名如果不是默认大写,一定要用""扩起来。

3、hbase的原始表名一般是(logs:rad),冒号前面是命名空间名。phoenix不支持表名当中有冒号。

虽然它也有命名空间的概念,但是需要客户端、服务端一起设置之后才能使用。

CREATE TABLE LOGSRADL (

id VARCHAR NOT NULL PRIMARY KEY ,

"info"."receiveTime" VARCHAR ,

"info"."sourceIp" VARCHAR ,

"info"."destinationIp" VARCHAR ,

"info"."destinationPort" VARCHAR ,

"info"."natIp" VARCHAR ,

"info"."deviceIp" VARCHAR ,

"info"."alarmLevel" VARCHAR ,

"info"."startTime" VARCHAR ,

"info"."endTime" VARCHAR ,

"info"."interfaceIp" VARCHAR ,

"info"."protocol" VARCHAR ,

"info"."natType" VARCHAR ,

"info"."messageBytes" VARCHAR

);

6.3、-------全局索引------------------------------------------------------------------------

修改成不可变表

alter table LOGSRADL set IMMUTABLE_ROWS = true;

全局索引

CREATE INDEX INDEX_LOGSRADL_SOURCEIP ON LOGSRADL("info"."sourceIp" DESC) include("info"."deviceIp","info"."natType") async;

cd /opt/cloudera/parcels/CDH-5.11.1-1.cdh5.11.1.p0.4/lib/hbase/bin/

hbase org.apache.phoenix.mapreduce.index.IndexTool --data-table LOGSRADL --index-table INDEX_LOGSRADL_SOURCEIP --output-path ASYNC_IDX_HFILES

测试:索引

select * from LOGSRADL limit 10;

select deviceIp,natType from LOGSRADL limit 10;

select "info"."deviceIp","info"."natType" from LOGSRADL where "info"."sourceIp"='46.234.125.89' limit 10;

scan "LOGSRADL",{LIMIT=>15}

#DROP INDEX INDEX_LOGSRADL_SOURCEIP ON "logsrad" ;

6.4、-------本地索引-------------------------------------------------------------

本地索引

CREATE LOCAL INDEX INDEX_LOGSRADL_DESIP ON LOGSRADL("info"."destinationIp") async;

cd /opt/cloudera/parcels/CDH-5.11.1-1.cdh5.11.1.p0.4/lib/hbase/bin/

hbase org.apache.phoenix.mapreduce.index.IndexTool --data-table LOGSRADL --index-table INDEX_LOGSRADL_DESIP --output-path ASYNC_IDX_HFILES

测试:索引

select "info"."deviceIp","info"."natType" from LOGSRADL where "info"."destinationIp"='210.29.144.128' limit 10;

select * from LOGSRADL where "info"."destinationIp"='210.29.144.128' limit 10;

七、========成功案例==={(数据记录)可修改表、(数据记录)可修改索引}========================================================================================================

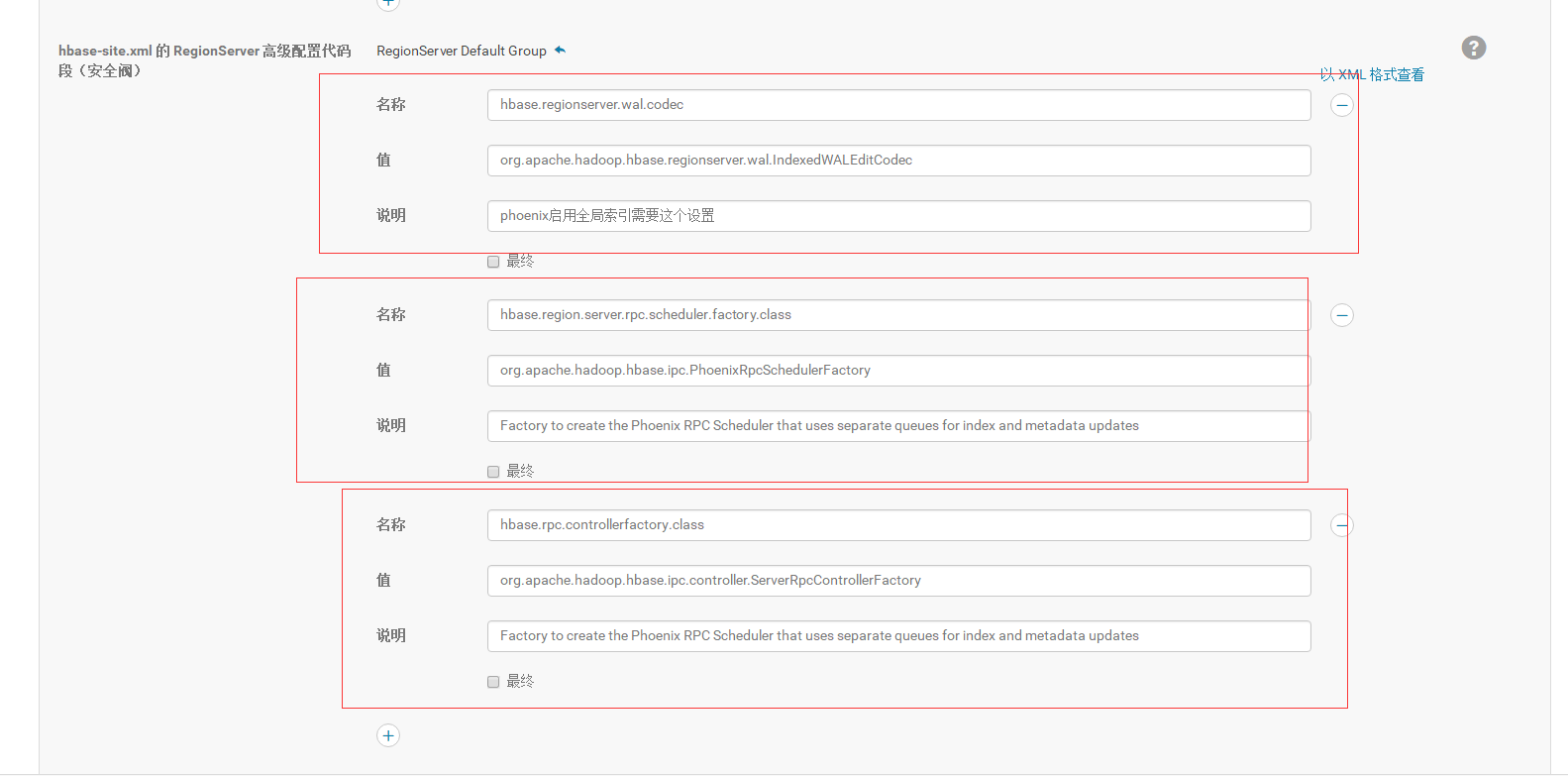

7.1、首先修改hbase的配置

官网的说明:

You will need to add the following parameters to hbase-site.xml on each region server:

<property> <name>hbase.regionserver.wal.codec</name> <value>org.apache.hadoop.hbase.regionserver.wal.IndexedWALEditCodec</value> </property>

The above property enables custom WAL edits to be written, ensuring proper writing/replay of the index updates. This codec supports the usual host of WALEdit options, most notably WALEdit compression.

<property> <name>hbase.region.server.rpc.scheduler.factory.class</name> <value>org.apache.hadoop.hbase.ipc.PhoenixRpcSchedulerFactory</value> <description>Factory to create the Phoenix RPC Scheduler that uses separate queues for index and metadata updates</description> </property> <property> <name>hbase.rpc.controllerfactory.class</name> <value>org.apache.hadoop.hbase.ipc.controller.ServerRpcControllerFactory</value> <description>Factory to create the Phoenix RPC Scheduler that uses separate queues for index and metadata updates</description> </property>

The above properties prevent deadlocks from occurring during index maintenance for global indexes (HBase 0.98.4+ and Phoenix 4.3.1+ only) by ensuring index updates are processed with a higher priority than data updates. It also prevents deadlocks by ensuring metadata rpc calls are processed with a higher priority than data rpc calls.

cloudera manager上的设置

修改成可变表(5、表分可修改表和不可修改表(指的是表内的数据只能增加,不能修改),表的类型换了,表的全局索引和本地索引也跟着变为可修改或不可修改。)

alter table LOGSRADL set IMMUTABLE_ROWS = false;

7.2、-------全局索引------------------------------------------------------------------------

全局索引

建索引的方式同上面的不可修改索引

测试:索引

select * from LOGSRADL limit 10;

select deviceIp,natType from LOGSRADL limit 10;

select "info"."deviceIp","info"."natType" from LOGSRADL where "info"."sourceIp"='46.234.125.89' limit 10;

scan "LOGSRADL",{LIMIT=>15}

#DROP INDEX INDEX_LOGSRADL_SOURCEIP ON "logsrad" ;

select "info"."sourceIp",count(*) from LOGSRADL group by "info"."sourceIp";

select "info"."deviceIp",count(*) from LOGSRADL group by "info"."deviceIp";

7.3、-------本地索引-------------------------------------------------------------

本地索引

建索引的方式同上面的不可修改索引

测试:索引

select "info"."deviceIp","info"."natType" from LOGSRADL where "info"."destinationIp"='210.29.144.128' limit 10;

select * from LOGSRADL where "info"."destinationIp"='210.29.144.128' limit 10;

select count("info"."natType") from LOGSRADL where "info"."destinationIp"='210.29.144.128' group by "info"."deviceIp";

select "info"."destinationIp",count(*) from LOGSRADL group by "info"."destinationIp";

浙公网安备 33010602011771号

浙公网安备 33010602011771号