Wireshark Packet Capture

Symptom:

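Between the Shanghai and Shenzhen data centers, over a dedicated leased line (10 Mbps), market data received in Shenzhen arrived late or went missing.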

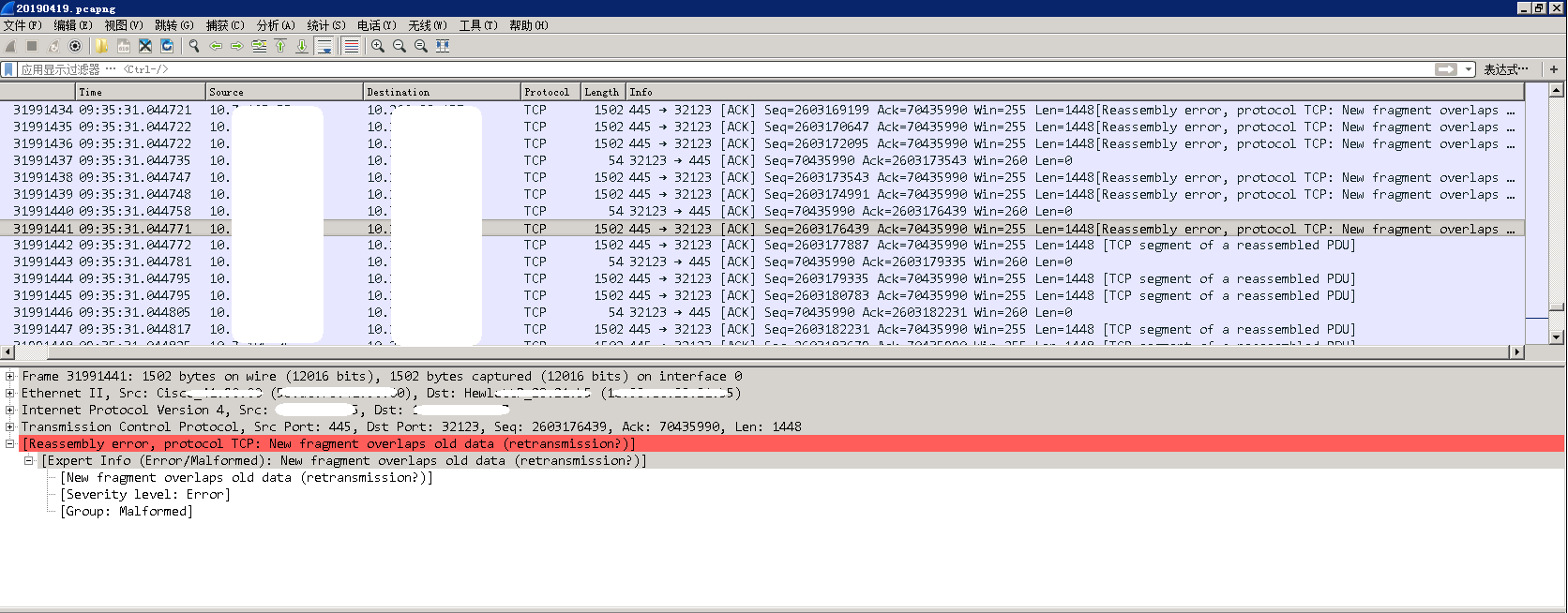

Information from a capture taken on the market-data receiving side:

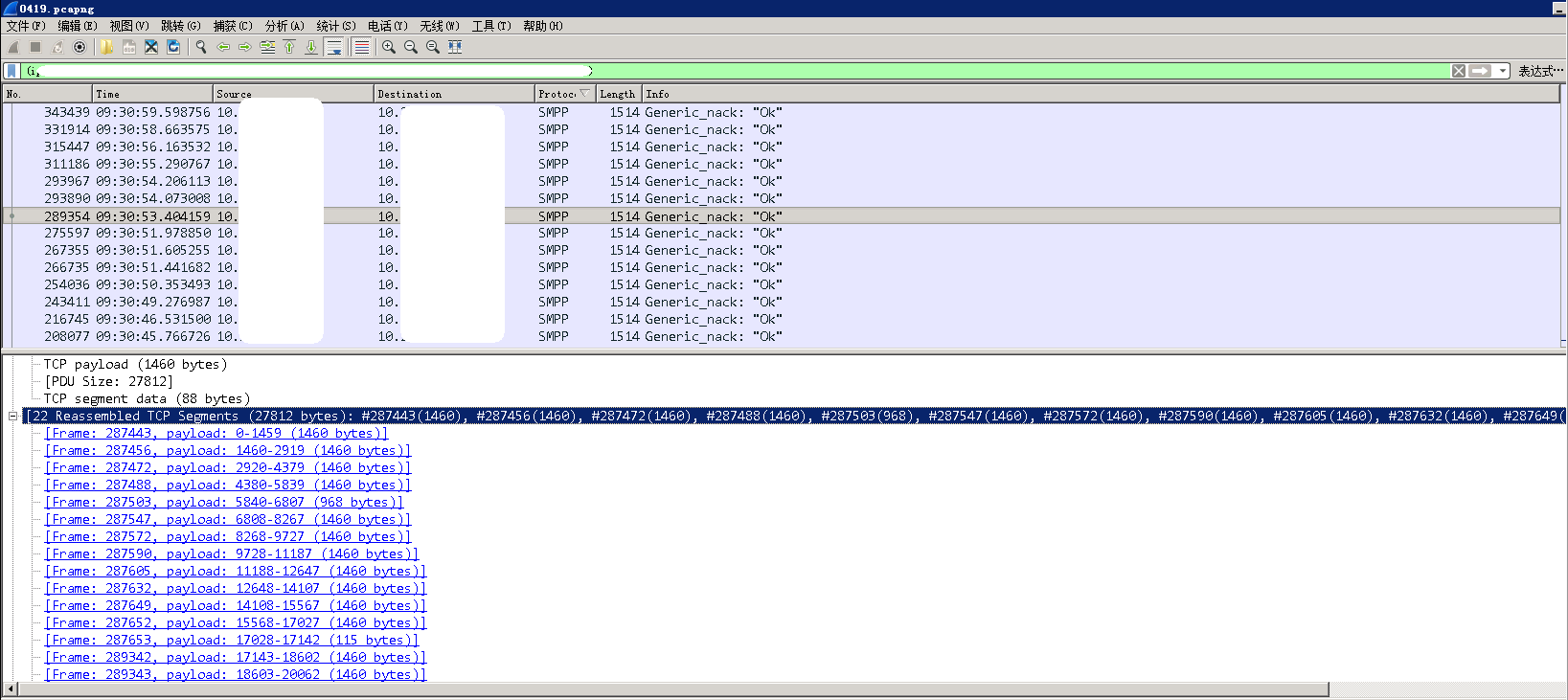

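Analysis: "TCP segment of a reassembled PDU" means the server sent one large application-level message (PDU) that was split into TCP segments of 1448 bytes each. Once the receiver has the server's message, the segments that belong to the same PDU are reassembled (Wireshark does the same when it displays the capture).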
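A 1448-byte segment is what you would expect from a standard 1500-byte Ethernet MTU with the TCP timestamp option enabled. The arithmetic below is a quick check under that assumption (IPv4, no IP options); the capture itself does not show the MTU, and the 10,000-byte PDU is a hypothetical example.

```python
# Assumed header sizes: IPv4 without options, TCP base header, and the
# TCP timestamp option (10 bytes, padded to 12 with two NOPs).
ETHERNET_MTU = 1500
IPV4_HEADER = 20
TCP_HEADER = 20
TCP_TIMESTAMP_OPTION = 12

def tcp_payload_per_segment(mtu: int = ETHERNET_MTU, timestamps: bool = True) -> int:
    """Largest TCP payload that fits in one IP packet of `mtu` bytes."""
    mss = mtu - IPV4_HEADER - TCP_HEADER  # 1460 for a 1500-byte MTU
    if timestamps:
        mss -= TCP_TIMESTAMP_OPTION       # options reduce the payload space
    return mss

def segments_for_pdu(pdu_len: int, payload: int) -> int:
    """Number of TCP segments needed to carry one application PDU."""
    return -(-pdu_len // payload)         # ceiling division

print(tcp_payload_per_segment())                  # 1448
print(tcp_payload_per_segment(timestamps=False))  # 1460
print(segments_for_pdu(10_000, 1448))             # 7
```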

[Reassembly error, protocol TCP: New fragment overlaps old data (retransmission?)] indicates that some TCP segments were retransmitted.
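The warning fires when a segment covers bytes that have already been received, which is what a retransmitted segment looks like on the wire. A simplified sketch of that check over (relative sequence number, payload length) pairs; this is not Wireshark's actual reassembly code, and the function name and sample capture are hypothetical.

```python
from typing import List, Tuple

def find_overlaps(segments: List[Tuple[int, int]]) -> List[Tuple[int, int]]:
    """Return segments whose byte range overlaps data already seen.

    Each segment is (relative_seq, payload_len) in capture order and
    covers the half-open byte range [seq, seq + len).
    """
    seen: List[Tuple[int, int]] = []      # byte ranges received so far
    overlaps: List[Tuple[int, int]] = []
    for seq, length in segments:
        start, end = seq, seq + length
        if any(start < s_end and s_start < end for s_start, s_end in seen):
            overlaps.append((seq, length))  # likely a retransmission
        seen.append((start, end))
    return overlaps

# Hypothetical capture: the third segment resends bytes 1448..2895.
capture = [(0, 1448), (1448, 1448), (1448, 1448), (2896, 1448)]
print(find_overlaps(capture))  # [(1448, 1448)]
```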

Statistics > Capture File Properties: average rate 400 KB/s.
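To put the 400 KB/s average in context, compare it with the line's nominal capacity. This assumes KB means 1000 bytes and a 10 Mbit/s line; an average this far below capacity suggests raw bandwidth is not the limit on average, although short bursts could still fill the link.

```python
def link_utilization(avg_bytes_per_s: float, link_bits_per_s: float) -> float:
    """Fraction of the link's nominal capacity used by an average byte rate."""
    return avg_bytes_per_s * 8 / link_bits_per_s

avg_rate = 400 * 1000          # 400 KB/s from Capture File Properties
link = 10 * 1000 * 1000        # assumed 10 Mbit/s leased line
print(f"{avg_rate * 8 / 1e6:.1f} Mbit/s, {link_utilization(avg_rate, link):.0%} of the link")
# 3.2 Mbit/s, 32% of the link
```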

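Stevens graph (Statistics > TCP Stream Graphs > Time Sequence (Stevens)): the sequence number rises continuously over the 60 s capture.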
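In a Time-Sequence (Stevens) graph the slope of the line is the throughput, and a step backwards in sequence number marks a retransmission. A minimal sketch that computes both from (time, sequence) pairs, assuming you have exported them from the capture; the function names and sample points are hypothetical.

```python
from typing import List, Tuple

Point = Tuple[float, int]  # (time in seconds, relative sequence number)

def stevens_slope(points: List[Point]) -> float:
    """Least-squares slope of sequence number vs. time, in bytes per second."""
    n = len(points)
    mean_t = sum(t for t, _ in points) / n
    mean_s = sum(s for _, s in points) / n
    cov = sum((t - mean_t) * (s - mean_s) for t, s in points)
    var = sum((t - mean_t) ** 2 for t, _ in points)
    return cov / var

def backward_steps(points: List[Point]) -> List[int]:
    """Indices where the sequence number goes down: candidate retransmissions."""
    return [i for i in range(1, len(points)) if points[i][1] < points[i - 1][1]]

# Hypothetical samples rising steadily at 400,000 bytes/s.
samples = [(0.0, 0), (1.0, 400_000), (2.0, 800_000), (3.0, 1_200_000)]
print(stevens_slope(samples))   # 400000.0
print(backward_steps(samples))  # []
```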

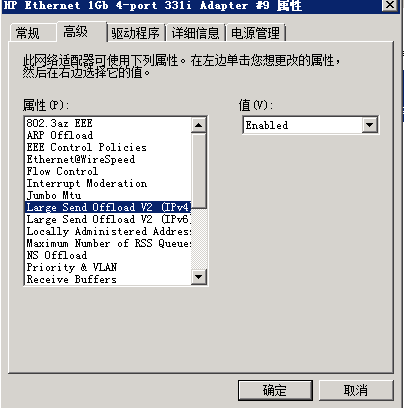

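The information above was not enough to pin down the cause. A Baidu search turned up a suggestion to disable LSO on the server's network adapter. LSO is a mechanism that lets the NIC segment large packets to free up CPU resources, but according to these sources it often severely degrades network performance.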

The following is quoted from online sources:

Example 1:

Large Send Offload

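TCP segmentation is normally performed by the protocol stack. When the Large Send Offload property is enabled, TCP segmentation can be performed by the network adapter instead.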

Disable: disables Large Send Offload.

Enable (default): enables Large Send Offload.
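To make "TCP segmentation" concrete, here is a minimal sketch of the splitting step that Large Send Offload moves from the protocol stack to the adapter. The function name `segment` and the 1448-byte MSS are illustrative assumptions; real LSO also rewrites the IP/TCP headers and checksums for every segment, and real sequence numbers wrap modulo 2^32, none of which is modelled here.

```python
from typing import List, Tuple

def segment(buffer_len: int, start_seq: int, mss: int) -> List[Tuple[int, int]]:
    """Split one large send into (seq, length) TCP segments of at most `mss` bytes."""
    segments: List[Tuple[int, int]] = []
    offset = 0
    while offset < buffer_len:
        length = min(mss, buffer_len - offset)
        segments.append((start_seq + offset, length))
        offset += length
    return segments

segs = segment(64 * 1024, start_seq=1, mss=1448)
print(len(segs), segs[0], segs[-1])  # 46 (1, 1448) (65161, 376)
```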

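Large Send Offload is one of the network adapter's advanced features. Its purpose is to perform TCP segmentation on the adapter, reducing the load on the CPU and other related components. However, now that multi-core CPUs are widespread, the adapter's processing power is much weaker than the CPU's, so when a large number of concurrent requests cause frequent data updates, or when large volumes of data are transferred, enabling Large Send Offload can severely hurt performance.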

Example 2:

Large Send Offload and Network Performance

One issue that I continually see reported by customers is slow network performance. Although there are literally a ton of issues that can affect how fast data moves to and from a server, there is one fix I've found that resolves this 99% of the time: disable Large Send Offload on the Ethernet adapter.

So what is Large Send Offload (also known as Large Segmentation Offload, or LSO for short)? It's a feature on modern Ethernet adapters that allows the TCP/IP network stack to build a large TCP message of up to 64KB in length before sending it to the Ethernet adapter. Then the hardware on the Ethernet adapter, what I'll call the LSO engine, segments it into smaller data packets (known as "frames" in Ethernet terminology) that can be sent over the wire. This is up to 1500 bytes for standard Ethernet frames and up to 9000 bytes for jumbo Ethernet frames. In return, this frees the server CPU from having to segment large TCP messages into smaller packets that fit inside the supported frame size, which means better overall server performance. Sounds like a good deal. What could possibly go wrong?

Quite a lot, as it turns out. In order for this to work, the other network devices — the Ethernet switches through which all traffic flows — all have to agree on the frame size. The server cannot send frames that are larger than the Maximum Transmission Unit (MTU) supported by the switches. And this is where everything can, and often does, fall apart.

The server can discover the MTU by asking the switch for the frame size, but there is no way for the server to pass this along to the Ethernet adapter. The LSO engine doesn't have the ability to use a dynamic frame size. It simply uses the default standard value of 1500 bytes or, if jumbo frames are enabled, the size of the jumbo frame configured for the adapter. (Because the maximum size of a jumbo frame can vary between different switches, most adapters allow you to set or select a value.) So what happens if the LSO engine sends a frame larger than the switch supports? The switch silently drops the frame. And this is where a performance enhancement feature becomes a performance degradation nightmare.
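The failure mode described above can be modelled in a few lines. This is a sketch of the article's scenario, not of any particular NIC or switch: the helper names are hypothetical, it assumes 40 bytes of IPv4 plus TCP headers with no options, and sizes are IP packet sizes compared against the switch MTU.

```python
from typing import List

def frames_from_nic(message_len: int, nic_mtu: int, headers: int = 40) -> List[int]:
    """Packet sizes produced when the NIC segments using its own configured MTU."""
    payload = nic_mtu - headers          # room for TCP payload per packet
    sizes: List[int] = []
    remaining = message_len
    while remaining > 0:
        chunk = min(payload, remaining)
        sizes.append(chunk + headers)
        remaining -= chunk
    return sizes

def forward(packet_sizes: List[int], switch_mtu: int) -> List[int]:
    """A switch that silently drops anything larger than its MTU (no error to sender)."""
    return [size for size in packet_sizes if size <= switch_mtu]

msg = 64 * 1024
jumbo = frames_from_nic(msg, nic_mtu=9000)   # adapter configured for jumbo frames
std = frames_from_nic(msg, nic_mtu=1500)     # adapter left at the standard MTU
print(len(jumbo), len(forward(jumbo, switch_mtu=1500)))  # 8 0
print(len(std), len(forward(std, switch_mtu=1500)))      # 45 45
```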

To understand why this hits network performance so hard, let's follow a typical large TCP message as it traverses the network between two hosts.

- With LSO enabled, the TCP/IP network stack on the server builds a large TCP message.

- The server sends the large TCP message to the Ethernet adapter to be segmented by its LSO engine for the network. Because the LSO engine cannot discover the MTU supported by the switch, it uses a standard default value.

- The LSO engine sends each of the frame segments that make up the large TCP message to the switch.

- The switch receives the frame segments, but because LSO sent frames larger than the MTU, they are silently discarded.

- On the server that is waiting to receive the TCP message, the timeout clock reaches zero when no data is received, and it sends back a request to retransmit the data. Although the timeout is very short in human terms, it is rather long in computer terms.

- The sending server receives the retransmission request and rebuilds the TCP message. But because this is a retransmission request, the server does not send the TCP message to the Ethernet adapter to be segmented. Instead, it handles the segmentation process itself. This appears to be designed to overcome failures caused by the offloading hardware on the adapter.

- The switch receives the retransmission frames from the server, which are the proper size because the server is able to discover the MTU, and forwards them on to the router.

- The other server finally receives the TCP message intact.
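The walk-through above can be condensed into an event trace for one large message. This sketch follows the quoted article's account only, and the function name is hypothetical; note that in standard TCP it is the sender's retransmission timer that expires, so the timeout is modelled as a single event rather than a request from the receiver.

```python
from typing import List

def send_large_message(lso_enabled: bool, nic_mtu: int, path_mtu: int) -> List[str]:
    """Return the sequence of events for one large TCP message (article's model)."""
    events: List[str] = ["stack builds a large TCP message"]
    if lso_enabled:
        events.append(f"NIC (LSO engine) segments at {nic_mtu} bytes")
        if nic_mtu > path_mtu:
            events.append("switch silently drops the oversized frames")
            # Standard TCP: the sender's retransmission timer fires here.
            events.append("retransmission timeout expires")
            events.append(f"host stack resegments at {path_mtu} bytes and resends")
    else:
        events.append(f"host stack segments at {path_mtu} bytes")
    events.append("receiver gets the message intact")
    return events

for step in send_large_message(lso_enabled=True, nic_mtu=9000, path_mtu=1500):
    print("-", step)
```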

This can basically be summed up as: offload data, segment data, discard data, wait for timeout, request retransmission, segment retransmission data, resend data. The big delay is waiting for the timeout clock on the receiving server to reach zero. And the whole process is repeated the very next time a large TCP message is sent. So is it any wonder that this can cause severe network performance issues?
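A rough estimate shows why the timeout dominates. The model counts serialization plus one round trip for a clean send, and adds a timeout wait plus a second serialization when the first send is dropped. The 30 ms round-trip time is a hypothetical value for a Shanghai-Shenzhen link, the 10 Mbit/s line is assumed, and 200 ms is the Linux minimum retransmission timeout.

```python
def delivery_time(msg_bytes: int, link_bps: float, rtt_s: float,
                  rto_s: float, dropped: bool) -> float:
    """Rough seconds to deliver one message, with or without a dropped first send."""
    serialize = msg_bytes * 8 / link_bps   # time to clock the bits onto the line
    t = serialize + rtt_s
    if dropped:
        t += rto_s + serialize             # wait for the timeout, then send again
    return t

MSG = 64 * 1024   # one 64 KB offloaded send
LINK = 10e6       # assumed 10 Mbit/s leased line
RTT = 0.030       # hypothetical round-trip time
RTO = 0.200       # Linux minimum retransmission timeout

ok = delivery_time(MSG, LINK, RTT, RTO, dropped=False)
bad = delivery_time(MSG, LINK, RTT, RTO, dropped=True)
print(f"clean: {ok * 1000:.0f} ms, with drop: {bad * 1000:.0f} ms, {bad / ok:.1f}x slower")
# clean: 82 ms, with drop: 335 ms, 4.1x slower
```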

This is by no means an issue that affects only Peer 1. Google is littered with articles by major vendors of both hardware and software telling their customers to turn off Large Send Offload. Nor is it specific to one operating system: it affects both Linux and Windows.

I've found that Intel adapters are by far the worst offenders with Large Send Offload, but Broadcom has problems with it as well. And, naturally, this feature is enabled by default on the adapters, meaning that you have to explicitly turn it off in the Ethernet driver (preferred) or in the server's TCP/IP network stack.