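Hive installation

- First confirm that Hadoop has started successfully, then upload the Hive tarball to the /home/software directory on the server

# Set permissions

chmod 755 apache-hive-3.1.2-bin.tar.gz

# Extract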

tar -zxvf apache-hive-3.1.2-bin.tar.gz

- Global configuration

vim /etc/profile

export HIVE_HOME=/home/software/apache-hive-3.1.2-bin

export PATH=$PATH:$HIVE_HOME/bin

# Apply the changes

source /etc/profile
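
To confirm that the profile change took effect, a check along the following lines may help. It is a minimal sketch: the function name `hive_env_ok` is hypothetical, and it only inspects a mapping of environment variables (by default the current process environment).

```python
import os


def hive_env_ok(env=None):
    """Return True if HIVE_HOME is set and $HIVE_HOME/bin appears on PATH."""
    env = os.environ if env is None else env
    hive_home = env.get("HIVE_HOME", "")
    if not hive_home:
        return False
    hive_bin = hive_home.rstrip("/") + "/bin"
    # Compare PATH entries without trailing slashes so "/x/bin/" also matches.
    path_entries = [p.rstrip("/") for p in env.get("PATH", "").split(":")]
    return hive_bin in path_entries
```

Calling `hive_env_ok()` in a new shell session after `source /etc/profile` should return `True` if both exports were picked up.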

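- Edit the Hive configuration

# Go to the following path

cd /home/software/apache-hive-3.1.2-bin/conf

# Upload hive-site.xml to this directory

# Change the IP address and the MySQL username and password to your own

# For MySQL 5, set the driver to com.mysql.jdbc.Driver

# For MySQL 8, set the driver to com.mysql.cj.jdbc.Driver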
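
The driver rule just stated can be written down as a small lookup. This is only a sketch of that rule: `MYSQL_DRIVERS` and `driver_for` are hypothetical names, and versions other than 5 and 8 are rejected because these notes do not cover them.

```python
# Driver class names as given in these notes, keyed by MySQL major version.
MYSQL_DRIVERS = {
    5: "com.mysql.jdbc.Driver",
    8: "com.mysql.cj.jdbc.Driver",
}


def driver_for(mysql_version):
    """Pick the JDBC driver class for a version string such as '8.0.30'."""
    major = int(mysql_version.split(".")[0])
    if major not in MYSQL_DRIVERS:
        raise ValueError(f"no driver listed for MySQL {mysql_version}")
    return MYSQL_DRIVERS[major]
```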

# Copy the Hive configuration file to spark/conf

cp hive-site.xml /home/software/spark-2.3.4-bin-hadoop2.7/conf

# Copy the four Hadoop configuration files to spark/conf

[root@master hadoop]# pwd

/home/software/hadoop-3.2.1/etc/hadoop

[root@master hadoop]# cp core-site.xml /home/software/spark-2.3.4-bin-hadoop2.7/conf

[root@master hadoop]# cp hdfs-site.xml /home/software/spark-2.3.4-bin-hadoop2.7/conf

[root@master hadoop]# cp mapred-site.xml /home/software/spark-2.3.4-bin-hadoop2.7/conf

[root@master hadoop]# cp yarn-site.xml /home/software/spark-2.3.4-bin-hadoop2.7/conf
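
The copy steps can also be scripted, which makes it easier to notice a file that is missing. The sketch below assumes nothing beyond the file names used here; `copy_configs` is a hypothetical helper, not part of Hadoop or Spark.

```python
import shutil
from pathlib import Path

# The four Hadoop configuration files named in these notes.
HADOOP_FILES = ["core-site.xml", "hdfs-site.xml", "mapred-site.xml", "yarn-site.xml"]


def copy_configs(src_dir, dest_dir, names):
    """Copy the named files from src_dir to dest_dir; return the names not found."""
    src, dest = Path(src_dir), Path(dest_dir)
    missing = []
    for name in names:
        if (src / name).is_file():
            shutil.copy2(src / name, dest / name)
        else:
            missing.append(name)
    return missing
```

For the layout used here, the call would be `copy_configs("/home/software/hadoop-3.2.1/etc/hadoop", "/home/software/spark-2.3.4-bin-hadoop2.7/conf", HADOOP_FILES)`; an empty return value means all four files were copied.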


- hive-site.xml

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed to the Apache Software Foundation (ASF) under one

or more contributor license agreements. See the NOTICE file

distributed with this work for additional information

regarding copyright ownership. The ASF licenses this file

to you under the Apache License, Version 2.0 (the

"License"); you may not use this file except in compliance

with the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing,

software distributed under the License is distributed on an

"AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

KIND, either express or implied. See the License for the

specific language governing permissions and limitations

under the License.

-->

<configuration>

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://192.168.128.78:3306/hive?createDatabaseIfNotExist=true</value>

<description>JDBC connect string for a JDBC metastore</description>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.cj.jdbc.Driver</value>

<description>Driver class name for a JDBC metastore</description>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>root</value>

<description>username to use against metastore database</description>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>123456</value>

<description>password to use against metastore database</description>

</property>

</configuration>
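
Before running Hive it can be worth reading the file back to confirm that the URL, driver, user, and password are the ones intended. The following is a minimal sketch using only the standard library; `read_properties` is a hypothetical helper name.

```python
import xml.etree.ElementTree as ET


def read_properties(xml_text):
    """Return {name: value} for every <property> in a Hadoop-style configuration."""
    root = ET.fromstring(xml_text)
    props = {}
    for prop in root.findall("property"):
        name = prop.findtext("name")
        if name:
            props[name.strip()] = (prop.findtext("value") or "").strip()
    return props
```

For example, `read_properties(open("hive-site.xml").read())["javax.jdo.option.ConnectionDriverName"]` should return the driver class configured in the file.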

- Add the JARs

# Go to the lib directory

cd /home/software/apache-hive-3.1.2-bin/lib

# Delete the bundled guava JAR

rm -rf guava-19.0.jar

# Upload guava-27.0-jre.jar to this directory

# Upload the MySQL driver JAR to this directory

cp mysql-connector-java-8.0.30.jar /home/software/apache-hive-3.1.2-bin/lib/
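
After swapping the JARs, listing what is actually in the lib directory helps catch a leftover guava-19.0.jar or a missing driver. This is a small sketch; `find_jars` is a hypothetical helper that matches files named `<prefix>-<version>.jar`.

```python
from pathlib import Path


def find_jars(lib_dir, prefix):
    """Return the sorted file names in lib_dir that match '<prefix>-*.jar'."""
    return sorted(p.name for p in Path(lib_dir).glob(f"{prefix}-*.jar"))
```

With the steps here, `find_jars("/home/software/apache-hive-3.1.2-bin/lib", "guava")` should list only guava-27.0-jre.jar.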

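- Create the database in MySQL

# Log in to MySQL

mysql -uroot -p

# List the databases

show databases;

# Drop the previous hive database

drop database hive;

# Create the database; it must use latin1

create database hive character set latin1;

# Go to this directory

cd /home/software/apache-hive-3.1.2-bin/bin

# Initialize the schema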

[root@master bin]# schematool -dbType mysql -initSchema

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/home/software/apache-hive-3.1.2-bin/lib/log4j-slf4j-impl-2.10.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/home/software/hadoop-3.2.1/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Metastore connection URL: jdbc:mysql://192.168.128.78:3306/hive?createDatabaseIfNotExist=true

Metastore Connection Driver : com.mysql.cj.jdbc.Driver

Metastore connection User: root

Starting metastore schema initialization to 3.1.0

Initialization script hive-schema-3.1.0.mysql.sql

Initialization script completed

schemaTool completed
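
If the initialization is run from a script, the captured output can be checked for the two lines that matter in the log above. This is a sketch that relies only on the wording of that log; `parse_schematool_output` is a hypothetical name.

```python
import re


def parse_schematool_output(output):
    """Extract the target schema version and whether the run reported completion."""
    match = re.search(r"Starting metastore schema initialization to (\S+)", output)
    return {
        "schema_version": match.group(1) if match else None,
        "completed": "schemaTool completed" in output,
    }
```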

- Check the version

[root@master bin]# hive --version

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/home/software/apache-hive-3.1.2-bin/lib/log4j-slf4j-impl-2.10.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/home/software/hadoop-3.2.1/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Hive 3.1.2

Git git://HW13934/Users/gates/tmp/hive-branch-3.1/hive -r 8190d2be7b7165effa62bd21b7d60ef81fb0e4af

Compiled by gates on Thu Aug 22 15:01:18 PDT 2019

From source with checksum 0492c08f784b188c349f6afb1d8d9847

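- Verify

# Session 1

[root@master conf]# hive

hive>

# Session 2

# Start the metastore service in the background (port 9083)

nohup hive --service metastore &

# Follow the log

tail -200f nohup.out

# Check whether the Java services have started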

netstat -nltp |grep java
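
The same check can be made from Python, for instance before submitting a Spark job that depends on the metastore. This is a minimal sketch with a hypothetical helper name, `port_open`; it only tests whether a TCP connection can be established, not whether the service behind the port is healthy.

```python
import socket


def port_open(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False
```

With the metastore started as above, `port_open("localhost", 9083)` should return `True`.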

# Session 1

hive>show databases;

hive>create database bigdata;

# Session 2

# Change the IP in test1.py to localhost and run it; the database created through Hive in session 1 should be listed

[root@master software]# spark-submit test1.py

+------------+

|databaseName|

+------------+

|     bigdata|

|     default|

+------------+


- test1.py

from pyspark.sql import SparkSession

if __name__ == '__main__':
    # 0. Build the SparkSession, the entry point of the execution environment
    spark = (
        SparkSession.builder
        .appName("test")
        .master("local[*]")
        .config("hive.metastore.uris", "thrift://localhost:9083")
        .enableHiveSupport()
        .getOrCreate()
    )
    sc = spark.sparkContext

    # List the databases known to the Hive metastore
    spark.sql("show databases").show()

- Cause of the error: Hadoop was not started

[root@master lib]# hive

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/home/software/apache-hive-3.1.2-bin/lib/log4j-slf4j-impl-2.10.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/home/software/hadoop-3.2.1/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Hive Session ID = 01e6fede-26c8-4736-b194-e778a3c61464

Logging initialized using configuration in jar:file:/home/software/apache-hive-3.1.2-bin/lib/hive-common-3.1.2.jar!/hive-log4j2.properties Async: true

Exception in thread "main" java.lang.RuntimeException: java.net.ConnectException: Call From hadoop01/192.168.128.78 to hadoop01:9000 failed on connection exception: java.net.ConnectException: Connection refused; For more details see: http://wiki.apache.org/hadoop/ConnectionRefused

at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:651)

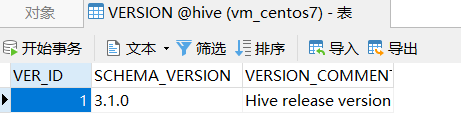
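
When this error appears in a longer log, the address Hive failed to reach (here hadoop01:9000, presumably the NameNode address) can be extracted to see which service is down. This is a sketch based only on the wording of the message above; `refused_endpoint` is a hypothetical name.

```python
import re


def refused_endpoint(log_text):
    """Return (host, port) from a Hadoop 'Call From ... to host:port failed' message."""
    match = re.search(
        r"Call From \S+ to ([^\s:]+):(\d+) failed on connection exception", log_text
    )
    return (match.group(1), int(match.group(2))) if match else None
```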

- Error when starting Hive

MetaException(message:Hive Schema version 3.1.0 does not match metastore's schema version 1.2.0 Metastore is not upgraded or corrupt)

Caused by: MetaException(message:Hive Schema version 3.1.0 does not match metastore's schema version 1.2.0 Metastore is not upgraded or corrupt)

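- Solution: connect to the metastore database with Navicat, change the schema version (stored in the VERSION table) to the version Hive expects, and save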
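
To know which value to set, the two versions can be read out of the exception text. The sketch below assumes the message wording shown in this section; `schema_versions` is a hypothetical name, and the apostrophe is matched loosely because copied logs sometimes contain a typographic one.

```python
import re

_MISMATCH = re.compile(
    r"Hive Schema version (\S+) does not match metastore.s schema version (\S+)"
)


def schema_versions(message):
    """Return (expected_by_hive, found_in_metastore), or None if the text does not match."""
    match = _MISMATCH.search(message)
    return (match.group(1), match.group(2)) if match else None
```

For the error in this section, the first value (3.1.0) is what Hive expects and the second (1.2.0) is what the metastore database currently records.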

- Test after Spark, Hive, and Hadoop are connected

[root@master software]# spark-shell

/home/software/spark-2.3.4-bin-hadoop2.7/conf/spark-env.sh: line 2: /usr/local/hadoop/bin/hadoop: No such file or directory

2027-12-01 10:09:00 WARN NativeCodeLoader:62 - Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

Spark context Web UI available at http://hadoop01:4040

Spark context available as 'sc' (master = local[*], app id = local-1827626954358).

Spark session available as 'spark'.

Welcome to
      ____              __
     / __/__  ___ _____/ /__
    _\ \/ _ \/ _ `/ __/  '_/
   /___/ .__/\_,_/_/ /_/\_\   version 2.3.4
      /_/

Using Scala version 2.11.8 (Java HotSpot(TM) 64-Bit Server VM, Java 1.8.0_181)

Type in expressions to have them evaluated.

Type :help for more information.

scala>
