# Upload the archive to /home/software

# Set permissions

chmod 755 hadoop-3.2.1.tar.gz

# Extract

tar -zxvf hadoop-3.2.1.tar.gz

# Configure environment variables

vim /etc/profile

export HADOOP_HOME=/home/software/hadoop-3.2.1

export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

export HADOOP_CONF_DIR=/home/software/hadoop-3.2.1/etc/hadoop

export PATH=$PATH:$HADOOP_CONF_DIR/bin

export YARN_CONF_DIR=/home/software/hadoop-3.2.1/etc/hadoop

# Apply the changes

source /etc/profile
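After sourcing the profile, it can help to confirm that the variables actually reached the current shell. A minimal sketch follows; `check_hadoop_env` is a hypothetical helper name, not something Hadoop ships.

```shell
# check_hadoop_env: report which of the variables exported above are set.
# Hypothetical helper for illustration only.
check_hadoop_env() {
    missing=0
    for name in HADOOP_HOME HADOOP_CONF_DIR YARN_CONF_DIR; do
        # eval reads the variable whose name is stored in $name
        eval "value=\${$name:-}"
        if [ -z "$value" ]; then
            echo "unset: $name"
            missing=1
        else
            echo "ok: $name=$value"
        fi
    done
    # non-zero exit status when at least one variable is missing
    return "$missing"
}
```

Running `check_hadoop_env` right after `source /etc/profile` should list all three variables as `ok`; `hadoop version` is a further check that the `bin` directory is on `PATH`.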

# Go to the configuration directory

cd /home/software/hadoop-3.2.1/etc/hadoop

# Delete the default files

rm -rf core-site.xml hdfs-site.xml mapred-site.xml yarn-site.xml

# Recreate the four files with the contents below

# core-site.xml

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://hadoop01:9000</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/home/software/hadoop-3.2.1/tmp</value>

</property>

<property>

<name>hadoop.proxyuser.root.hosts</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.root.groups</name>

<value>*</value>

</property>

</configuration>

# hdfs-site.xml

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>hadoop01:50070</value>

</property>

<property>

<name>dfs.webhdfs.enabled</name>

<value>true</value>

</property>

<property>

<name>dfs.support.append</name>

<value>true</value>

</property>

<property>

<name>dfs.webhdfs.broken.enabled</name>

<value>true</value>

</property>

<property>

<name>dfs.permissions.enabled</name>

<value>false</value>

</property>

</configuration>
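To double-check what a site file actually contains after editing, the value can be pulled out with a few lines of awk. This is only a sketch: `get_site_property` is a hypothetical helper, and it assumes every `<name>` and `<value>` element sits on its own line, as in the files shown here.

```shell
# get_site_property FILE NAME
# Print the <value> that follows <name>NAME</name> in a Hadoop *-site.xml file.
# Hypothetical helper; assumes one element per line.
get_site_property() {
    awk -v key="$2" '
        # remember that the requested property name was seen
        index($0, "<name>" key "</name>") { found = 1; next }
        # the next <value> line belongs to that property
        found && /<value>/ {
            sub(/.*<value>/, "")
            sub(/<\/value>.*/, "")
            print
            exit
        }
    ' "$1"
}
```

For example, `get_site_property hdfs-site.xml dfs.replication` reads the replication factor from the file in the current directory. Once Hadoop is on the `PATH`, `hdfs getconf -confKey dfs.replication` reports the value the tools actually resolve.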

# mapred-site.xml

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

</configuration>

# yarn-site.xml

<?xml version="1.0"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<configuration>

<property>

<name>yarn.resourcemanager.hostname</name>

<value>hadoop01</value>

</property>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<!-- Enable YARN log aggregation and keep logs for seven days -->

<property>

<name>yarn.log-aggregation-enable</name>

<value>true</value>

</property>

<property>

<name>yarn.log-aggregation.retain-seconds</name>

<value>604800</value>

</property>

<property>

<name>yarn.application.classpath</name>

<value>/home/software/hadoop-3.2.1/etc/hadoop:/home/software/hadoop-3.2.1/share/hadoop/common/lib/*:/home/software/hadoop-3.2.1/share/hadoop/common/*:/home/software/hadoop-3.2.1/share/hadoop/hdfs:/home/software/hadoop-3.2.1/share/hadoop/hdfs/lib/*:/home/software/hadoop-3.2.1/share/hadoop/hdfs/*:/home/software/hadoop-3.2.1/share/hadoop/mapreduce/lib/*:/home/software/hadoop-3.2.1/share/hadoop/mapreduce/*:/home/software/hadoop-3.2.1/share/hadoop/yarn:/home/software/hadoop-3.2.1/share/hadoop/yarn/lib/*:/home/software/hadoop-3.2.1/share/hadoop/yarn/*</value>

</property>

</configuration>
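The long `yarn.application.classpath` value is easy to mistype by hand. The sketch below rebuilds the same string from the install directory; `build_yarn_classpath` is a hypothetical helper, and the directory list simply mirrors the value used in this yarn-site.xml.

```shell
# build_yarn_classpath HADOOP_HOME
# Print the colon-separated classpath used for yarn.application.classpath.
# Hypothetical helper; the entries mirror the value in yarn-site.xml.
build_yarn_classpath() {
    home=$1
    share="$home/share/hadoop"
    # the quoted * stays literal: the JVM expands it, not the shell
    classpath="$home/etc/hadoop"
    classpath="$classpath:$share/common/lib/*:$share/common/*"
    classpath="$classpath:$share/hdfs:$share/hdfs/lib/*:$share/hdfs/*"
    classpath="$classpath:$share/mapreduce/lib/*:$share/mapreduce/*"
    classpath="$classpath:$share/yarn:$share/yarn/lib/*:$share/yarn/*"
    printf '%s\n' "$classpath"
}
```

Calling `build_yarn_classpath /home/software/hadoop-3.2.1` prints a string that can be pasted into the `<value>` element. On a working install, `hadoop classpath` prints the classpath Hadoop itself computes, which is a useful cross-check.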

# Map the hostname hadoop01 to this machine's IP address

[root@master sbin]# vim /etc/hosts

192.168.128.78 hadoop01
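Since every config file refers to `hadoop01`, a wrong or missing hosts entry shows up later as confusing startup errors. A small sketch for checking the mapping; `host_ip` is a hypothetical helper that reads a hosts-format file.

```shell
# host_ip HOSTS_FILE NAME
# Print the IP address that a hosts-format file maps NAME to.
# Hypothetical helper for illustration.
host_ip() {
    awk -v name="$2" '
        /^[[:space:]]*#/ { next }      # skip comment lines
        {
            # field 1 is the address, the remaining fields are host names
            for (i = 2; i <= NF; i++) {
                if ($i == name) { print $1; exit }
            }
        }
    ' "$1"
}
```

`host_ip /etc/hosts hadoop01` should print `192.168.128.78` on this machine. `getent hosts hadoop01` performs the lookup through the system resolver instead of reading the file directly.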

# Create the data directory

cd /home/software/hadoop-3.2.1

mkdir -p data

[root@localhost software]# start-dfs.sh

Starting namenodes on [hadoop01]

ERROR: Attempting to operate on hdfs namenode as root

ERROR: but there is no HDFS_NAMENODE_USER defined. Aborting operation.

Starting datanodes

ERROR: Attempting to operate on hdfs datanode as root

ERROR: but there is no HDFS_DATANODE_USER defined. Aborting operation.

Starting secondary namenodes [hadoop01]

ERROR: Attempting to operate on hdfs secondarynamenode as root

ERROR: but there is no HDFS_SECONDARYNAMENODE_USER defined. Aborting operation.

# Fix: declare in /etc/profile which user runs each daemon

vim /etc/profile

export HDFS_NAMENODE_USER=root

export HDFS_DATANODE_USER=root

export HDFS_SECONDARYNAMENODE_USER=root

export YARN_RESOURCEMANAGER_USER=root

export YARN_NODEMANAGER_USER=root

source /etc/profile
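If the profile is edited by script rather than in vim, the exports should not be appended twice on a rerun. A sketch under that assumption; `append_once` and `add_daemon_users` are hypothetical helper names, and the target file is passed in so the function can be tried on a scratch file first.

```shell
# append_once FILE LINE
# Append LINE to FILE unless an identical line is already present.
append_once() {
    grep -qxF -- "$2" "$1" 2>/dev/null || printf '%s\n' "$2" >> "$1"
}

# add_daemon_users FILE
# Add the five *_USER exports used above, each at most once.
add_daemon_users() {
    for var in HDFS_NAMENODE_USER HDFS_DATANODE_USER HDFS_SECONDARYNAMENODE_USER \
               YARN_RESOURCEMANAGER_USER YARN_NODEMANAGER_USER; do
        append_once "$1" "export $var=root"
    done
}
```

`add_daemon_users /etc/profile` followed by `source /etc/profile` would reproduce the manual edit. Running the daemons as root mirrors this walkthrough and is a choice made here for simplicity.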

[root@localhost software]# start-dfs.sh

Starting namenodes on [hadoop01]

Last login: Mon Dec 4 03:07:13 CST 2023 on pts/0

hadoop01: Permission denied (publickey,gssapi-keyex,gssapi-with-mic,password).

Starting datanodes

Last login: Mon Dec 4 03:08:55 CST 2023 on pts/0

localhost: Permission denied (publickey,gssapi-keyex,gssapi-with-mic,password).

Starting secondary namenodes [hadoop01]

Last login: Mon Dec 4 03:08:55 CST 2023 on pts/0

hadoop01: Permission denied (publickey,gssapi-keyex,gssapi-with-mic,password).

# Set up passwordless SSH from this host to itself; press Enter at every prompt

ssh-keygen -t rsa

# Go to the following directory

[root@hadoop01 ~]# cd /root/.ssh/

[root@hadoop01 .ssh]# ls

id_rsa id_rsa.pub known_hosts

# Append the public key to the authorized_keys file

cat id_rsa.pub >> authorized_keys

# Change the permissions of authorized_keys to 600

chmod 600 authorized_keys
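The append and chmod steps can be wrapped so that repeating them does not duplicate the key. This is a sketch only; `authorize_key` is a hypothetical helper, and it assumes the public key file holds a single line, as `id_rsa.pub` does.

```shell
# authorize_key PUBKEY_FILE AUTHORIZED_KEYS_FILE
# Add the public key to authorized_keys once and restrict the file to mode 600.
# Hypothetical helper; assumes PUBKEY_FILE contains a single line.
authorize_key() {
    touch "$2"
    chmod 600 "$2"
    # append only when the exact key line is not already present
    grep -qxF -- "$(cat "$1")" "$2" || cat "$1" >> "$2"
}
```

Here that would be `authorize_key /root/.ssh/id_rsa.pub /root/.ssh/authorized_keys`. Afterwards `ssh hadoop01 true` should return without asking for a password, which is what `start-dfs.sh` needs.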

[root@hadoop01 .ssh]# start-dfs.sh

Starting namenodes on [hadoop01]

Last login: Mon Dec 4 03:19:04 CST 2023 from 192.168.128.1 on pts/0

hadoop01: ERROR: JAVA_HOME is not set and could not be found.

Starting datanodes

Last login: Mon Dec 4 03:21:22 CST 2023 on pts/0

localhost: ERROR: JAVA_HOME is not set and could not be found.

Starting secondary namenodes [hadoop01]

Last login: Mon Dec 4 03:21:22 CST 2023 on pts/0

hadoop01: ERROR: JAVA_HOME is not set and could not be found.

# Switch to the [hadoop]/etc/hadoop directory

cd /home/software/hadoop-3.2.1/etc/hadoop

# Edit hadoop-env.sh

vim hadoop-env.sh

# Set the JAVA_HOME and HADOOP_CONF_DIR paths

export JAVA_HOME=/home/software/jdk1.8.0_181

export HADOOP_CONF_DIR=/home/software/hadoop-3.2.1/etc/hadoop

# Reload so the change takes effect

source hadoop-env.sh
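When the JDK location is not known offhand, it can usually be derived from the `java` binary by resolving symlinks and dropping the trailing `bin/java`. A sketch, assuming GNU `readlink -f` is available; `java_home_from_binary` is a hypothetical helper.

```shell
# java_home_from_binary JAVA_BIN
# Resolve symlinks on the java executable and print the directory two levels up.
# Hypothetical helper; relies on readlink -f from GNU coreutils.
java_home_from_binary() {
    real=$(readlink -f "$1") || return 1
    # strip the trailing /bin/java
    dirname "$(dirname "$real")"
}
```

Usage would be `java_home_from_binary "$(command -v java)"`. On some JDK 8 layouts the resolved binary lives under `jre/bin`, so the printed path may need one more `dirname` before it matches the directory used for `JAVA_HOME` above.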

# Start HDFS

[root@hadoop01 hadoop]# start-dfs.sh

Starting namenodes on [hadoop01]

Last login: Mon Dec 4 03:21:23 CST 2023 on pts/0

Starting datanodes

Last login: Mon Dec 4 04:02:15 CST 2023 on pts/0

Starting secondary namenodes [hadoop01]

Last login: Mon Dec 4 04:02:17 CST 2023 on pts/0

# Verify that the daemons are running

[root@hadoop01 hadoop]# jps

2083 NameNode

2451 SecondaryNameNode

2567 Jps

2218 DataNode
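Reading the `jps` list by eye works for one node, but the same check can be scripted. A minimal sketch; `missing_daemons` is a hypothetical helper that reads `jps` output on standard input and names any of the three HDFS daemons it does not find.

```shell
# missing_daemons: read `jps` output on stdin and print each expected
# HDFS daemon that is not listed. Hypothetical helper.
missing_daemons() {
    output=$(cat)
    status=0
    for daemon in NameNode DataNode SecondaryNameNode; do
        # compare the second column exactly, so NameNode does not
        # match SecondaryNameNode
        if ! printf '%s\n' "$output" |
            awk -v d="$daemon" '$2 == d { hit = 1 } END { exit !hit }'; then
            echo "missing: $daemon"
            status=1
        fi
    done
    return "$status"
}
```

`jps | missing_daemons` prints nothing and exits with status 0 when all three processes are up.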

# Verify: the three ports held by Java processes

[root@master software]# netstat -nltp

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 192.168.128.78:9000 0.0.0.0:* LISTEN 3090/java

tcp 0 0 0.0.0.0:9870 0.0.0.0:* LISTEN 3090/java

tcp 0 0 192.168.128.78:50070 0.0.0.0:* LISTEN 3450/java

# Stop HDFS

[root@hadoop01 hadoop]# stop-dfs.sh

Stopping namenodes on [hadoop01]

Last login: Mon Dec 4 04:02:24 CST 2023 on pts/0

Stopping datanodes

Last login: Mon Dec 4 04:08:15 CST 2023 on pts/0

Stopping secondary namenodes [hadoop01]

Last login: Mon Dec 4 04:08:17 CST 2023 on pts/0

# Open port 9870 in the firewall

[root@hadoop01 hadoop]# firewall-cmd --zone=public --add-port=9870/tcp --permanent

success

[root@hadoop01 hadoop]# firewall-cmd --reload

success

# Test from a cmd window on Windows

telnet 192.168.128.78 9870

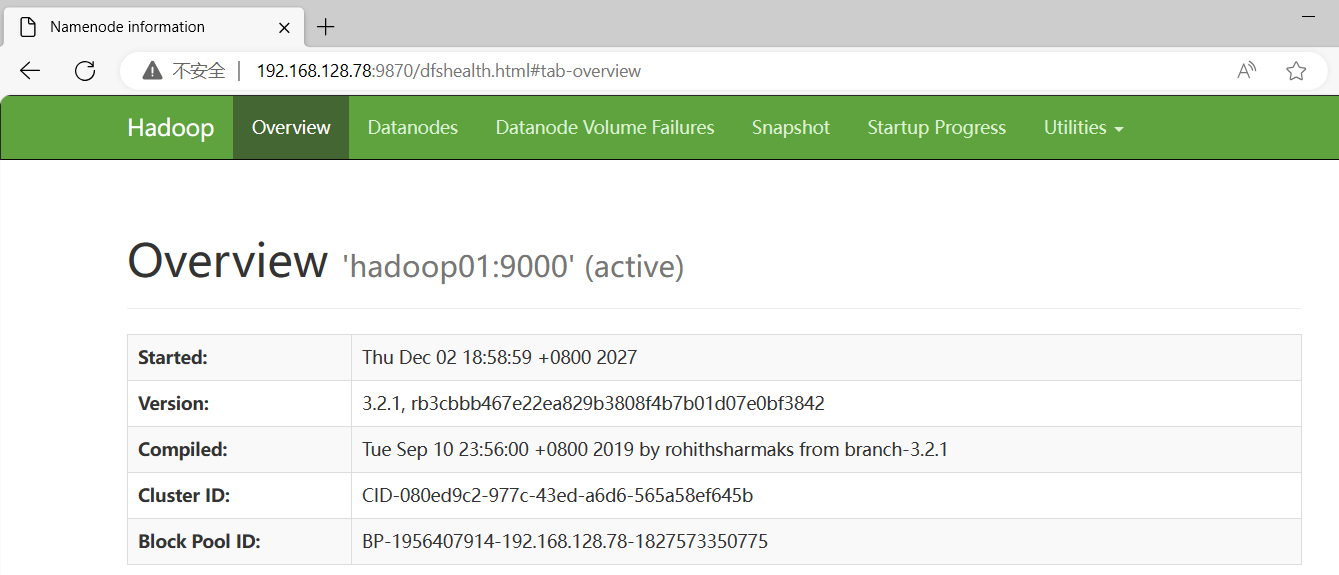

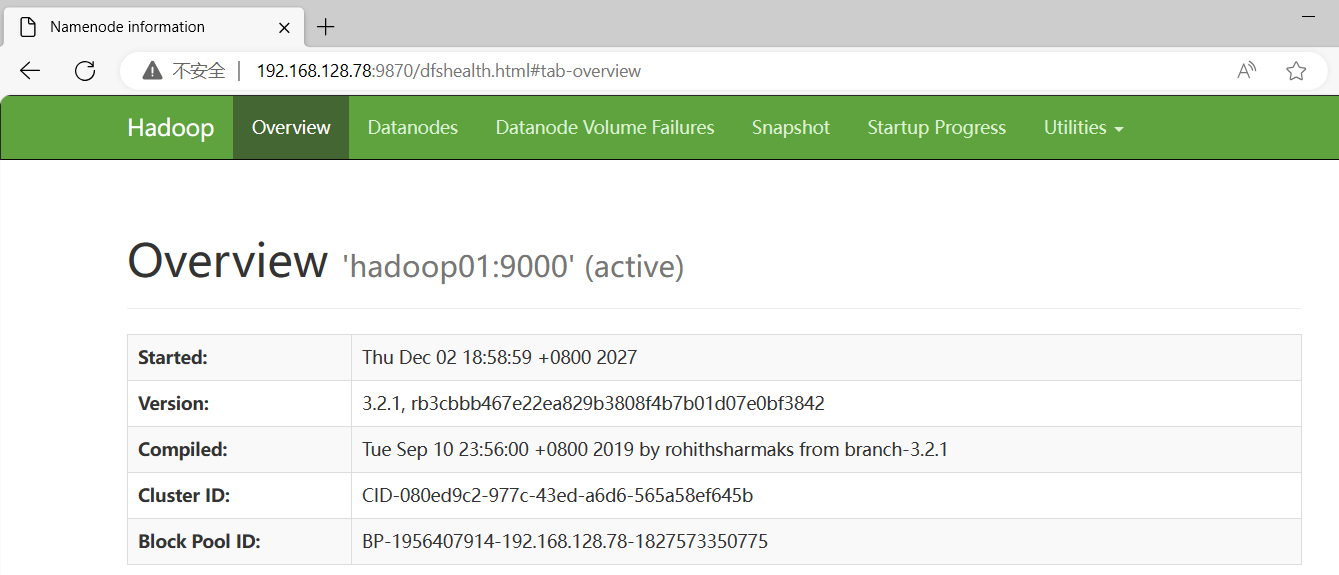
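The same reachability test can be run from another Linux machine without telnet, using bash's `/dev/tcp` pseudo-device. A sketch, assuming bash and the coreutils `timeout` command are installed; `port_open` is a hypothetical helper.

```shell
# port_open HOST PORT
# Succeed when a TCP connection to HOST:PORT can be opened within 3 seconds.
# Hypothetical helper; relies on bash's /dev/tcp and coreutils timeout.
port_open() {
    timeout 3 bash -c 'exec 3<>"/dev/tcp/$1/$2"' _ "$1" "$2" 2>/dev/null
}
```

For example, `port_open 192.168.128.78 9870 && echo reachable` checks the NameNode web port opened above. A failure can mean either a closed firewall port or a stopped NameNode, so it is worth checking `jps` on the server as well.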

# Open the web UI in a browser

http://192.168.128.78:9870/

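# Format the NameNode (this erases existing HDFS metadata; run it only on a fresh install or a deliberate reset)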

hdfs namenode -format
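Because formatting discards the existing namespace, a guard that formats only when no metadata exists yet is a reasonable precaution. The sketch below assumes the default `dfs.namenode.name.dir`, which resolves to `dfs/name` under `hadoop.tmp.dir`; `namenode_formatted` is a hypothetical helper.

```shell
# namenode_formatted TMP_DIR
# Succeed when the NameNode metadata directory under TMP_DIR already
# contains a VERSION file, i.e. the NameNode has been formatted before.
# Hypothetical helper; assumes the default dfs.namenode.name.dir layout.
namenode_formatted() {
    [ -f "$1/dfs/name/current/VERSION" ]
}
```

With the `hadoop.tmp.dir` set in core-site.xml, the guarded call would be `namenode_formatted /home/software/hadoop-3.2.1/tmp || hdfs namenode -format`.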

# View the logs

cd /home/software/hadoop-3.2.1/logs
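Each daemon writes its own file in this directory, named `hadoop-<user>-<daemon>-<host>.log`. A small sketch for pulling the latest error lines out of the newest one; `recent_errors` is a hypothetical helper.

```shell
# recent_errors LOG_DIR DAEMON [COUNT]
# Print the last COUNT (default 20) ERROR or FATAL lines from the newest
# hadoop-<user>-<DAEMON>-<host>.log file in LOG_DIR. Hypothetical helper.
recent_errors() {
    # ls -t lists the most recently modified file first
    log=$(ls -t "$1"/hadoop-*-"$2"-*.log 2>/dev/null | head -n 1)
    [ -n "$log" ] || { echo "no $2 log in $1" >&2; return 1; }
    grep -E ' (ERROR|FATAL) ' "$log" | tail -n "${3:-20}"
}
```

For example, `recent_errors /home/software/hadoop-3.2.1/logs namenode` shows why the NameNode refused to start; `datanode` and `secondarynamenode` work the same way.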

# If startup fails, check whether the port is already in use
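One way to run that check is to filter `netstat -nltp` output for the port in question. A sketch that reads the output on standard input; `listeners_on` is a hypothetical helper.

```shell
# listeners_on PORT
# Read `netstat -nltp` output on stdin and print the PID/program name of
# every socket listening on PORT. Hypothetical helper.
listeners_on() {
    awk -v port="$1" '
        $6 == "LISTEN" {
            # the port is the last colon-separated part of the local address
            n = split($4, parts, ":")
            if (parts[n] == port) print $7
        }
    '
}
```

`netstat -nltp | listeners_on 9870` prints something like `3090/java` when the NameNode holds the port, and nothing when the port is free. If a different program shows up, it has to be stopped or the Hadoop port changed before the daemon can start.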
