Installing Spark on Linux

- MySQL 8 is already installed

# Check the MySQL service status

service mysqld status
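`service mysqld status` only tells you whether the service is running. Before trying Navicat or Spark, it can also help to confirm that the MySQL port accepts TCP connections from the client machine. Below is a minimal sketch that assumes MySQL listens on its default port 3306. `port_is_open` is a hypothetical helper, not part of any tool used in this article.

```python
import socket


def port_is_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        # create_connection resolves the host and tries each address in turn
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, host unreachable, or timed out: all mean "not reachable"
        return False
```

For example, if `port_is_open("192.168.128.78", 3306)` returns `False` when run from another machine, look at the firewall or MySQL's bind address before looking at Spark.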


Connect to MySQL with Navicat


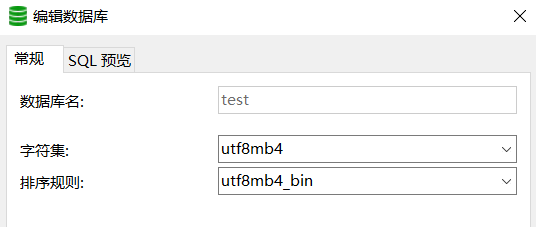

Create a database


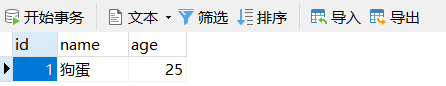

Create the table student and insert some data
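In the article, the table is created through Navicat's GUI. The sketch below is a rough scripted equivalent. It uses Python's built-in `sqlite3` module as an in-memory stand-in for MySQL, an assumption made only so the example runs anywhere. The column names and types are inferred from the `printSchema()` output and the sample row that appear later in this article. `create_student_table` is a hypothetical helper.

```python
import sqlite3

# Plain DDL that both MySQL 8 and SQLite accept. Column names and types are
# inferred from the printSchema() output (id: integer, name: string, age: integer).
CREATE_STUDENT = """
CREATE TABLE student (
    id   INT PRIMARY KEY,
    name VARCHAR(50),
    age  INT
)
"""


def create_student_table(conn):
    """Create the student table and insert the sample row shown in the job output."""
    with conn:  # commit on success, roll back on error
        conn.execute(CREATE_STUDENT)
        # sqlite3 uses "?" placeholders; Python MySQL drivers such as PyMySQL use "%s"
        conn.execute("INSERT INTO student (id, name, age) VALUES (?, ?, ?)", (1, "狗蛋", 25))


# In-memory SQLite database standing in for the MySQL database (assumption)
conn = sqlite3.connect(":memory:")
create_student_table(conn)
```

After this runs, `conn.execute("SELECT id, name, age FROM student").fetchall()` returns the single inserted row.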

- Error: java.sql.SQLException: No suitable driver

# Check the MySQL version

SELECT VERSION()

# Put the MySQL JDBC driver JAR (mysql-connector-java) into the following directories

/home/software/spark-2.3.4-bin-hadoop2.7/jars

/home/software/anaconda3/lib/python3.6/site-packages/pyspark/jars

# Check whether other versions of the driver JAR exist, and delete them

[root@master software]# find / -name 'mysql-connector-java-*.jar'

/home/software/anaconda3/lib/python3.6/site-packages/pyspark/jars/mysql-connector-java-8.0.30.jar

/home/software/spark-2.3.4-bin-hadoop2.7/jars/mysql-connector-java-8.0.30.jar
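When several connector versions sit on the classpath, it is hard to tell which one Spark actually loads. The sketch below lists `mysql-connector-java-*.jar` files per directory and maps a version to the driver class it provides: Connector/J 8.x provides `com.mysql.cj.jdbc.Driver`, and the older 5.1 line provides `com.mysql.jdbc.Driver`. The directory list and the helper names are assumptions. Newer Connector/J releases are published as `mysql-connector-j-*.jar`, which this pattern would not match. Spark's JDBC source also accepts an explicit `driver` option, e.g. `option("driver", "com.mysql.cj.jdbc.Driver")`, which is another common fix for `No suitable driver`.

```python
import glob
import os
import re

# Extracts the version from names like mysql-connector-java-8.0.30.jar
_JAR_RE = re.compile(r"mysql-connector-java-(\d+)\.(\d+)\.(\d+)\.jar$")


def find_connector_jars(jar_dirs):
    """Return {jar_dir: [(version_tuple, path), ...]} for every MySQL connector JAR."""
    found = {}
    for d in jar_dirs:
        for path in sorted(glob.glob(os.path.join(d, "mysql-connector-java-*.jar"))):
            m = _JAR_RE.search(os.path.basename(path))
            if m:
                version = tuple(int(x) for x in m.groups())
                found.setdefault(d, []).append((version, path))
    return found


def driver_class(version):
    """Map a Connector/J version tuple to the JDBC driver class it provides."""
    return "com.mysql.cj.jdbc.Driver" if version[0] >= 8 else "com.mysql.jdbc.Driver"


def check_jar_dirs(jar_dirs):
    """Warn about, and return, directories that hold more than one connector version."""
    problems = []
    for d, jars in find_connector_jars(jar_dirs).items():
        versions = sorted({v for v, _ in jars})
        if len(versions) > 1:
            problems.append(d)
            print("WARNING: %s has several connector versions: %s" % (d, versions))
    return problems
```

Usage: `check_jar_dirs(["/home/software/spark-2.3.4-bin-hadoop2.7/jars", "/home/software/anaconda3/lib/python3.6/site-packages/pyspark/jars"])`.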

- Error: Caused by: com.mysql.cj.exceptions.UnableToConnectException: Public Key Retrieval is not allowed

# Allow public key retrieval with allowPublicKeyRetrieval=true

jdbc:mysql://hostname:port/databasename?allowPublicKeyRetrieval=true&useSSL=false
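Query-string properties are easy to mistype when several are combined. The small sketch below assembles the URL from keyword arguments. `mysql_jdbc_url` is a hypothetical helper, and the host and database in the usage example are the ones used elsewhere in this article. Fetching the server's public key without verification is generally considered unsafe on untrusted networks, so `allowPublicKeyRetrieval=true` is best kept to test setups like this one.

```python
from urllib.parse import urlencode


def mysql_jdbc_url(host, port, database, **params):
    """Build a MySQL JDBC URL; keyword arguments become query-string properties."""
    # JDBC properties expect lowercase "true"/"false", so convert Python booleans
    props = {k: (str(v).lower() if isinstance(v, bool) else v) for k, v in params.items()}
    url = "jdbc:mysql://%s:%d/%s" % (host, port, database)
    return url + ("?" + urlencode(props) if props else "")
```

For example, `mysql_jdbc_url("192.168.128.78", 3306, "test", allowPublicKeyRetrieval=True, useSSL=False)` produces `jdbc:mysql://192.168.128.78:3306/test?allowPublicKeyRetrieval=true&useSSL=false`.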

- Install JDK 8

# Upload the archive to the server

# Set permissions

chmod 755 jdk-8u181-linux-x64.tar.gz

# Extract

tar -zxvf jdk-8u181-linux-x64.tar.gz

# Configure environment variables

vim /etc/profile

# Add the following lines

export JAVA_HOME=/home/software/jdk1.8.0_181

export PATH=$PATH:$JAVA_HOME/bin

# Apply the configuration

source /etc/profile

# Verify

java -version

# Check the JAVA_HOME path

[root@master software]# env |grep JAVA

JAVA_HOME=/home/software/jdk1.8.0_181
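The checks above (`env | grep JAVA`, `java -version`) can be scripted. The sketch below assumes a Linux layout where the binary lives at `$JAVA_HOME/bin/java`, and the helper names are hypothetical. Java 8 reports its version as `1.8.x`, while Java 9 and later drop the `1.` prefix.

```python
import os
import re

# Matches the version in the first line of `java -version`, e.g. java version "1.8.0_181"
_VERSION_RE = re.compile(r'version "([^"]+)"')


def parse_java_version(output):
    """Return the quoted version string from `java -version` output, or None."""
    m = _VERSION_RE.search(output)
    return m.group(1) if m else None


def is_java8(version):
    """Java 8 reports itself as 1.8.x; Java 9+ uses 9, 11, 17, ..."""
    return version is not None and version.startswith("1.8")


def check_java_home(env=None):
    """Return a list of problems with JAVA_HOME (an empty list means it looks fine)."""
    env = os.environ if env is None else env
    home = env.get("JAVA_HOME")
    if not home:
        return ["JAVA_HOME is not set"]
    java_bin = os.path.join(home, "bin", "java")
    if not os.access(java_bin, os.X_OK):
        return ["%s is missing or not executable" % java_bin]
    return []
```

`java -version` writes to stderr. You can capture its output with `subprocess.run(["java", "-version"], stderr=subprocess.PIPE, universal_newlines=True).stderr` and pass it to `parse_java_version`.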

- Error: JAVA_HOME is not set

vim /etc/environment

# Add the following line

JAVA_HOME="/home/software/jdk1.8.0_181"

- Install Spark

# Upload the archive to the server

# Set permissions

chmod 755 spark-2.3.4-bin-hadoop2.7.tgz

# Extract

tar -zxvf spark-2.3.4-bin-hadoop2.7.tgz

# Configure environment variables

vim /etc/profile

# Add the following lines

export SPARK_HOME=/home/software/spark-2.3.4-bin-hadoop2.7

export PATH=$PATH:$SPARK_HOME/bin:$SPARK_HOME/sbin

# Apply the configuration

source /etc/profile
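`source /etc/profile` only works as intended if `JAVA_HOME` and `SPARK_HOME` are exported before the `PATH` lines that reference them. The small sketch below emulates plain `export NAME=value` lines in Python to show the resulting `PATH`. It is a deliberately limited toy (no quoting, no command substitution), not a shell, and `apply_exports` is a hypothetical name.

```python
import re
from string import Template

# Matches simple lines such as: export JAVA_HOME=/home/software/jdk1.8.0_181
_EXPORT_RE = re.compile(r"^\s*export\s+([A-Za-z_][A-Za-z0-9_]*)=(.*)$")


def apply_exports(lines, env):
    """Apply simple `export NAME=value` lines to a copy of env, expanding $VARS.

    Only $NAME / ${NAME} references are expanded, in file order, so a variable
    used before it is exported stays unexpanded (as a literal "$NAME").
    """
    env = dict(env)
    for line in lines:
        m = _EXPORT_RE.match(line)
        if m:
            name, value = m.groups()
            # safe_substitute leaves unknown variables untouched instead of raising
            env[name] = Template(value.strip()).safe_substitute(env)
    return env
```

Feeding it the four `export` lines from this article with a starting `PATH` of `/usr/bin` yields a `PATH` that ends with the JDK `bin` directory, followed by Spark's `bin` and `sbin` directories.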

# Verify

[root@master software]# pyspark

/home/software/spark-2.3.4-bin-hadoop2.7/conf/spark-env.sh: line 2: /usr/local/hadoop/bin/hadoop: No such file or directory

Python 2.7.5 (default, Nov 16 2020, 22:23:17)

[GCC 4.8.5 20150623 (Red Hat 4.8.5-44)] on linux2

Type "help", "copyright", "credits" or "license" for more information.

2027-11-29 14:10:01 WARN NativeCodeLoader:62 - Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

Welcome to

____ __

/ __/__ ___ _____/ /__

_\ \/ _ \/ _ `/ __/ '_/

/__ / .__/\_,_/_/ /_/\_\ version 2.3.4

/_/

Using Python version 2.7.5 (default, Nov 16 2020 22:23:17)

SparkSession available as 'spark'.

# Exit

>>> exit()

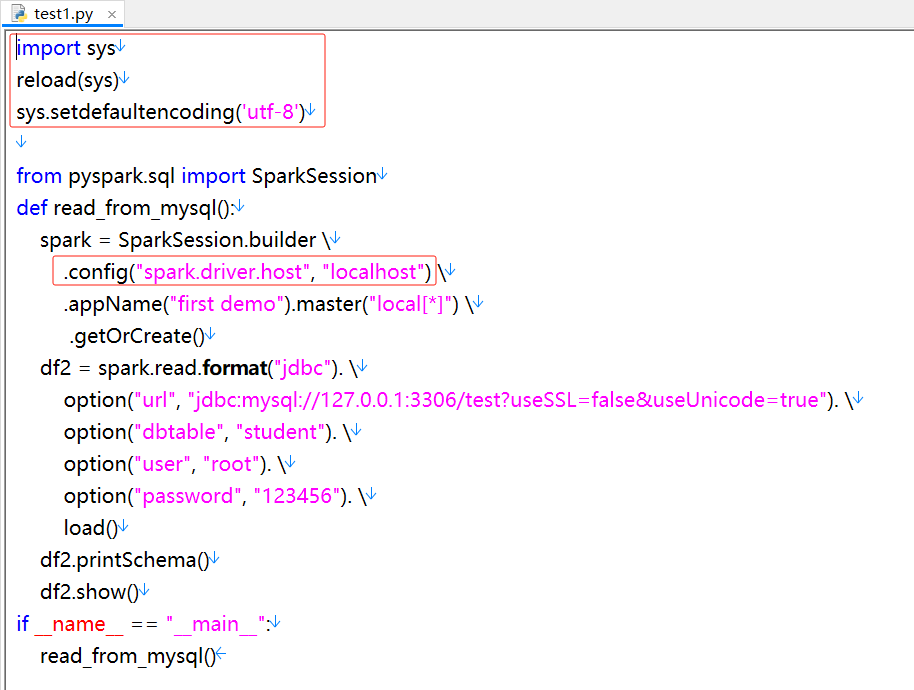

- The script runs successfully locally and returns the rows from the database

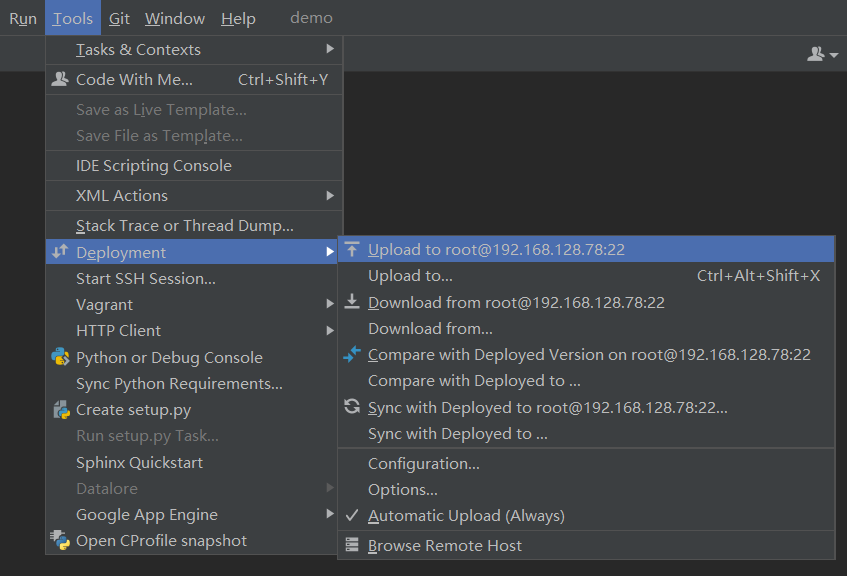

- Upload the Python file to the server and run it with Spark

[root@master software]# spark-submit test1.py

/home/software/spark-2.3.4-bin-hadoop2.7/conf/spark-env.sh: line 2: /usr/local/hadoop/bin/hadoop: No such file or directory

2027-11-29 14:12:36 WARN NativeCodeLoader:62 - Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

2027-11-29 14:12:36 INFO SparkContext:54 - Running Spark version 2.3.4

2027-11-29 14:12:36 INFO SparkContext:54 - Submitted application: first demo

2027-11-29 14:12:36 INFO SecurityManager:54 - Changing view acls to: root

2027-11-29 14:12:36 INFO SecurityManager:54 - Changing modify acls to: root

2027-11-29 14:12:36 INFO SecurityManager:54 - Changing view acls groups to:

2027-11-29 14:12:36 INFO SecurityManager:54 - Changing modify acls groups to:

2027-11-29 14:12:36 INFO SecurityManager:54 - SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(root); groups with view permissions: Set(); users with modify permissions: Set(root); groups with modify permissions: Set()

2027-11-29 14:12:36 INFO Utils:54 - Successfully started service 'sparkDriver' on port 33641.

2027-11-29 14:12:36 INFO SparkEnv:54 - Registering MapOutputTracker

2027-11-29 14:12:36 INFO SparkEnv:54 - Registering BlockManagerMaster

2027-11-29 14:12:36 INFO BlockManagerMasterEndpoint:54 - Using org.apache.spark.storage.DefaultTopologyMapper for getting topology information

2027-11-29 14:12:36 INFO BlockManagerMasterEndpoint:54 - BlockManagerMasterEndpoint up

2027-11-29 14:12:36 INFO DiskBlockManager:54 - Created local directory at /tmp/blockmgr-7657f410-212b-4b1a-acc2-062d3ff54c8c

2027-11-29 14:12:36 INFO MemoryStore:54 - MemoryStore started with capacity 413.9 MB

2027-11-29 14:12:36 INFO SparkEnv:54 - Registering OutputCommitCoordinator

2027-11-29 14:12:36 INFO log:192 - Logging initialized @2082ms

2027-11-29 14:12:37 INFO Server:351 - jetty-9.3.z-SNAPSHOT, build timestamp: unknown, git hash: unknown

2027-11-29 14:12:37 INFO Server:419 - Started @2165ms

2027-11-29 14:12:37 INFO AbstractConnector:278 - Started ServerConnector@75afaf3{HTTP/1.1,[http/1.1]}{192.168.128.78:4040}

2027-11-29 14:12:37 INFO Utils:54 - Successfully started service 'SparkUI' on port 4040.

2027-11-29 14:12:37 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@11679a7f{/jobs,null,AVAILABLE,@Spark}

2027-11-29 14:12:37 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@37457949{/jobs/json,null,AVAILABLE,@Spark}

2027-11-29 14:12:37 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@e200e09{/jobs/job,null,AVAILABLE,@Spark}

2027-11-29 14:12:37 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@12e3ada1{/jobs/job/json,null,AVAILABLE,@Spark}

2027-11-29 14:12:37 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@e701185{/stages,null,AVAILABLE,@Spark}

2027-11-29 14:12:37 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@ae0604{/stages/json,null,AVAILABLE,@Spark}

2027-11-29 14:12:37 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@12b969be{/stages/stage,null,AVAILABLE,@Spark}

2027-11-29 14:12:37 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@63242cbd{/stages/stage/json,null,AVAILABLE,@Spark}

2027-11-29 14:12:37 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@3a094dfe{/stages/pool,null,AVAILABLE,@Spark}

2027-11-29 14:12:37 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@65e94d7a{/stages/pool/json,null,AVAILABLE,@Spark}

2027-11-29 14:12:37 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@22530a07{/storage,null,AVAILABLE,@Spark}

2027-11-29 14:12:37 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@60509b34{/storage/json,null,AVAILABLE,@Spark}

2027-11-29 14:12:37 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@10c65c7d{/storage/rdd,null,AVAILABLE,@Spark}

2027-11-29 14:12:37 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@3f6b4182{/storage/rdd/json,null,AVAILABLE,@Spark}

2027-11-29 14:12:37 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@552775a{/environment,null,AVAILABLE,@Spark}

2027-11-29 14:12:37 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@61bfc5ff{/environment/json,null,AVAILABLE,@Spark}

2027-11-29 14:12:37 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@43b85801{/executors,null,AVAILABLE,@Spark}

2027-11-29 14:12:37 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@26ba66a5{/executors/json,null,AVAILABLE,@Spark}

2027-11-29 14:12:37 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@30c42d8f{/executors/threadDump,null,AVAILABLE,@Spark}

2027-11-29 14:12:37 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@2fcb9655{/executors/threadDump/json,null,AVAILABLE,@Spark}

2027-11-29 14:12:37 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@2e32bbfd{/static,null,AVAILABLE,@Spark}

2027-11-29 14:12:37 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@748e1056{/,null,AVAILABLE,@Spark}

2027-11-29 14:12:37 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@4eeb70d2{/api,null,AVAILABLE,@Spark}

2027-11-29 14:12:37 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@541a12bb{/jobs/job/kill,null,AVAILABLE,@Spark}

2027-11-29 14:12:37 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@7ec063{/stages/stage/kill,null,AVAILABLE,@Spark}

2027-11-29 14:12:37 INFO SparkUI:54 - Bound SparkUI to 192.168.128.78, and started at http://localhost:4040

2027-11-29 14:12:37 INFO SparkContext:54 - Added file file:/home/software/test1.py at file:/home/software/test1.py with timestamp 1827468757549

2027-11-29 14:12:37 INFO Utils:54 - Copying /home/software/test1.py to /tmp/spark-7c52bbd6-d752-4c4d-ab95-42cdd8c140f3/userFiles-f692857b-82b9-434c-b5bc-b5d1cf9f8bfe/test1.py

2027-11-29 14:12:37 INFO Executor:54 - Starting executor ID driver on host localhost

2027-11-29 14:12:37 INFO Utils:54 - Successfully started service 'org.apache.spark.network.netty.NettyBlockTransferService' on port 43340.

2027-11-29 14:12:37 INFO NettyBlockTransferService:54 - Server created on localhost:43340

2027-11-29 14:12:37 INFO BlockManager:54 - Using org.apache.spark.storage.RandomBlockReplicationPolicy for block replication policy

2027-11-29 14:12:37 INFO BlockManagerMaster:54 - Registering BlockManager BlockManagerId(driver, localhost, 43340, None)

2027-11-29 14:12:37 INFO BlockManagerMasterEndpoint:54 - Registering block manager localhost:43340 with 413.9 MB RAM, BlockManagerId(driver, localhost, 43340, None)

2027-11-29 14:12:37 INFO BlockManagerMaster:54 - Registered BlockManager BlockManagerId(driver, localhost, 43340, None)

2027-11-29 14:12:37 INFO BlockManager:54 - Initialized BlockManager: BlockManagerId(driver, localhost, 43340, None)

2027-11-29 14:12:37 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@3117e40b{/metrics/json,null,AVAILABLE,@Spark}

2027-11-29 14:12:38 INFO SharedState:54 - Setting hive.metastore.warehouse.dir ('null') to the value of spark.sql.warehouse.dir ('file:/home/software/spark-warehouse/').

2027-11-29 14:12:38 INFO SharedState:54 - Warehouse path is 'file:/home/software/spark-warehouse/'.

2027-11-29 14:12:38 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@6117631{/SQL,null,AVAILABLE,@Spark}

2027-11-29 14:12:38 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@4aaadc64{/SQL/json,null,AVAILABLE,@Spark}

2027-11-29 14:12:38 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@2155efd1{/SQL/execution,null,AVAILABLE,@Spark}

2027-11-29 14:12:38 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@787abb40{/SQL/execution/json,null,AVAILABLE,@Spark}

2027-11-29 14:12:38 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@2d866e5f{/static/sql,null,AVAILABLE,@Spark}

2027-11-29 14:12:38 INFO StateStoreCoordinatorRef:54 - Registered StateStoreCoordinator endpoint

root

|-- id: integer (nullable = true)

|-- name: string (nullable = true)

|-- age: integer (nullable = true)

2027-11-29 14:12:41 INFO CodeGenerator:54 - Code generated in 397.276779 ms

2027-11-29 14:12:41 INFO CodeGenerator:54 - Code generated in 37.30301 ms

2027-11-29 14:12:42 INFO SparkContext:54 - Starting job: showString at NativeMethodAccessorImpl.java:0

2027-11-29 14:12:42 INFO DAGScheduler:54 - Got job 0 (showString at NativeMethodAccessorImpl.java:0) with 1 output partitions

2027-11-29 14:12:42 INFO DAGScheduler:54 - Final stage: ResultStage 0 (showString at NativeMethodAccessorImpl.java:0)

2027-11-29 14:12:42 INFO DAGScheduler:54 - Parents of final stage: List()

2027-11-29 14:12:42 INFO DAGScheduler:54 - Missing parents: List()

2027-11-29 14:12:42 INFO DAGScheduler:54 - Submitting ResultStage 0 (MapPartitionsRDD[3] at showString at NativeMethodAccessorImpl.java:0), which has no missing parents

2027-11-29 14:12:42 INFO MemoryStore:54 - Block broadcast_0 stored as values in memory (estimated size 8.8 KB, free 413.9 MB)

2027-11-29 14:12:42 INFO MemoryStore:54 - Block broadcast_0_piece0 stored as bytes in memory (estimated size 4.4 KB, free 413.9 MB)

2027-11-29 14:12:42 INFO BlockManagerInfo:54 - Added broadcast_0_piece0 in memory on localhost:43340 (size: 4.4 KB, free: 413.9 MB)

2027-11-29 14:12:42 INFO SparkContext:54 - Created broadcast 0 from broadcast at DAGScheduler.scala:1039

2027-11-29 14:12:42 INFO DAGScheduler:54 - Submitting 1 missing tasks from ResultStage 0 (MapPartitionsRDD[3] at showString at NativeMethodAccessorImpl.java:0) (first 15 tasks are for partitions Vector(0))

2027-11-29 14:12:42 INFO TaskSchedulerImpl:54 - Adding task set 0.0 with 1 tasks

2027-11-29 14:12:42 INFO TaskSetManager:54 - Starting task 0.0 in stage 0.0 (TID 0, localhost, executor driver, partition 0, PROCESS_LOCAL, 7677 bytes)

2027-11-29 14:12:42 INFO Executor:54 - Running task 0.0 in stage 0.0 (TID 0)

2027-11-29 14:12:42 INFO Executor:54 - Fetching file:/home/software/test1.py with timestamp 1827468757549

2027-11-29 14:12:42 INFO Utils:54 - /home/software/test1.py has been previously copied to /tmp/spark-7c52bbd6-d752-4c4d-ab95-42cdd8c140f3/userFiles-f692857b-82b9-434c-b5bc-b5d1cf9f8bfe/test1.py

2027-11-29 14:12:42 INFO JDBCRDD:54 - closed connection

2027-11-29 14:12:42 INFO Executor:54 - Finished task 0.0 in stage 0.0 (TID 0). 1234 bytes result sent to driver

2027-11-29 14:12:42 INFO TaskSetManager:54 - Finished task 0.0 in stage 0.0 (TID 0) in 255 ms on localhost (executor driver) (1/1)

2027-11-29 14:12:42 INFO DAGScheduler:54 - ResultStage 0 (showString at NativeMethodAccessorImpl.java:0) finished in 0.566 s

2027-11-29 14:12:42 INFO TaskSchedulerImpl:54 - Removed TaskSet 0.0, whose tasks have all completed, from pool

2027-11-29 14:12:42 INFO DAGScheduler:54 - Job 0 finished: showString at NativeMethodAccessorImpl.java:0, took 0.641017 s

+---+----+---+

| id|name|age|

+---+----+---+

| 1| 狗蛋| 25|

+---+----+---+

2027-11-29 14:12:42 INFO SparkContext:54 - Invoking stop() from shutdown hook

2027-11-29 14:12:42 INFO AbstractConnector:318 - Stopped Spark@75afaf3{HTTP/1.1,[http/1.1]}{192.168.128.78:4040}

2027-11-29 14:12:42 INFO SparkUI:54 - Stopped Spark web UI at http://localhost:4040

2027-11-29 14:12:42 INFO MapOutputTrackerMasterEndpoint:54 - MapOutputTrackerMasterEndpoint stopped!

2027-11-29 14:12:42 INFO MemoryStore:54 - MemoryStore cleared

2027-11-29 14:12:42 INFO BlockManager:54 - BlockManager stopped

2027-11-29 14:12:42 INFO BlockManagerMaster:54 - BlockManagerMaster stopped

2027-11-29 14:12:42 INFO OutputCommitCoordinator$OutputCommitCoordinatorEndpoint:54 - OutputCommitCoordinator stopped!

2027-11-29 14:12:42 INFO SparkContext:54 - Successfully stopped SparkContext

2027-11-29 14:12:42 INFO ShutdownHookManager:54 - Shutdown hook called

2027-11-29 14:12:42 INFO ShutdownHookManager:54 - Deleting directory /tmp/spark-7c52bbd6-d752-4c4d-ab95-42cdd8c140f3/pyspark-cf837efb-447f-41bf-8250-f6f6dbc7c6a0

2027-11-29 14:12:42 INFO ShutdownHookManager:54 - Deleting directory /tmp/spark-7c52bbd6-d752-4c4d-ab95-42cdd8c140f3

2027-11-29 14:12:42 INFO ShutdownHookManager:54 - Deleting directory /tmp/spark-05f73984-6870-498b-9084-1c2af8a400a5

- Error: Service 'sparkDriver' failed after 16 retries (starting from 0)!

# Go to the following directory

cd /home/software/spark-2.3.4-bin-hadoop2.7/conf

# List the files

[root@master conf]# ls

docker.properties.template log4j.properties.template slaves.template spark-env.sh.template

fairscheduler.xml.template metrics.properties.template spark-defaults.conf.template

# Copy the template

cp spark-env.sh.template spark-env.sh

# List the files again

[root@master conf]# ls

docker.properties.template log4j.properties.template slaves.template spark-env.sh

fairscheduler.xml.template metrics.properties.template spark-defaults.conf.template spark-env.sh.template

# Edit

[root@master conf]# vim spark-env.sh

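# Add the following 2 lines, using your own IP address
# (the first line expects Hadoop at /usr/local/hadoop; if it is not there, the shell prints the "No such file or directory" message seen in the output above)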

export SPARK_DIST_CLASSPATH=$(/usr/local/hadoop/bin/hadoop classpath)

export SPARK_LOCAL_IP=192.168.128.78
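Spark retries the driver port a limited number of times before giving up with this error. A frequent cause is that the address it tries to bind is not assigned to this machine. That address is often what the hostname resolves to, e.g. via `/etc/hosts`. `SPARK_LOCAL_IP` and `spark.driver.host` change this address. The sketch below compares candidate addresses with a bind test. The helper names are hypothetical. `8.8.8.8` is used only to let the kernel choose a route, since a UDP `connect` sends no packets.

```python
import socket


def guess_local_ip():
    """Guess the IP of the interface used for outbound traffic (127.0.0.1 if no route)."""
    s = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        s.connect(("8.8.8.8", 80))  # UDP connect only selects a route; nothing is sent
        return s.getsockname()[0]
    except OSError:
        return "127.0.0.1"
    finally:
        s.close()


def hostname_ip():
    """IP that this machine's hostname resolves to, or None if resolution fails."""
    try:
        return socket.gethostbyname(socket.gethostname())
    except OSError:
        return None


def can_bind(ip, port=0):
    """Return True if a TCP socket can bind to ip:port (port 0 means any free port)."""
    s = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    try:
        s.bind((ip, port))
        return True
    except OSError:
        return False
    finally:
        s.close()
```

If `can_bind(hostname_ip())` is `False` while `can_bind(guess_local_ip())` is `True`, the hostname mapping is the likely culprit.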

- Add one line to the SparkSession builder in the code

.config("spark.driver.host", "localhost")
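The same setting can also be passed at submit time instead of being hard-coded, using spark-submit's standard `--conf key=value` option. The sketch below only builds the argument list, and `spark_submit_cmd` / `as_shell` are hypothetical helpers. According to the Spark configuration docs, values set in code through `SparkSession.builder.config` take precedence over `--conf` flags, so pick one place to set it.

```python
import shlex


def spark_submit_cmd(script, conf=None, master="local[*]"):
    """Build a spark-submit argument list; each --conf carries one key=value pair."""
    cmd = ["spark-submit", "--master", master]
    for key, value in (conf or {}).items():
        cmd += ["--conf", "%s=%s" % (key, value)]
    cmd.append(script)
    return cmd


def as_shell(cmd):
    """Render the argument list as a copy-pasteable shell command."""
    return " ".join(shlex.quote(arg) for arg in cmd)
```

For example, `as_shell(spark_submit_cmd("test1.py", {"spark.driver.host": "localhost"}))` gives `spark-submit --master 'local[*]' --conf spark.driver.host=localhost test1.py`. The list form can also be passed directly to `subprocess.run`.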

- Error: UnicodeEncodeError: 'ascii' codec can't encode characters in position 52-53: ordinal not in range(128)

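# Add the following at the top of the script (Python 2 only; reload and sys.setdefaultencoding do not exist in Python 3)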

import sys

reload(sys)

sys.setdefaultencoding('utf-8')
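On Python 3, strings are already Unicode, so this error usually means stdout is using an ASCII encoding. The usual alternatives are setting the environment variable `PYTHONIOENCODING=utf-8` before starting Python, or wrapping stdout in a UTF-8 writer as sketched below. The helper names are hypothetical.

```python
import io
import sys


def utf8_text_stream(binary_stream):
    """Wrap a binary stream so that str written to it is encoded as UTF-8."""
    return io.TextIOWrapper(binary_stream, encoding="utf-8", line_buffering=True)


def force_utf8_stdout():
    """Replace sys.stdout with a UTF-8 writer over the same underlying buffer."""
    sys.stdout.flush()
    sys.stdout = utf8_text_stream(sys.stdout.buffer)


def encode_or_none(text, encoding):
    """Encode text, returning None instead of raising when a character is unsupported."""
    try:
        return text.encode(encoding)
    except UnicodeEncodeError:
        return None  # the same failure an ASCII-only stdout runs into


def write_utf8(text):
    """Write text through a UTF-8 wrapper into memory and return the raw bytes."""
    buf = io.BytesIO()
    out = utf8_text_stream(buf)
    out.write(text)
    out.flush()
    return buf.getvalue()
```

Calling `force_utf8_stdout()` once at the top of the script lets `df.show()` print Chinese names such as 狗蛋 even when the terminal locale is ASCII.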

- Import data into the database

from pyspark.sql import SparkSession
from pyspark.sql.types import StructType, StringType, IntegerType

if __name__ == '__main__':
    # 0. Build the SparkSession entry point
    spark = SparkSession.builder. \
        config("spark.driver.host", "localhost"). \
        appName("test"). \
        master("local[*]").getOrCreate()
    sc = spark.sparkContext

    # 1. Read the dataset (tab-separated, no header row)
    schema = StructType(). \
        add("user_id", StringType(), nullable=True). \
        add("movie_id", IntegerType(), nullable=True). \
        add("rank", IntegerType(), nullable=True). \
        add("ts", StringType(), nullable=True)
    df = spark.read.format("csv"). \
        option("sep", "\t"). \
        option("header", False). \
        option("encoding", "utf-8"). \
        schema(schema=schema). \
        load("./u.data")

    # Print the first 20 rows to the console
    df.show(20)
    df.createOrReplaceTempView("movie")
    df2 = spark.sql("select * from movie limit 100")

    # 2. Write the DataFrame to the MySQL database
    df2.write.mode("overwrite"). \
        format("jdbc"). \
        option("url", "jdbc:mysql://192.168.128.78:3306/test?useSSL=false&useUnicode=true"). \
        option("dbtable", "movie_data"). \
        option("user", "root"). \
        option("password", "123456"). \
        save()

    spark.stop()
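`mode("overwrite")` replaces the whole target table. By default, Spark's JDBC writer drops the table and recreates it, which also discards any indexes or column types defined by hand. The `truncate` write option instead keeps the existing definition and issues `TRUNCATE TABLE`. The sketch below mimics the default behaviour with the standard `sqlite3` module as a stand-in database, an assumption made so it runs without MySQL. `write_overwrite` is hypothetical, and the table and column names come from the script above.

```python
import sqlite3


def write_overwrite(conn, table, columns, rows):
    """Mimic the default JDBC overwrite: drop the table, recreate it, insert rows.

    columns is a list of (name, sql_type) pairs and rows an iterable of tuples.
    Names are interpolated into SQL, so only pass trusted identifiers.
    """
    col_defs = ", ".join('"%s" %s' % (name, sql_type) for name, sql_type in columns)
    placeholders = ", ".join("?" for _ in columns)
    with conn:  # single transaction: commit on success, roll back on error
        conn.execute('DROP TABLE IF EXISTS "%s"' % table)
        conn.execute('CREATE TABLE "%s" (%s)' % (table, col_defs))
        conn.executemany('INSERT INTO "%s" VALUES (%s)' % (table, placeholders), rows)


# Column layout taken from the StructType in the script above
MOVIE_COLUMNS = [("user_id", "TEXT"), ("movie_id", "INTEGER"), ("rank", "INTEGER"), ("ts", "TEXT")]
```

Writing twice to `movie_data` with this helper leaves only the second batch of rows, which matches what a repeated `mode("overwrite")` job does to the MySQL table.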

- The following error occurs: the data file cannot be found

ssh://root@192.168.128.78:22/home/software/anaconda3/bin/python3 -u /tmp/pycharm_project_115/day26/15_dataframe_jdbc.py

Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

27/11/29 15:06:07 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

/home/software/anaconda3/lib/python3.6/site-packages/pyspark/context.py:238: FutureWarning: Python 3.6 support is deprecated in Spark 3.2.

FutureWarning

Traceback (most recent call last):

File "/tmp/pycharm_project_115/day26/15_dataframe_jdbc.py", line 26, in <module>

load("./u.data")

File "/home/software/anaconda3/lib/python3.6/site-packages/pyspark/sql/readwriter.py", line 158, in load

return self._df(self._jreader.load(path))

File "/home/software/anaconda3/lib/python3.6/site-packages/py4j/java_gateway.py", line 1322, in __call__

answer, self.gateway_client, self.target_id, self.name)

File "/home/software/anaconda3/lib/python3.6/site-packages/pyspark/sql/utils.py", line 117, in deco

raise converted from None

pyspark.sql.utils.AnalysisException: Path does not exist: file:/tmp/pycharm_project_115/day26/u.data

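Solution: upload the data file to the server.

Check on the remote server whether the upload succeeded; if it did not, upload the file manually with an FTP tool.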

# Find the path on the server from the console error above

/tmp/pycharm_project_115/
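In this setup the file path is resolved on the server that runs the interpreter (here through PyCharm's remote deployment), not on the development machine. A relative path like `./u.data` therefore only works if the file was uploaded next to the script. The small sketch below resolves the path against the script's directory and fails with a clear message before Spark is involved. `resolve_data_file` is a hypothetical helper.

```python
import os


def resolve_data_file(name, base_dir=None):
    """Return an absolute path for a data file, failing early if it is missing.

    base_dir defaults to the directory of the running script, so the result does
    not depend on the working directory the IDE happens to start Python in.
    """
    if base_dir is None:
        base_dir = os.path.dirname(os.path.abspath(__file__))
    path = os.path.abspath(os.path.join(base_dir, name))
    if not os.path.isfile(path):
        raise FileNotFoundError("data file not found on this machine: %s" % path)
    return path
```

In the script, `load("./u.data")` could then become `load("file://" + resolve_data_file("u.data"))`.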

浙公网安备 33010602011771号
