2020-2021-1 20209311 "Linux Kernel Principles and Analysis" Week 4 Assignment


I. Experiment 3: Tracing and Analyzing the Linux Kernel Boot Process

1. Experiment Procedure

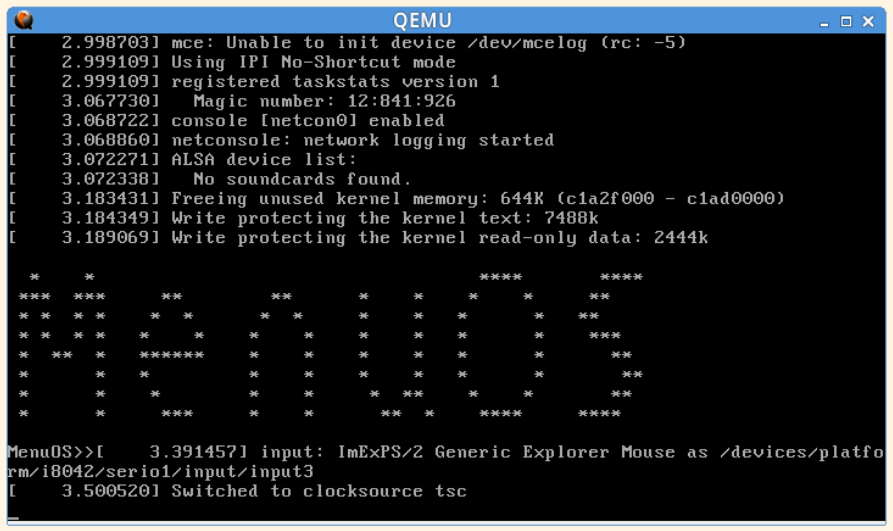

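Open a shell in the Shiyanlou virtual machine and boot the kernel as follows. After the kernel boots, it enters the menu program:

```
cd ~/LinuxKernel/
qemu -kernel linux-3.18.6/arch/x86/boot/bzImage -initrd rootfs.img
```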

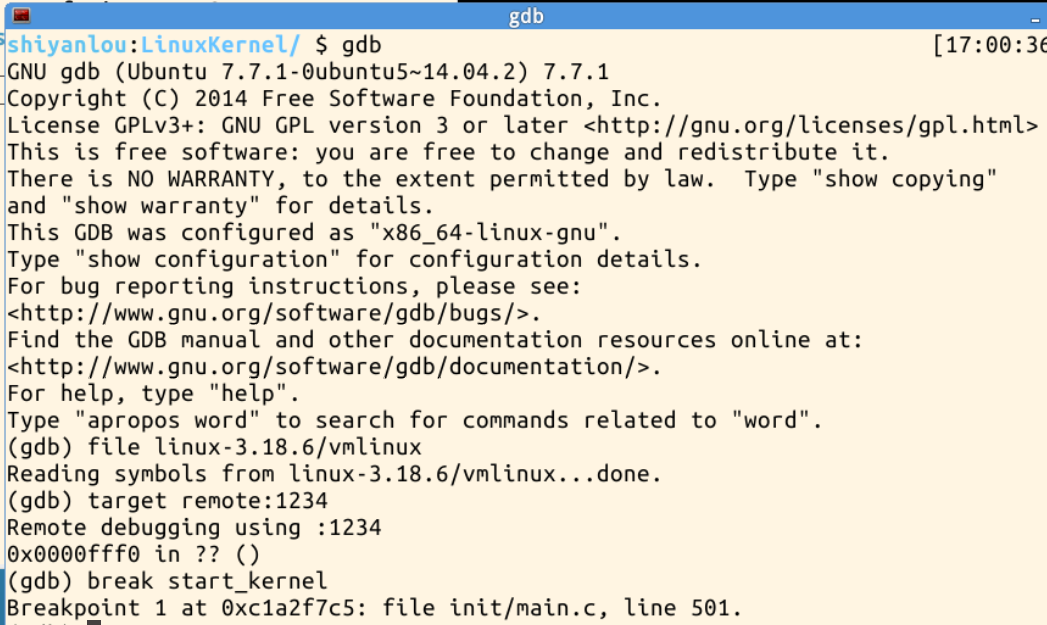

To trace and debug the kernel with gdb, add the two options -s and -S when launching it:

```
qemu -kernel linux-3.18.6/arch/x86/boot/bzImage -initrd rootfs.img -s -S
# Notes on the -s and -S options:
# 1. -S
#    -S  freeze CPU at startup (use 'c' to start execution)
# 2. -s
#    -s  shorthand for -gdb tcp::1234
# To use a port other than 1234, replace -s with -gdb tcp::xxxx
```

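As the notes show, the -S option freezes the CPU at startup so that it executes no further instructions until told to continue, and -s opens a gdb server on TCP port 1234 for the gdb session that follows.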

In another terminal, change to the same directory, start gdb, and enter the following commands:

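```
# Load the symbol table in gdb before running target remote
file linux-3.18.6/vmlinux
# Connect gdb to the gdb server that qemu opened on port 1234
target remote:1234
# The breakpoint can be set either before or after target remote
break start_kernel
# Press c to let the frozen Linux in qemu continue running
c
```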

The result is shown in the figure:

By setting breakpoints in this way, we can trace the kernel boot process step by step.

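2. Experiment Analysis

The most important function in the kernel boot process is start_kernel, defined in init/main.c, and it is fairly complex. Its code in linux-3.18.6 is as follows: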

```c
asmlinkage __visible void __init start_kernel(void)
{
	char *command_line;
	char *after_dashes;

	/*
	 * Need to run as early as possible, to initialize the
	 * lockdep hash:
	 */
	lockdep_init();
	set_task_stack_end_magic(&init_task);
	smp_setup_processor_id();
	debug_objects_early_init();

	/*
	 * Set up the the initial canary ASAP:
	 */
	boot_init_stack_canary();

	cgroup_init_early();

	local_irq_disable();
	early_boot_irqs_disabled = true;

	/*
	 * Interrupts are still disabled. Do necessary setups, then
	 * enable them
	 */
	boot_cpu_init();
	page_address_init();
	pr_notice("%s", linux_banner);
	setup_arch(&command_line);
	mm_init_cpumask(&init_mm);
	setup_command_line(command_line);
	setup_nr_cpu_ids();
	setup_per_cpu_areas();
	smp_prepare_boot_cpu();	/* arch-specific boot-cpu hooks */

	build_all_zonelists(NULL, NULL);
	page_alloc_init();

	pr_notice("Kernel command line: %s\n", boot_command_line);
	parse_early_param();
	after_dashes = parse_args("Booting kernel",
				  static_command_line, __start___param,
				  __stop___param - __start___param,
				  -1, -1, &unknown_bootoption);
	if (!IS_ERR_OR_NULL(after_dashes))
		parse_args("Setting init args", after_dashes, NULL, 0, -1, -1,
			   set_init_arg);

	jump_label_init();

	/*
	 * These use large bootmem allocations and must precede
	 * kmem_cache_init()
	 */
	setup_log_buf(0);
	pidhash_init();
	vfs_caches_init_early();
	sort_main_extable();
	trap_init();
	mm_init();

	/*
	 * Set up the scheduler prior starting any interrupts (such as the
	 * timer interrupt). Full topology setup happens at smp_init()
	 * time - but meanwhile we still have a functioning scheduler.
	 */
	sched_init();
	/*
	 * Disable preemption - early bootup scheduling is extremely
	 * fragile until we cpu_idle() for the first time.
	 */
	preempt_disable();
	if (WARN(!irqs_disabled(),
		 "Interrupts were enabled *very* early, fixing it\n"))
		local_irq_disable();
	idr_init_cache();
	rcu_init();
	context_tracking_init();
	radix_tree_init();
	/* init some links before init_ISA_irqs() */
	early_irq_init();
	init_IRQ();
	tick_init();
	rcu_init_nohz();
	init_timers();
	hrtimers_init();
	softirq_init();
	timekeeping_init();
	time_init();
	sched_clock_postinit();
	perf_event_init();
	profile_init();
	call_function_init();
	WARN(!irqs_disabled(), "Interrupts were enabled early\n");
	early_boot_irqs_disabled = false;
	local_irq_enable();

	kmem_cache_init_late();

	/*
	 * HACK ALERT! This is early. We're enabling the console before
	 * we've done PCI setups etc, and console_init() must be aware of
	 * this. But we do want output early, in case something goes wrong.
	 */
	console_init();
	if (panic_later)
		panic("Too many boot %s vars at `%s'", panic_later,
		      panic_param);

	lockdep_info();

	/*
	 * Need to run this when irqs are enabled, because it wants
	 * to self-test [hard/soft]-irqs on/off lock inversion bugs
	 * too:
	 */
	locking_selftest();

#ifdef CONFIG_BLK_DEV_INITRD
	if (initrd_start && !initrd_below_start_ok &&
	    page_to_pfn(virt_to_page((void *)initrd_start)) < min_low_pfn) {
		pr_crit("initrd overwritten (0x%08lx < 0x%08lx) - disabling it.\n",
			page_to_pfn(virt_to_page((void *)initrd_start)),
			min_low_pfn);
		initrd_start = 0;
	}
#endif
	page_cgroup_init();
	debug_objects_mem_init();
	kmemleak_init();
	setup_per_cpu_pageset();
	numa_policy_init();
	if (late_time_init)
		late_time_init();
	sched_clock_init();
	calibrate_delay();
	pidmap_init();
	anon_vma_init();
	acpi_early_init();
#ifdef CONFIG_X86
	if (efi_enabled(EFI_RUNTIME_SERVICES))
		efi_enter_virtual_mode();
#endif
#ifdef CONFIG_X86_ESPFIX64
	/* Should be run before the first non-init thread is created */
	init_espfix_bsp();
#endif
	thread_info_cache_init();
	cred_init();
	fork_init(totalram_pages);
	proc_caches_init();
	buffer_init();
	key_init();
	security_init();
	dbg_late_init();
	vfs_caches_init(totalram_pages);
	signals_init();
	/* rootfs populating might need page-writeback */
	page_writeback_init();
	proc_root_init();
	cgroup_init();
	cpuset_init();
	taskstats_init_early();
	delayacct_init();

	check_bugs();

	sfi_init_late();

	if (efi_enabled(EFI_RUNTIME_SERVICES)) {
		efi_late_init();
		efi_free_boot_services();
	}

	ftrace_init();

	/* Do the rest non-__init'ed, we're now alive */
	rest_init();
}
```

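As we can see, start_kernel calls a series of initialization functions to set up the kernel itself: for example, trap_init initializes the interrupt (trap) vectors, mm_init initializes memory management, and sched_init initializes the scheduler. Finally, it calls rest_init to perform the remaining initialization. The code of rest_init is shown below; note that this listing is taken from a newer kernel than linux-3.18.6, whose version of rest_init is similar but lacks the step that pins init to the boot CPU and the SYSTEM_SCHEDULING state: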

```c
noinline void __ref rest_init(void)
{
	struct task_struct *tsk;
	int pid;

	rcu_scheduler_starting();
	/*
	 * We need to spawn init first so that it obtains pid 1, however
	 * the init task will end up wanting to create kthreads, which, if
	 * we schedule it before we create kthreadd, will OOPS.
	 */
	pid = kernel_thread(kernel_init, NULL, CLONE_FS);
	/*
	 * Pin init on the boot CPU. Task migration is not properly working
	 * until sched_init_smp() has been run. It will set the allowed
	 * CPUs for init to the non isolated CPUs.
	 */
	rcu_read_lock();
	tsk = find_task_by_pid_ns(pid, &init_pid_ns);
	set_cpus_allowed_ptr(tsk, cpumask_of(smp_processor_id()));
	rcu_read_unlock();

	numa_default_policy();
	pid = kernel_thread(kthreadd, NULL, CLONE_FS | CLONE_FILES);
	rcu_read_lock();
	kthreadd_task = find_task_by_pid_ns(pid, &init_pid_ns);
	rcu_read_unlock();

	/*
	 * Enable might_sleep() and smp_processor_id() checks.
	 * They cannot be enabled earlier because with CONFIG_PREEMPT=y
	 * kernel_thread() would trigger might_sleep() splats. With
	 * CONFIG_PREEMPT_VOLUNTARY=y the init task might have scheduled
	 * already, but it's stuck on the kthreadd_done completion.
	 */
	system_state = SYSTEM_SCHEDULING;

	complete(&kthreadd_done);

	/*
	 * The boot idle thread must execute schedule()
	 * at least once to get things moving:
	 */
	schedule_preempt_disabled();
	/* Call into cpu_idle with preempt disabled */
	cpu_startup_entry(CPUHP_ONLINE);
}
```

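As we can see, rest_init creates two kernel threads, init (which runs kernel_init) and kthreadd, and these two threads become process 1 and process 2 respectively.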

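3. Takeaways

Process 0 is the first process of a booting Linux system. It runs in kernel mode and is not created by fork; the kernel defines it statically as init_task. Once system initialization is complete, it becomes the idle process.

In the last step of start_kernel (rest_init), the kernel creates two kernel threads, init and kthreadd. The init kernel thread eventually executes a user-space init program (for an initrd this is /init by default, otherwise typically /sbin/init) and becomes the root of all user-space processes, i.e. the user-space init process, known as process 1. The kthreadd kernel thread becomes the parent of all other kernel daemon threads and is known as process 2.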

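II. Linux Knowledge Notes

1. The "Three Magic Weapons" and the "Two Swords"

The "three magic weapons" of the computer are:

- the stored-program computer

- the function call stack mechanism

- interrupts

The "two swords" of the operating system are:

- interrupt context

- process context

The first sword is interrupt context switching, i.e. saving the CPU state (the "scene") when an interrupt arrives and restoring it afterwards; the second is process context switching. Both the three magic weapons and the two swords are closely tied to assembly language.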

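2. Directory Structure of the Linux Kernel Source

- arch: architecture-specific code, which allows the Linux kernel to support different CPUs and architectures.

- block: the block device management code of the Linux storage stack.

- crypto: C implementations of common cryptographic algorithms.

- Documentation: kernel documentation.

- drivers: the driver directory, containing the source code of the drivers for all hardware devices supported by the Linux kernel.

- firmware: firmware images used by some device drivers.

- fs: file systems; contains the implementations of the various file systems Linux supports.

- include: the header directory, holding shared header files.

- init: the initialization code run when the Linux kernel starts (for example init/main.c, which contains start_kernel).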