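OpenStack Learning Series, Part 9: Network Communication Between Instances Under Different Network Types

OpenStack's neutron component provides network allocation and communication between instances on different hosts. It relies on Linux network namespaces for network isolation; for background, see: https://www.cnblogs.com/djoker/p/15974846.html

During the OpenStack installation, two networks, provider and inside, were created on the physical NICs ens19 and ens20 respectively. Both are of the same type, flat: a Linux bridge connects the physical NIC to the instances' virtual NICs so that they can communicate.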

The flat network type

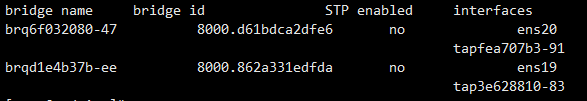

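When networking was configured during the OpenStack installation, two networks were added: provider (bound to NIC ens19) and inside (bound to NIC ens20). Both are flat networks, which bridge the instances' virtual NICs to a physical NIC. On the controller node node1 there are two bridges, brqd1e4b37b-ee and brq6f032080-47, attached to ens19 and ens20 respectively.

Listing the OpenStack networks shows that brqd1e4b37b-ee corresponds to network ID d1e4b37b-eeb7-4934-aab7-dec537139a3a, and brq6f032080-47 to network ID 6f032080-473d-4aa8-8cc3-074f23ecc4dd: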

[root@node1 ~]# openstack network list

+--------------------------------------+----------+--------------------------------------+

| ID | Name | Subnets |

+--------------------------------------+----------+--------------------------------------+

| 6f032080-473d-4aa8-8cc3-074f23ecc4dd | inside | 11eec460-caf2-45d1-ad79-432106b07f59 |

| d1e4b37b-eeb7-4934-aab7-dec537139a3a | provider | d439dfdb-8e80-4d30-a415-f7015c2108bc |

+--------------------------------------+----------+--------------------------------------+

The mapping is as follows:

| OpenStack network ID | Network name | Host bridge | Bridged physical NIC |
| --- | --- | --- | --- |
| d1e4b37b-eeb7-4934-aab7-dec537139a3a | provider | brqd1e4b37b-ee | ens19 |
| 6f032080-473d-4aa8-8cc3-074f23ecc4dd | inside | brq6f032080-47 | ens20 |
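The bridge names above follow a visible pattern: the prefix brq plus the first 11 characters of the network ID. The DHCP namespaces inspected in the following steps likewise use qdhcp- plus the full network ID. The sketch below only reproduces that observed pattern; the function names are hypothetical helpers for illustration, not neutron APIs.

```python
# Naming pattern inferred from the names that appear in this article.
# The helper names are hypothetical; they are not part of neutron.
BRIDGE_PREFIX = "brq"
DHCP_NAMESPACE_PREFIX = "qdhcp-"
ID_PREFIX_LEN = 11  # number of network-ID characters kept in the bridge name


def bridge_name(network_id: str) -> str:
    """Return the Linux bridge name that corresponds to a network ID."""
    return BRIDGE_PREFIX + network_id[:ID_PREFIX_LEN]


def dhcp_namespace(network_id: str) -> str:
    """Return the DHCP namespace name that corresponds to a network ID."""
    return DHCP_NAMESPACE_PREFIX + network_id


if __name__ == "__main__":
    for net_id in (
        "d1e4b37b-eeb7-4934-aab7-dec537139a3a",  # provider
        "6f032080-473d-4aa8-8cc3-074f23ecc4dd",  # inside
    ):
        print(bridge_name(net_id), dhcp_namespace(net_id))
```

This makes it easy to go from an ID in `openstack network list` to the bridge and namespace to look for on a host.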

Taking the provider network as an example, look at its network namespace. From the OpenStack network ID, the namespace is qdhcp-d1e4b37b-eeb7-4934-aab7-dec537139a3a:

[root@node1 ~]# ip netns ls

qdhcp-d1e4b37b-eeb7-4934-aab7-dec537139a3a (id: 1)

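qdhcp-6f032080-473d-4aa8-8cc3-074f23ecc4dd (id: 0)

Inspecting the interfaces inside the provider network's namespace shows a virtual NIC, ns-3e628810-83, configured with IP address 172.16.1.10. This address is used by the DHCP service that hands out IP addresses on the network: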

[root@node1 ~]# ip netns exec qdhcp-d1e4b37b-eeb7-4934-aab7-dec537139a3a ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ns-3e628810-83@if7: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether fa:16:3e:78:a1:95 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 172.16.1.10/24 brd 172.16.1.255 scope global ns-3e628810-83

valid_lft forever preferred_lft forever

inet 169.254.169.254/32 brd 169.254.169.254 scope global ns-3e628810-83

valid_lft forever preferred_lft forever

inet6 fe80::a9fe:a9fe/64 scope link

valid_lft forever preferred_lft forever

inet6 fe80::f816:3eff:fe78:a195/64 scope link

valid_lft forever preferred_lft forever

The host-side peer of the namespace NIC ns-3e628810-83 is tap3e628810-83, which sits on the same bridge as ens19:

brqd1e4b37b-ee 8000.862a331edfda no ens19

tap3e628810-83

provider and inside are bound to ens19 and ens20 because the neutron configuration file /etc/neutron/plugins/ml2/linuxbridge_agent.ini specifies it:

[linux_bridge]

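physical_interface_mappings = provider:ens19,inside:ens20

Next, create two instances on different compute nodes, both on the provider network, then test connectivity and inspect the bridges once they are running.

Listing the instances shows that test-1 runs on node4 and test-2 runs on node3: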

[root@node1 ~]# openstack server list --long

+--------------------------------------+--------+--------+------------+-------------+-----------------------+--------------------------+--------------------------+-------------+-----------+-------------------+-------+------------+

| ID | Name | Status | Task State | Power State | Networks | Image Name | Image ID | Flavor Name | Flavor ID | Availability Zone | Host | Properties |

+--------------------------------------+--------+--------+------------+-------------+-----------------------+--------------------------+--------------------------+-------------+-----------+-------------------+-------+------------+

| 59b013a2-9880-4396-a022-81db284d6f2c | test-1 | ACTIVE | None | Running | provider=172.16.1.136 | N/A (booted from volume) | N/A (booted from volume) | m1.nano | 0 | nova | node4 | |

| 8259be88-a207-4c96-bb91-9574c8264034 | test-2 | ACTIVE | None | Running | provider=172.16.1.43 | N/A (booted from volume) | N/A (booted from volume) | m1.nano | 0 | nova | node3 | |

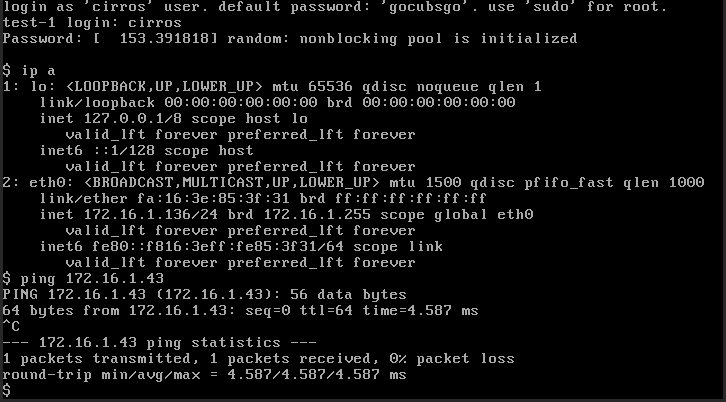

+--------------------------------------+--------+--------+------------+-------------+-----------------------+--------------------------+--------------------------+-------------+-----------+-------------------+-------+------------+ 登录test-1实例的控制台查看IP地址并测试和实例test-2的网络是可以通行的

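Now look at the network state on node4. The bridge brqd1e4b37b-ee has the same name as the bridge on the controller node, and it connects ens19 and tapb47c3f02-3b. tapb47c3f02-3b is the host side of the instance's virtual NIC: one end is on the host, bridged with ens19, and the other end is inside the instance. node3 looks the same as node4.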

bridge name bridge id STP enabled interfaces

brqd1e4b37b-ee 8000.0e9decd1b677 no ens19

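tapb47c3f02-3b

The vlan network type

The flat network type requires each network to be bound to its own physical NIC, and two flat networks cannot share the same physical NIC. As the number of instance networks grows, this no longer scales, and that is where the vlan network type comes in. Multiple vlan networks can be added on a single physical NIC, provided that the switch port the NIC connects to is configured as a trunk port and allows the VLAN IDs in use.

The vlan network type is configured in the neutron file /etc/neutron/plugins/ml2/ml2_conf.ini, together with the Linux bridge agent configuration in /etc/neutron/plugins/ml2/linuxbridge_agent.ini. The vlan type uses the physical network provider with VLAN IDs 1001 to 2000, and provider is bound to the physical NIC ens19. The switch port connected to ens19 therefore has to be changed to a trunk port that allows VLANs 1001 through 2000.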

# /etc/neutron/plugins/ml2/ml2_conf.ini

[ml2]

type_drivers = local,flat,vlan,gre,vxlan,geneve

[ml2_type_vlan]

network_vlan_ranges = provider:1001:2000

# /etc/neutron/plugins/ml2/linuxbridge_agent.ini

[linux_bridge]

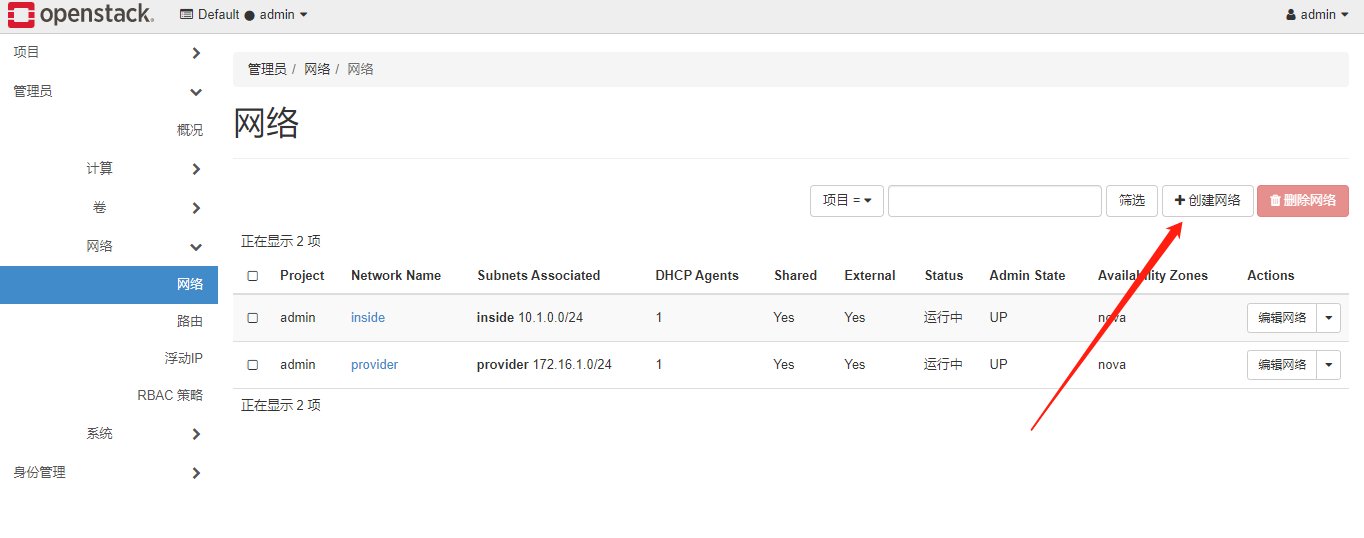

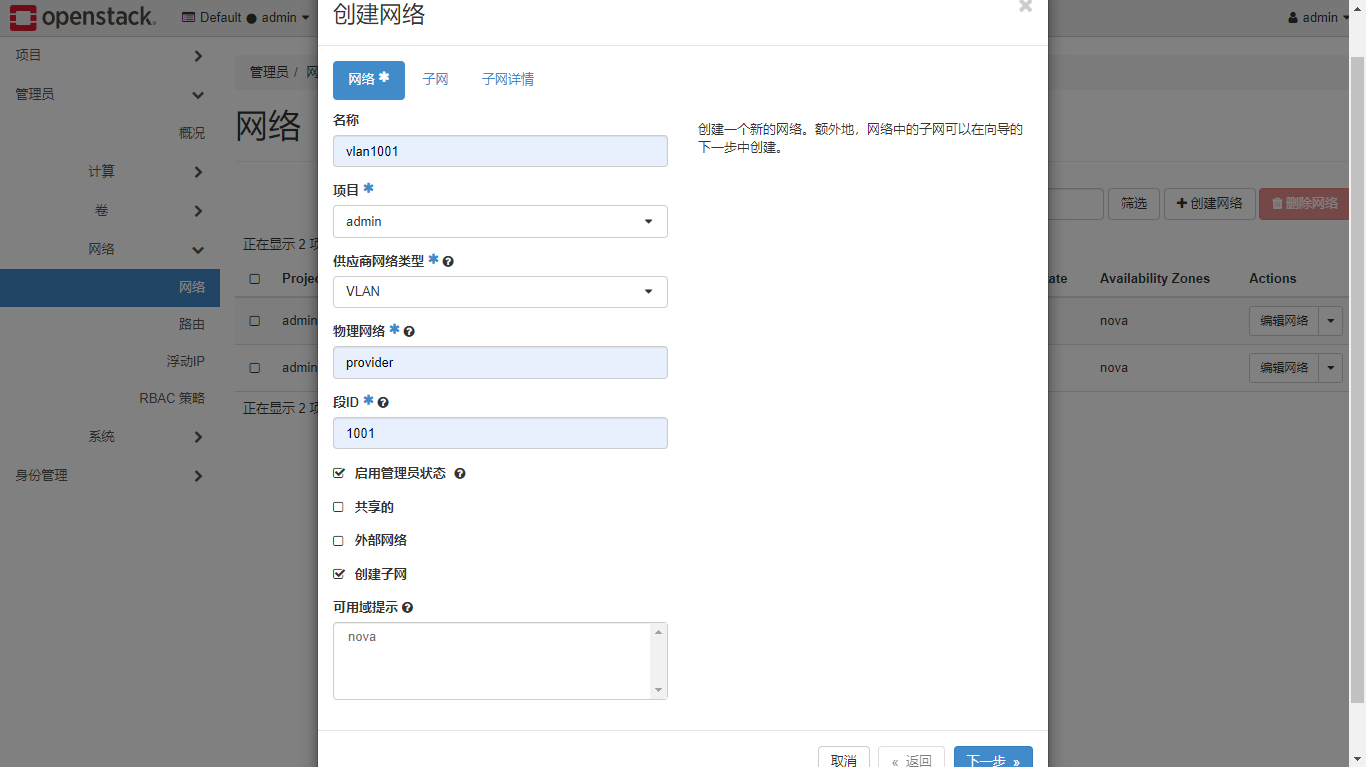

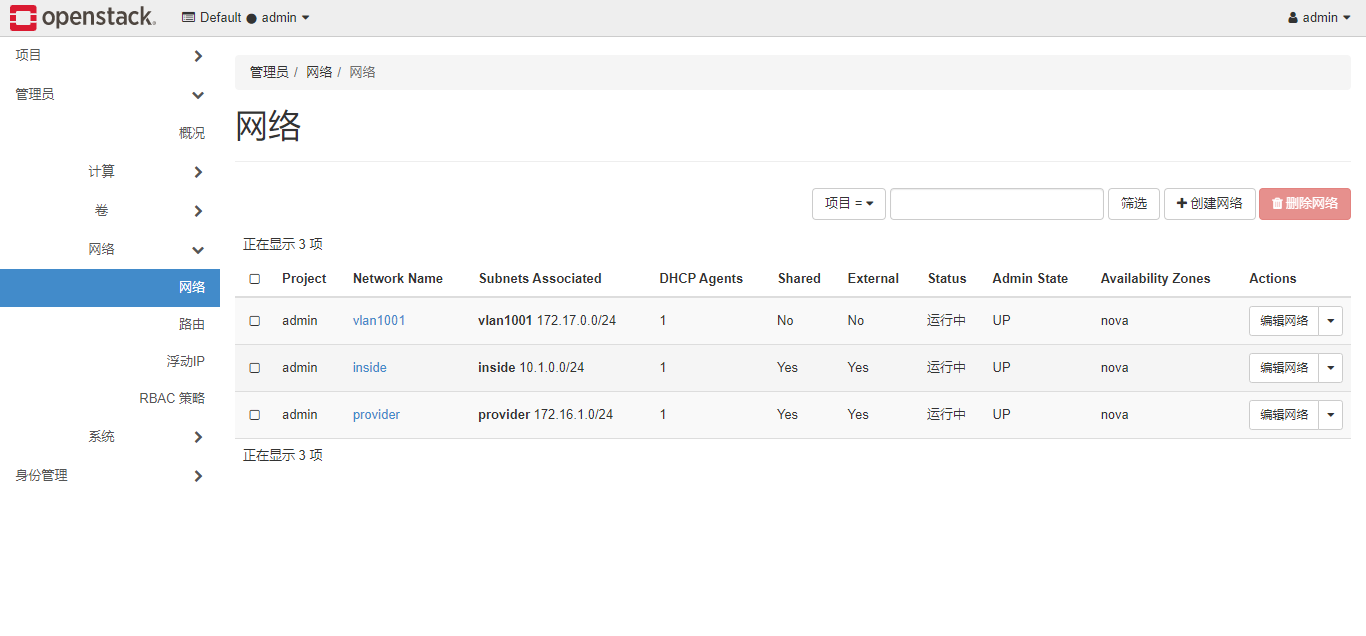

physical_interface_mappings = provider:ens19,inside:ens20 在Dashboard添加vlan网络中添加vlan网络。需要在管理员菜单下的网络页面中完成添加网络的操作。

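When creating the network, enter the name, project, and network type. Choose vlan here; the physical network is provider, which resolves to NIC ens19 automatically, and the VLAN ID is 1001, which has to fall within the range 1001 to 2000.

Enter the network address 172.17.0.0/24 and the gateway 172.17.0.1.

Enter the allocation pool: 172.17.0.10,172.17.0.100

The vlan1001 network is now created.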
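The two configuration values involved here, physical_interface_mappings and network_vlan_ranges, can be read together to predict which host interface a vlan network ends up on. The following is a minimal sketch, assuming both options use exactly the forms shown in this article (`physnet:nic` and `physnet:min:max`) and following the article's statement that the VLAN ID should fall inside the configured range. The function names are hypothetical and this is not how neutron itself parses its options.

```python
from typing import Dict, Tuple


def parse_interface_mappings(value: str) -> Dict[str, str]:
    """Parse 'provider:ens19,inside:ens20' into {physical network: NIC}."""
    mappings = {}
    for item in value.split(","):
        physnet, nic = item.strip().split(":")
        mappings[physnet] = nic
    return mappings


def parse_vlan_ranges(value: str) -> Dict[str, Tuple[int, int]]:
    """Parse 'provider:1001:2000' into {physical network: (min, max)}."""
    ranges = {}
    for item in value.split(","):
        physnet, low, high = item.strip().split(":")
        ranges[physnet] = (int(low), int(high))
    return ranges


def vlan_subinterface(physnet: str, vlan_id: int,
                      mappings: Dict[str, str],
                      ranges: Dict[str, Tuple[int, int]]) -> str:
    """Return the '<nic>.<vlan id>' sub-interface name for a vlan network."""
    low, high = ranges[physnet]
    if not low <= vlan_id <= high:
        raise ValueError(f"VLAN ID {vlan_id} is outside {low}-{high}")
    return f"{mappings[physnet]}.{vlan_id}"


if __name__ == "__main__":
    mappings = parse_interface_mappings("provider:ens19,inside:ens20")
    ranges = parse_vlan_ranges("provider:1001:2000")
    print(vlan_subinterface("provider", 1001, mappings, ranges))
```

For the vlan1001 network this yields ens19.1001, which is the sub-interface that shows up on the bridge later in this section.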

On the controller node node1, inspect the network. First list the OpenStack networks; the newly added vlan1001 network is there, with ID 54886452-55a2-437f-aa99-238e55403fc7:

[root@node1 ~]# . admin-openrc

[root@node1 ~]# openstack network list

+--------------------------------------+----------+--------------------------------------+

| ID | Name | Subnets |

+--------------------------------------+----------+--------------------------------------+

| 54886452-55a2-437f-aa99-238e55403fc7 | vlan1001 | ec33a0a5-4019-4f31-a5d8-2ca86d2c9fa2 |

| 6f032080-473d-4aa8-8cc3-074f23ecc4dd | inside | 11eec460-caf2-45d1-ad79-432106b07f59 |

| d1e4b37b-eeb7-4934-aab7-dec537139a3a | provider | d439dfdb-8e80-4d30-a415-f7015c2108bc |

+--------------------------------------+----------+--------------------------------------+

Listing the network namespaces on the controller node shows a new one, qdhcp-54886452-55a2-437f-aa99-238e55403fc7. Its name matches the OpenStack network ID, and the IP addresses inside the namespace confirm this:

[root@node1 ~]# ip netns ls

qdhcp-54886452-55a2-437f-aa99-238e55403fc7 (id: 2)

qdhcp-d1e4b37b-eeb7-4934-aab7-dec537139a3a (id: 1)

qdhcp-6f032080-473d-4aa8-8cc3-074f23ecc4dd (id: 0)

[root@node1 ~]# ip netns exec qdhcp-54886452-55a2-437f-aa99-238e55403fc7 ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ns-13daa25c-58@if10: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether fa:16:3e:5d:ed:b9 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 172.17.0.10/24 brd 172.17.0.255 scope global ns-13daa25c-58

valid_lft forever preferred_lft forever

inet 169.254.169.254/32 brd 169.254.169.254 scope global ns-13daa25c-58

valid_lft forever preferred_lft forever

inet6 fe80::a9fe:a9fe/64 scope link

valid_lft forever preferred_lft forever

inet6 fe80::f816:3eff:fe5d:edb9/64 scope link

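valid_lft forever preferred_lft forever

In the vlan1001 namespace, the virtual NIC ns-13daa25c-58 and the host NIC tap13daa25c-58 form a veth pair. tap13daa25c-58 is bridged with ens19.1001, the host's VLAN sub-interface, so traffic can reach instances on other hosts through ens19.1001: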

[root@node1 ~]# brctl show

bridge name bridge id STP enabled interfaces

brq54886452-55 8000.862a331edfda no ens19.1001

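tap13daa25c-58

Next, create two instances on different compute nodes, both on the vlan1001 network, then test connectivity and inspect the bridges once they are running.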

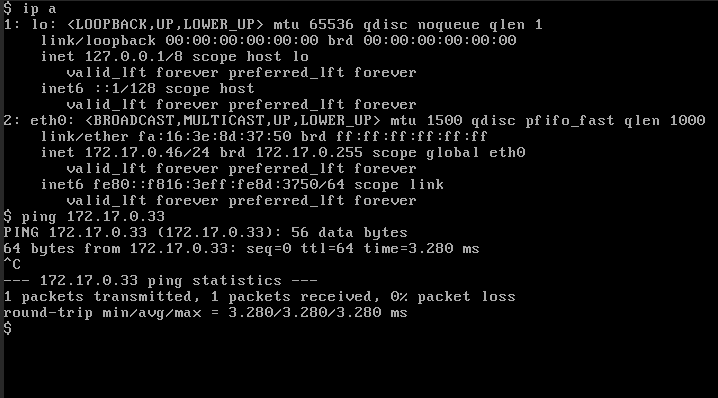

Listing the instances shows that vlan-test-1 runs on node4 and vlan-test-2 runs on node3:

[root@node1 ~]# openstack server list --long

+--------------------------------------+-------------+--------+------------+-------------+----------------------+--------------------------+--------------------------+-------------+-----------+-------------------+-------+------------+

| ID | Name | Status | Task State | Power State | Networks | Image Name | Image ID | Flavor Name | Flavor ID | Availability Zone | Host | Properties |

+--------------------------------------+-------------+--------+------------+-------------+----------------------+--------------------------+--------------------------+-------------+-----------+-------------------+-------+------------+

| 1063b59c-5680-4a38-ab25-10f364fe2bb6 | vlan-test-1 | ACTIVE | None | Running | vlan1001=172.17.0.46 | N/A (booted from volume) | N/A (booted from volume) | m1.nano | 0 | nova | node4 | |

| 946e1f20-c31f-4a38-bf42-fd3eb82af472 | vlan-test-2 | ACTIVE | None | Running | vlan1001=172.17.0.33 | N/A (booted from volume) | N/A (booted from volume) | m1.nano | 0 | nova | node3 | |

+--------------------------------------+-------------+--------+------------+-------------+----------------------+--------------------------+--------------------------+-------------+-----------+-------------------+-------+------------+

Log in to the console of instance vlan-test-1, check its IP address, and test connectivity to vlan-test-2; the two instances can reach each other.

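Now look at the network state on node4. The bridge brq54886452-55 has the same name as the bridge on the controller node, and it connects ens19.1001 and tap5c14401a-3a. tap5c14401a-3a is the host side of the instance's virtual NIC: one end is on the host, bridged with ens19.1001, and the other end is inside the instance. node3 looks the same as node4.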

brq54886452-55 8000.0e9decd1b677 no ens19.1001

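tap5c14401a-3a

For instances on the vlan1001 network to reach external networks, the switch port connected to ens19 has to be a trunk port, and VLAN 1001 needs a gateway that forwards the traffic.

The vxlan network type

The vxlan network type is an overlay network: instances talk to each other through VXLAN, which works like a tunnel between the hosts that carries the instances' traffic over the underlying IP network. It is not constrained by the VLAN ID range of the vlan type, nor does it need a dedicated physical NIC per network as the flat type does. Because the overlay is not directly attached to the physical network, reaching vxlan instances from outside requires additional routing.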
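Two numbers make the trade-off concrete: the size of the ID space and the bytes that tunneling costs per packet. The sketch below assumes an IPv4 underlay with a 1500-byte MTU and no IP options; under those assumptions the result matches the mtu 1450 that appears on the vxlan interfaces later in this article. The constant and function names are chosen for illustration only.

```python
# Header sizes in bytes for VXLAN carried over IPv4 (no IP options assumed).
OUTER_IPV4_HEADER = 20
OUTER_UDP_HEADER = 8
VXLAN_HEADER = 8
INNER_ETHERNET_HEADER = 14

VLAN_ID_BITS = 12     # width of the 802.1Q VLAN ID field
VXLAN_VNI_BITS = 24   # width of the VXLAN network identifier field


def vxlan_overhead() -> int:
    """Bytes added in front of the instance's IP packet by the tunnel."""
    return (OUTER_IPV4_HEADER + OUTER_UDP_HEADER
            + VXLAN_HEADER + INNER_ETHERNET_HEADER)


def instance_mtu(underlay_mtu: int) -> int:
    """Largest IP packet an instance can send without fragmentation."""
    return underlay_mtu - vxlan_overhead()


if __name__ == "__main__":
    print("overhead:", vxlan_overhead())
    print("instance MTU on a 1500-byte underlay:", instance_mtu(1500))
    print("VLAN ID values:", 2 ** VLAN_ID_BITS)
    print("VXLAN VNI values:", 2 ** VXLAN_VNI_BITS)
```

The much larger identifier space is what removes the VLAN ID limit, at the price of 50 bytes of headers per packet.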

The neutron configuration already contains the vxlan settings:

# /etc/neutron/plugins/ml2/ml2_conf.ini

[ml2]

type_drivers = local,flat,vlan,gre,vxlan,geneve

[ml2_type_vxlan]

# Range of VXLAN IDs (VNIs)

vni_ranges = 1:1000

# /etc/neutron/plugins/ml2/linuxbridge_agent.ini

[vxlan]

enable_vxlan = true

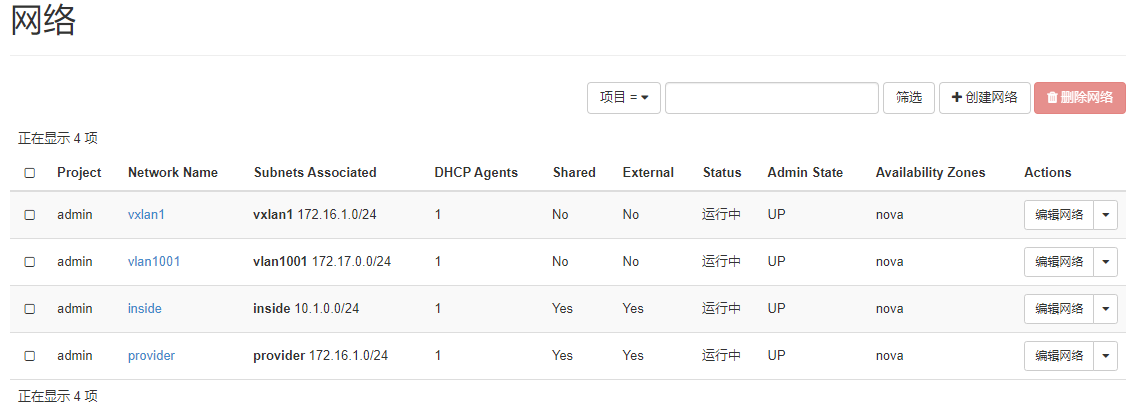

local_ip = 192.168.31.101 # 宿主机之间转发实例数据的使用的ip地址,vxlan会自动工作在该ip所在的网卡,即ens18 Dashboard添加vxlan网络,创建网络时的名称为vxlan1,网络类型为vxlan,段ID为1

网络地址范围为172.16.1.10/24,网关设置为172.16.1.1

输入地址池172.16.1.10,172,16.1.100

vxlan1网络创建完成

在控制节点node1上查看网络相关信息,首先获取OpenStack的网络信息,可以看到刚刚添加的vlan1001网络,ID为54886452-55a2-437f-aa99-238e55403fc7

[root@node1 ~]# . admin-openrc

[root@node1 ~]# openstack network list

+--------------------------------------+----------+--------------------------------------+

| ID | Name | Subnets |

+--------------------------------------+----------+--------------------------------------+

| 5415275c-9c56-4f09-aab3-3eabde4f4eb0 | vxlan1 | b7cf01d1-1331-4d1b-a86a-00e58a0f3c3a |

| 54886452-55a2-437f-aa99-238e55403fc7 | vlan1001 | ec33a0a5-4019-4f31-a5d8-2ca86d2c9fa2 |

| 6f032080-473d-4aa8-8cc3-074f23ecc4dd | inside | 11eec460-caf2-45d1-ad79-432106b07f59 |

| d1e4b37b-eeb7-4934-aab7-dec537139a3a | provider | d439dfdb-8e80-4d30-a415-f7015c2108bc |

+--------------------------------------+----------+--------------------------------------+

Listing the network namespaces on the controller node shows a new one, qdhcp-5415275c-9c56-4f09-aab3-3eabde4f4eb0. Its name matches the OpenStack network ID, and the IP addresses inside the namespace confirm this:

[root@node1 ~]# ip netns ls

qdhcp-5415275c-9c56-4f09-aab3-3eabde4f4eb0 (id: 3)

qdhcp-54886452-55a2-437f-aa99-238e55403fc7 (id: 2)

qdhcp-d1e4b37b-eeb7-4934-aab7-dec537139a3a (id: 1)

qdhcp-6f032080-473d-4aa8-8cc3-074f23ecc4dd (id: 0)

[root@node1 ~]# ip netns exec qdhcp-5415275c-9c56-4f09-aab3-3eabde4f4eb0 ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ns-74b9fa42-6a@if13: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP group default qlen 1000

link/ether fa:16:3e:31:14:a6 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 172.16.1.10/24 brd 172.16.1.255 scope global ns-74b9fa42-6a

valid_lft forever preferred_lft forever

inet 169.254.169.254/32 brd 169.254.169.254 scope global ns-74b9fa42-6a

valid_lft forever preferred_lft forever

inet6 fe80::a9fe:a9fe/64 scope link

valid_lft forever preferred_lft forever

inet6 fe80::f816:3eff:fe31:14a6/64 scope link

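valid_lft forever preferred_lft forever

In the vxlan1 namespace, the virtual NIC ns-74b9fa42-6a and the host NIC tap74b9fa42-6a form a veth pair. tap74b9fa42-6a is bridged with vxlan-1, and vxlan-1 sends its traffic through the host NIC ens18, so instances on other hosts are reachable via ens18. Between hosts, vxlan traffic travels over UDP: the sender encapsulates each frame in a UDP packet, and the receiver decapsulates it and then forwards it to the target instance.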

[root@node1 ~]# brctl show

bridge name bridge id STP enabled interfaces

brq5415275c-9c 8000.7e2576bd20b3 no tap74b9fa42-6a

vxlan-1

# The interface is of type vxlan with ID 1 and runs on NIC ens18, consistent with the neutron configuration; the UDP port is 8472

[root@node1 ~]# ip -d link show vxlan-1

14: vxlan-1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master brq5415275c-9c state UNKNOWN mode DEFAULT group default qlen 1000

link/ether 82:a3:88:c3:c2:e3 brd ff:ff:ff:ff:ff:ff promiscuity 1 minmtu 68 maxmtu 65535

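vxlan id 1 group 224.0.0.1 dev ens18 srcport 0 0 dstport 8472 ttl auto ageing 300 udpcsum noudp6zerocsumtx noudp6zerocsumrx

Next, create two instances on different compute nodes, both on the vxlan1 network, then test connectivity and inspect the bridges once they are running.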

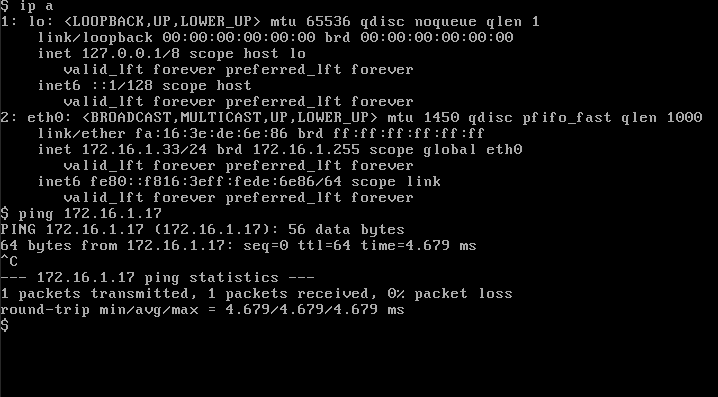

Listing the instances shows that vxlan1-1 runs on node4 and vxlan1-2 runs on node3:

[root@node1 ~]# openstack server list --long

+--------------------------------------+----------+--------+------------+-------------+--------------------+--------------------------+--------------------------+-------------+-----------+-------------------+-------+------------+

| ID | Name | Status | Task State | Power State | Networks | Image Name | Image ID | Flavor Name | Flavor ID | Availability Zone | Host | Properties |

+--------------------------------------+----------+--------+------------+-------------+--------------------+--------------------------+--------------------------+-------------+-----------+-------------------+-------+------------+

| dc828568-f60c-4259-898c-8c43d5c1fe64 | vxlan1-2 | ACTIVE | None | Running | vxlan1=172.16.1.17 | N/A (booted from volume) | N/A (booted from volume) | m1.nano | 0 | nova | node3 | |

| fdb2c8a0-4ed4-4842-ac5b-40aa9b6afb48 | vxlan1-1 | ACTIVE | None | Running | vxlan1=172.16.1.33 | N/A (booted from volume) | N/A (booted from volume) | m1.nano | 0 | nova | node4 | |

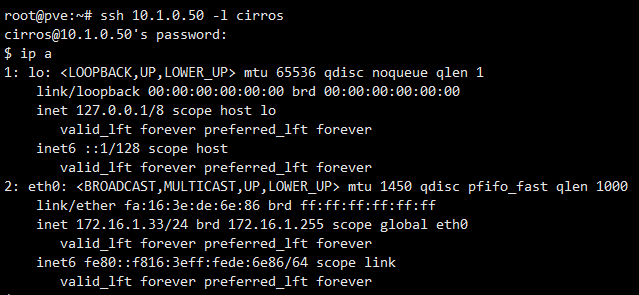

+--------------------------------------+----------+--------+------------+-------------+--------------------+--------------------------+--------------------------+-------------+-----------+-------------------+-------+------------+ 登录vxlan1-1实例的控制台查看IP地址并测试和实例vxlan1-2的网络是可以通信的

此时查看node4上的网络信息,桥接口brq5415275c-9c和控制节点上的桥接网卡名称一样,且vxlan-1和tap145db8f2-ce为桥接网卡。其中tap145db8f2-ce为虚拟网卡对,一端在宿主机上和vxlan-1作为桥接,另一端在实例中。而node3上的网络情况和node4一样。同时vxlan因为neutron的配置中指定了管理网的IP地址,所以工作在ens18网卡上。

bridge name bridge id STP enabled interfaces

brq5415275c-9c 8000.06bc46f08ed9 no tap145db8f2-ce

vxlan-1

[root@node4 ~]# ip -d link show vxlan-1

16: vxlan-1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master brq5415275c-9c state UNKNOWN mode DEFAULT group default qlen 1000

link/ether 06:bc:46:f0:8e:d9 brd ff:ff:ff:ff:ff:ff promiscuity 1 minmtu 68 maxmtu 65535

vxlan id 1 local 192.168.31.104 dev ens18 srcport 0 0 dstport 8472 ttl auto ageing 300 udpcsum noudp6zerocsumtx noudp6zerocsumrx

When vxlan1-1 pings the IP address of vxlan1-2, capturing UDP port 8472 on ens18 of node4, the host running vxlan1-1, shows the following:

[root@node4 ~]# tcpdump -n -i ens18 udp port 8472

dropped privs to tcpdump

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on ens18, link-type EN10MB (Ethernet), capture size 262144 bytes

14:16:56.322196 IP 192.168.31.104.47460 > 192.168.31.103.otv: OTV, flags [I] (0x08), overlay 0, instance 1

IP 172.16.1.33 > 172.16.1.17: ICMP echo request, id 49153, seq 0, length 64

14:16:56.322666 IP 192.168.31.103.59652 > 192.168.31.104.otv: OTV, flags [I] (0x08), overlay 0, instance 1

IP 172.16.1.17 > 172.16.1.33: ICMP echo reply, id 49153, seq 0, length 64

14:16:57.322516 IP 192.168.31.104.47460 > 192.168.31.103.otv: OTV, flags [I] (0x08), overlay 0, instance 1

IP 172.16.1.33 > 172.16.1.17: ICMP echo request, id 49153, seq 1, length 64

14:16:57.323099 IP 192.168.31.103.59652 > 192.168.31.104.otv: OTV, flags [I] (0x08), overlay 0, instance 1

IP 172.16.1.17 > 172.16.1.33: ICMP echo reply, id 49153, seq 1, length 64

14:16:58.322837 IP 192.168.31.104.47460 > 192.168.31.103.otv: OTV, flags [I] (0x08), overlay 0, instance 1

IP 172.16.1.33 > 172.16.1.17: ICMP echo request, id 49153, seq 2, length 64

14:16:58.323258 IP 192.168.31.103.59652 > 192.168.31.104.otv: OTV, flags [I] (0x08), overlay 0, instance 1

IP 172.16.1.17 > 172.16.1.33: ICMP echo reply, id 49153, seq 2, length 64

14:16:59.323134 IP 192.168.31.104.47460 > 192.168.31.103.otv: OTV, flags [I] (0x08), overlay 0, instance 1

IP 172.16.1.33 > 172.16.1.17: ICMP echo request, id 49153, seq 3, length 64

14:16:59.323876 IP 192.168.31.103.59652 > 192.168.31.104.otv: OTV, flags [I] (0x08), overlay 0, instance 1

IP 172.16.1.17 > 172.16.1.33: ICMP echo reply, id 49153, seq 3, length 64

14:17:01.329459 IP 192.168.31.104.45810 > 192.168.31.103.otv: OTV, flags [I] (0x08), overlay 0, instance 1

ARP, Request who-has 172.16.1.17 tell 172.16.1.33, length 28

14:17:01.330025 IP 192.168.31.103.55260 > 192.168.31.104.otv: OTV, flags [I] (0x08), overlay 0, instance 1

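ARP, Reply 172.16.1.17 is-at fa:16:3e:74:ad:39, length 28

The vxlan network type differs from flat and vlan. flat and vlan networks can reach other networks through routing on external network devices, whereas a vxlan network cannot talk to external networks directly: its traffic only flows between instances across the hosts, encapsulated in UDP.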
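The `flags [I] (0x08)` and `instance 1` fields in the capture come from the 8-byte VXLAN header that sits between the outer UDP header and the inner Ethernet frame. tcpdump prints the packets as OTV because UDP port 8472 is registered to that protocol; the Linux kernel uses 8472 as its default VXLAN port, while the IANA-assigned VXLAN port is 4789. Below is a minimal sketch of packing and unpacking that header with the standard layout (one flags byte, three reserved bytes, a 24-bit VNI, one reserved byte); the function names are hypothetical.

```python
import struct
from typing import Tuple

VNI_VALID_FLAG = 0x08  # the "I" flag: the VNI field carries a valid ID
MAX_VNI = 2 ** 24 - 1


def pack_vxlan_header(vni: int) -> bytes:
    """Build the 8-byte VXLAN header for a given network identifier."""
    if not 0 <= vni <= MAX_VNI:
        raise ValueError("VNI must fit in 24 bits")
    # First 32-bit word: flags in the top byte, remaining 24 bits reserved.
    # Second 32-bit word: VNI in the top 24 bits, low byte reserved.
    return struct.pack("!II", VNI_VALID_FLAG << 24, vni << 8)


def unpack_vxlan_header(data: bytes) -> Tuple[int, int]:
    """Return (flags, vni) from the first 8 bytes of a VXLAN payload."""
    flags_word, vni_word = struct.unpack("!II", data[:8])
    return flags_word >> 24, vni_word >> 8


if __name__ == "__main__":
    header = pack_vxlan_header(1)
    print(header.hex())
    print(unpack_vxlan_header(header))
```

With VNI 1, the segmentation ID chosen for vxlan1, the decoded flags and ID line up with what tcpdump prints for every packet in the capture.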

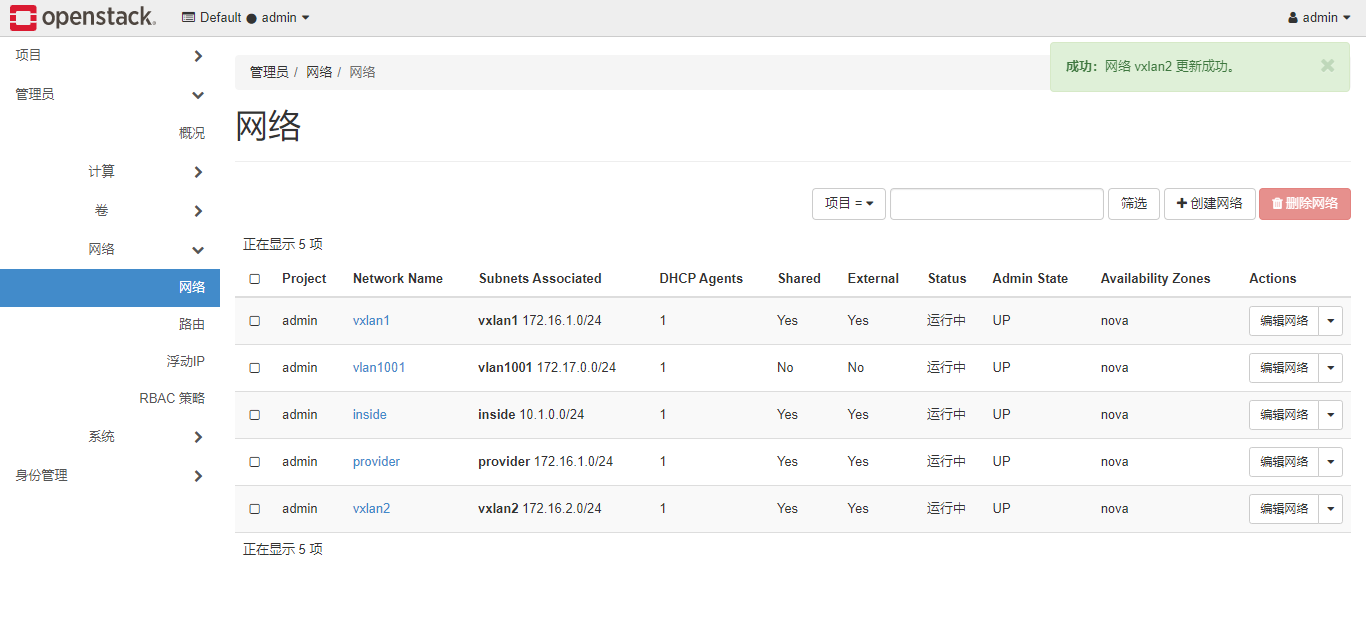

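Instances on different vxlan networks can communicate through the routing feature that OpenStack provides. Add a second vxlan network, vxlan2, with subnet 172.16.2.0/24 and gateway 172.16.2.1. Then mark both vxlan1 and vxlan2 as shared networks, which can be done by editing the networks under the Admin menu.

Create an instance vxlan2-1 on the vxlan2 network and test whether it can reach vxlan1-1. The test shows that vxlan1-1 cannot ping the IP address of vxlan2-1.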

[root@node1 ~]# openstack server list --long

+--------------------------------------+----------+--------+------------+-------------+--------------------+--------------------------+--------------------------+-------------+-----------+-------------------+-------+------------+

| ID | Name | Status | Task State | Power State | Networks | Image Name | Image ID | Flavor Name | Flavor ID | Availability Zone | Host | Properties |

+--------------------------------------+----------+--------+------------+-------------+--------------------+--------------------------+--------------------------+-------------+-----------+-------------------+-------+------------+

| c3bc87b0-49cb-42c3-ac34-5a73b7222daa | vxlan2-1 | ACTIVE | None | Running | vxlan2=172.16.2.19 | N/A (booted from volume) | N/A (booted from volume) | m1.nano | 0 | nova | node5 | |

| dc828568-f60c-4259-898c-8c43d5c1fe64 | vxlan1-2 | ACTIVE | None | Running | vxlan1=172.16.1.17 | N/A (booted from volume) | N/A (booted from volume) | m1.nano | 0 | nova | node3 | |

| fdb2c8a0-4ed4-4842-ac5b-40aa9b6afb48 | vxlan1-1 | ACTIVE | None | Running | vxlan1=172.16.1.33 | N/A (booted from volume) | N/A (booted from volume) | m1.nano | 0 | nova | node4 | |

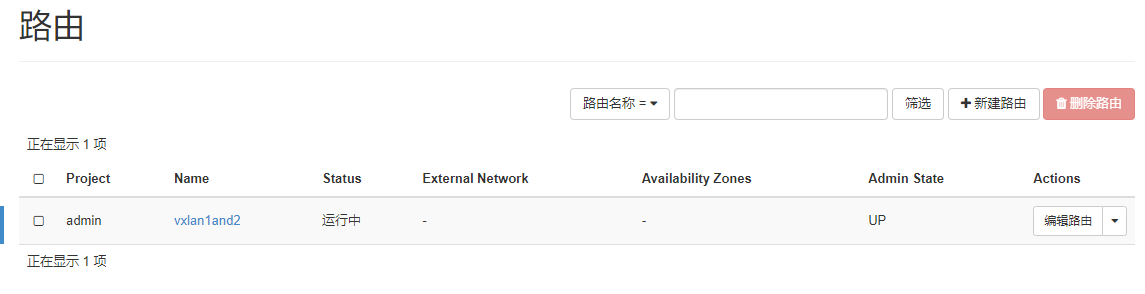

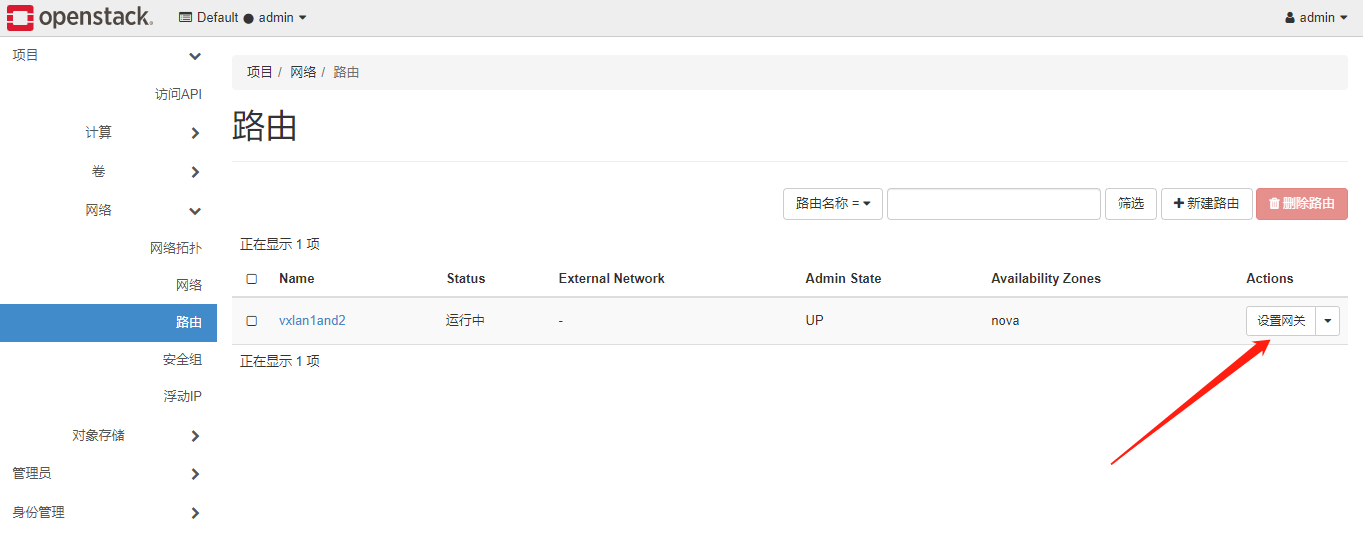

+--------------------------------------+----------+--------+------------+-------------+--------------------+--------------------------+--------------------------+-------------+-----------+-------------------+-------+------------+ 在OpenStack中添加路由,vxlan1和vlxna2的添加路由方式相同。添加vxlan1and2的路由如下:

添加完成后如下所示:

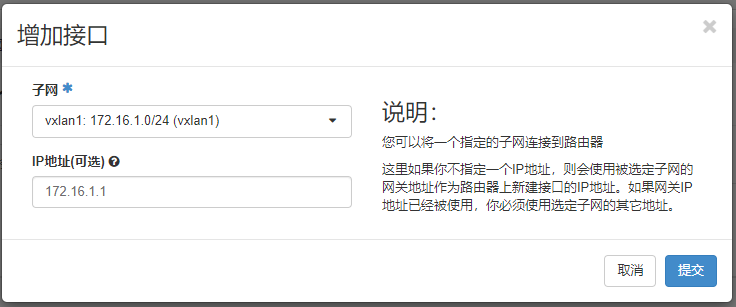

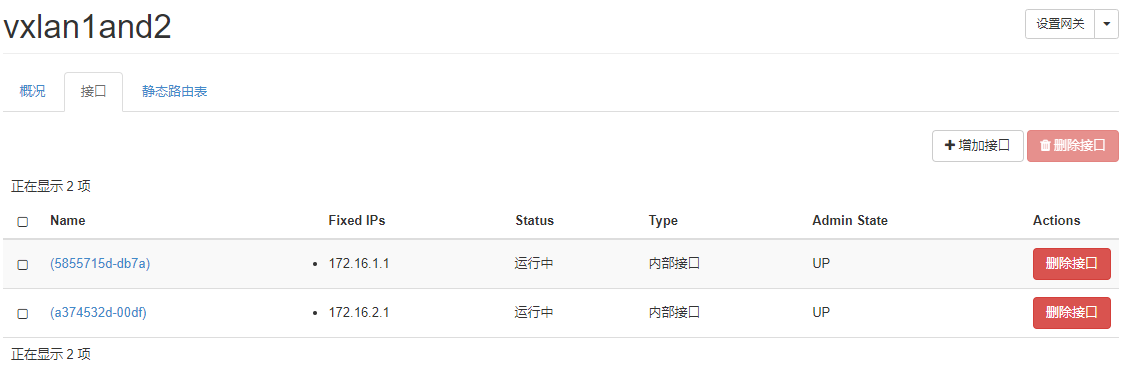

Add an interface to the vxlan1and2 router: select the vxlan1 subnet and set the IP address to 172.16.1.1, because that is the gateway specified when the network was created.

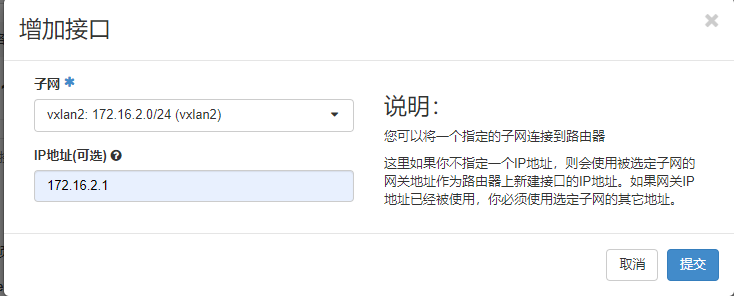

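Add another interface to the vxlan1and2 router: select the vxlan2 subnet and set the IP address to 172.16.2.1, because that is the gateway specified when the network was created.

Once added, it looks like this:

With both interfaces in place, vxlan1-1 can now ping the IP address of vxlan2-1.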

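OpenStack router analysis

Adding a router in OpenStack essentially creates a veth pair for each attached subnet. One end is plugged into the network's bridge, the same bridge that holds the host end of the DHCP namespace's veth pair; the other end lives in the router's namespace and is configured with the gateway IP address. Traffic is then forwarded between the networks inside the router namespace.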
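Inside the router namespace the forwarding decision is ordinary IP routing over directly connected subnets. The sketch below models only that decision: given a destination address, pick the router interface whose subnet contains it. The interface names and addresses are taken from the router namespace output shown later in this section; the function itself is a hypothetical illustration, not neutron or kernel code.

```python
import ipaddress
from typing import Dict, Optional

# Router interfaces and their addresses, as they appear in the qrouter
# namespace output in this article.
ROUTER_INTERFACES: Dict[str, str] = {
    "qr-5855715d-db": "172.16.1.1/24",  # gateway of vxlan1
    "qr-a374532d-00": "172.16.2.1/24",  # gateway of vxlan2
}


def outgoing_interface(dst_ip: str,
                       interfaces: Dict[str, str] = ROUTER_INTERFACES
                       ) -> Optional[str]:
    """Return the interface whose connected subnet contains dst_ip.

    The longest matching prefix wins; None means no connected route.
    """
    dst = ipaddress.ip_address(dst_ip)
    best_name = None
    best_prefix = -1
    for name, cidr in interfaces.items():
        network = ipaddress.ip_interface(cidr).network
        if dst in network and network.prefixlen > best_prefix:
            best_name, best_prefix = name, network.prefixlen
    return best_name


if __name__ == "__main__":
    print(outgoing_interface("172.16.2.19"))  # vxlan2-1
    print(outgoing_interface("172.16.1.33"))  # vxlan1-1
```

A packet from vxlan1-1 to vxlan2-1 arrives on the vxlan1 gateway interface and leaves through the vxlan2 one, which is why both gateway addresses have to live in the same namespace.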

List the router's namespace:

[root@node1 ~]# ip netns ls

qrouter-636634da-8514-4b6a-9a11-43ddf1cb5682 (id: 4)

The bridge listing shows a new virtual NIC, tap5855715d-db, on bridge brq5415275c-9c, which connects it to the vxlan1 network, and a new tapa374532d-00 on bridge brqe1299b5f-86, which connects it to the vxlan2 network:

[root@node1 ~]# brctl show

bridge name bridge id STP enabled interfaces

brq5415275c-9c 8000.46ca3d9407da no tap5855715d-db

tap74b9fa42-6a

vxlan-1

brqe1299b5f-86 8000.2ed0545ab821 no tapa374532d-00

taped3f4470-9e

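vxlan-2

Inspecting the router namespace qrouter-636634da-8514-4b6a-9a11-43ddf1cb5682 shows that the gateway IP addresses of both networks configured in the Dashboard live in this namespace, so the two networks can now communicate with each other: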

[root@node1 ~]# ip netns exec qrouter-636634da-8514-4b6a-9a11-43ddf1cb5682 ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: qr-5855715d-db@if26: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP group default qlen 1000

link/ether fa:16:3e:dd:e1:a2 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 172.16.1.1/24 brd 172.16.1.255 scope global qr-5855715d-db

valid_lft forever preferred_lft forever

inet6 fe80::f816:3eff:fedd:e1a2/64 scope link

valid_lft forever preferred_lft forever

3: qr-a374532d-00@if27: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP group default qlen 1000

link/ether fa:16:3e:a9:bd:74 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 172.16.2.1/24 brd 172.16.2.255 scope global qr-a374532d-00

valid_lft forever preferred_lft forever

inet6 fe80::f816:3eff:fea9:bd74/64 scope link

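valid_lft forever preferred_lft forever

Through a router, a vxlan network can communicate not only with another vxlan network but also with instances on other network types; the principle is the same as above. However, because of the default route (on vlan and flat networks the default gateway is an external network device), static routes may also need to be added on the router, and the instances themselves may need extra routes as well.

Because of the nature of vxlan networks, services offered by vxlan instances have to be reached from outside through a floating IP.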

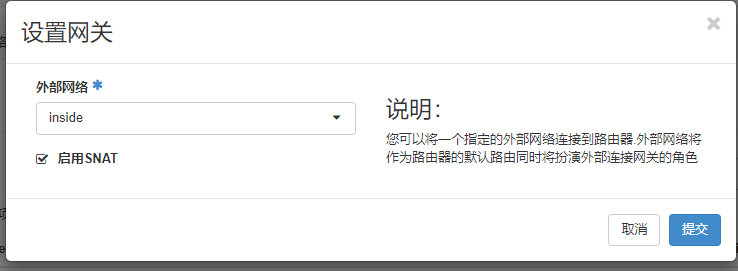

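Before adding a floating IP, first configure the router of the vxlan network and set its external network; here it is set to inside. vxlan1 and provider use the same subnet, 172.16.1.0/24, so choosing provider as the external network fails with a network conflict error.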
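The conflict is easy to check ahead of time with Python's standard ipaddress module. This is only a sketch of the overlap test, assuming the inside subnet is 10.1.0.0/24 (inferred from the 10.1.0.x/24 addresses that appear in this article); it does not reproduce neutron's own validation.

```python
import ipaddress


def subnets_conflict(internal_cidr: str, external_cidr: str) -> bool:
    """Return True when the two subnets share at least one address."""
    internal = ipaddress.ip_network(internal_cidr)
    external = ipaddress.ip_network(external_cidr)
    return internal.overlaps(external)


if __name__ == "__main__":
    vxlan1 = "172.16.1.0/24"
    print("provider:", subnets_conflict(vxlan1, "172.16.1.0/24"))
    print("inside:", subnets_conflict(vxlan1, "10.1.0.0/24"))
```

A router cannot have the same subnet on both its internal and external side, which is why inside works as the external network here and provider does not.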

Allocate a floating IP

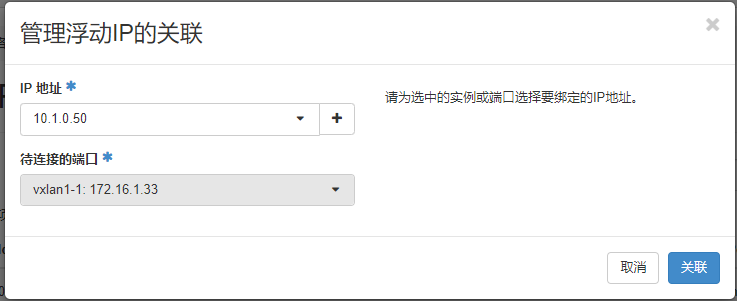

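Associate the allocated floating IP

Associate the allocated floating IP 10.1.0.50 with the instance IP address 172.16.1.33.

After the association, the mapping is visible on the floating IP page.

The instance can now be reached through the floating IP; underneath, iptables performs a one-to-one address mapping.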
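The one-to-one mapping can be pictured as a small translation table. The toy model below rewrites the destination of inbound packets (DNAT) and, as an assumption added for symmetry, the source of outbound packets (SNAT); this article only shows the DNAT rule. The function names are hypothetical and no iptables behaviour beyond the address rewrite is modelled.

```python
from typing import Dict

# Floating IP -> fixed (instance) IP, using the pair from this article.
FLOATING_TO_FIXED: Dict[str, str] = {"10.1.0.50": "172.16.1.33"}


def dnat(dst_ip: str, table: Dict[str, str] = FLOATING_TO_FIXED) -> str:
    """Rewrite the destination of an inbound packet, if it is a floating IP."""
    return table.get(dst_ip, dst_ip)


def snat(src_ip: str, table: Dict[str, str] = FLOATING_TO_FIXED) -> str:
    """Rewrite the source of an outbound packet (assumed reverse mapping)."""
    fixed_to_floating = {fixed: floating for floating, fixed in table.items()}
    return fixed_to_floating.get(src_ip, src_ip)


if __name__ == "__main__":
    print(dnat("10.1.0.50"))    # traffic to the floating IP
    print(dnat("10.1.0.83"))    # other addresses are left untouched
    print(snat("172.16.1.33"))  # replies leaving the instance
```

Addresses that are not in the table pass through unchanged, which is why only the instance with an associated floating IP becomes reachable from outside.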

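OpenStack floating IP analysis

A floating IP requires a router first and is then configured on top of it. Inspecting the router's network namespace shows that the floating IP is simply added inside that namespace. The namespace also gained an address on the inside network, 10.1.0.83/24, which is the router's own address used when forwarding traffic: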

[root@node1 ~]# ip netns exec qrouter-636634da-8514-4b6a-9a11-43ddf1cb5682 ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: qr-5855715d-db@if26: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP group default qlen 1000

link/ether fa:16:3e:dd:e1:a2 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 172.16.1.1/24 brd 172.16.1.255 scope global qr-5855715d-db

valid_lft forever preferred_lft forever

inet6 fe80::f816:3eff:fedd:e1a2/64 scope link

valid_lft forever preferred_lft forever

3: qr-a374532d-00@if27: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP group default qlen 1000

link/ether fa:16:3e:a9:bd:74 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 172.16.2.1/24 brd 172.16.2.255 scope global qr-a374532d-00

valid_lft forever preferred_lft forever

inet6 fe80::f816:3eff:fea9:bd74/64 scope link

valid_lft forever preferred_lft forever

6: qg-adbbc7d0-4e@if30: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether fa:16:3e:ce:1a:6b brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 10.1.0.83/24 brd 10.1.0.255 scope global qg-adbbc7d0-4e

valid_lft forever preferred_lft forever

inet 10.1.0.50/32 brd 10.1.0.50 scope global qg-adbbc7d0-4e

valid_lft forever preferred_lft forever

inet6 fe80::f816:3eff:fece:1a6b/64 scope link

valid_lft forever preferred_lft forever

# The other end of the virtual NIC qg-adbbc7d0-4e is tapadbbc7d0-4e, which sits on the same bridge as ens20 and tapfea707b3-91

brq6f032080-47 8000.4a7c30e1d740 no ens20

tapadbbc7d0-4e

tapfea707b3-91

# The inside network's namespace shows that tapfea707b3-91 and ns-fea707b3-91 are a pair, so the inside network and the vxlan1 network can now communicate

[root@node1 ~]# ip netns exec qdhcp-6f032080-473d-4aa8-8cc3-074f23ecc4dd ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ns-fea707b3-91@if6: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether fa:16:3e:02:67:64 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 169.254.169.254/32 brd 169.254.169.254 scope global ns-fea707b3-91

valid_lft forever preferred_lft forever

inet 10.1.0.10/24 brd 10.1.0.255 scope global ns-fea707b3-91

valid_lft forever preferred_lft forever

inet6 fe80::a9fe:a9fe/64 scope link

valid_lft forever preferred_lft forever

inet6 fe80::f816:3eff:fe02:6764/64 scope link

valid_lft forever preferred_lft forever

A DNAT rule in the router namespace then completes the one-to-one mapping between the floating IP and the instance IP:

[root@node1 ~]# ip netns exec qrouter-636634da-8514-4b6a-9a11-43ddf1cb5682 iptables -t nat -L neutron-l3-agent-OUTPUT -n

Chain neutron-l3-agent-OUTPUT (1 references)

target prot opt source destination

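DNAT all -- 0.0.0.0/0 10.1.0.50 to:172.16.1.33

How an instance obtains its IP address

This section follows the vxlan1-1 instance as it obtains its address from DHCP, to explain the process in detail. Instances on other network types obtain their DHCP addresses in the same way.

When the vxlan1 network is created, a vxlan-1 interface is created on the controller node node1, running on NIC ens18. A bridge and a veth pair are created as well: one end of the pair stays on the host and is bridged with vxlan-1, and the other end is moved into the network namespace and given an IP address. dnsmasq is then started there to provide the DHCP service that assigns IP addresses to instances.

Checking the controller node network

vxlan-1 runs on NIC ens18; the dev ens18 field in the output indicates the underlying NIC: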

[root@node1 ~]# ip -d link show vxlan-1

14: vxlan-1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master brq5415275c-9c state UNKNOWN mode DEFAULT group default qlen 1000

link/ether 82:a3:88:c3:c2:e3 brd ff:ff:ff:ff:ff:ff promiscuity 1 minmtu 68 maxmtu 65535

vxlan id 1 group 224.0.0.1 dev ens18 srcport 0 0 dstport 8472 ttl auto ageing 300 udpcsum noudp6zerocsumtx noudp6zerocsumrx

vxlan-1 and the virtual NIC tap74b9fa42-6a are on the same bridge:

[root@node1 ~]# brctl show brq5415275c-9c

bridge name bridge id STP enabled interfaces

brq5415275c-9c 8000.46ca3d9407da no tap5855715d-db

tap74b9fa42-6a

vxlan-1

The other end of tap74b9fa42-6a, ns-74b9fa42-6a, is in the network namespace, where dnsmasq listens on it for DHCP requests from instances:

[root@node1 ~]# ip netns exec qdhcp-5415275c-9c56-4f09-aab3-3eabde4f4eb0 ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ns-74b9fa42-6a@if13: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP group default qlen 1000

link/ether fa:16:3e:31:14:a6 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 172.16.1.10/24 brd 172.16.1.255 scope global ns-74b9fa42-6a

valid_lft forever preferred_lft forever

inet 169.254.169.254/32 brd 169.254.169.254 scope global ns-74b9fa42-6a

valid_lft forever preferred_lft forever

inet6 fe80::a9fe:a9fe/64 scope link

valid_lft forever preferred_lft forever

inet6 fe80::f816:3eff:fe31:14a6/64 scope link

valid_lft forever preferred_lft forever

Look up the IDs of the processes running in the network namespace. The process turns out to be dnsmasq, which OpenStack uses to provide DHCP to instances:

[root@node1 ~]# ip netns pids qdhcp-5415275c-9c56-4f09-aab3-3eabde4f4eb0

83979

[root@node1 ~]# ps -ef | grep 83979

dnsmasq 83979 1 0 15:29 ? 00:00:00 dnsmasq --no-hosts --no-resolv --pid-file=/var/lib/neutron/dhcp/5415275c-9c56-4f09-aab3-3eabde4f4eb0/pid --dhcp-hostsfile=/var/lib/neutron/dhcp/5415275c-9c56-4f09-aab3-3eabde4f4eb0/host --addn-hosts=/var/lib/neutron/dhcp/5415275c-9c56-4f09-aab3-3eabde4f4eb0/addn_hosts --dhcp-optsfile=/var/lib/neutron/dhcp/5415275c-9c56-4f09-aab3-3eabde4f4eb0/opts --dhcp-leasefile=/var/lib/neutron/dhcp/5415275c-9c56-4f09-aab3-3eabde4f4eb0/leases --dhcp-match=set:ipxe,175 --dhcp-userclass=set:ipxe6,iPXE --local-service --bind-dynamic --dhcp-range=set:subnet-b7cf01d1-1331-4d1b-a86a-00e58a0f3c3a,172.16.1.0,static,255.255.255.0,86400s --dhcp-option-force=option:mtu,1450 --dhcp-lease-max=256 --conf-file=/dev/null --domain=openstacklocal

root 87373 84789 0 16:17 pts/0 00:00:00 grep --color=auto 83979

The dnsmasq hosts file lists the DHCP entries served to specific hosts, and these match the instances' configuration. 172.16.1.33 is the IP address of instance vxlan1-1:

[root@node1 ~]# cat /var/lib/neutron/dhcp/5415275c-9c56-4f09-aab3-3eabde4f4eb0/host

fa:16:3e:de:6e:86,host-172-16-1-33.openstacklocal,172.16.1.33

fa:16:3e:dd:e1:a2,host-172-16-1-1.openstacklocal,172.16.1.1

fa:16:3e:74:ad:39,host-172-16-1-17.openstacklocal,172.16.1.17
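Each line of the hosts file is a comma-separated MAC address, hostname, and IP address, so it is straightforward to parse and cross-check. The sketch below assumes every line carries at least the three fields shown above (extra fields, if any, are ignored) and that hostnames follow the `host-<ip with dashes>.openstacklocal` pattern visible in these entries. The names HostEntry, parse_hosts, and expected_hostname are hypothetical.

```python
from typing import List, NamedTuple


class HostEntry(NamedTuple):
    mac: str
    hostname: str
    ip: str


def parse_hosts(text: str) -> List[HostEntry]:
    """Parse the contents of a dnsmasq hosts file into entries."""
    entries = []
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue  # skip blank lines
        mac, hostname, ip = line.split(",")[:3]
        entries.append(HostEntry(mac, hostname, ip))
    return entries


def expected_hostname(ip: str, domain: str = "openstacklocal") -> str:
    """Hostname pattern observed in the entries of this article."""
    return "host-" + ip.replace(".", "-") + "." + domain


if __name__ == "__main__":
    sample = (
        "fa:16:3e:de:6e:86,host-172-16-1-33.openstacklocal,172.16.1.33\n"
        "fa:16:3e:dd:e1:a2,host-172-16-1-1.openstacklocal,172.16.1.1\n"
        "fa:16:3e:74:ad:39,host-172-16-1-17.openstacklocal,172.16.1.17\n"
    )
    for entry in parse_hosts(sample):
        print(entry.ip, entry.mac, entry.hostname == expected_hostname(entry.ip))
```

Because dnsmasq runs with a static range, only MAC addresses listed in this file receive a lease, and each one always receives the same address.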

[root@node1 ~]# openstack server list

+--------------------------------------+----------+--------+-------------------------------+--------------------------+---------+

| ID | Name | Status | Networks | Image | Flavor |

+--------------------------------------+----------+--------+-------------------------------+--------------------------+---------+

| c3bc87b0-49cb-42c3-ac34-5a73b7222daa | vxlan2-1 | ACTIVE | vxlan2=172.16.2.19 | N/A (booted from volume) | m1.nano |

| dc828568-f60c-4259-898c-8c43d5c1fe64 | vxlan1-2 | ACTIVE | vxlan1=172.16.1.17 | N/A (booted from volume) | m1.nano |

| fdb2c8a0-4ed4-4842-ac5b-40aa9b6afb48 | vxlan1-1 | ACTIVE | vxlan1=172.16.1.33, 10.1.0.50 | N/A (booted from volume) | m1.nano |

+--------------------------------------+----------+--------+-------------------------------+--------------------------+---------+

Checking the compute node network

On node4, vxlan-1 runs on ens18, the same as on the controller node:

[root@node4 ~]# ip -d link show vxlan-1

16: vxlan-1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master brq5415275c-9c state UNKNOWN mode DEFAULT group default qlen 1000

link/ether 06:bc:46:f0:8e:d9 brd ff:ff:ff:ff:ff:ff promiscuity 1 minmtu 68 maxmtu 65535

vxlan id 1 local 192.168.31.104 dev ens18 srcport 0 0 dstport 8472 ttl auto ageing 300 udpcsum noudp6zerocsumtx noudp6zerocsumrx

vxlan-1 and the virtual NIC tap145db8f2-ce are bridged, and the other end of tap145db8f2-ce is inside the instance, so the instance can obtain the IP address assigned by DHCP:

[root@node4 ~]# brctl show brq5415275c-9c

bridge name bridge id STP enabled interfaces

brq5415275c-9c 8000.06bc46f08ed9 no tap145db8f2-ce

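vxlan-1

For additional security, OpenStack installs iptables rules. If DHCP is disabled on the network and an external DHCP server is used instead, the instance can still obtain an IP address, but the iptables rules prevent it from communicating with the outside: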

[root@node4 ~]# iptables -nv -L neutron-linuxbri-i145db8f2-c

Chain neutron-linuxbri-i145db8f2-c (1 references)

pkts bytes target prot opt in out source destination

1298 118K RETURN all -- * * 0.0.0.0/0 0.0.0.0/0 state RELATED,ESTABLISHED /* Direct packets associated with a known session to the RETURN chain. */

2 730 RETURN udp -- * * 0.0.0.0/0 172.16.1.33 udp spt:67 dpt:68

0 0 RETURN udp -- * * 0.0.0.0/0 255.255.255.255 udp spt:67 dpt:68

0 0 RETURN all -- * * 0.0.0.0/0 0.0.0.0/0 match-set NIPv49612db0d-b44e-4eba-9e8e- src

5 300 RETURN tcp -- * * 0.0.0.0/0 0.0.0.0/0 tcp dpt:22

1 84 RETURN icmp -- * * 0.0.0.0/0 0.0.0.0/0

0 0 DROP all -- * * 0.0.0.0/0 0.0.0.0/0 state INVALID /* Drop packets that appear related to an existing connection (e.g. TCP ACK/FIN) but do not have an entry in conntrack. */

2 658 neutron-linuxbri-sg-fallback all -- * * 0.0.0.0/0 0.0.0.0/0 /* Send unmatched traffic to the fallback chain. */

Chain neutron-linuxbri-o145db8f2-c (2 references)

target prot opt source destination

RETURN udp -- 0.0.0.0 255.255.255.255 udp spt:68 dpt:67 /* Allow DHCP client traffic. */

neutron-linuxbri-s145db8f2-c all -- 0.0.0.0/0 0.0.0.0/0

RETURN udp -- 0.0.0.0/0 0.0.0.0/0 udp spt:68 dpt:67 /* Allow DHCP client traffic. */

DROP udp -- 0.0.0.0/0 0.0.0.0/0 udp spt:67 dpt:68 /* Prevent DHCP Spoofing by VM. */

RETURN all -- 0.0.0.0/0 0.0.0.0/0 state RELATED,ESTABLISHED /* Direct packets associated with a known session to the RETURN chain. */

RETURN all -- 0.0.0.0/0 0.0.0.0/0

DROP all -- 0.0.0.0/0 0.0.0.0/0 state INVALID /* Drop packets that appear related to an existing connection (e.g. TCP ACK/FIN) but do not have an entry in conntrack. */

neutron-linuxbri-sg-fallback all -- 0.0.0.0/0 0.0.0.0/0 /* Send unmatched traffic to the fallback chain. */

# The IP and MAC address are restricted here; this only blocks traffic, and the instance can still obtain an IP address from DHCP

[root@node4 ~]# iptables -nv -L neutron-linuxbri-s145db8f2-c

Chain neutron-linuxbri-s145db8f2-c (1 references)

pkts bytes target prot opt in out source destination

1876 164K RETURN all -- * * 172.16.1.33 0.0.0.0/0 MAC FA:16:3E:DE:6E:86 /* Allow traffic from defined IP/MAC pairs. */

0 0 DROP all -- * * 0.0.0.0/0 0.0.0.0/0 /* Drop traffic without an IP/MAC allow rule. */

In addition, the nat table of ebtables restricts the instance's ARP traffic:

ebtables -t nat -L
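The effect of the neutron-linuxbri-s145db8f2-c chain shown above can be summarised as a lookup against the allowed IP/MAC pairs. The sketch below models only that final decision, using the pair from the chain output; the function name is hypothetical and the rest of the security group processing is left out.

```python
from typing import Set, Tuple

# Allowed (source IP, source MAC) pairs, taken from the chain output above.
ALLOWED_PAIRS: Set[Tuple[str, str]] = {("172.16.1.33", "fa:16:3e:de:6e:86")}


def spoof_check(src_ip: str, src_mac: str,
                allowed: Set[Tuple[str, str]] = ALLOWED_PAIRS) -> str:
    """Return the iptables verdict for traffic leaving the instance."""
    if (src_ip, src_mac.lower()) in allowed:
        return "RETURN"  # pair is known: continue with the remaining rules
    return "DROP"        # no IP/MAC allow rule matches


if __name__ == "__main__":
    # Address handed out by neutron's own DHCP service.
    print(spoof_check("172.16.1.33", "FA:16:3E:DE:6E:86"))
    # Same NIC, but an address obtained from an external DHCP server.
    print(spoof_check("172.16.1.99", "FA:16:3E:DE:6E:86"))
```

This is why an instance that picks up a different address from an external DHCP server ends up with an IP it cannot actually use.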
