AMQP: Kafka

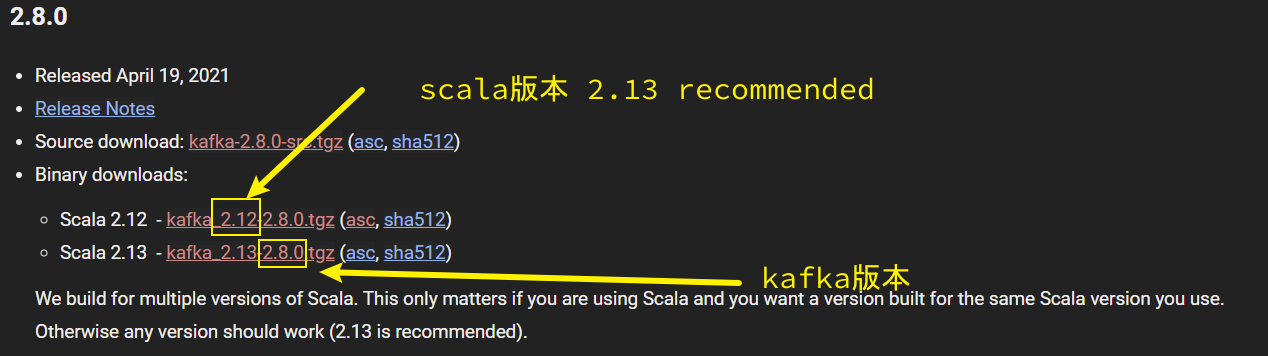

- download Kafka

http://kafka.apache.org/downloads

- official quick start

http://kafka.apache.org/quickstart (for a single node, follow this guide)

- install to /opt/kafka

      tar xf kafka_2.13-2.8.0.tgz --directory /opt
      cd /opt
      ln -svnf kafka_2.13-2.8.0 kafka

- configuration

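      # Identifies the broker within the cluster; must be unique.
      # Change it together with the listener addresses below.
      broker.id=1
      # Listener addresses; set both to this host's IP.
      listeners=PLAINTEXT://192.168.8.11:9092
      advertised.listeners=PLAINTEXT://192.168.8.11:9092
      # Data directory. It does not need to exist beforehand; several disk
      # paths can be listed, separated by commas.
      log.dirs=/opt/kafka/data/kafka
      # ZooKeeper connection string; the /kafka chroot under the ZooKeeper root
      # keeps Kafka's znodes in one place.
      zookeeper.connect=veer1:2181,veer2:2181,veer3:2181/kafka
      # Number of threads handling network requests.
      num.network.threads=3
      # Number of threads handling disk I/O.
      num.io.threads=8
      # Send buffer size of the socket.
      socket.send.buffer.bytes=102400
      # Receive buffer size of the socket.
      socket.receive.buffer.bytes=102400
      # Maximum size of a request the socket server accepts.
      socket.request.max.bytes=104857600
      # Default number of partitions per topic.
      num.partitions=1
      # Threads per data directory used for log recovery and cleanup.
      num.recovery.threads.per.data.dir=1
      # Replication factor of the internal consumer offsets topic.
      offsets.topic.replication.factor=1
      # Replication factor and minimum ISR of the internal transaction state topic.
      transaction.state.log.replication.factor=1
      transaction.state.log.min.isr=1
      # Maximum time a segment file is kept before it is deleted.
      log.retention.hours=168
      # Maximum size of a segment file (1 GiB).
      log.segment.bytes=1073741824
      # How often expired data is checked for (every 5 minutes).
      log.retention.check.interval.ms=300000
      zookeeper.connection.timeout.ms=18000
      group.initial.rebalance.delay.ms=0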

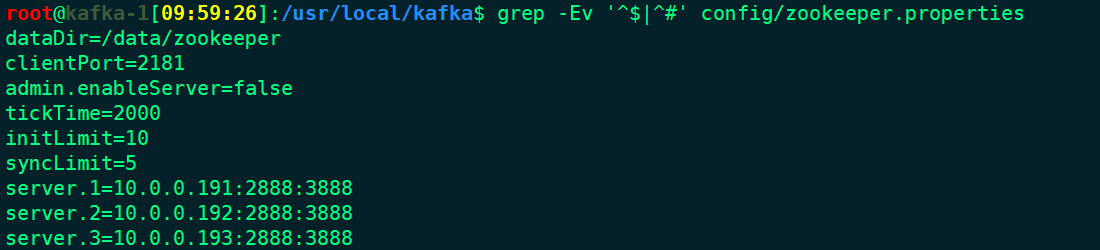

    cat > /etc/profile.d/kafka.sh <<'EOF'
    export KAFKA_HOME=/opt/kafka
    export PATH=$PATH:$KAFKA_HOME/bin
    EOF

    # ZooKeeper configuration
    cat > /opt/kafka/config/zookeeper.properties <<'EOF'
    dataDir=/opt/kafka/data/zookeeper
    clientPort=2181
    admin.enableServer=false
    tickTime=2000
    initLimit=10
    syncLimit=5
    server.1=192.168.8.11:2888:3888
    server.2=192.168.8.12:2888:3888
    server.3=192.168.8.13:2888:3888
    EOF

    # Kafka configuration (shown for server 3)
    cat > /opt/kafka/config/server.properties <<'EOF'
    broker.id=3
    listeners=PLAINTEXT://192.168.8.13:9092
    advertised.listeners=PLAINTEXT://192.168.8.13:9092
    log.dirs=/opt/kafka/data/kafka
    zookeeper.connect=veer1:2181,veer2:2181,veer3:2181/kafka
    num.network.threads=3
    num.io.threads=8
    socket.send.buffer.bytes=102400
    socket.receive.buffer.bytes=102400
    socket.request.max.bytes=104857600
    num.partitions=1
    num.recovery.threads.per.data.dir=1
    offsets.topic.replication.factor=1
    transaction.state.log.replication.factor=1
    transaction.state.log.min.isr=1
    log.retention.hours=168
    log.segment.bytes=1073741824
    log.retention.check.interval.ms=300000
    zookeeper.connection.timeout.ms=18000
    group.initial.rebalance.delay.ms=0
    EOF

    mkdir -pv /opt/kafka/data/{kafka,zookeeper}

    # server 1
    echo 1 > /opt/kafka/data/zookeeper/myid
    # server 2
    echo 2 > /opt/kafka/data/zookeeper/myid
    # server 3
    echo 3 > /opt/kafka/data/zookeeper/myid
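Only three settings in server.properties differ between the hosts, and they follow the same numbering as the myid files. A minimal sketch of deriving them, assuming server N has the address 192.168.8.1N as in the server.N entries of zookeeper.properties; the function name `broker_overrides` is made up for this example and is not part of Kafka:

```shell
#!/bin/bash
# Hypothetical helper: print the host-specific settings for server N (1..3).
# Assumption: server N has the address 192.168.8.1N, matching the
# server.N entries in zookeeper.properties.
broker_overrides() {
  local n=$1
  case $n in
    1|2|3) ;;
    *) echo "usage: broker_overrides 1|2|3" >&2; return 1 ;;
  esac
  local ip="192.168.8.1${n}"
  printf 'broker.id=%s\n' "$n"
  printf 'listeners=PLAINTEXT://%s:9092\n' "$ip"
  printf 'advertised.listeners=PLAINTEXT://%s:9092\n' "$ip"
}

# Print the three lines that differ on server 3.
broker_overrides 3
```

The output can be compared against the top of each host's server.properties before the brokers are started.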

- Topic

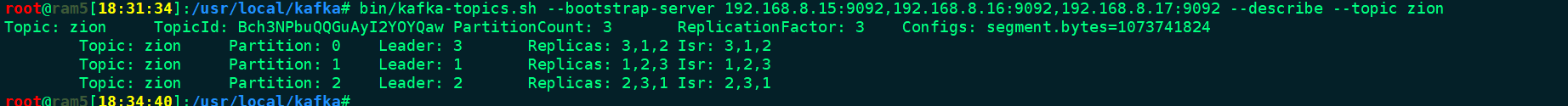

    # Create a topic with 3 replicas. The replication factor cannot exceed the number
    # of brokers; 5 fails with "Replication factor: 5 larger than available brokers: 3"
    kafka-topics.sh --bootstrap-server 192.168.8.11:9092,192.168.8.12:9092,192.168.8.13:9092 --create --topic zion --replication-factor 3 --partitions 3

    # List topics
    kafka-topics.sh --bootstrap-server 192.168.8.11:9092,192.168.8.12:9092,192.168.8.13:9092 --list

    # Show topic details
    kafka-topics.sh --bootstrap-server 192.168.8.11:9092,192.168.8.12:9092,192.168.8.13:9092 --describe --topic zion

    # Delete a topic
    kafka-topics.sh --bootstrap-server 192.168.8.11:9092,192.168.8.12:9092,192.168.8.13:9092 --delete --topic zion

    # Change the partition count; it can only be increased, never reduced
    kafka-topics.sh --bootstrap-server 192.168.8.11:9092,192.168.8.12:9092,192.168.8.13:9092 --topic zion --alter --partitions 4

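"leader": the node responsible for all reads and writes of the given partition. Each node is the leader for a randomly selected share of the partitions.

"replicas": the list of nodes that hold a copy of the partition, shown regardless of whether a node is the leader or even currently alive.

"isr": the "in-sync" replicas, i.e. the replicas that are alive and caught up with the leader.

The numbers listed under Replicas and Isr (for example 1,2,0) are the broker.id values of the three brokers.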
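The relation between Replicas and Isr can be checked mechanically: a partition whose Isr list is shorter than its Replicas list has a replica that is down or lagging. A minimal sketch, assuming the `Partition:`, `Replicas:` and `Isr:` labels of the describe output; the function name `under_replicated` and the sample lines are illustrative, not captured from a real cluster:

```shell
#!/bin/bash
# Hypothetical helper: read `kafka-topics.sh --describe` output on stdin and
# print every partition whose Isr list is shorter than its Replicas list.
under_replicated() {
  awk '
    /Partition: / && /Replicas: / && /Isr: / {
      part = ""; replicas = ""; isr = ""
      # Each value follows its label as the next whitespace-separated field.
      for (i = 1; i < NF; i++) {
        if ($i == "Partition:") part = $(i + 1)
        if ($i == "Replicas:")  replicas = $(i + 1)
        if ($i == "Isr:")       isr = $(i + 1)
      }
      if (split(isr, a, ",") < split(replicas, b, ","))
        printf "partition %s: replicas=%s isr=%s\n", part, replicas, isr
    }'
}

# Illustrative input in the shape of the describe output (not real output).
printf '%s\n' \
  'Topic: zion  Partition: 0  Leader: 1  Replicas: 1,2,3  Isr: 1,2,3' \
  'Topic: zion  Partition: 1  Leader: 2  Replicas: 2,3,1  Isr: 2,1' |
  under_replicated
```

Against a live cluster the describe command would be piped into the function instead of the sample lines. kafka-topics.sh also has an `--under-replicated-partitions` option for `--describe`, which may be the simpler choice when only that filter is needed.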

- producer & consumer

      # producer and consumer
      kafka-console-producer.sh --bootstrap-server 192.168.8.11:9092 --topic zion
      kafka-console-consumer.sh --bootstrap-server 192.168.8.11:9092 --topic zion

      # consume from the beginning
      kafka-console-consumer.sh --bootstrap-server 192.168.8.11:9092 --topic zion --from-beginning

- change topic attributes (bin/kafka-configs.sh shows which attributes can be changed)

      bin/kafka-topics.sh --bootstrap-server 192.168.8.15:9092,192.168.8.16:9092,192.168.8.17:9092 --list
      bin/kafka-topics.sh --bootstrap-server 192.168.8.15:9092,192.168.8.16:9092,192.168.8.17:9092 --topic zion --describe

      # alter topic attributes
      bin/kafka-configs.sh --bootstrap-server 192.168.8.15:9092,192.168.8.16:9092,192.168.8.17:9092 --topic zion --describe
      bin/kafka-configs.sh --bootstrap-server 192.168.8.15:9092,192.168.8.16:9092,192.168.8.17:9092 --topic zion --alter --add-config max.message.bytes=16777216
      bin/kafka-configs.sh --bootstrap-server 192.168.8.15:9092,192.168.8.16:9092,192.168.8.17:9092 --topic zion --alter --delete-config max.message.bytes

      # alter partitions
      bin/kafka-topics.sh --bootstrap-server 192.168.8.15:9092,192.168.8.16:9092,192.168.8.17:9092 --topic zion --alter --partitions 5
      # fails: the partition count can't be reduced
      bin/kafka-topics.sh --bootstrap-server 192.168.8.15:9092,192.168.8.16:9092,192.168.8.17:9092 --topic zion --alter --partitions 4

      # delete topic
      bin/kafka-topics.sh --bootstrap-server 192.168.8.15:9092,192.168.8.16:9092,192.168.8.17:9092 --topic zion --delete

      # consumer groups
      bin/kafka-consumer-groups.sh --bootstrap-server 192.168.8.15:9092,192.168.8.16:9092,192.168.8.17:9092 --list
      bin/kafka-consumer-groups.sh --bootstrap-server 192.168.8.15:9092,192.168.8.16:9092,192.168.8.17:9092 --group console-consumer-40344 --describe
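Since the partition count of a topic can only grow, a wrapper script may want to reject a bad value before calling kafka-topics.sh at all. A minimal sketch of such a guard; `check_partition_increase` is a hypothetical name, and the current count is passed in by hand here rather than read from the cluster:

```shell
#!/bin/bash
# Hypothetical guard: succeed only if the requested partition count is
# strictly larger than the current one.
check_partition_increase() {
  local current=$1 requested=$2
  if (( requested > current )); then
    echo "ok: $current -> $requested"
  else
    echo "refused: partitions can only grow ($current -> $requested)" >&2
    return 1
  fi
}

# Example: going from 4 to 5 partitions passes the check.
check_partition_increase 4 5 &&
  echo "next step: kafka-topics.sh --alter --topic zion --partitions 5"
```

The guard only compares two numbers; in a real script the current count would have to come from the `--describe` output of the topic.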

- zookeeper

      # list the broker ids registered in ZooKeeper
      zookeeper-shell.sh veer1:2181 ls /kafka/brokers/ids

management script

    cat > kafka.sh <<'EOF'
    #!/bin/bash

    function usage(){
        echo -e "\e[7m$0 start | stop | status\e[0m"
        exit 5
    }

    if ! [[ $1 ]]; then
        usage
    fi

    declare -a hosts
    hosts=(veer1 veer2 veer3)

    case $1 in
    "start")
        # ZooKeeper first, then the brokers
        for i in "${hosts[@]}"; do
            echo "------------- Zookeeper $i Start ------------"
            ssh "$i" "/opt/kafka/bin/zookeeper-server-start.sh -daemon /opt/kafka/config/zookeeper.properties"
        done
        sleep 3
        for i in "${hosts[@]}"; do
            echo "------------- Kafka $i Start ------------"
            ssh "$i" "/opt/kafka/bin/kafka-server-start.sh -daemon /opt/kafka/config/server.properties"
        done
        ;;
    "stop")
        # brokers first, then ZooKeeper
        for i in "${hosts[@]}"; do
            echo "------------- Kafka $i Stop ------------"
            ssh "$i" "/opt/kafka/bin/kafka-server-stop.sh"
        done
        sleep 10
        for i in "${hosts[@]}"; do
            echo "------------- Zookeeper $i Stop ------------"
            ssh "$i" "/opt/kafka/bin/zookeeper-server-stop.sh"
        done
        ;;
    "status")
        for i in "${hosts[@]}"; do
            echo "------------- Kafka $i Status ------------"
            ssh "$i" "jps -ml"
        done
        ;;
    *)
        usage
        ;;
    esac
    EOF

start script

#!/bin/bash

sadistic=/usr/local/kafka

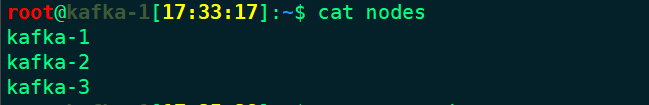

cat /root/nodes | while read line

do

{

echo zookeeper $line starting ...

ssh $line "nohup $sadistic/bin/zookeeper-server-start.sh $sadistic/config/zookeeper.properties >/dev/null 2>&1 &" </dev/null

}

done

wait

cat /root/nodes | while read line

do

{

echo kafka $line starting ...

ssh -f $line "nohup $sadistic/bin/kafka-server-start.sh $sadistic/config/server.properties >/dev/null 2>&1"

}

done

wait

stop script

    #!/bin/bash
    # Stop Kafka on every node listed in /root/nodes, then stop ZooKeeper.
    sadistic=/usr/local/kafka

    while read -r line; do
        echo "kafka $line stopping ..."
        ssh -n "$line" "$sadistic/bin/kafka-server-stop.sh"
    done < /root/nodes

    while read -r line; do
        echo "zookeeper $line stopping ..."
        ssh -n "$line" "$sadistic/bin/zookeeper-server-stop.sh"
    done < /root/nodes

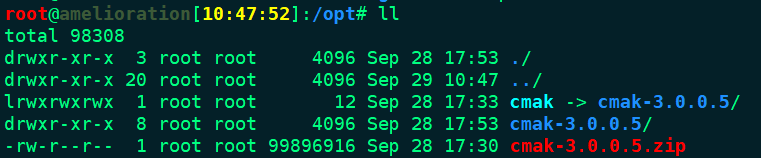

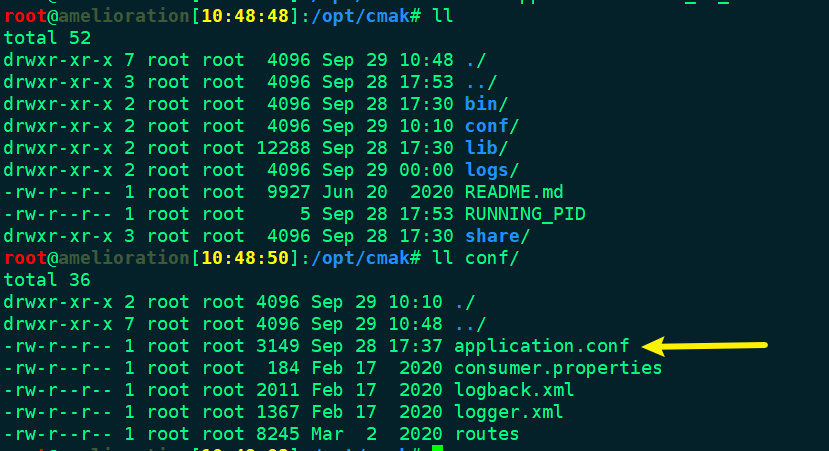

cmak

https://github.com/yahoo/CMAK/releases

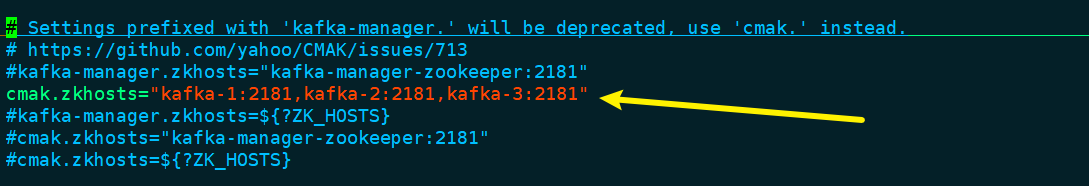

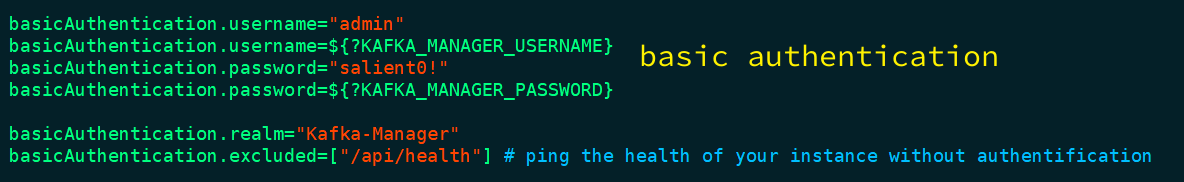

application.conf

logrotate

    cat >/etc/logrotate.d/kafka-manager <<EOF
    /usr/local/kafka-manager/logs/application.log {
        daily
        rotate 10
        dateext
        missingok
        notifempty
    }
    EOF

cmak.service

    cat > /etc/systemd/system/cmak.service <<EOF
    [Unit]
    Description=cmak service
    After=network.target

    [Service]
    WorkingDirectory=/opt/cmak
    ExecStart=/opt/cmak/bin/cmak -Dconfig.file=/opt/cmak/conf/application.conf -Dhttp.port=33333
    Restart=on-failure
    RestartSec=60

    [Install]
    WantedBy=multi-user.target
    EOF

cmak start script

    #!/bin/bash
    # stdout is redirected first so that stderr follows it into nohup.out
    nohup /opt/cmak/bin/cmak -Dconfig.file=/opt/cmak/conf/application.conf -Dhttp.port=33333 >nohup.out 2>&1 &
    echo "cmak starting ..."
    exit 0
