Docker Week 2 Homework Notes

Cross-host communication between Docker containers

Communication between containers on the same host

# Three containers on the same host
# centos 0c3d302cf050: 172.17.0.3
# nginx and php share one network (namespace): 172.17.0.2
root@docker-server1:~# docker ps
CONTAINER ID   IMAGE                   COMMAND                  CREATED          STATUS          PORTS                               NAMES
0c3d302cf050   centos:7.9.2009         "bash"                   10 minutes ago   Up 10 minutes                                       cranky_kirch
52f70d8565ba   php:7.4.30-fpm-alpine   "docker-php-entrypoi…"   43 minutes ago   Up 43 minutes                                       nervous_poincare
517ab18f9720   nginx:1.22.0-alpine     "/docker-entrypoint.…"   49 minutes ago   Up 49 minutes   0.0.0.0:80->80/tcp, :::80->80/tcp   practical_poitras
# Traffic between 172.17.0.2 and 172.17.0.3 does not pass through the host's eth0; the docker0 bridge forwards it directly
# ARP table of the centos container (install net-tools with `yum -y install net-tools` to get the arp command; ping first so that the entries get recorded)
[root@0c3d302cf050 /]# arp -n
Address                  HWtype  HWaddress           Flags Mask            Iface
172.17.0.2               ether   02:42:ac:11:00:02   C                     eth0
172.17.0.1               ether   02:42:24:68:e9:62   C                     eth0
# ARP table of the nginx container
/ # arp -a
? (172.17.0.1) at 02:42:24:68:e9:62 [ether]  on eth0
? (172.17.0.3) at 02:42:ac:11:00:03 [ether]  on eth0
# Host iptables: "tcp dpt:80 to:172.17.0.2:80". php's port 9000 is not published; to reach it you go through nginx, which calls php at 127.0.0.1:9000
Chain DOCKER (2 references)
 pkts bytes target     prot opt in       out     source               destination
    0     0 RETURN     all  --  docker0  *       0.0.0.0/0            0.0.0.0/0
    0     0 DNAT       tcp  --  !docker0 *       0.0.0.0/0            0.0.0.0/0            tcp dpt:80 to:172.17.0.2:80

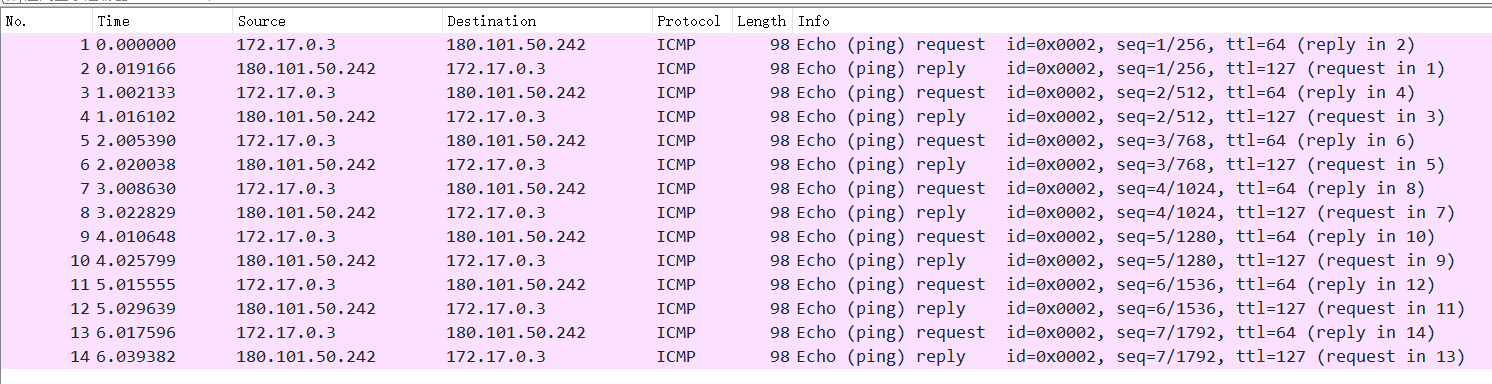
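Whether a container talks to a peer directly over the bridge (ARP for the peer's MAC, as in the ARP tables above) or hands the packet to its gateway 172.17.0.1 depends on whether the destination is in its own subnet. The bash sketch below makes that check explicit; the helper names `ip_to_int` and `same_subnet` are hypothetical, and the default docker0 network is assumed to be 172.17.0.0/16.

```bash
#!/usr/bin/env bash
# Convert a dotted-quad IPv4 address into a 32-bit integer.
ip_to_int() {
  local IFS=.
  local -a o
  read -r -a o <<< "$1"
  echo "$(( (o[0] << 24) | (o[1] << 16) | (o[2] << 8) | o[3] ))"
}

# Print "same" if both addresses fall inside the same /PREFIX network, else "different".
same_subnet() {
  local a b mask
  a=$(ip_to_int "$1")
  b=$(ip_to_int "$2")
  mask=$(( (0xFFFFFFFF << (32 - $3)) & 0xFFFFFFFF ))
  if (( (a & mask) == (b & mask) )); then echo same; else echo different; fi
}

# The two containers from the transcript on docker0 (172.17.0.0/16): delivered on the bridge.
same_subnet 172.17.0.2 172.17.0.3 16
```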

Packet capture: a container accessing the external network

# Capture on vethc64e300, docker0 and eth0 respectively
root@docker-server1:~# tcpdump -nn -vvv -i vethc64e300 ! port 22 and ! port 53 and ! arp -w 1-vethc64e300.pcap
root@docker-server1:~# tcpdump -nn -vvv -i docker0 ! port 22 and ! port 53 and ! arp -w 1-docker0.pcap
root@docker-server1:~# tcpdump -nn -vvv -i eth0 ! port 22 and ! port 53 and ! arp -w 1-eth00.pcap
tcpdump: listening on eth0, link-type EN10MB (Ethernet), snapshot length 262144 bytes

vethc64e300

docker0

eth0

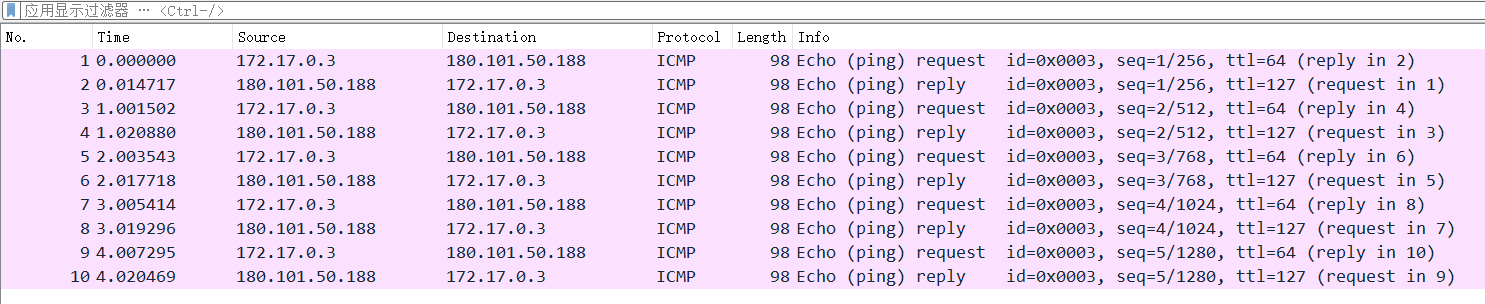
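The three captures above can be started from one loop. This is a sketch, not the author's script: the filter is quoted as a single argument (`not` is tcpdump's keyword form of `!`), the veth name comes from these notes and differs per container, and a `DRY_RUN` switch (an assumption for illustration) prints the commands instead of running them. With Docker's default MASQUERADE rule, the source address would be expected to be the container IP in the veth and docker0 captures and the host's eth0 address in the eth0 capture.

```bash
#!/usr/bin/env bash
# Capture the same traffic at each hop: container veth, docker0 bridge, host NIC.
# DRY_RUN=1 (the default here) only prints the commands; DRY_RUN=0 runs them (needs root).
DRY_RUN=${DRY_RUN:-1}
FILTER='not port 22 and not port 53 and not arp'

capture_cmds() {
  local ifc
  for ifc in "$@"; do
    local -a cmd=(tcpdump -nn -vvv -i "$ifc" -w "1-${ifc}.pcap" "$FILTER")
    if [ "$DRY_RUN" = 1 ]; then
      printf '%s\n' "${cmd[*]}"
    else
      "${cmd[@]}" &   # one background capture per interface
    fi
  done
  # In real mode, keep running until the captures are stopped (Ctrl-C).
  [ "$DRY_RUN" = 1 ] || wait
}

capture_cmds vethc64e300 docker0 eth0
```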

Cross-host communication


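1. The source container generates a request packet and sends it to its gateway, docker0.

2. docker0 checks the packet's destination IP: if it is local (on the same bridge), docker0 forwards it directly; otherwise it hands the packet to the host.

3. The source host receives the packet, looks up its routing table, replaces the source address with its own IP, and sends the packet out.

4. The destination host receives the request packet, checks the destination IP, and forwards the packet to docker0.

5. docker0 checks the destination IP, matches it against its MAC address table, and delivers the packet to the container with the destination MAC.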
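The routing-table check in step 3 is a longest-prefix match. The bash sketch below models it with the docker-server1 table from the transcript that follows (after the static route is added); `lookup_gw` and `ROUTES` are hypothetical names, and a gateway of 0.0.0.0 stands for "directly connected".

```bash
#!/usr/bin/env bash
ip_to_int() {
  local IFS=.
  local -a o
  read -r -a o <<< "$1"
  echo "$(( (o[0] << 24) | (o[1] << 16) | (o[2] << 8) | o[3] ))"
}

# docker-server1's routes: "destination/prefix gateway".
ROUTES=(
  "0.0.0.0/0 192.168.234.2"
  "10.10.0.0/24 0.0.0.0"
  "10.20.0.0/24 192.168.234.202"
  "192.168.234.0/24 0.0.0.0"
)

# Print the gateway of the most specific route that matches destination $1.
lookup_gw() {
  local dst best_len=-1 best_gw="" entry net gw addr len mask
  dst=$(ip_to_int "$1")
  for entry in "${ROUTES[@]}"; do
    read -r net gw <<< "$entry"
    addr=$(ip_to_int "${net%/*}")
    len=${net#*/}
    mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
    if (( (dst & mask) == (addr & mask) && len > best_len )); then
      best_len=$len
      best_gw=$gw
    fi
  done
  echo "$best_gw"
}

lookup_gw 10.20.0.2   # a container on docker-server2: sent to 192.168.234.202
```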

Cross-host container communication

# Change the default docker0 subnet on each host
root@docker-server1:~# grep bip /lib/systemd/system/docker.service
ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock --bip 10.10.0.1/24
root@docker-server2:~# grep bip /lib/systemd/system/docker.service
ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock --bip 10.20.0.1/24
# Restart docker on each host
root@docker-server1:~# systemctl daemon-reload
root@docker-server1:~# systemctl restart docker
root@docker-server2:~# systemctl daemon-reload
root@docker-server2:~# systemctl restart docker
# Verify the change
root@docker-server1:~# ifconfig docker0
docker0: flags=4099<UP,BROADCAST,MULTICAST>  mtu 1500
        inet 10.10.0.1  netmask 255.255.255.0  broadcast 10.10.0.255
        inet6 fe80::42:24ff:fe68:e962  prefixlen 64  scopeid 0x20<link>
        ether 02:42:24:68:e9:62  txqueuelen 0  (Ethernet)
        RX packets 28565  bytes 1498015 (1.4 MB)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 32685  bytes 543632306 (543.6 MB)
        TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0
root@docker-server2:~# ifconfig docker0
docker0: flags=4099<UP,BROADCAST,MULTICAST>  mtu 1500
        inet 10.20.0.1  netmask 255.255.255.0  broadcast 10.20.0.255
        inet6 fe80::42:79ff:fe65:6cb3  prefixlen 64  scopeid 0x20<link>
        ether 02:42:79:65:6c:b3  txqueuelen 0  (Ethernet)
        RX packets 76  bytes 105323 (105.3 KB)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 107  bytes 10485 (10.4 KB)
        TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0
# Set the iptables FORWARD policy to ACCEPT (forward everything) and add a static route
root@docker-server1:~# iptables -P FORWARD ACCEPT
root@docker-server1:~# route add -net 10.20.0.0/24 gw 192.168.234.202
root@docker-server1:~# route -n
Kernel IP routing table
Destination     Gateway         Genmask         Flags Metric Ref    Use Iface
0.0.0.0         192.168.234.2   0.0.0.0         UG    0      0        0 eth0
**10.10.0.0       0.0.0.0         255.255.255.0   U     0      0        0 docker0**
**10.20.0.0       192.168.234.202 255.255.255.0   UG    0      0        0 eth0**
192.168.234.0   0.0.0.0         255.255.255.0   U     0      0        0 eth0
root@docker-server2:~# iptables -P FORWARD ACCEPT
root@docker-server2:~# route add -net 10.10.0.0/24 gw 192.168.234.201
root@docker-server2:~# route -n
Kernel IP routing table
Destination     Gateway         Genmask         Flags Metric Ref    Use Iface
0.0.0.0         192.168.234.2   0.0.0.0         UG    0      0        0 eth0
**10.10.0.0       192.168.234.201 255.255.255.0   UG    0      0        0 eth0**
**10.20.0.0       0.0.0.0         255.255.255.0   U     0      0        0 docker0**
192.168.234.0   0.0.0.0         255.255.255.0   U     0      0        0 eth0
# Start a container on 201; its IP is 10.10.0.2
root@docker-server1:~# docker run -it centos:7.9.2009
[root@dfd6805348b1 /]# yum -y install net-tools
[root@dfd6805348b1 /]# ifconfig
eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
        inet 10.10.0.2  netmask 255.255.255.0  broadcast 10.10.0.255
        ether 02:42:0a:0a:00:02  txqueuelen 0  (Ethernet)
        RX packets 2567  bytes 29806789 (28.4 MiB)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 2432  bytes 135140 (131.9 KiB)
        TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0
lo: flags=73<UP,LOOPBACK,RUNNING>  mtu 65536
        inet 127.0.0.1  netmask 255.0.0.0
        loop  txqueuelen 1000  (Local Loopback)
        RX packets 0  bytes 0 (0.0 B)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 0  bytes 0 (0.0 B)
        TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0
# Start a container on 202; its IP is 10.20.0.2
root@docker-server2:~# docker run -it -d nginx:1.22-alpine3.17
c1d671a705cd73bfd243b41f91e8db51fd5d1ffc89885e483c059d3a4e3d72c6
root@docker-server2:~# docker ps
CONTAINER ID   IMAGE                   COMMAND                  CREATED         STATUS         PORTS     NAMES
c1d671a705cd   nginx:1.22-alpine3.17   "/docker-entrypoint.…"   3 seconds ago   Up 2 seconds   80/tcp    focused_kowalevski
root@docker-server2:~# docker exec -it c1d671a705cd sh
/ # ifconfig
eth0      Link encap:Ethernet  HWaddr 02:42:0A:14:00:02
          inet addr:10.20.0.2  Bcast:10.20.0.255  Mask:255.255.255.0
          UP BROADCAST RUNNING MULTICAST  MTU:1500  Metric:1
          RX packets:10 errors:0 dropped:0 overruns:0 frame:0
          TX packets:0 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:0
          RX bytes:876 (876.0 B)  TX bytes:0 (0.0 B)
lo        Link encap:Local Loopback
          inet addr:127.0.0.1  Mask:255.0.0.0
          UP LOOPBACK RUNNING  MTU:65536  Metric:1
          RX packets:0 errors:0 dropped:0 overruns:0 frame:0
          TX packets:0 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:1000
          RX bytes:0 (0.0 B)  TX bytes:0 (0.0 B)
/ # curl 127.0.0.1
<!DOCTYPE html>
<html>
<head>
<title>Welcome to nginx!</title>
<style>
html { color-scheme: light dark; }
body { width: 35em; margin: 0 auto;
font-family: Tahoma, Verdana, Arial, sans-serif; }
</style>
</head>
<body>
<h1>Welcome to nginx!</h1>
<p>If you see this page, the nginx web server is successfully installed and
working. Further configuration is required.</p>
<p>For online documentation and support please refer to
<a href="http://nginx.org/">nginx.org</a>.<br/>
Commercial support is available at
<a href="http://nginx.com/">nginx.com</a>.</p>
<p><em>Thank you for using nginx.</em></p>
</body>
</html>
# From 10.10.0.2, 10.20.0.2 is reachable (ttl=62: the packet was routed by both hosts)
[root@dfd6805348b1 /]# ping 10.20.0.2
PING 10.20.0.2 (10.20.0.2) 56(84) bytes of data.
64 bytes from 10.20.0.2: icmp_seq=1 ttl=62 time=0.590 ms
64 bytes from 10.20.0.2: icmp_seq=2 ttl=62 time=0.501 ms
64 bytes from 10.20.0.2: icmp_seq=3 ttl=62 time=0.458 ms
^C
--- 10.20.0.2 ping statistics ---
3 packets transmitted, 3 received, 0% packet loss, time 2049ms
rtt min/avg/max/mdev = 0.458/0.516/0.590/0.058 ms
[root@dfd6805348b1 /]# curl 10.20.0.2
<!DOCTYPE html>
<html>
<head>
<title>Welcome to nginx!</title>
<style>
html { color-scheme: light dark; }
body { width: 35em; margin: 0 auto;
font-family: Tahoma, Verdana, Arial, sans-serif; }
</style>
</head>
<body>
<h1>Welcome to nginx!</h1>
<p>If you see this page, the nginx web server is successfully installed and
working. Further configuration is required.</p>
<p>For online documentation and support please refer to
<a href="http://nginx.org/">nginx.org</a>.<br/>
Commercial support is available at
<a href="http://nginx.com/">nginx.com</a>.</p>
<p><em>Thank you for using nginx.</em></p>
</body>
</html>
# 10.20.0.2 can also ping 10.10.0.2
/ # ping 10.10.0.2
PING 10.10.0.2 (10.10.0.2): 56 data bytes
64 bytes from 10.10.0.2: seq=0 ttl=62 time=0.855 ms
64 bytes from 10.10.0.2: seq=1 ttl=62 time=1.193 ms
64 bytes from 10.10.0.2: seq=2 ttl=62 time=0.975 ms
^C

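Summary: prepare two hosts and change each host's docker0 subnet so that they are on different networks, then add a static route on each host and set the FORWARD policy to ACCEPT so forwarded packets are not dropped. When 10.20.0.2 accesses 10.10.0.2, docker-server2's routing table shows that the gateway for destination 10.10.0.0/24 is 192.168.234.201, so the packet is forwarded to 192.168.234.201. When 192.168.234.201 receives the packet, it sees that 10.10.0.0/24 is a directly connected network on docker0 and forwards the packet to docker0, and the two containers can communicate.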
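The per-host steps above can be generated from two parameters: the peer's container subnet and the peer host's IP. This is a sketch under assumptions: it uses iproute2's `ip route replace` (equivalent to the `route add -net ... gw ...` used above, but safe to re-run), `route_setup` is a hypothetical helper, and it prints the commands unless `DRY_RUN=0`. Neither the route nor the iptables policy persists across a reboot.

```bash
#!/usr/bin/env bash
# Generate (or run, with DRY_RUN=0 as root) the static-route setup for one host.
# Arguments: peer container subnet, peer host IP.
DRY_RUN=${DRY_RUN:-1}

route_setup() {
  local peer_subnet=$1 peer_host=$2 c
  local -a cmds=(
    "iptables -P FORWARD ACCEPT"
    "ip route replace ${peer_subnet} via ${peer_host}"
  )
  for c in "${cmds[@]}"; do
    if [ "$DRY_RUN" = 1 ]; then
      echo "$c"
    else
      $c   # word splitting is intentional: each string is a simple command
    fi
  done
}

# On docker-server1 (docker0 = 10.10.0.1/24), pointing at docker-server2:
route_setup 10.20.0.0/24 192.168.234.202
```

On docker-server2 the call would be `route_setup 10.10.0.0/24 192.168.234.201`.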

Summary of common Dockerfile instructions

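# Apart from comments (and parser directives or ARG instructions), the first line of a Dockerfile must be FROM, which specifies the parent image the current image (base image) is built on
FROM centos:7.9.2009
# Image maintainer information (deprecated; use LABEL instead)
MAINTAINER <name>
# Set metadata labels on the image (no spaces around "=")
LABEL "key"="value"
LABEL author="jack jack@gmail.com"
LABEL version="1.0"
# Add local files, directories or archives to the image; local tar archives such as tar.gz are extracted automatically, zip files are not
ADD [--chown=<user>:<group>] <src>... <dest>
ADD --chown=root:root test /opt/test
# Copy local files and directories into the image; no archive is ever extracted
COPY [--chown=<user>:<group>] <src>... <dest>
# Set an environment variable for the container
ENV MY_NAME="John Doe"
# Set the user that subsequent instructions run as
USER <user>[:<group>] or USER <UID>[:<GID>]
# Run a shell command at build time; it must run non-interactively (e.g. pass -y)
RUN yum install vim unzip -y && cd /etc/nginx
# Set the working directory, much like cd: subsequent instructions run in this directory (it is created if it does not exist)
WORKDIR /data/data1
# Switch to the nginx user; subsequent instructions run as nginx
USER nginx
# Declare the ports the container listens on; this is only a declaration, ports are actually published when the container is started (-p, or -P for all exposed ports)
EXPOSE <port> [<port>/<protocol>...]
# Declare mount points: the directories are created in the image and, at run time, an anonymous volume is mounted on each unless something else is mounted there
VOLUME ["/data1", "/data2"]
# CMD and ENTRYPOINT
# CMD
# CMD defines the default command or script the container runs at startup, in one of the following three forms
# Form 1 (exec form): CMD ["executable", "param1", "param2"]
# equivalent to running ls -l
CMD ["ls", "-l"]
# Form 2: CMD ["param1", "param2"] (as default parameters to ENTRYPOINT)
# equivalent to running cat -n /etc/hosts
ENTRYPOINT ["cat"]
CMD ["-n", "/etc/hosts"]
# Form 3 (shell form): CMD command param1 param2, run through a shell
# equivalent to running cat /etc/hosts
CMD cat /etc/hosts
# ENTRYPOINT
# ENTRYPOINT also defines the default command or script the container runs at startup
# Used on its own, it behaves like CMD
# ls -l
ENTRYPOINT ls -l
# cat -n /etc/hosts
ENTRYPOINT ["cat", "-n", "/etc/hosts"]
# When combined with CMD, the CMD values are passed as arguments to the ENTRYPOINT command or script, which can check them and perform the corresponding container initialization
# equivalent to running cat -n /etc/hosts
ENTRYPOINT ["cat"]
CMD ["-n", "/etc/hosts"]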
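The ENTRYPOINT/CMD interaction can be modelled in a few lines. This bash sketch covers only the exec forms: the final command is ENTRYPOINT followed by the arguments given to `docker run IMAGE ...`, or by CMD when no run arguments are given; without an ENTRYPOINT, run arguments replace CMD entirely. (A shell-form ENTRYPOINT ignores both CMD and run arguments, which the sketch does not model.) `effective_argv` is a hypothetical helper, and arrays are flattened to space-separated strings for brevity.

```bash
#!/usr/bin/env bash
# effective_argv ENTRYPOINT CMD RUN_ARGS  (each a space-separated string, may be empty)
effective_argv() {
  local entrypoint=$1 cmd=$2 run_args=$3
  local args=${run_args:-$cmd}          # run arguments override CMD
  if [ -n "$entrypoint" ]; then
    printf '%s\n' "${entrypoint}${args:+ $args}"
  else
    printf '%s\n' "$args"
  fi
}

effective_argv "cat" "-n /etc/hosts" ""              # ENTRYPOINT ["cat"] + CMD ["-n", "/etc/hosts"]
effective_argv "cat" "-n /etc/hosts" "/etc/hostname" # docker run IMAGE /etc/hostname
effective_argv "" "ls -l" ""                         # CMD ["ls", "-l"] alone
```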

Building an Nginx image from a Dockerfile and verifying that it starts as a container

root@docker-server1:~/docker_file/nginx# pwd
/root/docker_file/nginx
root@docker-server1:~/docker_file/nginx# ll
total 1104
drwxr-xr-x 2 root root     112 Jul 18 23:27 ./
drwxr-xr-x 3 root root      19 Jul 18 00:13 ../
-rw-r--r-- 1 root root     945 Jul 18 23:07 Dockerfile
-rw-r--r-- 1 root root   38751 Jul 18 22:39 frontend.tar.gz
-rw-r--r-- 1 root root 1073322 Jul 18 22:01 nginx-1.22.0.tar.gz
-rw-r--r-- 1 root root    2832 Jul 18 23:27 nginx.conf
-rw-r--r-- 1 root root     900 Jul 18 21:46 sources.list
root@docker-server1:~/docker_file/nginx# cat Dockerfile
FROM ubuntu:22.04
LABEL author="jack jack@gmail.com"
LABEL version="1.0"
ADD sources.list /etc/apt/sources.list
RUN apt update && apt install -y iproute2 ntpdate tcpdump telnet traceroute nfs-kernel-server nfs-common lrzsz tree openssl libssl-dev libpcre3 libpcre3-dev zlib1g-dev gcc openssh-server iotop unzip zip make vim tzdata && ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime
ADD nginx-1.22.0.tar.gz /usr/local/src
RUN cd /usr/local/src/nginx-1.22.0 && ./configure --prefix=/apps/nginx && make && make install && ln -sv /apps/nginx/sbin/nginx /usr/bin
RUN groupadd -g 2088 nginx && useradd -g nginx -s /usr/sbin/nologin -u 2088 nginx && chown -R nginx:nginx /apps/nginx
ADD nginx.conf /apps/nginx/conf/
ADD frontend.tar.gz /apps/nginx/html/
USER nginx
WORKDIR /tmp
RUN echo "hadoop\nspark" > word.txt
VOLUME ["/data1","/data2"]
EXPOSE 80
CMD ["nginx","-g","daemon off;"]
root@docker-server1:~/docker_file/nginx# cat nginx.conf
user  nginx;
worker_processes  1;
#error_log  logs/error.log;
#error_log  logs/error.log  notice;
#error_log  logs/error.log  info;
#pid        logs/nginx.pid;
#daemon off;
events {
    worker_connections  1024;
}
http {
    include       mime.types;
    default_type  application/octet-stream;
    #log_format  main  '$remote_addr - $remote_user [$time_local] "$request" '
    #                  '$status $body_bytes_sent "$http_referer" '
    #                  '"$http_user_agent" "$http_x_forwarded_for"';
    #access_log  logs/access.log  main;
    sendfile        on;
    #tcp_nopush     on;
    #keepalive_timeout  0;
    keepalive_timeout  65;
    #gzip  on;
    upstream tomcat {
        server 192.168.234.201:8080;
        server 192.168.234.202:8080;
    }
    server {
        listen       80;
        server_name  localhost;
        #charset koi8-r;
        #access_log  logs/host.access.log  main;
        location / {
            root   html;
            index  index.html index.htm;
        }
        location /myapp {
            proxy_pass http://tomcat;
        }
        #error_page  404              /404.html;
        # redirect server error pages to the static page /50x.html
        #
        error_page   500 502 503 504  /50x.html;
        location = /50x.html {
            root   html;
        }
        # proxy the PHP scripts to Apache listening on 127.0.0.1:80
        #
        #location ~ \.php$ {
        #    proxy_pass   http://127.0.0.1;
        #}
        # pass the PHP scripts to FastCGI server listening on 127.0.0.1:9000
        #
        #location ~ \.php$ {
        #    root           html;
        #    fastcgi_pass   127.0.0.1:9000;
        #    fastcgi_index  index.php;
        #    fastcgi_param  SCRIPT_FILENAME  /scripts$fastcgi_script_name;
        #    include        fastcgi_params;
        #}
        # deny access to .htaccess files, if Apache's document root
        # concurs with nginx's one
        #
        #location ~ /\.ht {
        #    deny  all;
        #}
    }
    # another virtual host using mix of IP-, name-, and port-based configuration
    #
    #server {
    #    listen       8000;
    #    listen       somename:8080;
    #    server_name  somename  alias  another.alias;
    #    location / {
    #        root   html;
    #        index  index.html index.htm;
    #    }
    #}
    # HTTPS server
    #
    #server {
    #    listen       443 ssl;
    #    server_name  localhost;
    #    ssl_certificate      cert.pem;
    #    ssl_certificate_key  cert.key;
    #    ssl_session_cache    shared:SSL:1m;
    #    ssl_session_timeout  5m;
    #    ssl_ciphers  HIGH:!aNULL:!MD5;
    #    ssl_prefer_server_ciphers  on;
    #    location / {
    #        root   html;
    #        index  index.html index.htm;
    #    }
    #}
}
root@docker-server1:~/docker_file/nginx# cat sources.list
deb http://mirrors.aliyun.com/ubuntu/ jammy main restricted universe multiverse
deb-src http://mirrors.aliyun.com/ubuntu/ jammy main restricted universe multiverse
deb http://mirrors.aliyun.com/ubuntu/ jammy-security main restricted universe multiverse
deb-src http://mirrors.aliyun.com/ubuntu/ jammy-security main restricted universe multiverse
deb http://mirrors.aliyun.com/ubuntu/ jammy-updates main restricted universe multiverse
deb-src http://mirrors.aliyun.com/ubuntu/ jammy-updates main restricted universe multiverse
# deb http://mirrors.aliyun.com/ubuntu/ jammy-proposed main restricted universe multiverse
# deb-src http://mirrors.aliyun.com/ubuntu/ jammy-proposed main restricted universe multiverse
deb http://mirrors.aliyun.com/ubuntu/ jammy-backports main restricted universe multiverse
deb-src http://mirrors.aliyun.com/ubuntu/ jammy-backports main restricted universe multiverse
# Build the image
root@docker-server1:~/docker_file/nginx# docker build -t harbor.lgx.io/application/nginx:1.20.1 .
# Start a container
root@docker-server1:~/docker_file/nginx# docker run -d -p 80:80 harbor.lgx.io/application/nginx:1.20.1
# List the running containers
root@docker-server1:~/docker_file/nginx# docker ps
CONTAINER ID   IMAGE                                    COMMAND                  CREATED          STATUS          PORTS                               NAMES
7a00907e217e   harbor.lgx.io/application/nginx:1.20.1   "nginx -g 'daemon of…"   11 seconds ago   Up 11 seconds   0.0.0.0:80->80/tcp, :::80->80/tcp   stupefied_leakey
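`docker ps` only shows that the container is up; a request against port 80 confirms that nginx is actually serving. The sketch below is a generic retry helper (the name `wait_for` is hypothetical) that polls a command until it succeeds, with the curl check from the commented usage line assumed to run on docker-server1.

```bash
#!/usr/bin/env bash
# Retry a command until it succeeds or the attempt budget runs out.
# Usage: wait_for ATTEMPTS SLEEP_SECONDS COMMAND [ARGS...]
wait_for() {
  local attempts=$1 delay=$2 i
  shift 2
  for (( i = 1; i <= attempts; i++ )); do
    if "$@"; then
      return 0
    fi
    sleep "$delay"
  done
  return 1
}

# Example on the Docker host, after `docker run -d -p 80:80 ...`:
# wait_for 10 1 curl -fsS -o /dev/null http://127.0.0.1/ && echo "nginx is up"
```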

Problem: apt reports a certificate error, "Certificate verification failed: The certificate is NOT trusted." Fix:
# First change https to http in sources.list, then run
apt-get update && apt-get install --reinstall ca-certificates
# Then change http back to https and run
apt-get update
# That resolves it. When building the Dockerfile, I simply switched https to http.
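The scheme switch in this workaround can be done with sed instead of editing the file by hand. A minimal sketch, assuming GNU sed (`-i` without a suffix) and that every mirror URL in the file should be switched; `set_apt_scheme` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Switch every apt source URL in FILE to http:// or https://.
# Usage: set_apt_scheme FILE http|https
set_apt_scheme() {
  local file=$1 scheme=$2
  case $scheme in
    http)  sed -i 's#https://#http://#g' "$file" ;;
    https) sed -i 's#http://#https://#g' "$file" ;;
    *) echo "scheme must be http or https" >&2; return 2 ;;
  esac
}

# The sequence from the notes, run as root:
# set_apt_scheme /etc/apt/sources.list http
# apt-get update && apt-get install --reinstall -y ca-certificates
# set_apt_scheme /etc/apt/sources.list https
# apt-get update
```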

Deploying a single-node Harbor and pushing/pulling images

Introduction to Harbor

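Harbor is an enterprise-grade registry server for storing and distributing Docker images, open-sourced by VMware. It builds on Docker Registry and adds features that enterprises need, such as security and authentication, image scanning, image management and remote image replication, extending the open-source Docker Distribution. As an enterprise private registry, Harbor offers better performance and security and makes distributing images through a registry more efficient. Harbor can be installed on multiple registry nodes that replicate images between hosts, which makes the image service highly available and protects image data. In addition, keeping all images in registries on the private network ensures that code, passwords, configuration and similar information are transferred only within the company's internal network, which keeps core data secure.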

Harbor official website: <https://goharbor.io/>

Harbor official GitHub repository: <https://github.com/vmware/harbor>

VMware's official open-source project page: <https://vmware.github.io/harbor/cn>

Installing Harbor

# Install docker on the new docker-harbor server and upload the Harbor installer
root@docker-harbor:~# ll harbor-offline-installer-v2.8.2.tgz
-rw-r--r-- 1 root root 607390683 Jul 13 13:30 harbor-offline-installer-v2.8.2.tgz
# Extract it and move it to /apps
root@docker-harbor:~# mkdir /apps
root@docker-harbor:~# mv harbor /apps
root@docker-harbor:~# cd /apps
root@docker-harbor:/apps# ll
total 0
drwxr-xr-x  3 root root  20 Jul 13 13:34 ./
drwxr-xr-x 20 root root 303 Jul 13 13:34 ../
drwxr-xr-x  2 root root 122 Jul 13 13:31 harbor/
# Enter the harbor directory, copy harbor.yml.tmpl to harbor.yml, and edit it
root@docker-harbor:/apps# cd harbor/
root@docker-harbor:/apps/harbor# ll
total 596680
drwxr-xr-x 2 root root       122 Jul 13 13:31 ./
drwxr-xr-x 3 root root        20 Jul 13 13:34 ../
-rw-r--r-- 1 root root     11347 Jun  2 11:43 LICENSE
-rw-r--r-- 1 root root      3639 Jun  2 11:43 common.sh
-rw-r--r-- 1 root root 610962984 Jun  2 11:44 harbor.v2.8.2.tar.gz
-rw-r--r-- 1 root root     11736 Jun  2 11:43 harbor.yml.tmpl
-rwxr-xr-x 1 root root      2725 Jun  2 11:43 install.sh*
-rwxr-xr-x 1 root root      1881 Jun  2 11:43 prepare*
root@docker-harbor:/apps/harbor# cp harbor.yml.tmpl harbor.yml
root@docker-harbor:/apps/harbor# vim harbor.yml
# Comment out the https certificate section; this installation uses plain http
# https related config
#https:
  # https port for harbor, default is 443
#  port: 443
  # The path of cert and key files for nginx
#  certificate: /your/certificate/path
#  private_key: /your/private/key/path
# Change the hostname
hostname: harbor.lgx.io
# Change the Harbor admin password
harbor_admin_password: 123456
# Harbor's data directory; ideally a separately mounted disk, so that if the server fails the disk can be taken out and the data is not lost
data_volume: /data/harbor
# Create a new partition and choose type 8e (Linux LVM) to make later expansion easier
# (the partition is only tagged as LVM here; mkfs.xfs is run directly on /dev/sdb1 below, so LVM is not actually used unless pvcreate/vgcreate/lvcreate are run first)
root@docker-harbor:/apps/harbor# fdisk /dev/sdb
Welcome to fdisk (util-linux 2.37.2).
Changes will remain in memory only, until you decide to write them.
Be careful before using the write command.
Device does not contain a recognized partition table.
Created a new DOS disklabel with disk identifier 0x4a617fed.
Command (m for help): m
Help:
  DOS (MBR)
   a   toggle a bootable flag
   b   edit nested BSD disklabel
   c   toggle the dos compatibility flag
  Generic
   d   delete a partition
   F   list free unpartitioned space
   l   list known partition types
   n   add a new partition
   p   print the partition table
   t   change a partition type
   v   verify the partition table
   i   print information about a partition
  Misc
   m   print this menu
   u   change display/entry units
   x   extra functionality (experts only)
  Script
   I   load disk layout from sfdisk script file
   O   dump disk layout to sfdisk script file
  Save & Exit
   w   write table to disk and exit
   q   quit without saving changes
  Create a new label
   g   create a new empty GPT partition table
   G   create a new empty SGI (IRIX) partition table
   o   create a new empty DOS partition table
   s   create a new empty Sun partition table
Command (m for help): n
Partition type
   p   primary (0 primary, 0 extended, 4 free)
   e   extended (container for logical partitions)
Select (default p):
Using default response p.
Partition number (1-4, default 1):
First sector (2048-209715199, default 2048):
Last sector, +/-sectors or +/-size{K,M,G,T,P} (2048-209715199, default 209715199):
Created a new partition 1 of type 'Linux' and of size 100 GiB.
Command (m for help): p
Disk /dev/sdb: 100 GiB, 107374182400 bytes, 209715200 sectors
Disk model: VMware Virtual S
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
Disklabel type: dos
Disk identifier: 0x4a617fed
Device     Boot Start       End   Sectors  Size Id Type
/dev/sdb1        2048 209715199 209713152  100G 83 Linux
Command (m for help): t
Selected partition 1
Hex code or alias (type L to list all): L
00 Empty            24 NEC DOS          81 Minix / old Lin  bf Solaris
01 FAT12            27 Hidden NTFS Win  82 Linux swap / So  c1 DRDOS/sec (FAT-
02 XENIX root       39 Plan 9           83 Linux            c4 DRDOS/sec (FAT-
03 XENIX usr        3c PartitionMagic   84 OS/2 hidden or   c6 DRDOS/sec (FAT-
04 FAT16 <32M       40 Venix 80286      85 Linux extended   c7 Syrinx
05 Extended         41 PPC PReP Boot    86 NTFS volume set  da Non-FS data
06 FAT16            42 SFS              87 NTFS volume set  db CP/M / CTOS / .
07 HPFS/NTFS/exFAT  4d QNX4.x           88 Linux plaintext  de Dell Utility
08 AIX              4e QNX4.x 2nd part  8e Linux LVM        df BootIt
09 AIX bootable     4f QNX4.x 3rd part  93 Amoeba           e1 DOS access
0a OS/2 Boot Manag  50 OnTrack DM       94 Amoeba BBT       e3 DOS R/O
0b W95 FAT32        51 OnTrack DM6 Aux  9f BSD/OS           e4 SpeedStor
0c W95 FAT32 (LBA)  52 CP/M             a0 IBM Thinkpad hi  ea Linux extended
0e W95 FAT16 (LBA)  53 OnTrack DM6 Aux  a5 FreeBSD          eb BeOS fs
0f W95 Ext'd (LBA)  54 OnTrackDM6       a6 OpenBSD          ee GPT
10 OPUS             55 EZ-Drive         a7 NeXTSTEP         ef EFI (FAT-12/16/
11 Hidden FAT12     56 Golden Bow       a8 Darwin UFS       f0 Linux/PA-RISC b
12 Compaq diagnost  5c Priam Edisk      a9 NetBSD           f1 SpeedStor
14 Hidden FAT16 <3  61 SpeedStor        ab Darwin boot      f4 SpeedStor
16 Hidden FAT16     63 GNU HURD or Sys  af HFS / HFS+       f2 DOS secondary
17 Hidden HPFS/NTF  64 Novell Netware   b7 BSDI fs          fb VMware VMFS
18 AST SmartSleep   65 Novell Netware   b8 BSDI swap        fc VMware VMKCORE
1b Hidden W95 FAT3  70 DiskSecure Mult  bb Boot Wizard hid  fd Linux raid auto
1c Hidden W95 FAT3  75 PC/IX            bc Acronis FAT32 L  fe LANstep
1e Hidden W95 FAT1  80 Old Minix        be Solaris boot     ff BBT
Aliases:
   linux          - 83
   swap           - 82
   extended       - 05
   uefi           - EF
   raid           - FD
   lvm            - 8E
   linuxex        - 85
Hex code or alias (type L to list all): 8e
Changed type of partition 'Linux' to 'Linux LVM'.
Command (m for help): p
Disk /dev/sdb: 100 GiB, 107374182400 bytes, 209715200 sectors
Disk model: VMware Virtual S
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
Disklabel type: dos
Disk identifier: 0x4a617fed
Device     Boot Start       End   Sectors  Size Id Type
/dev/sdb1        2048 209715199 209713152  100G 8e Linux LVM
Command (m for help): w
The partition table has been altered.
Calling ioctl() to re-read partition table.
Syncing disks.
# Create the file system
root@docker-harbor:/apps/harbor# mkfs.xfs /dev/sdb1
meta-data=/dev/sdb1              isize=512    agcount=4, agsize=6553536 blks
         =                       sectsz=512   attr=2, projid32bit=1
         =                       crc=1        finobt=1, sparse=1, rmapbt=0
         =                       reflink=1    bigtime=0 inobtcount=0
data     =                       bsize=4096   blocks=26214144, imaxpct=25
         =                       sunit=0      swidth=0 blks
naming   =version 2              bsize=4096   ascii-ci=0, ftype=1
log      =internal log           bsize=4096   blocks=12799, version=2
         =                       sectsz=512   sunit=0 blks, lazy-count=1
realtime =none                   extsz=4096   blocks=0, rtextents=0
# Mount it
root@docker-harbor:/apps/harbor# grep harbor /etc/fstab
UUID=8a7c9dec-b366-4539-b7f6-e185cc091920 /data/harbor xfs defaults 0 0
root@docker-harbor:/apps/harbor# mount -a
# Install (--with-trivy also installs the Trivy image scanner)
root@docker-harbor:/apps/harbor# ./install.sh --with-trivy
# Open the URL and log in with the username and password from the configuration file
<http://192.168.234.204/>
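The harbor.yml edits made in vim above can also be applied non-interactively. This is a sketch under assumptions: GNU sed, top-level keys `hostname:`, `harbor_admin_password:` and `data_volume:` starting at column 0, and an `https:` block that ends at its `private_key:` line, as in the template shown above; check the result before running `./install.sh`. `configure_harbor` is a hypothetical helper, and values containing `#` would break the sed expressions.

```bash
#!/usr/bin/env bash
# Usage: configure_harbor FILE HOSTNAME ADMIN_PASSWORD DATA_VOLUME
configure_harbor() {
  local f=$1 host=$2 pass=$3 data=$4
  sed -i \
    -e '/^https:/,/^  private_key:/ s/^/#/' \
    -e "s#^hostname: .*#hostname: ${host}#" \
    -e "s#^harbor_admin_password: .*#harbor_admin_password: ${pass}#" \
    -e "s#^data_volume: .*#data_volume: ${data}#" \
    "$f"
}

# On the Harbor server:
# configure_harbor /apps/harbor/harbor.yml harbor.lgx.io 123456 /data/harbor
```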

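Click "New Project".

Enter the project name.

Access level: select Public, meaning anyone can pull images without restriction; pushing images still requires docker login.

Storage quota: -1 means unlimited; to set a limit, enter a number greater than 0.

Proxy cache does not need to be enabled, since these are all internal company projects.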
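The same project could be created without the web UI. This sketch assumes Harbor's v2.0 REST API endpoint `POST /api/v2.0/projects` with the fields `project_name`, `metadata.public` (a string) and `storage_limit` (-1 for unlimited); verify these against the API explorer of your Harbor version before relying on them. By default it only prints the JSON body; `HARBOR_PASSWORD`, `project_body` and `create_project` are hypothetical names.

```bash
#!/usr/bin/env bash
# Build the JSON body for a new Harbor project (assumed v2.0 API fields).
project_body() {
  local name=$1 public=$2 limit=${3:--1}
  printf '{"project_name":"%s","metadata":{"public":"%s"},"storage_limit":%s}\n' \
    "$name" "$public" "$limit"
}

# Dry run by default; DRY_RUN=0 sends the request with basic auth.
create_project() {
  local body
  body=$(project_body "$@")
  if [ "${DRY_RUN:-1}" = 1 ]; then
    echo "$body"
  else
    curl -fsS -u "admin:${HARBOR_PASSWORD:?set HARBOR_PASSWORD}" \
      -H 'Content-Type: application/json' \
      -X POST "http://harbor.lgx.io/api/v2.0/projects" -d "$body"
  fi
}

create_project baseimages true
```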

Pushing an image from docker-server1

# Tag the image; the tag format is domain/project/image:tag
root@docker-server1:~# docker tag nginx:1.20.1 harbor.lgx.io/baseimages/nginx:1.20.1
# Configure /etc/hosts so that harbor.lgx.io resolves to the Harbor server
root@docker-server1:~# grep 192.168.234.204 /etc/hosts
192.168.234.204 harbor.lgx.io
# docker login fails because Harbor is not serving https
root@docker-server1:~# docker login harbor.lgx.io
Username: admin
Password:
Error response from daemon: Get "https://harbor.lgx.io/v2/": dial tcp 192.168.234.204:443: connect: connection refused
# Add "insecure-registries":["harbor.lgx.io"] to /etc/docker/daemon.json, then restart docker
root@docker-server1:~# cat /etc/docker/daemon.json
{
  "data-root":"/data/docker",
  "storage-driver":"overlay2",
  "exec-opts":["native.cgroupdriver=systemd"],
  **"insecure-registries":["harbor.lgx.io"],**
  "registry-mirrors":["https://d9yz3pz4.mirror.aliyuncs.com"],
  "live-restore":false,
  "log-opts":{
    "max-file":"5",
    "max-size":"100m"
  }
}
# Now the login succeeds
root@docker-server1:~# docker login harbor.lgx.io
Username: admin
Password:
WARNING! Your password will be stored unencrypted in /root/.docker/config.json.
Configure a credential helper to remove this warning. See
https://docs.docker.com/engine/reference/commandline/login/#credentials-store
Login Succeeded
# A successful login stores the username and password, base64-encoded (not encrypted), in /root/.docker/config.json
root@docker-server1:~# cat /root/.docker/config.json
{
        "auths": {
                "harbor.lgx.io": {
                        "auth": "YWRtaW46MTIzNDU2"
                }
        }
}
root@docker-server1:~# echo YWRtaW46MTIzNDU2 | base64 -d
admin:123456
# Push the image
root@docker-server1:~# docker push harbor.lgx.io/baseimages/nginx:1.20.1
The push refers to repository [harbor.lgx.io/baseimages/nginx]
91117a05975b: Pushed
8ffde58510c5: Pushed
0dcd28129664: Pushed
4edd8832c8e8: Pushed
6662554e871b: Pushed
e81bff2725db: Pushed
1.20.1: digest: sha256:ee2970c234800c5b5841d20d04b7ddc2a08f8653ce6d3376782c8a48eb61428b size: 1570
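The `auth` value in config.json is just `base64("user:password")`, which is why `base64 -d` recovers the password above. The two hypothetical helpers below show the round trip, assuming GNU coreutils `base64`; a credential helper (the `credsStore` setting mentioned in the login warning) avoids storing it this way.

```bash
#!/usr/bin/env bash
# Encode user:password the way docker login stores it in ~/.docker/config.json.
docker_auth() {
  printf '%s:%s' "$1" "$2" | base64
}

# Decode an "auth" value back to user:password.
docker_auth_decode() {
  printf '%s' "$1" | base64 -d
}

docker_auth admin 123456                  # prints YWRtaW46MTIzNDU2
docker_auth_decode YWRtaW46MTIzNDU2; echo # prints admin:123456
```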

Checking the Harbor web UI shows that the push succeeded.

Pulling the image on docker-server2:

# Configure the insecure registry and the hosts entry as on docker-server1, and remember to restart docker
root@docker-server2:~# docker pull harbor.lgx.io/baseimages/nginx:1.20.1
1.20.1: Pulling from baseimages/nginx
b380bbd43752: Pull complete
83acae5e2daa: Pull complete
33715b419f9b: Pull complete
eb08b4d557d8: Pull complete
74d5bdecd955: Pull complete
0820d7f25141: Pull complete
Digest: sha256:ee2970c234800c5b5841d20d04b7ddc2a08f8653ce6d3376782c8a48eb61428b
Status: Downloaded newer image for harbor.lgx.io/baseimages/nginx:1.20.1
harbor.lgx.io/baseimages/nginx:1.20.1
root@docker-server2:~# docker images
REPOSITORY                       TAG       IMAGE ID       CREATED         SIZE
harbor.lgx.io/baseimages/nginx   1.20.1    c8d03f6b8b91   21 months ago   133MB

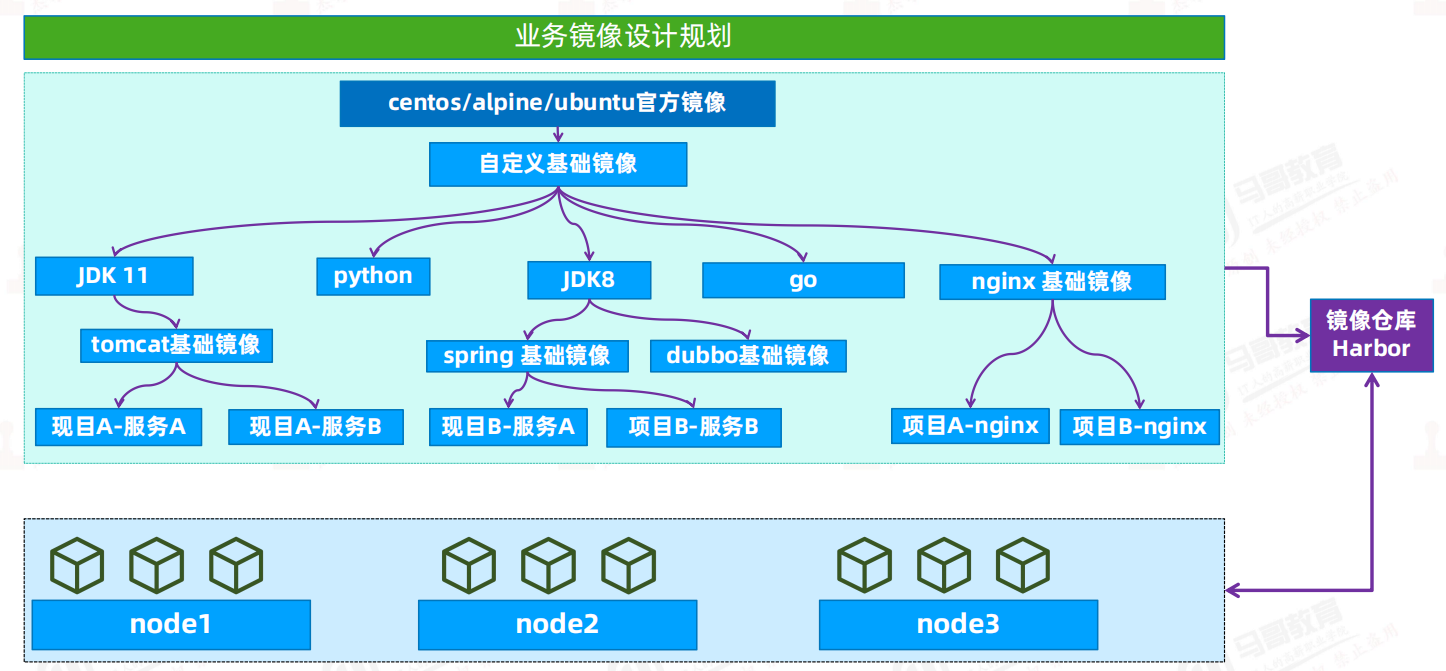

Summary of the layered image build process

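1 First, download an official operating system image (e.g. CentOS, Ubuntu).

2 Extend it (e.g. install common commands, add common users) to form a common base image.

3 On top of the common image, install the application (e.g. a database, nginx, Java) and apply any special configuration, producing a business image that can be delivered.

Note: avoid updating the custom common image frequently; every change to it forces the business images built on it to be rebuilt.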
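The three layers are built strictly in order, each Dockerfile starting `FROM` the previous layer's tag. The dry-run sketch below illustrates that ordering; all image names, tags and directories are hypothetical placeholders, and `DRY_RUN=0` would run the real `docker build` commands.

```bash
#!/usr/bin/env bash
# Build order: OS base -> common base -> business image ("tag directory" per line).
DRY_RUN=${DRY_RUN:-1}
LAYERS=(
  "harbor.lgx.io/baseimages/ubuntu-base:22.04 ./base"
  "harbor.lgx.io/baseimages/nginx-base:1.22.0 ./nginx-base"
  "harbor.lgx.io/application/web-app:v1 ./web-app"
)

build_all() {
  local entry tag dir
  for entry in "${LAYERS[@]}"; do
    read -r tag dir <<< "$entry"
    if [ "$DRY_RUN" = 1 ]; then
      echo "docker build -t $tag $dir"
    else
      docker build -t "$tag" "$dir" || return 1   # stop if a lower layer fails
    fi
  done
}

build_all
```

Rebuilding only the last entry is cheap; changing the first one means rebuilding everything after it, which is the reason for the note above.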

Limiting container CPU and memory usage with systemd (cgroups)

Summary of the options for limiting container CPU and memory

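-- Memory
-m or --memory          # maximum amount of memory the container can use
--memory-swap           # total amount of memory plus swap the container can use; it can only be set when a physical memory limit (-m) is also set
--memory-swappiness     # how readily the container uses swap, range 0-100: higher values mean more eager swapping; 0 avoids swap whenever possible, 100 uses it whenever possible
--kernel-memory         # maximum kernel memory the container can use (minimum 4m). Kernel memory is isolated from user-space memory and cannot be swapped, so a container short of kernel memory may block host resources and affect the host, other containers and other services; do not set it (it is deprecated and not supported on cgroup v2)
--memory-reservation    # a soft limit smaller than --memory, activated when Docker detects contention or low memory on the host; it must be set below --memory to take precedence. Being a soft limit, it does not guarantee the container stays under it
--oom-kill-disable      # by default the kernel kills processes in the container on OOM; --oom-kill-disable prevents OOM kills in that container. Only use it on containers that also set -m/--memory: otherwise, when the host runs out of memory, it may kill system processes to free memory
# Limit memory to 6M (the smallest limit Docker accepts)
root@docker-server1:~# docker run -it -m 6m centos:7.9.2009 bash
# Limit memory to 128M with a 100M soft limit (the soft limit must be lower than -m)
root@docker-server1:~# docker run -it -m 128m --memory-reservation 100M centos:7.9.2009 bash
# A soft limit can also be set on its own
root@docker-server1:~# docker run -it --memory-reservation 129M centos:7.9.2009 bash
-- CPU
--cpus 2                # limit the container to 2 CPUs' worth of time; may be a decimal
--cpuset-cpus 1,3       # only run on the CPUs with IDs 1 and 3
--cpu-shares 500        # relative CPU weight when CPUs are contended; see the docker-stress-ng tests below
root@docker-server1:~# docker run -it --cpus 1 centos:7.9.2009 bash
root@docker-server1:~# docker run -it --cpus 1.5 centos:7.9.2009 bash
# Limit to 2 CPUs and only use the CPUs with IDs 1 and 3
root@docker-server1:~# docker run -it --cpus 2 --cpuset-cpus 1,3 centos:7.9.2009 bash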
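Memory sizes such as `6m` or `512M` use binary units, which is why `-m 512M` later shows up as 536870912 in the cgroup's `memory.max`. A small bash sketch of that conversion (the helper `to_bytes` is hypothetical; it accepts an integer with an optional b/k/m/g suffix and rejects decimals):

```bash
#!/usr/bin/env bash
# Convert a docker-style size (e.g. 6m, 512M, 1g, 128k, 1024) to bytes.
to_bytes() {
  local v=${1,,}                         # lower-case the suffix
  local n=${v%[bkmg]} unit=${v##*[0-9]}
  [[ $n =~ ^[0-9]+$ ]] || { echo "unsupported size: $1" >&2; return 1; }
  case $unit in
    ""|b) echo "$n" ;;
    k) echo $(( n * 1024 )) ;;
    m) echo $(( n * 1024 * 1024 )) ;;
    g) echo $(( n * 1024 * 1024 * 1024 )) ;;
    *) echo "unsupported size: $1" >&2; return 1 ;;
  esac
}

to_bytes 512M   # 536870912, the value read from memory.max below
```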

docker-stress-ng stress test examples

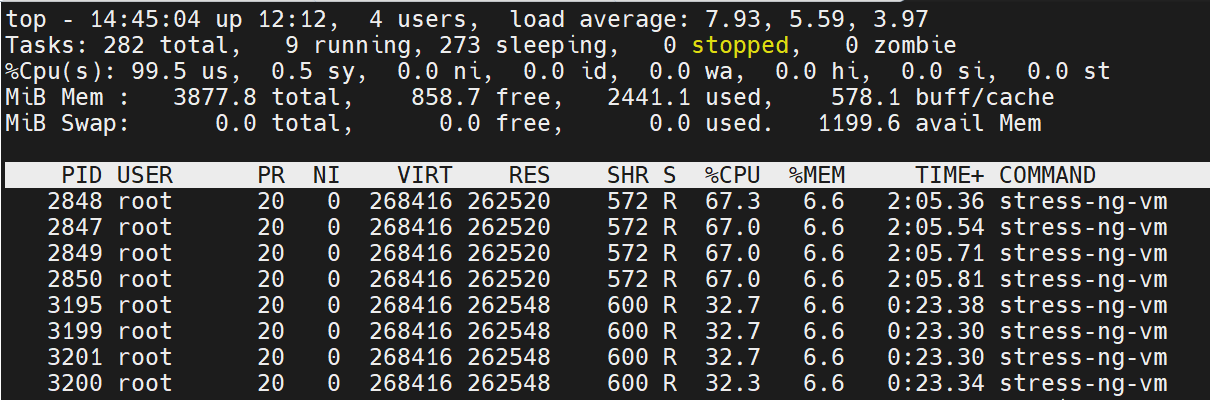

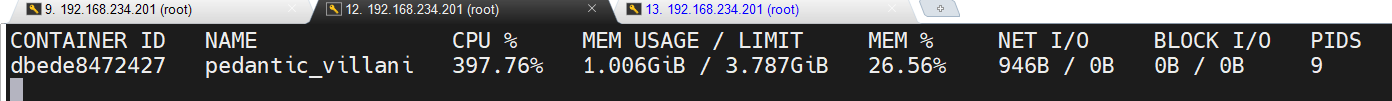

# This host has 4 CPUs
root@docker-server1:~# lscpu | grep CPU
CPU op-mode(s):                  32-bit, 64-bit
CPU(s):                          4
On-line CPU(s) list:             0-3
CPU family:                      23
NUMA node0 CPU(s):               0-3
# 4G of memory, no swap
root@docker-server1:~# free -h
               total        used        free      shared  buff/cache   available
Mem:           3.8Gi       335Mi       3.1Gi       1.0Mi       411Mi       3.2Gi
Swap:             0B          0B          0B
# Show the help
docker run -it --rm lorel/docker-stress-ng --help
# Start two memory workers, each allowed to use up to 256M, with no memory limit on the container
# --vm: number of memory (vm) workers
# --vm-bytes: amount of memory each worker uses
# By default each worker keeps one CPU core busy
root@docker-server1:~# docker run -it --rm --name magedu-c1 lorel/docker-stress-ng --vm 2 --vm-bytes 256M

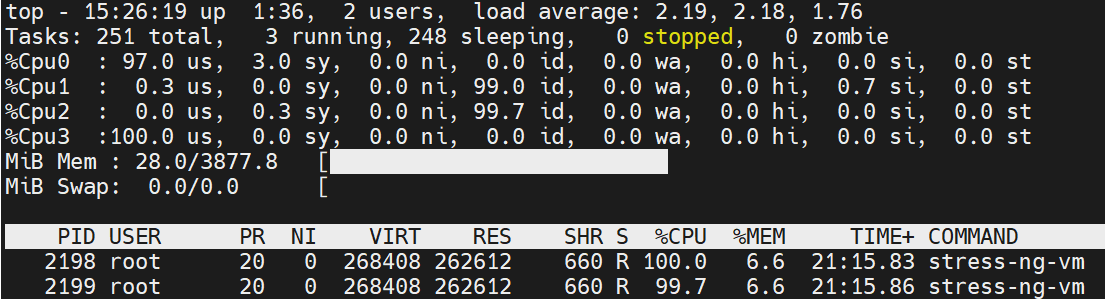

Viewing with top on the host

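# Result of running top on the host:
# 100% means one full CPU; the two workers ran on CPUs 0 and 3
# 6.6% %MEM is relative to the host's total memory: 0.066 * 3.8 * 1024 ≈ 257M (≈ 270M if counted as 4 * 1024), i.e. roughly the 256M per worker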

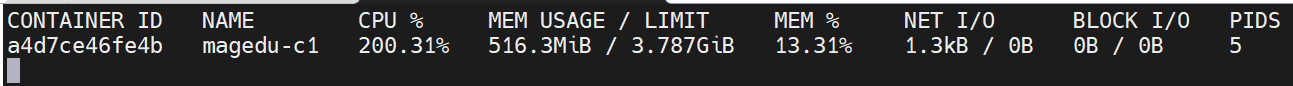
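The %MEM arithmetic above can be checked with a one-line awk helper (`pct_to_mib` is a hypothetical name). It assumes %MEM is a percentage of total physical memory, which `free -h` reports as 3.8Gi, about 3891 MiB, on this host.

```bash
#!/usr/bin/env bash
# Convert a %MEM value from top into MiB, given the host's total memory in MiB.
pct_to_mib() {
  awk -v p="$1" -v total="$2" 'BEGIN { printf "%.0f\n", p / 100 * total }'
}

pct_to_mib 6.6 3891   # 257, close to --vm-bytes 256M
```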

Viewing with docker stats on the host

CPU 200% MEM 516M

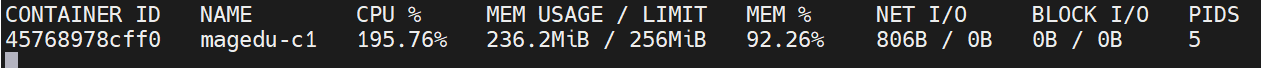

Adding a memory limit

# Although 512m is requested in total, the -m limit means at most 256m of memory can be used
root@docker-server1:~# docker run -it --rm --name magedu-c1 -m 256m lorel/docker-stress-ng --vm 2 --vm-bytes 256M

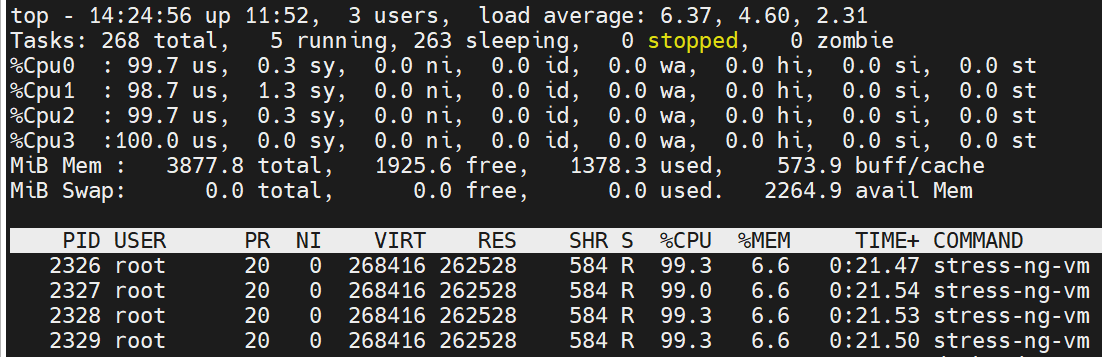

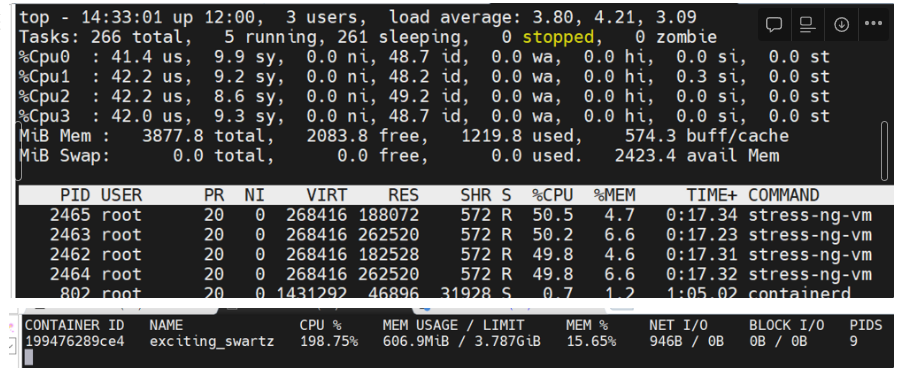

# Start 8 workers, 4 vm and 4 cpu; on 4 CPUs each worker gets about 0.5 CPU, 4 CPUs in total, i.e. 400%
root@docker-server1:~# docker run -it lorel/docker-stress-ng --vm 4 --cpu 4
stress-ng: info: [1] defaulting to a 86400 second run per stressor
stress-ng: info: [1] dispatching hogs: 4 cpu, 4 vm
# Start 4 workers, each using 1 CPU and 256M of memory
root@docker-server1:~# docker run -it lorel/docker-stress-ng --vm 4
stress-ng: info: [1] defaulting to a 86400 second run per stressor
stress-ng: info: [1] dispatching hogs: 4 vm

Adding a CPU limit:

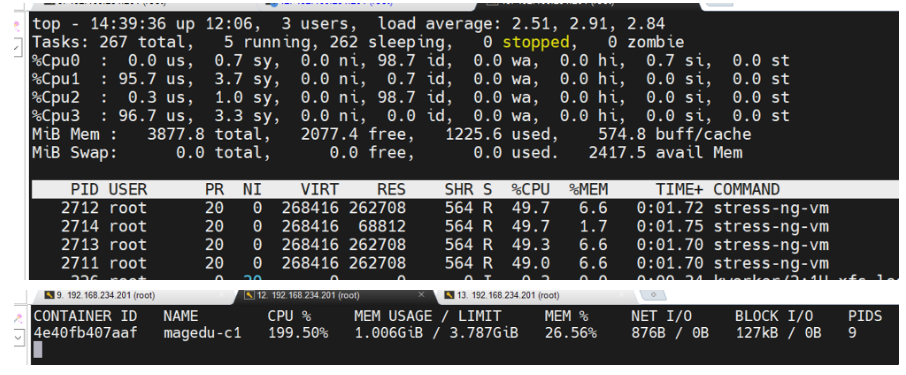

# With the CPU limit, each worker only gets about 50% of a CPU, 200% in total
root@docker-server1:~# docker run -it --cpus 2 lorel/docker-stress-ng --vm 4

docker run -it --rm --name magedu-c1 --cpus 2 --cpuset-cpus 1,3 lorel/docker-stress-ng --vm 4

root@docker-server1:~# docker run -it --rm --name magedu-c1 --cpu-shares 1000 lorel/docker-stress-ng --vm 4
root@docker-server1:~# docker run -it --rm --name magedu-c2 --cpu-shares 500 lorel/docker-stress-ng --vm 4
magedu-c1 --- 1000 / (1000 + 500) ≈ 66.7%
magedu-c2 --- 500 / (1000 + 500) ≈ 33.3%
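The split above is each container's weight divided by the sum of the weights of all busy containers. This integer-percentage sketch (`share_pct` is a hypothetical helper) assumes every container is saturating the same CPUs; when CPUs are idle, `--cpu-shares` imposes no cap.

```bash
#!/usr/bin/env bash
# share_pct MY_SHARES OTHER_SHARES...  -> expected integer CPU percentage under contention
share_pct() {
  local mine=$1 total=0 s
  shift
  for s in "$mine" "$@"; do
    total=$(( total + s ))
  done
  echo $(( mine * 100 / total ))
}

share_pct 1000 500   # magedu-c1: 66
share_pct 500 1000   # magedu-c2: 33
```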

Verifying container resource limits

root@docker-server1:~# docker run -it --cpus 1.2 -m 512M centos:7.9.2009
# Check the memory limit; the unit is bytes
root@docker-server1:~# cat /sys/fs/cgroup/system.slice/docker-a9c8ff6ec11e1ce2e2a1dc2b779639e30c0fe8da37ad90497b793d08af954a62.scope/memory.max
536870912
root@docker-server1:~# echo 536870912/1024/1024 | bc
512
root@docker-server1:~# cat /sys/fs/cgroup/system.slice/docker-a9c8ff6ec11e1ce2e2a1dc2b779639e30c0fe8da37ad90497b793d08af954a62.scope/cpu.max
120000 100000
# cpu.max is "quota period", so 1.2 CPUs are allowed, computed as follows
root@docker-server1:~# bc
bc 1.07.1
Copyright 1991-1994, 1997, 1998, 2000, 2004, 2006, 2008, 2012-2017 Free Software Foundation, Inc.
This is free software with ABSOLUTELY NO WARRANTY.
For details type `warranty'.
scale=5
120000/100000
1.20000
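The relation between `--cpus` and `cpu.max` works in both directions: quota = CPUs × period, with Docker's default period of 100000 µs. The awk-based sketch below (helper names are hypothetical) rounds the quota to avoid floating-point truncation and treats a quota of `max` as unlimited.

```bash
#!/usr/bin/env bash
# --cpus N  ->  cgroup v2 cpu.max line "QUOTA PERIOD" (default period 100000 us).
cpus_to_cpu_max() {
  awk -v c="$1" 'BEGIN { printf "%d 100000\n", c * 100000 + 0.5 }'
}

# cpu.max line -> number of CPUs ("max" quota means no limit).
cpu_max_to_cpus() {
  awk '{ if ($1 == "max") print "unlimited"; else printf "%.2f\n", $1 / $2 }' <<< "$1"
}

cpus_to_cpu_max 1.2              # 120000 100000
cpu_max_to_cpus "120000 100000"  # 1.20
```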

Container memory and CPU limits with lxcfs

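Problem: after limiting resources via systemd (cgroups), checking the resources inside the container still shows the host's information. How can this be solved?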

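# The limit is 512M, but the host's resources (4G) are still displayed
root@docker-server1:~# docker run -it --cpus 1.2 -m 512M centos:7.9.2009
[root@a9c8ff6ec11e /]# free -h
              total        used        free      shared  buff/cache   available
Mem:           3.8G        377M        2.8G        1.3M        608M        3.2G
# For accurate values, use lxcfs. Inside a container, the CPU count comes from /proc/cpuinfo and the memory from /proc/meminfo, but the container's /proc file system is the host's, so the values are inaccurate. lxcfs reads the relevant information from the host's cgroups and, via file mounts (docker volumes), overlays it onto the container's /proc, so that applications in the container read /proc as usual but see values that reflect the container's own limits
# View the detailed CPU and memory information
root@docker-server1:~# cat /proc/cpuinfo
root@docker-server1:~# cat /proc/meminfo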
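For applications you control, an alternative to lxcfs is to read the limit from the cgroup instead of /proc. This sketch assumes a cgroup v2 host with a private cgroup namespace (Docker's default on cgroup v2), where the container's own limit appears at `/sys/fs/cgroup/memory.max`; `mem_limit_mib` is a hypothetical helper. Tools such as `free` and `top` still read /proc, which is what lxcfs fixes.

```bash
#!/usr/bin/env bash
# Print the container's memory limit in MiB, or "unlimited" if the file says "max".
mem_limit_mib() {
  local f=${1:-/sys/fs/cgroup/memory.max} v
  [ -r "$f" ] || { echo "cannot read $f" >&2; return 1; }
  read -r v < "$f"
  if [ "$v" = max ]; then
    echo unlimited
  else
    echo $(( v / 1024 / 1024 ))
  fi
}

# Inside the container started with -m 512M, this would be expected to print 512:
# mem_limit_mib
```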

# Install lxcfs
root@docker-server1:~# apt install -y lxcfs
# Limiting the disk size fails
root@docker-server1:~# docker run -it -m 256m --storage-opt size=10G centos:7.9.2009
docker: Error response from daemon: --storage-opt is supported only for overlay over xfs with 'pquota' mount option.
# Fix:
# Add pquota to the /data/docker entry in /etc/fstab
root@docker-server1:~# grep docker /etc/fstab
UUID=5dfdf42a-fcc8-4370-8851-0382d733a527 /data/docker xfs defaults,pquota 0 0
# Edit the grub defaults and add rootflags=pquota (this sets the option on the root file system, which matters when the Docker data-root is on /)
root@docker-server1:~# grep rootflag /etc/default/grub
GRUB_CMDLINE_LINUX="net.ifnames=0 biosdevname=0 rootflags=pquota"
# Regenerate the grub configuration
root@docker-server1:~# update-grub
# Reboot
root@docker-server1:~# reboot

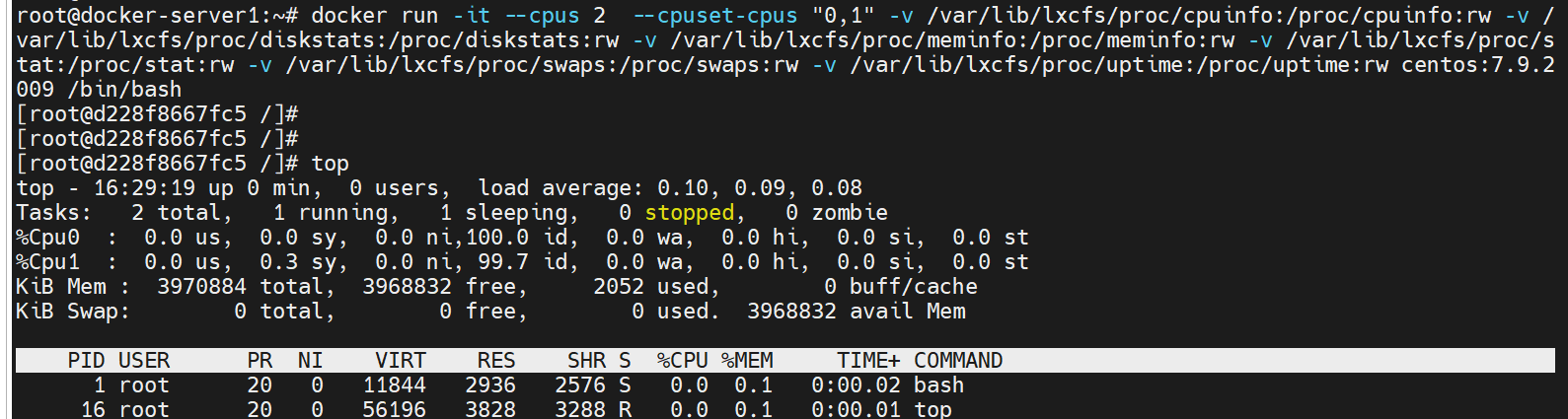

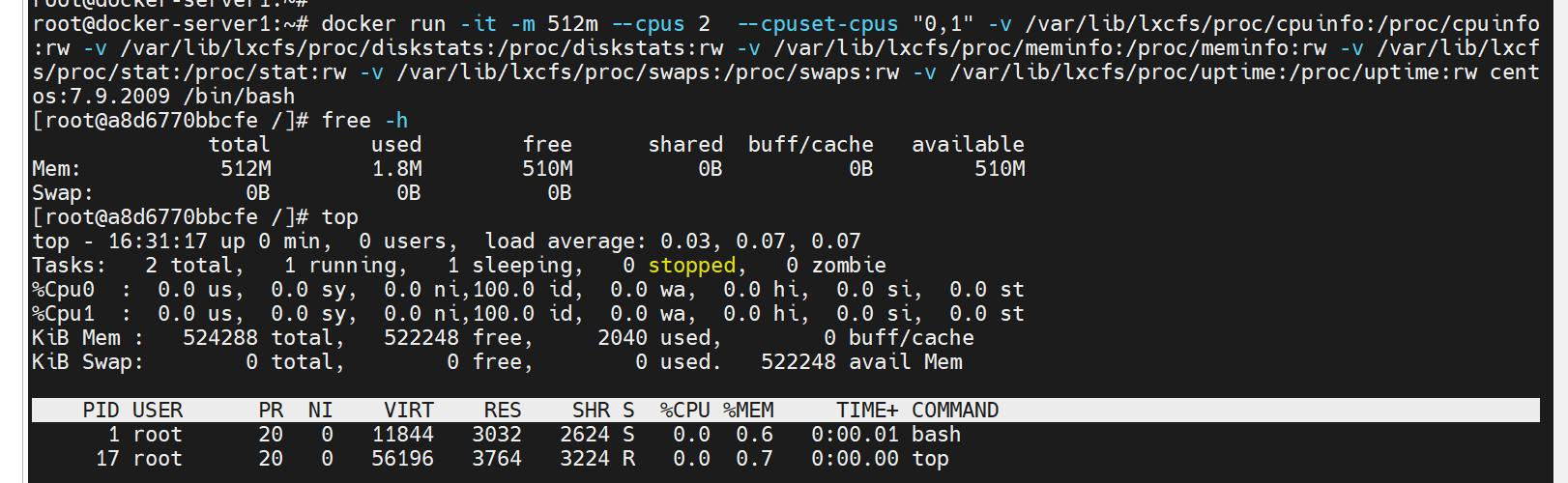

# With a 10G storage limit, df -h now shows the correct size
root@docker-server1:~# docker run -it -m 256m --storage-opt size=10G centos:7.9.2009
[root@89a94990f5c9 /]# df -h
Filesystem      Size  Used Avail Use% Mounted on
overlay          10G  8.0K   10G   1% /
tmpfs            64M     0   64M   0% /dev
shm              64M     0   64M   0% /dev/shm
/dev/sda4        50G  811M   50G   2% /etc/hosts
tmpfs           1.9G     0  1.9G   0% /proc/asound
tmpfs           1.9G     0  1.9G   0% /proc/acpi
tmpfs           1.9G     0  1.9G   0% /proc/scsi
tmpfs           1.9G     0  1.9G   0% /sys/firmware
# Limit memory to a given size
root@docker-server1:~# docker run -it -m 256m \
  -v /var/lib/lxcfs/proc/cpuinfo:/proc/cpuinfo:rw \
  -v /var/lib/lxcfs/proc/diskstats:/proc/diskstats:rw \
  -v /var/lib/lxcfs/proc/meminfo:/proc/meminfo:rw \
  -v /var/lib/lxcfs/proc/stat:/proc/stat:rw \
  -v /var/lib/lxcfs/proc/swaps:/proc/swaps:rw \
  -v /var/lib/lxcfs/proc/uptime:/proc/uptime:rw \
  centos:7.9.2009 /bin/bash
[root@3db60a55d064 /]# free -h
              total        used        free      shared  buff/cache   available
Mem:           256M        2.0M        251M          0B        2.4M        254M
Swap:            0B          0B          0B
# Limit to 2 CPUs and pin the container to the CPUs with IDs 0 and 1
# Checking with top shows only 2 CPUs
# (both --cpus and --cpuset-cpus are specified here)
docker run -it --cpus 2 --cpuset-cpus "0,1" \
  -v /var/lib/lxcfs/proc/cpuinfo:/proc/cpuinfo:rw \
  -v /var/lib/lxcfs/proc/diskstats:/proc/diskstats:rw \
  -v /var/lib/lxcfs/proc/meminfo:/proc/meminfo:rw \
  -v /var/lib/lxcfs/proc/stat:/proc/stat:rw \
  -v /var/lib/lxcfs/proc/swaps:/proc/swaps:rw \
  -v /var/lib/lxcfs/proc/uptime:/proc/uptime:rw \
  centos:7.9.2009 /bin/bash
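The six lxcfs bind mounts repeat in every command in this section. A bash sketch that generates them once (the helper `lxcfs_mounts` is hypothetical; it assumes lxcfs is running and exposes its files under `/var/lib/lxcfs/proc`, as in the commands here):

```bash
#!/usr/bin/env bash
# Print the lxcfs -v options, one shell word per line.
LXCFS_DIR=${LXCFS_DIR:-/var/lib/lxcfs}
lxcfs_mounts() {
  local f
  for f in cpuinfo diskstats meminfo stat swaps uptime; do
    printf '%s\n' -v "${LXCFS_DIR}/proc/${f}:/proc/${f}:rw"
  done
}

# Usage (bash 4+): load the options into an array and pass them to docker run.
# mapfile -t LXCFS_OPTS < <(lxcfs_mounts)
# docker run -it -m 256m "${LXCFS_OPTS[@]}" centos:7.9.2009 /bin/bash
```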

# Limit CPU and memory together
root@docker-server1:~# docker run -it -m 512m --cpus 2 --cpuset-cpus "0,1" \
  -v /var/lib/lxcfs/proc/cpuinfo:/proc/cpuinfo:rw \
  -v /var/lib/lxcfs/proc/diskstats:/proc/diskstats:rw \
  -v /var/lib/lxcfs/proc/meminfo:/proc/meminfo:rw \
  -v /var/lib/lxcfs/proc/stat:/proc/stat:rw \
  -v /var/lib/lxcfs/proc/swaps:/proc/swaps:rw \
  -v /var/lib/lxcfs/proc/uptime:/proc/uptime:rw \
  centos:7.9.2009 /bin/bash

# Limit memory and disk space together
root@docker-server1:~# docker run -it -m 256m --storage-opt size=10G \
  -v /var/lib/lxcfs/proc/cpuinfo:/proc/cpuinfo:rw \
  -v /var/lib/lxcfs/proc/diskstats:/proc/diskstats:rw \
  -v /var/lib/lxcfs/proc/meminfo:/proc/meminfo:rw \
  -v /var/lib/lxcfs/proc/stat:/proc/stat:rw \
  -v /var/lib/lxcfs/proc/swaps:/proc/swaps:rw \
  -v /var/lib/lxcfs/proc/uptime:/proc/uptime:rw \
  centos:7.9.2009 /bin/bash
[root@688522b55372 /]# free -h
              total        used        free      shared  buff/cache   available
Mem:           256M        1.8M        254M          0B          0B        254M
Swap:            0B          0B          0B
[root@688522b55372 /]# df -h
Filesystem      Size  Used Avail Use% Mounted on
overlay          10G  8.0K   10G   1% /
tmpfs            64M     0   64M   0% /dev
shm              64M     0   64M   0% /dev/shm
/dev/sda4        50G  811M   50G   2% /etc/hosts
tmpfs           1.9G     0  1.9G   0% /proc/asound
tmpfs           1.9G     0  1.9G   0% /proc/acpi
tmpfs           1.9G     0  1.9G   0% /proc/scsi
tmpfs           1.9G     0  1.9G   0% /sys/firmware
# The following limit disk IOPS and disk throughput; they are rarely used
# Limit disk IOPS
docker run -it -m 256m --cpus 1 --cpuset-cpus "2" --device-read-iops /dev/sdb:10 --device-write-iops /dev/sdb:10 \
  -v /var/lib/lxcfs/proc/cpuinfo:/proc/cpuinfo:rw \
  -v /var/lib/lxcfs/proc/diskstats:/proc/diskstats:rw \
  -v /var/lib/lxcfs/proc/meminfo:/proc/meminfo:rw \
  -v /var/lib/lxcfs/proc/stat:/proc/stat:rw \
  -v /var/lib/lxcfs/proc/swaps:/proc/swaps:rw \
  -v /var/lib/lxcfs/proc/uptime:/proc/uptime:rw \
  centos:7.9.2009 /bin/bash
# Limit disk throughput (replace /dev/vda with a block device that exists on the host, e.g. the /dev/sda disk seen in the df output above)
docker run -it -m 256m --cpus 1 --device-read-bps /dev/vda:10MB --device-write-bps /dev/vda:10MB \
  -v /var/lib/lxcfs/proc/cpuinfo:/proc/cpuinfo:rw \
  -v /var/lib/lxcfs/proc/diskstats:/proc/diskstats:rw \
  -v /var/lib/lxcfs/proc/meminfo:/proc/meminfo:rw \
  -v /var/lib/lxcfs/proc/stat:/proc/stat:rw \
  -v /var/lib/lxcfs/proc/swaps:/proc/swaps:rw \
  -v /var/lib/lxcfs/proc/uptime:/proc/uptime:rw \
  ubuntu:22.04 /bin/bash