Hive [Error 10293]: Unable to create temp file for insert values File: Troubleshooting and Fix

Scenario:

- Hadoop and Hive have been installed and configured.

- A table was created successfully (table name: student).

- hive> select * from student runs without errors.

- hive> insert into table student values (101, 'leo'); fails with:

FAILED: SemanticException [Error 10293]: Unable to create temp file for insert values File /tmp/hive/root/63412761-6637-49eb-8a22-d06563b9b6ad/_tmp_space.db/Values__Tmp__Table__1/data_file could only be replicated to 0 nodes instead of minReplication (=1). There are 0 datanode(s) running and no node(s) are excluded in this operation.

    at org.apache.hadoop.hdfs.server.blockmanagement.BlockManager.chooseTarget4NewBlock(BlockManager.java:1625)
    at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.getNewBlockTargets(FSNamesystem.java:3127)
    at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.getAdditionalBlock(FSNamesystem.java:3051)
    at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.addBlock(NameNodeRpcServer.java:725)
    at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.addBlock(ClientNamenodeProtocolServerSideTranslatorPB.java:493)
    at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)
    at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:616)
    at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:982)
    at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2217)
    at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2213)
    at java.security.AccessController.doPrivileged(Native Method)
    at javax.security.auth.Subject.doAs(Subject.java:422)
    at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1754)
    at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2213)

Investigation:

1. Self-check: in hive/conf/ I found that hive-env.sh.template had never been renamed to hive-env.sh. Embarrassing, a beginner's mistake.

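After fixing that, I reran hive> insert ... and the problem was still not solved! The corresponding metadata did get created this time, though (the earlier ./hive run had not created it).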
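Step 1 boils down to putting hive-env.sh in place from its template. A minimal sketch of that as a reusable shell function follows; ensure_hive_env is a hypothetical name, and it assumes the usual $HIVE_HOME/conf layout unless you pass the conf directory explicitly. It never overwrites an existing hive-env.sh.

```shell
#!/usr/bin/env bash
# Hypothetical helper: create hive-env.sh from hive-env.sh.template if it is missing.
# The conf directory is taken from $1, defaulting to $HIVE_HOME/conf (assumed layout).
ensure_hive_env() {
  local conf_dir="${1:-$HIVE_HOME/conf}"
  if [ -f "$conf_dir/hive-env.sh" ]; then
    # Already in place: do not overwrite a file that may have been edited.
    echo "exists: $conf_dir/hive-env.sh"
  elif [ -f "$conf_dir/hive-env.sh.template" ]; then
    # Copy rather than rename, so the template stays available for reference.
    cp "$conf_dir/hive-env.sh.template" "$conf_dir/hive-env.sh"
    echo "created: $conf_dir/hive-env.sh"
  else
    echo "no hive-env.sh.template in $conf_dir" >&2
    return 1
  fi
}
```

After the file exists you would normally edit it, for example to set HADOOP_HOME to your Hadoop installation directory (the template contains a commented-out HADOOP_HOME line for this).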

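2. Searching online, I found the suggestion to delete Hadoop's /tmp directory (hadoop fs -rm -r /tmp), format the datanode (hadoop datanode -format), recreate the /tmp directory (hadoop fs -mkdir /tmp), and then restart Hive.

This had no effect; the problem remained.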

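3. Searching online again, I found an experienced user pointing out that a firewall left running stops the master from seeing the nodes on the slaves. The error message does say "There are 0 datanode(s) running and no node(s)", so it really did look as if Hadoop had not recognized the DataNodes.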

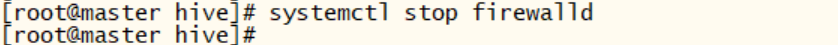

So I stopped the firewall on both the master and the slave (systemctl stop firewalld).

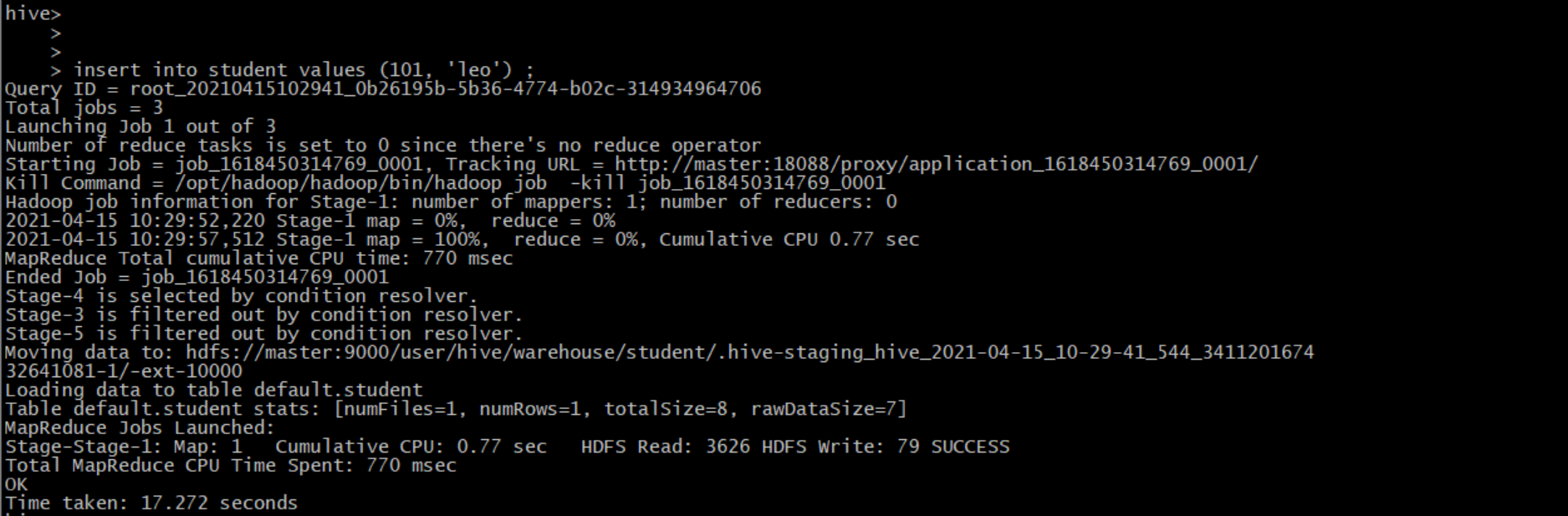

After re-entering Hive, the insert succeeded!
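The decisive clue is "0 datanode(s) running": Hive first writes the VALUES rows to a temporary HDFS file (the Values__Tmp__Table__1/data_file in the error path), and the NameNode cannot place even one replica (minReplication = 1) without a live DataNode. You can check this directly with hdfs dfsadmin -report before and after changing the firewall. The sketch below is a hypothetical helper, count_live_datanodes, that reads that report on stdin and prints N from its "Live datanodes (N):" line. It assumes the Hadoop 2.x report format and prints 0 when that line is absent.

```shell
#!/usr/bin/env bash
# Hypothetical helper: count live DataNodes in `hdfs dfsadmin -report` output.
# Assumes the Hadoop 2.x report format, which contains a line like "Live datanodes (2):".
count_live_datanodes() {
  local n
  # Extract N from the first "Live datanodes (N):" line read from stdin.
  n=$(sed -n 's/^Live datanodes (\([0-9]*\)):.*/\1/p' | head -n 1)
  # No such line (e.g. no DataNode has registered): report 0.
  echo "${n:-0}"
}
```

Usage, on the master as the user that runs HDFS: hdfs dfsadmin -report | count_live_datanodes. A result of 0 matches the error above; once the firewall no longer blocks the slaves, it should match the number of DataNodes you expect.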

Resolution:

1. Error: the insert fails as shown above.

2. Stop the firewall on the master and the slave: systemctl stop firewalld

3. Solved: the insert now runs successfully.
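Step 2 has to be done on every node, and systemctl stop firewalld only lasts until the next reboot; systemctl disable firewalld additionally keeps firewalld from starting at boot. A minimal sketch that does both over SSH follows. stop_firewall_on_hosts and the DRY_RUN switch are hypothetical names, and it assumes passwordless SSH to each host as a user allowed to run systemctl (the author works as root).

```shell
#!/usr/bin/env bash
# Hypothetical helper: stop firewalld on each given host and keep it disabled after reboot.
# Assumes passwordless SSH as a user permitted to run systemctl (e.g. root).
FIREWALL_CMD='systemctl stop firewalld && systemctl disable firewalld'

stop_firewall_on_hosts() {
  local host rc=0
  for host in "$@"; do
    if [ "${DRY_RUN:-0}" = 1 ]; then
      # Dry run: only print the command that would be executed.
      printf 'ssh %s "%s"\n' "$host" "$FIREWALL_CMD"
    elif ! ssh "$host" "$FIREWALL_CMD"; then
      echo "failed on $host" >&2
      rc=1
    fi
  done
  # Non-zero if any host failed.
  return "$rc"
}
```

For example, run DRY_RUN=1 stop_firewall_on_hosts master slave1 first to review the commands, then run it again without DRY_RUN (master and slave1 are placeholders for your own host names).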