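Relation Extraction / Distant Supervision: Notes on "Fine-tuning Pre-Trained Transformer Language Models to Distantly Supervised Relation Extraction"

I. Overview

Current work on distantly supervised relation extraction focuses on reducing label noise. The main approaches are multi-instance learning and supplying linguistic or contextual information to guide relation classification. Although these models achieve state-of-the-art results, they reach high precision only on a limited set of relations and neglect the many other relation types, so they generalize poorly.

To address this, the paper proposes a distantly supervised method built on a pre-trained language model.

Since GPT and similar pre-trained language models have been shown to capture semantic and syntactic features as well as a notable amount of "common-sense" knowledge, the authors hypothesize that these are important features for recognizing a more diverse set of relations.

The model is first pre-trained on plain text and then fine-tuned on the NYT10 dataset. The authors show that it predicts a much larger set of relation types with high confidence.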

II. Related Work

1. Relation extraction (RE)

① Multi-instance learning approaches:

Mike Mintz, Steven Bills, Rion Snow, and Daniel Jurafsky. 2009. Distant supervision for relation extraction without labeled data. In ACL/IJCNLP.

Mihai Surdeanu, Julie Tibshirani, Ramesh Nallapati, and Christopher D. Manning. 2012. Multi-instance Multi-label Learning for Relation Extraction. In Proceedings of the 2012 Joint Conference on Empirical Methods in Natural Language Processing and Computational Natural Language Learning, EMNLP-CoNLL ’12, pages 455–465, Stroudsburg, PA, USA. Association for Computational Linguistics.

Yankai Lin, Shiqi Shen, Zhiyuan Liu, Huanbo Luan, and Maosong Sun. 2016. Neural Relation Extraction with Selective Attention over Instances. In Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), pages 2124–2133, Berlin, Germany. Association for Computational Linguistics.

② Adding (supplying) semantic and syntactic knowledge:

Daojian Zeng, Kang Liu, Siwei Lai, Guangyou Zhou, and Jun Zhao. 2014. Relation classification via convolutional deep neural network. In Proceedings of COLING 2014, the 25th International Conference on Computational Linguistics: Technical Papers, pages 2335–2344. Dublin City University and Association for Computational Linguistics.

Yuhao Zhang, Peng Qi, and Christopher D. Manning. 2018b. Graph Convolution over Pruned Dependency Trees Improves Relation Extraction. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, pages 2205–2215, Brussels, Belgium. Association for Computational Linguistics.

③ Using side information (e.g., relation aliases, entity types, etc.):

Shikhar Vashishth, Rishabh Joshi, Sai Suman Prayaga, Chiranjib Bhattacharyya, and Partha Talukdar. 2018. Reside: Improving distantly-supervised neural relation extraction using side information. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, pages 1257–1266. Association for Computational Linguistics.

2. Language models (LM)

Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N Gomez, Łukasz Kaiser, and Illia Polosukhin. 2017. Attention is all you need. In Advances in Neural Information Processing Systems, pages 5998–6008. Introduces the Transformer.

Alec Radford, Karthik Narasimhan, Tim Salimans, and Ilya Sutskever. 2018. Improving language understanding by generative pre-training. Available as a preprint. (Shows that adding language modeling as an auxiliary objective during fine-tuning improves generalization and speeds up convergence; this is used in the fine-tuning loss function in IV.1.)

Matthew E. Peters, Mark Neumann, Mohit Iyyer, Matt Gardner, Christopher Clark, Kenton Lee, and Luke S. Zettlemoyer. 2018. Deep contextualized word representations. In NAACL-HLT.

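These two papers show that useful semantic and syntactic properties can be captured through unsupervised pre-training alone.

Jacob Devlin, Ming-Wei Chang, Kenton Lee, and Kristina Toutanova. 2018. BERT: pre-training of deep bidirectional transformers for language understanding. Computing Research Repository (CoRR), abs/1810.04805. (Together with the three papers above, this shows state-of-the-art performance across a wide range of NLP tasks.)

Christoph Alt, Marc Hübner, and Leonhard Hennig. 2019. Improving relation extraction by pre-trained language representations. In Proceedings of the 2019 Conference on Automated Knowledge Base Construction, Amherst, Massachusetts. Shows strong performance on supervised relation extraction as well.

Alec Radford, Jeff Wu, Rewon Child, David Luan, Dario Amodei, and Ilya Sutskever. 2019. Language models are unsupervised multitask learners. Finds that language models answer open-domain questions fairly well without being trained on the actual task, suggesting that they capture a limited amount of "common-sense" knowledge.

The authors therefore hypothesize that a pre-trained language model provides a stronger signal for distant supervision, guiding relation extraction better through the knowledge acquired during unsupervised pre-training. Replacing explicit linguistic features and side information with implicit features improves domain and language independence, can increase the diversity of recognized relations, and minimizes explicit feature extraction, which reduces the risk of error accumulation.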

III. Transformer Language Model

1. Transformer-Decoder

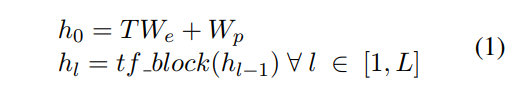

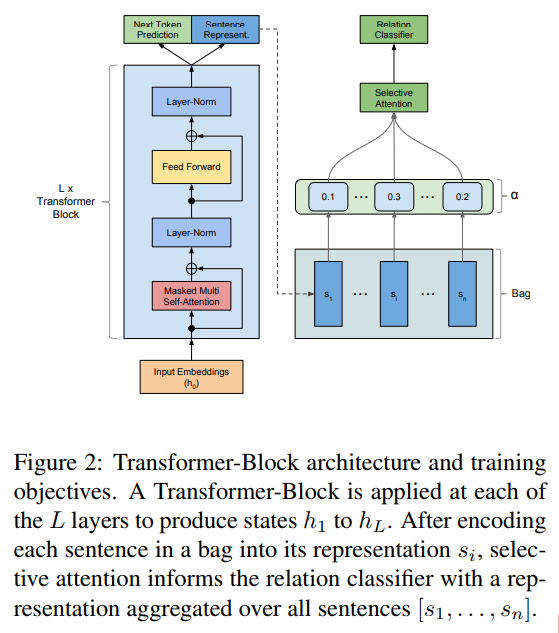

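First, the Transformer-Decoder (see figure) is a variant of the decoder of the original Transformer. Each of its blocks consists of masked multi-head self-attention followed by a position-wise feed-forward layer.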
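To make "masked" concrete, here is a minimal single-head sketch in plain Python, assuming scaled dot-product attention as in Vaswani et al. (2017). It leaves out multiple heads, the output projection, residual connections, layer normalization and the feed-forward layer, and the projection matrices `wq`, `wk`, `wv` are illustrative inputs rather than trained weights. Each position attends only to itself and earlier positions, which is what allows the decoder to be trained as a left-to-right language model.

```python
import math


def matvec(v, w):
    """Row vector v (length n) times matrix w (n rows, lists of floats)."""
    return [sum(v[r] * w[r][c] for r in range(len(v))) for c in range(len(w[0]))]


def causal_self_attention(x, wq, wk, wv):
    """Single-head masked self-attention over a list of token vectors x."""
    q = [matvec(v, wq) for v in x]  # queries
    k = [matvec(v, wk) for v in x]  # keys
    val = [matvec(v, wv) for v in x]  # values
    d_k = len(q[0])
    out = []
    for i in range(len(x)):
        # Only positions j <= i get a score: future tokens are masked out.
        scores = [sum(a * b for a, b in zip(q[i], k[j])) / math.sqrt(d_k)
                  for j in range(i + 1)]
        m = max(scores)  # subtract the max for a numerically stable softmax
        exps = [math.exp(s - m) for s in scores]
        z = sum(exps)
        weights = [e / z for e in exps]
        out.append([sum(weights[j] * val[j][c] for j in range(i + 1))
                    for c in range(d_k)])
    return out
```

Changing a later token leaves the outputs of earlier positions unchanged. A multi-head layer would run several such heads with separate projections and concatenate their outputs.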

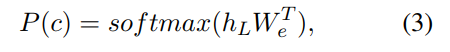

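h0 = T·We + Wp is the input to the first block. T is a matrix with one row per token, where each row is a one-hot vector encoding that token's index in the vocabulary. We is the token embedding matrix, and Wp is the positional embedding matrix, which supplies the position information. The Transformer-Decoder stacks L blocks; block l takes the previous output hl-1 and produces hl.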
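A small sketch of this input layer in plain Python, assuming tiny hand-written matrices `w_e` and `w_p` and hypothetical stand-in callables for the L blocks. It shows that multiplying the one-hot matrix T by We is the same as looking up rows of We, which is how implementations compute it in practice.

```python
def matmul(a, b):
    """Multiply matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def one_hot_rows(token_ids, vocab_size):
    """Build T: one row per token, with a 1 at that token's vocabulary index."""
    return [[1.0 if v == t else 0.0 for v in range(vocab_size)] for t in token_ids]


def input_embedding(token_ids, w_e, w_p):
    """h0 = T We + Wp, using the first len(token_ids) rows of Wp."""
    t = one_hot_rows(token_ids, len(w_e))
    tok = matmul(t, w_e)  # equivalent to [w_e[i] for i in token_ids]
    return [[a + b for a, b in zip(tok[i], w_p[i])] for i in range(len(token_ids))]


def decoder(token_ids, w_e, w_p, blocks):
    """Apply the L blocks in order: h_l = block_l(h_{l-1}).

    `blocks` is a list of hypothetical callables standing in for real
    Transformer-Decoder blocks.
    """
    h = input_embedding(token_ids, w_e, w_p)
    for block in blocks:
        h = block(h)
    return h
```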

2. Unsupervised Pre-training of Language Representations (not covered in detail here)
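For reference, the pre-training objective being reused is the standard left-to-right language-modeling likelihood of Radford et al. (2018), written here with this post's symbols: k is the context window, and the next-token distribution comes from projecting the last block's output back onto the token embedding matrix.

$$
L_1(\mathcal{C}) = \sum_i \log P(c_i \mid c_{i-k}, \ldots, c_{i-1}; \theta),
\qquad
P(c) = \operatorname{softmax}\left(h_L W_e^{\top}\right)
$$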

IV. Multi-Instance Learning with the Transformer (the main part: fine-tuning)

1. Distantly Supervised Fine-tuning on Relation Extraction

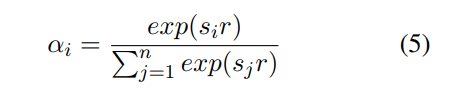

For selective attention, see the 2016 paper from Prof. Zhiyuan Liu's group, Neural Relation Extraction with Selective Attention over Instances (Lin et al.). Only the formulas are given here.

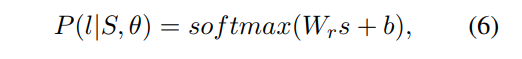

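If the set of sentences in a bag (all sentences mentioning the same entity pair) is denoted S, then, given S and the trainable parameters θ, the model maximizes the probability of the entity pair's relation label. Here Wr is the relation representation matrix and b is the bias.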
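A plain-Python sketch of this bag-level computation, assuming the dot-product scoring α_i ∝ exp(s_i · r) with a relation query vector `r` (Lin et al. use a bilinear form with a diagonal matrix, so check this against the formula above). Each s_i would be the representation of one sentence in the bag; all vectors and weights in the usage below are made-up toy values.

```python
import math


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    z = sum(exps)
    return [e / z for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def selective_attention(bag, r):
    """bag: sentence vectors s_1..s_n; r: relation query vector.

    Returns (alpha, s) with alpha_i = softmax_i(s_i . r) and s = sum_i alpha_i s_i.
    """
    alpha = softmax([dot(s_i, r) for s_i in bag])
    dim = len(bag[0])
    s = [sum(a * s_i[d] for a, s_i in zip(alpha, bag)) for d in range(dim)]
    return alpha, s


def relation_distribution(s, w_r, b):
    """P(l | S, theta) = softmax(W_r s + b); w_r has one row per relation."""
    return softmax([dot(row, s) + b_k for row, b_k in zip(w_r, b)])
```

Sentences whose representation aligns with `r` receive more weight, so noisy sentences in a bag contribute less to the bag vector s.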

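For fine-tuning, Radford et al. (Improving language understanding by generative pre-training) found that adding language modeling as an auxiliary objective improves generalization and speeds up convergence, so the fine-tuning loss combines the relation classification objective with the language-modeling objective: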

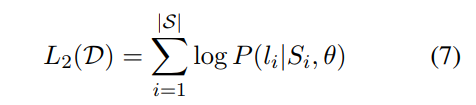
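A minimal sketch of the combined objective, assuming the GPT-style form L = L_clf + λ·L_LM, where L_clf sums log P(l_i | S_i, θ) over bags and L_LM is the language-modeling log-likelihood of the input tokens; the quantity minimized is its negative. Probabilities are passed in directly so the model itself is out of scope, and `lam` (λ) is a hyperparameter whose value this post does not give.

```python
import math


def classification_nll(relation_probs, gold_relations):
    """-L_clf: relation_probs[i] is P(. | S_i), gold_relations[i] the label index."""
    return -sum(math.log(p[l]) for p, l in zip(relation_probs, gold_relations))


def lm_nll(token_probs):
    """-L_LM: token_probs[t] is the probability assigned to the actual token t."""
    return -sum(math.log(p) for p in token_probs)


def fine_tuning_loss(relation_probs, gold_relations, token_probs, lam):
    """Loss to minimize: -(L_clf + lam * L_LM)."""
    return classification_nll(relation_probs, gold_relations) + lam * lm_nll(token_probs)
```

Setting `lam=0` recovers plain classification fine-tuning; a positive value keeps the model close to its language-modeling behavior while it learns the relation labels.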

2. Input Representation

The way the model input is constructed deserves a closer look.

First, each sentence is tokenized with byte pair encoding (BPE); for details see http://www.luyixian.cn/news_show_73867.aspx

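The input sequence is then laid out as:

[relation][entity1][sep][entity2][sep][sentence][clf]

The final-layer hidden state at the [clf] token is used as the sentence representation for relation classification.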
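A sketch of how one (entity pair, sentence) example could be laid out in this format, and how the [clf] representation is picked out afterwards. Assumptions: whitespace splitting stands in for BPE, the special-token strings are placeholders copied from the layout above, and `hidden_states` stands for the final-layer outputs of a model that is not shown.

```python
def build_input(head, tail, sentence, tokenize=str.split,
                lead="[relation]", sep="[sep]", clf="[clf]"):
    """Lay out one example as: lead, head, sep, tail, sep, sentence, clf."""
    return ([lead] + tokenize(head) + [sep] + tokenize(tail) + [sep]
            + tokenize(sentence) + [clf])


def clf_representation(hidden_states, tokens, clf="[clf]"):
    """Return the hidden state at the [clf] position (one entry per token)."""
    return hidden_states[tokens.index(clf)]
```

Putting [clf] last matters for a left-to-right decoder: only the final position has attended to every preceding token, so its state summarizes both entities and the whole sentence.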

V. Experiments

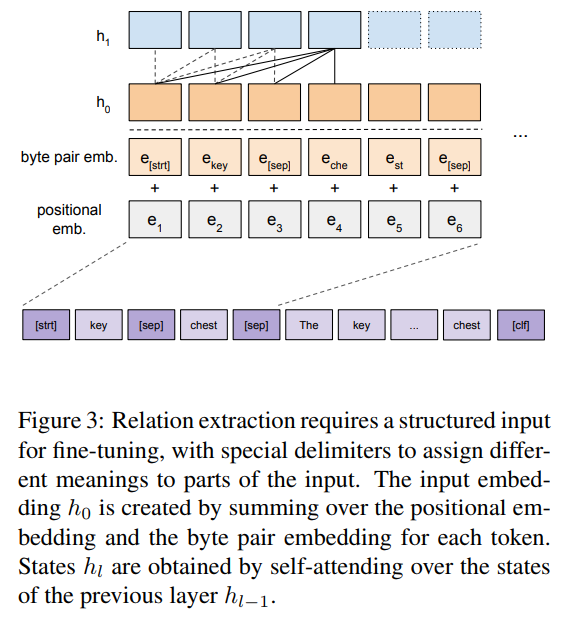

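Experiments are conducted on the NYT dataset.

Pre-training: the authors do not redo pre-training, because it is computationally expensive and their main goal is to show its effectiveness through fine-tuning on the distantly supervised relation extraction task. Instead, they directly reuse the model from Radford et al., Improving language understanding by generative pre-training, which was pre-trained on the corpus from:

Yukun Zhu, Ryan Kiros, Richard S. Zemel, Ruslan Salakhutdinov, Raquel Urtasun, Antonio Torralba, and Sanja Fidler. 2015. Aligning books and movies: Towards story-like visual explanations by watching movies and reading books. 2015 IEEE International Conference on Computer Vision (ICCV), pages 19–27.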

Hyperparameters:

Adam optimization with β1 = 0.9, β2 = 0.999

a batch size of 8

a learning rate of 6.25e-5

3 epochs

attention dropout with a rate of 0.1

classifier dropout with a rate of 0.2
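The hyperparameters above can be collected in one place. The sketch below also shows, for a single scalar parameter, where β1, β2 and the learning rate enter a standard Adam update (Kingma and Ba). The `eps` value of 1e-8 is an assumed default rather than a setting from the post, and the two dropout rates are only recorded here, not applied.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class FineTuneConfig:
    """Fine-tuning hyperparameters as listed above."""
    adam_beta1: float = 0.9
    adam_beta2: float = 0.999
    batch_size: int = 8
    learning_rate: float = 6.25e-5
    epochs: int = 3
    attention_dropout: float = 0.1
    classifier_dropout: float = 0.2


def adam_step(param, grad, m, v, t, cfg, eps=1e-8):
    """One Adam update for a scalar parameter; t is the 1-based step count."""
    m = cfg.adam_beta1 * m + (1 - cfg.adam_beta1) * grad         # 1st moment
    v = cfg.adam_beta2 * v + (1 - cfg.adam_beta2) * grad * grad  # 2nd moment
    m_hat = m / (1 - cfg.adam_beta1 ** t)  # bias correction
    v_hat = v / (1 - cfg.adam_beta2 ** t)
    param = param - cfg.learning_rate * m_hat / (math.sqrt(v_hat) + eps)
    return param, m, v
```

On the first step the bias-corrected ratio is close to the sign of the gradient, so the parameter moves by roughly the learning rate, 6.25e-5, regardless of the gradient's magnitude.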

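Related work in brief:

Early RE work used statistical classifiers or kernel-based methods combined with discrete syntactic features, such as part-of-speech and named entity tags, morphological features, and WordNet hypernyms: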

Mike Mintz, Steven Bills, Rion Snow, and Daniel Jurafsky. 2009. Distant supervision for relation extraction without labeled data. In ACL/IJCNLP.

Iris Hendrickx, Su Nam Kim, Zornitsa Kozareva, Preslav Nakov, Diarmuid Ó Séaghdha, Sebastian Padó, Marco Pennacchiotti, Lorenza Romano, and Stan Szpakowicz. 2010. SemEval-2010 Task 8: Multi-Way Classification of Semantic Relations between Pairs of Nominals. In SemEval@ACL.

These approaches have since been superseded by sequence-based methods, including recursive and recurrent neural networks:

Richard Socher, Brody Huval, Christopher D. Manning, and Andrew Y. Ng. 2012. Semantic compositionality through recursive matrix-vector spaces. In EMNLP-CoNLL.

Dongxu Zhang and Dong Wang. 2015. Relation classification via recurrent neural network. arXiv preprint arXiv:1508.01006.

and convolutional neural networks:

Daojian Zeng, Kang Liu, Siwei Lai, Guangyou Zhou, and Jun Zhao. 2014. Relation classification via convolutional deep neural network. In Proceedings of COLING 2014, the 25th International Conference on Computational Linguistics: Technical Papers, pages 2335–2344. Dublin City University and Association for Computational Linguistics.

Daojian Zeng, Kang Liu, Yubo Chen, and Jun Zhao. 2015. Distant Supervision for Relation Extraction via Piecewise Convolutional Neural Networks. In Proceedings of the 2015 Conference on Empirical Methods in Natural Language Processing, pages 1753–1762, Lisbon, Portugal. Association for Computational Linguistics.

Accordingly, discrete features were replaced by distributed representations of words and syntactic features:

Joseph P. Turian, Lev-Arie Ratinov, and Yoshua Bengio. 2010. Word representations: A simple and general method for semi-supervised learning. In ACL.

Jeffrey Pennington, Richard Socher, and Christopher D. Manning. 2014. Glove: Global vectors for word representation. In EMNLP.

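Shortest dependency path (SDP) information has been integrated into neural relation classification models, including LSTM-based ones: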

Kun Xu, Yansong Feng, Songfang Huang, and Dongyan Zhao. 2015a. Semantic relation classification via convolutional neural networks with simple negative sampling. In EMNLP.

Yan Xu, Lili Mou, Ge Li, Yunchuan Chen, Hao Peng, and Zhi Jin. 2015b. Classifying relations via long short term memory networks along shortest dependency paths. In Proceedings of the 2015 Conference on Empirical Methods in Natural Language Processing, pages 1785–1794. Association for Computational Linguistics.

The SDP is considered useful for relation classification because it focuses on the action and the agents in a sentence:

Razvan C. Bunescu and Raymond J. Mooney. 2005. A Shortest Path Dependency Kernel for Relation Extraction. In Proceedings of the Conference on Human Language Technology and Empirical Methods in Natural Language Processing, HLT ’05, pages 724–731. Association for Computational Linguistics.

Richard Socher, Andrej Karpathy, Quoc V. Le, Christopher D. Manning, and Andrew Y. Ng. 2014. Grounded compositional semantics for finding and describing images with sentences. TACL, 2:207–218.

Yuhao Zhang, Peng Qi, and Christopher D. Manning. 2018b. Graph Convolution over Pruned Dependency Trees Improves Relation Extraction. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, pages 2205–2215, Brussels, Belgium. Association for Computational Linguistics.

By combining pruning of the dependency tree with graph convolution, this work established a new state of the art for relation extraction on the TACRED dataset.
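To illustrate the SDP idea, here is a breadth-first search that returns the shortest path between two tokens in a dependency tree, plus a simplified version of the path-centric pruning used by Zhang et al. (2018b), which keeps tokens within distance k of that path. The toy parse of "Obama was born in Hawaii" (token indices 0 to 4) is hand-written for illustration, and the original pruning works on the subtree rooted at the lowest common ancestor of the two entities, a restriction this sketch ignores.

```python
from collections import deque


def build_graph(edges):
    """Treat each (head, dependent) arc as an undirected edge."""
    graph = {}
    for head, dep in edges:
        graph.setdefault(head, []).append(dep)
        graph.setdefault(dep, []).append(head)
    return graph


def shortest_dependency_path(edges, source, target):
    """BFS from source; return the token indices on the path to target, or None."""
    graph = build_graph(edges)
    parent = {source: None}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        if node == target:
            break
        for nxt in graph.get(node, []):
            if nxt not in parent:
                parent[nxt] = node
                queue.append(nxt)
    if target not in parent:
        return None
    path, node = [], target
    while node is not None:  # walk back through parents to the source
        path.append(node)
        node = parent[node]
    return path[::-1]


def prune_around_path(edges, path, k):
    """Keep tokens whose tree distance to the nearest path token is at most k."""
    graph = build_graph(edges)
    dist = {node: 0 for node in path}
    queue = deque(path)
    while queue:
        node = queue.popleft()
        if dist[node] == k:
            continue  # do not expand beyond distance k
        for nxt in graph.get(node, []):
            if nxt not in dist:
                dist[nxt] = dist[node] + 1
                queue.append(nxt)
    return sorted(dist)
```

With the toy arcs `[(2, 0), (2, 1), (2, 4), (4, 3)]` ("born" heads "Obama", "was" and "Hawaii"; "Hawaii" heads "in"), the SDP between "Obama" and "Hawaii" is `[0, 2, 4]`, and pruning with k = 1 adds back the directly attached tokens "was" and "in".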

Distant supervision:

multi-instance learning:

Sebastian Riedel, Limin Yao, and Andrew McCallum. 2010. Modeling Relations and Their Mentions without Labeled Text. In Proceedings of the European Conference on Machine Learning and Knowledge Discovery in Databases (ECML PKDD ’10).

multi-instance multi-label learning:

Mihai Surdeanu, Julie Tibshirani, Ramesh Nallapati, and Christopher D. Manning. 2012. Multi-instance Multi-label Learning for Relation Extraction. In Proceedings of the 2012 Joint Conference on Empirical Methods in Natural Language Processing and Computational Natural Language Learning, EMNLP-CoNLL ’12, pages 455–465, Stroudsburg, PA, USA. Association for Computational Linguistics.

Raphael Hoffmann, Congle Zhang, Xiao Ling, Luke Zettlemoyer, and Daniel S. Weld. 2011. Knowledge-Based Weak Supervision for Information Extraction of Overlapping Relations. In Proceedings of the 49th Annual Meeting of the Association for Computational Linguistics: Human Language Technologies, pages 541–550, Portland, Oregon, USA. Association for Computational Linguistics.

With the spread of neural networks:

PCNN, selective attention

adversarial training:

Yi Wu, David Bamman, and Stuart Russell. 2017. Adversarial training for relation extraction. In Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing, pages 1778–1783. Association for Computational Linguistics.

Pengda Qin, Weiran Xu, and William Yang Wang. 2018. DSGAN: Generative adversarial training for distant supervision relation extraction. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), pages 496–505. Association for Computational Linguistics.

noise models:

Bingfeng Luo, Yansong Feng, Zheng Wang, Zhanxing Zhu, Songfang Huang, Rui Yan, and Dongyan Zhao. 2017. Learning with noise: Enhance distantly supervised relation extraction with dynamic transition matrix. In Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), pages 430–439. Association for Computational Linguistics.

soft labeling:

Tianyu Liu, Kexiang Wang, Baobao Chang, and Zhifang Sui. 2017. A soft-label method for noise-tolerant distantly supervised relation extraction. In Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing, pages 1790–1795. Association for Computational Linguistics.

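In addition, linguistic and semantic background knowledge helps the task, but the proposed systems typically rely on explicit features such as dependency trees, named entity types, and relation aliases: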

Shikhar Vashishth, Rishabh Joshi, Sai Suman Prayaga, Chiranjib Bhattacharyya, and Partha Talukdar. 2018. Reside: Improving distantly-supervised neural relation extraction using side information. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, pages 1257–1266. Association for Computational Linguistics.

Yadollah Yaghoobzadeh, Heike Adel, and Hinrich Schütze. 2017. Noise mitigation for neural entity typing and relation extraction. In Proceedings of the 15th Conference of the European Chapter of the Association for Computational Linguistics: Volume 1, Long Papers, pages 1183–1194. Association for Computational Linguistics.

浙公网安备 33010602011771号
