Binary Kubernetes Cluster (Docker)

Official binary download site: https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG/CHANGELOG-1.24.md#client-binaries

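1. Environment

Node Information

This environment runs on Alibaba Cloud, and API Server high availability is provided by an Alibaba Cloud SLB. Outside the cloud you can achieve the same with Nginx + Keepalived, HAProxy + Keepalived, or similar.

Service Versions and Cluster Notes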

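- The Alibaba Cloud SLB has a TCP listener on port 6443 (layer-4 load balancing to the kube-apiserver on each master).
- All Alibaba Cloud ECS hosts run CentOS 7.6.1810, with the kernel upgraded to 5.x.
- The cluster runs kube-proxy in iptables mode (an IPVS configuration is kept, commented out, in the kube-proxy config).
- Calico uses IPIP mode.
- The cluster uses the default svc.cluster.local domain; 10.10.0.1 is the ClusterIP of the cluster's `kubernetes` Service.
- Docker CE version 19.03.6
- Kubernetes version 1.18.2
- etcd version v3.4.7
- Calico version v3.14.0
- CoreDNS version 1.6.7
- Metrics-Server version v0.3.6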

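Service and Pod IP Ranges

Services use 10.10.0.0/16 and Pods use 10.20.0.0/16 (the `--service-cluster-ip-range` and `--cluster-cidr` values used by the master components).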

```shell
$ kubectl get svc
NAME         TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE
kubernetes   ClusterIP   10.10.0.1    <none>        443/TCP   6d23h
```
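The 10.10.0.1 address above is the first address of the Service CIDR 10.10.0.0/16, which kube-apiserver reserves for the `kubernetes` Service; the DNS address 10.10.0.2 passed to kubelet.sh later is the second. The Pod CIDR 10.20.0.0/16 must not overlap the Service CIDR. A minimal pure-bash sketch for checking a planned layout, assuming IPv4 only; `ip_to_int`, `nth_ip`, and `cidrs_overlap` are hypothetical helpers, not part of any Kubernetes tool:

```shell
#!/usr/bin/env bash
# Sketch: derive well-known ClusterIPs from a Service CIDR and check that
# two CIDRs do not overlap. IPv4 only; helper names are hypothetical.

# Dotted quad -> 32-bit integer
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# 32-bit integer -> dotted quad
int_to_ip() {
  local n=$1
  echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}

# nth_ip CIDR N: the Nth address after the network address of CIDR
nth_ip() {
  local net=${1%/*} prefix=${1#*/}
  local mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  local base=$(( $(ip_to_int "$net") & mask ))
  int_to_ip $(( base + $2 ))
}

# cidrs_overlap A B: exit 0 if the two CIDRs share any address.
# Two prefixes overlap iff they agree on the shorter prefix length.
cidrs_overlap() {
  local n1=${1%/*} p1=${1#*/} n2=${2%/*} p2=${2#*/}
  local p=$(( p1 < p2 ? p1 : p2 ))
  local mask=$(( (0xFFFFFFFF << (32 - p)) & 0xFFFFFFFF ))
  (( ($(ip_to_int "$n1") & mask) == ($(ip_to_int "$n2") & mask) ))
}
```

For this guide's layout, `nth_ip 10.10.0.0/16 1` prints the `kubernetes` Service IP and `nth_ip 10.10.0.0/16 2` the DNS IP, while `cidrs_overlap 10.10.0.0/16 10.20.0.0/16` should fail.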


2. Environment Initialization

Every node in the cluster must be initialized.

2.1 Stop the firewalld Firewall on All Machines

```shell
$ systemctl stop firewalld
$ systemctl disable firewalld
```

2.2 Disable Swap

```shell
$ swapoff -a
$ sed -i 's/.*swap.*/#&/' /etc/fstab
```
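The sed command above comments out every line containing "swap" and adds another `#` each time it runs. A sketch of an idempotent variant, assuming a standard fstab layout where the filesystem-type field reads `swap`; `comment_swap_entries` is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Sketch: comment out active swap entries in an fstab-style file.
# Lines already starting with '#' are skipped, so repeated runs do not stack '#'.
# comment_swap_entries is a hypothetical helper, not a system tool.
comment_swap_entries() {
  local fstab=${1:-/etc/fstab}
  # On lines that are not comments, prefix '#' when a whitespace-delimited
  # "swap" field is present (GNU sed syntax).
  sed -i -E '/^[[:space:]]*#/!{/[[:space:]]swap[[:space:]]/s/^/#/;}' "$fstab"
}
```

Calling `comment_swap_entries` with no argument edits /etc/fstab; passing a path lets you try it on a copy first.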

2.3 Disable SELinux

```shell
$ setenforce 0
$ sed -i "s/^SELINUX=enforcing/SELINUX=disabled/g" /etc/sysconfig/selinux
$ sed -i "s/^SELINUX=enforcing/SELINUX=disabled/g" /etc/selinux/config
$ sed -i "s/^SELINUX=permissive/SELINUX=disabled/g" /etc/sysconfig/selinux
$ sed -i "s/^SELINUX=permissive/SELINUX=disabled/g" /etc/selinux/config
```
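On CentOS 7, /etc/sysconfig/selinux is normally a symlink to /etc/selinux/config, and GNU sed's `-i` replaces a symlink with a regular file unless `--follow-symlinks` is given. A sketch that handles both modes in one substitution and keeps the link intact; `disable_selinux_config` is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Sketch: force SELINUX=disabled in an SELinux config file, whether the
# current mode is enforcing or permissive. --follow-symlinks (GNU sed)
# edits the link target instead of replacing the symlink with a copy.
# disable_selinux_config is a hypothetical helper name.
disable_selinux_config() {
  sed -i --follow-symlinks -E 's/^SELINUX=(enforcing|permissive)/SELINUX=disabled/' "$1"
}
```

With this, `disable_selinux_config /etc/selinux/config` covers all four sed commands above; the change takes full effect after a reboot, while `setenforce 0` switches the running system to permissive immediately.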

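2.4 Set the Hostname, Upgrade the Kernel, and Install Docker CE

Run the init.sh shell script below. It performs four tasks:

- Set the server hostname
- Install the k8s dependencies
- Upgrade the CentOS 7 kernel (to resolve Docker CE compatibility issues)
- Install Docker CE 19.03.6

Run init.sh on every machine, for example:

PS: init.sh only supports CentOS and is safe to run repeatedly.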

```shell
# Run on k8s-master1; the argument to init.sh becomes the hostname (k8s-master1)
$ chmod +x init.sh && ./init.sh k8s-master1
# Reboot the server after init.sh finishes
$ reboot
```

```shell
#!/usr/bin/bash

function Check_linux_system(){
    linux_version=`cat /etc/redhat-release`
    if [[ ${linux_version} =~ "CentOS" ]];then
        echo -e "\033[32;32m System is ${linux_version} \033[0m \n"
    else
        echo -e "\033[32;32m System is not CentOS; this script only supports CentOS \033[0m \n"
        exit 1
    fi
}

function Set_hostname(){
    if [ -n "$HostName" ];then
        grep $HostName /etc/hostname && echo -e "\033[32;32m Hostname already set, skipping the hostname step \033[0m \n" && return
        case $HostName in
        help)
            echo -e "\033[32;32m bash init.sh <hostname> \033[0m \n"
            exit 1
        ;;
        *)
            hostname $HostName
            echo "$HostName" > /etc/hostname
            # 'inet ' (with the space) matches only the IPv4 line, not inet6
            echo "`ifconfig eth0 | grep 'inet ' | awk '{print $2}'` $HostName" >> /etc/hosts
        ;;
        esac
    else
        echo -e "\033[32;32m Empty input; usage: bash init.sh <hostname> \033[0m \n"
        exit 1
    fi
}

function Install_depend_environment(){
    rpm -qa | grep nfs-utils &> /dev/null && echo -e "\033[32;32m Dependencies already installed, skipping this step \033[0m \n" && return
    yum install -y nfs-utils curl yum-utils device-mapper-persistent-data lvm2 net-tools conntrack-tools wget vim ntpdate libseccomp libtool-ltdl telnet
    echo -e "\033[32;32m Upgrading the CentOS 7 kernel to 5.x to resolve Docker CE compatibility issues \033[0m \n"
    rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org && \
    rpm -Uvh http://www.elrepo.org/elrepo-release-7.0-3.el7.elrepo.noarch.rpm && \
    yum --disablerepo=\* --enablerepo=elrepo-kernel repolist && \
    yum --disablerepo=\* --enablerepo=elrepo-kernel install -y kernel-ml.x86_64 && \
    yum remove -y kernel-tools-libs.x86_64 kernel-tools.x86_64 && \
    yum --disablerepo=\* --enablerepo=elrepo-kernel install -y kernel-ml-tools.x86_64 && \
    grub2-set-default 0
    modprobe br_netfilter
cat <<EOF > /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF
    sysctl -p /etc/sysctl.d/k8s.conf
    ls /proc/sys/net/bridge
}

function Install_docker(){
    rpm -qa | grep docker && echo -e "\033[32;32m Docker already installed, skipping the Docker step \033[0m \n" && return
    yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
    yum makecache fast
    yum -y install docker-ce-19.03.6 docker-ce-cli-19.03.6
    # Set the default policy of the FORWARD chain in the iptables filter table to ACCEPT
    sed -i '/ExecStart=/i ExecStartPost=\/sbin\/iptables -P FORWARD ACCEPT' /usr/lib/systemd/system/docker.service
    systemctl enable docker.service
    systemctl start docker.service
    systemctl stop docker.service
    echo '{"registry-mirrors": ["https://4xr1qpsp.mirror.aliyuncs.com"], "log-opts": {"max-size":"500m", "max-file":"3"}}' > /etc/docker/daemon.json
    systemctl daemon-reload
    systemctl start docker
}

# Initialization order
HostName=$1
Check_linux_system && \
Set_hostname && \
Install_depend_environment && \
Install_docker
```
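Later steps run `scp ... root@k8s-master2:` and `root@k8s-master3:`, which only work if every master can resolve the other masters' hostnames, whereas init.sh adds only the local host to /etc/hosts. A sketch of an idempotent helper for adding the remaining entries, assuming the IP-to-hostname mapping implied by the etcd members in this guide (192.168.0.216 is k8s-master1, .217 is k8s-master2, .218 is k8s-master3); `add_host_entry` is hypothetical:

```shell
#!/usr/bin/env bash
# Sketch: append "IP NAME" to a hosts file unless NAME is already mapped.
# add_host_entry is a hypothetical helper; the file defaults to /etc/hosts.
add_host_entry() {
  local ip=$1 name=$2 file=${3:-/etc/hosts}
  # NAME must appear as a whole field: preceded by whitespace and followed
  # by whitespace or end of line (so k8s-master1 does not match k8s-master10).
  if ! grep -qE "[[:space:]]${name}([[:space:]]|\$)" "$file"; then
    echo "${ip} ${name}" >> "$file"
  fi
}
```

Run on each master, `add_host_entry 192.168.0.216 k8s-master1`, `add_host_entry 192.168.0.217 k8s-master2`, and `add_host_entry 192.168.0.218 k8s-master3` leave a single entry per host no matter how often they are repeated.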

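3. Kubernetes Deployment

Deployment order:

1. Create self-signed TLS certificates
2. Deploy the etcd cluster
3. Create the metrics-server certificate
4. Obtain the Kubernetes binaries
5. Create the Node kubeconfig files
6. Configure and run the master components
7. Configure kubelet certificate auto-renewal and create the Node authorization user
8. Configure and run the node components
9. Install the Calico network in IPIP mode
10. Deploy cluster CoreDNS
11. Deploy the cluster monitoring service Metrics Server
12. Deploy the Kubernetes Dashboard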

3.1 Self-Signed TLS Certificates

On k8s-master1, install the certificate tool cfssl and generate the certificates.

```shell
# Create a directory for the SSL certificates
$ mkdir /data/ssl -p

# Download the certificate tools
$ wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64
$ wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64
$ wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64

# Make them executable
$ chmod +x cfssl_linux-amd64 cfssljson_linux-amd64 cfssl-certinfo_linux-amd64

# Move them to /usr/local/bin
$ mv cfssl_linux-amd64 /usr/local/bin/cfssl
$ mv cfssljson_linux-amd64 /usr/local/bin/cfssljson
$ mv cfssl-certinfo_linux-amd64 /usr/local/bin/cfssl-certinfo

# Enter the certificate directory
$ cd /data/ssl/

# Create the certificate.sh script
$ vim certificate.sh
```

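PS: The certificates are valid for 10 years (87600h).

The full script follows; adapt certificate.sh to your environment. In particular, change these `hosts` entries to your master IPs, the `kubernetes` Service IP, and your SLB domain:

```
      "192.168.0.216",
      "192.168.0.217",
      "192.168.0.218",
      "10.10.0.1",
      "lb.ypvip.com.cn",
```

After editing the script, run it:

```shell
$ bash certificate.sh
```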

Contents of certificate.sh:

```shell
cat > ca-config.json <<EOF
{
  "signing": {
    "default": {
      "expiry": "87600h"
    },
    "profiles": {
      "kubernetes": {
         "expiry": "87600h",
         "usages": [
            "signing",
            "key encipherment",
            "server auth",
            "client auth"
        ]
      }
    }
  }
}
EOF

cat > ca-csr.json <<EOF
{
    "CN": "kubernetes",
    "key": {
        "algo": "rsa",
        "size": 2048
    },
    "names": [
        {
            "C": "CN",
            "L": "Beijing",
            "ST": "Beijing",
            "O": "k8s",
            "OU": "System"
        }
    ]
}
EOF

cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

#-----------------------

cat > server-csr.json <<EOF
{
    "CN": "kubernetes",
    "hosts": [
      "127.0.0.1",
      "192.168.0.216",
      "192.168.0.217",
      "192.168.0.218",
      "10.10.0.1",
      "lb.ypvip.com.cn",
      "kubernetes",
      "kubernetes.default",
      "kubernetes.default.svc",
      "kubernetes.default.svc.cluster",
      "kubernetes.default.svc.cluster.local"
    ],
    "key": {
        "algo": "rsa",
        "size": 2048
    },
    "names": [
        {
            "C": "CN",
            "L": "BeiJing",
            "ST": "BeiJing",
            "O": "k8s",
            "OU": "System"
        }
    ]
}
EOF

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes server-csr.json | cfssljson -bare server

#-----------------------

cat > admin-csr.json <<EOF
{
  "CN": "admin",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "BeiJing",
      "ST": "BeiJing",
      "O": "system:masters",
      "OU": "System"
    }
  ]
}
EOF

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare admin

#-----------------------

cat > kube-proxy-csr.json <<EOF
{
  "CN": "system:kube-proxy",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "BeiJing",
      "ST": "BeiJing",
      "O": "k8s",
      "OU": "System"
    }
  ]
}
EOF

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy
```
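The `hosts` list in server-csr.json is the part most likely to differ between environments: besides 127.0.0.1 and the fixed `kubernetes.*` Service names, it carries the master IPs, the first Service IP, and the SLB domain. A sketch that prints the array entries from those inputs, assuming the same fixed names as the script above; `print_san_hosts` is hypothetical and only emits text to paste into the heredoc:

```shell
#!/usr/bin/env bash
# Sketch: print the "hosts" entries for server-csr.json.
# Usage: print_san_hosts SERVICE_IP LB_DOMAIN MASTER_IP...
# print_san_hosts is a hypothetical helper; the fixed names mirror certificate.sh.
print_san_hosts() {
  local svc_ip=$1 lb=$2
  shift 2
  local entries=(127.0.0.1 "$@" "$svc_ip" "$lb"
    kubernetes kubernetes.default kubernetes.default.svc
    kubernetes.default.svc.cluster kubernetes.default.svc.cluster.local)
  local last=$(( ${#entries[@]} - 1 )) i
  for i in "${!entries[@]}"; do
    # Every entry except the last needs a trailing comma in JSON
    if (( i < last )); then
      printf '      "%s",\n' "${entries[i]}"
    else
      printf '      "%s"\n' "${entries[i]}"
    fi
  done
}
```

`print_san_hosts 10.10.0.1 lb.ypvip.com.cn 192.168.0.216 192.168.0.217 192.168.0.218` reproduces the 11 entries used above, in the same order.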

3.2 Deploy the etcd Cluster

Perform the following on k8s-master1, then copy the executables to k8s-master2 and k8s-master3.

Binary package download URL: https://github.com/etcd-io/etcd/releases/download/v3.4.7/etcd-v3.4.7-linux-amd64.tar.gz

```shell
# Create the etcd working directory (downloads and etcd.sh; the data directory
# itself is /var/lib/etcd/default.etcd, as set in etcd.yml)
$ mkdir /data/etcd/

# Create the k8s cluster configuration directories
$ mkdir /opt/kubernetes/{bin,cfg,ssl} -p

# Download the etcd binary package and put the executables in /opt/kubernetes/bin/
$ cd /data/etcd/
$ wget https://github.com/etcd-io/etcd/releases/download/v3.4.7/etcd-v3.4.7-linux-amd64.tar.gz
$ tar zxvf etcd-v3.4.7-linux-amd64.tar.gz
$ cd etcd-v3.4.7-linux-amd64
$ cp -a etcd etcdctl /opt/kubernetes/bin/

# Add /opt/kubernetes/bin to PATH
$ echo 'export PATH=$PATH:/opt/kubernetes/bin' >> /etc/profile
$ source /etc/profile
```

Log in to k8s-master2 and k8s-master3:

```shell
# Create the etcd working directory and the k8s cluster configuration directories
$ mkdir /data/etcd
$ mkdir /opt/kubernetes/{bin,cfg,ssl} -p

# Add /opt/kubernetes/bin to PATH
$ echo 'export PATH=$PATH:/opt/kubernetes/bin' >> /etc/profile
$ source /etc/profile
```

Log in to k8s-master1:

```shell
# Enter the cluster certificate directory
$ cd /data/ssl

# Copy the certificates to /opt/kubernetes/ssl/ on k8s-master1
$ cp ca*pem server*pem /opt/kubernetes/ssl/

# Copy the etcd executables and certificates to k8s-master2 and k8s-master3
$ scp -r /opt/kubernetes/* root@k8s-master2:/opt/kubernetes
$ scp -r /opt/kubernetes/* root@k8s-master3:/opt/kubernetes
```

```shell
$ cd /data/etcd
# Write the script that generates the etcd configuration
$ vim etcd.sh
```

```shell
#!/bin/bash

ETCD_NAME=${1:-"etcd01"}
ETCD_IP=${2:-"127.0.0.1"}
# initial-cluster lists peer URLs, which use port 2380
ETCD_CLUSTER=${3:-"etcd01=https://127.0.0.1:2380"}

cat <<EOF >/opt/kubernetes/cfg/etcd.yml
name: ${ETCD_NAME}
data-dir: /var/lib/etcd/default.etcd
listen-peer-urls: https://${ETCD_IP}:2380
listen-client-urls: https://${ETCD_IP}:2379,https://127.0.0.1:2379

advertise-client-urls: https://${ETCD_IP}:2379
initial-advertise-peer-urls: https://${ETCD_IP}:2380
initial-cluster: ${ETCD_CLUSTER}
initial-cluster-token: etcd-cluster
initial-cluster-state: new

client-transport-security:
  cert-file: /opt/kubernetes/ssl/server.pem
  key-file: /opt/kubernetes/ssl/server-key.pem
  client-cert-auth: false
  trusted-ca-file: /opt/kubernetes/ssl/ca.pem
  auto-tls: false

peer-transport-security:
  cert-file: /opt/kubernetes/ssl/server.pem
  key-file: /opt/kubernetes/ssl/server-key.pem
  client-cert-auth: false
  trusted-ca-file: /opt/kubernetes/ssl/ca.pem
  auto-tls: false

debug: false
logger: zap
log-outputs: [stderr]
EOF

cat <<EOF >/usr/lib/systemd/system/etcd.service
[Unit]
Description=Etcd Server
Documentation=https://github.com/etcd-io/etcd
Conflicts=etcd.service
After=network.target
After=network-online.target
Wants=network-online.target

[Service]
Type=notify
LimitNOFILE=65536
Restart=on-failure
RestartSec=5s
TimeoutStartSec=0
ExecStart=/opt/kubernetes/bin/etcd --config-file=/opt/kubernetes/cfg/etcd.yml

[Install]
WantedBy=multi-user.target
EOF

systemctl daemon-reload
systemctl enable etcd
systemctl restart etcd
```

```shell
# Run etcd.sh to generate the configuration and start etcd
# (on the first member this may block until etcd starts on a second master,
#  because a new cluster needs quorum before etcd reports ready to systemd)
$ chmod +x etcd.sh
$ ./etcd.sh etcd01 192.168.0.216 etcd01=https://192.168.0.216:2380,etcd02=https://192.168.0.217:2380,etcd03=https://192.168.0.218:2380

# Check that etcd started correctly
$ ps -ef | grep etcd
$ netstat -ntplu | grep etcd
tcp        0      0 192.168.0.216:2379      0.0.0.0:*               LISTEN      1558/etcd
tcp        0      0 127.0.0.1:2379          0.0.0.0:*               LISTEN      1558/etcd
tcp        0      0 192.168.0.216:2380      0.0.0.0:*               LISTEN      1558/etcd

# Copy etcd.sh to k8s-master2 and k8s-master3
$ scp /data/etcd/etcd.sh root@k8s-master2:/data/etcd/
$ scp /data/etcd/etcd.sh root@k8s-master3:/data/etcd/
```

Log in to k8s-master2:

```shell
# Run etcd.sh to generate the configuration and start etcd
$ cd /data/etcd
$ chmod +x etcd.sh
$ ./etcd.sh etcd02 192.168.0.217 etcd01=https://192.168.0.216:2380,etcd02=https://192.168.0.217:2380,etcd03=https://192.168.0.218:2380

# Check that etcd started correctly
$ ps -ef | grep etcd
$ netstat -ntplu | grep etcd
```

Log in to k8s-master3:

```shell
# Run etcd.sh to generate the configuration and start etcd
$ cd /data/etcd
$ chmod +x etcd.sh
$ ./etcd.sh etcd03 192.168.0.218 etcd01=https://192.168.0.216:2380,etcd02=https://192.168.0.217:2380,etcd03=https://192.168.0.218:2380

# Check that etcd started correctly
$ ps -ef | grep etcd
$ netstat -ntplu | grep etcd
```

```shell
# Log in to any master and check that the etcd cluster is healthy
$ ETCDCTL_API=3 etcdctl --write-out=table \
--cacert=/opt/kubernetes/ssl/ca.pem --cert=/opt/kubernetes/ssl/server.pem --key=/opt/kubernetes/ssl/server-key.pem \
--endpoints=https://192.168.0.216:2379,https://192.168.0.217:2379,https://192.168.0.218:2379 endpoint health
+----------------------------+--------+-------------+-------+
|          ENDPOINT          | HEALTH |    TOOK     | ERROR |
+----------------------------+--------+-------------+-------+
| https://192.168.0.216:2379 |   true | 38.721248ms |       |
| https://192.168.0.217:2379 |   true | 38.621248ms |       |
| https://192.168.0.218:2379 |   true | 38.821248ms |       |
+----------------------------+--------+-------------+-------+
```
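The long comma-separated arguments above (peer URLs on port 2380 for etcd.sh, client URLs on port 2379 for `--endpoints` and, later, kube-apiserver's `--etcd-servers`) are easy to mistype. A sketch that builds both from a list of master IPs, assuming this guide's etcd01, etcd02, ... naming; both function names are hypothetical:

```shell
#!/usr/bin/env bash
# Sketch: build the comma-separated etcd member lists used in this section.
# Member names follow the etcd01, etcd02, ... convention; helpers are hypothetical.

# Peer URLs (port 2380), e.g. for etcd.sh's third argument / initial-cluster
etcd_initial_cluster() {
  local i=1 ip
  local -a out=()
  for ip in "$@"; do
    out+=("$(printf 'etcd%02d=https://%s:2380' "$i" "$ip")")
    i=$((i + 1))
  done
  local IFS=,
  echo "${out[*]}"
}

# Client URLs (port 2379), e.g. for etcdctl --endpoints or --etcd-servers
etcd_endpoints() {
  local ip
  local -a out=()
  for ip in "$@"; do
    out+=("https://${ip}:2379")
  done
  local IFS=,
  echo "${out[*]}"
}
```

For example, `./etcd.sh etcd02 192.168.0.217 "$(etcd_initial_cluster 192.168.0.216 192.168.0.217 192.168.0.218)"` passes exactly the string typed out above.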

3.3 Create the metrics-server Certificate

Create the certificate used by metrics-server.

Log in to k8s-master1:

```shell
$ cd /data/ssl/
# Note: "CN" must be exactly "system:metrics-server"; later authorization uses this name,
# otherwise requests are rejected as forbidden anonymous access
$ cat > metrics-server-csr.json <<EOF
{
  "CN": "system:metrics-server",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "BeiJing",
      "L": "BeiJing",
      "O": "k8s",
      "OU": "system"
    }
  ]
}
EOF
```

Generate the metrics-server certificate and private key:

```shell
# Generate the certificate (ca-config.json has not been copied to /opt/kubernetes/ssl yet,
# so use the copy in /data/ssl)
$ cfssl gencert -ca=/opt/kubernetes/ssl/ca.pem -ca-key=/opt/kubernetes/ssl/ca-key.pem -config=/data/ssl/ca-config.json -profile=kubernetes metrics-server-csr.json | cfssljson -bare metrics-server

# Copy to /opt/kubernetes/ssl
$ cp metrics-server-key.pem metrics-server.pem /opt/kubernetes/ssl/

# Copy to k8s-master2 and k8s-master3
$ scp metrics-server-key.pem metrics-server.pem root@k8s-master2:/opt/kubernetes/ssl/
$ scp metrics-server-key.pem metrics-server.pem root@k8s-master3:/opt/kubernetes/ssl/
```
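Because a wrong CN only shows up later as an anonymous-access error, it can help to check the CSR before signing. A minimal sketch, assuming `"CN"` sits on its own line as in the JSON above; `csr_cn` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Sketch: print the "CN" value from a cfssl CSR JSON file so it can be
# compared with the name the RBAC bindings expect.
# csr_cn is a hypothetical helper; it assumes "CN" is on its own line.
csr_cn() {
  sed -nE 's/^[[:space:]]*"CN":[[:space:]]*"([^"]*)".*/\1/p' "$1"
}
```

For example, `[ "$(csr_cn metrics-server-csr.json)" = system:metrics-server ] || echo "unexpected CN"`. The subject of the issued certificate can be checked with `openssl x509 -noout -subject -in metrics-server.pem`.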

3.4 Obtain the Kubernetes Binaries

Log in to k8s-master1:

```shell
# v1.18 download page:
# https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG/CHANGELOG-1.18.md

# Create a directory for the k8s binary package
$ mkdir /data/k8s-package
$ cd /data/k8s-package

# Download the v1.18.2 binary package
# (the author also uploaded it to a CDN: https://cdm.yp14.cn/k8s-package/kubernetes-server-v1.18.2-linux-amd64.tar.gz)
$ wget https://dl.k8s.io/v1.18.2/kubernetes-server-linux-amd64.tar.gz
$ tar xf kubernetes-server-linux-amd64.tar.gz
```

The master nodes need:

```
kubectl
kube-scheduler
kube-apiserver
kube-controller-manager
```

The worker nodes need:

```
kubelet
kube-proxy
```

PS: In this guide the master nodes also act as worker nodes, so they need the kubelet and kube-proxy executables as well.

```shell
# Enter the bin directory of the extracted package
$ cd /data/k8s-package/kubernetes/server/bin

# Copy the executables to /opt/kubernetes/bin
$ cp -a kube-apiserver kube-controller-manager kube-scheduler kubectl kubelet kube-proxy /opt/kubernetes/bin

# Copy the executables to /opt/kubernetes/bin on k8s-master2 and k8s-master3
$ scp kube-apiserver kube-controller-manager kube-scheduler kubectl kubelet kube-proxy root@k8s-master2:/opt/kubernetes/bin/
$ scp kube-apiserver kube-controller-manager kube-scheduler kubectl kubelet kube-proxy root@k8s-master3:/opt/kubernetes/bin/
```

3.5 Create the Node kubeconfig Files

On k8s-master1:

- Create the TLS Bootstrapping Token
- Create the kubelet kubeconfig
- Create the kube-proxy kubeconfig

```shell
$ cd /data/ssl/
# Set the KUBE_APISERVER address (the `export KUBE_APISERVER=...` line)
$ vim kubeconfig.sh
```

When setting KUBE_APISERVER in kubeconfig.sh, always include the https:// prefix; otherwise requests default to http://.
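A small guard can catch a missing scheme or port before any kubeconfig is written. A sketch, assuming the value should look like `https://host:port` as in this guide; `check_apiserver_url` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Sketch: reject KUBE_APISERVER values without an explicit https:// scheme
# and port, e.g. "lb.ypvip.com.cn:6443" or "http://lb.ypvip.com.cn:6443".
# check_apiserver_url is a hypothetical helper.
check_apiserver_url() {
  local re='^https://[^/:]+:[0-9]+$'
  [[ $1 =~ $re ]]
}
```

Placed after the `export KUBE_APISERVER=...` line, `check_apiserver_url "$KUBE_APISERVER" || { echo "KUBE_APISERVER must look like https://host:port"; exit 1; }` stops the script early.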

```shell
# Create the TLS Bootstrapping Token
export BOOTSTRAP_TOKEN=$(head -c 16 /dev/urandom | od -An -t x | tr -d ' ')
cat > token.csv <<EOF
${BOOTSTRAP_TOKEN},kubelet-bootstrap,10001,"system:kubelet-bootstrap"
EOF

#----------------------

# Create the kubelet bootstrapping kubeconfig
# Always include https://, otherwise http:// is used by default
export KUBE_APISERVER="https://lb.ypvip.com.cn:6443"

# Set the cluster parameters
kubectl config set-cluster kubernetes \
  --certificate-authority=./ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=bootstrap.kubeconfig

# Set the client authentication parameters
kubectl config set-credentials kubelet-bootstrap \
  --token=${BOOTSTRAP_TOKEN} \
  --kubeconfig=bootstrap.kubeconfig

# Set the context parameters
kubectl config set-context default \
  --cluster=kubernetes \
  --user=kubelet-bootstrap \
  --kubeconfig=bootstrap.kubeconfig

# Use the default context
kubectl config use-context default --kubeconfig=bootstrap.kubeconfig

#----------------------

# Create the kube-proxy kubeconfig file
kubectl config set-cluster kubernetes \
  --certificate-authority=./ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=kube-proxy.kubeconfig

kubectl config set-credentials kube-proxy \
  --client-certificate=./kube-proxy.pem \
  --client-key=./kube-proxy-key.pem \
  --embed-certs=true \
  --kubeconfig=kube-proxy.kubeconfig

kubectl config set-context default \
  --cluster=kubernetes \
  --user=kube-proxy \
  --kubeconfig=kube-proxy.kubeconfig

kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig
```
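The token pipeline above turns 16 random bytes into 32 hex characters, and kube-apiserver's `--token-auth-file` reads one `token,user,uid,"group"` entry per line. A sketch that generates and sanity-checks such a line, assuming the same user, UID, and group as kubeconfig.sh; all three function names are hypothetical:

```shell
#!/usr/bin/env bash
# Sketch: generate a bootstrap token and the matching token.csv line.
# Function names are hypothetical; the values mirror kubeconfig.sh.
gen_bootstrap_token() {
  # 16 random bytes -> 32 lowercase hex characters (spaces/newline removed)
  head -c 16 /dev/urandom | od -An -t x | tr -d ' \n'
}

token_csv_line() {
  printf '%s,kubelet-bootstrap,10001,"system:kubelet-bootstrap"\n' "$1"
}

# Exit 0 when a line has the expected token.csv shape
is_valid_token_csv_line() {
  local re='^[0-9a-f]{32},kubelet-bootstrap,10001,"system:kubelet-bootstrap"$'
  [[ $1 =~ $re ]]
}
```

After running kubeconfig.sh, `is_valid_token_csv_line "$(head -n 1 token.csv)"` should succeed; the same token must also appear in bootstrap.kubeconfig.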

```shell
# Generate the kubeconfig files
$ sh kubeconfig.sh
# The directory now contains files such as:
kubeconfig.sh  kube-proxy-csr.json  kube-proxy.kubeconfig
kube-proxy.csr  kube-proxy-key.pem  kube-proxy.pem  bootstrap.kubeconfig
```

```shell
# Copy the *kubeconfig files to /opt/kubernetes/cfg
$ cp *kubeconfig /opt/kubernetes/cfg

# Copy them to k8s-master2 and k8s-master3
$ scp *kubeconfig root@k8s-master2:/opt/kubernetes/cfg
$ scp *kubeconfig root@k8s-master3:/opt/kubernetes/cfg
```

3.6 Configure and Run the Master Components

Log in to k8s-master1, k8s-master2, and k8s-master3 and run:

```shell
# Create /data/k8s-master for the master configuration scripts
$ mkdir /data/k8s-master

# Create the kube-apiserver log directory
$ mkdir -p /var/log/kubernetes

# Create the kube-apiserver audit log file
$ touch /var/log/kubernetes/k8s-audit.log
```

Log in to k8s-master1:

```shell
$ cd /data/k8s-master
# Create the script that generates the kube-apiserver configuration
$ vim apiserver.sh
```

```shell
#!/bin/bash

MASTER_ADDRESS=${1:-"192.168.0.216"}
ETCD_SERVERS=${2:-"http://127.0.0.1:2379"}

cat <<EOF >/opt/kubernetes/cfg/kube-apiserver
KUBE_APISERVER_OPTS="--logtostderr=false \\
--v=2 \\
--log-dir=/var/log/kubernetes \\
--etcd-servers=${ETCD_SERVERS} \\
--bind-address=0.0.0.0 \\
--secure-port=6443 \\
--advertise-address=${MASTER_ADDRESS} \\
--allow-privileged=true \\
--service-cluster-ip-range=10.10.0.0/16 \\
--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,MutatingAdmissionWebhook,ValidatingAdmissionWebhook,ResourceQuota,NodeRestriction \\
--authorization-mode=RBAC,Node \\
--kubelet-https=true \\
--enable-bootstrap-token-auth=true \\
--token-auth-file=/opt/kubernetes/cfg/token.csv \\
--service-node-port-range=30000-50000 \\
--kubelet-client-certificate=/opt/kubernetes/ssl/server.pem \\
--kubelet-client-key=/opt/kubernetes/ssl/server-key.pem \\
--tls-cert-file=/opt/kubernetes/ssl/server.pem \\
--tls-private-key-file=/opt/kubernetes/ssl/server-key.pem \\
--client-ca-file=/opt/kubernetes/ssl/ca.pem \\
--service-account-key-file=/opt/kubernetes/ssl/ca-key.pem \\
--etcd-cafile=/opt/kubernetes/ssl/ca.pem \\
--etcd-certfile=/opt/kubernetes/ssl/server.pem \\
--etcd-keyfile=/opt/kubernetes/ssl/server-key.pem \\
--requestheader-client-ca-file=/opt/kubernetes/ssl/ca.pem \\
--requestheader-extra-headers-prefix=X-Remote-Extra- \\
--requestheader-group-headers=X-Remote-Group \\
--requestheader-username-headers=X-Remote-User \\
--proxy-client-cert-file=/opt/kubernetes/ssl/metrics-server.pem \\
--proxy-client-key-file=/opt/kubernetes/ssl/metrics-server-key.pem \\
--runtime-config=api/all=true \\
--audit-log-maxage=30 \\
--audit-log-maxbackup=3 \\
--audit-log-maxsize=100 \\
--audit-log-truncate-enabled=true \\
--audit-log-path=/var/log/kubernetes/k8s-audit.log"
EOF

cat <<EOF >/usr/lib/systemd/system/kube-apiserver.service
[Unit]
Description=Kubernetes API Server
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=-/opt/kubernetes/cfg/kube-apiserver
ExecStart=/opt/kubernetes/bin/kube-apiserver \$KUBE_APISERVER_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF

systemctl daemon-reload
systemctl enable kube-apiserver
systemctl restart kube-apiserver
```

```shell
# Create the script that generates the kube-controller-manager configuration
$ vim controller-manager.sh
```

```shell
#!/bin/bash

MASTER_ADDRESS=${1:-"127.0.0.1"}

cat <<EOF >/opt/kubernetes/cfg/kube-controller-manager
KUBE_CONTROLLER_MANAGER_OPTS="--logtostderr=true \\
--v=2 \\
--master=${MASTER_ADDRESS}:8080 \\
--leader-elect=true \\
--bind-address=0.0.0.0 \\
--service-cluster-ip-range=10.10.0.0/16 \\
--cluster-name=kubernetes \\
--cluster-signing-cert-file=/opt/kubernetes/ssl/ca.pem \\
--cluster-signing-key-file=/opt/kubernetes/ssl/ca-key.pem \\
--service-account-private-key-file=/opt/kubernetes/ssl/ca-key.pem \\
--experimental-cluster-signing-duration=87600h0m0s \\
--feature-gates=RotateKubeletServerCertificate=true \\
--feature-gates=RotateKubeletClientCertificate=true \\
--allocate-node-cidrs=true \\
--cluster-cidr=10.20.0.0/16 \\
--root-ca-file=/opt/kubernetes/ssl/ca.pem"
EOF

cat <<EOF >/usr/lib/systemd/system/kube-controller-manager.service
[Unit]
Description=Kubernetes Controller Manager
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=-/opt/kubernetes/cfg/kube-controller-manager
ExecStart=/opt/kubernetes/bin/kube-controller-manager \$KUBE_CONTROLLER_MANAGER_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF

systemctl daemon-reload
systemctl enable kube-controller-manager
systemctl restart kube-controller-manager
```

```shell
# Create the script that generates the kube-scheduler configuration
$ vim scheduler.sh
```

```shell
#!/bin/bash

MASTER_ADDRESS=${1:-"127.0.0.1"}

cat <<EOF >/opt/kubernetes/cfg/kube-scheduler
KUBE_SCHEDULER_OPTS="--logtostderr=true \\
--v=2 \\
--master=${MASTER_ADDRESS}:8080 \\
--address=0.0.0.0 \\
--leader-elect"
EOF

cat <<EOF >/usr/lib/systemd/system/kube-scheduler.service
[Unit]
Description=Kubernetes Scheduler
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=-/opt/kubernetes/cfg/kube-scheduler
ExecStart=/opt/kubernetes/bin/kube-scheduler \$KUBE_SCHEDULER_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF

systemctl daemon-reload
systemctl enable kube-scheduler
systemctl restart kube-scheduler
```

```shell
# Make the scripts executable
$ chmod +x *.sh
$ cp /data/ssl/token.csv /opt/kubernetes/cfg/

# Copy token.csv and the master scripts to k8s-master2 and k8s-master3
$ scp /data/ssl/token.csv root@k8s-master2:/opt/kubernetes/cfg
$ scp /data/ssl/token.csv root@k8s-master3:/opt/kubernetes/cfg
$ scp apiserver.sh controller-manager.sh scheduler.sh root@k8s-master2:/data/k8s-master
$ scp apiserver.sh controller-manager.sh scheduler.sh root@k8s-master3:/data/k8s-master

# Generate the master configuration and start the services
$ ./apiserver.sh 192.168.0.216 https://192.168.0.216:2379,https://192.168.0.217:2379,https://192.168.0.218:2379
$ ./controller-manager.sh 127.0.0.1
$ ./scheduler.sh 127.0.0.1

# Check that the three master services are running
$ ps -ef | grep kube
$ netstat -ntpl | grep kube-
```

Log in to k8s-master2:

```shell
$ cd /data/k8s-master

# Generate the master configuration and start the services
$ ./apiserver.sh 192.168.0.217 https://192.168.0.216:2379,https://192.168.0.217:2379,https://192.168.0.218:2379
$ ./controller-manager.sh 127.0.0.1
$ ./scheduler.sh 127.0.0.1

# Check that the three master services are running
$ ps -ef | grep kube
$ netstat -ntpl | grep kube-
```

Log in to k8s-master3:

```shell
$ cd /data/k8s-master

# Generate the master configuration and start the services
$ ./apiserver.sh 192.168.0.218 https://192.168.0.216:2379,https://192.168.0.217:2379,https://192.168.0.218:2379
$ ./controller-manager.sh 127.0.0.1
$ ./scheduler.sh 127.0.0.1

# Check that the three master services are running
$ ps -ef | grep kube
$ netstat -ntpl | grep kube-

# Log in to any master and check the cluster health
# (if this fails with the error below, see the troubleshooting steps that follow)
$ kubectl get cs
NAME                 STATUS    MESSAGE             ERROR
scheduler            Healthy   ok
controller-manager   Healthy   ok
etcd-2               Healthy   {"health":"true"}
etcd-1               Healthy   {"health":"true"}
etcd-0               Healthy   {"health":"true"}
```

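Troubleshooting

```shell
# Querying the cluster status fails with the following error
$ kubectl get cs
error: no configuration has been provided, try setting KUBERNETES_MASTER environment variable
```

There are two ways to fix this:

1. Temporarily downgrade kubectl to 1.17, generate an administrator kubeconfig and save it as ~/.kube/config, then switch kubectl back to 1.18; kubectl then works normally.
2. Set the KUBERNETES_MASTER environment variable to the API server address directly (not recommended).

The first method, generating an administrator kubeconfig, is shown here.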

```shell
# Downgrade kubectl to 1.17.5
$ mv /opt/kubernetes/bin/kubectl /opt/kubernetes/bin/kubectl-1.18.2
$ wget https://cdm.yp14.cn/k8s-package/k8s-1.17-bin/kubectl -O /opt/kubernetes/bin/kubectl
$ chmod +x /opt/kubernetes/bin/kubectl

# Create a directory for user files
$ mkdir -p /root/yaml/create-user
$ cd /root/yaml/create-user

# Copy the file the script depends on
$ cp /data/ssl/ca-config.json /opt/kubernetes/ssl/

# Create the user-generation script
$ vim create-user-kubeconfig.sh
```

```shell
#!/bin/bash

# Note: pass your API Server address as KUBE_APISERVER
KUBE_APISERVER=$1
USER=$2
USER_SA=system:serviceaccount:default:${USER}
Authorization=$3
USAGE="USAGE: create-user.sh <api_server> <username> <clusterrole authorization>\nExample: https://lb.ypvip.com.cn:6443 brand"
CSR=`pwd`/user-csr.json
SSL_PATH="/opt/kubernetes/ssl"
USER_SSL_PATH="/root/yaml/create-user"
SSL_FILES=(ca-key.pem ca.pem ca-config.json)
CERT_FILES=(${USER}.csr $USER-key.pem ${USER}.pem)

if [[ $KUBE_APISERVER == "" ]]; then
   echo -e $USAGE
   exit 1
fi
if [[ $USER == "" ]];then
    echo -e $USAGE
    exit 1
fi
if [[ $Authorization == "" ]];then
    echo -e $USAGE
    exit 1
fi

# Create the user's CSR file
function createCSR(){
cat>$CSR<<EOF
{
  "CN": "USER",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "BeiJing",
      "L": "BeiJing",
      "O": "k8s",
      "OU": "System"
    }
  ]
}
EOF
# Replace the user name in the CSR file
sed -i "s/USER/$USER_SA/g" $CSR
}

function ifExist(){
if [ ! -f "$SSL_PATH/$1" ]; then
    echo "$SSL_PATH/$1 not found."
    exit 1
fi
}

function ifClusterrole(){
kubectl get clusterrole ${Authorization} &> /dev/null
if (( $? != 0 ));then
   echo "clusterrole ${Authorization} does not exist"
   exit 1
fi
}

# Check that the clusterrole exists
ifClusterrole

# Check that the certificate files exist
for f in ${SSL_FILES[@]};
do
    echo "Check if ssl file $f exist..."
    ifExist $f
    echo "OK"
done

echo "Create CSR file..."
createCSR
echo "$CSR created"
echo "Create user's certificates and keys..."
cd $USER_SSL_PATH
cfssl gencert -ca=${SSL_PATH}/ca.pem -ca-key=${SSL_PATH}/ca-key.pem -config=${SSL_PATH}/ca-config.json -profile=kubernetes $CSR| cfssljson -bare $USER_SA

# Create the service account
kubectl create sa ${USER} -n default

# Set the cluster parameters
kubectl config set-cluster kubernetes \
--certificate-authority=${SSL_PATH}/ca.pem \
--embed-certs=true \
--server=${KUBE_APISERVER} \
--kubeconfig=${USER}.kubeconfig

# Set the client authentication parameters
kubectl config set-credentials ${USER_SA} \
--client-certificate=${USER_SSL_PATH}/${USER_SA}.pem \
--client-key=${USER_SSL_PATH}/${USER_SA}-key.pem \
--embed-certs=true \
--kubeconfig=${USER}.kubeconfig

# Set the context parameters
kubectl config set-context kubernetes \
--cluster=kubernetes \
--user=${USER_SA} \
--namespace=default \
--kubeconfig=${USER}.kubeconfig

# Use the default context
kubectl config use-context kubernetes --kubeconfig=${USER}.kubeconfig

# Create a namespace
# kubectl create ns $USER

# Bind the role
# kubectl create rolebinding ${USER}-admin-binding --clusterrole=admin --user=$USER --namespace=$USER --serviceaccount=$USER:default
kubectl create clusterrolebinding ${USER}-binding --clusterrole=${Authorization} --user=${USER_SA}

# kubectl config get-contexts
echo "Congratulations!"
echo "Your kubeconfig file is ${USER}.kubeconfig"
```

```shell
# Make the script executable
$ chmod +x create-user-kubeconfig.sh

# Show the script help
$ ./create-user-kubeconfig.sh --help
USAGE: create-user.sh <api_server> <username> <clusterrole authorization>
Example: https://lb.ypvip.com.cn:6443 brand

# Create an admin user bound to the cluster-admin ClusterRole
$ ./create-user-kubeconfig.sh https://lb.ypvip.com.cn:6443 admin cluster-admin

# Show the admin user's token
$ kubectl describe secrets -n default `kubectl get secrets -n default | grep admin-token | awk '{print $1}'` | grep 'token:'

# Add the token shown above at the bottom of the generated admin.kubeconfig (the users entry)
$ vim admin.kubeconfig
```

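```shell
# Create the .kube directory and install the admin kubeconfig
$ mkdir -p ~/.kube
$ cp admin.kubeconfig ~/.kube/config

# Switch kubectl from 1.17.5 back to 1.18.2; the cluster status can now be queried normally
$ mv /opt/kubernetes/bin/kubectl-1.18.2 /opt/kubernetes/bin/kubectl
$ chmod +x /opt/kubernetes/bin/kubectl
$ kubectl get cs
NAME                 STATUS    MESSAGE             ERROR
scheduler            Healthy   ok
controller-manager   Healthy   ok
etcd-0               Healthy   {"health":"true"}
etcd-1               Healthy   {"health":"true"}
etcd-2               Healthy   {"health":"true"}
```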

3.7 Configure kubelet Certificate Auto-Renewal and Create the Node Authorization User

Log in to k8s-master1.

Create the Node authorization user kubelet-bootstrap:

```shell
$ kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --user=kubelet-bootstrap
```

Create the ClusterRole used to automatically approve the related CSR requests:

```shell
# Create a directory for the certificate rotation configuration
$ mkdir -p ~/yaml/kubelet-certificate-rotating
$ cd ~/yaml/kubelet-certificate-rotating
$ vim tls-instructs-csr.yaml
```

```yaml
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: system:certificates.k8s.io:certificatesigningrequests:selfnodeserver
rules:
- apiGroups: ["certificates.k8s.io"]
  resources: ["certificatesigningrequests/selfnodeserver"]
  verbs: ["create"]
```

```shell
# Deploy
$ kubectl apply -f tls-instructs-csr.yaml
```

Automatically approve the CSR that the kubelet-bootstrap user submits when first requesting a certificate through TLS bootstrapping:

```shell
$ kubectl create clusterrolebinding node-client-auto-approve-csr --clusterrole=system:certificates.k8s.io:certificatesigningrequests:nodeclient --user=kubelet-bootstrap
```

Automatically approve CSRs from users in the system:nodes group that renew the certificate kubelet uses to talk to the apiserver:

```shell
$ kubectl create clusterrolebinding node-client-auto-renew-crt --clusterrole=system:certificates.k8s.io:certificatesigningrequests:selfnodeclient --group=system:nodes
```

Automatically approve CSRs from users in the system:nodes group that renew the certificate for the kubelet API on port 10250:

```shell
$ kubectl create clusterrolebinding node-server-auto-renew-crt --clusterrole=system:certificates.k8s.io:certificatesigningrequests:selfnodeserver --group=system:nodes
```

3.8 配置Node组件并运行

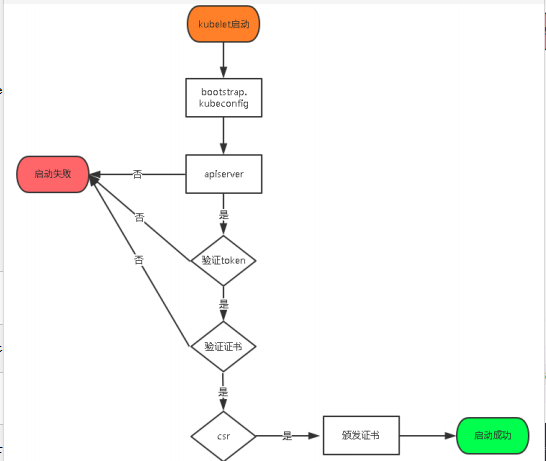

首先我们先了解下 kubelet 中 kubelet.kubeconfig 配置是如何生成?

kubelet.kubeconfig 配置是通过 TLS Bootstrapping 机制生成,下面是生成的流程图。

Log in to k8s-master1, k8s-master2, and k8s-master3 and run:

```shell
# Create the directory for the node configuration scripts
$ mkdir /data/k8s-node
```

Log in to k8s-master1:

```shell
# Create the script that generates the kubelet configuration
$ cd /data/k8s-node
$ vim kubelet.sh
```

```shell
#!/bin/bash

DNS_SERVER_IP=${1:-"10.10.0.2"}
HOSTNAME=${2:-"`hostname`"}
CLUETERDOMAIN=${3:-"cluster.local"}

cat <<EOF >/opt/kubernetes/cfg/kubelet.conf
KUBELET_OPTS="--logtostderr=true \\
--v=2 \\
--hostname-override=${HOSTNAME} \\
--kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig \\
--bootstrap-kubeconfig=/opt/kubernetes/cfg/bootstrap.kubeconfig \\
--config=/opt/kubernetes/cfg/kubelet-config.yml \\
--cert-dir=/opt/kubernetes/ssl \\
--network-plugin=cni \\
--cni-conf-dir=/etc/cni/net.d \\
--cni-bin-dir=/opt/cni/bin \\
--pod-infra-container-image=yangpeng2468/google_containers-pause-amd64:3.2"
EOF

cat <<EOF >/opt/kubernetes/cfg/kubelet-config.yml
kind: KubeletConfiguration # object kind
apiVersion: kubelet.config.k8s.io/v1beta1 # API version
address: 0.0.0.0 # listen address
port: 10250 # kubelet port
readOnlyPort: 10255 # kubelet read-only port
cgroupDriver: cgroupfs # must match the driver shown by docker info
clusterDNS:
  - ${DNS_SERVER_IP}
clusterDomain: ${CLUETERDOMAIN} # cluster domain
failSwapOn: false # do not refuse to start if swap is enabled

# Authentication
authentication:
  anonymous:
    enabled: false
  webhook:
    cacheTTL: 2m0s
    enabled: true
  x509:
    clientCAFile: /opt/kubernetes/ssl/ca.pem

# Authorization
authorization:
  mode: Webhook
  webhook:
    cacheAuthorizedTTL: 5m0s
    cacheUnauthorizedTTL: 30s

# Node resource reservation (hard eviction thresholds)
evictionHard:
  imagefs.available: 15%
  memory.available: 1G
  nodefs.available: 10%
  nodefs.inodesFree: 5%
evictionPressureTransitionPeriod: 5m0s

# Image garbage collection policy
imageGCHighThresholdPercent: 85
imageGCLowThresholdPercent: 80
imageMinimumGCAge: 2m0s

# Certificate rotation
rotateCertificates: true # rotate the kubelet client certificate
featureGates:
  RotateKubeletServerCertificate: true
  RotateKubeletClientCertificate: true

maxOpenFiles: 1000000
maxPods: 110
EOF

cat <<EOF >/usr/lib/systemd/system/kubelet.service
[Unit]
Description=Kubernetes Kubelet
After=docker.service
Requires=docker.service

[Service]
EnvironmentFile=-/opt/kubernetes/cfg/kubelet.conf
ExecStart=/opt/kubernetes/bin/kubelet \$KUBELET_OPTS
Restart=on-failure
KillMode=process

[Install]
WantedBy=multi-user.target
EOF

systemctl daemon-reload
systemctl enable kubelet
systemctl restart kubelet
```

```bash
# Create the script that generates the kube-proxy configuration
$ vim proxy.sh
#!/bin/bash

HOSTNAME=${1:-"`hostname`"}

cat <<EOF >/opt/kubernetes/cfg/kube-proxy.conf
KUBE_PROXY_OPTS="--logtostderr=true \\
--v=2 \\
--config=/opt/kubernetes/cfg/kube-proxy-config.yml"
EOF

cat <<EOF >/opt/kubernetes/cfg/kube-proxy-config.yml
kind: KubeProxyConfiguration
apiVersion: kubeproxy.config.k8s.io/v1alpha1
bindAddress: 0.0.0.0                 # listen address
metricsBindAddress: 0.0.0.0:10249    # metrics endpoint; monitoring systems scrape it here
clientConnection:
  kubeconfig: /opt/kubernetes/cfg/kube-proxy.kubeconfig   # kubeconfig used to reach the apiserver
hostnameOverride: ${HOSTNAME}        # node name registered in k8s; must be unique
clusterCIDR: 10.20.0.0/16            # Pod IP range
mode: iptables                       # use iptables mode
# To use IPVS mode instead:
#mode: ipvs
#ipvs:
#  scheduler: "rr"
#iptables:
#  masqueradeAll: true
EOF

cat <<EOF >/usr/lib/systemd/system/kube-proxy.service
[Unit]
Description=Kubernetes Proxy
After=network.target

[Service]
EnvironmentFile=-/opt/kubernetes/cfg/kube-proxy.conf
ExecStart=/opt/kubernetes/bin/kube-proxy \$KUBE_PROXY_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF

systemctl daemon-reload
systemctl enable kube-proxy
systemctl restart kube-proxy
```

```bash
# Make the scripts executable and generate the node configuration
$ chmod +x kubelet.sh proxy.sh
$ ./kubelet.sh 10.10.0.2 k8s-master1 cluster.local
$ ./proxy.sh k8s-master1

# Check that the services are listening
$ netstat -ntpl | egrep "kubelet|kube-proxy"

# Copy kubelet.sh and proxy.sh to k8s-master2 and k8s-master3
$ scp kubelet.sh proxy.sh root@k8s-master2:/data/k8s-node
$ scp kubelet.sh proxy.sh root@k8s-master3:/data/k8s-node
```

Log in to k8s-master2 and run the following:

```bash
$ cd /data/k8s-node

# Generate the node configuration
$ ./kubelet.sh 10.10.0.2 k8s-master2 cluster.local
$ ./proxy.sh k8s-master2

# Check that the services are listening
$ netstat -ntpl | egrep "kubelet|kube-proxy"
```

Log in to k8s-master3 and run the following:

```bash
$ cd /data/k8s-node

# Generate the node configuration
$ ./kubelet.sh 10.10.0.2 k8s-master3 cluster.local
$ ./proxy.sh k8s-master3

# Check that the services are listening
$ netstat -ntpl | egrep "kubelet|kube-proxy"
```

```bash
# Log in to any master and check that the nodes have registered
$ kubectl get node
NAME          STATUS     ROLES    AGE    VERSION
k8s-master1   NotReady   <none>   4d4h   v1.18.2
k8s-master2   NotReady   <none>   4d4h   v1.18.2
k8s-master3   NotReady   <none>   4d4h   v1.18.2
```

The nodes above are NotReady because no network plugin has been installed yet. The network plugin is installed below.
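When scripting the installation, you may want to wait until the network plugin has made every node Ready. The following is a minimal sketch. It assumes the default `kubectl get node --no-headers` column layout (NAME STATUS ROLES AGE VERSION). The function name `count_not_ready` is hypothetical and not part of kubectl.

```bash
#!/usr/bin/env bash
# count_not_ready: hypothetical helper; reads "kubectl get node --no-headers"
# output on stdin and prints how many nodes are not Ready.
count_not_ready() {
  # Column 2 is STATUS; "Ready,SchedulingDisabled" still counts as Ready here.
  awk '$2 !~ /^Ready/ { n++ } END { print n + 0 }'
}

# Live usage (needs kubectl access), e.g. to wait after installing Calico:
#   until [ "$(kubectl get node --no-headers | count_not_ready)" = 0 ]; do sleep 5; done
# Example with captured output:
printf '%s\n' \
  'k8s-master1   NotReady   <none>   4d4h   v1.18.2' \
  'k8s-master2   Ready      <none>   4d4h   v1.18.2' | count_not_ready
```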

```bash
# Grant the user "kubernetes" (the identity the apiserver presents to kubelet)
# access to the kubelet API, which kubectl logs/exec rely on
$ mkdir -p ~/yaml
$ vim ~/yaml/apiserver-to-kubelet-rbac.yml
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: kubelet-api-admin
subjects:
- kind: User
  name: kubernetes
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: ClusterRole
  name: system:kubelet-api-admin
  apiGroup: rbac.authorization.k8s.io

# Apply
$ kubectl apply -f ~/yaml/apiserver-to-kubelet-rbac.yml
```

3.9 Install the Calico network (IPIP mode)

Log in to k8s-master1 and run the following:

Download the Calico v3.14.0 YAML manifest:

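```bash
# Directory for the Calico manifests
$ mkdir -p ~/yaml/calico
$ cd ~/yaml/calico

# Note: this manifest uses the self-hosted etcd cluster as the Calico datastore.
# This URL serves the latest Calico release; to get exactly v3.14.0, download the
# manifest from the v3.14 documentation instead.
$ curl https://docs.projectcalico.org/manifests/calico-etcd.yaml -O
```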

Make the following changes in calico-etcd.yaml:

Secret

```yaml
apiVersion: v1
kind: Secret
type: Opaque
metadata:
  name: calico-etcd-secrets
  namespace: kube-system
data:
  etcd-key: (cat /opt/kubernetes/ssl/server-key.pem | base64 -w 0)   # replace with the output of this command
  etcd-cert: (cat /opt/kubernetes/ssl/server.pem | base64 -w 0)      # replace with the output of this command
  etcd-ca: (cat /opt/kubernetes/ssl/ca.pem | base64 -w 0)            # replace with the output of this command
```
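Copying the three base64 strings by hand is error-prone. The sketch below prints the whole `data:` section from the certificate paths used above, so it can be pasted into the Secret. It assumes GNU coreutils `base64`, which provides `-w 0` for single-line output. The function name `calico_etcd_secret_data` is hypothetical.

```bash
#!/usr/bin/env bash
# calico_etcd_secret_data KEY CERT CA: hypothetical helper that prints the data:
# section of the calico-etcd-secrets Secret, each file base64-encoded on one line.
calico_etcd_secret_data() {
  local key=$1 cert=$2 ca=$3 f
  for f in "$key" "$cert" "$ca"; do
    [ -r "$f" ] || { echo "cannot read $f" >&2; return 1; }
  done
  echo "data:"
  echo "  etcd-key: $(base64 -w 0 < "$key")"
  echo "  etcd-cert: $(base64 -w 0 < "$cert")"
  echo "  etcd-ca: $(base64 -w 0 < "$ca")"
}

# Usage with the paths from this guide:
#   calico_etcd_secret_data /opt/kubernetes/ssl/server-key.pem \
#     /opt/kubernetes/ssl/server.pem /opt/kubernetes/ssl/ca.pem
```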

ConfigMap

```yaml
kind: ConfigMap
apiVersion: v1
metadata:
  name: calico-config
  namespace: kube-system
data:
  etcd_endpoints: "https://192.168.0.216:2379,https://192.168.0.217:2379,https://192.168.0.218:2379"
  etcd_ca: "/calico-secrets/etcd-ca"
  etcd_cert: "/calico-secrets/etcd-cert"
  etcd_key: "/calico-secrets/etcd-key"
```
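The `etcd_endpoints` value is just the etcd member IPs joined with commas, using the `https` scheme and client port 2379. Here is a small sketch that builds it from a list of IPs. The helper name `build_etcd_endpoints` is hypothetical, and the hard-coded 2379 assumes the default etcd client port used in this guide.

```bash
#!/usr/bin/env bash
# build_etcd_endpoints IP...: hypothetical helper that joins etcd member IPs into
# the comma-separated etcd_endpoints value (client port 2379 assumed).
build_etcd_endpoints() {
  local ip out=""
  for ip in "$@"; do
    # ${out:+,} adds a comma separator only after the first entry
    out+="${out:+,}https://${ip}:2379"
  done
  printf '%s\n' "$out"
}

build_etcd_endpoints 192.168.0.216 192.168.0.217 192.168.0.218
# prints https://192.168.0.216:2379,https://192.168.0.217:2379,https://192.168.0.218:2379
```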

The main ConfigMap parameters are:

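- `etcd_endpoints`: Calico stores the network topology and state in etcd, and this parameter gives the etcd addresses. You can reuse the etcd cluster of the K8S masters or run a separate one.
- `calico_backend`: the Calico backend, `bird` by default.
- `cni_network_config`: the CNI-compliant network configuration. `type=calico` tells kubelet to look for the `calico` executable in CNI_PATH (`/opt/cni/bin` by default) to set up container networking; container IP addresses are allocated by the `calico-ipam` plugin referenced in the same configuration.
- If etcd has TLS authentication enabled, the corresponding CA, cert and key files must also be specified (`etcd_ca`, `etcd_cert`, `etcd_key`).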

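Change the Pod IP range (the default is 192.168.0.0/16) to the same value as `clusterCIDR` in kube-proxy-config.yml. If the entry is commented out in the downloaded manifest, uncomment it first: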

```yaml
- name: CALICO_IPV4POOL_CIDR
  value: "10.20.0.0/16"
```
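The Pod CIDR must not overlap the Service CIDR or the host network. The Calico default 192.168.0.0/16 would collide with the 192.168.0.x hosts used here. Below is a pure-bash sketch of an overlap check. The Service CIDR 10.10.0.0/16 is an assumption inferred from the 10.10.0.x service IPs in this guide; use the value of kube-apiserver's `--service-cluster-ip-range`. The function names are hypothetical.

```bash
#!/usr/bin/env bash
# ip_to_int A.B.C.D: convert a dotted IPv4 address to a 32-bit integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# cidrs_overlap NET1/P1 NET2/P2: exit 0 if the two IPv4 CIDRs share any address.
# Two CIDRs overlap exactly when they agree on the first min(P1, P2) bits.
cidrs_overlap() {
  local n1=${1%/*} p1=${1#*/} n2=${2%/*} p2=${2#*/}
  local p=$(( p1 < p2 ? p1 : p2 ))
  local mask=$(( (0xFFFFFFFF << (32 - p)) & 0xFFFFFFFF ))
  (( ($(ip_to_int "$n1") & mask) == ($(ip_to_int "$n2") & mask) ))
}

# Pod CIDR from this guide vs. the ASSUMED Service CIDR 10.10.0.0/16
if cidrs_overlap 10.20.0.0/16 10.10.0.0/16; then
  echo "Pod CIDR overlaps Service CIDR"
else
  echo "no overlap"
fi
```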

Configure the NIC autodetection rule

Add the interface-detection rules to the `env` of the calico-node DaemonSet:

```yaml
# IPv4 interface autodetection rule
- name: IP_AUTODETECTION_METHOD
  value: "interface=eth.*"
# IPv6 interface autodetection rule
- name: IP6_AUTODETECTION_METHOD
  value: "interface=eth.*"
```

Calico mode

```yaml
# Enable IPIP
- name: CALICO_IPV4POOL_IPIP
  value: "Always"
```

Calico supports two network modes: BGP and IPIP.

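- In IPIP mode, set `CALICO_IPV4POOL_IPIP="Always"`. IPIP builds IP-in-IP tunnels between the nodes and connects their Pod networks through them. With IPIP enabled, Calico creates a virtual interface named `tunl0` on every node.
- In BGP mode (no encapsulation), set `CALICO_IPV4POOL_IPIP="Never"` ("Off" is accepted as a synonym).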
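Several calico-node `env` entries (CALICO_IPV4POOL_CIDR, CALICO_IPV4POOL_IPIP, the autodetection rules) are edited by hand, so it helps to print their final values before applying the manifest. The sketch below is a plain text filter, not a YAML parser. It assumes each `- name:` line is immediately followed by its `value:` line, as in the snippets in this section. The name `manifest_env_value` is hypothetical.

```bash
#!/usr/bin/env bash
# manifest_env_value FILE NAME: print the value of every env entry called NAME.
# Assumes "- name: NAME" is directly followed by "value: ..." on the next line;
# commented-out entries ("# - name: ...") are ignored.
manifest_env_value() {
  awk -v want="$2" '
    found && $1 == "value:" {
      v = $0
      sub(/^[ \t]*value:[ \t]*/, "", v)
      gsub(/"/, "", v)
      print v
    }
    { found = ($1 == "-" && $2 == "name:" && $3 == want) }
  ' "$1"
}

# Usage:
#   manifest_env_value calico-etcd.yaml CALICO_IPV4POOL_IPIP   # expect Always
#   manifest_env_value calico-etcd.yaml CALICO_IPV4POOL_CIDR   # expect 10.20.0.0/16
```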

Troubleshooting

Error: [ERROR][8] startup/startup.go 146: failed to query kubeadm's config map error=Get https://10.10.0.1:443/api/v1/namespaces/kube-system/configmaps/kubeadm-config?timeout=2s: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)

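Cause: the node cannot reach the apiserver. Set the apiserver address and port explicitly in the Calico manifest. If they are not set, calico-node falls back to the in-cluster `kubernetes` Service address and port 443 (10.10.0.1:443 in the error above). The relevant fields are KUBERNETES_SERVICE_HOST, KUBERNETES_SERVICE_PORT and KUBERNETES_SERVICE_PORT_HTTPS.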

Fix:

Add these environment variables to the `env` of the calico-node DaemonSet:

```yaml
- name: KUBERNETES_SERVICE_HOST
  value: "lb.ypvip.com.cn"
- name: KUBERNETES_SERVICE_PORT
  value: "6443"
- name: KUBERNETES_SERVICE_PORT_HTTPS
  value: "6443"
```
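Before redeploying calico-node, you can confirm from a node that the endpoint set in KUBERNETES_SERVICE_HOST/PORT accepts TCP connections. This example uses lb.ypvip.com.cn:6443, the SLB address from this guide. The sketch relies on bash's `/dev/tcp` redirection and coreutils `timeout`. It only tests the TCP connection, not TLS or authentication. `check_tcp` is a hypothetical name.

```bash
#!/usr/bin/env bash
# check_tcp HOST PORT [SECONDS]: hypothetical helper; exit 0 if a TCP connection
# to HOST:PORT succeeds within the timeout (default 3 seconds).
check_tcp() {
  local host=$1 port=$2 secs=${3:-3}
  # Inside the child bash, $0 is HOST and $1 is PORT; opening fd 3 connects.
  timeout "$secs" bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$host" "$port" 2>/dev/null
}

# Usage on a node:
#   check_tcp lb.ypvip.com.cn 6443 && echo reachable || echo unreachable
```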

After editing calico-etcd.yaml, deploy it:

```bash
# Deploy
$ kubectl apply -f calico-etcd.yaml

# Check the calico pods
$ kubectl get pods -n kube-system | grep calico

# Check the nodes; they are Ready now
$ kubectl get node
NAME          STATUS   ROLES    AGE    VERSION
k8s-master1   Ready    <none>   4d4h   v1.18.2
k8s-master2   Ready    <none>   4d4h   v1.18.2
k8s-master3   Ready    <none>   4d4h   v1.18.2
```

3.10 Deploy CoreDNS

Log in to k8s-master1 and run the following:

deploy.sh is a convenience script that generates the CoreDNS YAML.

```bash
# Install the jq dependency
$ yum install jq -y

$ cd ~/yaml
$ mkdir coredns
$ cd coredns

# Clone the CoreDNS deployment project
$ git clone https://github.com/coredns/deployment.git
$ cd deployment/kubernetes
```

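By default, deploy.sh takes CLUSTER_DNS_IP from the ClusterIP of the existing kube-dns Service. Because kube-dns is not deployed in this cluster, set the IP by hand. Use the clusterDNS address passed to kubelet.sh (10.10.0.2). Edit deployment/kubernetes/deploy.sh (`vim deploy.sh` in the current directory) as follows. Alternatively, deploy.sh accepts the IP through its `-i` option.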

```bash
if [[ -z $CLUSTER_DNS_IP ]]; then
  # Default IP to kube-dns IP
  # CLUSTER_DNS_IP=$(kubectl get service --namespace kube-system kube-dns -o jsonpath="{.spec.clusterIP}")
  CLUSTER_DNS_IP=10.10.0.2
```

```bash
# Preview the generated manifest (nothing is deployed yet)
$ ./deploy.sh

# Deploy
$ ./deploy.sh | kubectl apply -f -

# Check CoreDNS (its Service is named kube-dns)
$ kubectl get svc,pods -n kube-system | grep -E "coredns|kube-dns"
```
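The CLUSTER_DNS_IP hard-coded in deploy.sh must equal `clusterDNS` in the kubelet-config.yml generated by kubelet.sh. Otherwise Pods get a DNS server address that nothing serves. The sketch below extracts the first `clusterDNS` entry. It assumes the list layout written by kubelet.sh (`clusterDNS:` followed by `  - <ip>`). The function name is hypothetical.

```bash
#!/usr/bin/env bash
# kubelet_cluster_dns FILE: hypothetical helper; print the first clusterDNS entry
# of a kubelet config written as "clusterDNS:" followed by "  - <ip>" lines.
kubelet_cluster_dns() {
  awk '
    /^clusterDNS:/ { inlist = 1; next }
    inlist && $1 == "-" { print $2; exit }
    inlist && $1 != "-" { exit }
  ' "$1"
}

# Usage on a node, comparing with the IP set in deploy.sh:
#   [ "$(kubelet_cluster_dns /opt/kubernetes/cfg/kubelet-config.yml)" = 10.10.0.2 ] \
#     && echo "clusterDNS matches CLUSTER_DNS_IP"
```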

Test CoreDNS resolution

```bash
# Create a busybox Pod
$ vim busybox.yaml
apiVersion: v1
kind: Pod
metadata:
  name: busybox
  namespace: default
spec:
  containers:
  - name: busybox
    image: busybox:1.28.4
    command:
      - sleep
      - "3600"
    imagePullPolicy: IfNotPresent
  restartPolicy: Always

# Deploy
$ kubectl apply -f busybox.yaml

# Test resolution; output like the following means DNS works
$ kubectl exec -i busybox -n default -- nslookup kubernetes
Server:    10.10.0.2
Address 1: 10.10.0.2 kube-dns.kube-system.svc.cluster.local

Name:      kubernetes
Address 1: 10.10.0.1 kubernetes.default.svc.cluster.local
```
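To turn this check into a scripted assertion, you can extract the address that follows the `Name:` line and compare it with the kubernetes Service IP (10.10.0.1). The sketch below handles the busybox 1.28 output shown above ("Address 1: IP name"). It also assumes that newer busybox builds print "Address: IP" instead. `resolved_address` is a hypothetical name.

```bash
#!/usr/bin/env bash
# resolved_address: hypothetical helper; reads busybox nslookup output on stdin and
# prints the first address after the "Name:" line (the Server block is skipped).
resolved_address() {
  awk '
    /^Name:/ { seen = 1; next }
    # "Address 1: 10.10.0.1 name" -> field 3; "Address: 10.10.0.1" -> field 2
    seen && /^Address/ { print ($1 == "Address:" ? $2 : $3); exit }
  '
}

# Usage:
#   [ "$(kubectl exec -i busybox -n default -- nslookup kubernetes | resolved_address)" = 10.10.0.1 ]
```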

3.11 Deploy the cluster monitoring service Metrics Server

Log in to k8s-master1 and run the following:

```bash
$ cd ~/yaml

# Clone the v0.3.6 tag
$ git clone https://github.com/kubernetes-sigs/metrics-server.git -b v0.3.6
$ cd metrics-server/deploy/1.8+
```

Only metrics-server-deployment.yaml needs to be changed:

```diff
# Differences before and after the change
$ git diff metrics-server-deployment.yaml
diff --git a/deploy/1.8+/metrics-server-deployment.yaml b/deploy/1.8+/metrics-server-deployment.yaml
index 2393e75..2139e4a 100644
--- a/deploy/1.8+/metrics-server-deployment.yaml
+++ b/deploy/1.8+/metrics-server-deployment.yaml
@@ -29,8 +29,19 @@ spec:
         emptyDir: {}
       containers:
       - name: metrics-server
-        image: k8s.gcr.io/metrics-server-amd64:v0.3.6
-        imagePullPolicy: Always
+        image: yangpeng2468/metrics-server-amd64:v0.3.6
+        imagePullPolicy: IfNotPresent
+        resources:
+          limits:
+            cpu: 400m
+            memory: 1024Mi
+          requests:
+            cpu: 50m
+            memory: 50Mi
+        command:
+        - /metrics-server
+        - --kubelet-insecure-tls
+        - --kubelet-preferred-address-types=InternalIP
         volumeMounts:
         - name: tmp-dir
           mountPath: /tmp
```

```bash
# Deploy
$ kubectl apply -f .

# Verify
$ kubectl top node
NAME          CPU(cores)   CPU%   MEMORY(bytes)   MEMORY%
k8s-master1   72m          7%     1002Mi          53%
k8s-master2   121m         3%     1852Mi          12%
k8s-master3   300m         3%     1852Mi          20%

# Memory units: Mi = 1024*1024 bytes, M = 1000*1000 bytes
# CPU units: 1 core = 1000m, i.e. 250m = 1/4 core
```
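The unit rules above can be turned into a small converter, for example to process `kubectl top` figures in a script. The sketch below handles only integer quantities with the suffixes discussed here: `m` for CPU, and Ki/Mi/Gi or K/M/G for memory. `cpu_to_cores` and `mem_to_bytes` are hypothetical names.

```bash
#!/usr/bin/env bash
# cpu_to_cores QTY: "250m" -> 0.250, "2" -> 2.000 (1 core = 1000m).
cpu_to_cores() {
  local v=$1
  if [[ $v == *m ]]; then
    awk -v m="${v%m}" 'BEGIN { printf "%.3f\n", m / 1000 }'
  else
    awk -v c="$v" 'BEGIN { printf "%.3f\n", c }'
  fi
}

# mem_to_bytes QTY: integer quantities only; binary suffixes (Ki, Mi, Gi) use
# powers of 1024, decimal suffixes (K, M, G) use powers of 1000.
mem_to_bytes() {
  local n=${1%%[A-Za-z]*} unit=${1##*[0-9]}
  case $unit in
    Ki) echo $(( n * 1024 )) ;;
    Mi) echo $(( n * 1024 * 1024 )) ;;
    Gi) echo $(( n * 1024 * 1024 * 1024 )) ;;
    K)  echo $(( n * 1000 )) ;;
    M)  echo $(( n * 1000 * 1000 )) ;;
    G)  echo $(( n * 1000 * 1000 * 1000 )) ;;
    "") echo "$n" ;;
    *)  echo "unsupported unit: $unit" >&2; return 1 ;;
  esac
}

cpu_to_cores 250m      # 0.250
mem_to_bytes 1002Mi    # 1050673152
mem_to_bytes 1002M     # 1002000000
```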

3.12 Deploy the Kubernetes Dashboard

To deploy the Kubernetes Dashboard, see the article "Deploying K8S Dashboard 2.0 with Ingress-Nginx as the access entry point".

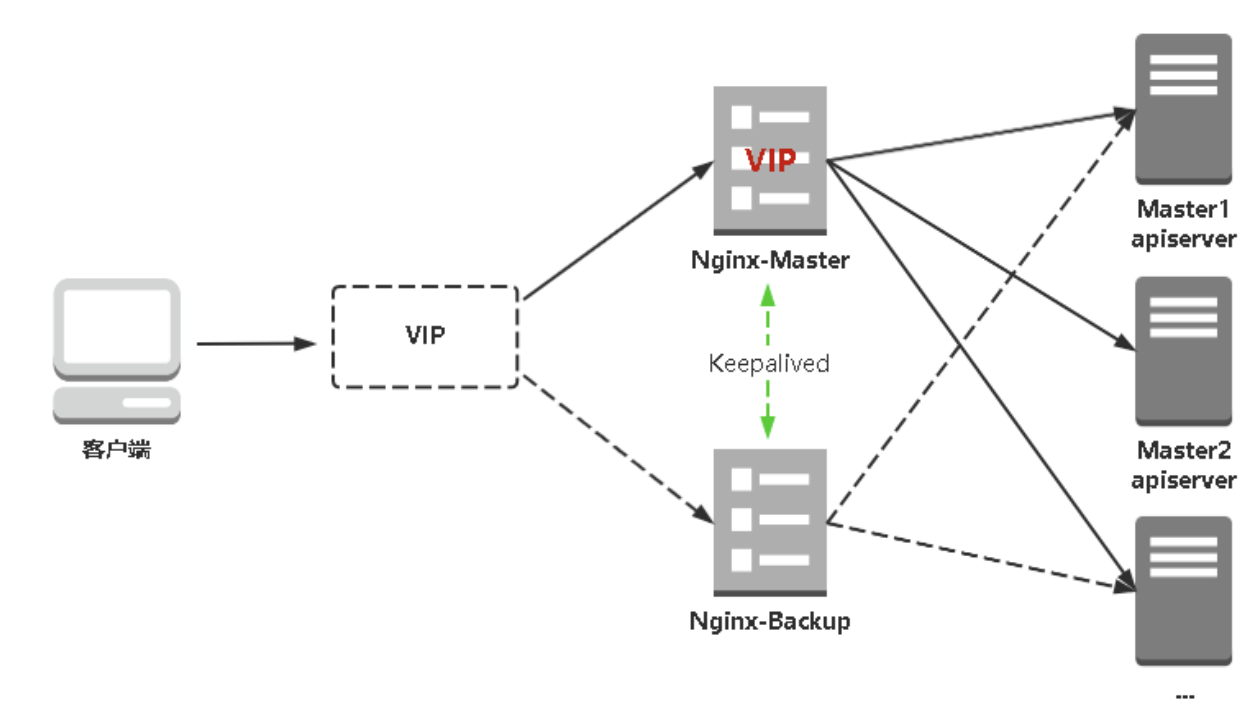

3.13 Deploy an Nginx load balancer

kube-apiserver high-availability architecture diagram:

Install the packages (master and backup):

```bash
yum install epel-release -y
yum install nginx keepalived -y
```

Nginx configuration file (identical on master and backup):

```bash
cat > /etc/nginx/nginx.conf << "EOF"
user nginx;
worker_processes auto;
error_log /var/log/nginx/error.log;
pid /run/nginx.pid;

include /usr/share/nginx/modules/*.conf;

events {
    worker_connections 1024;
}

# Layer-4 (TCP) load balancing for the apiservers of the two Masters
stream {

    log_format  main  '$remote_addr $upstream_addr - [$time_local] $status $upstream_bytes_sent';

    access_log  /var/log/nginx/k8s-access.log  main;

    upstream k8s-apiserver {
       server 192.168.31.71:6443;   # Master1 APISERVER IP:PORT
       server 192.168.31.74:6443;   # Master2 APISERVER IP:PORT
    }

    server {
       listen 6443;
       proxy_pass k8s-apiserver;
    }
}

http {
    log_format  main  '$remote_addr - $remote_user [$time_local] "$request" '
                      '$status $body_bytes_sent "$http_referer" '
                      '"$http_user_agent" "$http_x_forwarded_for"';

    access_log  /var/log/nginx/access.log  main;

    sendfile            on;
    tcp_nopush          on;
    tcp_nodelay         on;
    keepalive_timeout   65;
    types_hash_max_size 2048;

    include             /etc/nginx/mime.types;
    default_type        application/octet-stream;

    server {
        listen       80 default_server;
        server_name  _;

        location / {
        }
    }
}
EOF
```

keepalived configuration file (Nginx master):

```bash
cat > /etc/keepalived/keepalived.conf << EOF
global_defs {
   notification_email {
     acassen@firewall.loc
     failover@firewall.loc
     sysadmin@firewall.loc
   }
   notification_email_from Alexandre.Cassen@firewall.loc
   smtp_server 127.0.0.1
   smtp_connect_timeout 30
   router_id NGINX_MASTER
}

vrrp_script check_nginx {
    script "/etc/keepalived/check_nginx.sh"
}

vrrp_instance VI_1 {
    state MASTER
    interface ens33
    virtual_router_id 51   # VRRP router ID of this instance; each instance has a unique one
    priority 100           # priority; the backup server uses 90
    advert_int 1           # VRRP advertisement (heartbeat) interval, 1 second by default
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    # Virtual IP
    virtual_ipaddress {
        192.168.31.88/24
    }
    track_script {
        check_nginx
    }
}
EOF
```

Script that checks the nginx status:

```bash
cat > /etc/keepalived/check_nginx.sh << "EOF"
#!/bin/bash
count=$(ps -ef |grep nginx |egrep -cv "grep|$$")

if [ "$count" -eq 0 ];then
    exit 1
else
    exit 0
fi
EOF
chmod +x /etc/keepalived/check_nginx.sh
```
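The `ps | grep | egrep -v` pipeline counts any process whose command line merely contains "nginx". A stricter variant uses `pgrep -x`, which matches the process name exactly. Below is a minimal sketch. It takes the process name as an argument, so the same check could also serve for haproxy in 3.14. The function name is hypothetical.

```bash
#!/usr/bin/env bash
# process_running NAME: hypothetical helper; exit 0 if at least one process is
# named exactly NAME (pgrep -x matches the whole process name).
process_running() {
  pgrep -x "$1" > /dev/null
}

# Possible body for /etc/keepalived/check_nginx.sh:
#   process_running nginx || exit 1
#   exit 0
```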

keepalived configuration file (Nginx backup):

```bash
cat > /etc/keepalived/keepalived.conf << EOF
global_defs {
   notification_email {
     acassen@firewall.loc
     failover@firewall.loc
     sysadmin@firewall.loc
   }
   notification_email_from Alexandre.Cassen@firewall.loc
   smtp_server 127.0.0.1
   smtp_connect_timeout 30
   router_id NGINX_BACKUP
}

vrrp_script check_nginx {
    script "/etc/keepalived/check_nginx.sh"
}

vrrp_instance VI_1 {
    state BACKUP
    interface ens33
    virtual_router_id 51   # VRRP router ID of this instance; each instance has a unique one
    priority 90
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.31.88/24
    }
    track_script {
        check_nginx
    }
}
EOF
```

Test access through the load balancer

Stop one of the masters, then query the K8s version through the VIP with curl:

```bash
curl -k https://192.168.31.88:6443/version
{
  "major": "1",
  "minor": "18",
  "gitVersion": "v1.18.3",
  "gitCommit": "2e7996e3e2712684bc73f0dec0200d64eec7fe40",
  "gitTreeState": "clean",
  "buildDate": "2020-05-20T12:43:34Z",
  "goVersion": "go1.13.9",
  "compiler": "gc",
  "platform": "linux/amd64"
}
```

Point all Worker Nodes at the LB VIP

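Master2 and the load balancer have been added, but the cluster was scaled out from a single-Master setup. All Node components therefore still connect to Master1. Unless they are switched to the VIP behind the load balancer, the Master remains a single point of failure.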

The next step is therefore to change the configuration files of all Node components from 192.168.31.71 to 192.168.31.88 (the VIP).

Run on every Worker Node listed above:

```bash
sed -i 's#192.168.31.71:6443#192.168.31.88:6443#' /opt/kubernetes/cfg/*
systemctl restart kubelet
systemctl restart kube-proxy
```
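The `sed -i` above rewrites every file in /opt/kubernetes/cfg in place and keeps no backup. The sketch below is more cautious. It only touches regular files that actually contain the old address, keeps a `.bak` copy of each one, and escapes the dots so they match literally. It assumes GNU sed. The function name is hypothetical, and the addresses in the usage line are the ones from this section.

```bash
#!/usr/bin/env bash
# switch_apiserver OLD NEW DIR: hypothetical helper; in every regular file directly
# under DIR that contains OLD, back it up to FILE.bak and replace OLD with NEW.
switch_apiserver() {
  local old=$1 new=$2 dir=$3 f old_re
  # Escape dots so "192.168.31.71" is matched literally by sed
  old_re=$(printf '%s' "$old" | sed 's/[.]/\\./g')
  for f in "$dir"/*; do
    [ -f "$f" ] || continue
    case $f in *.bak) continue ;; esac
    if grep -qF "$old" "$f"; then
      cp -p "$f" "$f.bak"
      sed -i "s#${old_re}#${new}#g" "$f"
      echo "updated $f"
    fi
  done
}

# Usage on each Worker Node, then restart kubelet and kube-proxy:
#   switch_apiserver 192.168.31.71:6443 192.168.31.88:6443 /opt/kubernetes/cfg
```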

3.14 HAProxy load balancing

Install HAProxy and KeepAlived with yum on all Master nodes:

```bash
yum install keepalived haproxy -y
```

Configure HAProxy on all Master nodes. The HAProxy configuration is identical on every Master; see the HAProxy documentation for details:

```bash
# mkdir /etc/haproxy
[root@k8s-master01 etc]# vim /etc/haproxy/haproxy.cfg
global
  maxconn  2000
  ulimit-n  16384
  log  127.0.0.1 local0 err
  stats timeout 30s

defaults
  log global
  mode  http
  option  httplog
  timeout connect 5000
  timeout client  50000
  timeout server  50000
  timeout http-request 15s
  timeout http-keep-alive 15s

frontend monitor-in
  bind *:33305
  mode http
  option httplog
  monitor-uri /monitor

frontend k8s-master
  bind 0.0.0.0:16443
  bind 127.0.0.1:16443
  mode tcp
  option tcplog
  tcp-request inspect-delay 5s
  default_backend k8s-master

backend k8s-master
  mode tcp
  option tcplog
  option tcp-check
  balance roundrobin
  default-server inter 10s downinter 5s rise 2 fall 2 slowstart 60s maxconn 250 maxqueue 256 weight 100
  server k8s-master01  192.168.0.201:6443  check
  server k8s-master02  192.168.0.202:6443  check
  server k8s-master03  192.168.0.203:6443  check
```

Configure KeepAlived on all Master nodes. The configuration differs from node to node, so keep the copies apart.

Adjust each node's IP and network interface (the `interface` parameter).

Master01 configuration:

```bash
mkdir /etc/keepalived

[root@k8s-master01 ~]# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived
global_defs {
    router_id LVS_DEVEL
    script_user root
    enable_script_security
}
vrrp_script chk_apiserver {
    script "/etc/keepalived/check_apiserver.sh"
    interval 5
    weight -5
    fall 2
    rise 1
}
vrrp_instance VI_1 {
    state MASTER
    interface ens192
    mcast_src_ip 192.168.0.201
    virtual_router_id 51
    priority 101
    advert_int 2
    authentication {
        auth_type PASS
        auth_pass K8SHA_KA_AUTH
    }
    virtual_ipaddress {
        192.168.0.236
    }
    track_script {
       chk_apiserver
    }
}
```

Master02 configuration:

```bash
! Configuration File for keepalived
global_defs {
    router_id LVS_DEVEL
    script_user root
    enable_script_security
}
vrrp_script chk_apiserver {
    script "/etc/keepalived/check_apiserver.sh"
    interval 5
    weight -5
    fall 2
    rise 1
}
vrrp_instance VI_1 {
    state BACKUP
    interface ens192
    mcast_src_ip 192.168.0.202
    virtual_router_id 51
    priority 100
    advert_int 2
    authentication {
        auth_type PASS
        auth_pass K8SHA_KA_AUTH
    }
    virtual_ipaddress {
        192.168.0.236
    }
    track_script {
       chk_apiserver
    }
}
```

Master03 configuration:

```bash
! Configuration File for keepalived
global_defs {
    router_id LVS_DEVEL
    script_user root
    enable_script_security
}
vrrp_script chk_apiserver {
    script "/etc/keepalived/check_apiserver.sh"
    interval 5
    weight -5
    fall 2
    rise 1
}
vrrp_instance VI_1 {
    state BACKUP
    interface ens192
    mcast_src_ip 192.168.0.203
    virtual_router_id 51
    priority 100
    advert_int 2
    authentication {
        auth_type PASS
        auth_pass K8SHA_KA_AUTH
    }
    virtual_ipaddress {
        192.168.0.236
    }
    track_script {
       chk_apiserver
    }
}
```
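Here is how these priorities produce a failover. While `chk_apiserver` is failing (after `fall 2`), keepalived applies the negative `weight -5` to the node's priority. On Master01 that gives 101 - 5 = 96, which is lower than the 100 of Master02/Master03, so a backup takes over the VIP 192.168.0.236. In addition, check_apiserver.sh stops keepalived outright when haproxy is gone. The sketch below only illustrates that arithmetic: a positive weight is added while the script succeeds, a negative one while it fails. The function is illustrative and not part of keepalived.

```bash
#!/usr/bin/env bash
# effective_priority BASE WEIGHT FAILING: illustrative only; the priority keepalived
# advertises when a vrrp_script with WEIGHT is tracked (FAILING is 1 or 0).
effective_priority() {
  local base=$1 weight=$2 failing=$3
  if (( failing && weight < 0 )); then
    echo $(( base + weight ))      # negative weight applies on failure
  elif (( !failing && weight > 0 )); then
    echo $(( base + weight ))      # positive weight applies on success
  else
    echo "$base"
  fi
}

effective_priority 101 -5 1   # 96: Master01 with a failing check
effective_priority 100 -5 0   # 100: a healthy backup, which now wins the election
```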

Create the KeepAlived health-check script:

```bash
# cat /etc/keepalived/check_apiserver.sh
#!/bin/bash

err=0
for k in $(seq 1 3)
do
    check_code=$(pgrep haproxy)
    if [[ $check_code == "" ]]; then
        err=$(expr $err + 1)
        sleep 1
        continue
    else
        err=0
        break
    fi
done

if [[ $err != "0" ]]; then
    echo "systemctl stop keepalived"
    /usr/bin/systemctl stop keepalived
    exit 1
else
    exit 0
fi

chmod +x /etc/keepalived/check_apiserver.sh
```

Start haproxy and keepalived:

```bash
# systemctl daemon-reload
# systemctl enable --now haproxy
# systemctl enable --now keepalived
```

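https://mp.weixin.qq.com/s/Hyf0OD7KPeoBJw8gxTQsYA Kubernetes v1.18.2 binary high-availability deployment (revised edition)

https://mp.weixin.qq.com/s/az3OgOOTi17YpH0pmVDM-g High-availability installation of a K8s 1.20.x cluster

https://mp.weixin.qq.com/s/VYtyTU9_Dw9M5oHtvRfseA Deploying a complete high-availability Kubernetes cluster (part 1)

https://mp.weixin.qq.com/s/F9BC6GALHiWBK5dmUnqepA Deploying a complete high-availability Kubernetes cluster (part 2)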