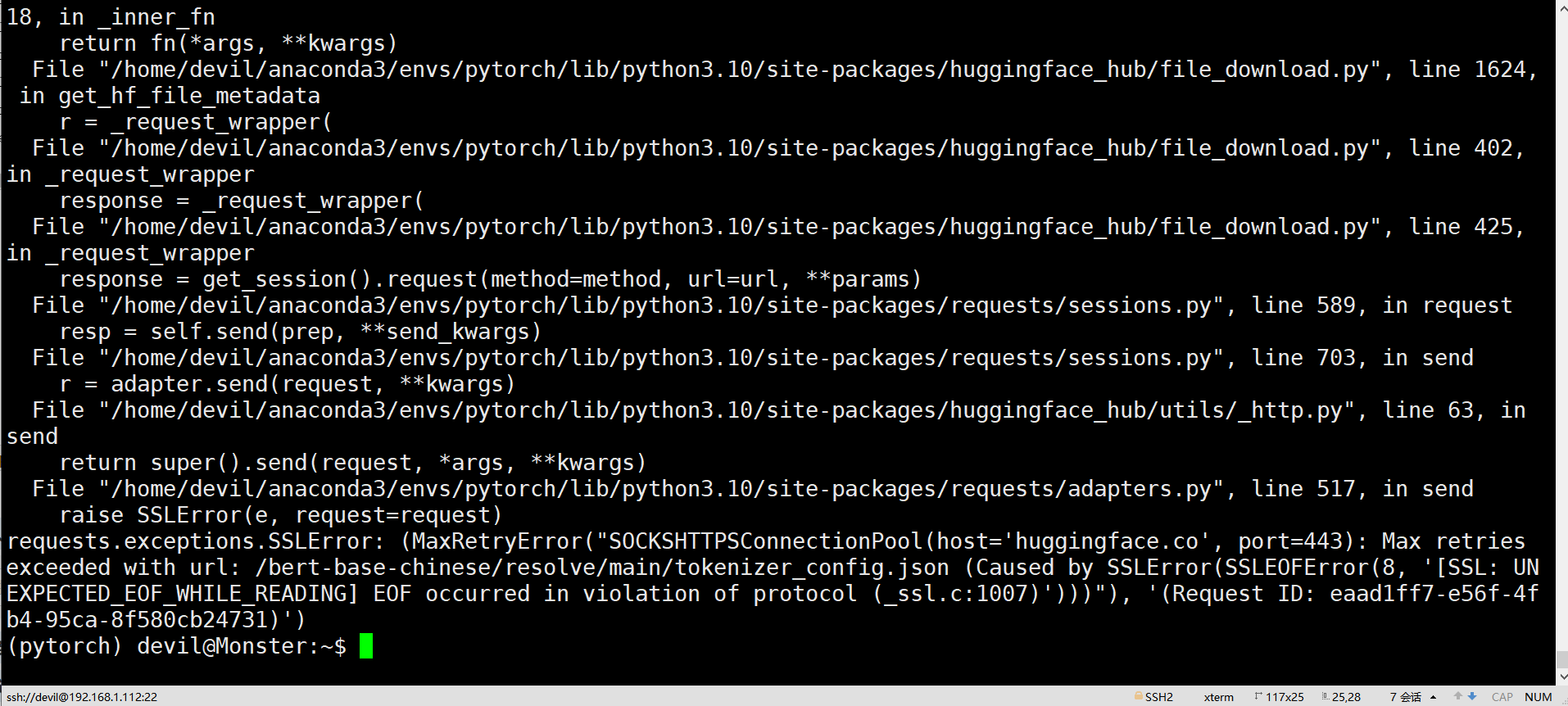

Error when connecting to huggingface.co: (MaxRetryError("SOCKSHTTPSConnectionPool(host='huggingface.co', port=443) (SSLEOFError(8, '[SSL: UNEXPECTED_EOF_WHILE_READING] EOF occurred in violation of protocol (_ssl.c:1007)

References:

https://blog.csdn.net/shizheng_Li/article/details/132942548

https://blog.csdn.net/weixin_42209440/article/details/129999962

============================

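As leading AI companies have adopted huggingface.co, it has become an indispensable tool for anyone working in AI. Hugging Face is often described as the GitHub of open-source machine learning: individuals and companies can share their AI models on huggingface.co, and as the number of open-source models hosted there keeps growing, the site has effectively become the model repository that AI researchers cannot do without.

For various reasons, however, huggingface.co cannot be accessed directly from mainland China. Most readers probably already know how to reach the site in a browser: anyone who can get to Google can get to huggingface.co the same way. Opening the website is one thing, but loading models hosted on huggingface.co from the code of a project is much harder to get working. The usual workaround is to open the model's URL in a browser, download the files by hand, and then change the project code to point at the local copy. This works, but downloading the files one by one is tedious. This post gives another approach, one that lets the huggingface.co calls in the code work as written.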

For manually downloading models from huggingface.co, see the following two links:

https://blog.csdn.net/shizheng_Li/article/details/132942548

https://blog.csdn.net/weixin_42209440/article/details/129999962

Test code, before using the manually downloaded files:

    import torch
    from transformers import BertModel, BertTokenizer, BertConfig
    # import the required classes first
    tokenizer = BertTokenizer.from_pretrained('bert-base-chinese')
    config = BertConfig.from_pretrained('bert-base-chinese')
    config.update({'output_hidden_states': True})  # change the model config directly
    model = BertModel.from_pretrained("bert-base-chinese", config=config)

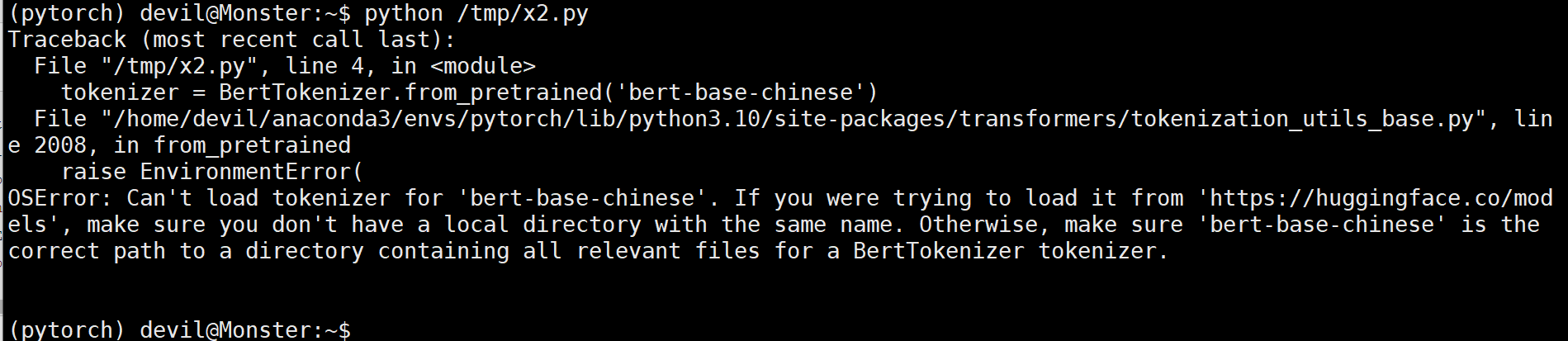

Error:

    Traceback (most recent call last):
      File "/tmp/x2.py", line 4, in <module>
        tokenizer = BertTokenizer.from_pretrained('bert-base-chinese')
      File "/home/devil/anaconda3/envs/pytorch/lib/python3.10/site-packages/transformers/tokenization_utils_base.py", line 2008, in from_pretrained
        raise EnvironmentError(
    OSError: Can't load tokenizer for 'bert-base-chinese'. If you were trying to load it from 'https://huggingface.co/models', make sure you don't have a local directory with the same name. Otherwise, make sure 'bert-base-chinese' is the correct path to a directory containing all relevant files for a BertTokenizer tokenizer.

Manually download the model from huggingface.co and modify the test code:

    import torch
    from transformers import BertModel, BertTokenizer, BertConfig
    # local directory holding the manually downloaded model files
    dir_path = "/home/devil/.cache/huggingface/hub/models--bert-base-chinese/snapshots/8d2a91f91cc38c96bb8b4556ba70c392f8d5ee55/"
    tokenizer = BertTokenizer.from_pretrained(dir_path)
    config = BertConfig.from_pretrained(dir_path)
    config.update({'output_hidden_states': True})  # change the model config directly
    model = BertModel.from_pretrained(dir_path, config=config)  # pass the updated config so the change takes effect

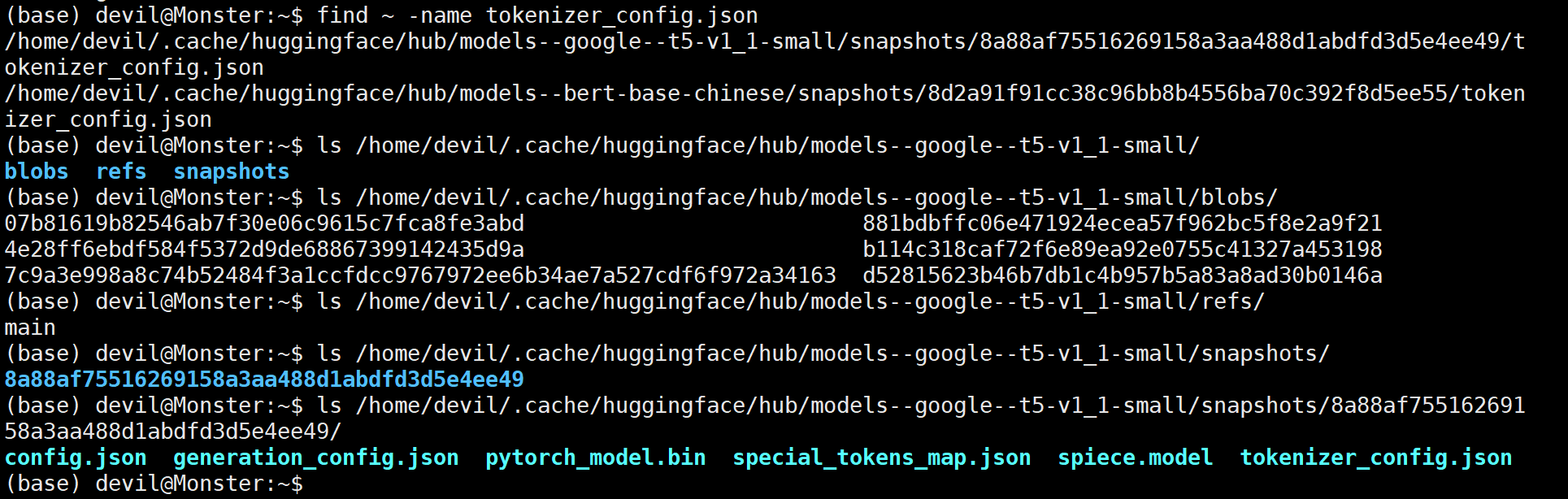

This runs successfully. The screenshot shows how to locate the Hugging Face model files:
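The local directory used above follows a recognizable pattern: `models--<name>/snapshots/<revision>/` under the hub cache. As a minimal sketch, assuming that layout (and assuming that a `/` in a repository id is stored as `--`), the snapshot directories can be listed with the standard library alone. The helper name `find_snapshot_dirs` is made up for this illustration and is not part of any Hugging Face package.

```python
from pathlib import Path


def find_snapshot_dirs(repo_id: str, cache_root: Path) -> list[Path]:
    """Return the snapshot directories cached for repo_id, sorted by name.

    Assumed layout: <cache_root>/models--<repo>/snapshots/<revision>/,
    with any "/" in the repo id replaced by "--".
    """
    repo_dir = cache_root / ("models--" + repo_id.replace("/", "--"))
    snapshots = repo_dir / "snapshots"
    if not snapshots.is_dir():
        return []
    return sorted(p for p in snapshots.iterdir() if p.is_dir())


if __name__ == "__main__":
    # Default cache location, matching the path used in this post.
    default_root = Path.home() / ".cache" / "huggingface" / "hub"
    for path in find_snapshot_dirs("bert-base-chinese", default_root):
        print(path)
```

Any directory it prints can be passed to `from_pretrained` in place of the model name, as in the modified test code.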

--------------------------------------------------

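Python code cannot reach huggingface.co to download models for the same reason the site cannot be opened in a browser, which is also the reason Google is unreachable. The natural idea is to solve the problem with a proxy tool:

From earlier experience, a little configuration in the terminal should be all that is needed. For details see: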

https://www.cnblogs.com/devilmaycry812839668/p/17870951.html

That is, set the following in the shell:

export all_proxy=socks5://192.168.1.100:1080/

export ALL_PROXY=socks5://192.168.1.100:1080/

Even with these settings, however, the call still fails:

    Traceback (most recent call last):
      File "/home/devil/anaconda3/envs/pytorch/lib/python3.10/site-packages/urllib3/connectionpool.py", line 670, in urlopen
        httplib_response = self._make_request(
      File "/home/devil/anaconda3/envs/pytorch/lib/python3.10/site-packages/urllib3/connectionpool.py", line 381, in _make_request
        self._validate_conn(conn)
      File "/home/devil/anaconda3/envs/pytorch/lib/python3.10/site-packages/urllib3/connectionpool.py", line 978, in _validate_conn
        conn.connect()
      File "/home/devil/anaconda3/envs/pytorch/lib/python3.10/site-packages/urllib3/connection.py", line 362, in connect
        self.sock = ssl_wrap_socket(
      File "/home/devil/anaconda3/envs/pytorch/lib/python3.10/site-packages/urllib3/util/ssl_.py", line 386, in ssl_wrap_socket
        return context.wrap_socket(sock, server_hostname=server_hostname)
      File "/home/devil/anaconda3/envs/pytorch/lib/python3.10/ssl.py", line 513, in wrap_socket
        return self.sslsocket_class._create(
      File "/home/devil/anaconda3/envs/pytorch/lib/python3.10/ssl.py", line 1104, in _create
        self.do_handshake()
      File "/home/devil/anaconda3/envs/pytorch/lib/python3.10/ssl.py", line 1375, in do_handshake
        self._sslobj.do_handshake()
    ssl.SSLEOFError: [SSL: UNEXPECTED_EOF_WHILE_READING] EOF occurred in violation of protocol (_ssl.c:1007)

    During handling of the above exception, another exception occurred:

    Traceback (most recent call last):
      File "/home/devil/anaconda3/envs/pytorch/lib/python3.10/site-packages/requests/adapters.py", line 486, in send
        resp = conn.urlopen(
      File "/home/devil/anaconda3/envs/pytorch/lib/python3.10/site-packages/urllib3/connectionpool.py", line 726, in urlopen
        retries = retries.increment(
      File "/home/devil/anaconda3/envs/pytorch/lib/python3.10/site-packages/urllib3/util/retry.py", line 446, in increment
        raise MaxRetryError(_pool, url, error or ResponseError(cause))
    urllib3.exceptions.MaxRetryError: SOCKSHTTPSConnectionPool(host='huggingface.co', port=443): Max retries exceeded with url: /bert-base-chinese/resolve/main/tokenizer_config.json (Caused by SSLError(SSLEOFError(8, '[SSL: UNEXPECTED_EOF_WHILE_READING] EOF occurred in violation of protocol (_ssl.c:1007)')))

    During handling of the above exception, another exception occurred:

    Traceback (most recent call last):
      File "/tmp/x2.py", line 4, in <module>
        tokenizer = BertTokenizer.from_pretrained('bert-base-chinese')
      File "/home/devil/anaconda3/envs/pytorch/lib/python3.10/site-packages/transformers/tokenization_utils_base.py", line 1947, in from_pretrained
        resolved_config_file = cached_file(
      File "/home/devil/anaconda3/envs/pytorch/lib/python3.10/site-packages/transformers/utils/hub.py", line 430, in cached_file
        resolved_file = hf_hub_download(
      File "/home/devil/anaconda3/envs/pytorch/lib/python3.10/site-packages/huggingface_hub/utils/_validators.py", line 118, in _inner_fn
        return fn(*args, **kwargs)
      File "/home/devil/anaconda3/envs/pytorch/lib/python3.10/site-packages/huggingface_hub/file_download.py", line 1247, in hf_hub_download
        metadata = get_hf_file_metadata(
      File "/home/devil/anaconda3/envs/pytorch/lib/python3.10/site-packages/huggingface_hub/utils/_validators.py", line 118, in _inner_fn
        return fn(*args, **kwargs)
      File "/home/devil/anaconda3/envs/pytorch/lib/python3.10/site-packages/huggingface_hub/file_download.py", line 1624, in get_hf_file_metadata
        r = _request_wrapper(
      File "/home/devil/anaconda3/envs/pytorch/lib/python3.10/site-packages/huggingface_hub/file_download.py", line 402, in _request_wrapper
        response = _request_wrapper(
      File "/home/devil/anaconda3/envs/pytorch/lib/python3.10/site-packages/huggingface_hub/file_download.py", line 425, in _request_wrapper
        response = get_session().request(method=method, url=url, **params)
      File "/home/devil/anaconda3/envs/pytorch/lib/python3.10/site-packages/requests/sessions.py", line 589, in request
        resp = self.send(prep, **send_kwargs)
      File "/home/devil/anaconda3/envs/pytorch/lib/python3.10/site-packages/requests/sessions.py", line 703, in send
        r = adapter.send(request, **kwargs)
      File "/home/devil/anaconda3/envs/pytorch/lib/python3.10/site-packages/huggingface_hub/utils/_http.py", line 63, in send
        return super().send(request, *args, **kwargs)
      File "/home/devil/anaconda3/envs/pytorch/lib/python3.10/site-packages/requests/adapters.py", line 517, in send
        raise SSLError(e, request=request)
    requests.exceptions.SSLError: (MaxRetryError("SOCKSHTTPSConnectionPool(host='huggingface.co', port=443): Max retries exceeded with url: /bert-base-chinese/resolve/main/tokenizer_config.json (Caused by SSLError(SSLEOFError(8, '[SSL: UNEXPECTED_EOF_WHILE_READING] EOF occurred in violation of protocol (_ssl.c:1007)')))"), '(Request ID: eaad1ff7-e56f-4fb4-95ca-8f580cb24731)')

For this problem, https://blog.csdn.net/devil6636252/article/details/108815201

explains that Python runs into a bug when performing SSL verification through a SOCKS5 proxy.

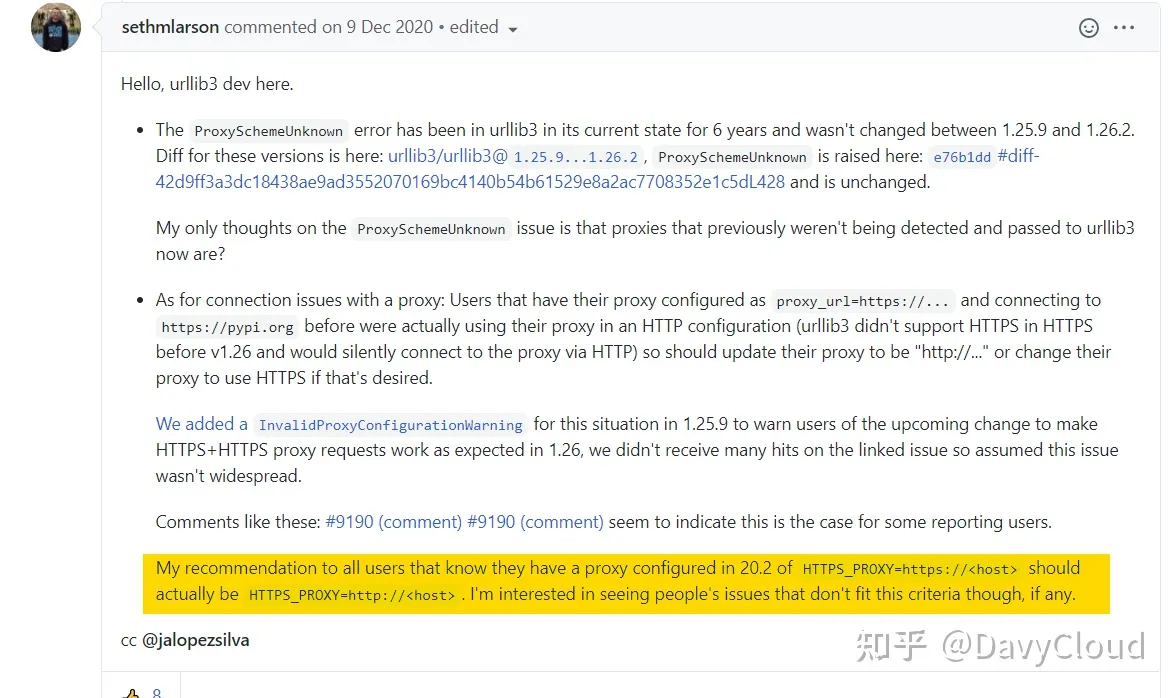

For the same problem, https://zhuanlan.zhihu.com/p/350015032?utm_id=0

suggests downgrading urllib3:

pip install urllib3==1.25.11

and points to this explanation:

https://github.com/pypa/pip/issues/9216
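Before downgrading anything, it helps to know which urllib3 version the environment actually has. A minimal sketch using only the standard library follows; the helper names `installed_version` and `major_minor` are invented for this example, and 1.26 is the threshold discussed in this post.

```python
import re
from importlib import metadata
from typing import Optional


def installed_version(package: str) -> Optional[str]:
    """Return the installed version string of package, or None if it is absent."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


def major_minor(version: str) -> tuple[int, int]:
    """Parse the leading "X.Y" of a version string such as "1.25.11"."""
    match = re.match(r"(\d+)\.(\d+)", version)
    if match is None:
        raise ValueError(f"unrecognized version string: {version!r}")
    return int(match.group(1)), int(match.group(2))


if __name__ == "__main__":
    version = installed_version("urllib3")
    if version is None:
        print("urllib3 is not installed in this environment")
    elif major_minor(version) >= (1, 26):
        print(f"urllib3 {version}: 1.26 or newer")
    else:
        print(f"urllib3 {version}: older than 1.26")
```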

-----------------------------------------------

Following the explanation in the posts above, we arrive at the correct fix:

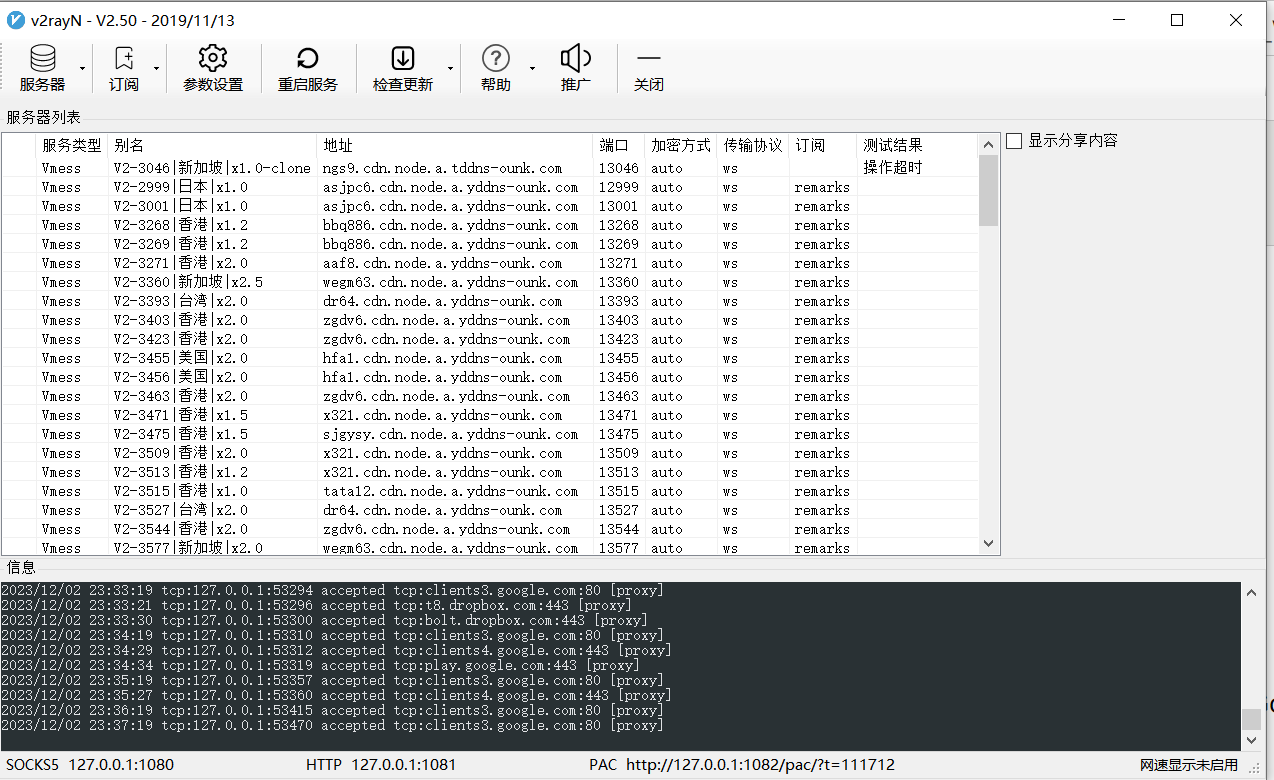

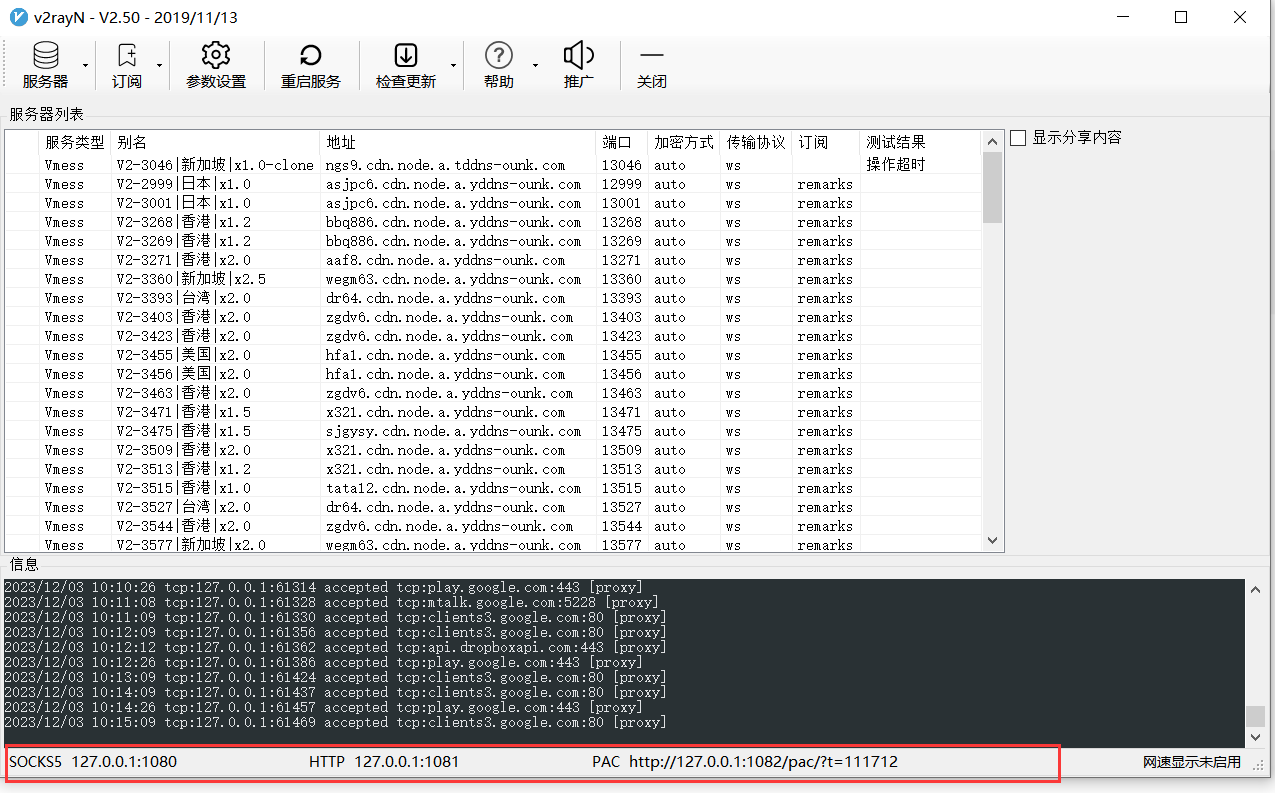

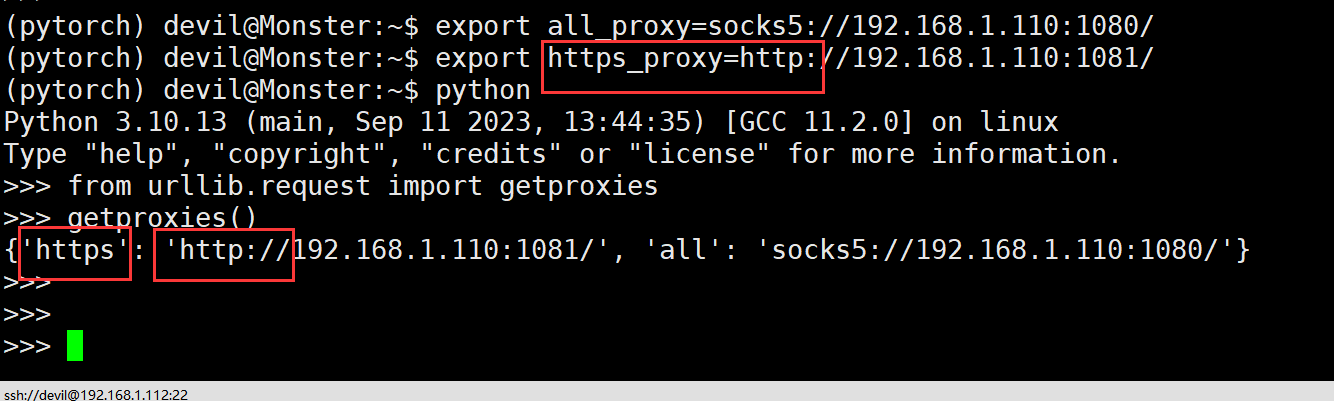

Check the network configuration of the proxy tool:

The proxy configuration we normally use at the operating-system level:

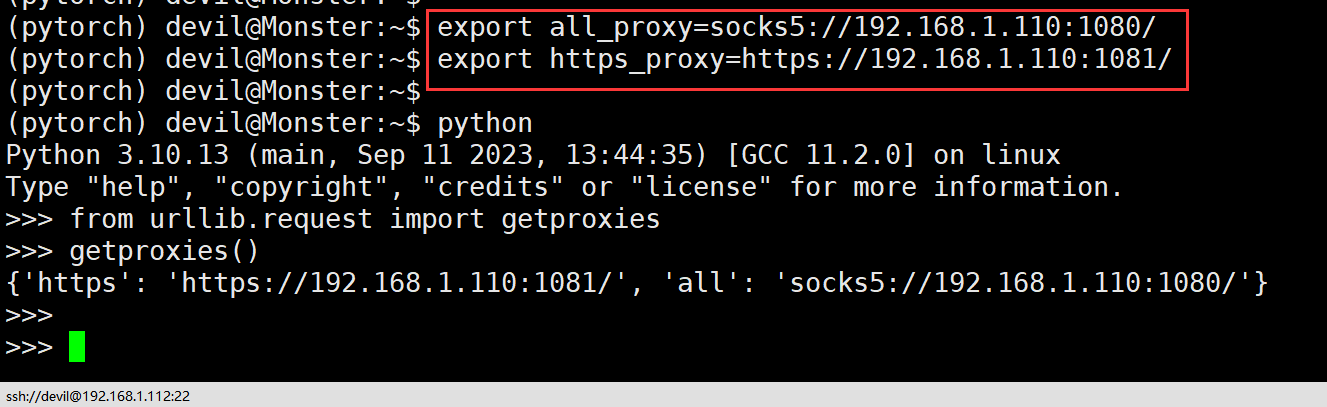

export all_proxy=socks5://192.168.1.110:1080/

export https_proxy=https://192.168.1.110:1081/

Change it to:

export all_proxy=socks5://192.168.1.110:1080/

export https_proxy=http://192.168.1.110:1081/
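The change is only in the URL scheme of the proxy: `https://` becomes `http://`, while the SOCKS entry is left alone. The same rewrite can be sketched in Python, for example to prepare the environment before importing `transformers`. The function names `fix_proxy_scheme` and `fixed_proxy_env` are made up for this illustration; the addresses are the ones used in this post.

```python
from urllib.parse import urlsplit, urlunsplit


def fix_proxy_scheme(proxy_url: str) -> str:
    """Rewrite an https:// proxy URL to http://; return other URLs unchanged."""
    parts = urlsplit(proxy_url)
    if parts.scheme.lower() != "https":
        return proxy_url
    return urlunsplit(parts._replace(scheme="http"))


def fixed_proxy_env(environ: dict) -> dict:
    """Return a copy of environ with every *_proxy value (except no_proxy) fixed."""
    fixed = dict(environ)
    for name, value in environ.items():
        lowered = name.lower()
        if lowered.endswith("_proxy") and lowered != "no_proxy" and value:
            fixed[name] = fix_proxy_scheme(value)
    return fixed


if __name__ == "__main__":
    before = {
        "all_proxy": "socks5://192.168.1.110:1080/",
        "https_proxy": "https://192.168.1.110:1081/",
    }
    for name, value in fixed_proxy_env(before).items():
        print(f"export {name}={value}")
```

To apply it to the running process, `os.environ.update(fixed_proxy_env(dict(os.environ)))` before the first network call would be one option.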

Verify:

After the change:

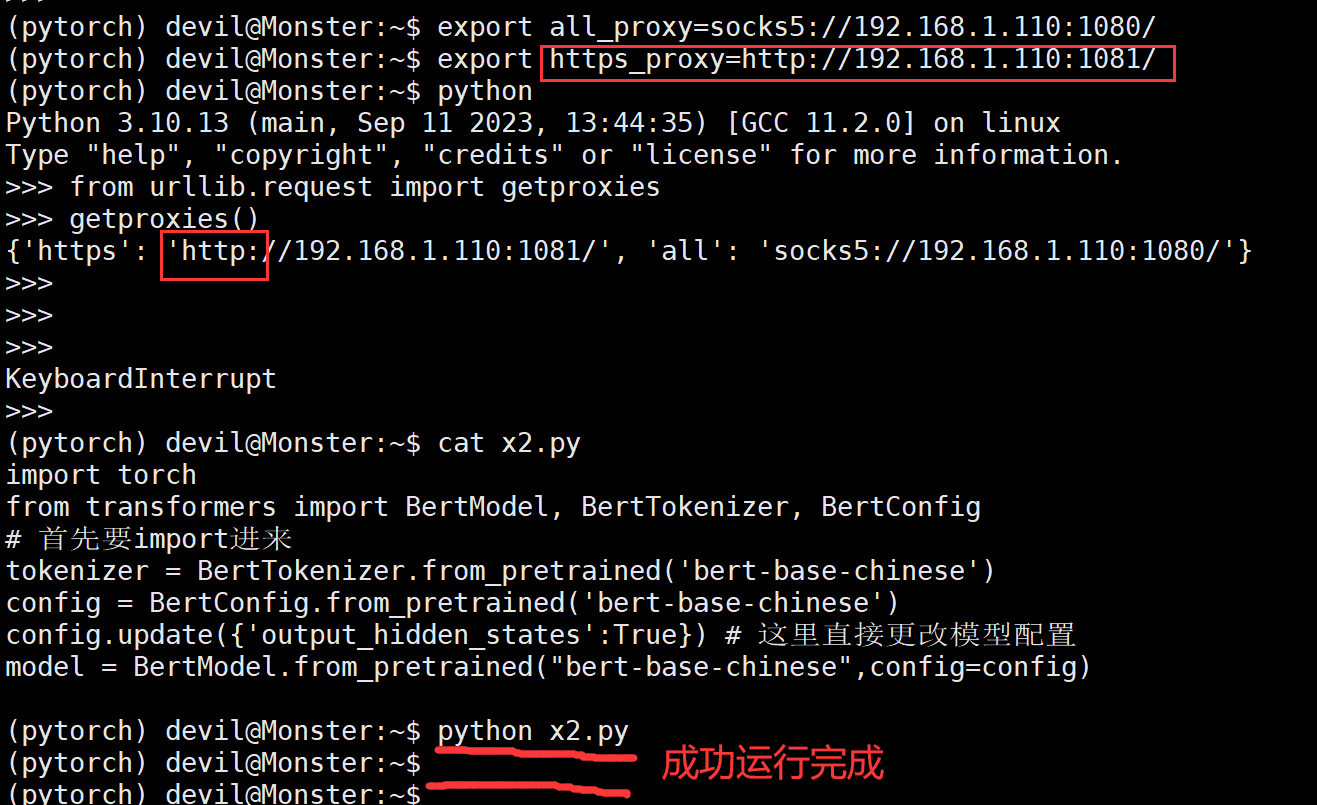

Run the Hugging Face code:

    import torch
    from transformers import BertModel, BertTokenizer, BertConfig
    # import the required classes first
    tokenizer = BertTokenizer.from_pretrained('bert-base-chinese')
    config = BertConfig.from_pretrained('bert-base-chinese')
    config.update({'output_hidden_states': True})  # change the model config directly
    model = BertModel.from_pretrained("bert-base-chinese", config=config)

It runs successfully:

============================

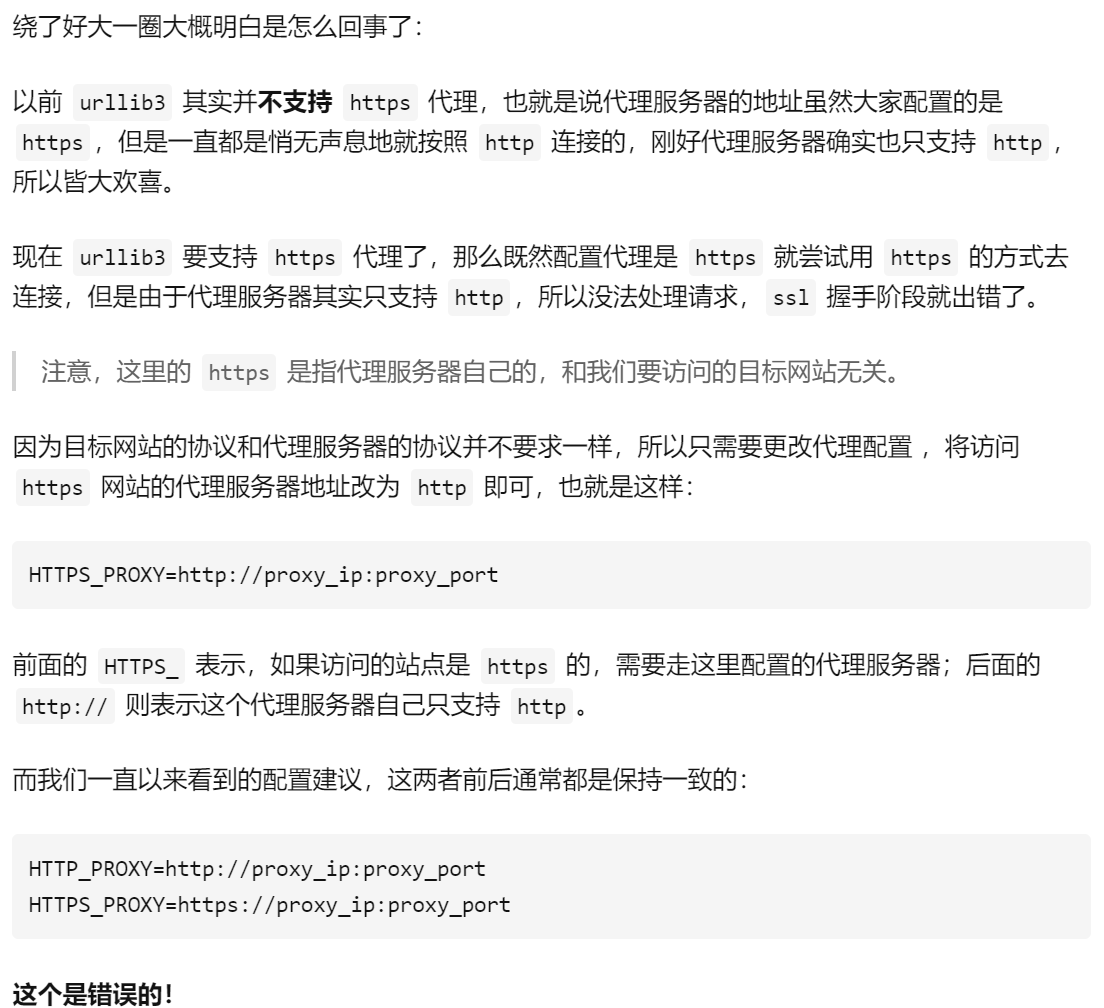

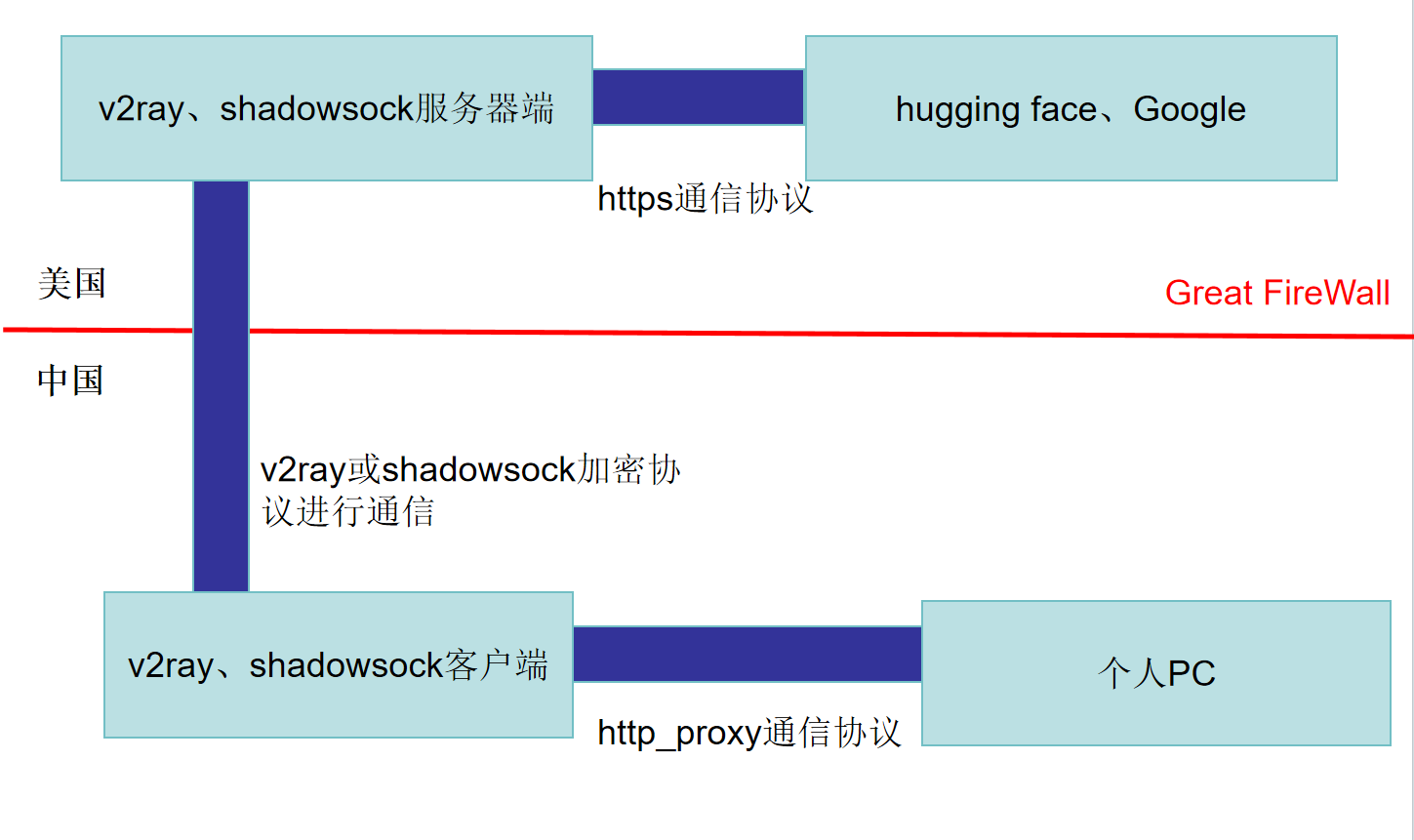

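An explanation of why this problem occurs (my own understanding):

1. As far as I can tell, none of the proxy tools used to reach Google expose an HTTPS proxy (one that the client talks to over TLS); they only expose a plain HTTP proxy.

2. Versions of the Python package urllib3 before 1.26 did not support HTTPS proxies either. If you specified a proxy with an https:// scheme, it was silently treated as an HTTP proxy behind the scenes.

3. Starting with urllib3 1.26, HTTPS proxies are supported, while the proxy tools still only speak plain HTTP on the local side. An https:// proxy URL therefore now triggers a TLS handshake with a proxy that does not expect one, and the error appears. This is hardly a bug; it is more a long-standing convention that stopped working.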
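The three points above can be condensed into a small decision function. This is only a model of the explanation given in this post, not code taken from urllib3; the names `proxy_hop_mode` and `expect_handshake_failure` and the `proxy_accepts_tls` flag are assumptions made for the illustration.

```python
from urllib.parse import urlsplit


def proxy_hop_mode(urllib3_version: tuple[int, int], proxy_url: str) -> str:
    """Return "tls" if the client is expected to open TLS to the proxy itself.

    Models the explanation in the text: only urllib3 1.26 or newer
    honours an https:// proxy scheme; older versions fall back to plaintext.
    """
    scheme = urlsplit(proxy_url).scheme.lower()
    if scheme == "https" and urllib3_version >= (1, 26):
        return "tls"
    return "plaintext"


def expect_handshake_failure(
    urllib3_version: tuple[int, int],
    proxy_url: str,
    proxy_accepts_tls: bool = False,  # assumption: the local proxy speaks plain HTTP only
) -> bool:
    """True when the client would start TLS with a proxy that cannot answer it."""
    return proxy_hop_mode(urllib3_version, proxy_url) == "tls" and not proxy_accepts_tls


if __name__ == "__main__":
    for version in [(1, 25), (1, 26)]:
        for url in ["https://192.168.1.110:1081/", "http://192.168.1.110:1081/"]:
            print(version, url, expect_handshake_failure(version, url))
```

Under this model, the only failing combination is a new urllib3 together with an https:// proxy URL, which matches the two fixes mentioned in the post: downgrade urllib3, or change the scheme to http://.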

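The setup, schematically:

In other words, existing tools only support a plaintext http_proxy. The PC sends its traffic to the proxy tool's client through the http_proxy; the client then talks to the tool's server abroad over an encrypted protocol, so that leg is ciphertext; and the server abroad reaches Hugging Face or Google over encrypted HTTPS.

Normally the hop from the PC to the proxy tool's client stays on the PC itself or within the local network, which is usually a trusted environment. Still, http_proxy is less secure than https_proxy on this hop, since the proxy protocol itself is unencrypted, and outbound-access tools that support https_proxy are essentially unavailable. So can the traffic be stolen or tampered with on this hop? The answer is no.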

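For background, see:

The detailed HTTPS flow

HTTPS provides end-to-end encryption, so as long as the request issued by the PC is HTTPS, a proxy tool in the middle cannot steal or tamper with the data. In an HTTPS session the private key of the target site (Hugging Face, Google) never leaves the site, and the keys the PC uses for the session never leave the PC. Once the HTTPS connection between the PC and the target site is established, no intermediate proxy can decrypt or modify the traffic. That is why using http_proxy between the PC and the proxy tool's client is fine.

If https_proxy were used between the PC and the proxy client, that hop would carry two layers of HTTPS encryption: the inner layer is the HTTPS session between the PC and the target site, and the outer layer is the HTTPS session between the PC and the proxy client.

If the traffic between the PC and the proxy client is already encrypted once the HTTPS connection between the PC and the target site (say Google) is established, what is the difference between http_proxy and https_proxy?

The answer: https_proxy is more secure.

With https_proxy, the PC first sets up an HTTPS channel to the proxy client, before any HTTPS connection to the target site exists, and the whole handshake between the PC and the target site then takes place inside that channel. Keep in mind that everything sent before an HTTPS connection is established is plaintext, so an attacker watching that hop can see which site the PC is about to connect to. If an HTTPS connection to the proxy already exists before the one to the target site is set up, that information (which site the PC is connecting to) is encrypted as well. So when the PC already talks HTTPS to the target site, the benefit of https_proxy is a double-layered HTTPS connection: the outer proxy HTTPS session ensures that even the setup of the inner HTTPS session between the PC and the target site is encrypted.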
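To make the plaintext part concrete: when an HTTPS site is reached through a plain HTTP proxy, the client first asks the proxy to open a tunnel with an HTTP `CONNECT` request, and that request names the target host. A minimal sketch of what those bytes look like follows; `build_connect_request` is a helper written for this illustration, and real clients usually add further headers.

```python
def build_connect_request(host: str, port: int = 443) -> bytes:
    """Build a minimal HTTP/1.1 CONNECT request asking a proxy for a tunnel."""
    target = f"{host}:{port}"
    lines = [
        f"CONNECT {target} HTTP/1.1",
        f"Host: {target}",
        "",  # blank line terminates the header block
        "",
    ]
    return "\r\n".join(lines).encode("ascii")


if __name__ == "__main__":
    request = build_connect_request("huggingface.co")
    # With http_proxy these bytes cross the PC-to-proxy hop unencrypted;
    # with https_proxy they would travel inside the outer TLS session.
    print(request.decode("ascii"))
```

Anyone able to observe the PC-to-proxy hop can read the hostname from this request, although nothing sent after the inner TLS handshake completes is readable.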

References:

https://imququ.com/post/web-proxy.html

https://imququ.com/post/web-proxy-2.html

https://github.com/urllib3/urllib3/commit/8c7a43b4a4ca0c8d36d55f132daa2a43d06fe3c4

----------------------------------------------

posted on 2023-12-02 23:16 Angry_Panda
