CUDA Multi-Process Service on NVIDIA GPUs: MPS (Multi-Process Service)

Related posts:

TensorFlow 1.x: how to call the same session from multiple C++ threads

TensorFlow 1.x: how to call the same session from multiple Python threads

References:

https://blog.csdn.net/weixin_41997940/article/details/124241226

Official technical documentation:

=============================================

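When running CUDA computations, an NVIDIA GPU can execute work from only one context at any given moment. By default, a context is the set of resources and runtime state that a CPU process allocates on the GPU when it makes CUDA calls.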

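In other words, by default a GPU executes CUDA calls from only one CPU process at any given moment: work submitted by different processes does not run concurrently on the GPU but takes turns (time-slicing).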

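However, if the GPU supports Hyper-Q, enabling MPS lets multiple CPU processes share one context on the GPU, so that work from several processes can execute on the GPU concurrently, i.e. CUDA calls from more than one CPU process can be running at the same moment.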

Further reading:

https://blog.csdn.net/weixin_41997940/article/details/124241226

Important caveats:

1. MPS cannot be enabled for an individual GPU: starting the service enables MPS on all NVIDIA CUDA GPUs in the machine.

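2. Starting MPS requires sudo privileges. The MPS shutdown command often fails to take effect, in which case the process has to be killed manually with sudo kill <PID>.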

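3. MPS is exclusive to one user at a time (on a multi-GPU host, once MPS is running, CUDA on every GPU is monopolized by a single user). That is, if a GPU is running some user's nvidia-cuda-mps-server process, only that user's CUDA programs can run on it; other users' processes are blocked and cannot execute. Only after all of that user's CUDA tasks have finished and their nvidia-cuda-mps-server process has exited can the next user's nvidia-cuda-mps-server process start and run that user's CUDA processes. Note that "finished" here does not refer to time-sharing by the scheduler; it means that a process's CUDA calls have completed.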
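Caveat 3 follows from the per-user server policy described in the nvidia-cuda-mps-control help text reproduced later in this post. The toy model below is a sketch of that policy as we read it, not NVIDIA's implementation; the class `ToyMpsControl` and its methods are hypothetical. It assumes the literal reading of the help text: a client whose UID matches the running server attaches immediately, a client of another user is queued, and the queued user's server starts only after the current server's last client disconnects.

```python
from collections import deque
from typing import Deque, Optional


class ToyMpsControl:
    """Toy model of the per-user MPS server policy described in the
    nvidia-cuda-mps-control help text. Not NVIDIA's implementation."""

    def __init__(self) -> None:
        self.server_uid: Optional[int] = None  # UID that owns the running MPS server
        self.clients = 0                       # clients attached to that server
        self.waiting: Deque[int] = deque()     # UIDs of queued clients, arrival order

    def connect(self, uid: int) -> bool:
        """Return True if the client may run now, False if it has to wait."""
        if self.server_uid is None:
            self.server_uid = uid      # no server yet: launch one with the client's UID
        if uid == self.server_uid:
            self.clients += 1          # same UID: attach to the existing server
            return True
        self.waiting.append(uid)       # different UID: wait until the server drains
        return False

    def disconnect(self) -> int:
        """One client of the current server exits. Returns how many queued
        clients were started because of it."""
        if self.clients == 0:
            raise RuntimeError("no client is attached")
        self.clients -= 1
        if self.clients > 0:
            return 0                   # server keeps running while it has clients
        self.server_uid = None         # last client gone: the server shuts down
        if not self.waiting:
            return 0
        self.server_uid = self.waiting[0]  # new server for the first queued user
        started = [u for u in self.waiting if u == self.server_uid]
        self.waiting = deque(u for u in self.waiting if u != self.server_uid)
        self.clients = len(started)
        return len(started)
```

For example, two clients of UID 1000 start immediately, while a client of UID 1001 gets `False` and only starts once both of the first user's clients have disconnected.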

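From these characteristics we can see that MPS only suits the case where a single user has a GPU to themselves and runs several CUDA processes on it; in effect, MPS is a service for single-user, exclusive use of a GPU. These characteristics are exactly why MPS is relatively rarely used in real production environments, although it remains a very good option for individual CUDA users.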

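Multi-core GPUs and multi-core CPUs work very differently: a multi-core CPU can run multiple processes at the same moment, whereas a many-core GPU (by default) can run only one process's work at a time. MPS is designed to improve GPU utilization: with MPS enabled, CUDA calls from multiple processes can run on one GPU at the same moment (the processes may overlap for only part of their lifetimes). The requirements are that these processes belong to the same user, and that another user can use the GPU for CUDA computation only once no CUDA programs of the current user remain on it.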

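So on a multi-user Linux CUDA system MPS is not really usable, but on a single-user Linux system it can substantially improve the throughput of multiple processes sharing one GPU.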
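To make the time-slicing versus MPS argument concrete, here is an idealized back-of-the-envelope model. It is an assumption, not a measurement: it ignores context-switch overhead and memory contention, and `gpu_fraction` is a hypothetical parameter standing for the share of the GPU that one process's kernels can keep busy. Under time-slicing, N identical processes are serialized; under MPS their kernels can overlap until the GPU saturates.

```python
def estimated_wall_time(n_procs: int, solo_time: float,
                        gpu_fraction: float, mps: bool) -> float:
    """Idealized wall-clock time for n identical processes sharing one GPU.

    solo_time    -- seconds one process needs when it has the GPU to itself
    gpu_fraction -- assumed share of the GPU that one process's kernels keep
                    busy (0 < gpu_fraction <= 1); hypothetical model parameter
    mps          -- False: default time-slicing; True: MPS-style overlap
    """
    if n_procs < 1 or solo_time < 0 or not 0 < gpu_fraction <= 1:
        raise ValueError("invalid arguments")
    if not mps:
        # Contexts take turns on the GPU, so the processes' work is serialized.
        return n_procs * solo_time
    # Under MPS, kernels from all clients can overlap until the GPU is saturated.
    return solo_time * max(1.0, n_procs * gpu_fraction)
```

With the 37-second single run measured later in this post and two processes, the model gives 74 s with time-slicing (in line with the roughly 2x slowdown observed below) and 37 s with MPS if each process kept only 20% of the GPU busy. With a single process both modes give 37 s, which matches the remark that MPS does not help a lone process.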

=============================================

Command to start MPS:

sudo nvidia-cuda-mps-control -d

Note that on a multi-GPU host this command enables MPS on all GPUs; MPS cannot be targeted at a single GPU.

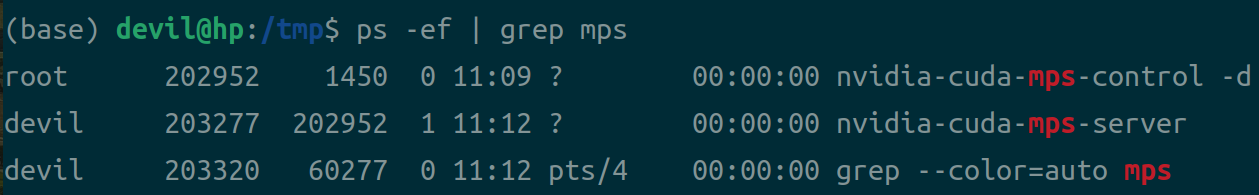
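If the daemon is started from a script, the help text below names two environment variables with defaults, CUDA_MPS_PIPE_DIRECTORY (/tmp/nvidia-mps) and CUDA_MPS_LOG_DIRECTORY (/var/log/nvidia-mps). A minimal sketch, assuming you want to pass them explicitly: because sudo usually resets the environment, the variables are handed over through `env`. The function names are hypothetical.

```python
import shlex
import subprocess
from typing import List

DEFAULT_PIPE_DIR = "/tmp/nvidia-mps"     # default named in the help text
DEFAULT_LOG_DIR = "/var/log/nvidia-mps"  # default named in the help text


def mps_start_command(pipe_dir: str = DEFAULT_PIPE_DIR,
                      log_dir: str = DEFAULT_LOG_DIR,
                      use_sudo: bool = True) -> List[str]:
    """argv that starts the MPS control daemon in background mode (-d)."""
    env_vars = [f"CUDA_MPS_PIPE_DIRECTORY={pipe_dir}",
                f"CUDA_MPS_LOG_DIRECTORY={log_dir}"]
    # sudo usually resets the environment, so the variables go through env(1)
    prefix = ["sudo", "env"] if use_sudo else ["env"]
    return prefix + env_vars + ["nvidia-cuda-mps-control", "-d"]


def start_mps(pipe_dir: str = DEFAULT_PIPE_DIR,
              log_dir: str = DEFAULT_LOG_DIR) -> None:
    cmd = mps_start_command(pipe_dir, log_dir)
    print("running:", shlex.join(cmd))
    # With -d the parent exits once the daemon is listening for clients.
    subprocess.run(cmd, check=True)
```

Per the help text, client processes (such as the TensorFlow script below) must see the same CUDA_MPS_PIPE_DIRECTORY value as the daemon; with the defaults nothing needs to be exported.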

Command to check whether MPS is running:

ps -ef | grep mps
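`ps -ef | grep mps` also lists the `grep` process itself, and on a shared machine the interesting part is which user owns the nvidia-cuda-mps-server process (caveat 3 above). The sketch below filters `ps -ef` output down to the MPS control daemon and server processes. The function names are hypothetical, and it assumes the usual eight-column `ps -ef` layout (UID PID PPID C STIME TTY TIME CMD).

```python
import os
import subprocess
from typing import List, Tuple

MPS_PROGRAMS = {"nvidia-cuda-mps-control", "nvidia-cuda-mps-server"}


def find_mps_processes(ps_ef_output: str) -> List[Tuple[str, int, str]]:
    """Return (user, pid, program) for MPS daemon/server lines of `ps -ef`."""
    found = []
    for line in ps_ef_output.splitlines():
        fields = line.split(None, 7)  # UID PID PPID C STIME TTY TIME CMD
        if len(fields) < 8 or not fields[1].isdigit():
            continue  # header line or malformed line
        program = os.path.basename(fields[7].split()[0])
        if program in MPS_PROGRAMS:  # the `grep mps` line itself never matches
            found.append((fields[0], int(fields[1]), program))
    return found


def current_mps_processes() -> List[Tuple[str, int, str]]:
    """Run `ps -ef` on this machine and filter its output."""
    out = subprocess.run(["ps", "-ef"], capture_output=True,
                         text=True, check=True).stdout
    return find_mps_processes(out)
```

The user column of the nvidia-cuda-mps-server entry tells you whose CUDA jobs the GPU is currently reserved for.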

Command to stop MPS:

echo quit | sudo nvidia-cuda-mps-control

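Note that this command does not force MPS to shut down: quit waits for the current MPS servers and clients to finish (per the help text below, quit -t TIMEOUT forces the servers down after TIMEOUT seconds). If the check command shows that MPS is still running, kill it with sudo kill <PID>.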

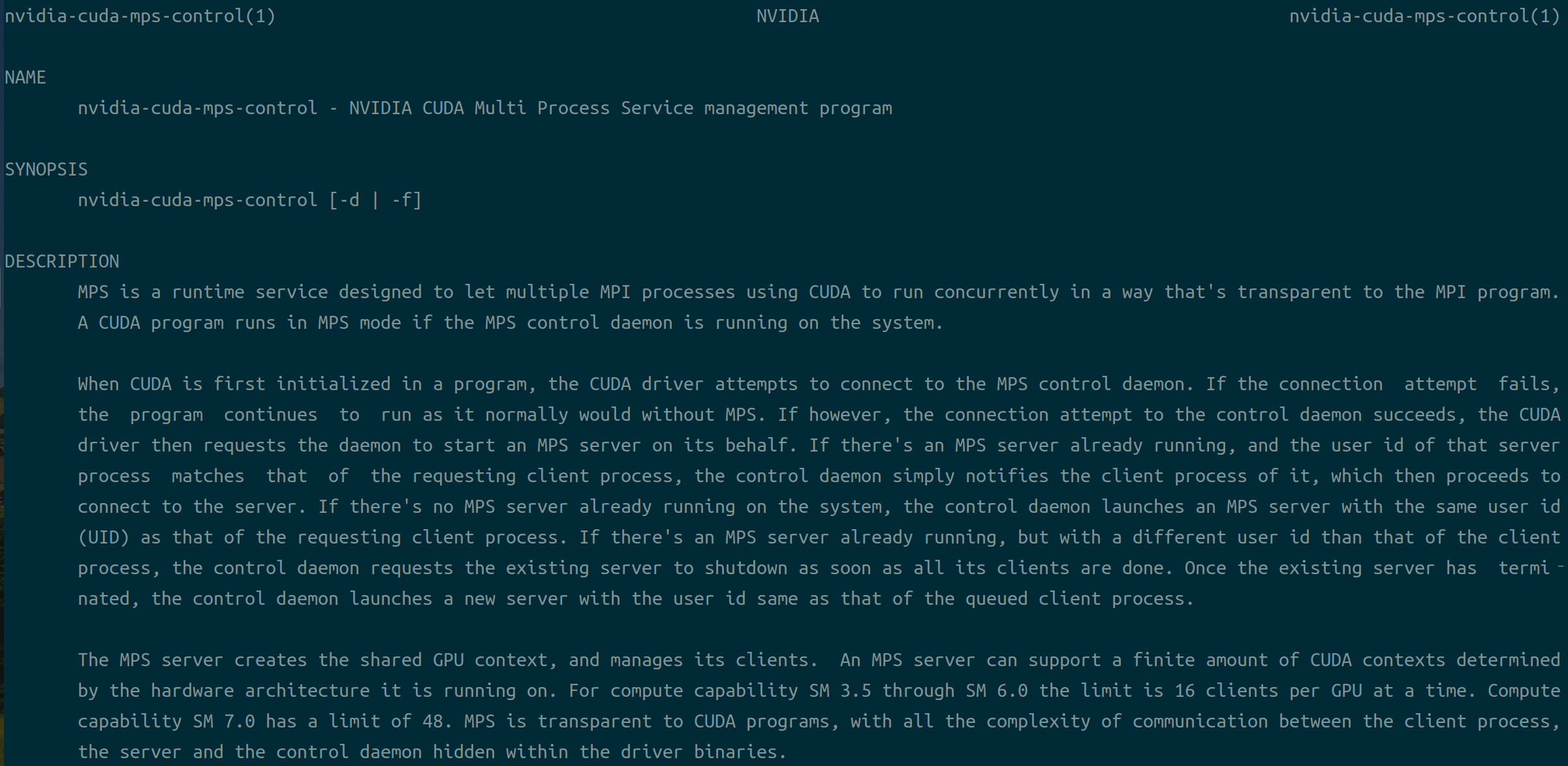
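According to the help text reproduced below, running nvidia-cuda-mps-control with no arguments starts a front end that reads commands from stdin, one per line, and `quit [-t TIMEOUT]` is one of those commands. The sketch below pipes `quit` into the front end from Python and keeps the post's `sudo kill` fallback as a separate helper. The names `quit_line`, `stop_mps` and `kill_command` are hypothetical; `run` is injectable so the logic can be tried without a GPU.

```python
import subprocess
from typing import Callable, List, Optional, Sequence

CONTROL = ("sudo", "nvidia-cuda-mps-control")  # front end: no arguments


def quit_line(timeout: Optional[int] = None) -> str:
    """One front-end command per line; -t forces servers down after TIMEOUT s."""
    return "quit\n" if timeout is None else f"quit -t {int(timeout)}\n"


def stop_mps(timeout: Optional[int] = None,
             control: Sequence[str] = CONTROL,
             run: Callable[..., subprocess.CompletedProcess] = subprocess.run) -> int:
    """Send `quit` on stdin and return the front end's exit status, which the
    help text says is zero iff all MPS servers exited gracefully."""
    result = run(list(control), input=quit_line(timeout),
                 text=True, capture_output=True)
    return result.returncode


def kill_command(pids: List[int]) -> List[str]:
    """The post's manual fallback when MPS processes survive `quit`."""
    return ["sudo", "kill"] + [str(pid) for pid in pids]
```

For example, `stop_mps(timeout=30)` asks MPS to quit and forces the servers down after 30 seconds; if `ps -ef | grep mps` still shows MPS processes afterwards, `subprocess.run(kill_command([1234]), check=True)` issues the manual kill (1234 is a placeholder PID).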

MPS help text (the nvidia-cuda-mps-control man page):

```text
nvidia-cuda-mps-control(1)        NVIDIA        nvidia-cuda-mps-control(1)

NAME
    nvidia-cuda-mps-control - NVIDIA CUDA Multi Process Service management
    program

SYNOPSIS
    nvidia-cuda-mps-control [-d | -f]

DESCRIPTION
    MPS is a runtime service designed to let multiple MPI processes using
    CUDA to run concurrently in a way that's transparent to the MPI program.
    A CUDA program runs in MPS mode if the MPS control daemon is running on
    the system.

    When CUDA is first initialized in a program, the CUDA driver attempts to
    connect to the MPS control daemon. If the connection attempt fails, the
    program continues to run as it normally would without MPS. If however,
    the connection attempt to the control daemon succeeds, the CUDA driver
    then requests the daemon to start an MPS server on its behalf. If
    there's an MPS server already running, and the user id of that server
    process matches that of the requesting client process, the control
    daemon simply notifies the client process of it, which then proceeds to
    connect to the server. If there's no MPS server already running on the
    system, the control daemon launches an MPS server with the same user id
    (UID) as that of the requesting client process. If there's an MPS server
    already running, but with a different user id than that of the client
    process, the control daemon requests the existing server to shutdown as
    soon as all its clients are done. Once the existing server has
    terminated, the control daemon launches a new server with the user id
    same as that of the queued client process.

    The MPS server creates the shared GPU context, and manages its clients.
    An MPS server can support a finite amount of CUDA contexts determined by
    the hardware architecture it is running on. For compute capability
    SM 3.5 through SM 6.0 the limit is 16 clients per GPU at a time. Compute
    capability SM 7.0 has a limit of 48.

    MPS is transparent to CUDA programs, with all the complexity of
    communication between the client process, the server and the control
    daemon hidden within the driver binaries.

    Currently, CUDA MPS is available on 64-bit Linux only, requires a device
    that supports Unified Virtual Address (UVA) and has compute capability
    SM 3.5 or higher. Applications requiring pre-CUDA 4.0 APIs are not
    supported under CUDA MPS. Certain capabilities are only available
    starting with compute capability SM 7.0.

OPTIONS
    -d  Start the MPS control daemon in background mode, assuming the user
        has enough privilege (e.g. root). Parent process exits when control
        daemon started listening for client connections.

    -f  Start the MPS control daemon in foreground mode, assuming the user
        has enough privilege (e.g. root). The debug messages are sent to
        standard output.

    -h, --help
        Print a help message.

    <no arguments>
        Start the front-end management user interface to the MPS control
        daemon, which needs to be started first. The front-end UI keeps
        reading commands from stdin until EOF. Commands are separated by the
        newline character. If an invalid command is issued and rejected, an
        error message will be printed to stdout. The exit status of the
        front-end UI is zero if communication with the daemon is successful.
        A non-zero value is returned if the daemon is not found or connection
        to the daemon is broken unexpectedly. See the "quit" command below
        for more information about the exit status.

    Commands supported by the MPS control daemon:

    get_server_list
        Print out a list of PIDs of all MPS servers.

    start_server -uid UID
        Start a new MPS server for the specified user (UID).

    shutdown_server PID [-f]
        Shutdown the MPS server with given PID. The MPS server will not
        accept any new client connections and it exits when all current
        clients disconnect. -f is forced immediate shutdown. If a client
        launches a faulty kernel that runs forever, a forced shutdown of the
        MPS server may be required, since the MPS server creates and issues
        GPU work on behalf of its clients.

    get_client_list PID
        Print out a list of PIDs of all clients connected to the MPS server
        with given PID.

    quit [-t TIMEOUT]
        Shutdown the MPS control daemon process and all MPS servers. The MPS
        control daemon stops accepting new clients while waiting for current
        MPS servers and MPS clients to finish. If TIMEOUT is specified (in
        seconds), the daemon will force MPS servers to shutdown if they are
        still running after TIMEOUT seconds.

        This command is synchronous. The front-end UI waits for the daemon
        to shutdown, then returns the daemon's exit status. The exit status
        is zero iff all MPS servers have exited gracefully.

    Commands available to Volta MPS control daemon:

    get_device_client_list PID
        List the devices and PIDs of client applications that enumerated
        this device. It optionally takes the server instance PID.

    set_default_active_thread_percentage percentage
        Set the default active thread percentage for MPS servers. If there
        is already a server spawned, this command will only affect the next
        server. The set value is lost if a quit command is executed. The
        default is 100.

    get_default_active_thread_percentage
        Query the current default available thread percentage.

    set_active_thread_percentage PID percentage
        Set the active thread percentage for the MPS server instance of the
        given PID. All clients created with that server afterwards will
        observe the new limit. Existing clients are not affected.

    get_active_thread_percentage PID
        Query the current available thread percentage of the MPS server
        instance of the given PID.

ENVIRONMENT
    CUDA_MPS_PIPE_DIRECTORY
        Specify the directory that contains the named pipes and UNIX domain
        sockets used for communication among the MPS control, MPS server,
        and MPS clients. The value of this environment variable should be
        consistent in the MPS control daemon and all MPS client processes.
        Default directory is /tmp/nvidia-mps

    CUDA_MPS_LOG_DIRECTORY
        Specify the directory that contains the MPS log files. This variable
        is used by the MPS control daemon only. Default directory is
        /var/log/nvidia-mps

FILES
    Log files created by the MPS control daemon in the specified directory

    control.log
        Record startup and shutdown of MPS control daemon, user commands
        issued with their results, and status of MPS servers.

    server.log
        Record startup and shutdown of MPS servers, and status of MPS
        clients.

nvidia-cuda-mps-control          2013-02-26        nvidia-cuda-mps-control(1)
```
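Since the front end accepts several newline-separated commands, the other commands listed above can be scripted the same way. A minimal sketch, assuming the front end is reached through sudo as in the rest of this post and that `get_server_list` prints PIDs separated by whitespace (the exact output format is an assumption); `run_mps_commands` and `parse_pids` are hypothetical helpers.

```python
import subprocess
from typing import Callable, List, Sequence


def run_mps_commands(commands: Sequence[str],
                     control: Sequence[str] = ("sudo", "nvidia-cuda-mps-control"),
                     run: Callable[..., subprocess.CompletedProcess] = subprocess.run) -> str:
    """Feed newline-separated commands to the MPS front-end UI; return stdout."""
    script = "".join(cmd + "\n" for cmd in commands)  # front end reads until EOF
    result = run(list(control), input=script, text=True, capture_output=True)
    if result.returncode != 0:
        # Per the help text: non-zero if the daemon is not found or the
        # connection to it breaks unexpectedly.
        raise RuntimeError(f"MPS front end failed (exit {result.returncode})")
    return result.stdout


def parse_pids(text: str) -> List[int]:
    """Collect integer tokens, assuming get_server_list prints bare PIDs."""
    return [int(tok) for tok in text.split() if tok.isdigit()]
```

For example, `parse_pids(run_mps_commands(["get_server_list"]))` lists the running servers, and on Volta or newer `run_mps_commands(["set_default_active_thread_percentage 50"])` would, per the help text, only affect servers spawned afterwards.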

=============================================

Here is a TensorFlow 1.x test program:

```python
import tensorflow as tf  # TensorFlow 1.x API (tf.layers, tf.Session, queue runners)
from tensorflow import keras
import numpy as np
import threading
import time


def build():
    n = 8
    # A small 4-layer MLP on the second GPU, fed with fresh random input each run
    with tf.device("/gpu:1"):
        x = tf.random_normal([n, 10])
        x1 = tf.layers.dense(x, 10, activation=tf.nn.elu, name="fc1")
        x2 = tf.layers.dense(x1, 10, activation=tf.nn.elu, name="fc2")
        x3 = tf.layers.dense(x2, 10, activation=tf.nn.elu, name="fc3")
        y = tf.layers.dense(x3, 10, activation=tf.nn.elu, name="fc4")

    # Queue that a background QueueRunner thread keeps filling with y
    queue = tf.FIFOQueue(10000, y.dtype, y.shape, shared_name='buffer')
    enqueue_ops = []
    for _ in range(1):  # a single enqueue op, i.e. one feeding thread
        enqueue_ops.append(queue.enqueue(y))
    tf.train.add_queue_runner(tf.train.QueueRunner(queue, enqueue_ops))
    return queue


# with sess.graph.as_default():
if __name__ == '__main__':
    queue = build()
    dequeued = queue.dequeue_many(4)

    config = tf.ConfigProto(allow_soft_placement=True)
    # Let this process use at most 20% of the GPU memory
    config.gpu_options.per_process_gpu_memory_fraction = 0.2
    with tf.Session(config=config) as sess:
        sess.run(tf.global_variables_initializer())
        tf.train.start_queue_runners()  # uses the default session (sess)

        a_time = time.time()
        print(a_time)
        for _ in range(100000):
            sess.run(dequeued)
        b_time = time.time()
        print(b_time)
        print(b_time - a_time)  # elapsed seconds for 100000 dequeue_many calls

        time.sleep(11111)  # keep the process alive after the measurement
```

Run on its own on an RTX 2070 Super, it takes about 37 seconds (https://www.cnblogs.com/devilmaycry812839668/p/16853040.html)

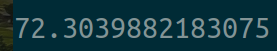
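To repeat the multi-process experiment, a small launcher can start N copies of the script at nearly the same moment and report each copy's wall-clock time. This is a sketch; `run_concurrently` and the file name `bench.py` are hypothetical. It assumes a copy of the script with the final `time.sleep(11111)` removed, otherwise no copy would exit, and its timings include TensorFlow start-up and graph construction, unlike the `b_time - a_time` value the script prints itself.

```python
import subprocess
import time
from concurrent.futures import ThreadPoolExecutor
from typing import List, Sequence


def _timed_run(cmd: Sequence[str]) -> float:
    """Run one copy to completion and return its wall-clock time in seconds."""
    start = time.perf_counter()
    subprocess.run(list(cmd), check=True)
    return time.perf_counter() - start


def run_concurrently(cmd: Sequence[str], copies: int) -> List[float]:
    """Start `copies` instances of `cmd` at nearly the same moment; one thread
    per copy waits on its own process, so each timing is measured separately."""
    if copies < 1:
        raise ValueError("copies must be >= 1")
    with ThreadPoolExecutor(max_workers=copies) as pool:
        return list(pool.map(_timed_run, [cmd] * copies))
```

For example, `print(run_concurrently(["python", "bench.py"], 2))` runs two copies once without MPS and, after starting the daemon, once more with it. Using one waiting thread per process avoids overstating a copy's time when it finishes before another copy that happens to be waited on first.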

If two copies of this program are run at the same time in the same environment, the elapsed times are:

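As can be seen, running two identical tasks on one GPU at the same time takes much longer than running just one: each takes roughly twice as long as in the single-task run.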

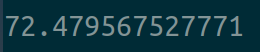

With MPS enabled on the GPU, the elapsed times are:

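As can be seen, enabling MPS on the GPU effectively speeds up the multi-process workload. Note that MPS brings no improvement when a user runs only one process on a GPU, and that once enabled MPS is exclusive to one user: as long as any CUDA process of one user is running on an MPS-enabled GPU, other users' CUDA calls are blocked (their programs cannot start).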

======================================================

posted on 2022-11-05 19:47 Angry_Panda